TL;DR#

Traditional cryptographic methods for secure computation, like multi-party computation and zero-knowledge proofs, struggle with complex, unstructured problems and large datasets. These methods often rely on strict mathematical assumptions, which become computationally infeasible for complex tasks. Moreover, programming these tasks requires a deep technical understanding, limiting accessibility for non-specialists.

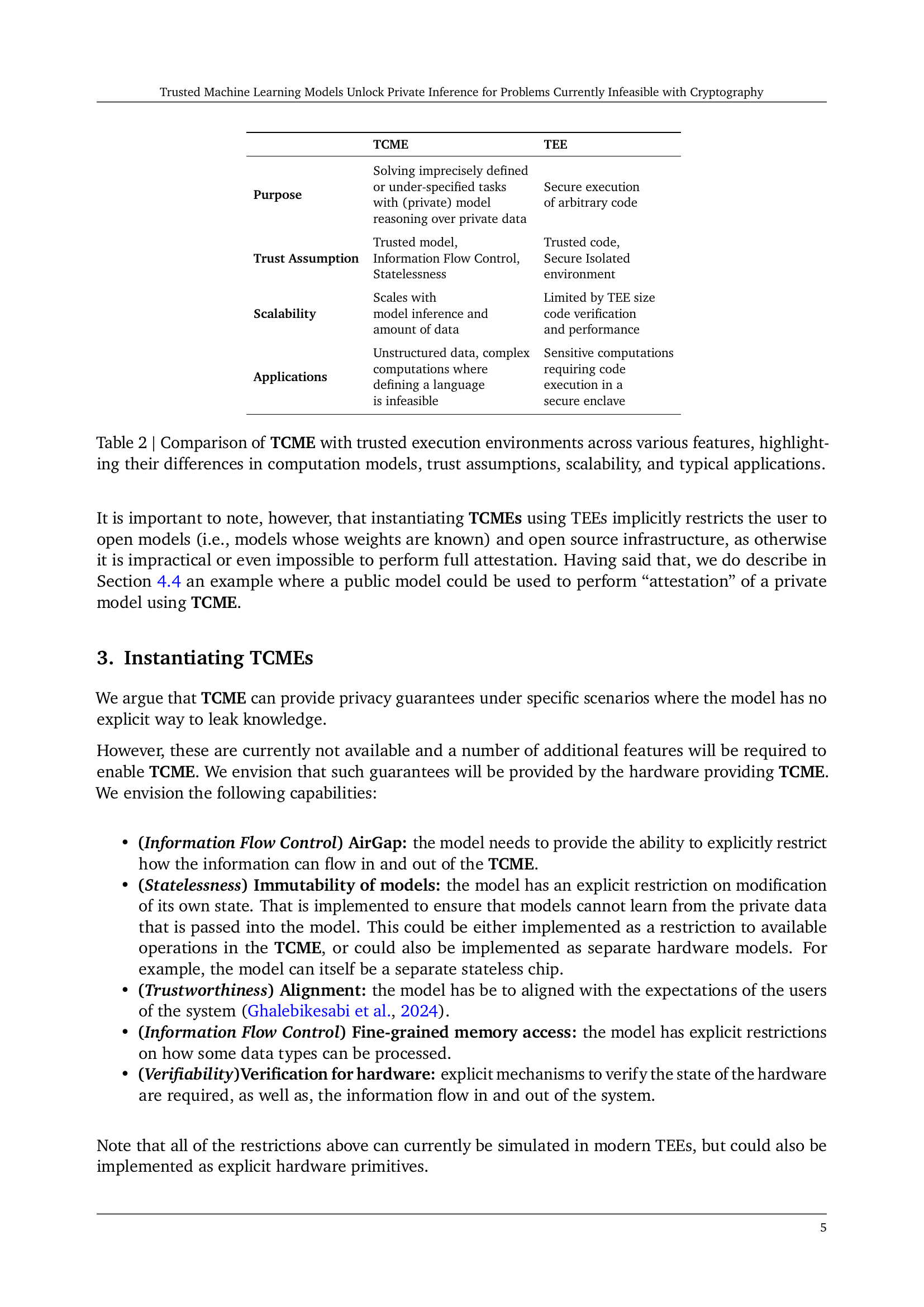

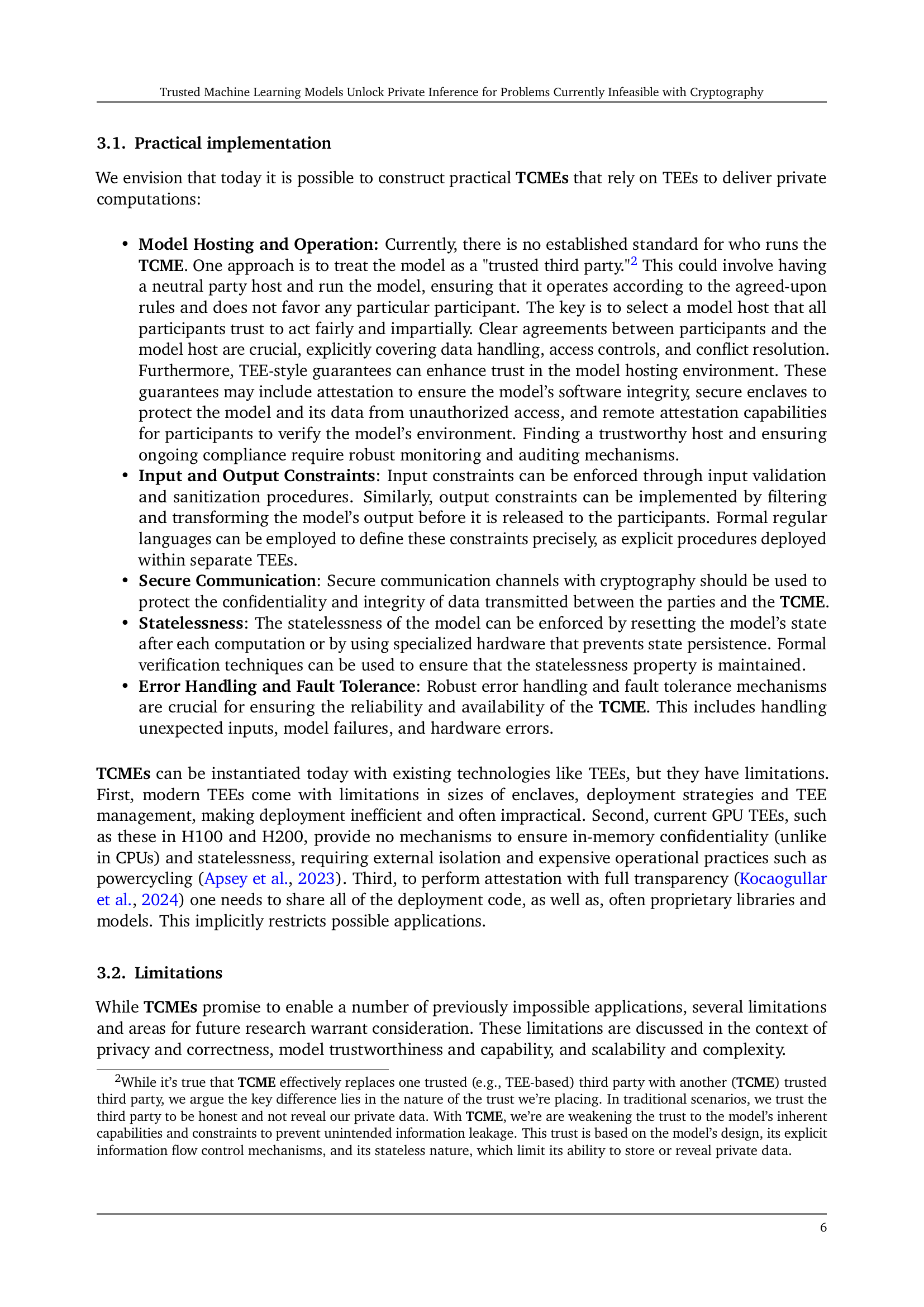

This paper introduces Trusted Capable Model Environments (TCMEs) as an alternative. TCMEs utilize capable machine learning models as trusted third parties to enable secure computation under input/output constraints and explicit information flow control. This approach addresses both the computational limitations and usability issues of traditional methods, enabling private inference for previously intractable applications. The authors describe the key properties of a TCME (statelessness, explicit information flow, and trustworthy model) and provide several compelling practical examples, including multi-agent non-competition, auditing for data breaches and property damage monitoring, showcasing the practicality and versatility of this new approach. The research also compares TCMEs with existing methods, highlighting their strengths and limitations, and pointing towards potential future research avenues.

Key Takeaways#

Why does it matter?#

This paper is important because it proposes a novel approach to secure computation that leverages the capabilities of machine learning models. This has the potential to unlock private inference for complex problems that are currently infeasible with traditional cryptographic methods. It opens new avenues for research in secure computation and privacy-preserving machine learning, particularly in addressing the limitations of existing cryptographic techniques in handling unstructured data and complex computations.

Visual Insights#

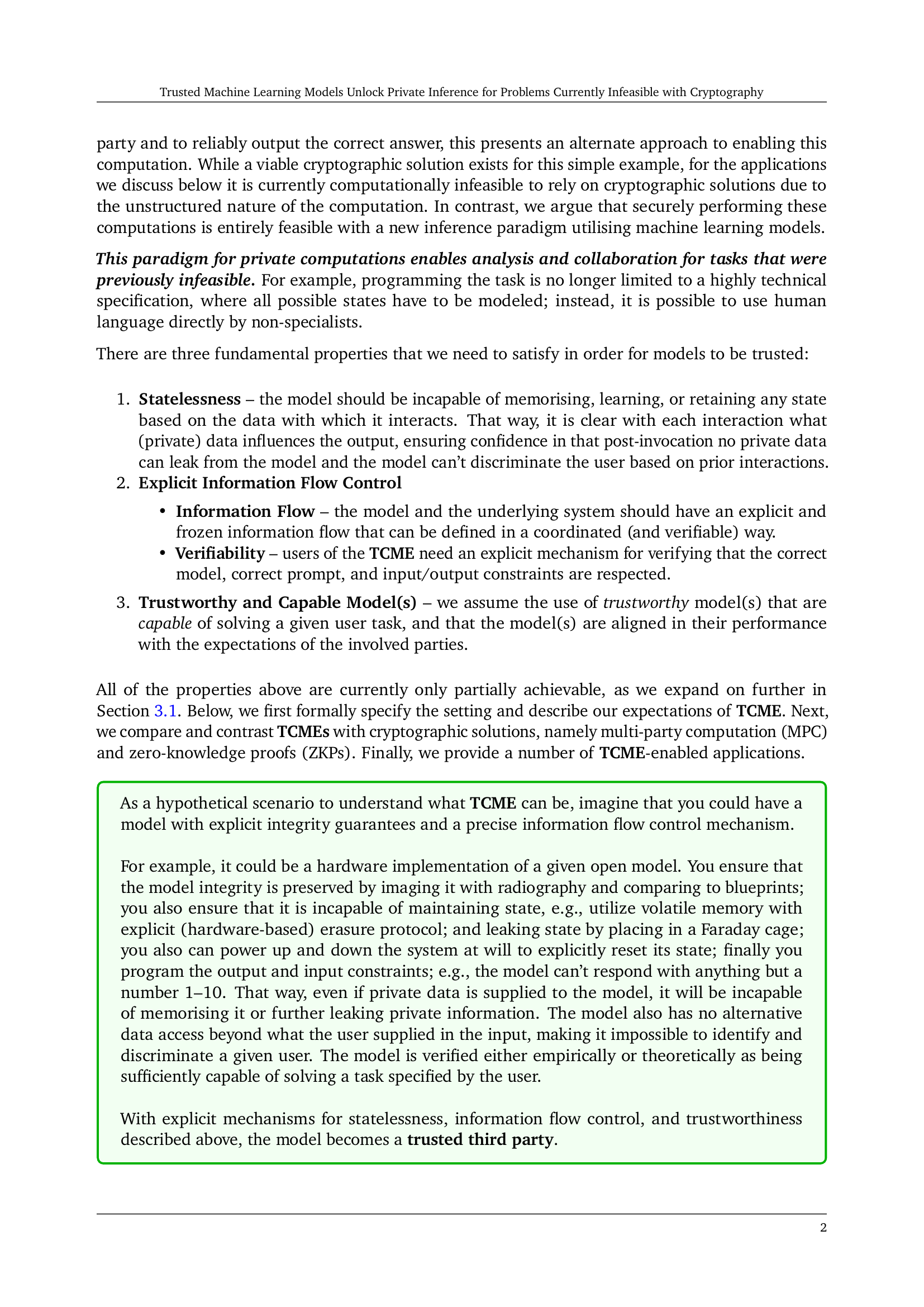

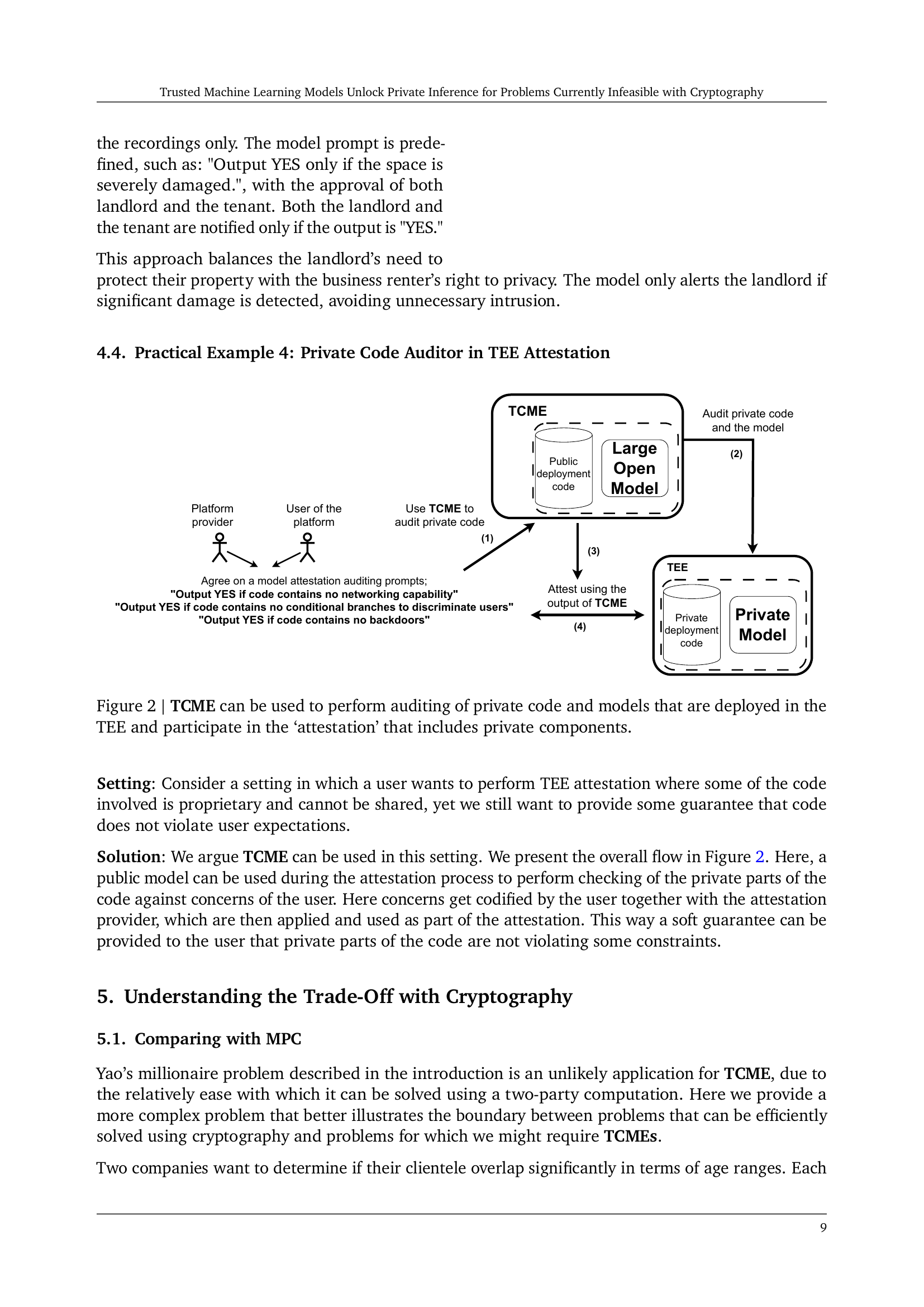

🔼 This figure illustrates a practical application of Trusted Capable Model Environments (TCMEs) in damage monitoring. A landlord wants to monitor their property for damage without infringing on renters’ privacy. A pre-agreed machine learning model within a TCME analyzes camera recordings. The model is constrained to only output ‘YES’ if significant damage is detected, thus balancing the landlord’s security needs with the renters’ privacy. The system includes input and output constraints to protect private information. The only information revealed is whether or not significant damage has occurred.

read the caption

Figure 1: Practical Example of TCME in Damage Monitoring: TCME can be used to monitor potential damage to business space while preserving privacy. The system, utilizing a pre-agreed model and prompt, analyzes camera recordings. It is restricted to output only 'YES' if significant damage is detected, ensuring minimal intrusion.

In-depth insights#

TCME: A New Paradigm#

TCMEs offer a novel approach to private inference, leveraging the capabilities of advanced machine learning models to circumvent the limitations of traditional cryptographic methods. Instead of relying on complex and computationally expensive cryptographic protocols, TCMEs utilize trained models as trusted third parties, executing computations under carefully defined input/output constraints and rigorous information flow controls. This paradigm shift enables secure computation for tasks previously considered infeasible with existing cryptographic techniques, particularly those involving unstructured data or complex, ill-defined problems. The key advantage lies in the simplicity and scalability, allowing for human-language-based task specifications rather than highly technical cryptographic protocols. However, this new paradigm introduces its own set of challenges, including ensuring model integrity, statelessness, and verifiability. Further research is needed to fully address these concerns and establish robust mechanisms for validating model behavior, ultimately enhancing the trustworthiness and reliability of TCMEs for broader applications.

Beyond Crypto Limits#

The concept of “Beyond Crypto Limits” in the context of secure computation suggests that traditional cryptographic methods are insufficient for handling complex, unstructured data and tasks. The paper proposes that advanced machine learning models, operating within carefully designed environments (TCMEs), offer a viable alternative. This approach leverages the model’s inherent capabilities to perform computations while maintaining privacy, sidestepping the computational limitations of existing cryptographic techniques. The focus is not on replacing cryptography, but augmenting its capabilities by shifting from purely mathematical proofs to heuristic, empirically verifiable models. This shift promises scalability for problems deemed previously infeasible, such as those involving unstructured data or imprecisely defined tasks where human language plays a critical role. However, the approach necessitates robust mechanisms for ensuring model integrity, statelessness, and controlled information flow within the TCME to maintain privacy guarantees. The key challenge lies in verifying the trustworthiness and capabilities of the machine learning model itself, which requires further research and development.

Model Trust & Privacy#

The core of the research revolves around establishing trustworthy and private machine learning models for computations previously infeasible with traditional cryptographic methods. This involves creating a novel paradigm called Trusted Capable Model Environments (TCMEs). The central idea is to leverage the capabilities of advanced machine learning models to act as trusted third parties, thereby enabling secure computations without requiring complex cryptographic protocols. Trust in these models is paramount; ensuring statelessness, explicit information flow control, and verifiable model integrity are crucial aspects. Privacy is maintained by carefully defining input/output constraints and preventing unauthorized access to the model’s internal state. The inherent trade-off between the efficiency and scalability of TCMEs versus classical cryptographic approaches is carefully considered, highlighting TCMEs’ strengths in addressing complex, unstructured problems. The work also explores the practical limitations of implementing TCMEs, including the need for reliable hardware and software to guarantee model integrity and statelessness. Future research directions focus on strengthening the formal guarantees of privacy and correctness, improving model trustworthiness, and enhancing scalability to handle more complex scenarios.

Practical Applications#

The concept of using machine learning models as trusted third parties for secure computation opens numerous practical avenues. Applications previously infeasible with traditional cryptography, due to complexity or data structure, become attainable. Examples cited include solving unstructured problems such as multi-agent non-competition in research collaborations, where models help ensure fairness and prevent conflicts without revealing sensitive details. Another example highlights auditing for confidentiality violations, where models assess data handling practices without accessing sensitive business secrets. The ability of models to handle unstructured data and nuanced scenarios is a significant advantage over traditional cryptographic approaches which often require rigid data structures. Furthermore, applications like damage detection in a business setting showcase the potential for TCMEs (Trusted Capable Model Environments) to monitor sensitive information without continuous monitoring, providing a balance between privacy and security. However, practical implementation currently relies on TEEs (Trusted Execution Environments), which poses size and deployment challenges. Addressing issues like model trustworthiness and scalability are key to broader adoption of this novel approach to private inference.

Future Research#

Future research should prioritize addressing the limitations of Trusted Capable Model Environments (TCMEs). This includes developing more rigorous methods for verifying model trustworthiness and capability, moving beyond heuristic assumptions to more formal guarantees of correctness and privacy. A key area is exploring mechanisms for robustly handling potential side-channel attacks that could compromise the privacy of data within TCMEs. Research should also focus on improving the scalability of TCMEs to handle more complex tasks and larger datasets, as well as exploring efficient methods for coordinating interactions among multiple parties. Finally, further investigation into the trade-offs between TCMEs and existing cryptographic approaches is needed to better understand the optimal scenarios for applying each technology.

More visual insights#

More on figures

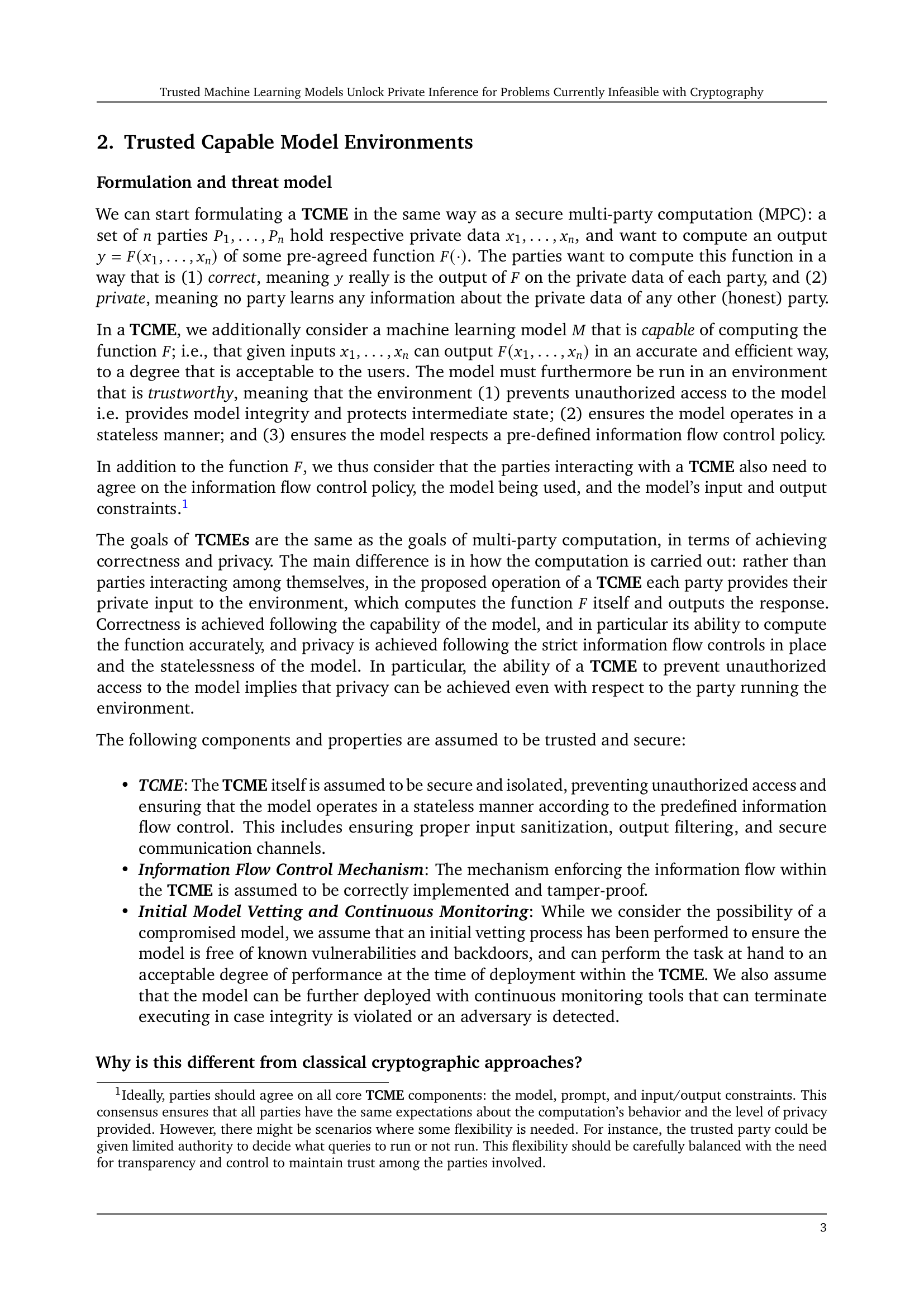

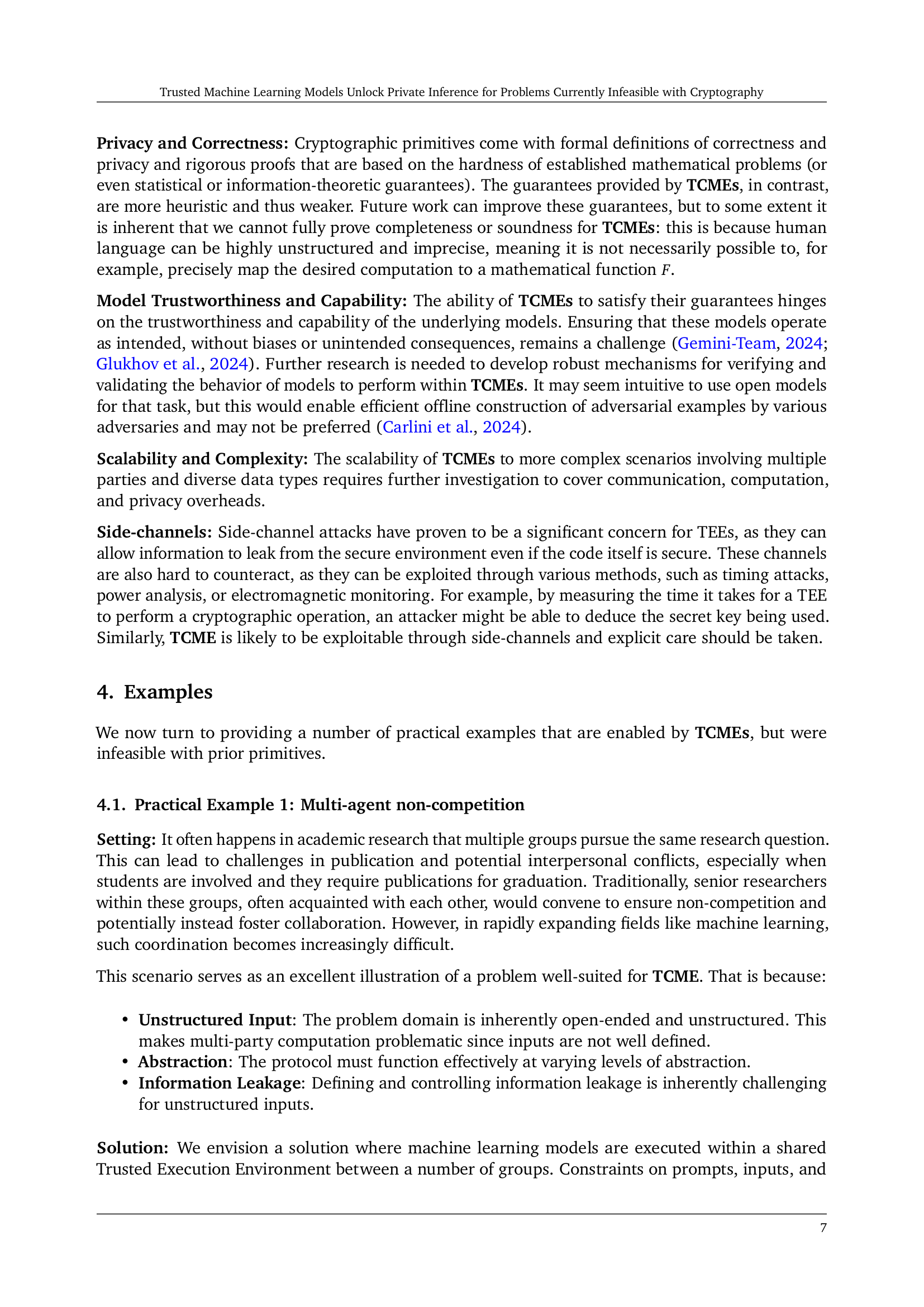

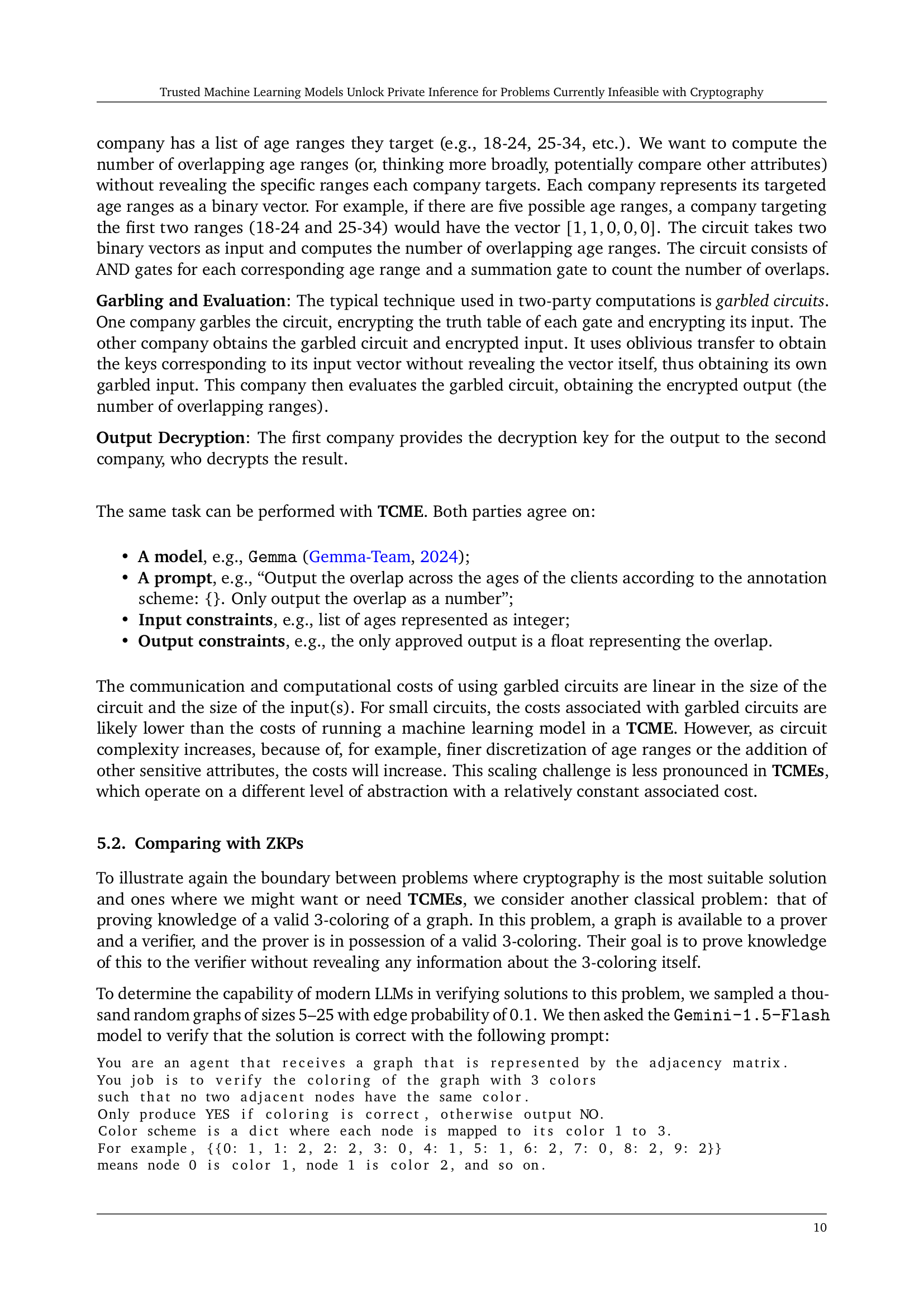

🔼 Figure 2 illustrates a system where a Trusted Capable Model Environment (TCME) is used to audit private code and models residing within a Trusted Execution Environment (TEE). The process involves an agreement between the user and an attestation provider on specific auditing prompts. These prompts are then used by the TCME, which leverages a large, public model to analyze the private code. The TCME provides an output indicating whether the code meets the specified criteria. This process allows for verification of private code components without compromising the confidentiality of the proprietary code.

read the caption

Figure 2: TCME can be used to perform auditing of private code and models that are deployed in the TEE and participate in the ‘attestation’ that includes private components.

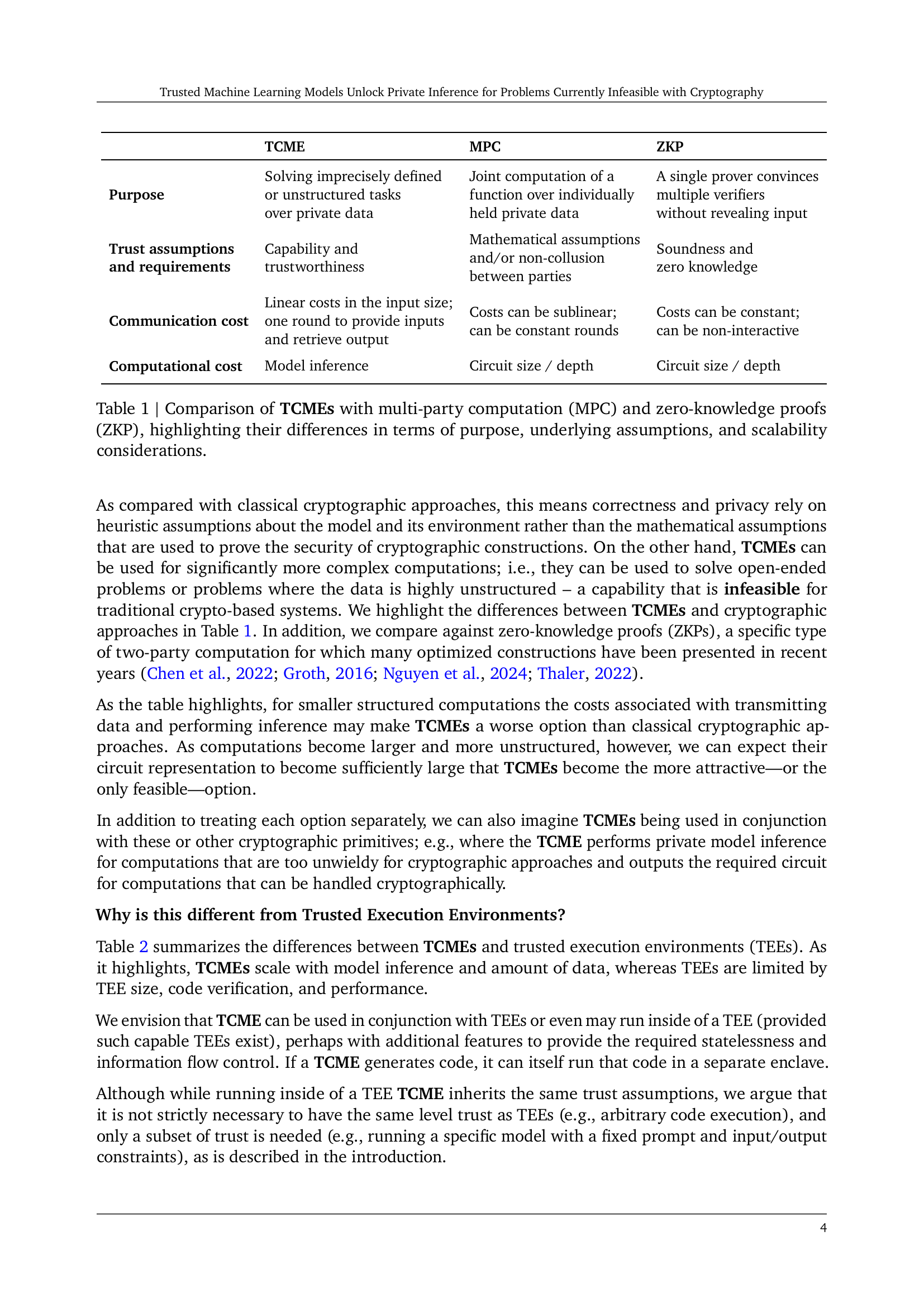

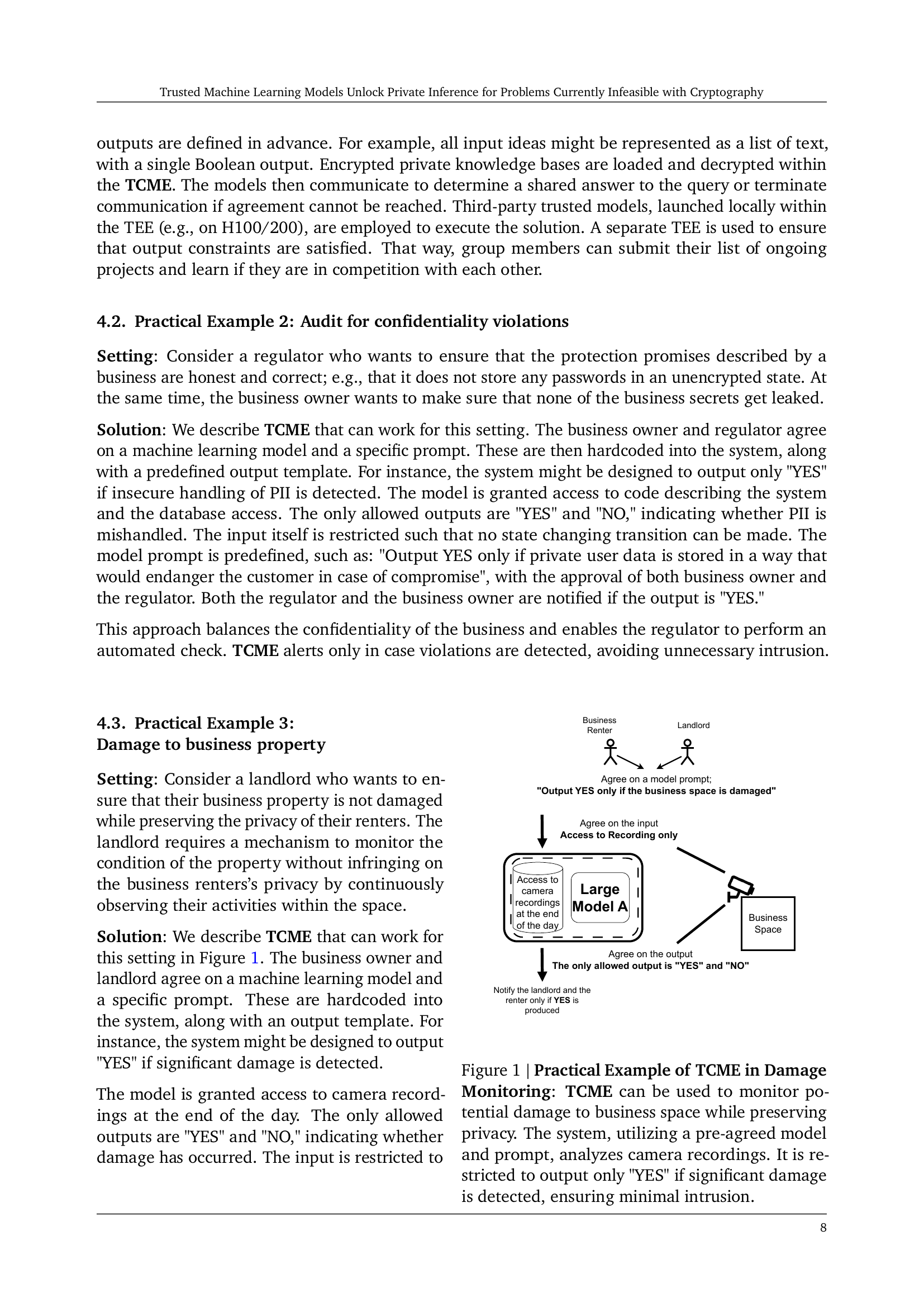

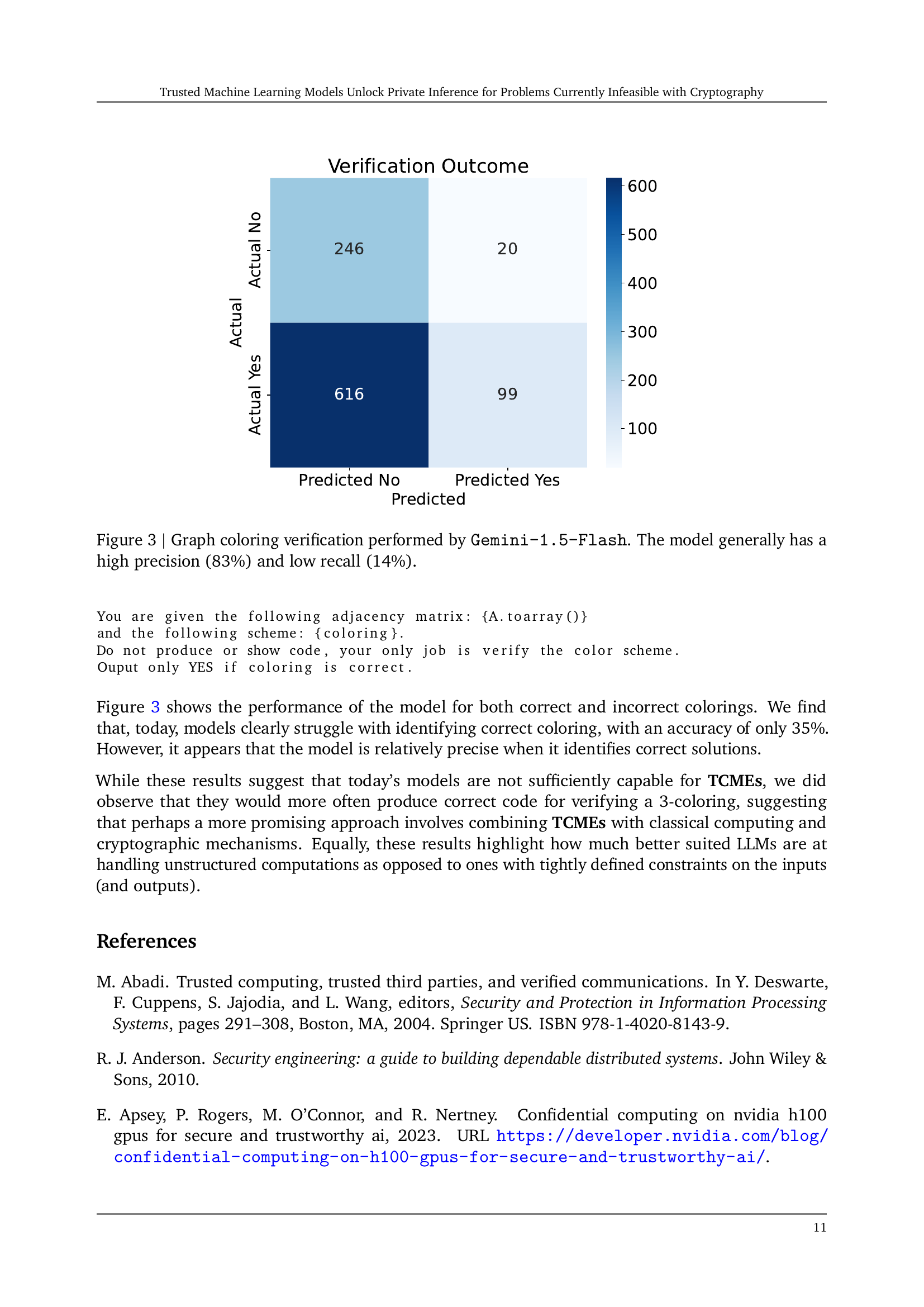

🔼 The figure shows the performance of the Gemini-1.5-Flash large language model (LLM) in verifying graph 3-colorings. The model was given an adjacency matrix representing a graph and a proposed 3-coloring. The model then determined whether the coloring was valid (meaning no two adjacent nodes shared the same color). The confusion matrix shows that while the model had high precision (83%, meaning that when it predicted a coloring was correct, it was usually correct), it had low recall (14%, meaning that it only correctly identified a small percentage of the actually correct colorings). This indicates that the model is better at identifying when a coloring is incorrect than identifying when a coloring is correct.

read the caption

Figure 3: Graph coloring verification performed by Gemini-1.5-Flash. The model generally has a high precision (83%) and low recall (14%).

Full paper#