TL;DR#

Creating interactive 2D cartoon characters is time-consuming and requires expertise. Existing methods are often inefficient and lack flexibility. This paper introduces Textoon, a novel framework that addresses these limitations.

Textoon leverages cutting-edge language and vision models to generate Live2D characters directly from text descriptions. It employs a pipeline including accurate text parsing to extract detailed features from user input, controllable appearance generation, re-editing tools, and component completion for seamless character creation. ARKit’s capabilities are integrated for enhanced animation and accuracy. The result is a significant reduction in time and effort for 2D character production, improving both accessibility and efficiency.

Key Takeaways#

Why does it matter?#

This paper is important because it presents Textoon, the first method to generate diverse and interactive 2D cartoon characters from text descriptions within a minute. This significantly reduces the time and effort required for creating such characters, opening up new avenues for researchers in digital character creation and animation. It also demonstrates the effective combination of cutting-edge language and vision models for creative content generation, influencing other areas of AI research.

Visual Insights#

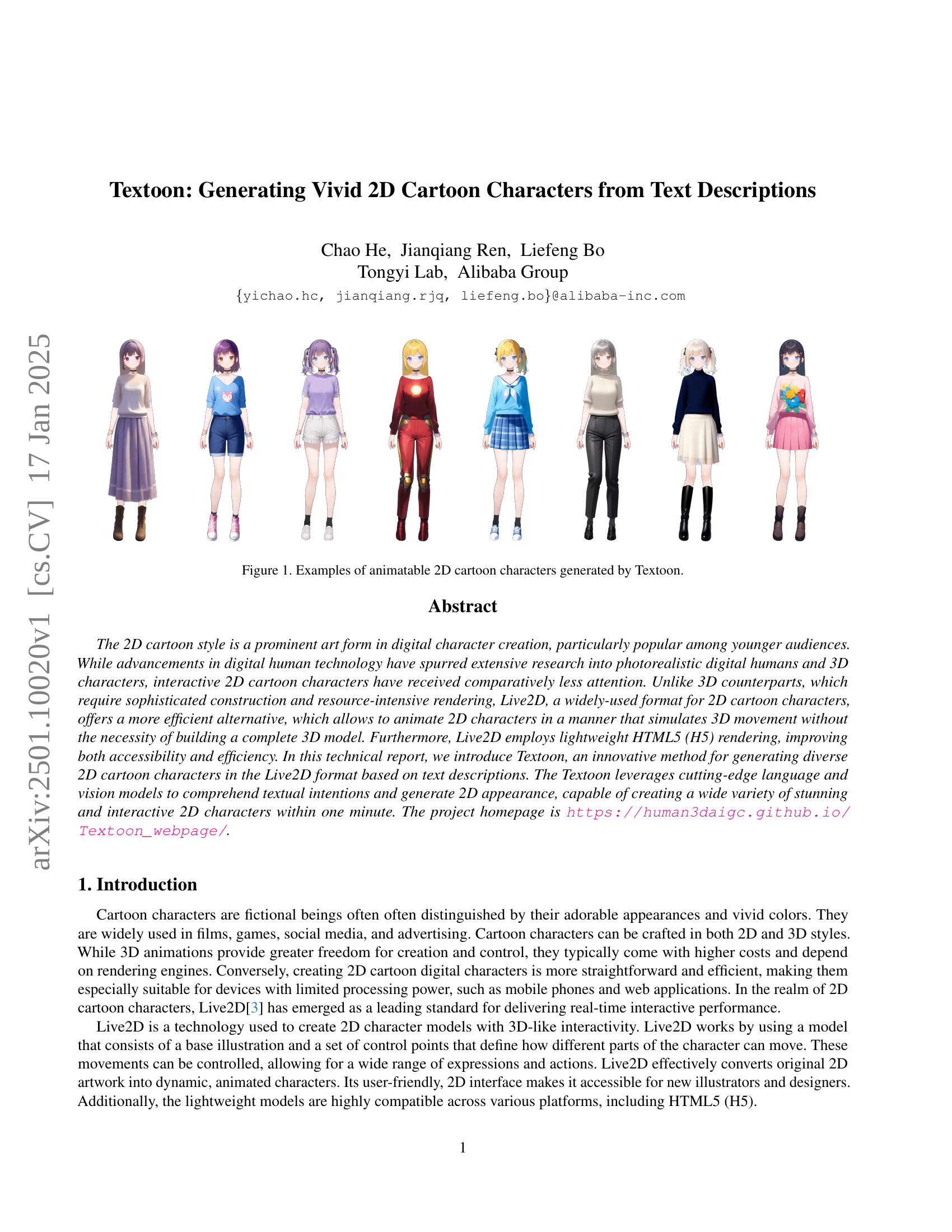

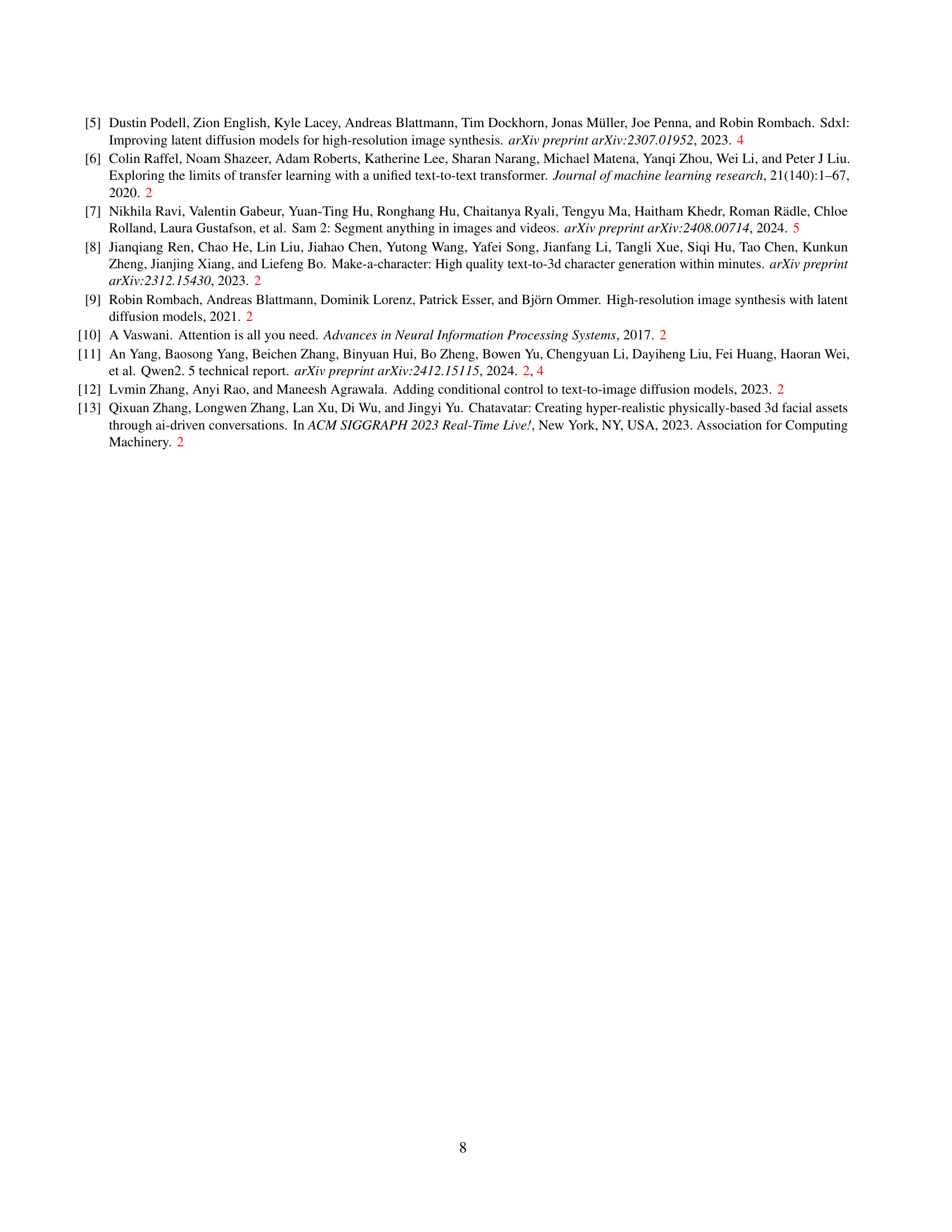

🔼 This figure displays a variety of 2D cartoon characters created using the Textoon system. The characters showcase the diverse styles and animation capabilities achievable with the model, demonstrating variations in hair, clothing, expressions and overall appearance. Each character is an example of the output generated by the Textoon method, which is capable of producing a wide range of customizable 2D cartoon characters from text descriptions.

read the caption

Figure 1: Examples of animatable 2D cartoon characters generated by Textoon.

In-depth insights#

Live2D Text-to-Avatar#

A hypothetical ‘Live2D Text-to-Avatar’ system presents exciting possibilities. It leverages the efficiency and expressiveness of the Live2D format, allowing for the creation of dynamic 2D animated characters directly from text descriptions. This streamlines the character creation process, bypassing the labor-intensive manual steps involved in traditional Live2D modeling. The system would likely involve sophisticated natural language processing (NLP) to understand nuanced textual descriptions, and powerful generative models (likely diffusion models) to translate those descriptions into visual components, which are then assembled into a coherent Live2D model. Control over the level of detail and the various features (hair style, clothing, etc.) would be crucial, and an intuitive user interface for editing and fine-tuning the generated character is essential for broader adoption. Challenges might include ensuring high-fidelity output, accurately representing specific features reliably, and efficient handling of complex descriptions. The system’s success hinges on the accuracy of the text parsing and the quality of the generative models, necessitating extensive training data and innovative model architectures. Ultimately, a successful ‘Live2D Text-to-Avatar’ system would significantly lower the barrier to entry for creating high-quality animated 2D characters, democratizing the creation of engaging digital content.

Controllable Generation#

The concept of “Controllable Generation” in the context of generating 2D cartoon characters from text descriptions is crucial. It speaks to the ability of a system to not just generate characters, but to do so with a high degree of precision and customization based on user specifications. Accurate text parsing is the foundation; the system must correctly interpret nuanced details from user prompts, identifying features like hair style, clothing type, and color. The success hinges on the effective synthesis of these parsed elements into a comprehensive character template. This implies a sophisticated understanding of character anatomy and how different features interrelate. Advanced image generation models are then leveraged, likely employing techniques like diffusion models, to translate these templates into detailed visual representations. The level of control extends beyond initial generation. The framework likely incorporates features allowing users to refine the output. This could include tools for editing specific components or adjusting parameters to fine-tune the appearance. The system’s capability to manage this level of control, ensuring both creative freedom and predictable results, is a significant technological achievement and a key factor determining its usefulness to designers and illustrators.

Component Splitting#

The concept of “Component Splitting” in the context of generating Live2D characters is a crucial optimization strategy. By merging smaller, intricate layers within the Live2D model, the authors aim to reduce computational complexity and streamline the generation process. This simplification trades off some level of detailed movement expressiveness for improved efficiency and ease of generation. The trade-off appears acceptable, as the authors prioritize the generation of a wide variety of character designs within a minute. Larger elements are used to generate smaller-scale elements, which simplifies the process without significantly diminishing visual quality. This approach highlights a pragmatic balance between model fidelity and the speed and scalability necessary for efficient text-to-Live2D character generation. The success of this technique underscores the importance of efficient model design when dealing with real-time interactive applications like Live2D animation.

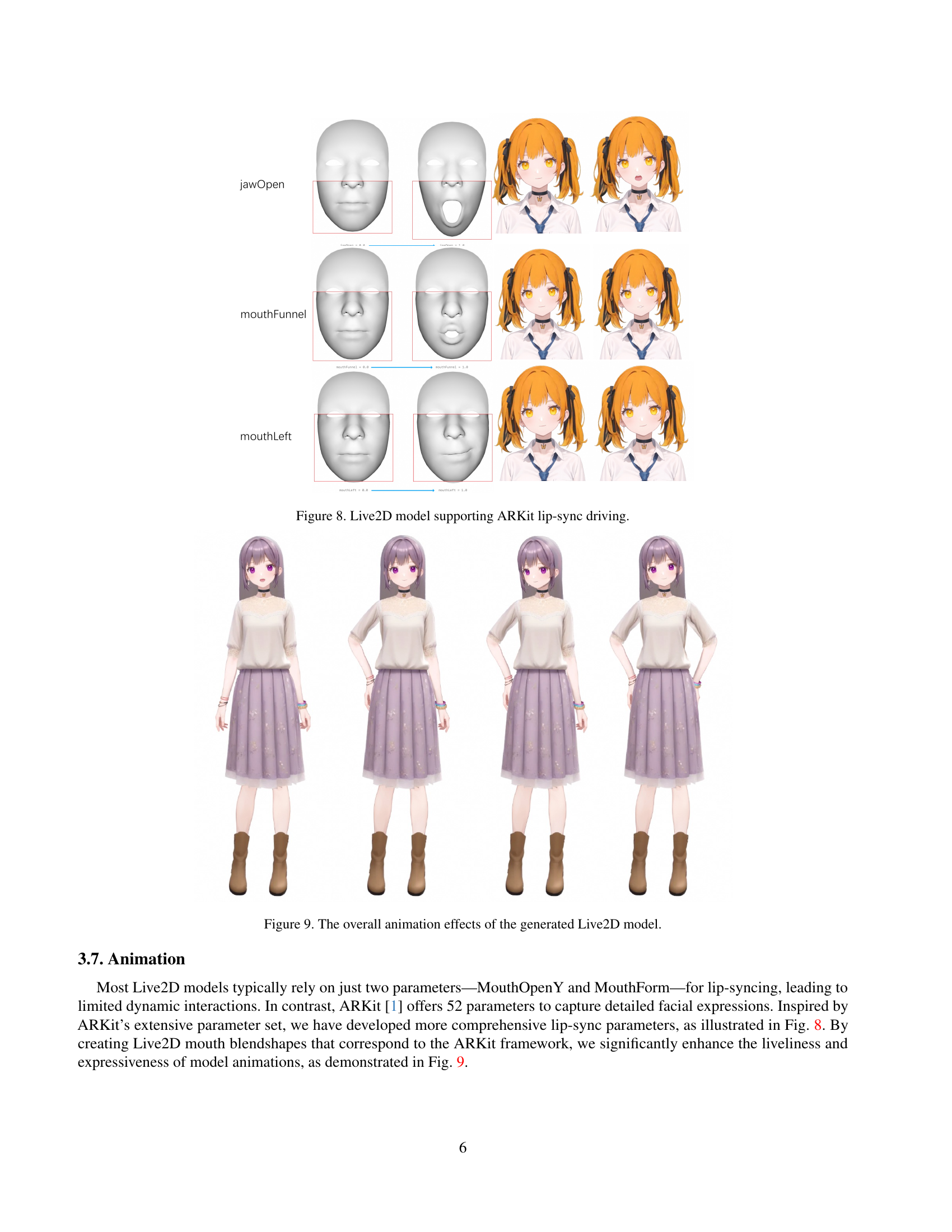

Animation Enhancements#

Animation enhancements in 2D cartoon character generation are crucial for achieving realistic and engaging visuals. The research paper likely details methods to improve the quality of animation beyond simple lip-sync. Improving the accuracy of mouth movements, for example, is key, as the existing methods using only two parameters (MouthOpenY and MouthForm) result in limited expressiveness. The integration of a more comprehensive facial animation system, like ARKit’s 52 parameters, likely leads to more nuanced and lifelike facial expressions. Addressing inconsistencies between the mouth and other facial features during animation, such as eye movements, is another area for improvement, possibly through advanced control techniques. The paper might explore different techniques for creating smooth, fluid animations. This could involve refining how the control points in the Live2D model are manipulated or introducing more sophisticated interpolation methods. Furthermore, enhancing the responsiveness of animations to the input text description allows for greater flexibility and control, offering more potential for creative applications. Ultimately, the improvements enhance the overall realism and interactivity of the generated 2D characters, making them more suitable for use in games, films, or virtual environments.

Future of Textoon#

The future of Textoon hinges on several key advancements. Improving the text parsing model is crucial; more nuanced descriptions should allow for finer control over generated character details, including intricate clothing patterns and highly individualized facial features. Expanding the stylistic range is another important area. Currently, Textoon’s capabilities are limited by the available Live2D model components; increasing this library of assets, perhaps through community contributions or automated generation, is essential to broadening the platform’s aesthetic possibilities. Enhanced animation capabilities are also vital; improved lip-sync and a wider array of dynamic movements would enhance realism and interactive potential. Furthermore, integrating advanced AI tools such as more sophisticated image editing and manipulation techniques would allow for more precise control over the generation process, reducing the need for manual adjustments. Finally, exploring the potential for 3D model generation from text input—building upon Textoon’s core strengths to create fully realized 3D avatars—presents a significant avenue for future development. These advancements, coupled with ongoing improvements to efficiency and usability, would solidify Textoon’s position as a leading-edge platform for 2D and potentially 3D character creation.

More visual insights#

More on figures

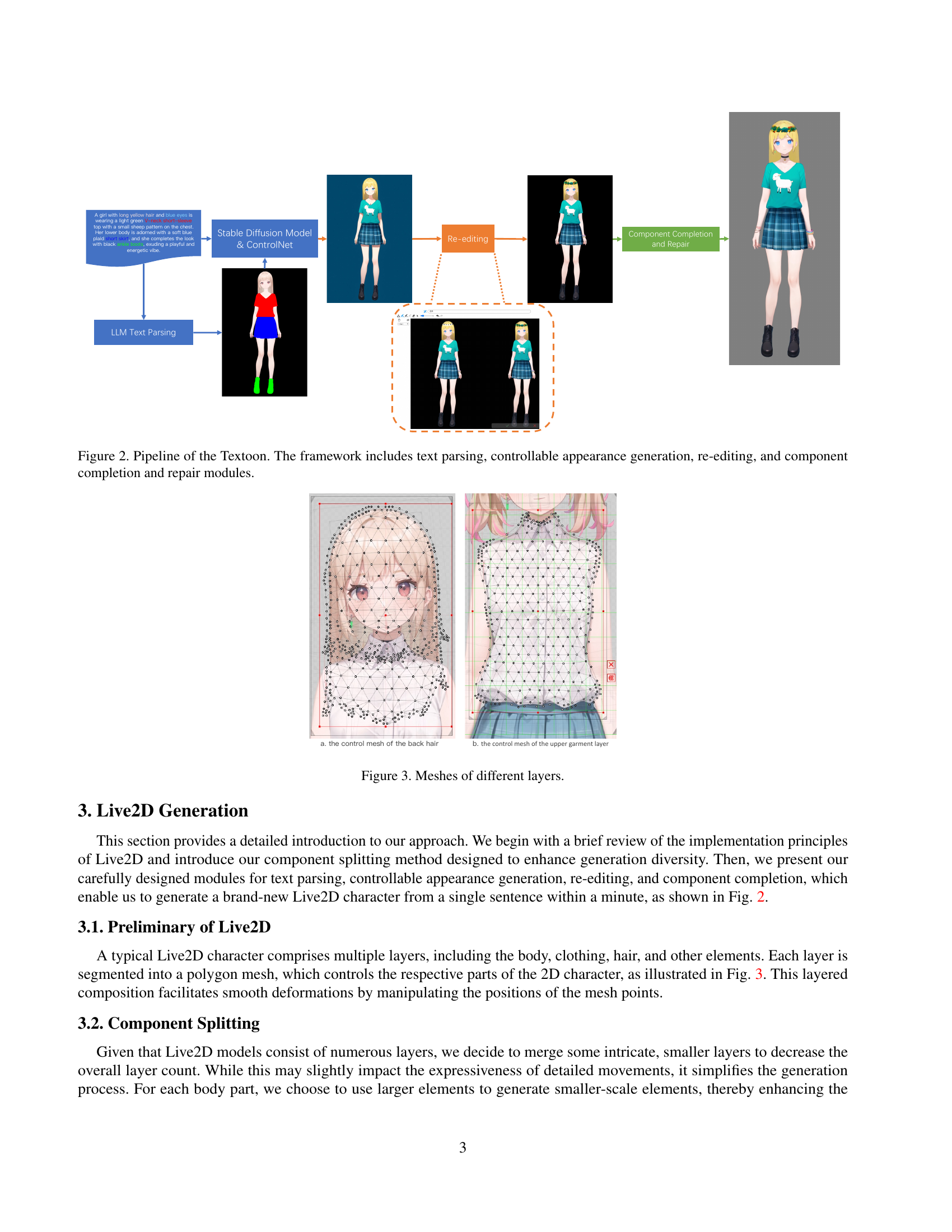

🔼 This figure illustrates the Textoon framework’s pipeline, which consists of four key modules: text parsing, controllable appearance generation, re-editing, and component completion and repair. The text parsing module processes user text descriptions to extract relevant features. The controllable appearance generation module utilizes these features to generate the initial character appearance. The re-editing module allows users to make adjustments to the generated character. Finally, the component completion and repair module ensures that all components of the character are properly generated and connected.

read the caption

Figure 2: Pipeline of the Textoon. The framework includes text parsing, controllable appearance generation, re-editing, and component completion and repair modules.

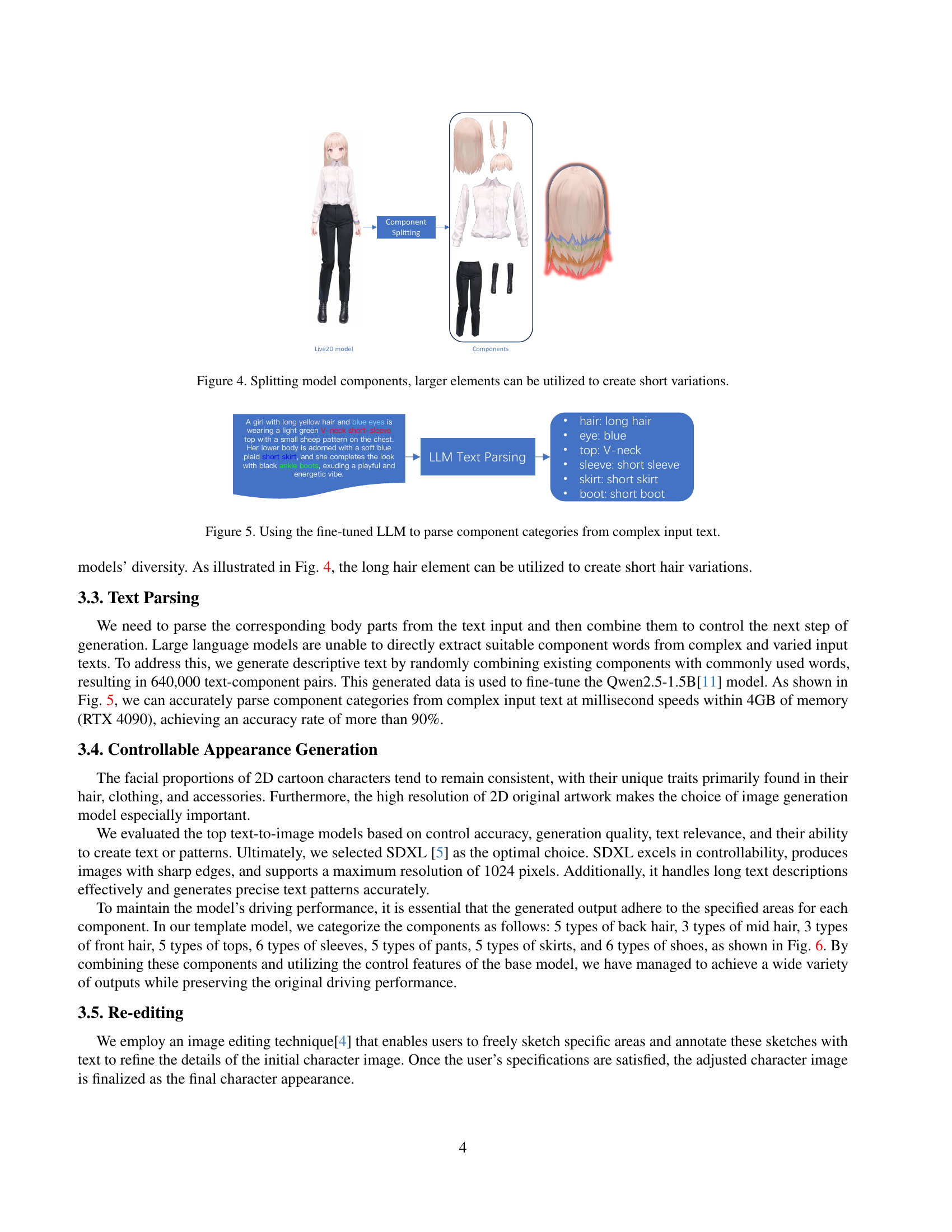

🔼 This figure shows the layered structure of a Live2D model, specifically illustrating the control meshes for the back hair and the upper garment layer. Each layer in Live2D characters is segmented into a polygon mesh; manipulating the mesh points allows for smooth deformations and animation. This layered structure facilitates smooth deformations by manipulating the positions of the mesh points. The image helps to explain the concept of component splitting within the Live2D generation process, where smaller layers are merged to simplify generation while potentially slightly impacting the expressiveness of detailed movements.

read the caption

Figure 3: Meshes of different layers.

🔼 This figure illustrates the concept of component splitting in the Live2D model generation process. By combining larger, simpler components, the system can efficiently create variations in hair length or other details. The image shows how breaking down complex elements into fewer, larger ones can simplify the creation of diverse character designs while maintaining a reasonable level of detail. This method streamlines the generation process and improves efficiency.

read the caption

Figure 4: Splitting model components, larger elements can be utilized to create short variations.

🔼 This figure demonstrates the effectiveness of a fine-tuned large language model (LLM) in parsing complex text descriptions to extract relevant component categories for generating 2D cartoon characters. The input is a detailed text description of a character, and the output shows how the LLM successfully identifies and extracts key features such as hair style, eye color, top type, sleeve type, skirt type, and boot type. This accurate parsing is crucial for the subsequent steps of controllable appearance generation in the Textoon framework.

read the caption

Figure 5: Using the fine-tuned LLM to parse component categories from complex input text.

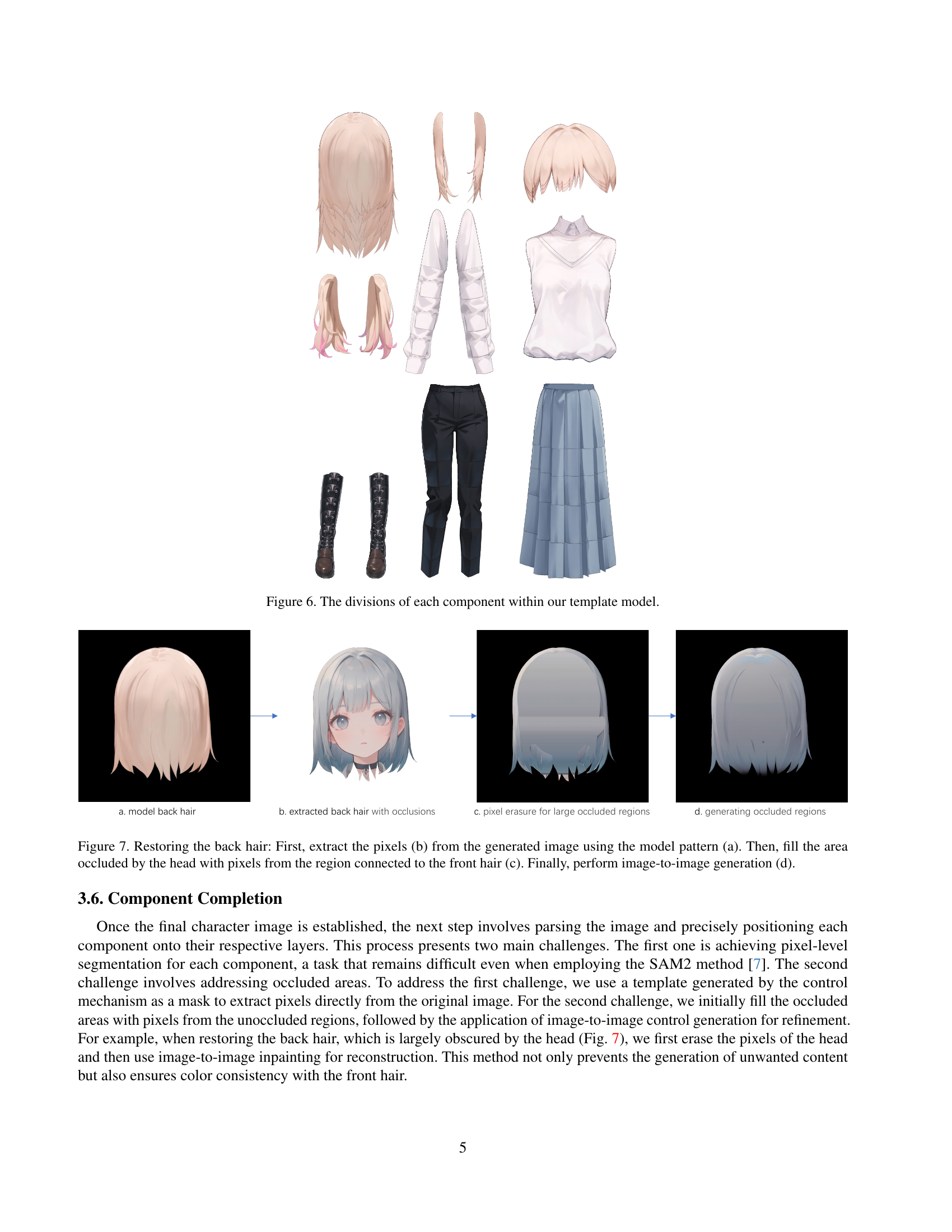

🔼 This figure shows a breakdown of the component parts used in the Textoon model for generating Live2D characters. It visually depicts how the model separates a character’s visual elements into distinct layers or components. These include the back hair, mid hair, front hair, top, sleeves, pants/skirt, and shoes. This modular design is crucial for the system’s ability to generate diverse and controllable character appearances. Each component is shown separately as a distinct part, highlighting how the model treats each element independently before assembling them into the final character image.

read the caption

Figure 6: The divisions of each component within our template model.

🔼 This figure illustrates the process of restoring the back hair in a generated Live2D character image. The process involves three steps. First, pixels corresponding to the back hair are extracted from the generated image using a pre-defined model pattern (a). Second, the area of the back hair occluded by the head is filled using pixels from the adjacent front hair region (c). Finally, an image-to-image generation technique is employed to refine the back hair area (d), ensuring color consistency with the front hair and a natural appearance.

read the caption

Figure 7: Restoring the back hair: First, extract the pixels (b) from the generated image using the model pattern (a). Then, fill the area occluded by the head with pixels from the region connected to the front hair (c). Finally, perform image-to-image generation (d).

🔼 This figure demonstrates the integration of ARKit’s facial tracking capabilities into a Live2D model for enhanced lip-sync animation. It shows several examples of mouth movements generated using the combined system, highlighting the increased realism and precision achieved by incorporating the more detailed facial expression data from ARKit’s 52 parameters, compared to the usual two parameters in most Live2D models. The improved accuracy in syncing mouth movements to speech results in more natural and expressive animations.

read the caption

Figure 8: Live2D model supporting ARKit lip-sync driving.

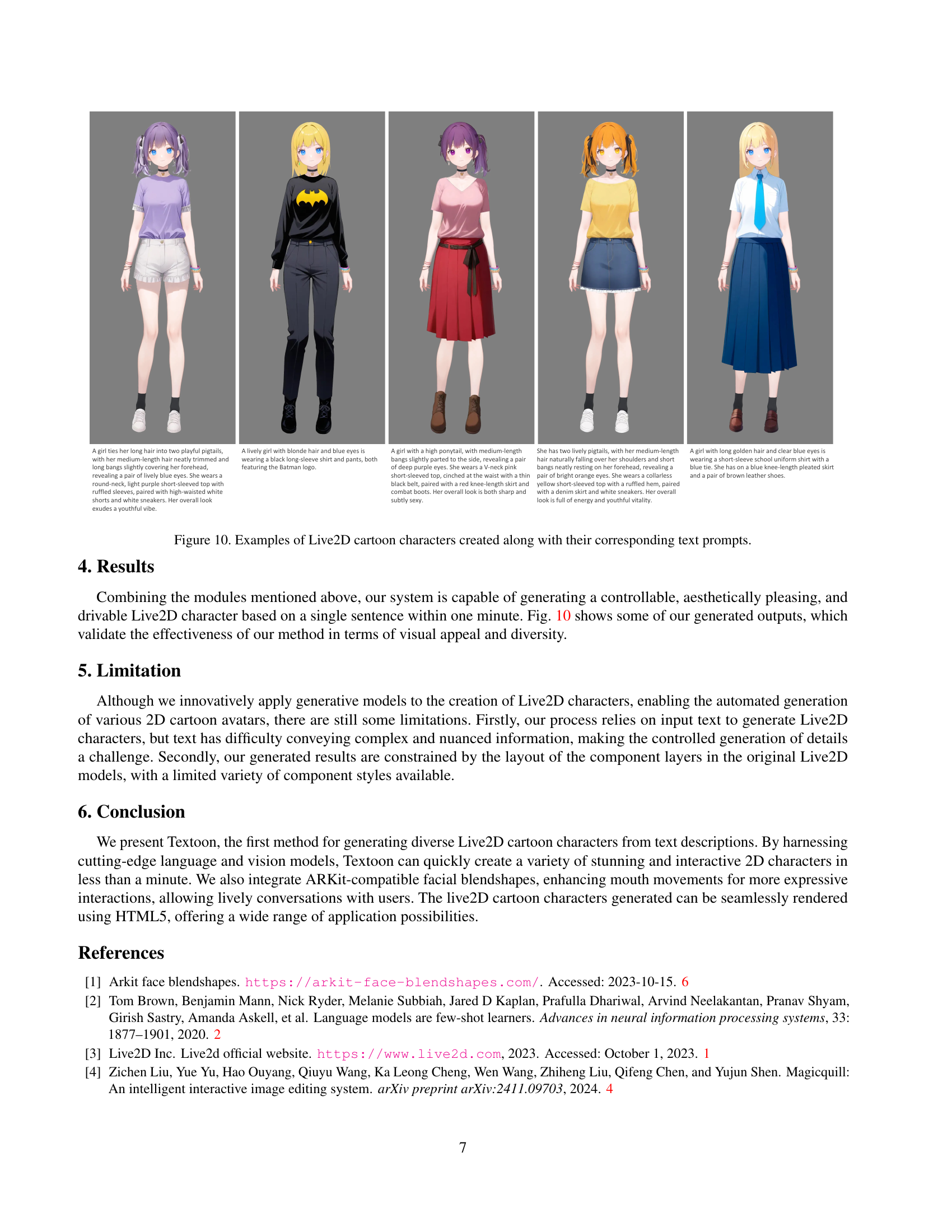

🔼 This figure showcases the animation capabilities of a Live2D model generated using the Textoon framework. It demonstrates the smooth and realistic movement achieved through the integration of ARKit’s face blendshapes, which significantly enhances the liveliness and expressiveness of the model’s animations, specifically focusing on lip-sync accuracy and facial expressions.

read the caption

Figure 9: The overall animation effects of the generated Live2D model.

🔼 Figure 10 showcases several examples of Live2D cartoon characters automatically generated by the Textoon system. Each character is accompanied by the text prompt used to create it, demonstrating the system’s ability to translate textual descriptions into diverse and visually appealing character designs. The figure highlights the variety of hairstyles, clothing styles, facial features, and overall aesthetics achievable using the model.

read the caption

Figure 10: Examples of Live2D cartoon characters created along with their corresponding text prompts.

Full paper#