TL;DR#

Large Language Models (LLMs) are increasingly integrated with real-world APIs, but evaluating their ability to handle complex function calls remains challenging. Existing benchmarks often simplify real-world complexity, failing to capture multi-step processes, constraints, and long contexts necessary for practical applications. This limitation hinders the development of robust and reliable LLMs for real-world use.

To address these issues, the researchers introduced ComplexFuncBench, a benchmark dataset containing 1000 complex function calls across five real-world domains. They also developed ComplexEval, an automatic evaluation framework that uses a multi-dimensional approach to assess function call correctness. Experiments on various LLMs revealed their significant weaknesses in handling complex function calls, particularly concerning parameter value errors. The work provides a valuable resource for advancing LLM capabilities and evaluating future models.

Key Takeaways#

Why does it matter?#

This paper is important because it addresses the critical need for benchmarking complex function calling in LLMs, which is crucial for advancing LLM capabilities. It introduces a novel benchmark, ComplexFuncBench, and evaluation framework, ComplexEval, filling a significant gap in the field. This work is relevant to current trends in LLM tool integration and will likely spur further research into improving LLMs’ ability to handle complex real-world tasks involving multiple API calls, constraint reasoning and long contexts. The findings offer valuable insights for future LLM development and evaluation.

Visual Insights#

🔼 Figure 1 presents a comparison between simple and complex function calling scenarios. (a) depicts a simple function call, where the user’s request is directly translated into a single API call. (b) shows a complex function call, which involves multiple steps, constraints specified by the user, inferring parameter values based on implicit user information, handling long parameter values, and processing long contexts. Different colors highlight these features in the illustration.

read the caption

Figure 1: (a) Simple Function Calling. (b) Complex Function Calling with multi-step, constraints, parameter value reasoning, long parameter values and long context. Different colors correspond to the corresponding features marked in the figure.

| Real API Response | Multi-Step | Constraints | Parameter Value Reasoning | Long Parameter Values | Long-Context | |

| API-Bench | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |

| ToolBench | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ |

| T-Eval | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ |

| BFCL | ✗ | ✓ | ✗ | ✗ | ✓ | ✓ |

| Tool Sandbox | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ |

| ComplexFuncBench | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

🔼 This table compares ComplexFuncBench with other existing function calling benchmarks. It highlights the key features of each benchmark, such as whether they support real API responses, multi-step function calls, constraints, parameter value reasoning, long parameter values, and long contexts. This comparison helps to demonstrate the unique capabilities and improvements of ComplexFuncBench over existing benchmarks.

read the caption

Table 1: ComplexFuncBench compare with other function calling benchmarks.

In-depth insights#

Func. Call Benchmark#

A Func. Call Benchmark in a research paper would critically evaluate the capabilities of large language models (LLMs) in interacting with external functions and APIs. Robust benchmarks must encompass a diverse range of function types, including simple single-step calls and more complex multi-step scenarios, potentially spanning various domains and requiring intricate parameter value reasoning. Real-world application is key; the benchmark should reflect practical use cases, including long-context processing and handling implicit user constraints. A comprehensive benchmark necessitates thorough evaluation metrics such as accuracy of function calls, correctness of parameter values, and the overall efficiency of the LLM’s strategy in solving complex tasks. The ultimate aim is to provide researchers with a reliable tool for comparing different LLMs’ functional capabilities and to identify areas for improvement. Data annotation is crucial, requiring expert human annotators to ensure high-quality and unambiguous data, while scalability in data creation poses a significant challenge. A well-designed benchmark is thus vital for driving advancements in LLM functionality and bridging the gap between research and practical application.

Multi-step Func. Calls#

The concept of “Multi-step Func. Calls” in the context of large language models (LLMs) signifies a significant advancement in their capabilities. It moves beyond the limitations of single-step function calls, enabling LLMs to execute complex tasks requiring multiple API calls or external tool interactions. This multi-step functionality mimics real-world problem-solving more effectively, where tasks often involve a sequence of actions. The challenge lies in effectively managing the flow of information between steps. LLMs must accurately interpret intermediate results, reason about parameter values based on previous outputs, and make informed decisions on which functions to call next. Successful implementation requires sophisticated planning and reasoning abilities, going beyond simple pattern matching or keyword recognition. Furthermore, the evaluation of such models becomes more complex, demanding benchmarks that assess not only the final result but also the correctness of intermediate steps and the overall efficiency of the process. The development of robust methods to evaluate multi-step function calls is crucial for advancing LLM capabilities in handling intricate, real-world applications.

LLM Func. Call Eval.#

Evaluating Large Language Model (LLM) function-calling capabilities presents significant challenges. Robust evaluation necessitates benchmarks that extend beyond simple, single-step calls to encompass the complexities of real-world scenarios. These scenarios include multi-step interactions, handling constraints, reasoning with implicit parameters, processing long parameter values, and managing extensive context lengths. Current methods often fall short, relying on simplistic rule-based matching or focusing solely on final output accuracy, ignoring intermediate steps and subtle variations in API responses. A comprehensive evaluation framework must incorporate multiple dimensions such as accuracy of parameter inference, correctness of API calls, and the completeness of the response. This demands careful design of both the benchmark tasks and the evaluation metrics, going beyond exact matching to account for different valid approaches and considering the quality and context of both the intermediate steps and the final output. Further research should prioritize the development of advanced evaluation techniques that align more closely with the complexities of practical LLM applications.

Model Response Eval#

In evaluating large language models (LLMs), assessing the quality of their generated responses is crucial. A dedicated ‘Model Response Eval’ section would delve into methods for evaluating both the completeness and correctness of LLM outputs. Completeness examines whether the response fully addresses all aspects of the user’s query, encompassing all requested information. Correctness, on the other hand, focuses on the accuracy of the information provided, verifying its alignment with ground truth or external knowledge sources, and potentially penalizing hallucinations or factual inaccuracies. This evaluation often goes beyond simple keyword matching and uses more sophisticated techniques such as multi-dimensional matching to consider semantic equivalence and different phrasing, incorporating both rule-based and LLM-based comparisons to ensure a comprehensive assessment. Furthermore, a robust ‘Model Response Eval’ would account for the model’s ability to self-correct, potentially penalizing responses that are factually incorrect, but which the model later corrects based on additional information or interactions. Ideally, this evaluation should also consider various response metrics like clarity, conciseness, and overall readability, offering a holistic view of the model’s response generation capabilities beyond mere factual correctness.

Future Research#

Future research directions stemming from this work on complex function calling in LLMs could focus on several key areas. Improving the robustness of LLMs to handle ambiguous or incomplete user inputs is crucial. This might involve developing new training techniques or incorporating more sophisticated natural language understanding modules. Furthermore, exploring alternative evaluation metrics beyond those presented is important to gain a more complete understanding of LLM capabilities. The current metrics might not fully capture subtle nuances of complex function calls. Investigating the scalability and efficiency of complex function calling is vital for real-world applications. The computational cost and latency associated with multi-step calls could hinder practical implementation. Finally, research should concentrate on enhancing the explainability of LLM decisions. Understanding why an LLM made a particular function call or chose specific parameters is crucial for building trust and improving the models’ reliability. This could involve developing novel techniques for visualizing or interpreting the reasoning processes within LLMs.

More visual insights#

More on figures

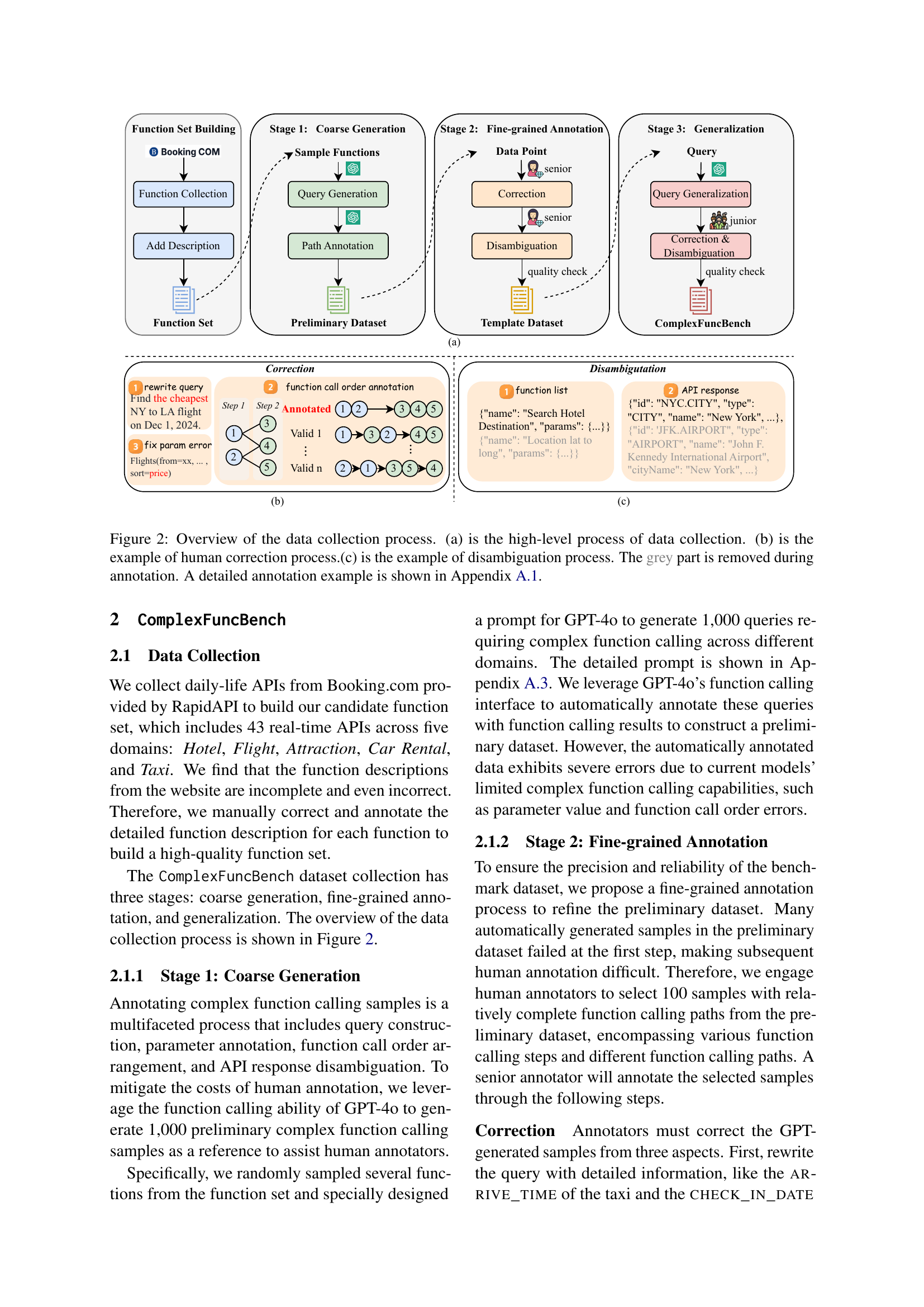

🔼 Figure 2 illustrates the multi-stage data collection process for ComplexFuncBench. Panel (a) provides a high-level overview of the three stages: coarse generation, fine-grained annotation, and generalization. Panel (b) shows an example of the human correction process, focusing on refining queries, adjusting the order of function calls, and correcting errors in parameter values. Panel (c) details the disambiguation process used to eliminate ambiguity from API responses. The greyed-out sections in (b) and (c) represent the parts removed during annotation to ensure a single valid function call path for each sample. A more detailed example of the annotation process is provided in Appendix A.1.

read the caption

Figure 2: Overview of the data collection process. (a) is the high-level process of data collection. (b) is the example of human correction process.(c) is the example of disambiguation process. The grey part is removed during annotation. A detailed annotation example is shown in Appendix A.1.

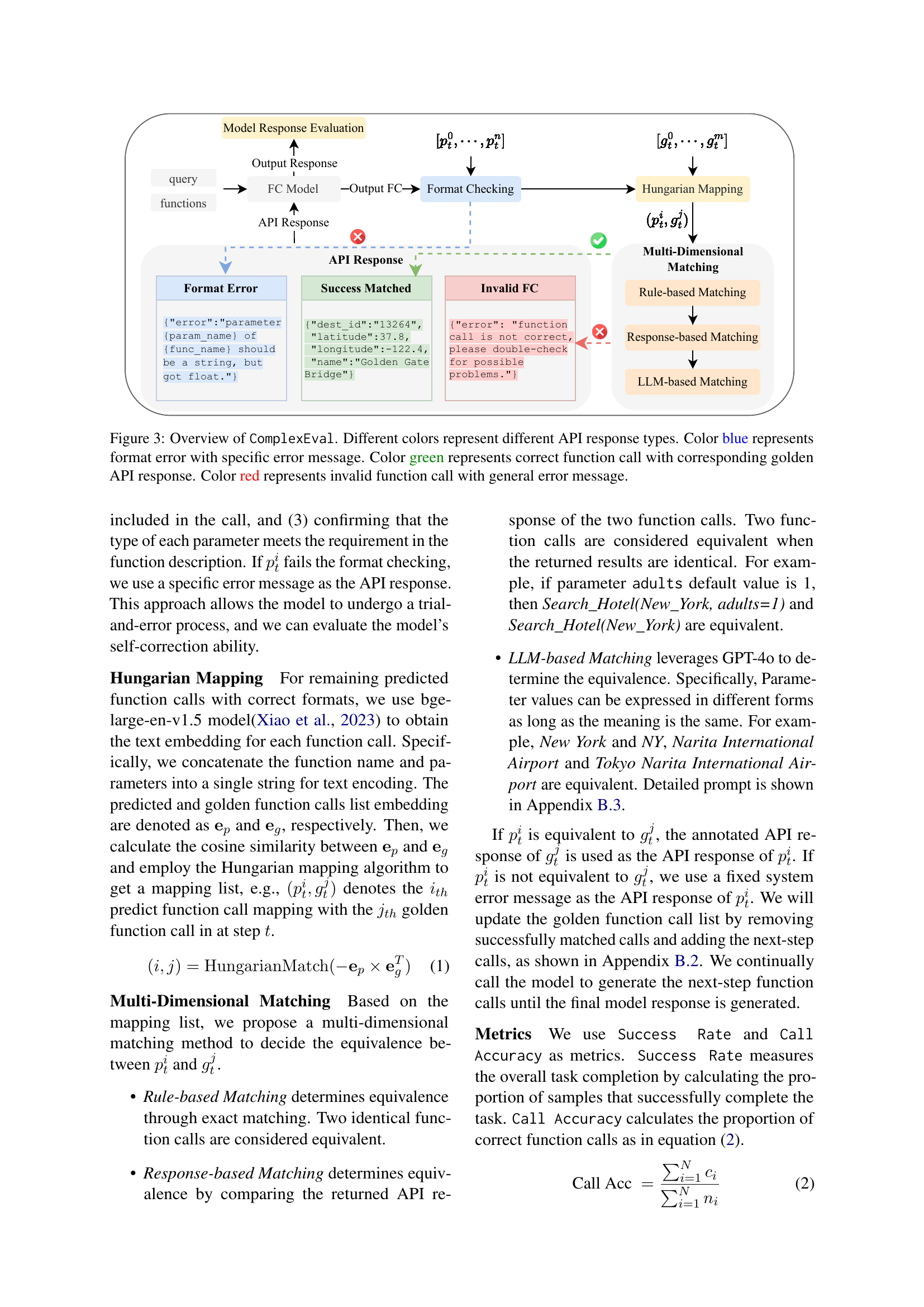

🔼 ComplexEval is an automatic evaluation framework for assessing the quality of complex function calls generated by LLMs. The figure provides a visual representation of the process. It begins with a user query and the available functions, then shows how the model generates function calls. These calls are checked for correct format and compared to the ‘golden’ (correct) function call path using a three-part matching system: rule-based (exact match), response-based (comparing API responses), and LLM-based (using an LLM to judge equivalence). Different colors indicate different outcomes. Blue indicates format errors, green indicates a successful match with the golden standard, and red shows an invalid function call.

read the caption

Figure 3: Overview of ComplexEval. Different colors represent different API response types. Color blue represents format error with specific error message. Color green represents correct function call with corresponding golden API response. Color red represents invalid function call with general error message.

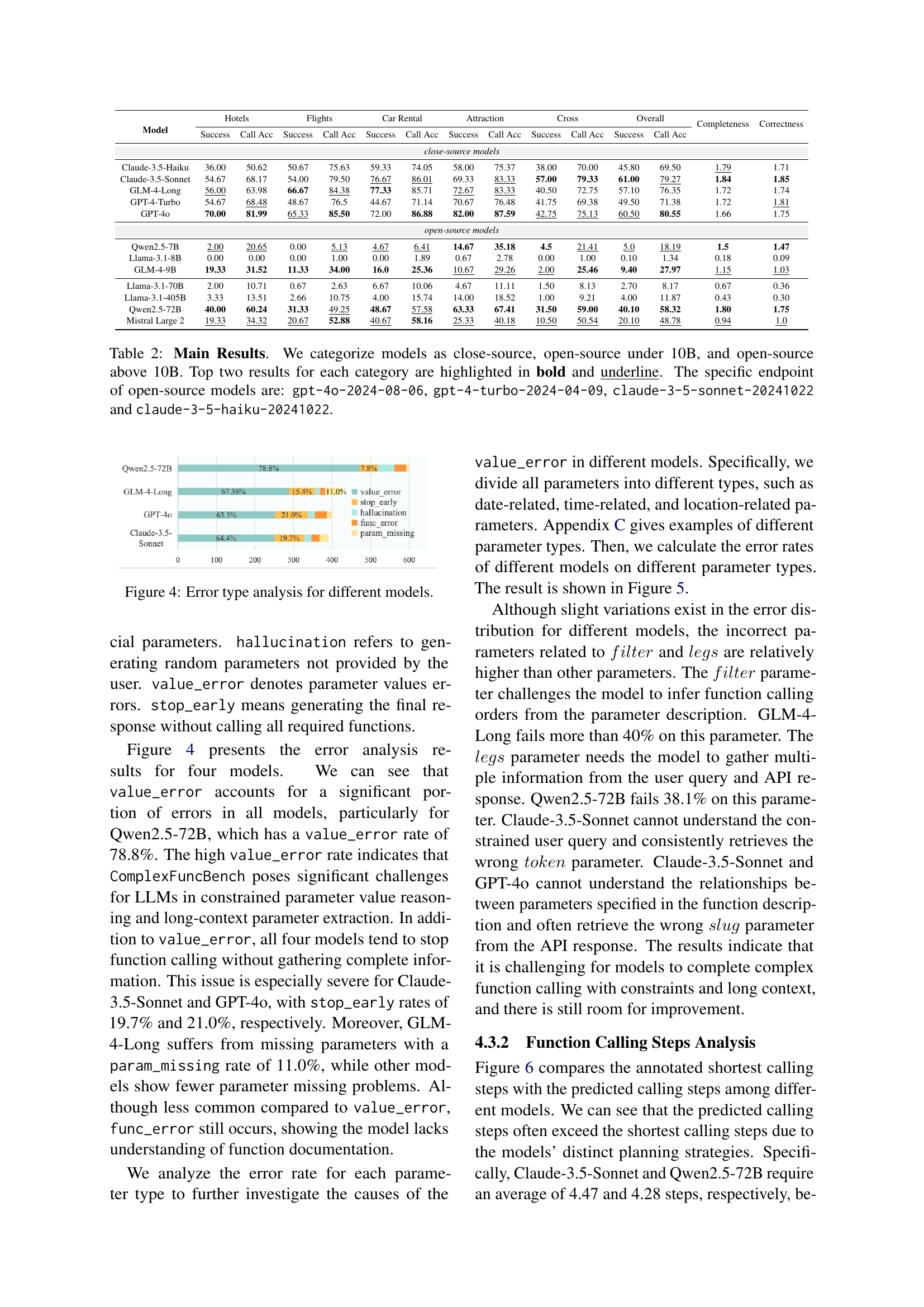

🔼 This bar chart displays the percentage breakdown of different error types for four selected Large Language Models (LLMs) during complex function calling. The error types include:

value_error(incorrect parameter values),stop_early(premature termination of function calls),hallucination(generating non-existent parameters),func_error(calling the wrong function), andparam_missing(missing parameters). The chart allows for a comparison of the relative frequency of each error type across the four models, highlighting their respective strengths and weaknesses in handling different aspects of the complex function calling task. The models are GPT-40, Claude-3.5-Sonnet, GLM-4-Long, and Qwen2.5-72B.read the caption

Figure 4: Error type analysis for different models.

🔼 This bar chart visualizes the error rates of different Large Language Models (LLMs) when dealing with various parameter types during complex function calls. Each parameter type (e.g., filter, legs, token, slug, date, location, etc.) is represented on the x-axis. The y-axis shows the error rate (percentage) for each parameter type. Multiple bars for each parameter type correspond to the performance of different LLMs (Claude-3.5-Sonnet, GPT-40, GLM-4-Long, and Qwen2.5-72B). The chart allows for a comparison of the models’ accuracy across different parameter types, revealing which parameters pose the most significant challenge for each model.

read the caption

Figure 5: Error rates for each parameter type of different models

🔼 This figure displays the distribution of function call steps in a complex function calling task. It shows the number of steps predicted by different models compared to the shortest (optimal) number of steps needed to accomplish the task. The distributions show that the models often require more steps than the shortest path, indicating areas where model efficiency can be improved. The chart facilitates a comparison of model performance in terms of planning and step efficiency during complex multi-step function calls.

read the caption

Figure 6: Function calling steps distribution.

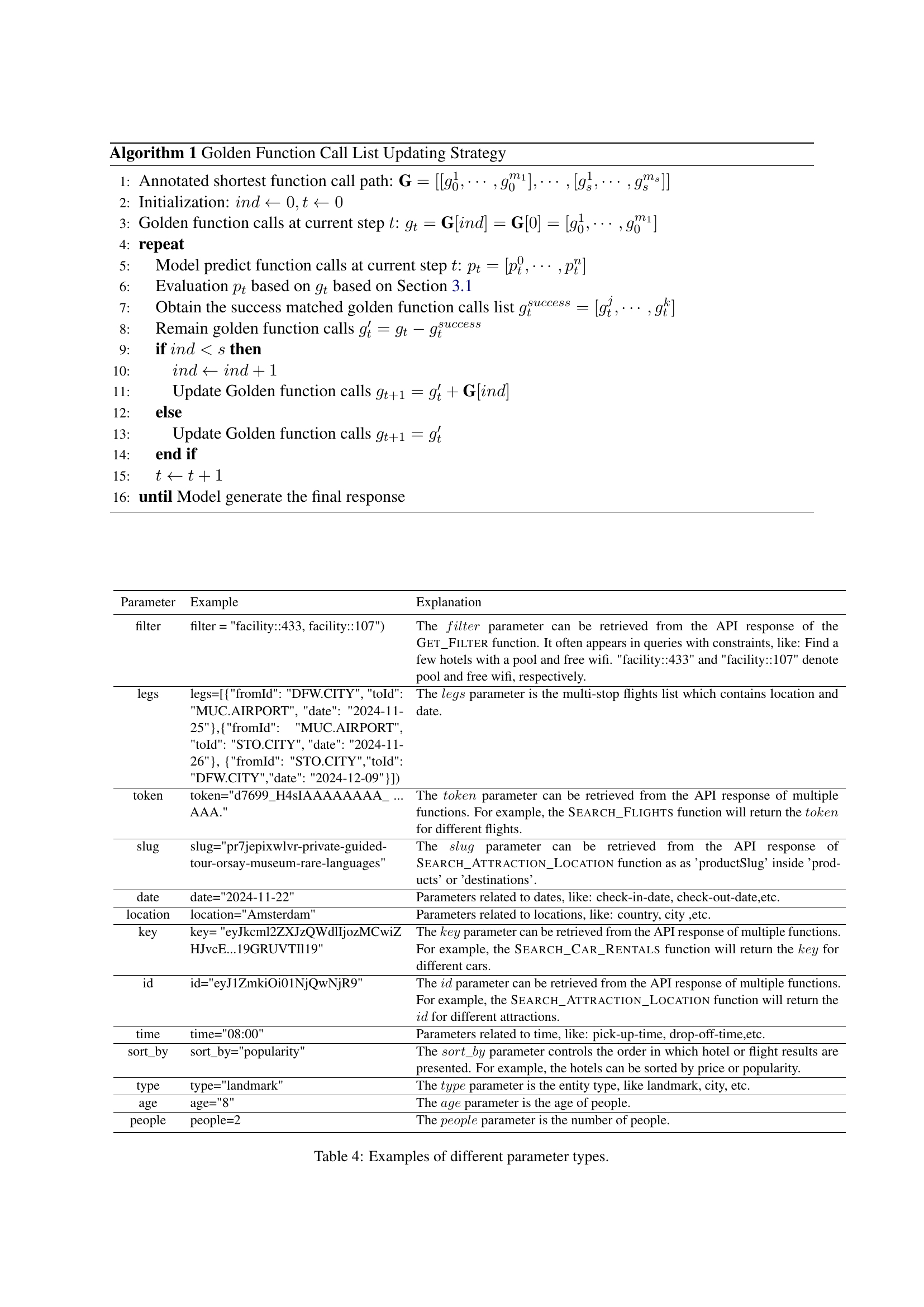

🔼 This figure illustrates the process of updating the golden function call list during the evaluation of complex function calling. The left side shows the annotated shortest function call path, serving as the ground truth, consisting of three steps. The right side dynamically updates this list based on model predictions. As the model generates function calls, the list is refined step-by-step, adding successful calls and discarding unsuccessful ones, reflecting a progressive refinement of the solution toward the correct path.

read the caption

Figure 7: An example for golden function call updating. Path on the left is the annotated shortest function call path with three steps.

🔼 This figure shows the prompt used to instruct GPT-4 to generate queries for the ComplexFuncBench dataset. The prompt emphasizes the creation of diverse and realistic queries involving multiple API calls and complex constraints. It provides a template and guidelines for constructing queries, specifying the required format and the need to avoid ambiguous or unrealistic parameters. The instructions highlight the importance of clear, detailed queries that can be solved using a series of API calls.

read the caption

Figure 8: Prompt for Query Generation.

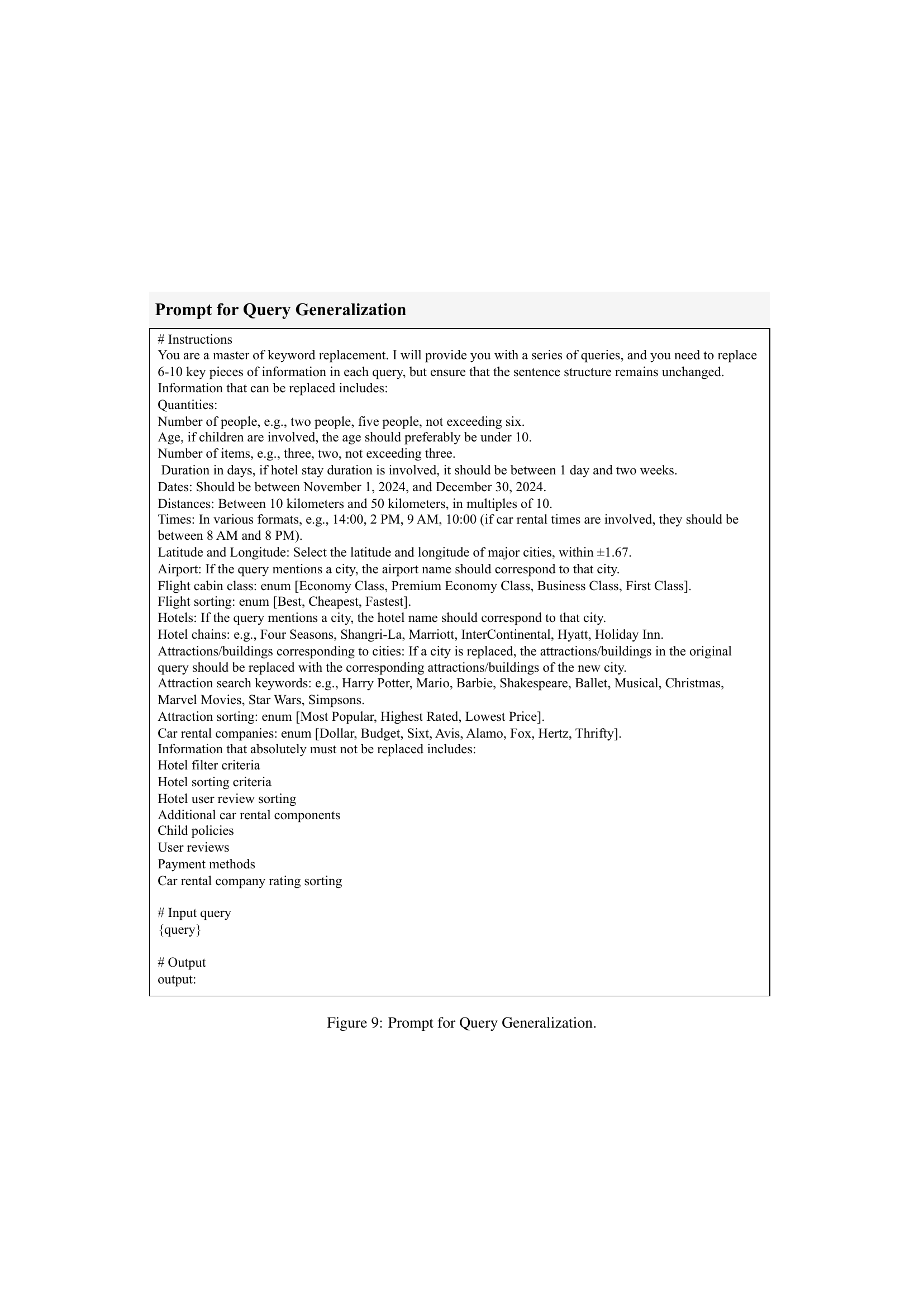

🔼 This prompt instructs a large language model (LLM) to generate variations of a given query by replacing specific pieces of information while maintaining the original sentence structure. The goal is to create a diverse set of queries for a dataset used to evaluate complex function-calling capabilities in LLMs. The prompt specifies which types of information can be modified (e.g., quantities, dates, locations, times, etc.) and provides concrete examples to guide the LLM. It emphasizes the need to keep the essential structure of the query intact while introducing varied data.

read the caption

Figure 9: Prompt for Query Generalization.

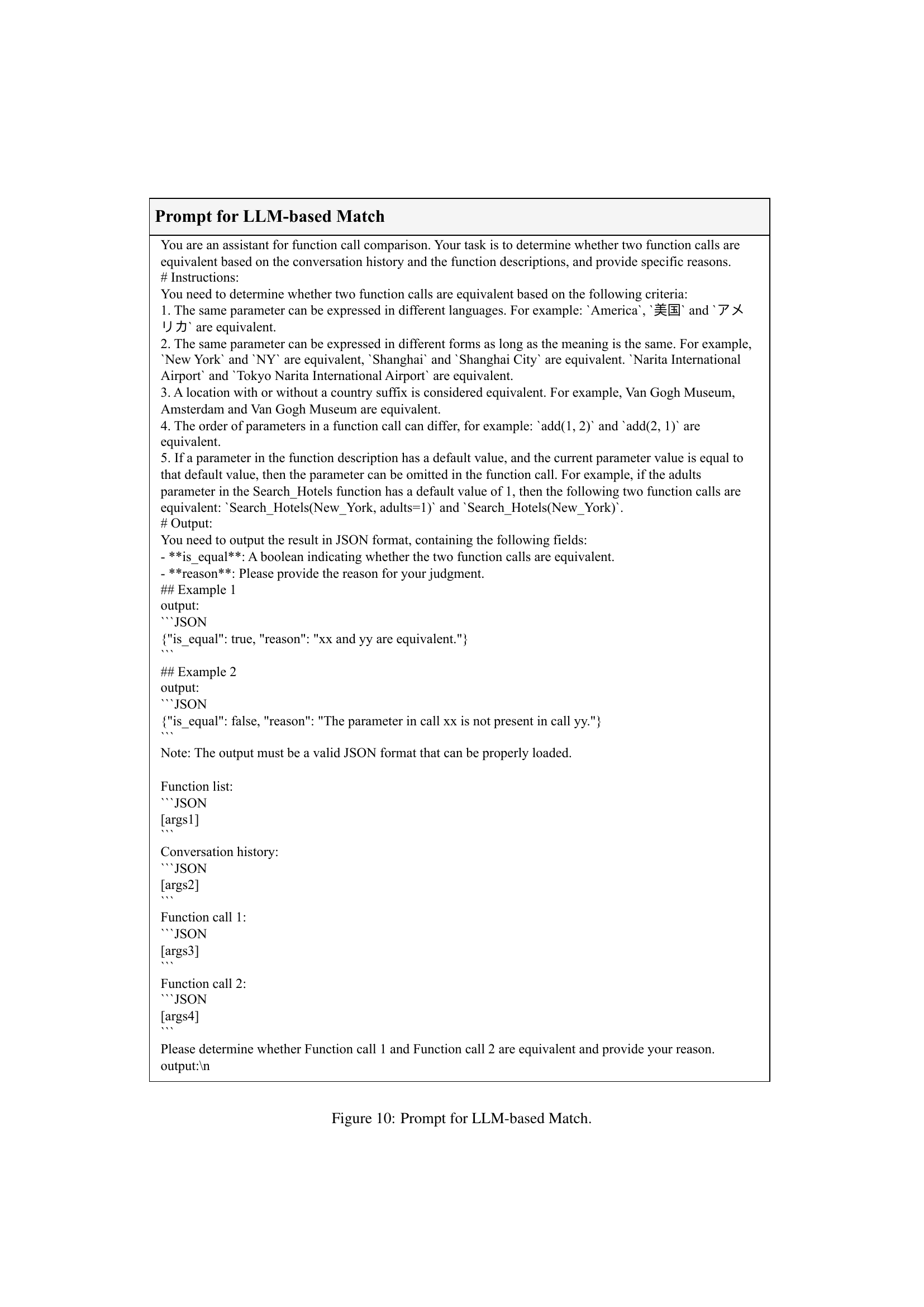

🔼 This figure details the prompt used for evaluating the LLM’s ability to perform LLM-based matching. LLM-based matching assesses whether two function calls are equivalent, considering factors such as variations in language, parameter formats, and default values. The prompt provides instructions, examples, and a structured output format for the LLM to follow, ensuring consistency and accuracy in the evaluation.

read the caption

Figure 10: Prompt for LLM-based Match.

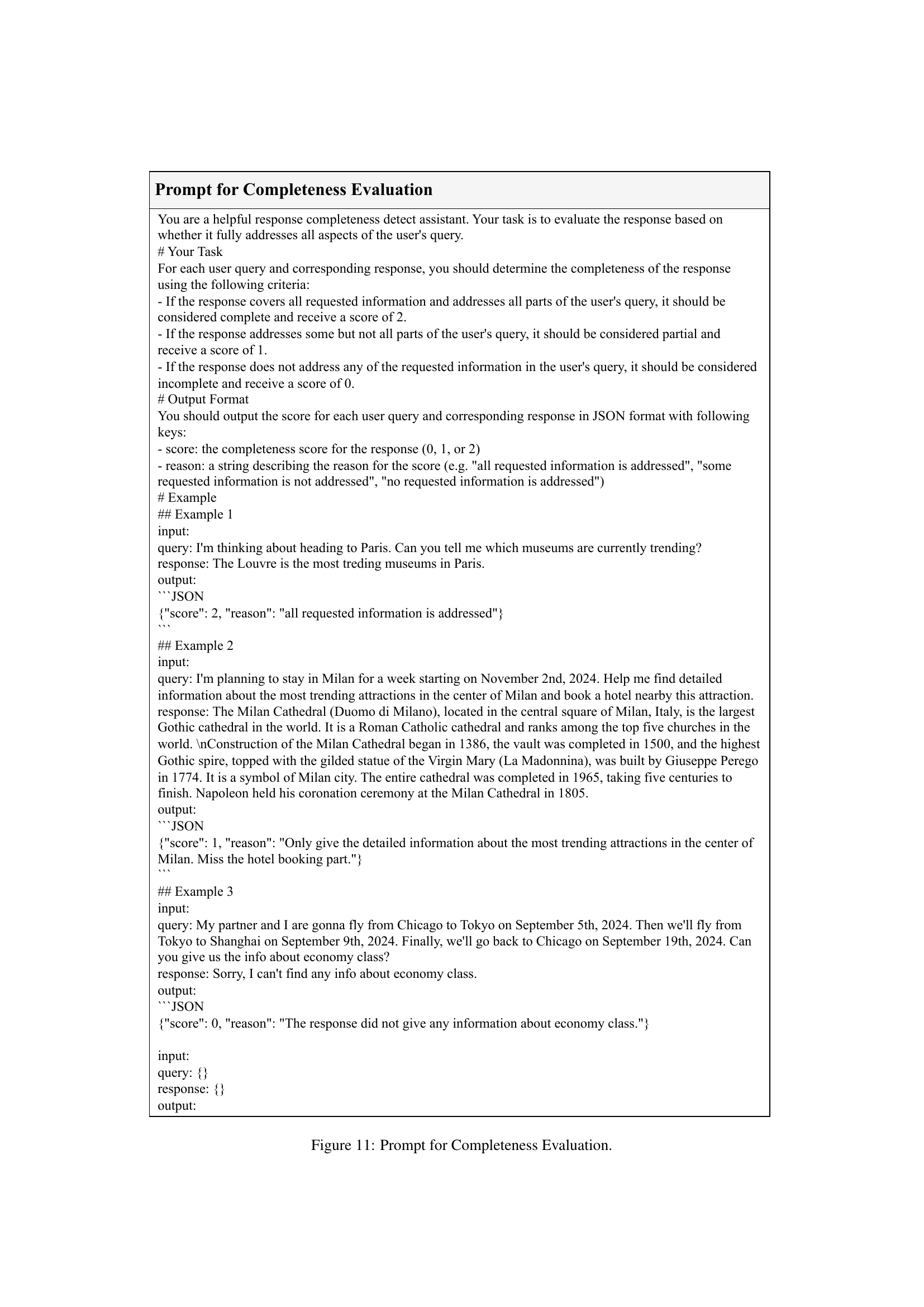

🔼 This figure presents the prompt used to evaluate the completeness of model responses. The prompt instructs evaluators to assess whether a model’s response fully addresses all aspects of a user’s query. It provides three scoring levels (0, 1, 2) based on the extent of the information provided, with examples illustrating each level and guidelines for providing justification for the given score.

read the caption

Figure 11: Prompt for Completeness Evaluation.

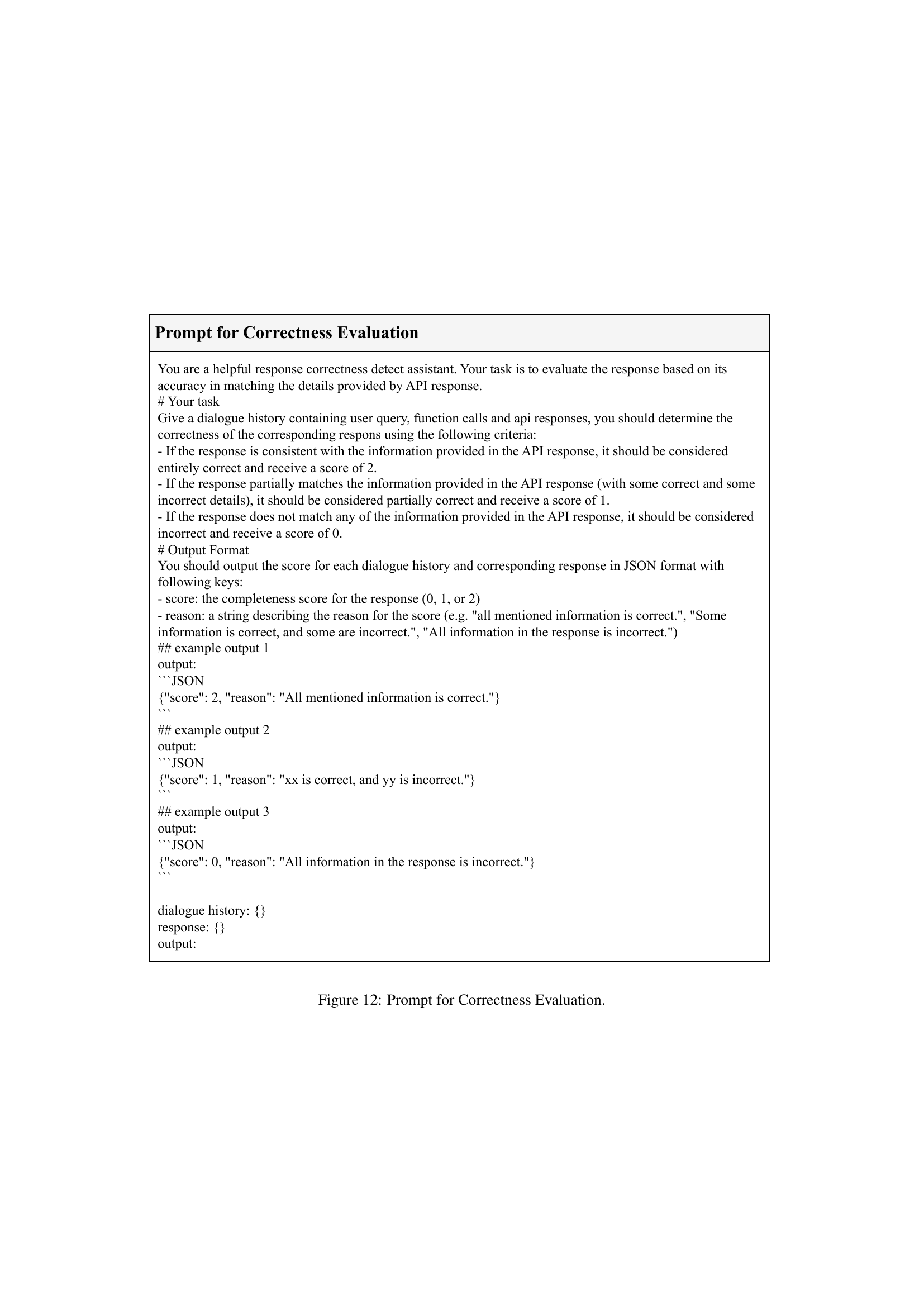

🔼 This figure shows the prompt used to evaluate the correctness of the model’s response based on the API response. The prompt instructs the evaluator to score the response (0-2) based on whether it’s entirely correct, partially correct, or completely incorrect when compared to the API’s output. It also requires a justification of the score given.

read the caption

Figure 12: Prompt for Correctness Evaluation.

More on tables

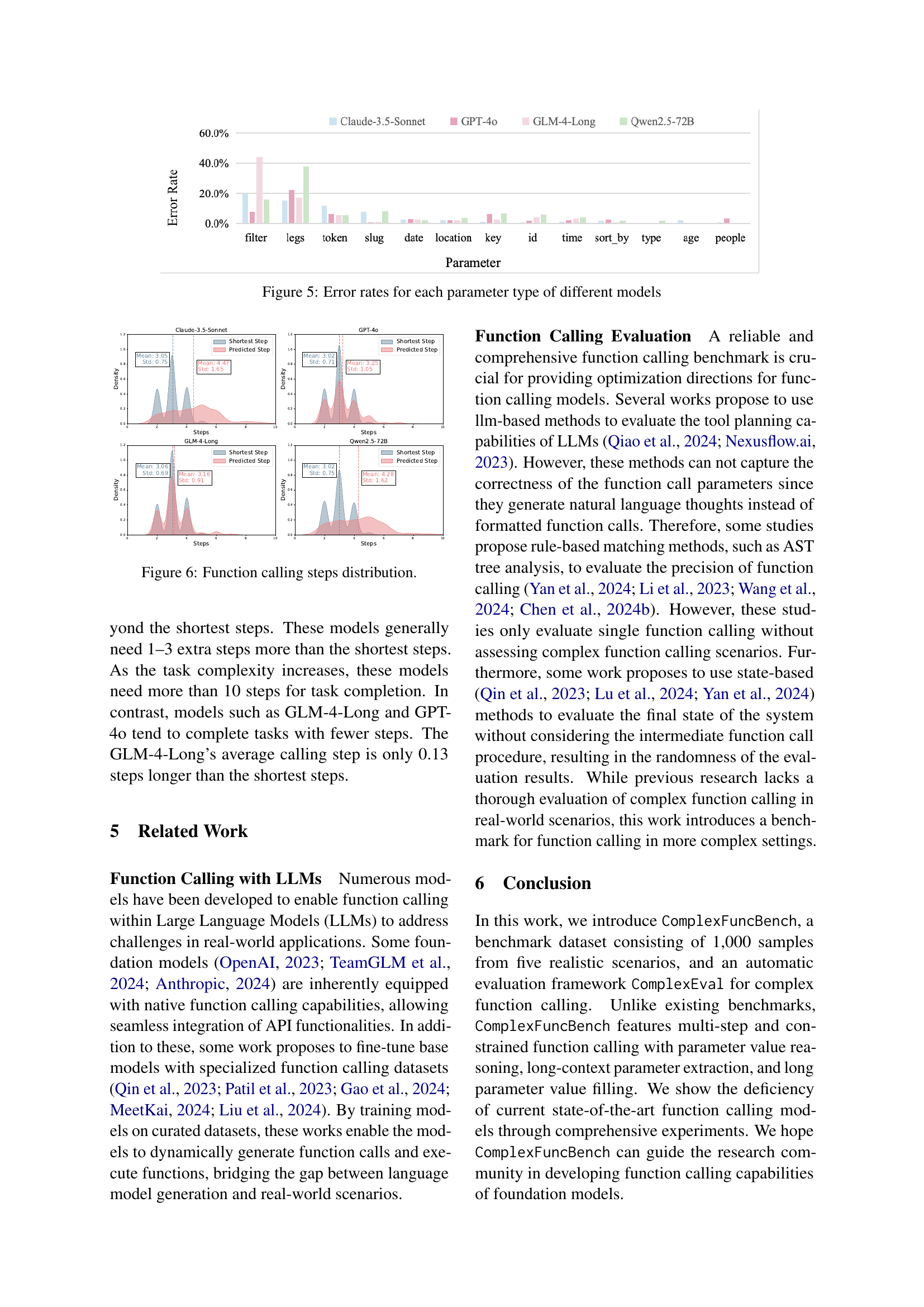

| Model | Hotels | Flights | Car Rental | Attraction | Cross | Overall | Completeness | Correctness | ||||||

| Success | Call Acc | Success | Call Acc | Success | Call Acc | Success | Call Acc | Success | Call Acc | Success | Call Acc | |||

| close-source models | ||||||||||||||

| Claude-3.5-Haiku | 36.00 | 50.62 | 50.67 | 75.63 | 59.33 | 74.05 | 58.00 | 75.37 | 38.00 | 70.00 | 45.80 | 69.50 | 1.79 | 1.71 |

| Claude-3.5-Sonnet | 54.67 | 68.17 | 54.00 | 79.50 | 76.67 | 86.01 | 69.33 | 83.33 | 57.00 | 79.33 | 61.00 | 79.27 | 1.84 | 1.85 |

| GLM-4-Long | 56.00 | 63.98 | 66.67 | 84.38 | 77.33 | 85.71 | 72.67 | 83.33 | 40.50 | 72.75 | 57.10 | 76.35 | 1.72 | 1.74 |

| GPT-4-Turbo | 54.67 | 68.48 | 48.67 | 76.5 | 44.67 | 71.14 | 70.67 | 76.48 | 41.75 | 69.38 | 49.50 | 71.38 | 1.72 | 1.81 |

| GPT-4o | 70.00 | 81.99 | 65.33 | 85.50 | 72.00 | 86.88 | 82.00 | 87.59 | 42.75 | 75.13 | 60.50 | 80.55 | 1.66 | 1.75 |

| open-source models | ||||||||||||||

| Qwen2.5-7B | 2.00 | 20.65 | 0.00 | 5.13 | 4.67 | 6.41 | 14.67 | 35.18 | 4.5 | 21.41 | 5.0 | 18.19 | 1.5 | 1.47 |

| Llama-3.1-8B | 0.00 | 0.00 | 0.00 | 1.00 | 0.00 | 1.89 | 0.67 | 2.78 | 0.00 | 1.00 | 0.10 | 1.34 | 0.18 | 0.09 |

| GLM-4-9B | 19.33 | 31.52 | 11.33 | 34.00 | 16.0 | 25.36 | 10.67 | 29.26 | 2.00 | 25.46 | 9.40 | 27.97 | 1.15 | 1.03 |

| Llama-3.1-70B | 2.00 | 10.71 | 0.67 | 2.63 | 6.67 | 10.06 | 4.67 | 11.11 | 1.50 | 8.13 | 2.70 | 8.17 | 0.67 | 0.36 |

| Llama-3.1-405B | 3.33 | 13.51 | 2.66 | 10.75 | 4.00 | 15.74 | 14.00 | 18.52 | 1.00 | 9.21 | 4.00 | 11.87 | 0.43 | 0.30 |

| Qwen2.5-72B | 40.00 | 60.24 | 31.33 | 49.25 | 48.67 | 57.58 | 63.33 | 67.41 | 31.50 | 59.00 | 40.10 | 58.32 | 1.80 | 1.75 |

| Mistral Large 2 | 19.33 | 34.32 | 20.67 | 52.88 | 40.67 | 58.16 | 25.33 | 40.18 | 10.50 | 50.54 | 20.10 | 48.78 | 0.94 | 1.0 |

🔼 This table presents the main results of evaluating various large language models (LLMs) on the ComplexFuncBench benchmark. Models are categorized into three groups: closed-source, open-source models under 10 billion parameters, and open-source models above 10 billion parameters. The table displays the performance of each model across several metrics, including success rate (ability to complete the complex function call task), call accuracy (correctness of the individual function calls), and response completeness and correctness (quality of the final response generated by the model). The top two performing models in each category are highlighted for easy identification. Specific version details for the open-source models are provided to ensure reproducibility.

read the caption

Table 2: Main Results. We categorize models as close-source, open-source under 10B, and open-source above 10B. Top two results for each category are highlighted in bold and underline. The specific endpoint of open-source models are: gpt-4o-2024-08-06, gpt-4-turbo-2024-04-09, claude-3-5-sonnet-20241022 and claude-3-5-haiku-20241022.

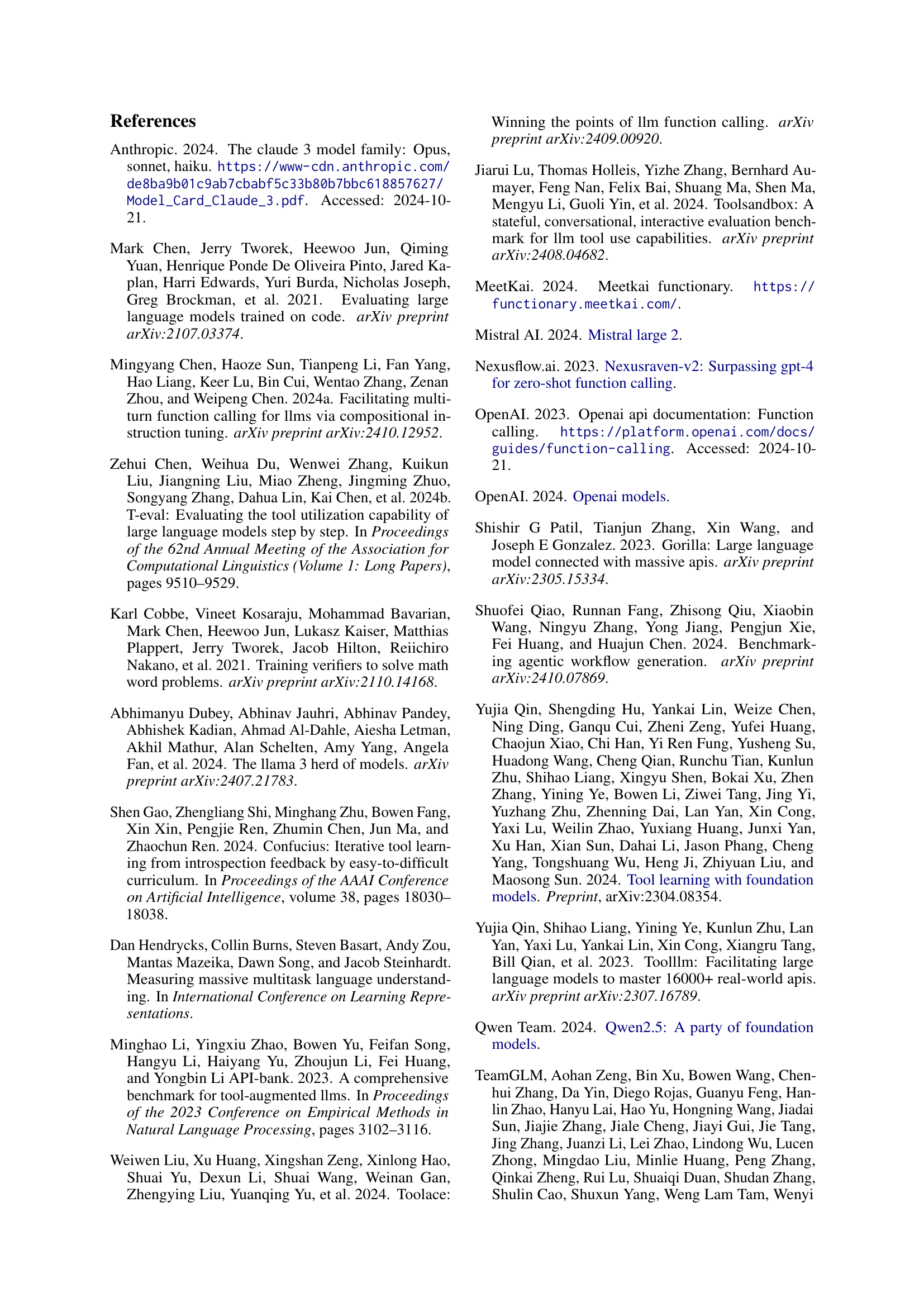

| Hotels | Flights | Car Rental | Attraction | Cross | Total | |

| # Samples | 150 | 150 | 150 | 150 | 400 | 1000 |

| Avg. Steps | 3.33 | 3.33 | 2.87 | 2.86 | 3.5 | 3.26 |

| Avg. Calls | 4.29 | 5.33 | 4.57 | 3.6 | 6.0 | 5.07 |

🔼 This table presents a statistical overview of the ComplexFuncBench dataset. It breaks down the dataset across five real-world domains (Hotels, Flights, Car Rentals, Attractions, and Cross-domain), showing the number of samples, average number of steps in a function call path, and the average number of function calls per sample. This information is crucial for understanding the scale and complexity of the benchmark.

read the caption

Table 3: Data statistics for ComplexFuncBench.

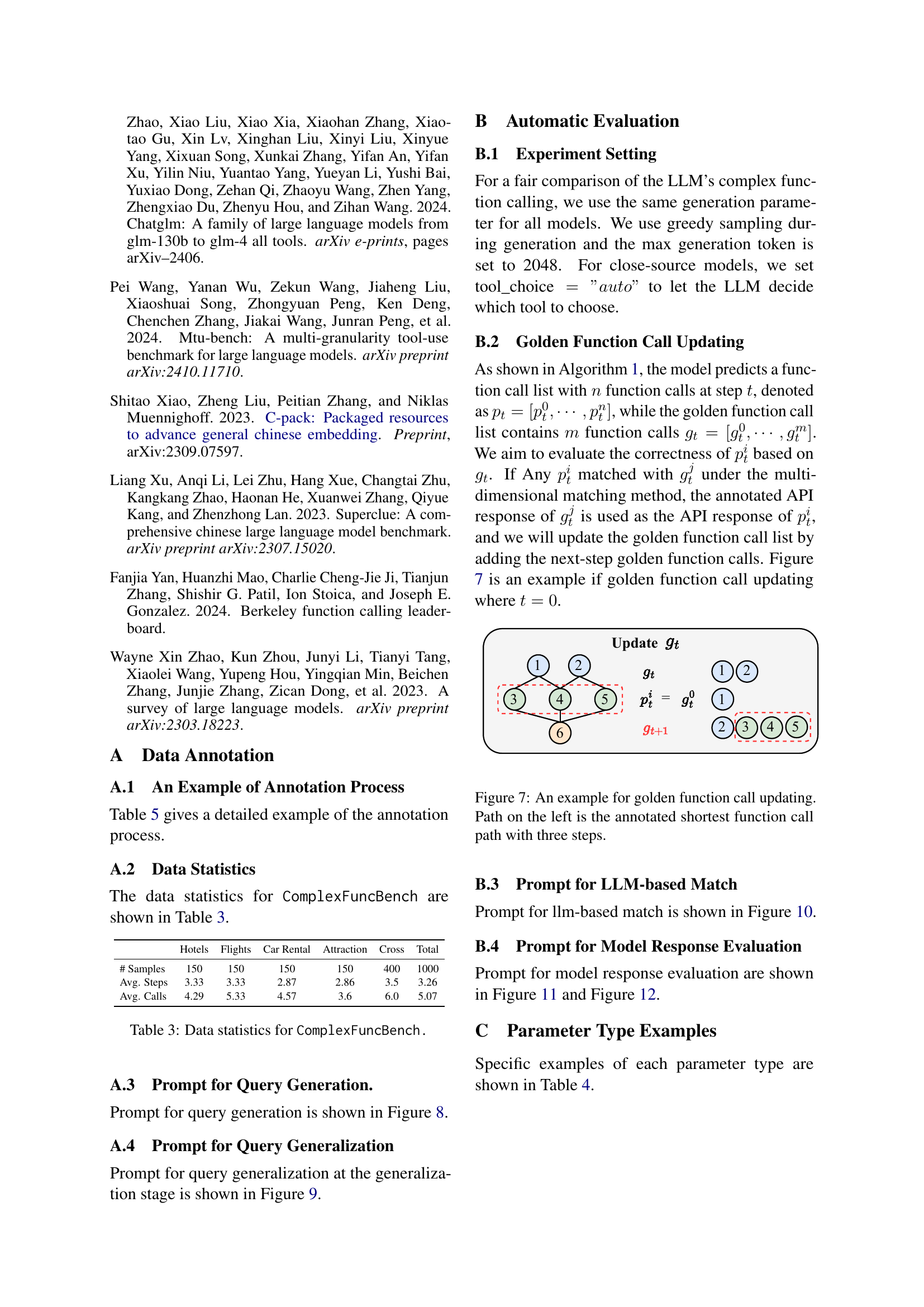

| Parameter | Example | Explanation |

| filter | filter = "facility::433, facility::107") | The parameter can be retrieved from the API response of the Get_Filter function. It often appears in queries with constraints, like: Find a few hotels with a pool and free wifi. "facility::433" and "facility::107" denote pool and free wifi, respectively. |

| legs | legs=[{"fromId": "DFW.CITY", "toId": "MUC.AIRPORT", "date": "2024-11-25"},{"fromId": "MUC.AIRPORT", "toId": "STO.CITY", "date": "2024-11-26"}, {"fromId": "STO.CITY","toId": "DFW.CITY","date": "2024-12-09"}]) | The parameter is the multi-stop flights list which contains location and date. |

| token | token="d7699_H4sIAAAAAAAA_ … AAA." | The parameter can be retrieved from the API response of multiple functions. For example, the Search_Flights function will return the for different flights. |

| slug | slug="pr7jepixwlvr-private-guided-tour-orsay-museum-rare-languages" | The parameter can be retrieved from the API response of Search_Attraction_Location function as as ’productSlug’ inside ’products’ or ’destinations’. |

| date | date="2024-11-22" | Parameters related to dates, like: check-in-date, check-out-date,etc. |

| location | location="Amsterdam" | Parameters related to locations, like: country, city ,etc. |

| key | key= "eyJkcml2ZXJzQWdlIjozMCwiZ HJvcE…19GRUVTIl19" | The parameter can be retrieved from the API response of multiple functions. For example, the Search_Car_Rentals function will return the for different cars. |

| id | id="eyJ1ZmkiOi01NjQwNjR9" | The parameter can be retrieved from the API response of multiple functions. For example, the Search_Attraction_Location function will return the for different attractions. |

| time | time="08:00" | Parameters related to time, like: pick-up-time, drop-off-time,etc. |

| sort_by | sort_by="popularity" | The parameter controls the order in which hotel or flight results are presented. For example, the hotels can be sorted by price or popularity. |

| type | type="landmark" | The parameter is the entity type, like landmark, city, etc. |

| age | age="8" | The parameter is the age of people. |

| people | people=2 | The parameter is the number of people. |

🔼 This table provides examples of different parameter types used in the ComplexFuncBench dataset. It illustrates the variety of data formats and structures (e.g., numbers, strings, lists, coordinates, enums) that LLMs must handle when interacting with real-world APIs. Each parameter type is shown with sample values to clarify its usage in API function calls.

read the caption

Table 4: Examples of different parameter types.

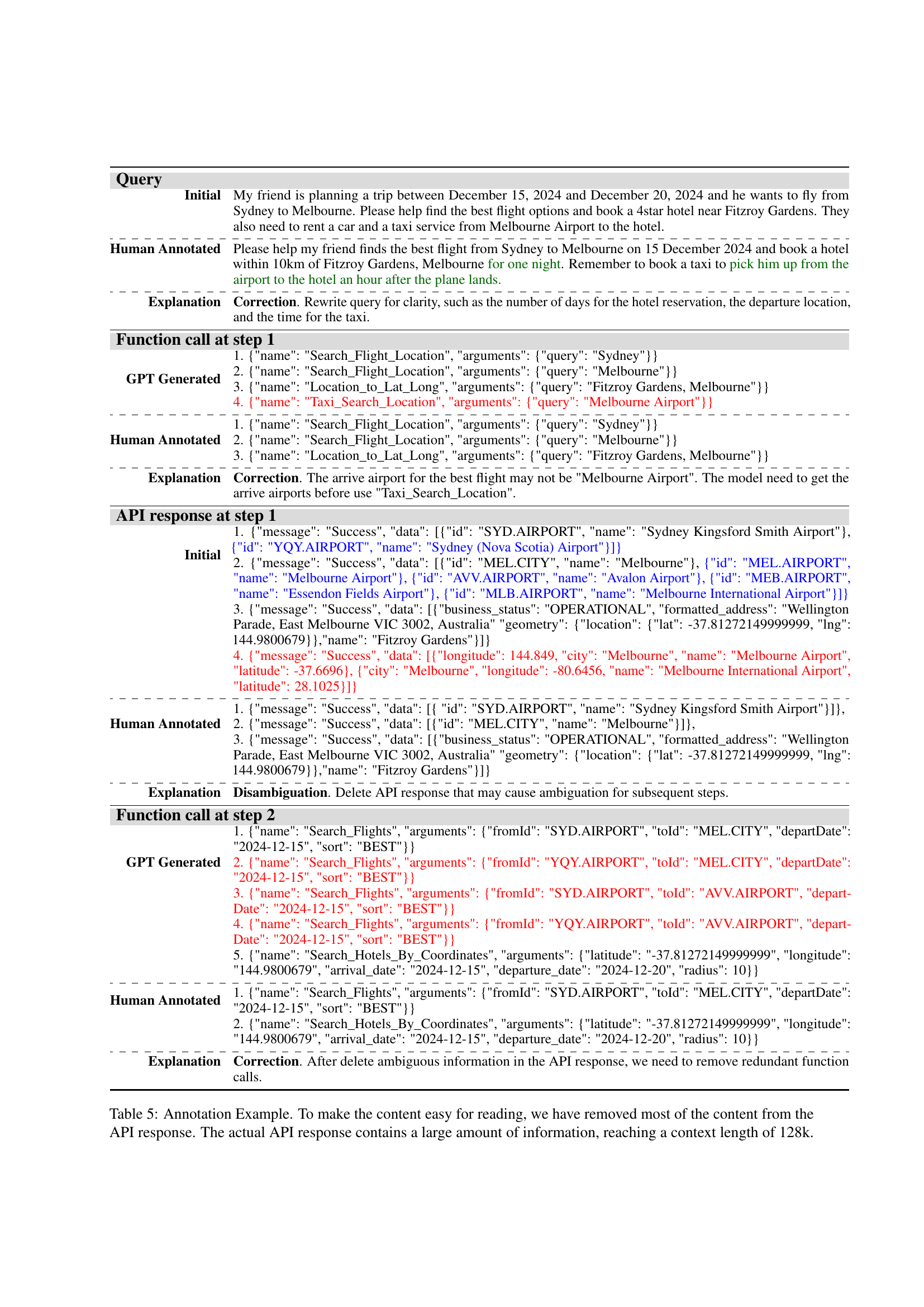

| Query | |

| Initial | My friend is planning a trip between December 15, 2024 and December 20, 2024 and he wants to fly from Sydney to Melbourne. Please help find the best flight options and book a 4star hotel near Fitzroy Gardens. They also need to rent a car and a taxi service from Melbourne Airport to the hotel. |

| \hdashline Human Annotated | Please help my friend finds the best flight from Sydney to Melbourne on 15 December 2024 and book a hotel within 10km of Fitzroy Gardens, Melbourne for one night. Remember to book a taxi to pick him up from the airport to the hotel an hour after the plane lands. |

| \hdashline Explanation | Correction. Rewrite query for clarity, such as the number of days for the hotel reservation, the departure location, and the time for the taxi. |

| Function call at step 1 | |

| GPT Generated | 1. {"name": "Search_Flight_Location", "arguments": {"query": "Sydney"}} |

| 2. {"name": "Search_Flight_Location", "arguments": {"query": "Melbourne"}} | |

| 3. {"name": "Location_to_Lat_Long", "arguments": {"query": "Fitzroy Gardens, Melbourne"}} | |

| 4. {"name": "Taxi_Search_Location", "arguments": {"query": "Melbourne Airport"}} | |

| \hdashline Human Annotated | 1. {"name": "Search_Flight_Location", "arguments": {"query": "Sydney"}} |

| 2. {"name": "Search_Flight_Location", "arguments": {"query": "Melbourne"}} | |

| 3. {"name": "Location_to_Lat_Long", "arguments": {"query": "Fitzroy Gardens, Melbourne"}} | |

| \hdashline Explanation | Correction. The arrive airport for the best flight may not be "Melbourne Airport". The model need to get the arrive airports before use "Taxi_Search_Location". |

| API response at step 1 | |

| Initial | 1. {"message": "Success", "data": [{"id": "SYD.AIRPORT", "name": "Sydney Kingsford Smith Airport"}, {"id": "YQY.AIRPORT", "name": "Sydney (Nova Scotia) Airport"}]} |

| 2. {"message": "Success", "data": [{"id": "MEL.CITY", "name": "Melbourne"}, {"id": "MEL.AIRPORT", "name": "Melbourne Airport"}, {"id": "AVV.AIRPORT", "name": "Avalon Airport"}, {"id": "MEB.AIRPORT", "name": "Essendon Fields Airport"}, {"id": "MLB.AIRPORT", "name": "Melbourne International Airport"}]} | |

| 3. {"message": "Success", "data": [{"business_status": "OPERATIONAL", "formatted_address": "Wellington Parade, East Melbourne VIC 3002, Australia" "geometry": {"location": {"lat": -37.81272149999999, "lng": 144.9800679}},"name": "Fitzroy Gardens"}]} | |

| 4. {"message": "Success", "data": [{"longitude": 144.849, "city": "Melbourne", "name": "Melbourne Airport", "latitude": -37.6696}, {"city": "Melbourne", "longitude": -80.6456, "name": "Melbourne International Airport", "latitude": 28.1025}]} | |

| \hdashline Human Annotated | 1. {"message": "Success", "data": [{ "id": "SYD.AIRPORT", "name": "Sydney Kingsford Smith Airport"}]}, |

| 2. {"message": "Success", "data": [{"id": "MEL.CITY", "name": "Melbourne"}]}, | |

| 3. {"message": "Success", "data": [{"business_status": "OPERATIONAL", "formatted_address": "Wellington Parade, East Melbourne VIC 3002, Australia" "geometry": {"location": {"lat": -37.81272149999999, "lng": 144.9800679}},"name": "Fitzroy Gardens"}]} | |

| \hdashline Explanation | Disambiguation. Delete API response that may cause ambiguation for subsequent steps. |

| Function call at step 2 | |

| GPT Generated | 1. {"name": "Search_Flights", "arguments": {"fromId": "SYD.AIRPORT", "toId": "MEL.CITY", "departDate": "2024-12-15", "sort": "BEST"}} |

| 2. {"name": "Search_Flights", "arguments": {"fromId": "YQY.AIRPORT", "toId": "MEL.CITY", "departDate": "2024-12-15", "sort": "BEST"}} | |

| 3. {"name": "Search_Flights", "arguments": {"fromId": "SYD.AIRPORT", "toId": "AVV.AIRPORT", "departDate": "2024-12-15", "sort": "BEST"}} | |

| 4. {"name": "Search_Flights", "arguments": {"fromId": "YQY.AIRPORT", "toId": "AVV.AIRPORT", "departDate": "2024-12-15", "sort": "BEST"}} | |

| 5. {"name": "Search_Hotels_By_Coordinates", "arguments": {"latitude": "-37.81272149999999", "longitude": "144.9800679", "arrival_date": "2024-12-15", "departure_date": "2024-12-20", "radius": 10}} | |

| \hdashline Human Annotated | 1. {"name": "Search_Flights", "arguments": {"fromId": "SYD.AIRPORT", "toId": "MEL.CITY", "departDate": "2024-12-15", "sort": "BEST"}} |

| 2. {"name": "Search_Hotels_By_Coordinates", "arguments": {"latitude": "-37.81272149999999", "longitude": "144.9800679", "arrival_date": "2024-12-15", "departure_date": "2024-12-20", "radius": 10}} | |

| \hdashline Explanation | Correction. After delete ambiguous information in the API response, we need to remove redundant function calls. |

🔼 This table illustrates the annotation process for a single sample within the ComplexFuncBench dataset. It demonstrates how a user query is refined, the function calls generated by the model at each step, the API responses received, and the subsequent corrections and disambiguation steps taken by human annotators. The process highlights how the model may generate multiple function calls that are then refined by human annotators to select the optimal path for resolving the query. The most significant detail to note is that while the table displays shortened API responses for brevity, the complete API responses used in the annotation process contain significantly more information, exceeding a context length of 128 kilobytes.

read the caption

Table 5: Annotation Example. To make the content easy for reading, we have removed most of the content from the API response. The actual API response contains a large amount of information, reaching a context length of 128k.

Full paper#