TL;DR#

Current Large Language Model (LLM) agents often struggle in real-world applications due to their inability to recover from errors. Collecting enough step-level critique data for training is expensive and difficult. Existing methods relying on behavior cloning from perfect trajectories do not teach the agents how to recover from errors. This limits their ability to handle complex, interactive tasks.

Agent-R tackles this by using Monte Carlo Tree Search (MCTS) to automatically construct self-critique datasets. Instead of solely relying on the final outcome, Agent-R identifies and revises errors early in the trajectory using a model-guided critique mechanism. This iterative self-training process continuously improves the model’s error correction and data generation capabilities. Experiments show that Agent-R significantly outperforms baseline methods across various interactive environments, demonstrating its effectiveness in equipping agents with self-reflection and self-correction abilities.

Key Takeaways#

Why does it matter?#

This paper is important because it addresses a critical limitation in current LLM agents: the inability to effectively recover from errors in interactive environments. It introduces a novel self-training framework that allows agents to learn from mistakes by generating and revising their own trajectories. This method is highly efficient and could significantly improve the robustness and adaptability of future LLM agents in real-world applications, which is a highly relevant area of current research. It opens avenues for further exploration of timely error correction, scalable self-improvement paradigms, and the role of self-reflection in improving agent decision-making.

Visual Insights#

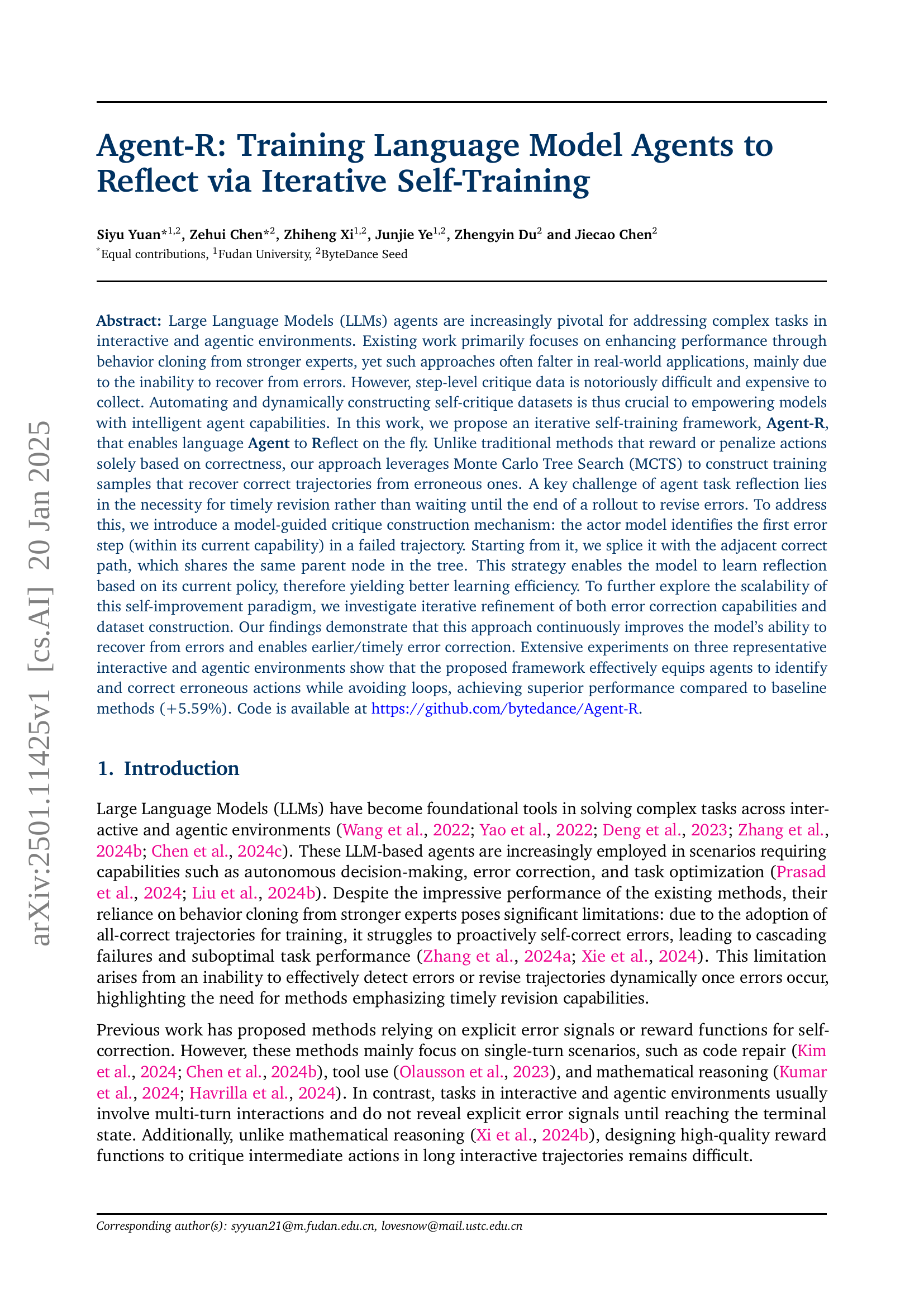

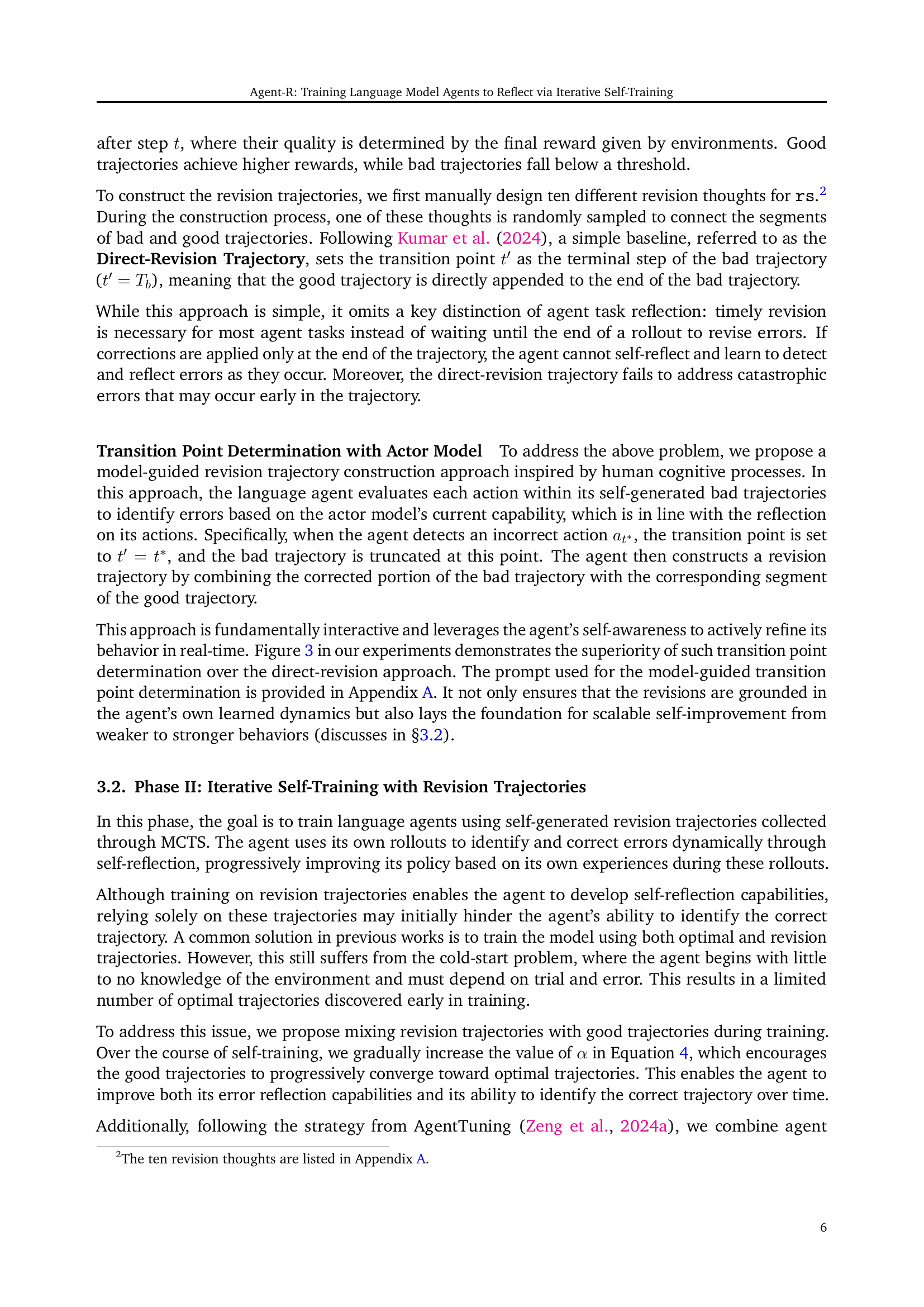

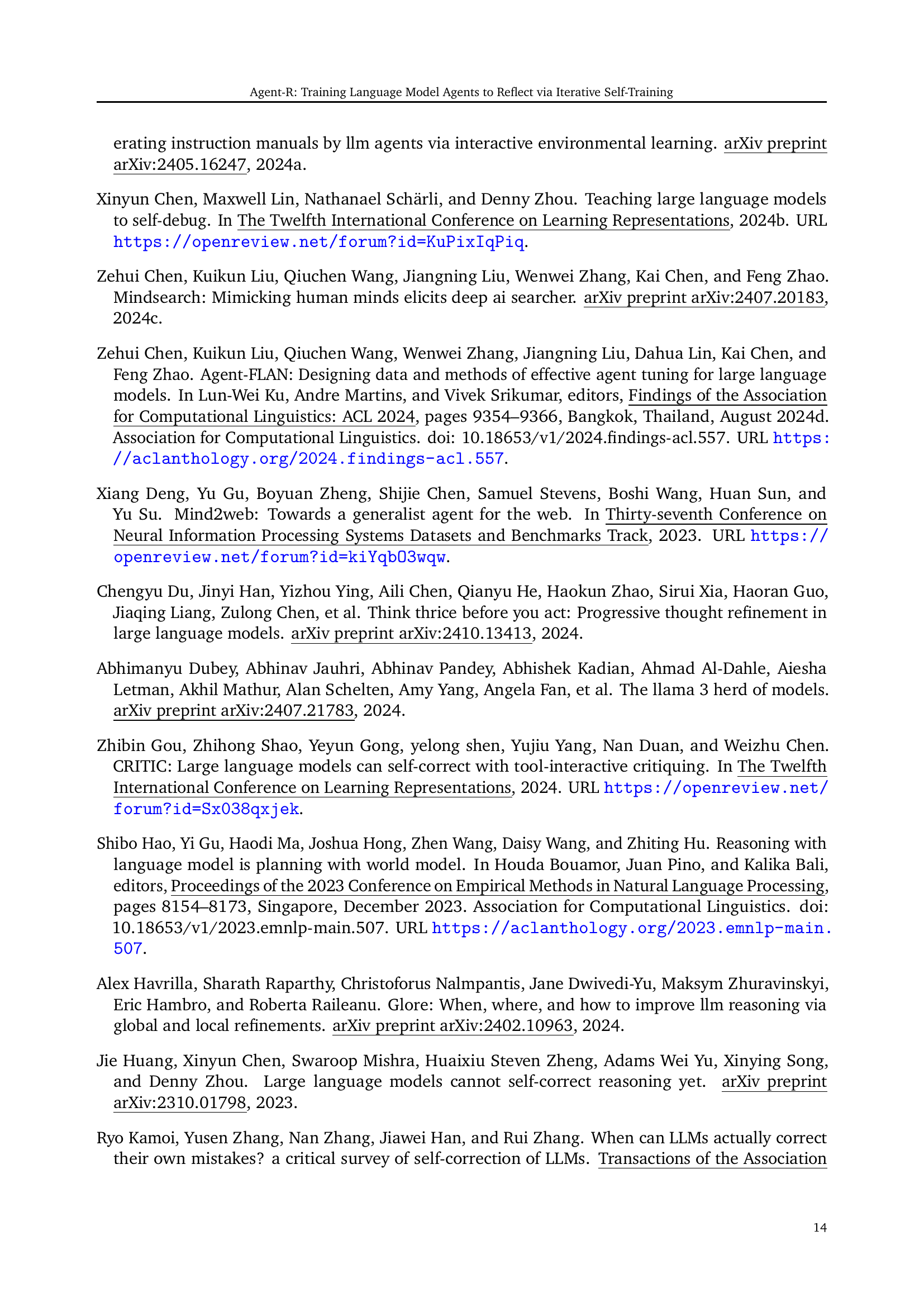

🔼 The figure illustrates the challenges language models face when correcting errors during multi-step task completion. The left panel shows an example where the agent takes a wrong path (‘Open door to hallway’), realizing its mistake later, but finding it difficult to recover. The right panel showcases a scenario where the agent gets stuck in a loop (‘Open door to kitchen’), repeatedly performing an ineffective action. Agent-R, the proposed method, is designed to overcome these issues. It allows agents to detect and correct errors in real-time, improving the ability to complete long and complex tasks without getting stuck in unproductive loops.

read the caption

Figure 1: Illustration of language agents struggling with error correction in trajectory generation. These errors can cause agents to enter loops, hindering recovery in long trajectories and resulting in suboptimal outcomes. Agent-R enables agents to effectively detect and address errors in real-time, handling long-horizon tasks and avoiding loops with greater self-reflection capabilities.

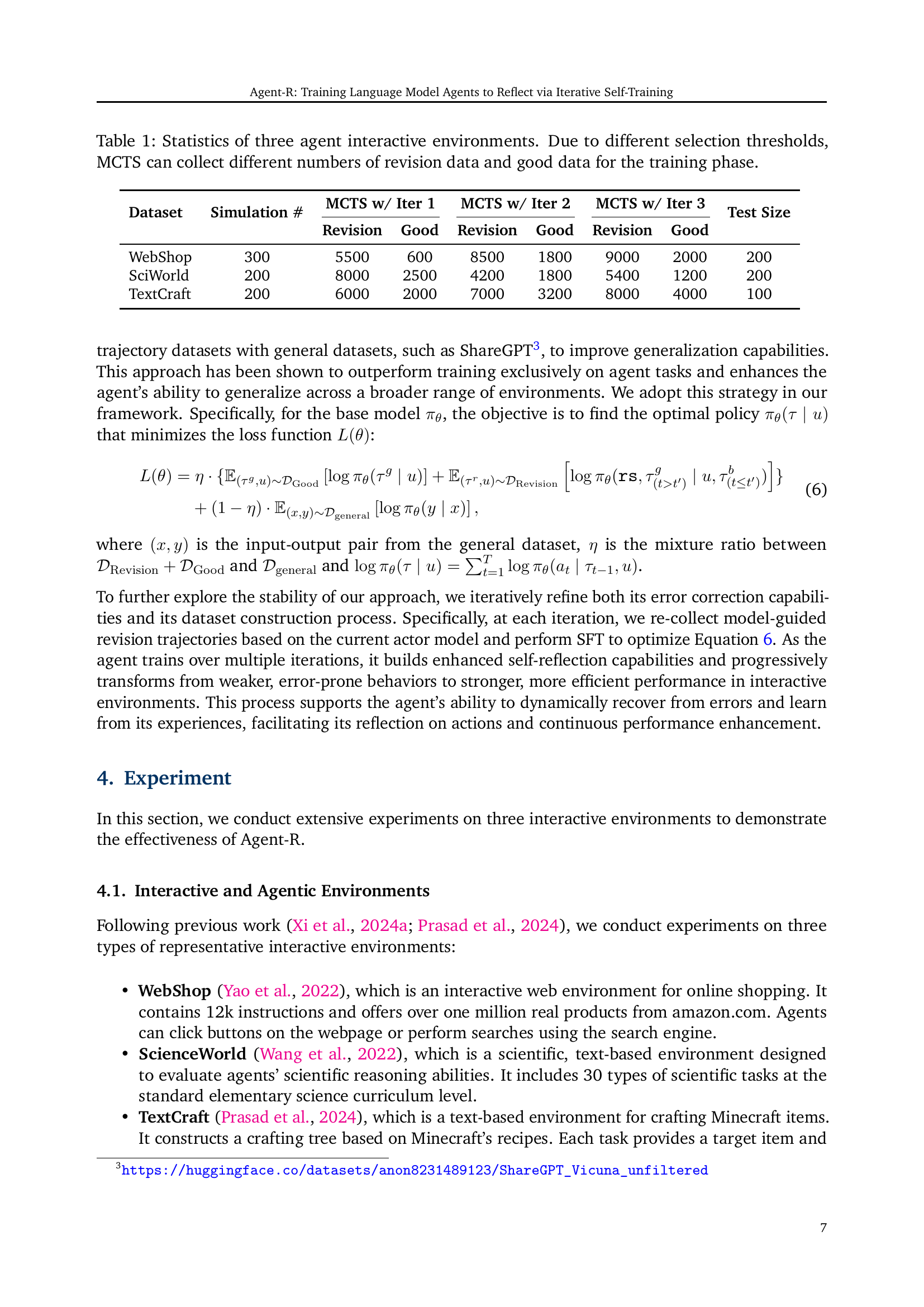

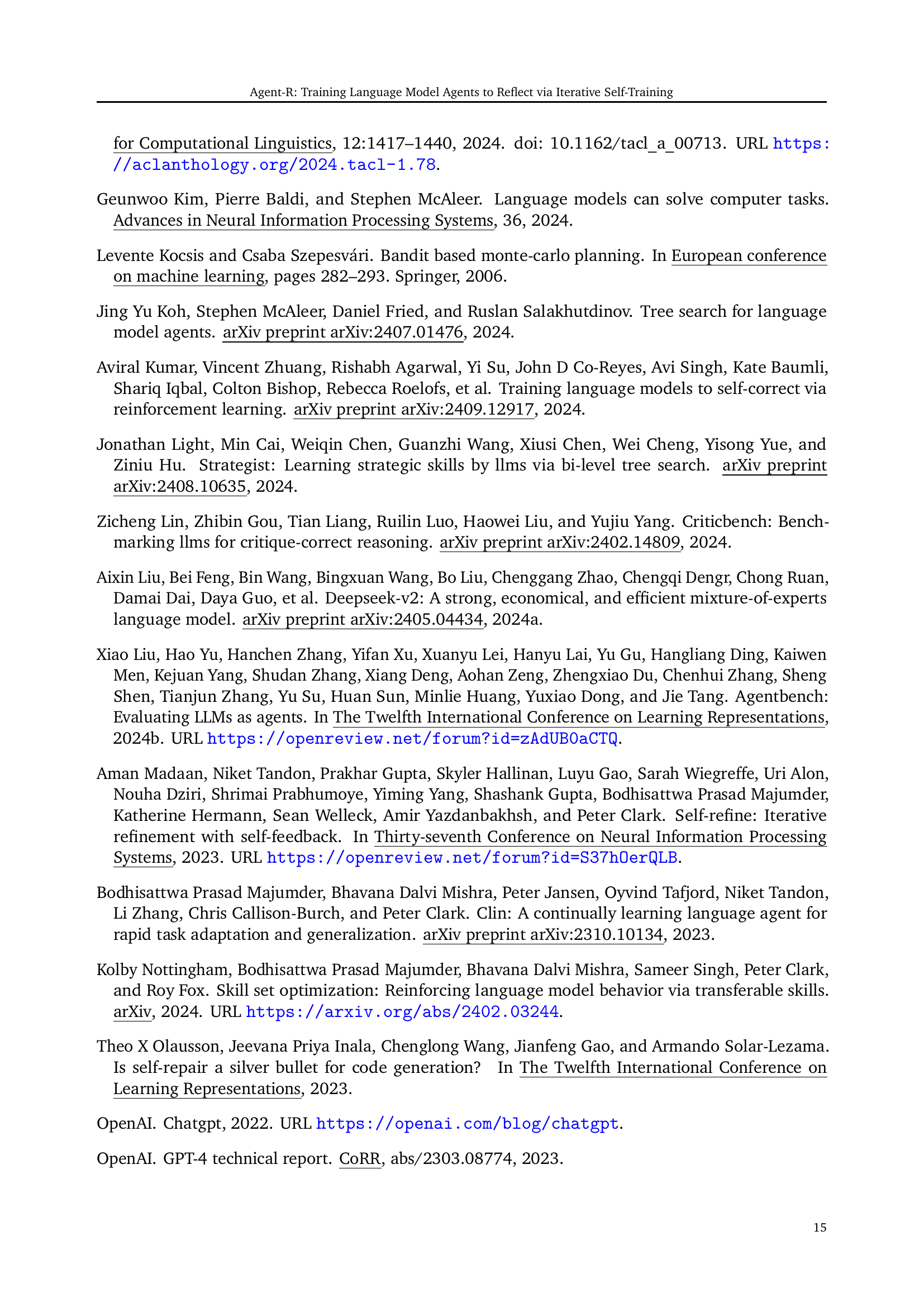

| Dataset | Simulation # | MCTS w/ Iter 1 | MCTS w/ Iter 2 | MCTS w/ Iter 3 | Test Size | |||

| Revision | Good | Revision | Good | Revision | Good | |||

| WebShop | 300 | 5500 | 600 | 8500 | 1800 | 9000 | 2000 | 200 |

| SciWorld | 200 | 8000 | 2500 | 4200 | 1800 | 5400 | 1200 | 200 |

| TextCraft | 200 | 6000 | 2000 | 7000 | 3200 | 8000 | 4000 | 100 |

🔼 This table presents a statistical overview of three interactive agent environments used in the study: WebShop, SciWorld, and TextCraft. It details the number of simulations run in each environment, the quantity of revision trajectories (incorrect attempts corrected by the model) and good trajectories (successful attempts) collected using Monte Carlo Tree Search (MCTS) across three iterative training phases. The variation in data collection across iterations is due to different selection thresholds employed for identifying good and bad trajectories, illustrating the dynamic data generation aspect of the Agent-R framework. The test size indicates the number of trajectories used for evaluating model performance after each training phase.

read the caption

Table 1: Statistics of three agent interactive environments. Due to different selection thresholds, MCTS can collect different numbers of revision data and good data for the training phase.

In-depth insights#

Agent-R Framework#

The Agent-R framework is an iterative self-training method designed to enhance the self-correction capabilities of Language Model (LLM) agents within interactive environments. It addresses the critical challenge of timely error correction, unlike traditional methods that only revise errors at the trajectory’s end. Agent-R cleverly leverages Monte Carlo Tree Search (MCTS) to generate revision trajectories by identifying the first erroneous step and splicing it with a correct path. This model-guided critique construction mechanism improves learning efficiency. Furthermore, Agent-R iteratively refines both error correction capabilities and dataset construction, showcasing scalability and continuous improvement. Its dynamic approach allows for timely error detection and correction, enabling agents to handle complex, long-horizon tasks effectively while avoiding loops. The framework’s effectiveness is demonstrated through extensive experiments across diverse interactive environments, consistently surpassing baseline methods.

MCTS-based Reflection#

Employing Monte Carlo Tree Search (MCTS) for reflection in language model agents presents a powerful approach to self-correction. MCTS’s inherent ability to explore various action sequences allows the agent to not only identify erroneous actions, but also to generate alternative trajectories leading to successful task completion. This is a significant improvement over methods that only penalize or reward based on final outcomes, as MCTS enables on-the-fly corrections, preventing cascading errors. The process of constructing reflection datasets is streamlined, eliminating the need for expensive, manual annotation. However, the computational cost of MCTS needs to be considered, particularly in complex environments with large action spaces. Further research should focus on optimizing MCTS for efficiency while maintaining its exploration capabilities, and also exploring techniques that efficiently handle partial observability inherent in many real-world interactive tasks.

Iterative Self-Training#

Iterative self-training, as a training paradigm, presents a powerful mechanism for enhancing the capabilities of language models, particularly in interactive and agentic environments. The core concept revolves around the iterative refinement of both error correction capabilities and the dataset itself. Unlike traditional methods relying solely on expert-demonstrated perfect trajectories, iterative self-training allows the model to learn from its mistakes. This is achieved by generating and incorporating ‘revision trajectories’ which correct errors within initially faulty trajectories. The process leverages techniques such as Monte Carlo Tree Search (MCTS) to efficiently explore the trajectory space and identify points for correction. This iterative approach fosters continuous improvement, enabling earlier and more timely error correction. The model proactively self-corrects errors, avoiding cascading failures, and ultimately achieving superior performance compared to baselines. A key advantage is the automation of data generation, reducing the reliance on expensive and time-consuming human annotation. The iterative aspect further allows the model to learn from progressively harder revision tasks, enhancing its adaptability and robustness in complex scenarios.

Error Correction#

The concept of error correction is central to the paper, addressing the limitations of existing language models in handling errors during complex, interactive tasks. Current methods often fail due to a lack of real-world error recovery mechanisms. The core of the proposed approach revolves around an iterative self-training framework that enables on-the-fly reflection and correction. This approach leverages Monte Carlo Tree Search (MCTS) to dynamically generate training samples, enabling the model to learn how to identify and correct errors efficiently and timely, improving its overall performance and reducing cascading failures. The model-guided critique construction mechanism is crucial, pinpointing the first error and correcting it immediately. This contrasts with traditional methods that wait until the end of a trajectory before making corrections. This timely correction also helps avoid issues of the agent getting stuck in loops due to earlier errors. The iterative refinement of both error correction capabilities and the dataset construction further enhances the model’s ability to continuously improve its self-correction abilities. The experimental results demonstrate substantial performance gains compared to the baseline methods, validating the effectiveness of the timely, self-reflective error correction process.

Future Work#

Future research directions stemming from this work could explore several promising avenues. Improving the efficiency and scalability of the model-guided critique construction mechanism is crucial, potentially through more sophisticated error detection methods or reinforcement learning techniques. Investigating alternative search algorithms beyond Monte Carlo Tree Search (MCTS) to generate revision trajectories is warranted; exploring options that better balance exploration and exploitation could significantly improve performance and efficiency. Expanding the scope of the framework to a wider range of interactive environments and tasks is also necessary; rigorous testing across diverse domains is needed to demonstrate the framework’s robustness and generalizability. The integration of external knowledge bases or tools could enhance the agent’s capacity for error detection and correction. Finally, a deeper investigation into the theoretical underpinnings of timely self-correction and the interplay between self-reflection and reinforcement learning would provide valuable insights and support further advancements in building truly intelligent and adaptive language model agents.

More visual insights#

More on figures

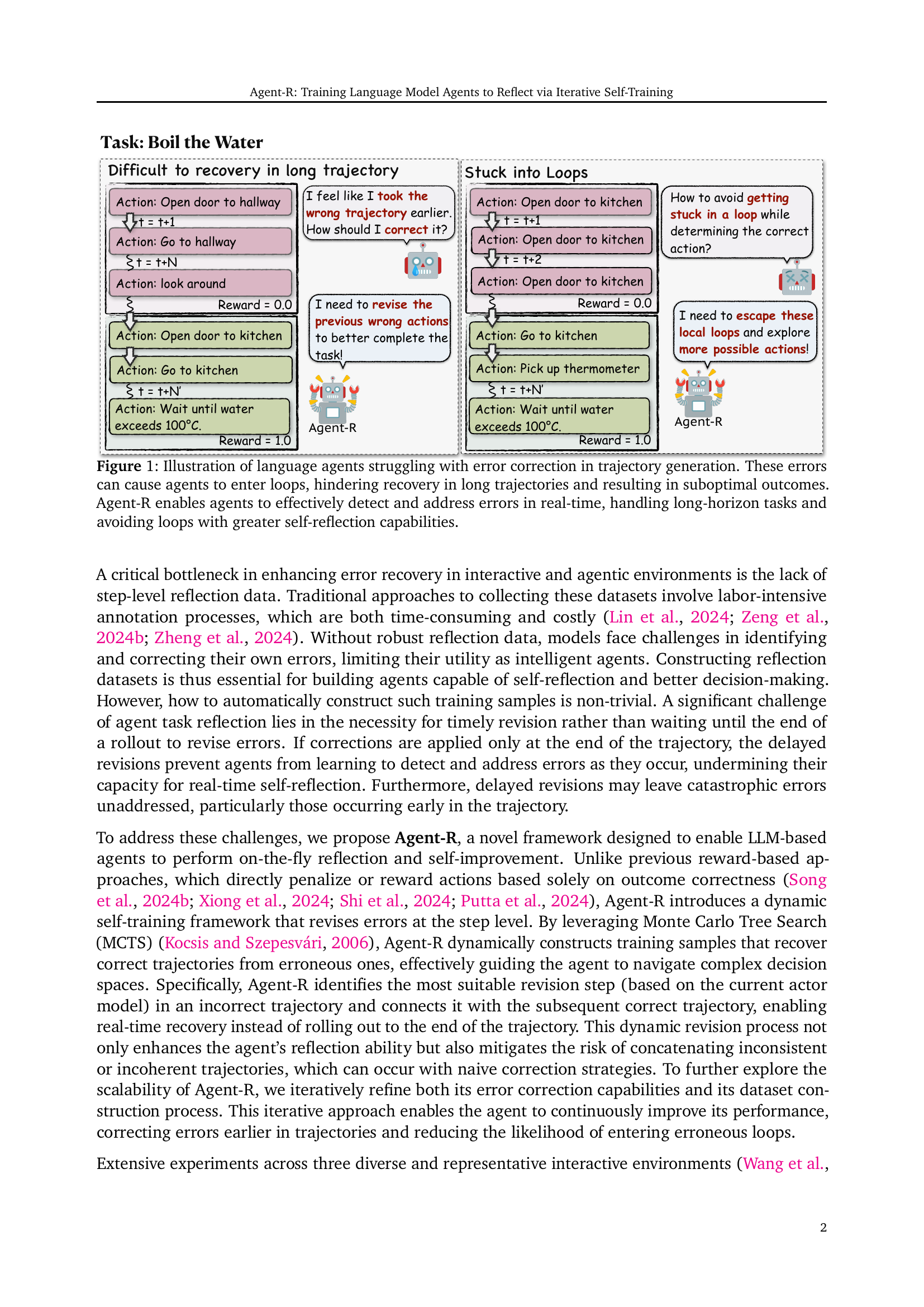

🔼 Agent-R’s framework consists of two iterative phases. Phase 1 uses Monte Carlo Tree Search (MCTS) and a model-guided reflection mechanism to identify and correct errors in agent trajectories, creating ‘revision trajectories’. These corrected trajectories are then used in Phase 2 to fine-tune the agent’s model using a loss function (L(θ)) that balances learning from both the revised and originally correct trajectories. This two-phase process repeats iteratively for continuous improvement. Key elements are the revision signal (rs), which marks the point of correction within the trajectory, and the transition point (t’), which is where the corrected segment of the trajectory begins.

read the caption

Figure 2: The framework of Agent-R consists of two phases. In Phase I, we adopt MCTS and a model-guided reflection mechanism to construct revision trajectories. In Phase II, the agents are trained using the collected revision trajectories. These two phases can be repeated iteratively. rs is the revision signal, t′superscript𝑡′t^{\prime}italic_t start_POSTSUPERSCRIPT ′ end_POSTSUPERSCRIPT is the transition point between the bad and good trajectories, and L(θ)𝐿𝜃L(\theta)italic_L ( italic_θ ) is the loss function to be optimized.

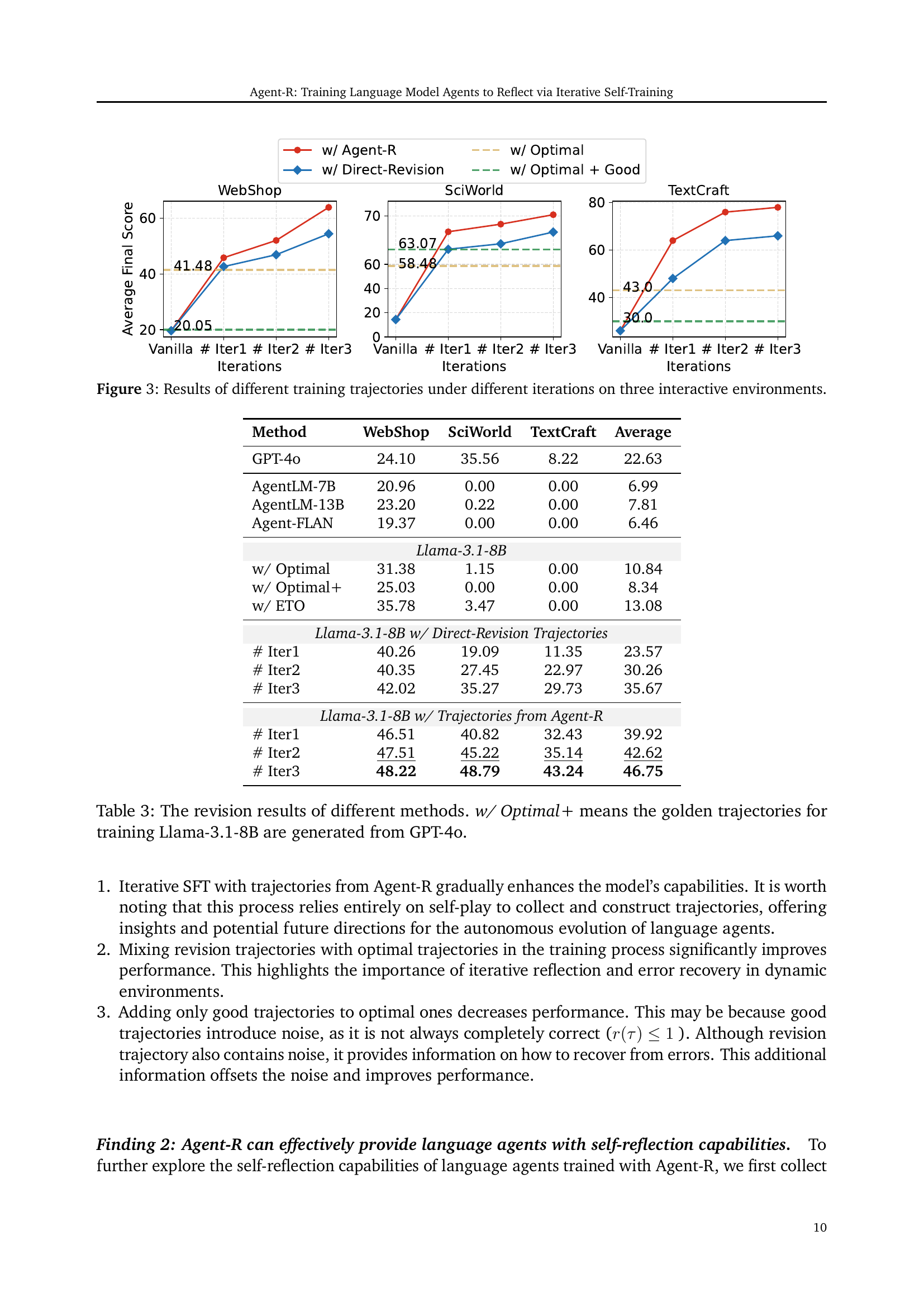

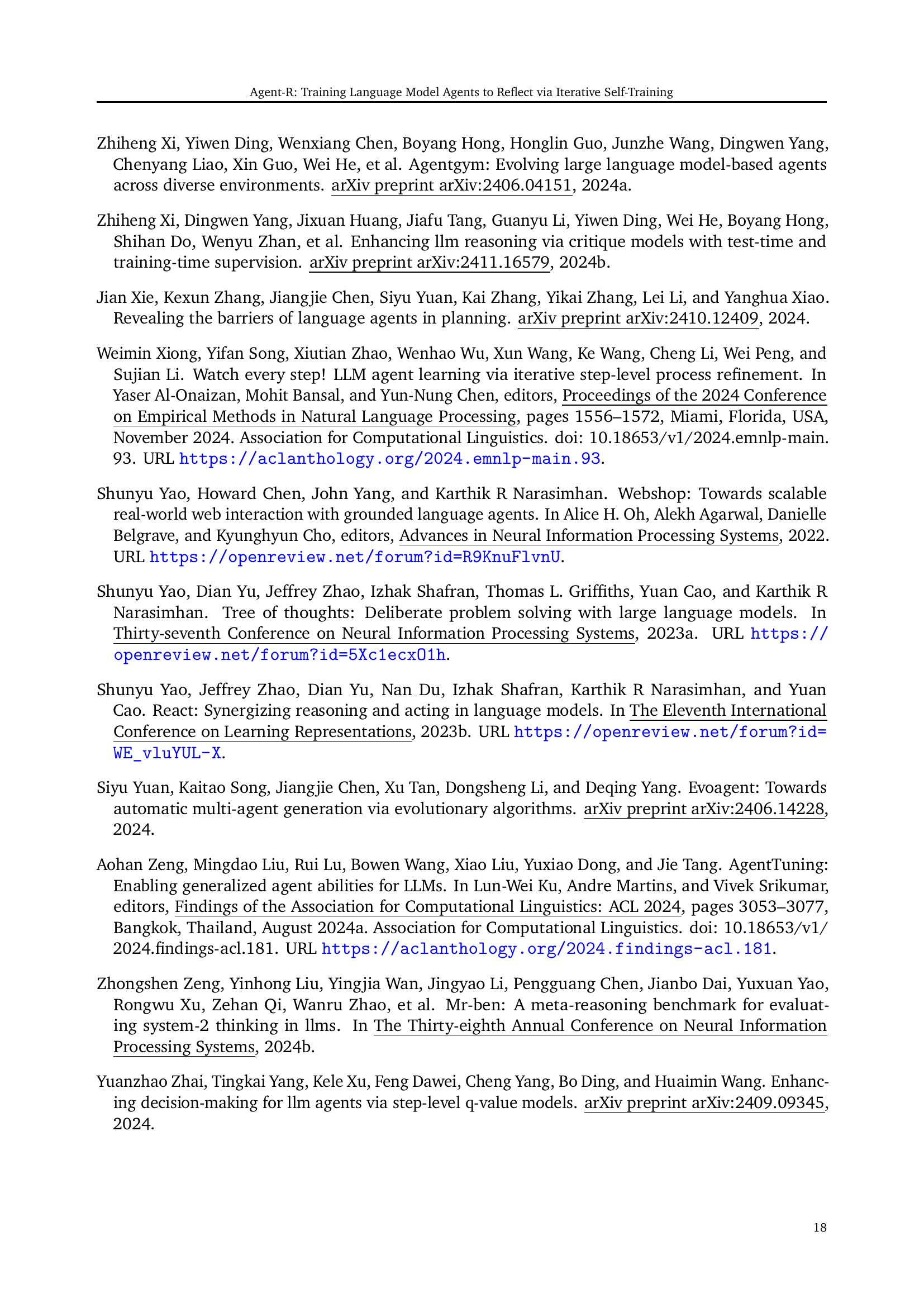

🔼 This figure displays the average final scores achieved by Llama-3.1-8B language model agents trained on different trajectory types across three iterations in three interactive environments (WebShop, SciWorld, TextCraft). The training trajectories include those generated by Agent-R (the proposed method), optimal trajectories only, and trajectories using a direct revision strategy. The graph visually compares the performance gains obtained through each training method across the three environments over the course of three iterative training phases, showcasing the effectiveness of Agent-R in improving agent performance compared to baselines.

read the caption

Figure 3: Results of different training trajectories under different iterations on three interactive environments.

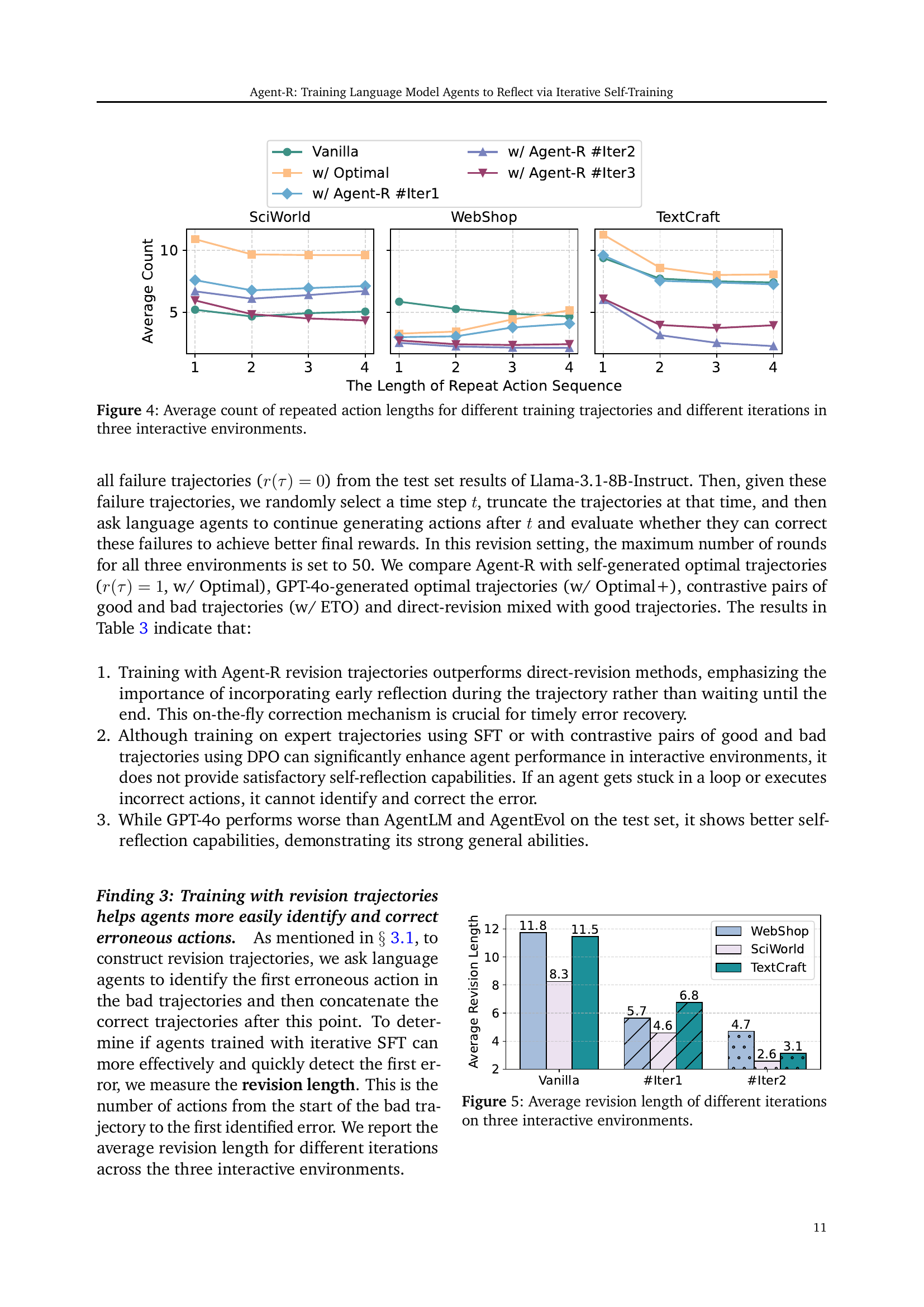

🔼 This figure displays the average number of times an agent repeats the same sequence of actions in the WebShop, SciWorld, and TextCraft environments. It compares the performance of agents trained with different methods: a vanilla model, a model trained with optimal trajectories, and a model trained with Agent-R’s trajectories (at various iterations). The x-axis represents the length of repeated action sequences, and the y-axis shows the average count of those sequences. This visualization helps to illustrate how Agent-R training reduces repetitive actions, a sign of the agent getting stuck in unproductive loops or failing to make progress towards the goal.

read the caption

Figure 4: Average count of repeated action lengths for different training trajectories and different iterations in three interactive environments.

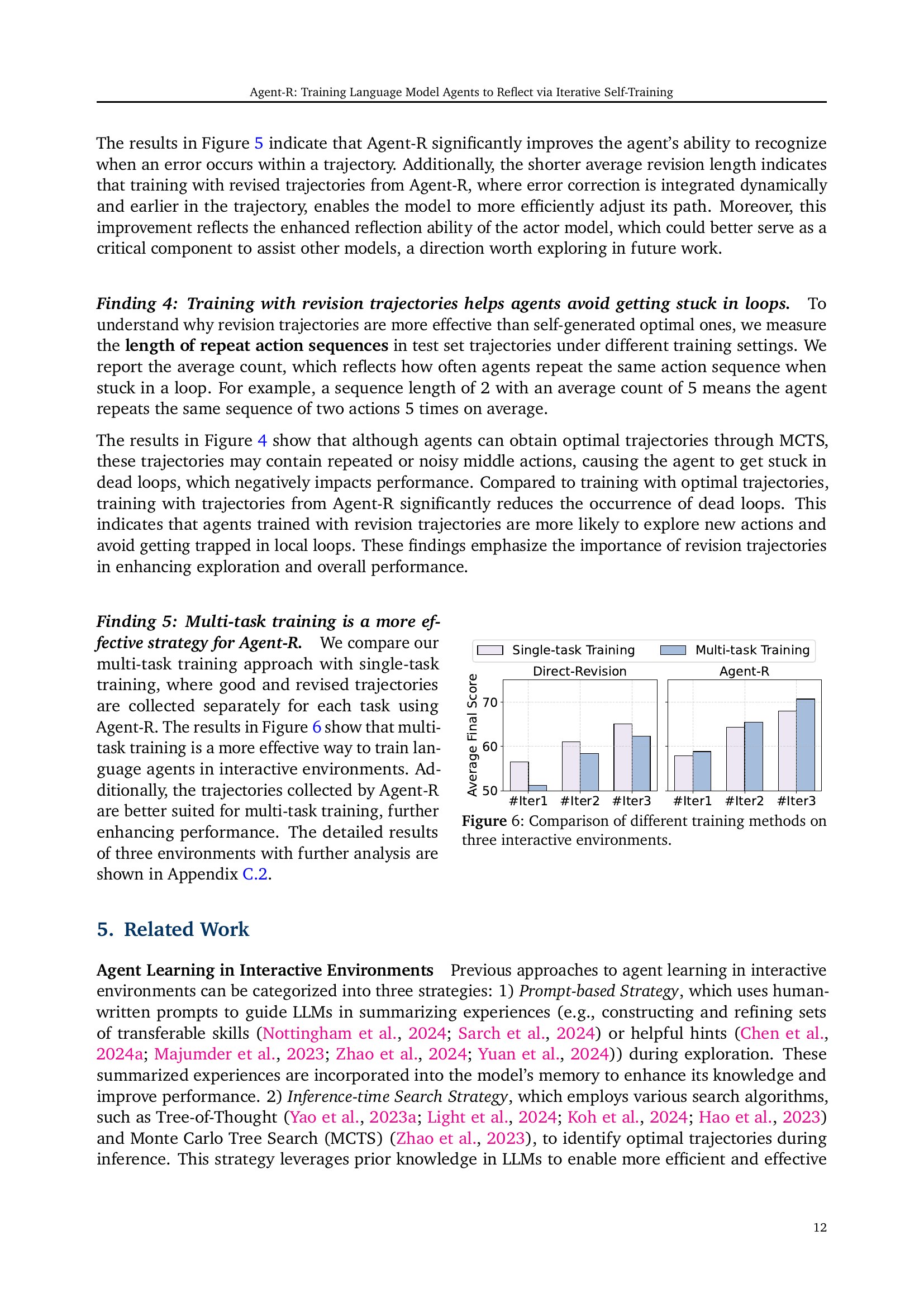

🔼 This figure shows the average number of steps taken by the language agent from the start of a bad trajectory to the detection of the first error, across three iterations of the Agent-R training process. The three interactive environments used are WebShop, SciWorld, and TextCraft. Shorter average lengths indicate quicker error detection and correction by the agent, showcasing the effectiveness of Agent-R’s iterative self-training in improving real-time self-reflection and error-recovery capabilities.

read the caption

Figure 5: Average revision length of different iterations on three interactive environments.

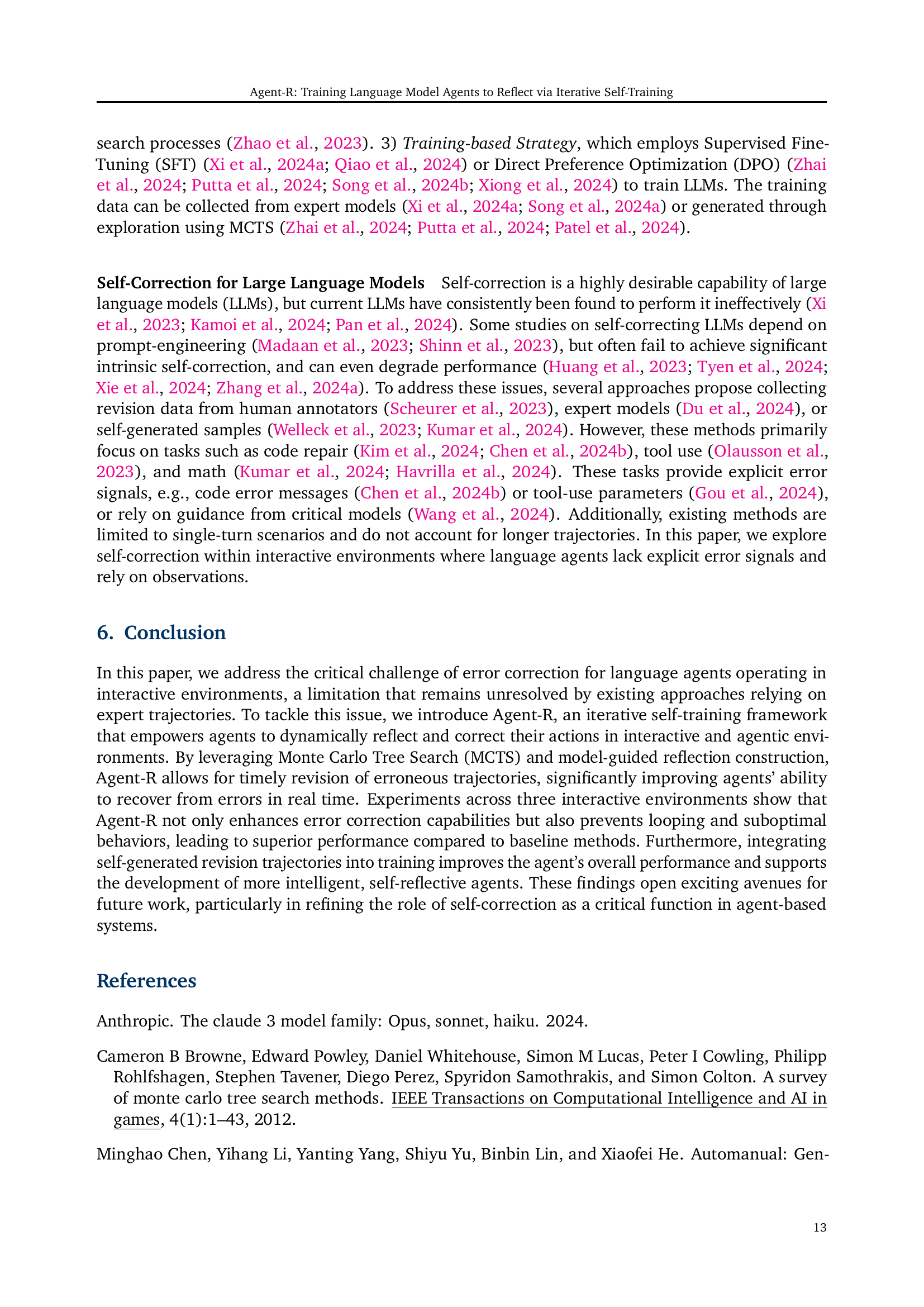

🔼 The figure displays a comparison of the average final scores achieved by different training methods across three interactive environments (WebShop, SciWorld, and TextCraft). The methods compared include single-task training with Agent-R, multi-task training with Agent-R, single-task training using a direct revision approach, and multi-task training using a direct revision approach. The graph visually represents the performance of each method across three iterations, illustrating how the average final score changes over multiple training iterations. This demonstrates the impact of the different training methods and whether multi-task learning offers better scalability and performance.

read the caption

Figure 6: Comparison of different training methods on three interactive environments.

More on tables

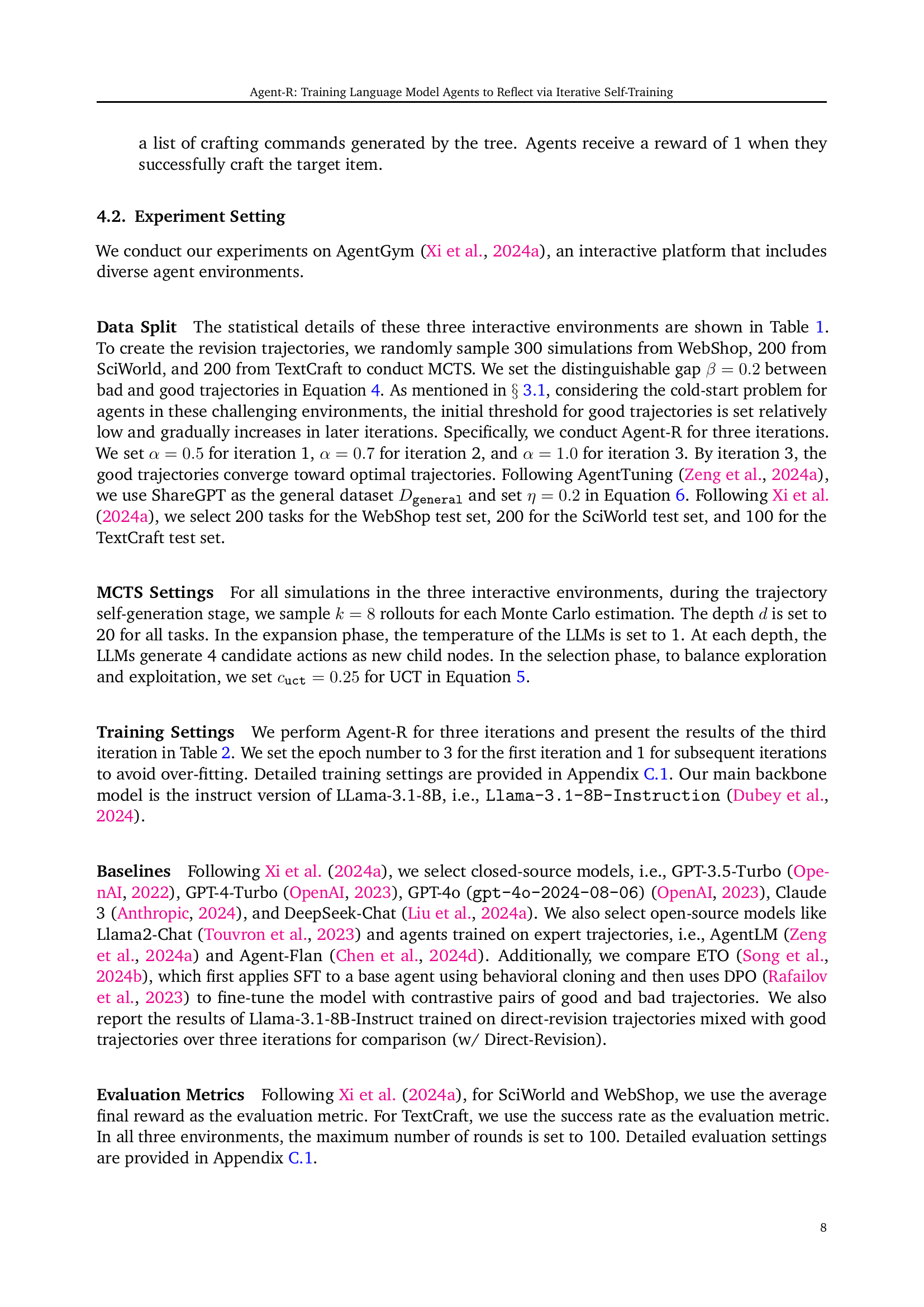

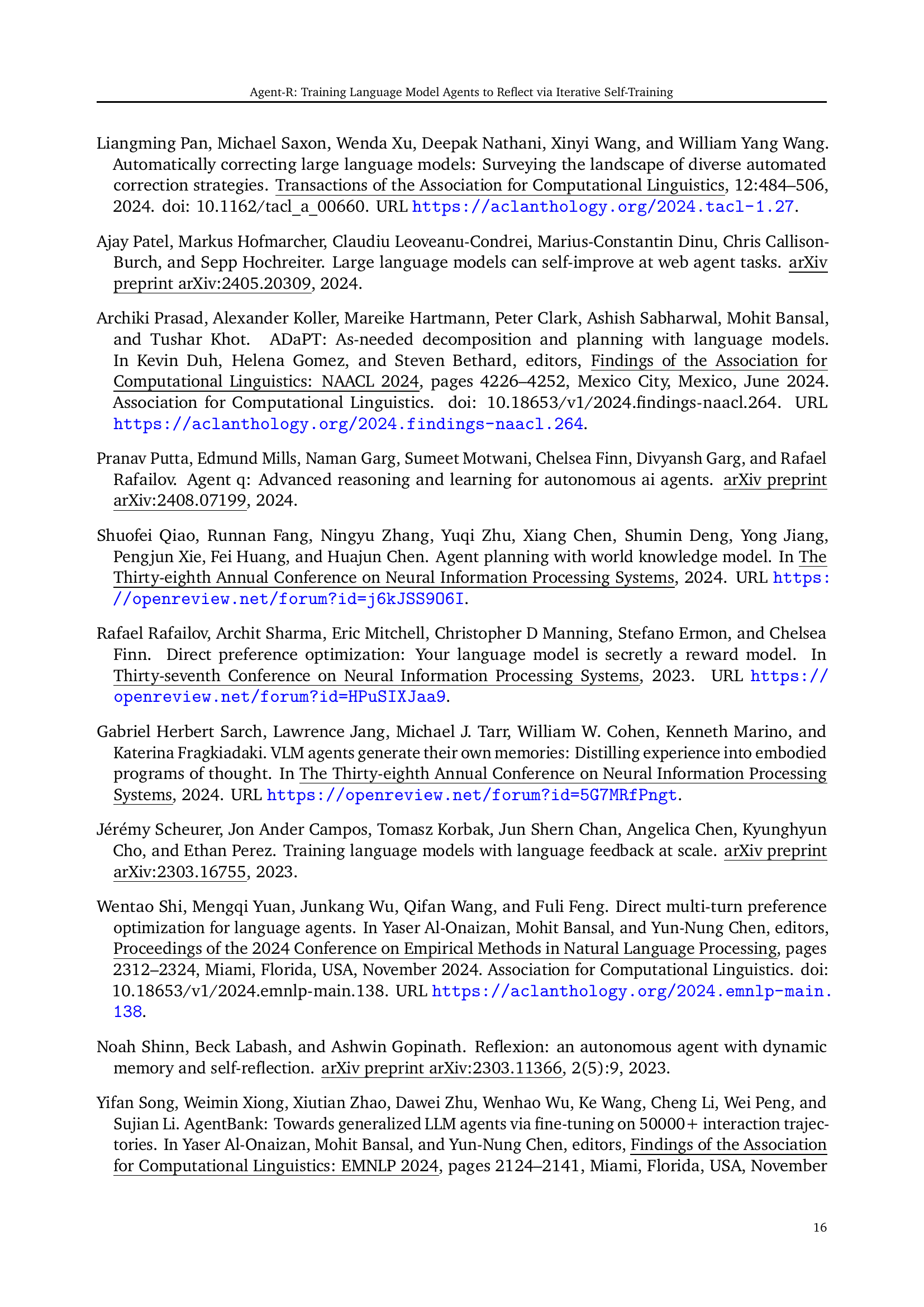

| Method | WebShop | SciWorld | TextCraft | Average |

| Close-sourced Models | ||||

| DeepSeek-Chat (Liu et al., 2024a) | 11.00 | 16.80 | 23.00 | 16.93 |

| Claude-3-Haiku (Anthropic, 2024) | 5.50 | 0.83 | 0.00 | 2.11 |

| Claude-3-Sonnet (Anthropic, 2024) | 1.50 | 2.78 | 38.00 | 14.09 |

| GPT-3.5-Turbo (OpenAI, 2022) | 12.50 | 7.64 | 47.00 | 22.38 |

| GPT-4-Turbo (OpenAI, 2023) | 15.50 | 14.38 | 77.00 | 35.63 |

| GPT-4o (OpenAI, 2023) | 25.48 | 46.91 | 64.00 | 45.46 |

| Open-sourced Models | ||||

| Llama2-Chat-13B (Touvron et al., 2023) | 1.00 | 0.83 | 0.00 | 0.61 |

| AgentLM-7B (Zeng et al., 2024a) | 36.50 | 2.75 | 0.00 | 13.08 |

| AgentLM-13B (Zeng et al., 2024a) | 39.50 | 10.68 | 4.00 | 18.06 |

| Agent-FLAN (Chen et al., 2024d) | 40.35 | 28.64 | 16.00 | 28.33 |

| Llama-3.1-8B-Instruct (Dubey et al., 2024) | 19.65 | 14.36 | 26.00 | 20.00 |

| w/ ETO (Song et al., 2024b) | 52.80 | 67.55 | 75.00 | 65.12 |

| w/ Direct-Revision | 54.44 | 66.65 | 66.00 | 62.36 |

| \cdashline1-5 w/ Agent-R | 63.91 | 70.23 | 78.00 | 70.71 |

🔼 This table presents the performance comparison of various language models on three interactive tasks: WebShop, SciWorld, and TextCraft. The Llama-3.1-8B model is trained using revision trajectories generated by the Agent-R method across three iterations. The results are then compared against several other well-known closed-source and open-source language models as well as other agent-based methods. The comparison highlights the improved performance achieved by using Agent-R’s self-correction mechanism.

read the caption

Table 2: Results of three interactive environments. We train Llama-3.1-8B on revision trajectories collected from Agent-R for three iterations and compare its performance with various models.

| Method | WebShop | SciWorld | TextCraft | Average |

| GPT-4o | 24.10 | 35.56 | 8.22 | 22.63 |

| AgentLM-7B | 20.96 | 0.00 | 0.00 | 6.99 |

| AgentLM-13B | 23.20 | 0.22 | 0.00 | 7.81 |

| Agent-FLAN | 19.37 | 0.00 | 0.00 | 6.46 |

| Llama-3.1-8B | ||||

| w/ Optimal | 31.38 | 1.15 | 0.00 | 10.84 |

| w/ Optimal+ | 25.03 | 0.00 | 0.00 | 8.34 |

| w/ ETO | 35.78 | 3.47 | 0.00 | 13.08 |

| Llama-3.1-8B w/ Direct-Revision Trajectories | ||||

| # Iter1 | 40.26 | 19.09 | 11.35 | 23.57 |

| # Iter2 | 40.35 | 27.45 | 22.97 | 30.26 |

| # Iter3 | 42.02 | 35.27 | 29.73 | 35.67 |

| Llama-3.1-8B w/ Trajectories from Agent-R | ||||

| # Iter1 | 46.51 | 40.82 | 32.43 | 39.92 |

| # Iter2 | 47.51 | 45.22 | 35.14 | 42.62 |

| # Iter3 | 48.22 | 48.79 | 43.24 | 46.75 |

🔼 Table 3 presents a comparison of the performance of Llama-3.1-8B language model trained using different sets of trajectories. It contrasts the results of training with: trajectories generated by Agent-R, optimal trajectories (where all actions lead to the highest reward), optimal trajectories generated by GPT-40, trajectories generated by combining optimal and direct revision trajectories, and trajectories exclusively generated using a direct revision approach. The table quantifies this performance across three interactive agent environments (WebShop, SciWorld, TextCraft) and provides an average score across them all.

read the caption

Table 3: The revision results of different methods. w/ Optimal+ means the golden trajectories for training Llama-3.1-8B are generated from GPT-4o.

| You are a good verifier of interactive environments. You will be given a history log that memorizes an agent interacting with the environment to solve a task. The format of the log is |

| ### |

| Action: Action |

| Observation: Observation |

| ### |

| Log: Task Description: {task description} |

| {history log} |

| Current Action: {node action} |

| Current Observation: {node observation} |

| You need to verify whether the current action is good or bad or uncertain. |

| - A good action is one that is greatly helpful to solve the task. |

| - A bad action is one that is greatly harmful to solve the task. |

| - An uncertain action is one that is neither good nor bad. You cannot judge based on the current information. |

| You must give reasons first and then give the response with the format: Judgment: <Good or Bad or Uncertain> |

🔼 This table presents the prompt templates used in the Agent-R framework to guide the model in identifying the transition point between a bad trajectory and a good trajectory during the self-correction process. The prompt provides the model with the task description, the history log of the agent’s interactions with the environment, the current action and observation, and instructions on how to determine whether the current action is good, bad, or uncertain, based on the provided information. This step is crucial for the model-guided reflection trajectory generation phase of Agent-R.

read the caption

Table 4: Prompt templates of determining transition point in Agent-R.

| Revision Thoughts |

| Thought 1: I realize my approach was flawed. I need to revise it. |

| Thought 2: I took the wrong actions. I need to identify the right path. |

| Thought 3: My actions were incorrect. I must adjust my strategy. |

| Thought 4: I see an error in my actions. I need to fix it. |

| Thought 5: My judgment was incorrect. I need to rethink it. |

| Thought 6: I overlooked something important. I need to address it. |

| Thought 7: I recognize my mistake. Let’s find a better solution. |

| Thought 8: I recognize my failure. I need to learn and move forward. |

| Thought 9: My decision was wrong. I should reevaluate. |

| Thought 10: I made an error. I must determine how to correct it. |

🔼 This table lists ten example sentences that can be used as revision signals to guide the agent in correcting erroneous trajectories. These phrases reflect different ways an agent might recognize and articulate its errors, prompting it to revise its course of action and generate improved trajectories.

read the caption

Table 5: Ten revision thoughts to construct revision trajectories.

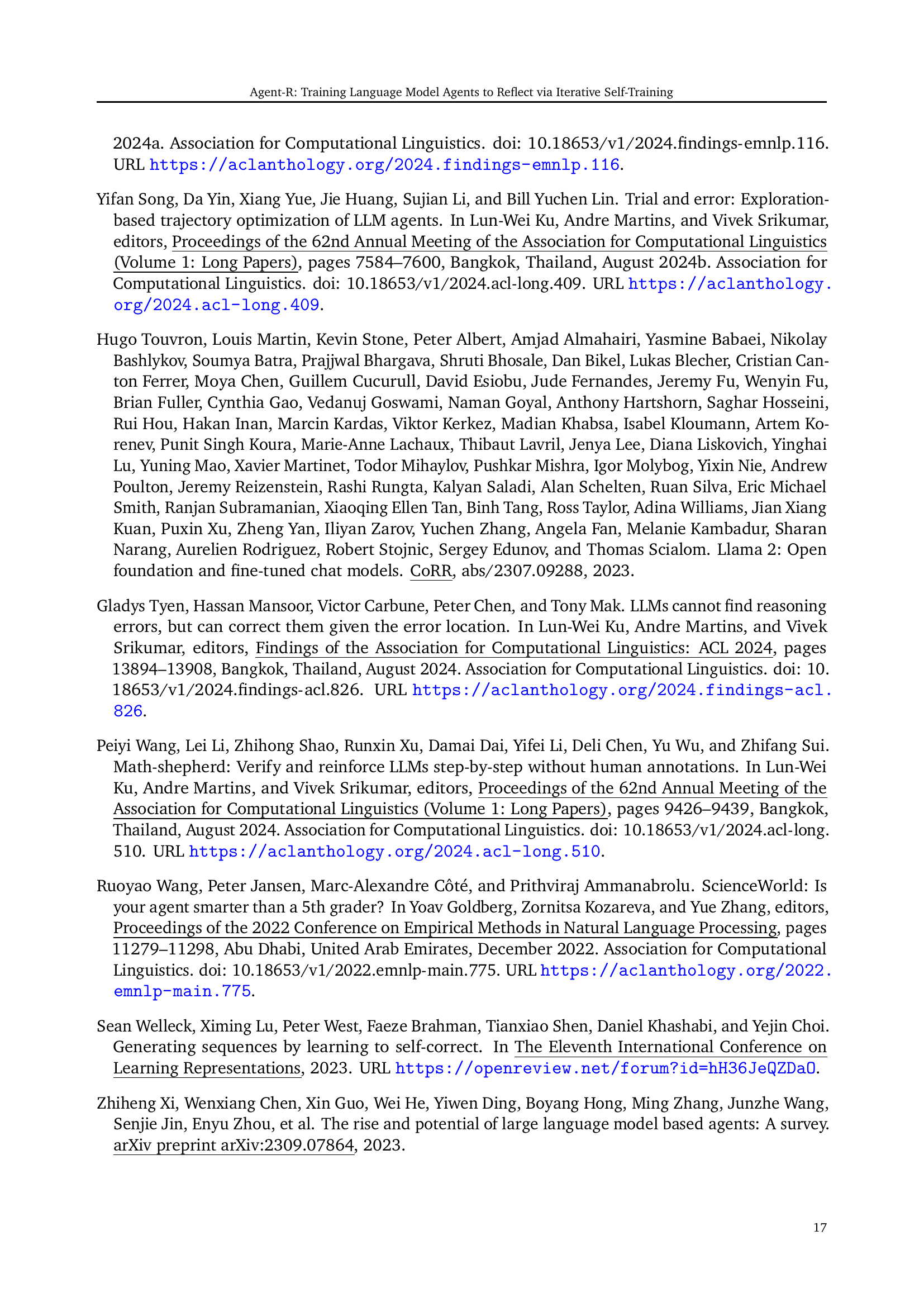

| Dataset | Iteration | Method | WebShop | SciWorld | TextCraft | Average |

| Direct-Revision + Good Trajectory | 1 | Single | 48.06 | 64.52 | 57.00 | 56.53 |

| Multi | 42.66 | 63.10 | 48.00 | 51.25 | ||

| \cdashline2-7 | 2 | Single | 55.56 | 64.52 | 63.00 | 61.03 |

| Multi | 46.93 | 64.23 | 64.00 | 58.39 | ||

| \cdashline2-7 | 3 | Single | 58.82 | 65.51 | 71.00 | 65.11 |

| Multi | 54.44 | 66.65 | 66.00 | 62.36 | ||

| Trajectory from Agent-R | 1 | Single | 49.80 | 64.02 | 60.00 | 57.94 |

| Multi | 45.84 | 66.71 | 64.00 | 58.85 | ||

| \cdashline2-7 | 2 | Single | 56.34 | 65.62 | 71.00 | 64.32 |

| Multi | 52.05 | 68.28 | 76.00 | 65.44 | ||

| \cdashline2-7 | 3 | Single | 60.66 | 67.22 | 76.00 | 67.96 |

| Multi | 63.91 | 70.23 | 78.00 | 70.71 |

🔼 Table 6 presents a detailed comparison of the performance achieved by different training methods on three interactive environments: WebShop, SciWorld, and TextCraft. It shows the average performance across multiple iterations (1, 2, and 3) using both single-task and multi-task training approaches. The methods compared include training with trajectories generated by Agent-R (the proposed method), a direct-revision approach combined with good trajectories, and training with optimal and good trajectories. The table allows readers to assess the effectiveness of Agent-R against other approaches and understand the impact of single-task versus multi-task training strategies on the final results.

read the caption

Table 6: Detailed Results of different training methods on three interactive environments.

| WebShop |

| Example 1: |

| Assistant: Thought: I think I should search for light blue shorts in a specific size and price range to get more accurate results. Action: search[light blue 36w 30l price:40] |

| Human: [Search Result] |

| Assistant: Thought: I went in the wrong direction with my search. I need to reset and try again. |

| Human: ok. |

| Assistant: Thought: I need to back to search. Action: click[Back to Search] |

| Human: [Search Result] |

| Example 2: |

| Assistant: Thought: I think the second option is a bit off-topic, but it’s a good price, so I’ll consider it. Action: click[B08226NDZW] |

| Human: [Search Result] |

| Assistant: Thought: I recognize my mistake. Let’s find a better solution. Action: wait |

| Human: ok. |

| Assistant: Thought: I think the search query is not specific enough, so I’ll try to refine it by adding more keywords. Action: search[machine wash men’s dress shirts polyester heathered cotton classic fit men 3t price:50.00] |

| Human: [Search Result] |

| Sciworld |

| Example 1: |

| Assistant: Thought: I should open door to kitchen. Action: open door to kitchen |

| Human: The door is now open. |

| Assistant: Thought: I took the wrong steps. I need to identify the right path. Action: wait |

| Human: ok. |

| Assistant: Thought: I should go to kitchen. Action: go to kitchen |

| Human: You move to the kitchen. |

| Example 2: |

| Assistant: Thought: I think I should try to determine the temperature of the unknown substance B. Action: use thermometer on unknown substance B |

| Human: the thermometer measures a temperature of 19 degrees celsius |

| Assistant: Thought: I misunderstood the situation. Time to reassess. Action: wait |

| Human: ok. |

| Assistant: Thought: I think I should check the unknown substance B again to make sure the temperature is accurate. Action: use thermometer on unknown substance B |

| Human: the thermometer measures a temperature of 16 degrees celsius |

| TextCraft |

| Example 1: |

| Assistant: Thought: I should check if I can get blackstone directly from the environment or the inventory. Action: inventory |

| Human: Inventory: You are not carrying anything. |

| Assistant: Thought: I recognize my mistake. Let’s find a better solution. Action: wait |

| Human: ok. |

| Assistant: Thought: I should go get the blackstone from the environment. Action: get 3 blackstone |

| Human: Got 3 blackstone |

| Example 2: |

| Assistant: Thought: I can use the quartz slab to craft something else that might be useful. Action: craft quartz wall using 4 quartz slab |

| Human: Could not find enough items to craft minecraft:quartz_wall |

| Assistant: Thought: I realize I chose poorly. I must change direction. Action: wait |

| Human: ok. |

| Assistant: Thought: I think I should try to get more quartz to craft the quartz wall. Action: get 2 quartz slab |

| Human: Got 2 quartz slab |

🔼 This table showcases examples of how Llama-3.1-8B, a language model trained using the Agent-R framework, corrects errors within its generated trajectories. Specifically, it presents instances where the model identifies and rectifies incorrect actions during three distinct tasks: WebShop (online shopping), SciWorld (scientific reasoning), and TextCraft (Minecraft item crafting). For each task, the table displays the initial erroneous actions of the model, the model’s reflection on its mistakes, and the subsequent corrective steps. WebShop’s search results are excluded due to their extensive length. This table demonstrates the Agent-R method’s effectiveness in enabling real-time error correction and improved trajectory generation in complex, interactive tasks.

read the caption

Table 7: Example of error correction from the trajectory generated by Llama-3.1-8B, trained on trajectories from Agent-R during the third iteration. The search results in WebShop are omitted due to their length.

| WebShop |

| Judgment (Invalid Actions): The action is bad because it does not match the original search criteria. Therefore, Judgment: Bad |

| Judgment (Mismatch Observation): However, the product does not match the description of a machine wash men’s dress shirt with polyester heathers, heathers cotton, cotton heather, needle sleeve, classic fit, and color heather grey, as specified in the task description. Therefore, Judgment: Bad |

| Judgment (Irrelevant Actions): The action is bad because it is not relevant to the task of finding men’s shirts with specific characteristics. Therefore, Judgment: Bad |

| Sciworld |

| Judgment (Invalid Actions): There is no orange mentioned in the environment, so this action is invalid. It’s not a helpful action, but rather an invalid input. Therefore, Judgment: Bad |

| Judgment (Mismatch Observation): The current task is to determine if the aluminum foil is electrically conductive. The agent has already connected the aluminum foil to the battery and the green light bulb, and observed that the light bulb turned on. This suggests that the aluminum foil is electrically conductive. Moving the aluminum foil to the yellow box would mean that the agent is giving up on the possibility of the aluminum foil being conductive, which is not in line with the current observation. Therefore, Judgment: Bad |

| Judgment (Irrelevant Actions): The agent is not using the art studio’s resources effectively, as the cup containing red paint is not related to the task of creating green paint. Therefore, Judgment: Bad |

| TextCraft |

| Judgment (Invalid Actions): The agent is stuck in an infinite loop of trying to execute an invalid action, which is not helpful in solving the task. Therefore, Judgment: Bad |

| Judgment (Mismatch Observation): The action is not good because it is not possible to craft a dried kelp block with 9 dried kelp. The observation suggests that the agent lacks the necessary items to craft the block. This action is actually harmful to the task of crafting the block. Therefore, Judgment: Bad |

| Judgment (Irrelevant Actions): The agent is trying to craft diamond boots, which requires 4 diamonds. The agent has already obtained 4 diamonds, which is sufficient to craft the boots. However, the agent is trying to craft a diamond block instead, which requires 9 diamonds. This action is not helpful in achieving the goal of crafting diamond boots, as it will waste the 4 diamonds the agent already has. Therefore, Judgment: Bad |

🔼 This table displays example results from evaluating the adaptive transition points method within Agent-R. The evaluations were performed using the Llama-3.1-8B language model, trained on trajectories generated by Agent-R during its third iteration. For each example, the table shows a judgment (categorized as Invalid Actions, Mismatch Observation, or Irrelevant Actions), the reasoning behind that judgment, and the final judgment made (Good or Bad). The examples illustrate how the model identifies and classifies errors made in an agent’s trajectory within the interactive environments.

read the caption

Table 8: Example results from the evaluation of adaptive transition points. These judgments are all generated by Llama-3.1-8B, trained on trajectories from Agent-R during the third iteration.

Full paper#