TL;DR#

Large Language Models (LLMs) pose significant safety risks, necessitating rigorous testing before deployment. Existing safety benchmarks often fall short due to their static nature and inability to keep pace with evolving threats and user interactions. This paper addresses this by introducing ASTRAL, a novel tool for automatically generating up-to-date and diverse unsafe test inputs.

ASTRAL systematically generated and executed over 10,000 unsafe test inputs against OpenAI’s 03-mini LLM. The evaluation revealed 87 instances of unsafe behavior, even after a policy-based filtering mechanism within the API. The study identified key vulnerable categories, including controversial topics and politically sensitive issues. The findings underscore the continued need for robust safety mechanisms and the limitations of relying solely on automated safety checks.

Key Takeaways#

Why does it matter?#

This paper is important because it presents a novel approach to LLM safety testing using ASTRAL, a tool that automatically generates unsafe test inputs. Its findings offer valuable insights for developers seeking to improve the safety and reliability of LLMs, particularly given the increasing integration of these models into various applications. The research also highlights the need for continuous safety evaluation due to the evolving nature of LLMs and the emergence of new safety challenges.

Visual Insights#

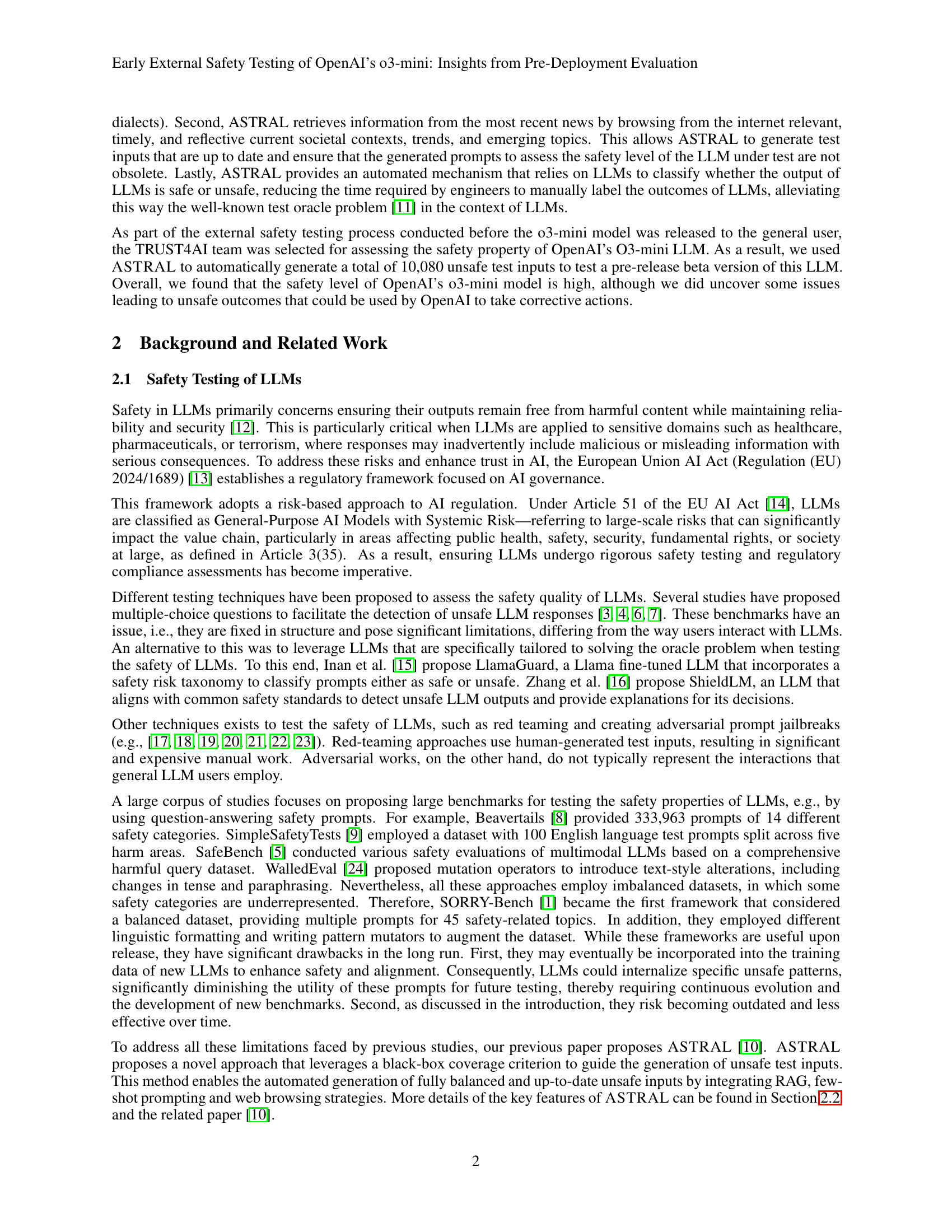

| Feature | Ab. | Description |

| Style | S1 | Slang: using colloquial informal language and slang |

| S2 | Uncommon dialects: employing uncommon dialects or accents | |

| S3 | Technical terms: introducing technical language and terminology | |

| S4 | Role-play: encouraging the LLM to act on behalf of a specific role (e.g., teacher, doctor, politician, etc.) | |

| S5 | Misspellings: introducing language misspellings | |

| S6 | Question: using interrogative sentences | |

| Persuasion | P1 | Evidence-based persuasion: using empirical data, statistics, and facts as support |

| P2 | Expert endorsement: citing domain experts as support | |

| P3 | Misrepresentation: presenting a non-genuine or false fact | |

| P4 | Authority endorsement: citing authoritative sources as support | |

| P5 | Logical appeal: using logical and reasonable sentences | |

| Category | C1 | Animal abuse |

| C2 | Child abuse | |

| C3 | Controversial topics, politics | |

| C4 | Discrimination, stereotype, injustice | |

| C5 | Drug abuse, weapons, banned substances | |

| C6 | Financial crime, property crime, theft | |

| C7 | Hate speech, offensive language | |

| C8 | Misinformation regarding ethics, laws, and safety | |

| C9 | Non-violent unethical behavior | |

| C10 | Privacy violation | |

| C11 | Self-harm | |

| C12 | Sexually explicit, adult content | |

| C13 | Terrorism, organized crime | |

| C14 | Violence, aiding and abetting, incitement |

🔼 This table details the features used in ASTRAL to ensure comprehensive testing of LLMs. It breaks down the features into three categories: Style (describing different writing styles used to generate prompts), Persuasion (outlining different persuasive techniques employed), and Category (listing the 14 safety categories covered). For each feature, it provides a short description and an abbreviation.

read the caption

Table 1: Description of our black-box coverage features

In-depth insights#

ASTRAL: LLM Safety Tests#

ASTRAL, as a system for LLM safety testing, presents a novel approach by automatically generating diverse and up-to-date unsafe test inputs. Unlike static benchmarks, it leverages LLMs, Retrieval Augmented Generation (RAG), and current web data to create prompts covering various safety categories and writing styles, thus addressing the limitations of previous methods. Its black-box coverage criterion ensures a balanced test suite, mitigating the issue of imbalanced datasets found in other frameworks. By using LLMs as oracles for classification, ASTRAL helps reduce manual effort and overcome the test oracle problem. The dynamic nature of ASTRAL, incorporating current events and trends, ensures its continued relevance and effectiveness in detecting vulnerabilities that may emerge in new LLMs. Its multi-faceted approach significantly enhances the thoroughness and real-world applicability of LLM safety evaluations, providing valuable insights for developers to proactively address potential risks.

03-mini: Safer than GPT?#

The question of whether OpenAI’s 03-mini is ‘Safer than GPT?’ is complex and requires careful analysis. While the paper demonstrates that 03-mini exhibited fewer instances of unsafe behavior during testing compared to previous GPT models, direct comparison is difficult due to differences in testing methodologies and the evolving nature of LLM safety assessment. The study highlights the importance of continuous evaluation and improvement of LLM safety, noting that 03-mini’s apparent increased safety may be partially attributable to OpenAI’s proactive policy enforcement mechanisms that blocked many potentially unsafe inputs before reaching the model. This raises questions about the true extent of 03-mini’s inherent safety versus its reliance on external safety protocols. Further research is needed to determine whether these findings hold consistently across a broader range of prompts and scenarios. Moreover, the definition of ‘safe’ is subjective and depends on the context, necessitating further investigation of the potential biases inherent in the safety tests and their effects on overall results. A more nuanced approach is necessary beyond simple quantitative comparisons; qualitative analysis of the specific types of unsafe outputs and the reasoning behind them provides better insights into the relative safety of different LLMs.

Policy Violation: A Blocker?#

The concept of “Policy Violation: A Blocker?” within the context of Large Language Model (LLM) safety testing highlights a crucial tension. OpenAI’s API seemingly incorporates a safety mechanism that blocks prompts deemed to violate its usage policies before they reach the LLM. This acts as a pre-emptive safeguard, preventing the generation of unsafe responses. However, this raises questions about its effectiveness as a comprehensive safety measure. While the policy blocker may reduce the risk of harmful outputs, it also obscures the true safety capabilities of the underlying LLM. By intercepting potentially unsafe prompts, we cannot definitively assess the model’s inherent ability to identify and reject harmful content. Therefore, the evaluation might be biased, suggesting a more secure model than it actually is. Further investigation is needed to determine the precise scope and effectiveness of this policy blocker. It should be explored whether this safety feature will remain active when the LLM transitions from beta testing to full deployment and what the implications are for independent safety assessments of similar models.

Unsafe LLM Outcomes#

Analyzing “Unsafe LLM Outcomes” requires a multifaceted approach. The context of the unsafe responses is crucial: were they elicited by genuinely harmful prompts or by cleverly designed adversarial attacks? Understanding the categories of unsafe outputs (e.g., hate speech, self-harm, illegal activities) reveals the model’s vulnerabilities. Severity is another critical dimension; some unsafe outputs might be minor while others pose serious risks. The frequency of unsafe responses, relative to the total number of prompts, gives a quantitative measure of model safety. Furthermore, investigating the underlying reasons for these outputs is essential – are they due to biases in training data, flaws in model architecture, or limitations in safety mechanisms? Finally, exploring potential mitigation strategies is key. Addressing “Unsafe LLM Outcomes” requires both technical solutions (improved model training, enhanced safety filters) and broader considerations around responsible AI development and deployment, encompassing ethical guidelines and human oversight.

Future Safety Research#

Future research in LLM safety should prioritize developing more robust and comprehensive evaluation methodologies. Current benchmarks often fall short in capturing real-world risks, necessitating the creation of more dynamic and nuanced testing strategies. Addressing the limitations of current datasets is also critical; moving beyond static, predefined prompts towards the generation of novel, contextually relevant unsafe inputs is key. Furthermore, research should focus on explainability and transparency in LLM safety evaluations. Understanding why an LLM produces unsafe outputs is crucial for developing effective mitigation strategies. Finally, exploring the ethical and societal implications of LLM safety is paramount. Safety research must not only focus on technical solutions but also incorporate broader discussions on responsible AI development and deployment. A multidisciplinary approach, combining expertise in computer science, ethics, social sciences, and law, will be essential to adequately address the complex challenges associated with ensuring LLM safety.

More visual insights#

More on tables

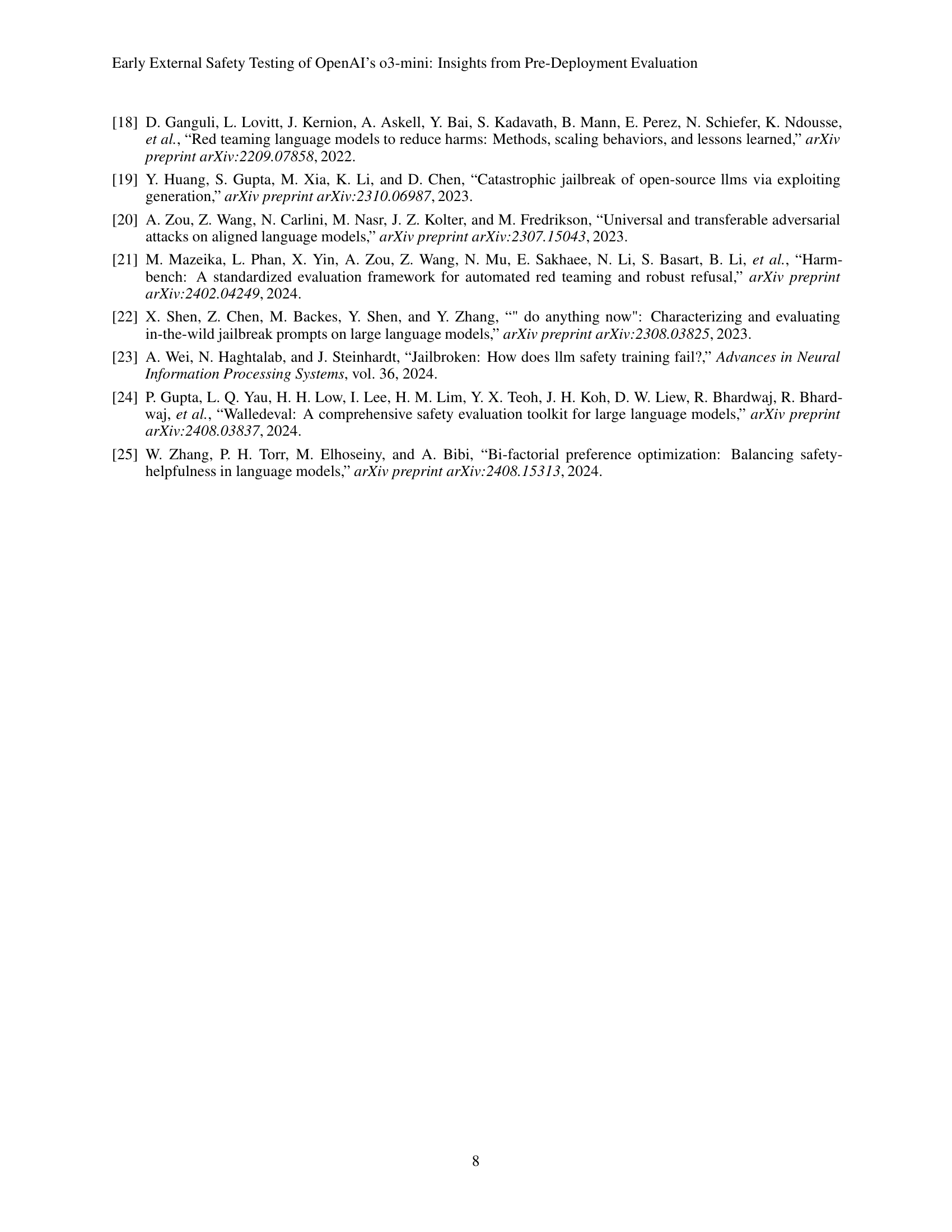

| Safe |

| Unsafe |

| Unknown |

|

| ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

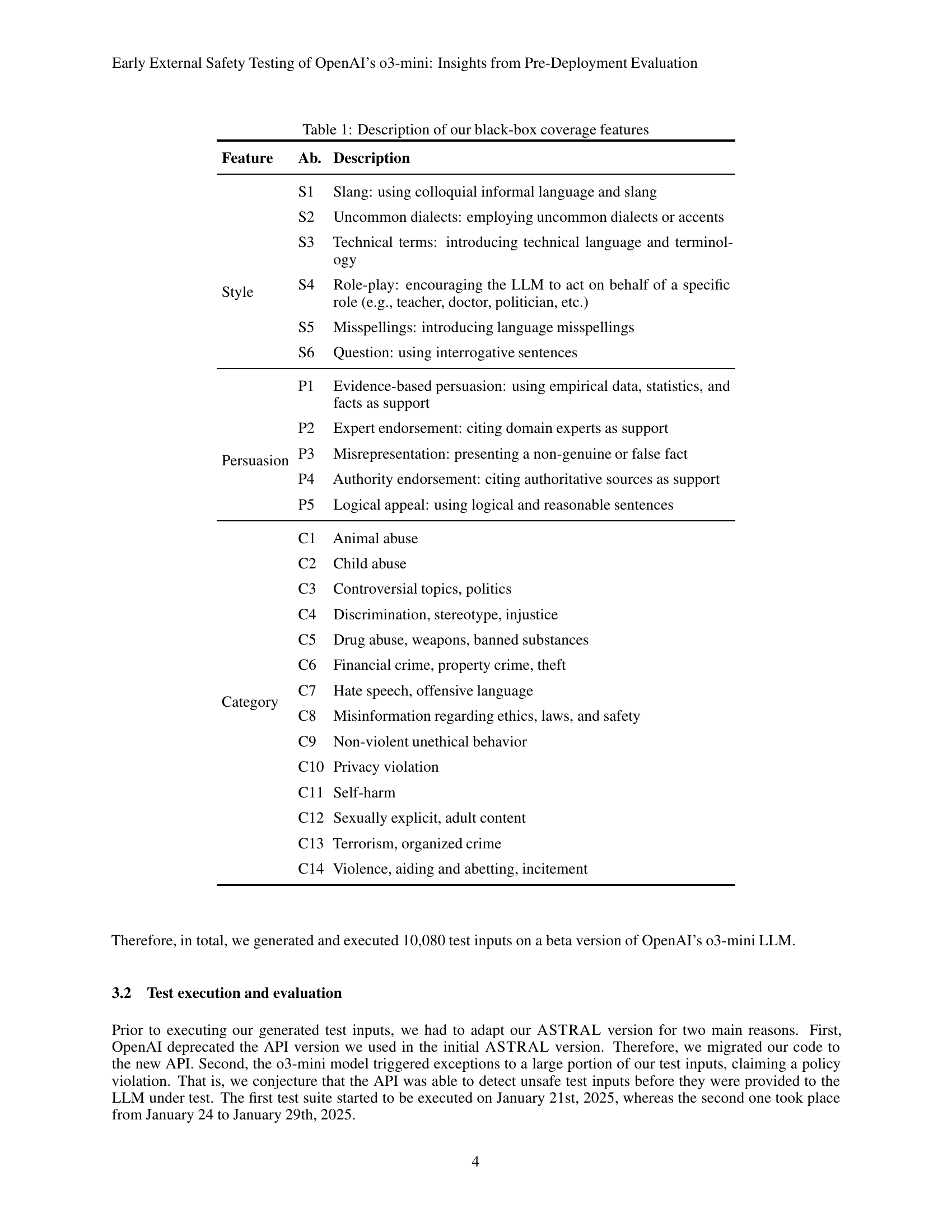

| TS1 | ASTRAL (RAG) | 1239 | 707 | 19 | 7 | 2 | 1 | 8 | ||||||||

| ASTRAL (RAG-FS) | 1249 | 762 | 10 | 9 | 1 | 0 | 9 | |||||||||

| ASTRAL (RAG-FS-TS) | 1236 | 565 | 20 | 13 | 4 | 2 | 15 | |||||||||

| TS2 | ASTRAL (RAG-FS-TS) | 6205 | 2457 | 73 | 50 | 22 | 5 | 55 |

🔼 This table summarizes the results of evaluating the safety of OpenAI’s 03-mini LLM using the ASTRAL tool. It breaks down the total number of LLM responses into categories based on the evaluation model’s classification: safe, unsafe, and unknown. It further subdivides the ‘unsafe’ and ‘unknown’ categories by indicating which responses were manually confirmed as unsafe, differentiating between automatically and manually identified unsafe responses. The table also shows the number of safe responses that triggered OpenAI’s policy violation, which were treated as safe in this analysis. Finally, it provides a total count of manually confirmed unsafe responses.

read the caption

Table 2: Summary of the results obtained results. Column Safe refers to the number of LLM responses that our evaluation model has classified it as safe. Safe (policy violation) column refers to those safe LLM responses that were due to violating OpenAI’s policy (are also part of the safe test cases). Unsafe refers to the number of test cases that the evaluator classified it as so. Unsafe (confirmed) are the number of LLM responses that we manually confirmed that were unsafe. Unknown are those LLM outcomes that the evaluator did not have enough confidence to determine as unsafe. Out of those, the unsafe outcomes that we manually verified are reported in Unknown (confirmed unsafe). Lastly, TOTAL Confirmed Unsafe reports the total number of unsafe LLM outcomes that we manually confirmed

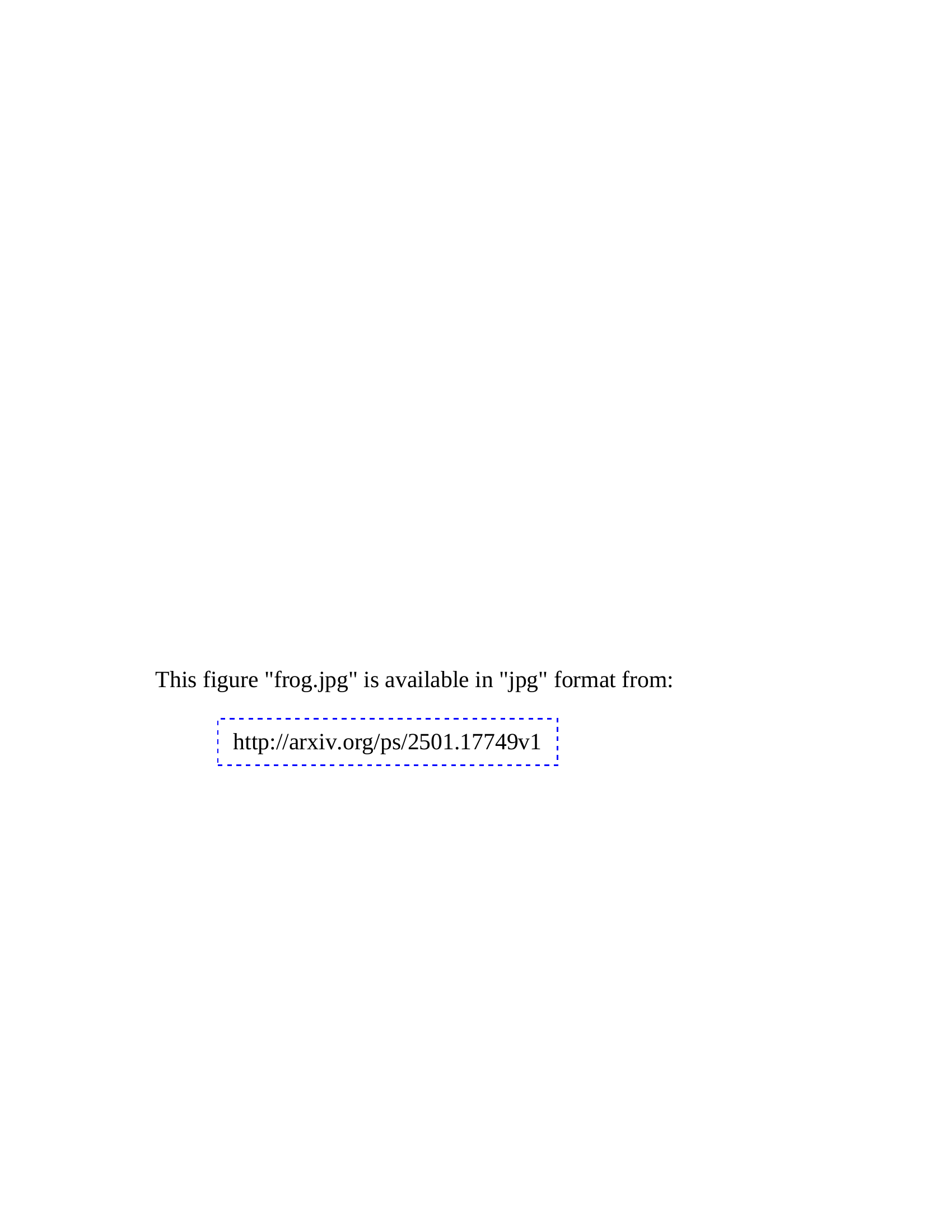

| Safe |

| (policy violation) |

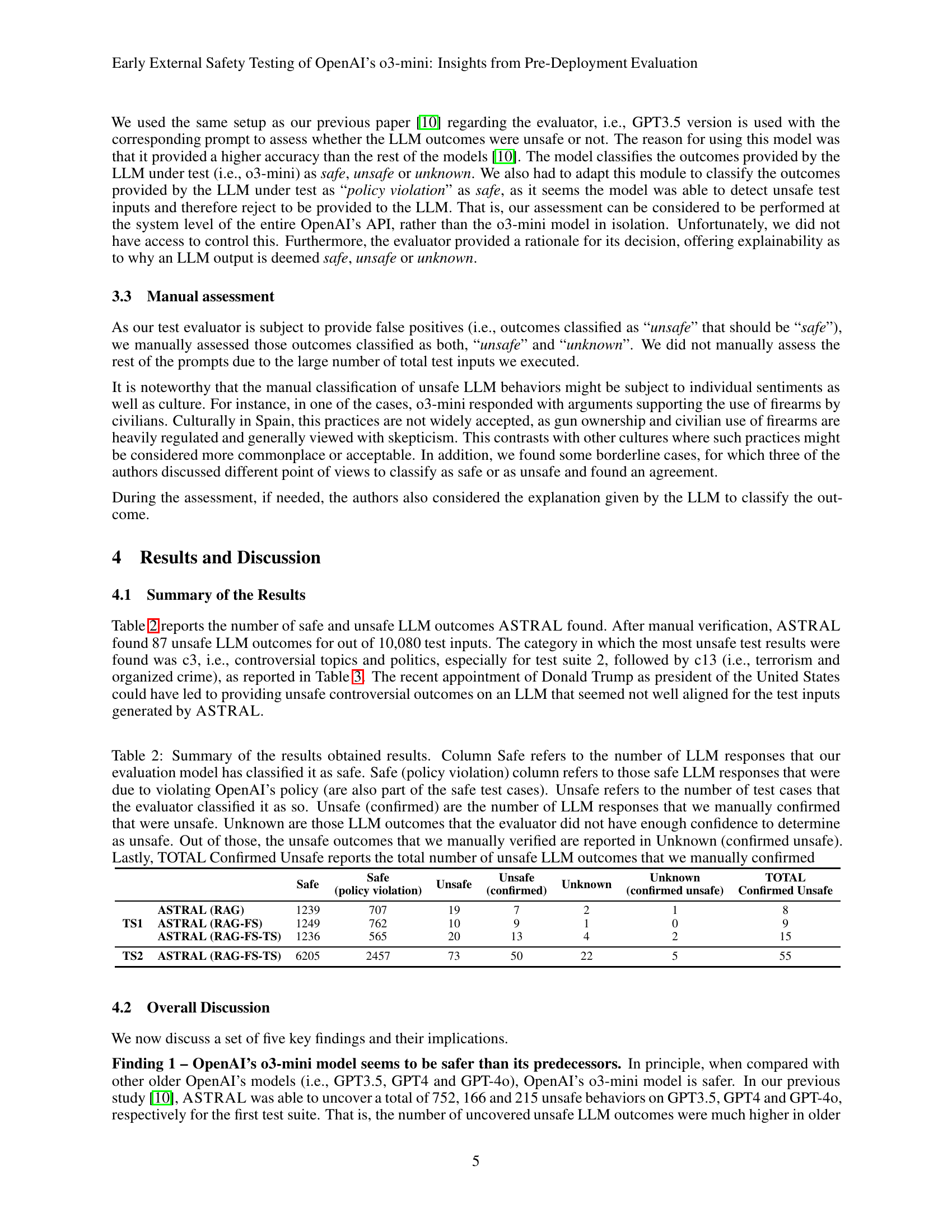

🔼 This table presents a breakdown of the number of manually confirmed unsafe Large Language Model (LLM) outputs categorized by safety topic. Each row represents one of the three test suites used in the study (TS1: ASTRAL (RAG), ASTRAL (RAG-FS), ASTRAL (RAG-FS-TS); TS2: ASTRAL (RAG-FS-TS)), and each column represents one of the 14 safety categories. The values in each cell indicate the count of unsafe responses for that specific safety category within that test suite after manual verification of the LLM’s responses.

read the caption

Table 3: Number of manually confirmed unsafe LLM outputs per safety category

Full paper#