TL;DR#

Supervised Fine-Tuning (SFT) enhances LLM reasoning, but faces data costs and performance plateaus. To address these challenges, the paper introduces Thinking Preference Optimization (ThinkPO), a post-SFT method. ThinkPO enhances long chain-of-thought reasoning without needing new CoT responses. It uses easily available short CoT responses as rejected answers and long CoT responses as chosen ones, encouraging the model to favor longer outputs.

ThinkPO significantly improves SFT-ed model’s reasoning performance. It increases math reasoning accuracy and output length. The method continually boosts the performance of distilled SFT models. For example, the method increased DeepSeek-R1-Distill-Qwen-7B’s performance on MATH500 from 87.4% to 91.2%. ThinkPO maximizes the value of existing long reasoning data and enhances reasoning performance, requiring no additional CoT responses.

Key Takeaways#

Why does it matter?#

This paper is vital for enhancing LLM reasoning. ThinkPO’s efficiency in leveraging existing data offers a cost-effective solution, aligning with the trend towards resource-efficient AI. It inspires further research into preference optimization and continual learning strategies, overcoming performance bottlenecks and improving real-world applicability.

Visual Insights#

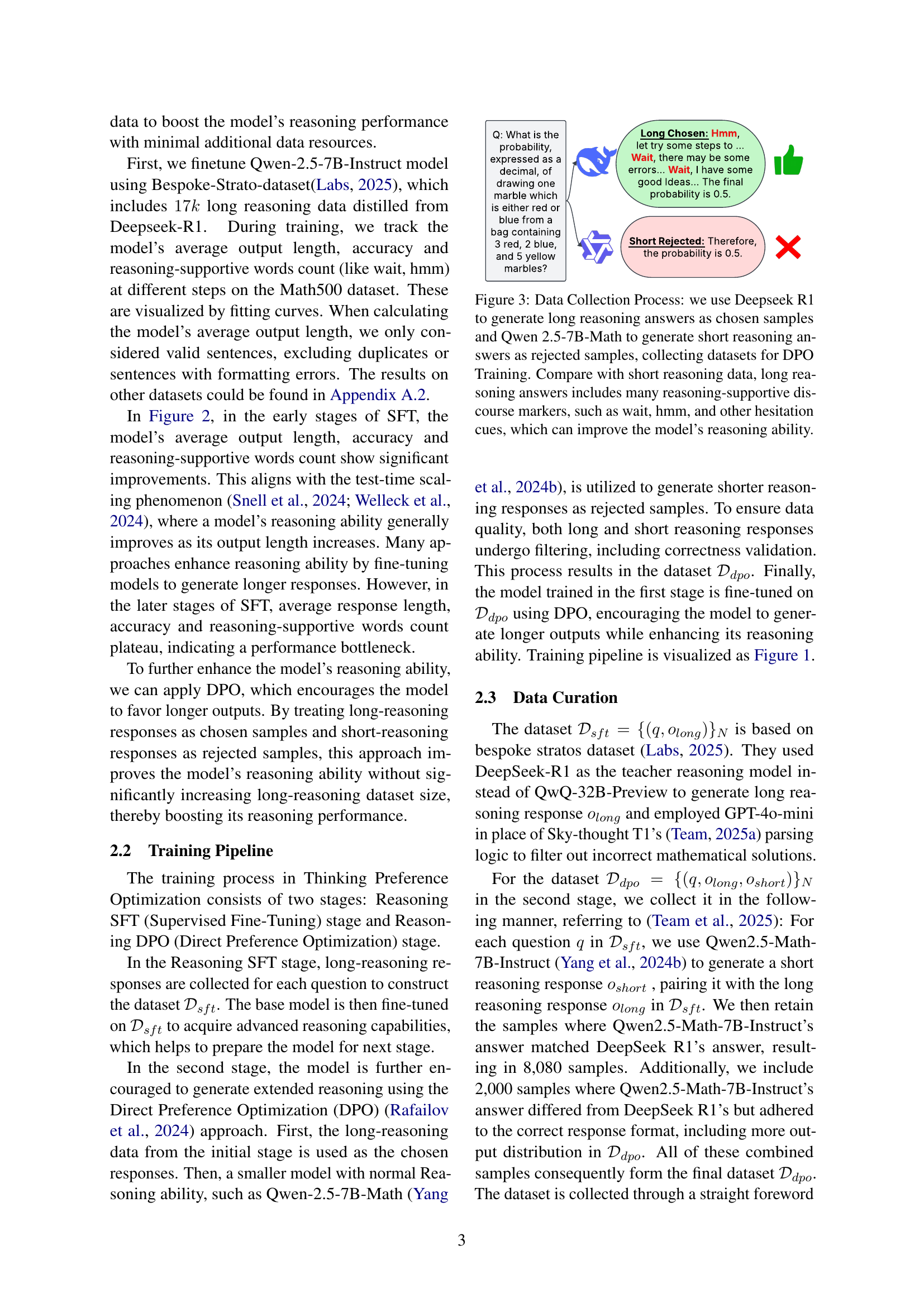

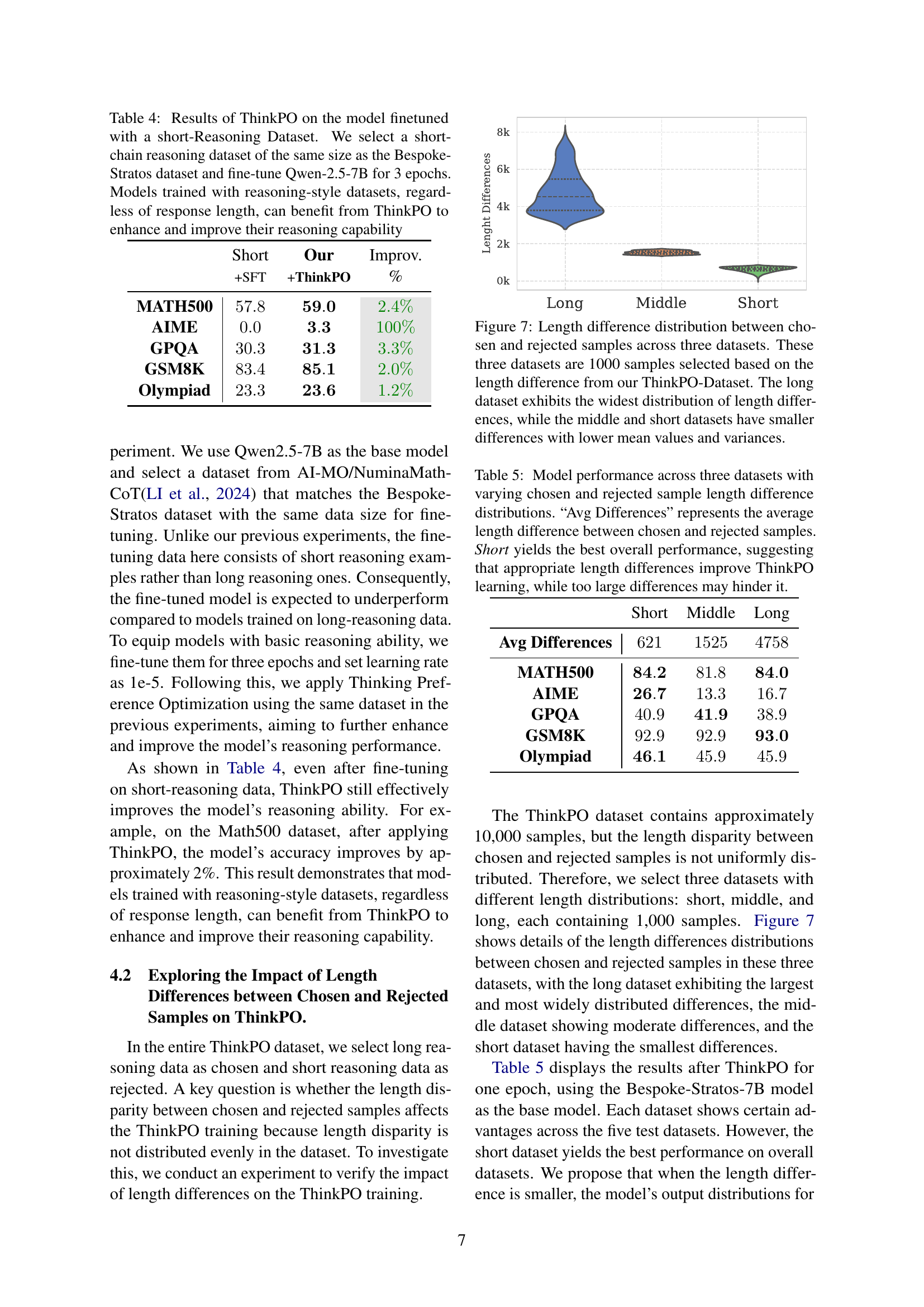

🔼 Figure 1 illustrates the Thinking Preference Optimization (ThinkPO) method and its impact on mathematical reasoning. The top panel shows how ThinkPO works: it uses long chain-of-thought (CoT) reasoning responses as positive examples and short CoT responses as negative examples during training. The bottom-left panel displays the improved performance of ThinkPO across various math reasoning benchmarks (AIME, GPQA, Olympiad, MATH500). ThinkPO significantly increased accuracy compared to both the baseline model and a model that only underwent supervised fine-tuning (SFT). The bottom-right panel demonstrates that ThinkPO led to substantially longer model outputs (measured in tokens). Specifically, for the AIME benchmark, the average response length increased from 0.94K to 23.9K tokens, showing ThinkPO’s ability to foster more detailed and thorough solutions.

read the caption

Figure 1: The illustration of our method ThinkPO and its performance on math reasoning tasks. Top: Our ThinkPO enhances fine-tuned LLMs (+SFT) by promoting detailed problem-solving—using long chain-of-thought reasoning answers as positive (chosen) samples and short chain-of-thought reasoning answers as negative (rejected) samples. Bottom Left: ThinkPO significantly boosts performance across mathematical benchmarks (e.g., 83.4% on MATH500 vs. 82.8% for +SFT and 74.0% for the Base model). Bottom Right: ThinkPO generates more detailed solutions, with average completion lengths on AIME increasing from 0.94K to 21.57K to 23.9K tokens. These results underscore Think Preference Optimization’s effectiveness in fostering and enhancing advanced mathematical reasoning.

| Accuracy | Average Response Length | |||||||

|---|---|---|---|---|---|---|---|---|

| Dataset | Base | +SFT | +ThinkPO | Improv.(%) | Base | +SFT | +ThinkPO | Improv.(%) |

| MATH500 | ||||||||

| AIME | ||||||||

| GPQA | ||||||||

| GSM8K | ||||||||

| Olympiad | ||||||||

| Avg. | 25.9% | |||||||

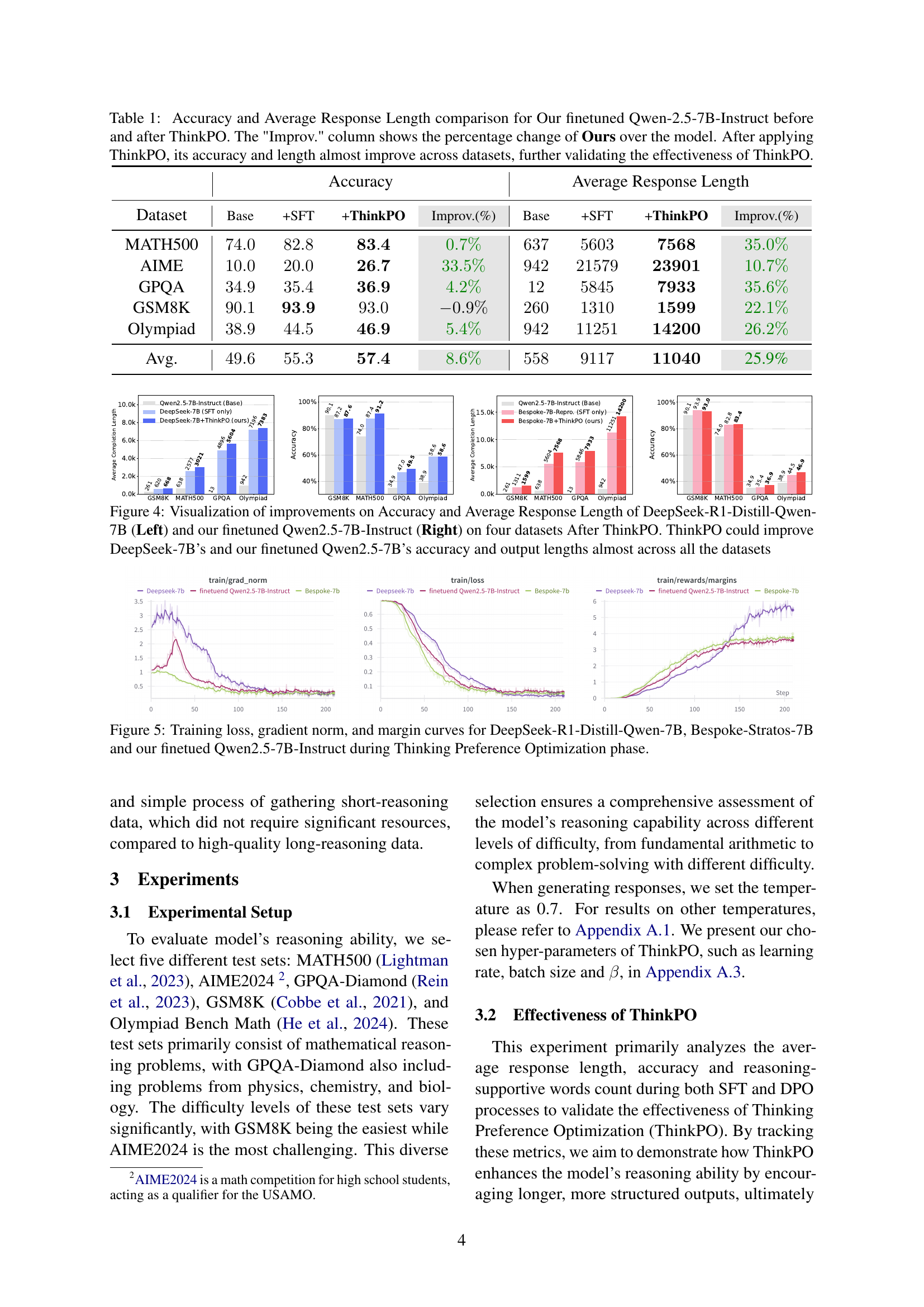

🔼 Table 1 presents a comparison of the performance of a fine-tuned Qwen-2.5-7B-Instruct language model before and after applying Thinking Preference Optimization (ThinkPO). The table shows the accuracy and average response length achieved on five different datasets (MATH500, AIME, GPQA, GSM8K, and Olympiad). The ‘Improv.’ column indicates the percentage improvement in accuracy and response length after ThinkPO is applied. The results demonstrate that ThinkPO consistently improves both the accuracy and the length of the model’s responses across the datasets, suggesting its effectiveness in enhancing reasoning abilities.

read the caption

Table 1: Accuracy and Average Response Length comparison for Our finetuned Qwen-2.5-7B-Instruct before and after ThinkPO. The 'Improv.' column shows the percentage change of Ours over the model. After applying ThinkPO, its accuracy and length almost improve across datasets, further validating the effectiveness of ThinkPO.

In-depth insights#

CoT Enhancement#

While the research paper does not explicitly delve into ‘CoT Enhancement,’ its core contribution, Thinking Preference Optimization (ThinkPO), inherently serves as a Chain-of-Thought (CoT) enhancement technique. ThinkPO refines LLMs’ reasoning by prioritizing longer, more detailed CoT outputs, which translates to encouraging models to generate more thorough and structured thought processes when problem-solving. ThinkPO enhances the ability to produce detailed, step-by-step reasoning chains without requiring expensive, newly collected data. By leveraging easily accessible short CoT responses as ’negative’ examples, ThinkPO effectively guides the model to favor expansive, articulate CoT solutions. Experimentation suggests that ThinkPO can consistently boost the CoT reasoning performance of SFT models, leading to enhanced accuracy and more detailed reasoning chains. The model’s capacity for complex problem-solving is enhanced by the increase in output length, which corresponds to more complex and detailed solutions. The ability of ThinkPO to work consistently across model sizes as well as improve performance with limited data makes it a valuable resource for CoT Enhancement.

ThinkPO Method#

ThinkPO aims to enhance reasoning in LLMs. It is built upon supervised fine-tuning (SFT) by leveraging both existing high-quality long reasoning data and readily available, or easily obtainable, short CoT reasoning responses. ThinkPO frames this as a preference optimization problem. Long CoT responses are treated as preferred or ‘chosen’ examples, while shorter, perhaps less complete, responses are used as ‘rejected’ examples. The system is trained using a direct preference optimization strategy to encourage the model to generate longer, more detailed reasoning chains. The core idea is that by favoring more elaborate reasoning, the model learns to solve problems more effectively. This approach improves the use of existing SFT data, mitigates the cost of acquiring new, high-quality data, and combats performance decline from repeated training. The goal is to produce more structured reasoning processes by leveraging easily obtained data

Model Tuning Boost#

While the paper doesn’t explicitly mention a section titled “Model Tuning Boost,” we can infer its importance in the context of improving LLM reasoning. Model tuning likely involves strategies to optimize the model’s performance after initial training. This could encompass techniques like hyperparameter optimization, where the learning rate, batch size, and other training parameters are meticulously adjusted to achieve the best possible results. Furthermore, model tuning might involve techniques like ensembling. Fine-tuning with preference optimization methods such as ThinkPO can be also effective. This is because the model learns to favor outputs that align with desired characteristics, leading to enhanced performance.

Length Impact#

Based on the study’s focus on reasoning capabilities in LLMs, the “Length Impact” likely refers to the effect of reasoning chain length on model performance. It could explore whether longer chains of thought (CoT) consistently improve accuracy or if there’s a point of diminishing returns. The research could investigate the relationship between output length and reasoning depth, examining if longer outputs simply reflect verbosity or if they indicate more thorough exploration of the problem space. Analyzing the types of reasoning errors made at different chain lengths is also possible - whether shorter chains lead to shallow analysis while longer ones result in tangential or irrelevant steps. Furthermore, the “Length Impact” section may delve into the computational trade-offs associated with increased output length, like increased inference time and memory consumption, and compare the efficiency of different CoT strategies.

Data Dynamics#

Data dynamics are crucial for understanding the ever-changing nature of data, particularly in machine learning. Datasets are rarely static; they evolve due to various factors like new data collection, changes in user behavior, or shifts in the underlying environment. Understanding the dynamics is key to model robustness, generalization, and overall effectiveness. Techniques like concept drift detection and continual learning try to monitor those drifts. Further analysis helps adapting models to avoid performance degradation. Ignoring data dynamics leads to suboptimal performance and unreliable predictions, emphasizing the importance of monitoring.

More visual insights#

More on tables

| DeepSeek-R1-Distill-Qwen-7B (Deepseek) | ||||||

|---|---|---|---|---|---|---|

| Accuracy | Average Response Length | |||||

| Dataset | Deepseek | Ours | Improv. | Deepseek | Ours | Improv. |

| (SFT) | (+ThinkPO) | (%) | (SFT) | (+ThinkPO) | (%) | |

| MATH500 | ||||||

| AIME | ||||||

| GPQA | ||||||

| GSM8K | ||||||

| Olympiad | ||||||

| Bespoke-Stratos-7B (Bespoke) | ||||||

| Accuracy | Average Response Length | |||||

| Dataset | Bespoke | Ours | Improv. | Bespoke | Ours | Improv. |

| (SFT) | (+ThinkPO) | (%) | (SFT) | (+ThinkPO) | (%) | |

| MATH500 | ||||||

| AIME | 1.1% | |||||

| GPQA | ||||||

| GSM8K | ||||||

| Olympiad | ||||||

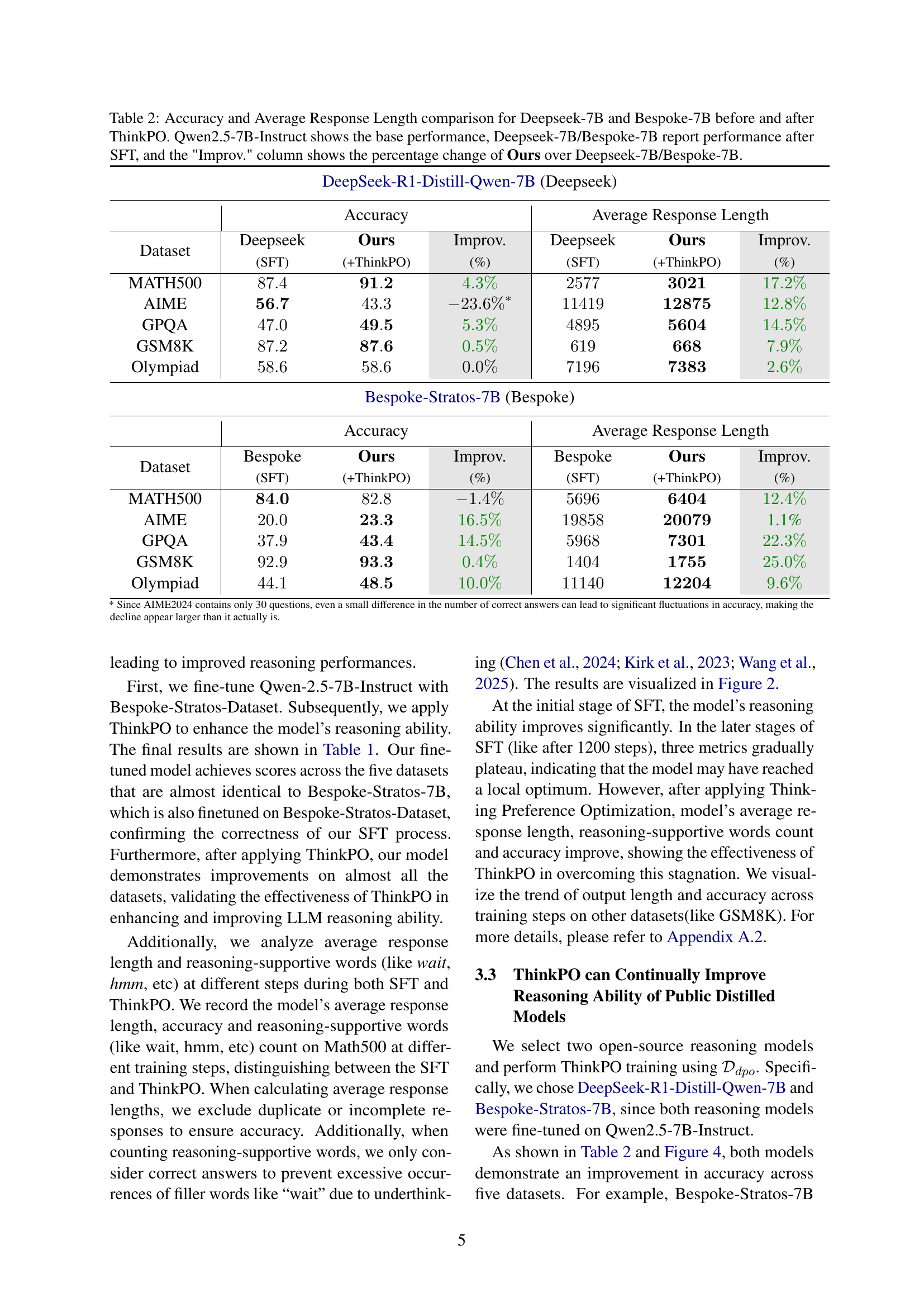

🔼 Table 2 presents a comparison of the accuracy and average response length achieved by DeepSeek-7B and Bespoke-7B models, both before and after applying the ThinkPO optimization technique. The table shows the baseline performance using Qwen2.5-7B-Instruct as a reference. For both DeepSeek-7B and Bespoke-7B, the performance after supervised fine-tuning (SFT) is provided. Finally, the improvement in performance resulting from ThinkPO is quantified as a percentage increase relative to the SFT results for each model.

read the caption

Table 2: Accuracy and Average Response Length comparison for Deepseek-7B and Bespoke-7B before and after ThinkPO. Qwen2.5-7B-Instruct shows the base performance, Deepseek-7B/Bespoke-7B report performance after SFT, and the 'Improv.' column shows the percentage change of Ours over Deepseek-7B/Bespoke-7B.

| Qwen 2.5-3B | Qwen 2.5-7B | Qwen 2.5-14B | |||||||

|---|---|---|---|---|---|---|---|---|---|

| +SFT | +ThinkPO | Improv. | +SFT | +ThinkPO | Improv. | +SFT | +ThinkPO | Improv. | |

| MATH500 | |||||||||

| AIME | |||||||||

| GPQA | |||||||||

| GSM8K | 0.9% | ||||||||

| Olympiad | |||||||||

🔼 This table presents the results of evaluating the reasoning ability of three different sized language models (3B, 7B, and 14B parameters) from the Qwen-2.5 family. Each model was first fine-tuned using Supervised Fine-Tuning (SFT) on the Bespoke-Strato dataset for a single epoch. Then, Think Preference Optimization (ThinkPO) was applied to each model. The table shows the accuracy achieved by each model (before and after ThinkPO) on five different benchmark datasets (MATH500, AIME, GPQA, GSM8K, and Olympiad). The results demonstrate that larger models generally perform better, and that ThinkPO consistently improves the reasoning accuracy of models of all sizes.

read the caption

Table 3: Results of Models with Different Sizes (3B, 7B, 14B) on the Qwen-2.5 Family. We evaluate models of different sizes (3B, 7B, 14B) trained with Supervised Fine-Tuning (SFT) and Think Preference Optimization (ThinkPO). Models are fine-tuned on the Bespoke-Strato-Dataset for 1 epoch. As model size increases, accuracy improves across all five test datasets. After ThinkPO training, accuracy improves consistently for models of all sizes, including the smallest (3B), demonstrating that ThinkPO enhances reasoning ability across different model scales.

| Short | Our | Improv. | |

|---|---|---|---|

| +SFT | +ThinkPO | % | |

| MATH500 | |||

| AIME | |||

| GPQA | |||

| GSM8K | |||

| Olympiad |

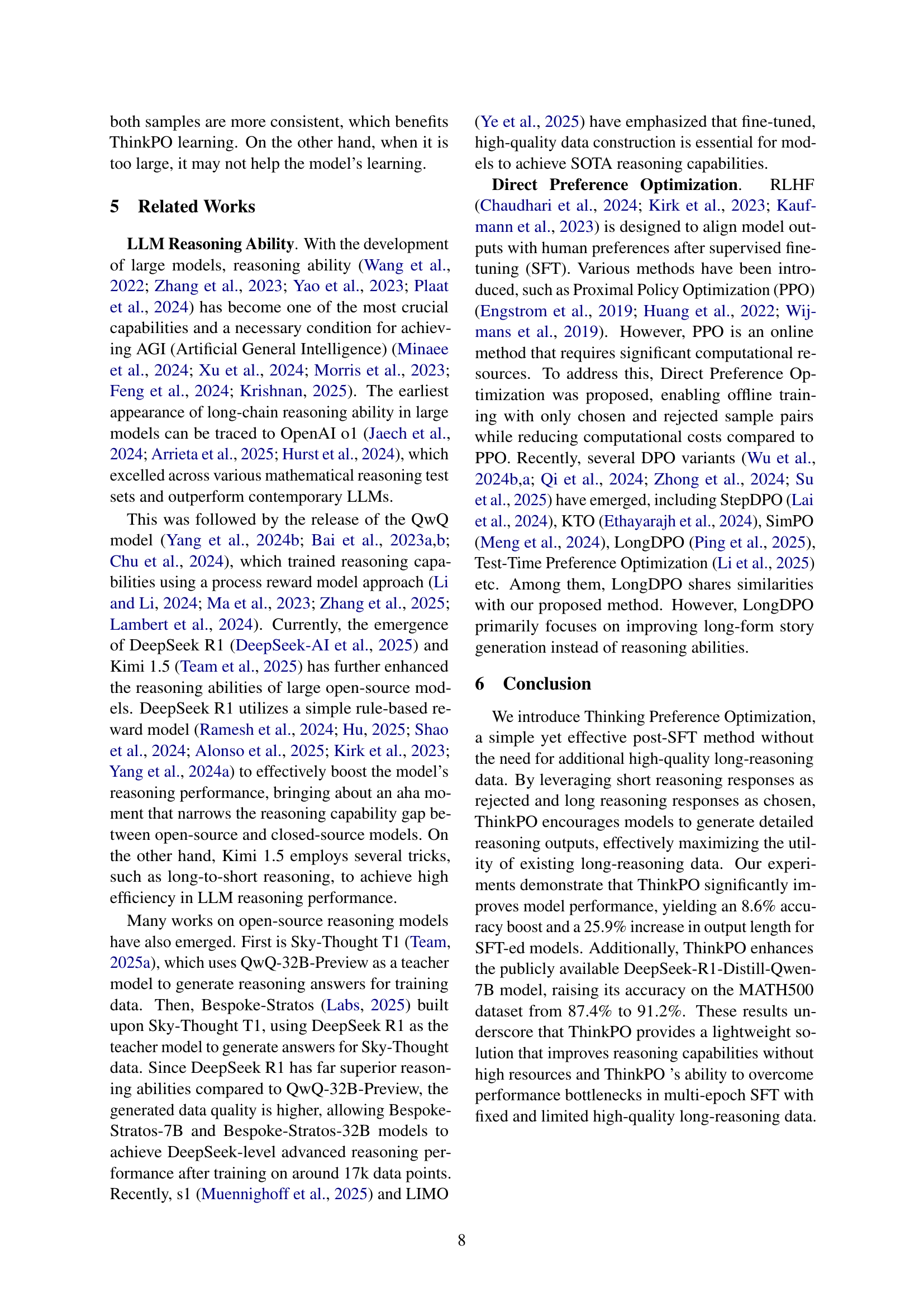

🔼 This table presents the results of applying Thinking Preference Optimization (ThinkPO) to a Qwen-2.5-7B model that was initially fine-tuned using a short-chain reasoning dataset. The experiment aimed to determine if ThinkPO could still improve reasoning capabilities even when the model wasn’t initially trained with long reasoning chains. The short-chain dataset used in the pre-training phase was the same size as the Bespoke-Stratos dataset. The model was fine-tuned for three epochs before ThinkPO was applied. The table shows the improvements in reasoning capability across several benchmark datasets (MATH500, AIME, GPQA, GSM8K, Olympiad) after the application of ThinkPO, indicating that this method can benefit models trained on both short and long reasoning datasets.

read the caption

Table 4: Results of ThinkPO on the model finetuned with a short-Reasoning Dataset. We select a short-chain reasoning dataset of the same size as the Bespoke-Stratos dataset and fine-tune Qwen-2.5-7B for 3 epochs. Models trained with reasoning-style datasets, regardless of response length, can benefit from ThinkPO to enhance and improve their reasoning capability

| Short | Middle | Long | |

|---|---|---|---|

| Avg Differences | |||

| MATH500 | |||

| AIME | |||

| GPQA | |||

| GSM8K | |||

| Olympiad |

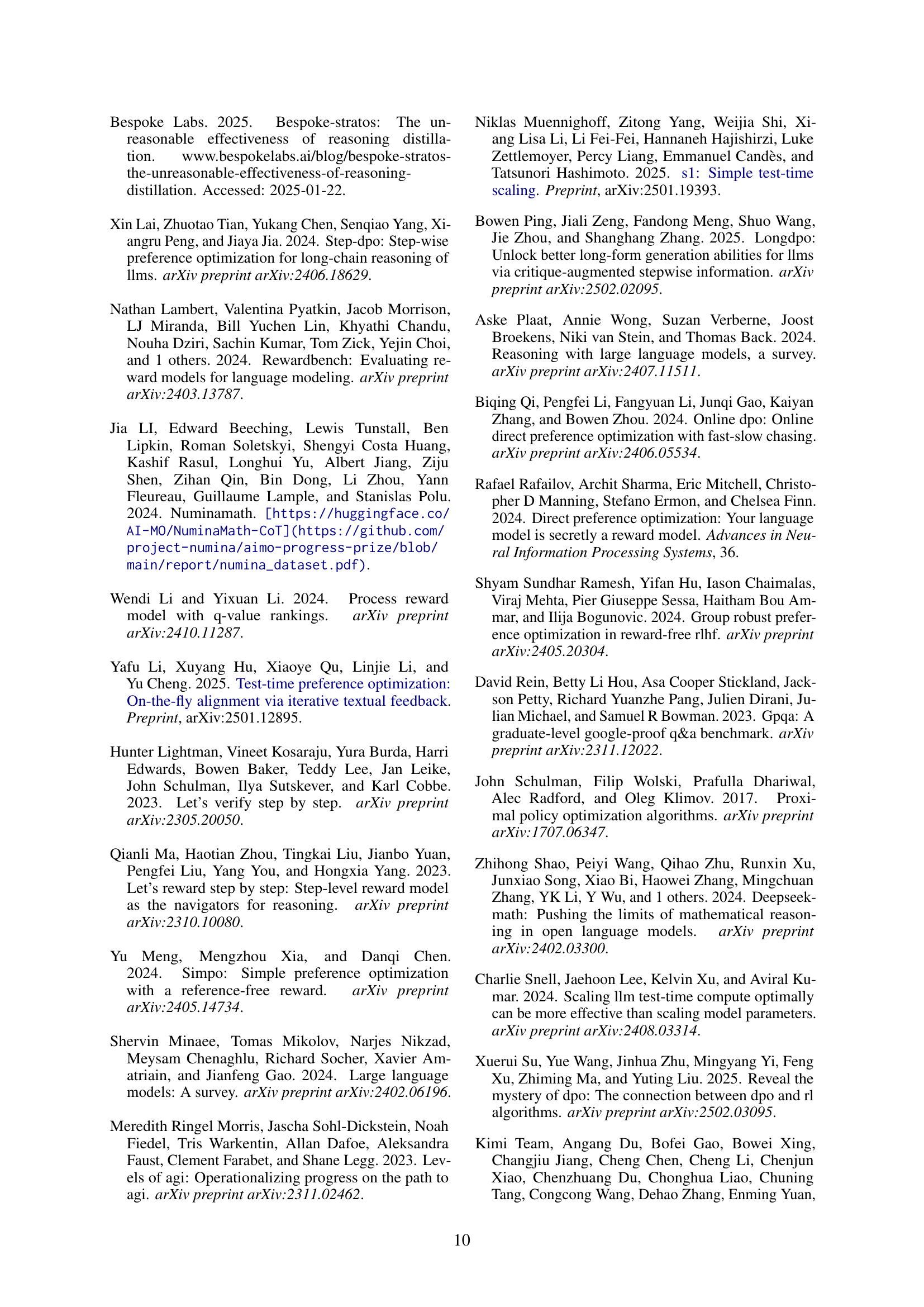

🔼 This table presents the results of an experiment designed to analyze how the length difference between chosen and rejected samples in the ThinkPO dataset impacts the model’s performance. Three datasets were created with varying length differences: short, middle, and long. The table shows the average length difference between chosen and rejected samples for each dataset, along with the model’s performance on three different tasks (MATH500, AIME, and GPQA). The results indicate that the dataset with shorter length differences yields the best overall performance, suggesting that a moderate length difference between samples is optimal for ThinkPO learning. Larger differences may hinder the learning process.

read the caption

Table 5: Model performance across three datasets with varying chosen and rejected sample length difference distributions. “Avg Differences” represents the average length difference between chosen and rejected samples. Short yields the best overall performance, suggesting that appropriate length differences improve ThinkPO learning, while too large differences may hinder it.

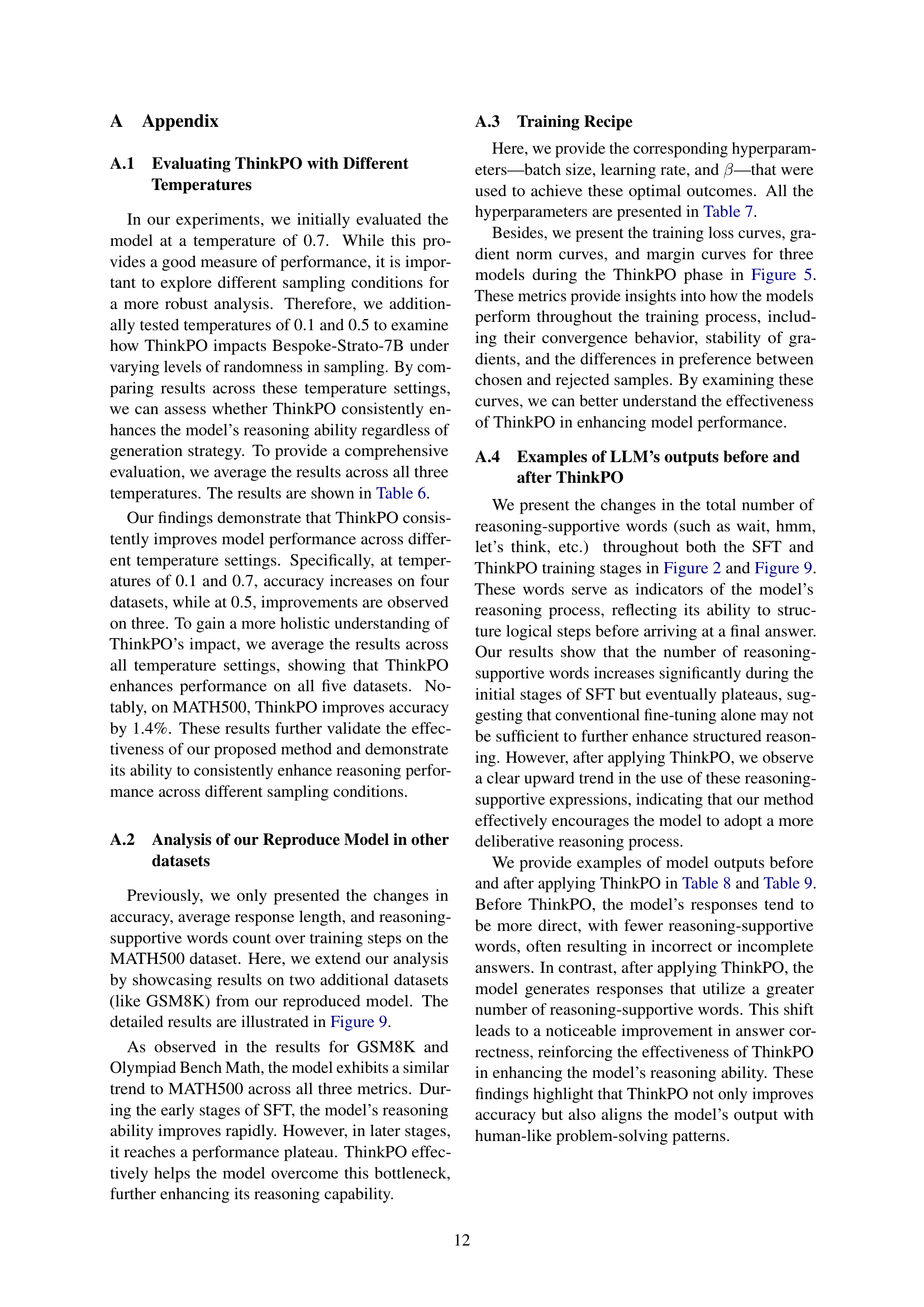

| Temperature=0.1 | Temperature=0.5 | Temperature=0.7 | Average | ||||||

|---|---|---|---|---|---|---|---|---|---|

| +SFT | +ThinkPO | +SFT | +ThinkPO | +SFT | +ThinkPO | +SFT | +ThinkPO | Improv. | |

| MATH500 | 70.2 | 73.4 | 81.4 | 82.6 | 84.0 | 82.8 | 78.5 | 79.6 | 1.4% |

| AIME | 10.0 | 16.7 | 20.0 | 16.7 | 20.0 | 23.3 | 16.7 | 18.9 | 13.2% |

| GPQA | 34.9 | 30.8 | 33.8 | 41.0 | 37.9 | 43.4 | 35.5 | 38.4 | 8.1% |

| GSM8K | 89.3 | 91.0 | 92.4 | 92.3 | 92.9 | 93.3 | 91.5 | 92.2 | 0.7% |

| Olympiad | 32.8 | 39.6 | 42.3 | 44.8 | 44.1 | 48.5 | 39.7 | 44.3 | 11.6% |

🔼 This table presents the results of evaluating the Bespoke-Strato-7B model’s performance with different temperature settings (0.1, 0.5, and 0.7) when using the Thinking Preference Optimization (ThinkPO) method. For each temperature, the table shows the accuracy achieved on five different datasets before and after applying ThinkPO, along with the percentage improvement. The final row presents the average accuracy across all temperatures and datasets, highlighting the consistent performance enhancement provided by ThinkPO.

read the caption

Table 6: Evaluation of Bespoke-Strato-7B with different temperatures(0.1,0.5,0.7). Across different values of temperatures, the model achieves accuracy improvements on most datasets. After averaging the results, ThinkPO consistently enhances the model’s performance across all five datasets.

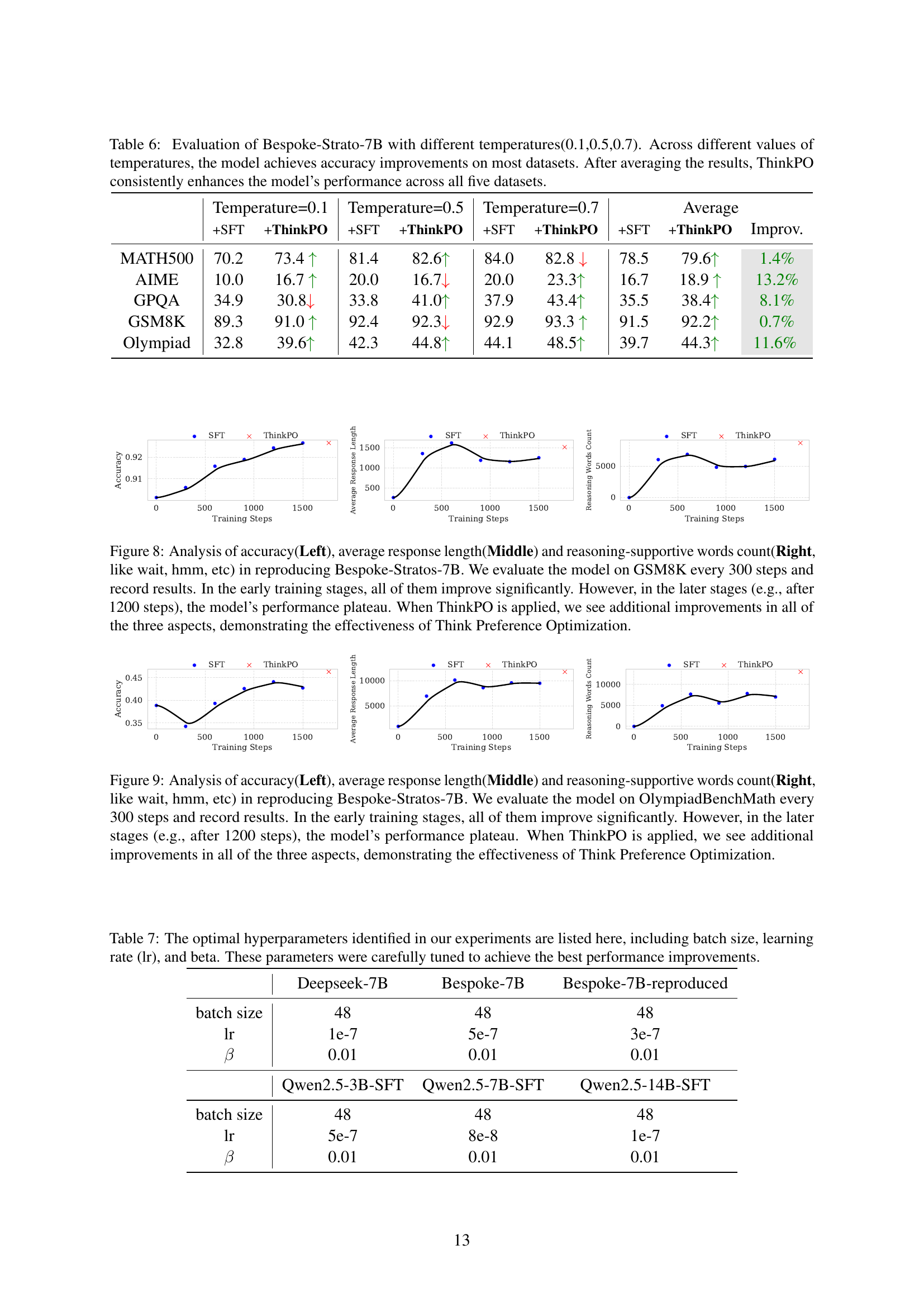

| Deepseek-7B | Bespoke-7B | Bespoke-7B-reproduced | |

| batch size | 48 | 48 | 48 |

| lr | 1e-7 | 5e-7 | 3e-7 |

| 0.01 | 0.01 | 0.01 | |

| Qwen2.5-3B-SFT | Qwen2.5-7B-SFT | Qwen2.5-14B-SFT | |

| batch size | 48 | 48 | 48 |

| lr | 5e-7 | 8e-8 | 1e-7 |

| 0.01 | 0.01 | 0.01 |

🔼 Table 7 presents the optimal hyperparameters discovered during the experiments. These hyperparameters include the batch size, learning rate (lr), and beta value. They were meticulously adjusted to maximize performance improvements in the model.

read the caption

Table 7: The optimal hyperparameters identified in our experiments are listed here, including batch size, learning rate (lr), and beta. These parameters were carefully tuned to achieve the best performance improvements.

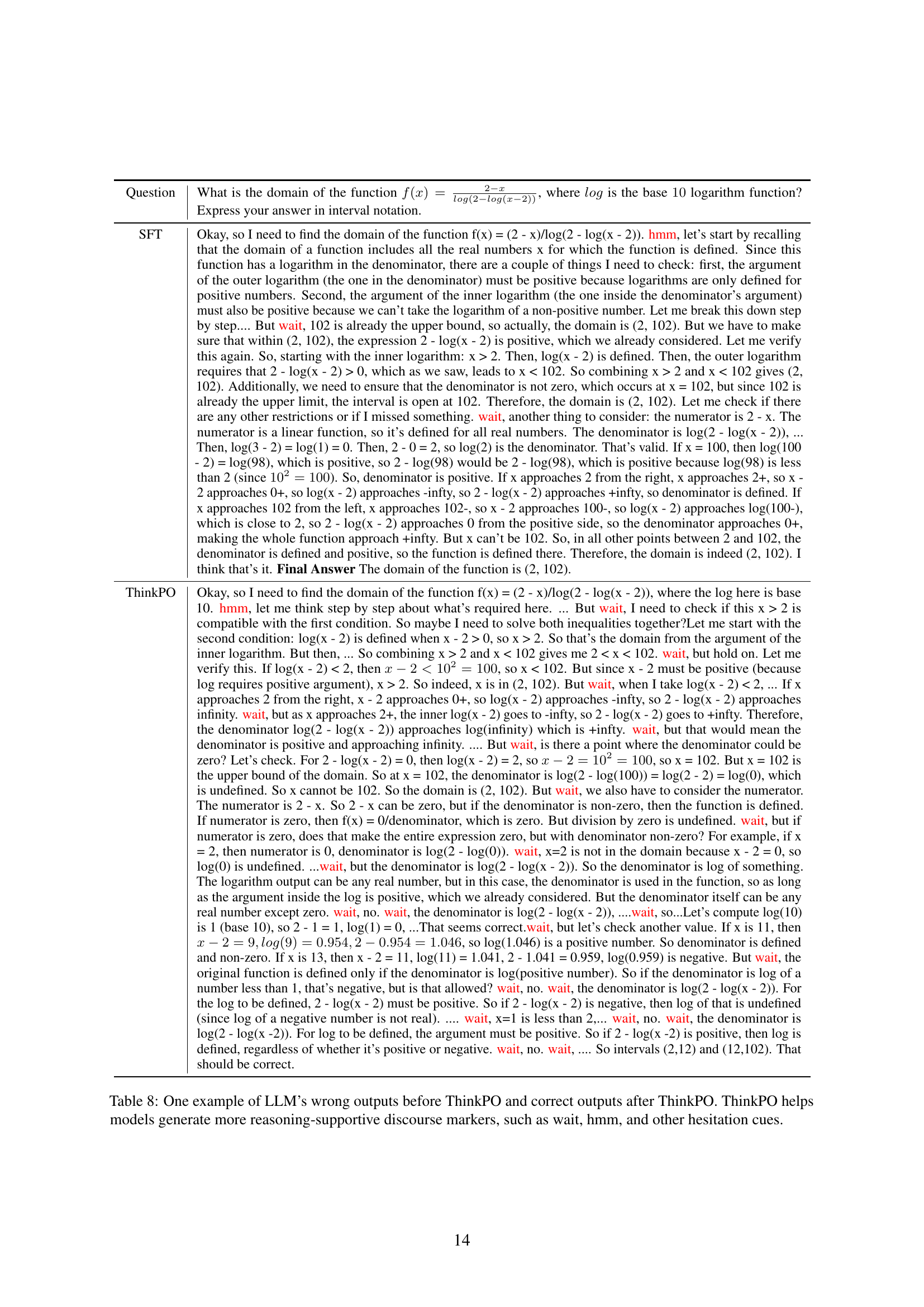

| Question | What is the domain of the function , where is the base logarithm function? Express your answer in interval notation. |

|---|---|

| SFT | Okay, so I need to find the domain of the function f(x) = (2 - x)/log(2 - log(x - 2)). hmm, let’s start by recalling that the domain of a function includes all the real numbers x for which the function is defined. Since this function has a logarithm in the denominator, there are a couple of things I need to check: first, the argument of the outer logarithm (the one in the denominator) must be positive because logarithms are only defined for positive numbers. Second, the argument of the inner logarithm (the one inside the denominator’s argument) must also be positive because we can’t take the logarithm of a non-positive number. Let me break this down step by step…. But wait, 102 is already the upper bound, so actually, the domain is (2, 102). But we have to make sure that within (2, 102), the expression 2 - log(x - 2) is positive, which we already considered. Let me verify this again. So, starting with the inner logarithm: x > 2. Then, log(x - 2) is defined. Then, the outer logarithm requires that 2 - log(x - 2) > 0, which as we saw, leads to x < 102. So combining x > 2 and x < 102 gives (2, 102). Additionally, we need to ensure that the denominator is not zero, which occurs at x = 102, but since 102 is already the upper limit, the interval is open at 102. Therefore, the domain is (2, 102). Let me check if there are any other restrictions or if I missed something. wait, another thing to consider: the numerator is 2 - x. The numerator is a linear function, so it’s defined for all real numbers. The denominator is log(2 - log(x - 2)), … Then, log(3 - 2) = log(1) = 0. Then, 2 - 0 = 2, so log(2) is the denominator. That’s valid. If x = 100, then log(100 - 2) = log(98), which is positive, so 2 - log(98) would be 2 - log(98), which is positive because log(98) is less than 2 (since ). So, denominator is positive. If x approaches 2 from the right, x approaches 2+, so x - 2 approaches 0+, so log(x - 2) approaches -infty, so 2 - log(x - 2) approaches +infty, so denominator is defined. If x approaches 102 from the left, x approaches 102-, so x - 2 approaches 100-, so log(x - 2) approaches log(100-), which is close to 2, so 2 - log(x - 2) approaches 0 from the positive side, so the denominator approaches 0+, making the whole function approach +infty. But x can’t be 102. So, in all other points between 2 and 102, the denominator is defined and positive, so the function is defined there. Therefore, the domain is indeed (2, 102). I think that’s it. Final Answer The domain of the function is (2, 102). |

| ThinkPO | Okay, so I need to find the domain of the function f(x) = (2 - x)/log(2 - log(x - 2)), where the log here is base 10. hmm, let me think step by step about what’s required here. … But wait, I need to check if this x > 2 is compatible with the first condition. So maybe I need to solve both inequalities together?Let me start with the second condition: log(x - 2) is defined when x - 2 > 0, so x > 2. So that’s the domain from the argument of the inner logarithm. But then, … So combining x > 2 and x < 102 gives me 2 < x < 102. wait, but hold on. Let me verify this. If log(x - 2) < 2, then , so x < 102. But since x - 2 must be positive (because log requires positive argument), x > 2. So indeed, x is in (2, 102). But wait, when I take log(x - 2) < 2, … If x approaches 2 from the right, x - 2 approaches 0+, so log(x - 2) approaches -infty, so 2 - log(x - 2) approaches infinity. wait, but as x approaches 2+, the inner log(x - 2) goes to -infty, so 2 - log(x - 2) goes to +infty. Therefore, the denominator log(2 - log(x - 2)) approaches log(infinity) which is +infty. wait, but that would mean the denominator is positive and approaching infinity. …. But wait, is there a point where the denominator could be zero? Let’s check. For 2 - log(x - 2) = 0, then log(x - 2) = 2, so , so x = 102. But x = 102 is the upper bound of the domain. So at x = 102, the denominator is log(2 - log(100)) = log(2 - 2) = log(0), which is undefined. So x cannot be 102. So the domain is (2, 102). But wait, we also have to consider the numerator. The numerator is 2 - x. So 2 - x can be zero, but if the denominator is non-zero, then the function is defined. If numerator is zero, then f(x) = 0/denominator, which is zero. But division by zero is undefined. wait, but if numerator is zero, does that make the entire expression zero, but with denominator non-zero? For example, if x = 2, then numerator is 0, denominator is log(2 - log(0)). wait, x=2 is not in the domain because x - 2 = 0, so log(0) is undefined. …wait, but the denominator is log(2 - log(x - 2)). So the denominator is log of something. The logarithm output can be any real number, but in this case, the denominator is used in the function, so as long as the argument inside the log is positive, which we already considered. But the denominator itself can be any real number except zero. wait, no. wait, the denominator is log(2 - log(x - 2)), ….wait, so…Let’s compute log(10) is 1 (base 10), so 2 - 1 = 1, log(1) = 0, …That seems correct.wait, but let’s check another value. If x is 11, then , so log(1.046) is a positive number. So denominator is defined and non-zero. If x is 13, then x - 2 = 11, log(11) = 1.041, 2 - 1.041 = 0.959, log(0.959) is negative. But wait, the original function is defined only if the denominator is log(positive number). So if the denominator is log of a number less than 1, that’s negative, but is that allowed? wait, no. wait, the denominator is log(2 - log(x - 2)). For the log to be defined, 2 - log(x - 2) must be positive. So if 2 - log(x - 2) is negative, then log of that is undefined (since log of a negative number is not real). …. wait, x=1 is less than 2,… wait, no. wait, the denominator is log(2 - log(x -2)). For log to be defined, the argument must be positive. So if 2 - log(x -2) is positive, then log is defined, regardless of whether it’s positive or negative. wait, no. wait, …. So intervals (2,12) and (12,102). That should be correct. |

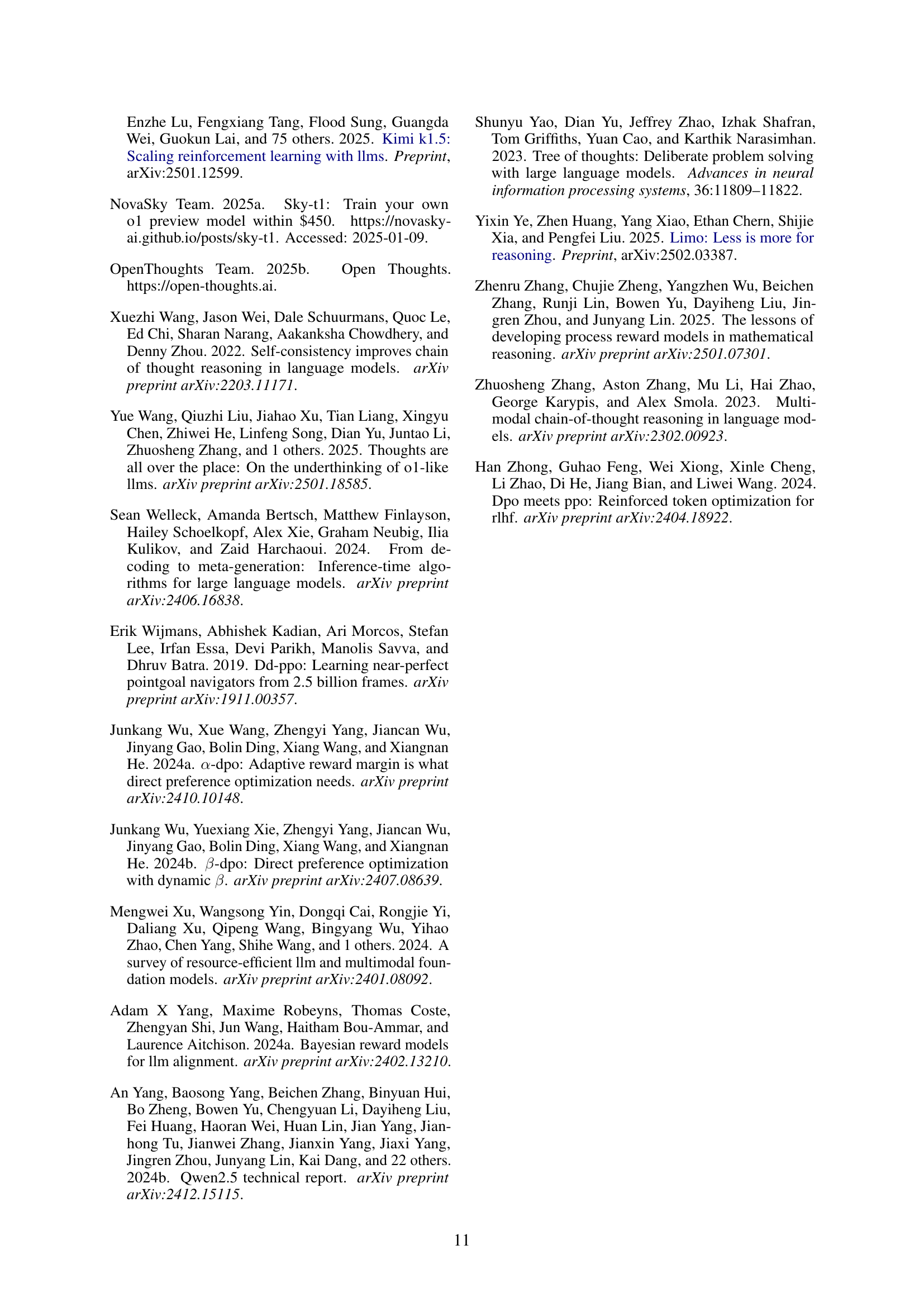

🔼 This table showcases a comparative analysis of Large Language Model (LLM) outputs for a specific mathematical reasoning task, both before and after applying the Thinking Preference Optimization (ThinkPO) method. The example highlights how ThinkPO influences the model’s reasoning process by prompting it to generate more detailed and structured responses. The inclusion of ‘wait’, ‘hmm’, etc., reflects the model’s increased deliberation and self-correction during problem-solving, demonstrating ThinkPO’s effectiveness in improving the quality of reasoning and reducing errors.

read the caption

Table 8: One example of LLM’s wrong outputs before ThinkPO and correct outputs after ThinkPO. ThinkPO helps models generate more reasoning-supportive discourse markers, such as wait, hmm, and other hesitation cues.

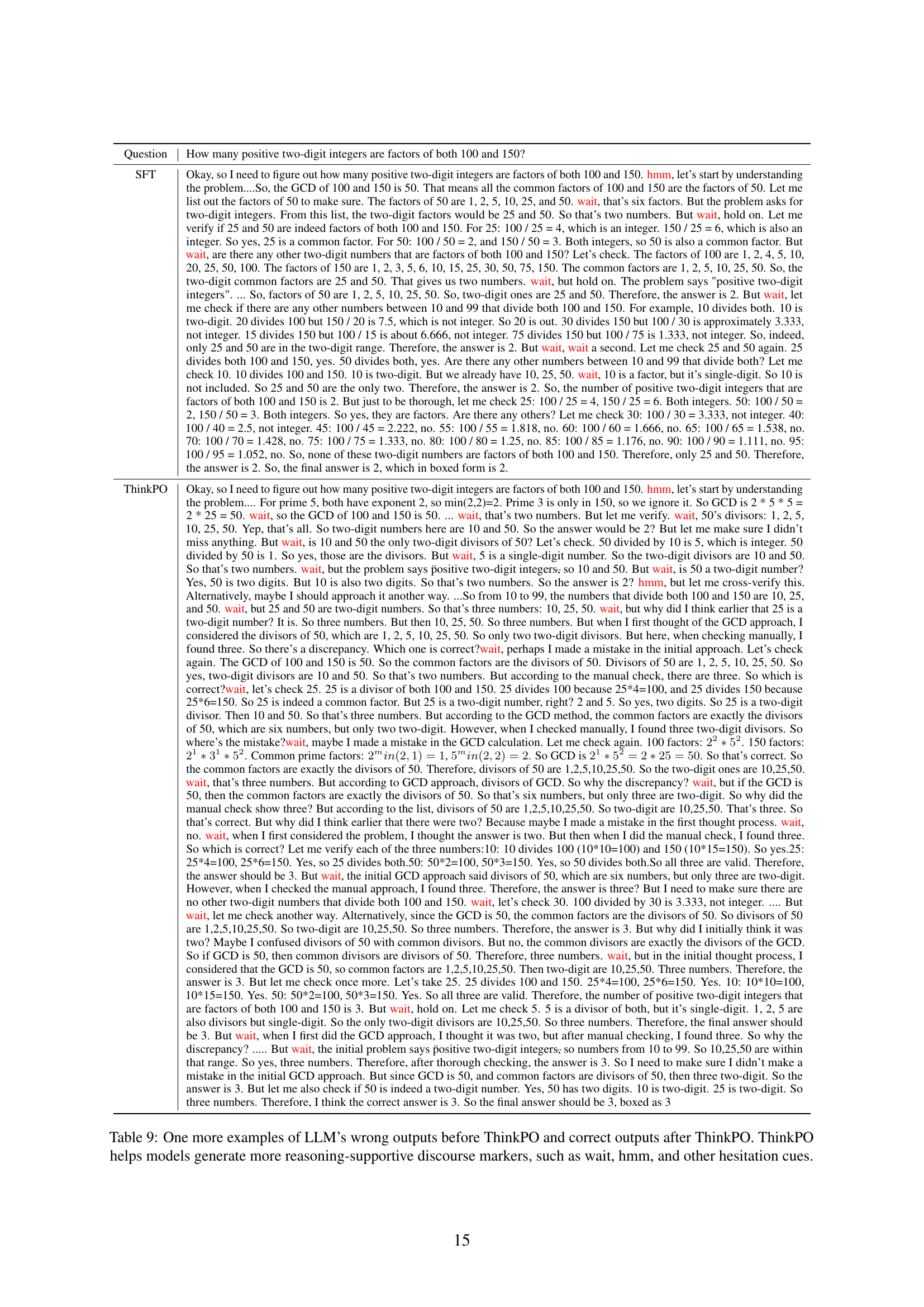

| Question | How many positive two-digit integers are factors of both 100 and 150? |

|---|---|

| SFT | Okay, so I need to figure out how many positive two-digit integers are factors of both 100 and 150. hmm, let’s start by understanding the problem….So, the GCD of 100 and 150 is 50. That means all the common factors of 100 and 150 are the factors of 50. Let me list out the factors of 50 to make sure. The factors of 50 are 1, 2, 5, 10, 25, and 50. wait, that’s six factors. But the problem asks for two-digit integers. From this list, the two-digit factors would be 25 and 50. So that’s two numbers. But wait, hold on. Let me verify if 25 and 50 are indeed factors of both 100 and 150. For 25: 100 / 25 = 4, which is an integer. 150 / 25 = 6, which is also an integer. So yes, 25 is a common factor. For 50: 100 / 50 = 2, and 150 / 50 = 3. Both integers, so 50 is also a common factor. But wait, are there any other two-digit numbers that are factors of both 100 and 150? Let’s check. The factors of 100 are 1, 2, 4, 5, 10, 20, 25, 50, 100. The factors of 150 are 1, 2, 3, 5, 6, 10, 15, 25, 30, 50, 75, 150. The common factors are 1, 2, 5, 10, 25, 50. So, the two-digit common factors are 25 and 50. That gives us two numbers. wait, but hold on. The problem says "positive two-digit integers". … So, factors of 50 are 1, 2, 5, 10, 25, 50. So, two-digit ones are 25 and 50. Therefore, the answer is 2. But wait, let me check if there are any other numbers between 10 and 99 that divide both 100 and 150. For example, 10 divides both. 10 is two-digit. 20 divides 100 but 150 / 20 is 7.5, which is not integer. So 20 is out. 30 divides 150 but 100 / 30 is approximately 3.333, not integer. 15 divides 150 but 100 / 15 is about 6.666, not integer. 75 divides 150 but 100 / 75 is 1.333, not integer. So, indeed, only 25 and 50 are in the two-digit range. Therefore, the answer is 2. But wait, wait a second. Let me check 25 and 50 again. 25 divides both 100 and 150, yes. 50 divides both, yes. Are there any other numbers between 10 and 99 that divide both? Let me check 10. 10 divides 100 and 150. 10 is two-digit. But we already have 10, 25, 50. wait, 10 is a factor, but it’s single-digit. So 10 is not included. So 25 and 50 are the only two. Therefore, the answer is 2. So, the number of positive two-digit integers that are factors of both 100 and 150 is 2. But just to be thorough, let me check 25: 100 / 25 = 4, 150 / 25 = 6. Both integers. 50: 100 / 50 = 2, 150 / 50 = 3. Both integers. So yes, they are factors. Are there any others? Let me check 30: 100 / 30 = 3.333, not integer. 40: 100 / 40 = 2.5, not integer. 45: 100 / 45 = 2.222, no. 55: 100 / 55 = 1.818, no. 60: 100 / 60 = 1.666, no. 65: 100 / 65 = 1.538, no. 70: 100 / 70 = 1.428, no. 75: 100 / 75 = 1.333, no. 80: 100 / 80 = 1.25, no. 85: 100 / 85 = 1.176, no. 90: 100 / 90 = 1.111, no. 95: 100 / 95 = 1.052, no. So, none of these two-digit numbers are factors of both 100 and 150. Therefore, only 25 and 50. Therefore, the answer is 2. So, the final answer is 2, which in boxed form is 2. |

| ThinkPO | Okay, so I need to figure out how many positive two-digit integers are factors of both 100 and 150. hmm, let’s start by understanding the problem…. For prime 5, both have exponent 2, so min(2,2)=2. Prime 3 is only in 150, so we ignore it. So GCD is 2 * 5 * 5 = 2 * 25 = 50. wait, so the GCD of 100 and 150 is 50. … wait, that’s two numbers. But let me verify. wait, 50’s divisors: 1, 2, 5, 10, 25, 50. Yep, that’s all. So two-digit numbers here are 10 and 50. So the answer would be 2? But let me make sure I didn’t miss anything. But wait, is 10 and 50 the only two-digit divisors of 50? Let’s check. 50 divided by 10 is 5, which is integer. 50 divided by 50 is 1. So yes, those are the divisors. But wait, 5 is a single-digit number. So the two-digit divisors are 10 and 50. So that’s two numbers. wait, but the problem says p̈ositive two-digit integers,̈ so 10 and 50. But wait, is 50 a two-digit number? Yes, 50 is two digits. But 10 is also two digits. So that’s two numbers. So the answer is 2? hmm, but let me cross-verify this. Alternatively, maybe I should approach it another way. …So from 10 to 99, the numbers that divide both 100 and 150 are 10, 25, and 50. wait, but 25 and 50 are two-digit numbers. So that’s three numbers: 10, 25, 50. wait, but why did I think earlier that 25 is a two-digit number? It is. So three numbers. But then 10, 25, 50. So three numbers. But when I first thought of the GCD approach, I considered the divisors of 50, which are 1, 2, 5, 10, 25, 50. So only two two-digit divisors. But here, when checking manually, I found three. So there’s a discrepancy. Which one is correct?wait, perhaps I made a mistake in the initial approach. Let’s check again. The GCD of 100 and 150 is 50. So the common factors are the divisors of 50. Divisors of 50 are 1, 2, 5, 10, 25, 50. So yes, two-digit divisors are 10 and 50. So that’s two numbers. But according to the manual check, there are three. So which is correct?wait, let’s check 25. 25 is a divisor of both 100 and 150. 25 divides 100 because 25*4=100, and 25 divides 150 because 25*6=150. So 25 is indeed a common factor. But 25 is a two-digit number, right? 2 and 5. So yes, two digits. So 25 is a two-digit divisor. Then 10 and 50. So that’s three numbers. But according to the GCD method, the common factors are exactly the divisors of 50, which are six numbers, but only two two-digit. However, when I checked manually, I found three two-digit divisors. So where’s the mistake?wait, maybe I made a mistake in the GCD calculation. Let me check again. 100 factors: . 150 factors: . Common prime factors: , . So GCD is . So that’s correct. So the common factors are exactly the divisors of 50. Therefore, divisors of 50 are 1,2,5,10,25,50. So the two-digit ones are 10,25,50. wait, that’s three numbers. But according to GCD approach, divisors of GCD. So why the discrepancy? wait, but if the GCD is 50, then the common factors are exactly the divisors of 50. So that’s six numbers, but only three are two-digit. So why did the manual check show three? But according to the list, divisors of 50 are 1,2,5,10,25,50. So two-digit are 10,25,50. That’s three. So that’s correct. But why did I think earlier that there were two? Because maybe I made a mistake in the first thought process. wait, no. wait, when I first considered the problem, I thought the answer is two. But then when I did the manual check, I found three. So which is correct? Let me verify each of the three numbers:10: 10 divides 100 (10*10=100) and 150 (10*15=150). So yes.25: 25*4=100, 25*6=150. Yes, so 25 divides both.50: 50*2=100, 50*3=150. Yes, so 50 divides both.So all three are valid. Therefore, the answer should be 3. But wait, the initial GCD approach said divisors of 50, which are six numbers, but only three are two-digit. However, when I checked the manual approach, I found three. Therefore, the answer is three? But I need to make sure there are no other two-digit numbers that divide both 100 and 150. wait, let’s check 30. 100 divided by 30 is 3.333, not integer. …. But wait, let me check another way. Alternatively, since the GCD is 50, the common factors are the divisors of 50. So divisors of 50 are 1,2,5,10,25,50. So two-digit are 10,25,50. So three numbers. Therefore, the answer is 3. But why did I initially think it was two? Maybe I confused divisors of 50 with common divisors. But no, the common divisors are exactly the divisors of the GCD. So if GCD is 50, then common divisors are divisors of 50. Therefore, three numbers. wait, but in the initial thought process, I considered that the GCD is 50, so common factors are 1,2,5,10,25,50. Then two-digit are 10,25,50. Three numbers. Therefore, the answer is 3. But let me check once more. Let’s take 25. 25 divides 100 and 150. 25*4=100, 25*6=150. Yes. 10: 10*10=100, 10*15=150. Yes. 50: 50*2=100, 50*3=150. Yes. So all three are valid. Therefore, the number of positive two-digit integers that are factors of both 100 and 150 is 3. But wait, hold on. Let me check 5. 5 is a divisor of both, but it’s single-digit. 1, 2, 5 are also divisors but single-digit. So the only two-digit divisors are 10,25,50. So three numbers. Therefore, the final answer should be 3. But wait, when I first did the GCD approach, I thought it was two, but after manual checking, I found three. So why the discrepancy? ….. But wait, the initial problem says p̈ositive two-digit integers,̈ so numbers from 10 to 99. So 10,25,50 are within that range. So yes, three numbers. Therefore, after thorough checking, the answer is 3. So I need to make sure I didn’t make a mistake in the initial GCD approach. But since GCD is 50, and common factors are divisors of 50, then three two-digit. So the answer is 3. But let me also check if 50 is indeed a two-digit number. Yes, 50 has two digits. 10 is two-digit. 25 is two-digit. So three numbers. Therefore, I think the correct answer is 3. So the final answer should be 3, boxed as 3 |

🔼 This table showcases two examples where a large language model (LLM) initially produces incorrect answers before applying the Thinking Preference Optimization (ThinkPO) method and provides correct answers after applying ThinkPO. The examples highlight how ThinkPO improves the LLM’s reasoning process by encouraging the model to generate more detailed and structured reasoning steps, often indicated by the inclusion of discourse markers such as ‘wait,’ ‘hmm,’ and other expressions of hesitation. These markers are often a byproduct of the increased deliberation and exploration of reasoning paths that ThinkPO promotes.

read the caption

Table 9: One more examples of LLM’s wrong outputs before ThinkPO and correct outputs after ThinkPO. ThinkPO helps models generate more reasoning-supportive discourse markers, such as wait, hmm, and other hesitation cues.

Full paper#