TL;DR#

Hallucinations in LLM outputs limit their reliability, especially in knowledge-intensive tasks. Existing methods for detecting hallucinations either rely heavily on internal knowledge or involve complex, multi-step processes. This leads to limitations in low-resource languages and potential inaccuracies in aligning modified responses with the original LLM output. These challenges underscore the need for effective hallucination detection to ensure the development of safe and trustworthy AI.

To address these challenges, this paper introduces REFIND, a novel framework for detecting hallucinated spans by directly leveraging retrieved documents. REFIND quantifies the context sensitivity of each token using a novel Context Sensitivity Ratio (CSR). The method measures the token’s dependence on external contextual information. REFIND achieves high accuracy and efficiency, demonstrating robustness across nine languages and outperforming baseline models.

Key Takeaways#

Why does it matter?#

This paper is important for researchers because it introduces a more accurate and efficient method for detecting hallucinations in LLMs, enhancing the reliability of AI systems. The multilingual capabilities and superior performance of REFIND open new avenues for building trustworthy AI applications across diverse languages and contexts.

Visual Insights#

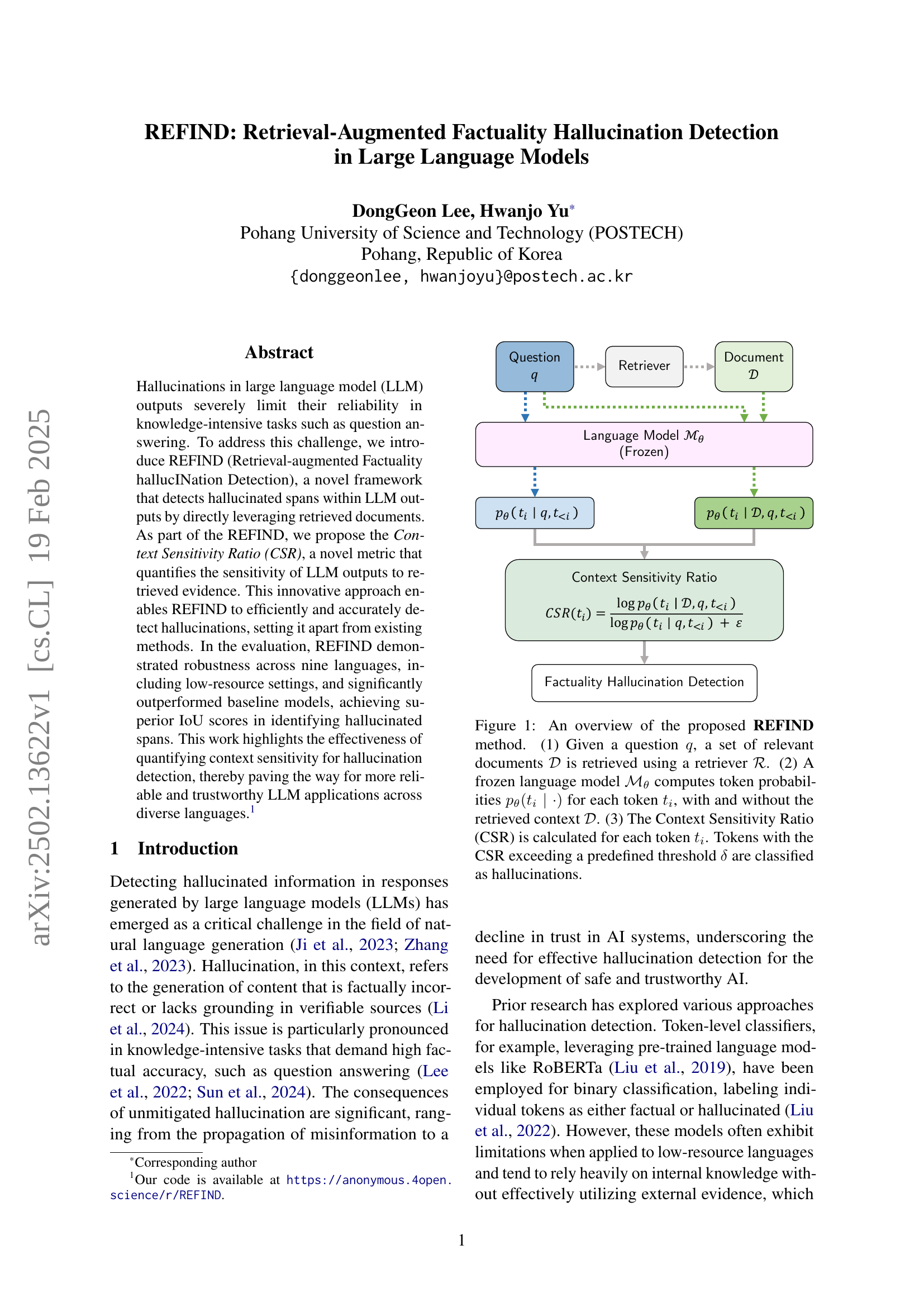

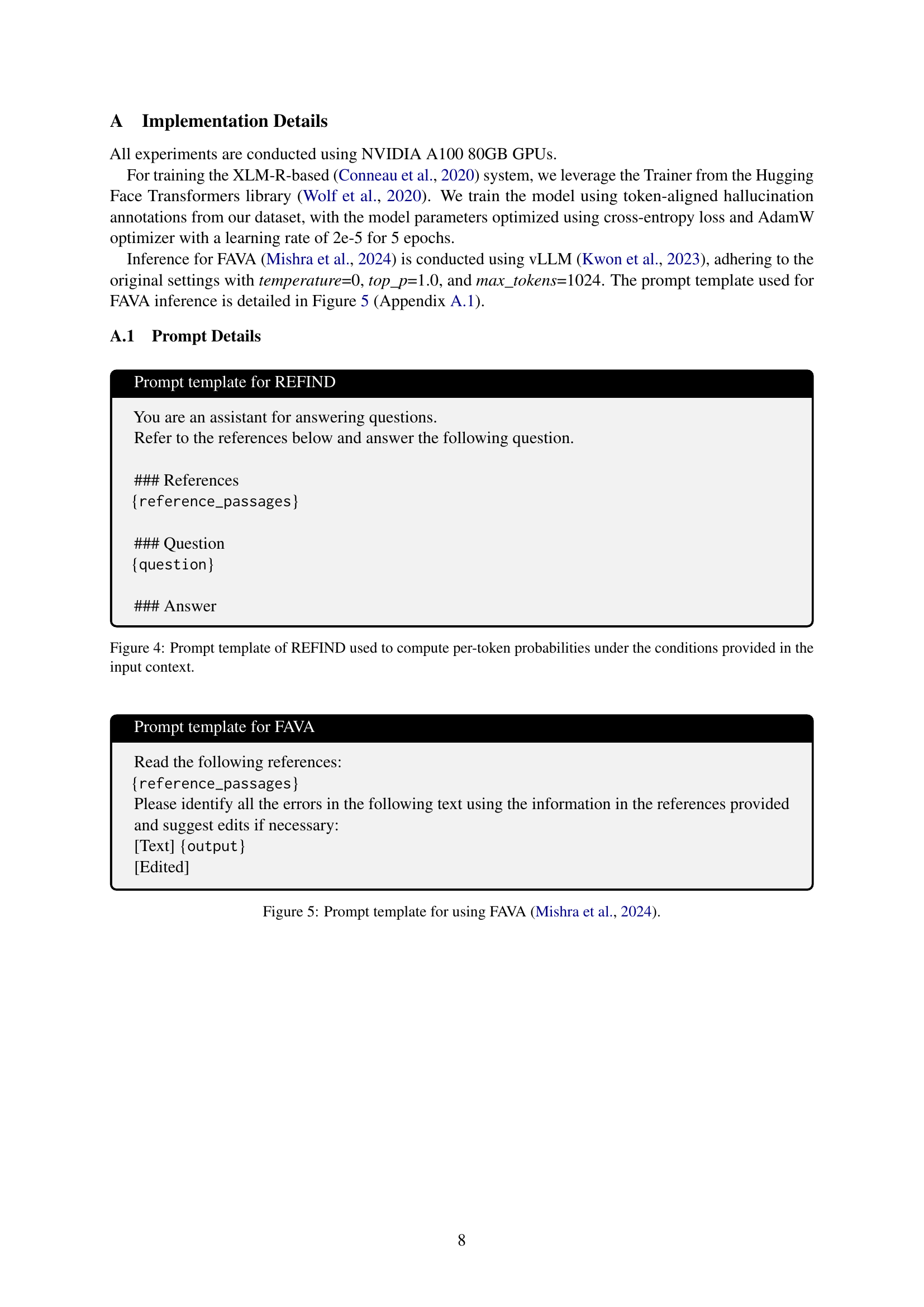

🔼 The figure illustrates the REFIND method’s workflow for detecting hallucinated spans in Large Language Model outputs. First, a retriever identifies relevant documents (D) based on a given question (q). Then, a frozen language model (Mθ) calculates the probability of generating each token (ti) both with and without the retrieved context. A Context Sensitivity Ratio (CSR) is computed for each token; tokens with a CSR exceeding a threshold (δ) are deemed as hallucinations.

read the caption

Figure 1: An overview of the proposed REFIND method. (1) Given a question q𝑞qitalic_q, a set of relevant documents 𝒟𝒟\mathcal{D}caligraphic_D is retrieved using a retriever ℛℛ\mathcal{R}caligraphic_R. (2) A frozen language model ℳθsubscriptℳ𝜃\mathcal{M}_{\theta}caligraphic_M start_POSTSUBSCRIPT italic_θ end_POSTSUBSCRIPT computes token probabilities pθ(ti∣⋅)subscript𝑝𝜃conditionalsubscript𝑡𝑖⋅p_{\theta}(t_{i}\mid\cdot)italic_p start_POSTSUBSCRIPT italic_θ end_POSTSUBSCRIPT ( italic_t start_POSTSUBSCRIPT italic_i end_POSTSUBSCRIPT ∣ ⋅ ) for each token tisubscript𝑡𝑖t_{i}italic_t start_POSTSUBSCRIPT italic_i end_POSTSUBSCRIPT, with and without the retrieved context 𝒟𝒟\mathcal{D}caligraphic_D. (3) The Context Sensitivity Ratio (CSR) is calculated for each token tisubscript𝑡𝑖t_{i}italic_t start_POSTSUBSCRIPT italic_i end_POSTSUBSCRIPT. Tokens with the CSR exceeding a predefined threshold δ𝛿\deltaitalic_δ are classified as hallucinations.

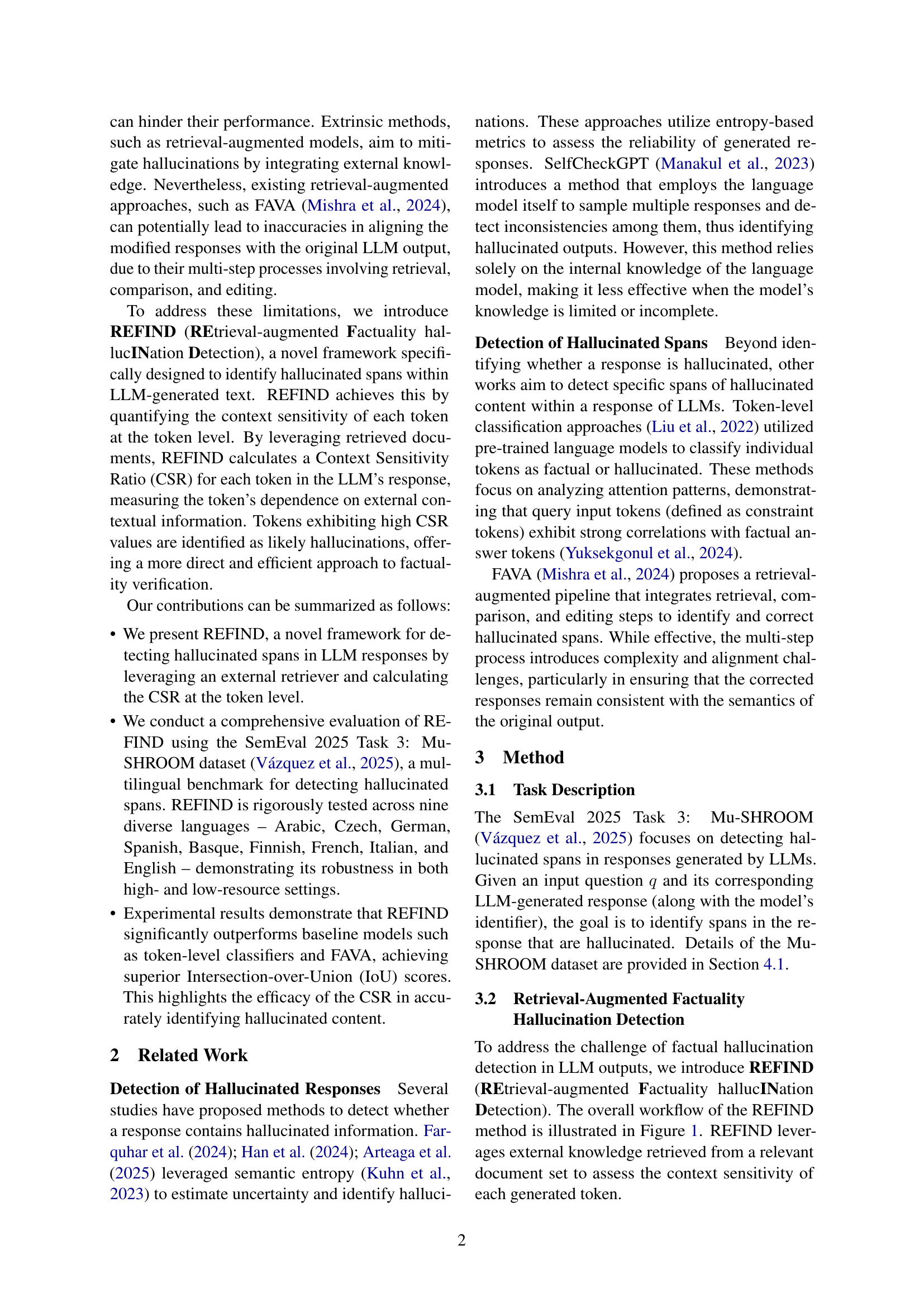

| Method | AR | CS | DE | EN | ES | EU | FI | FR | IT | Average |

|---|---|---|---|---|---|---|---|---|---|---|

| XLM-R | 0.0418 | 0.0957 | 0.0318 | 0.0310 | 0.0724 | 0.0208 | 0.0042 | 0.0022 | 0.0104 | 0.0345 |

| FAVA | 0.2168 | 0.2353 | 0.3862 | 0.2812 | 0.2348 | 0.3869 | 0.2300 | 0.2120 | 0.3255 | 0.2787 |

| REFIND | 0.3743 | 0.2761 | 0.3518 | 0.3525 | 0.2152 | 0.4074 | 0.5061 | 0.4734 | 0.3127 | 0.3633 |

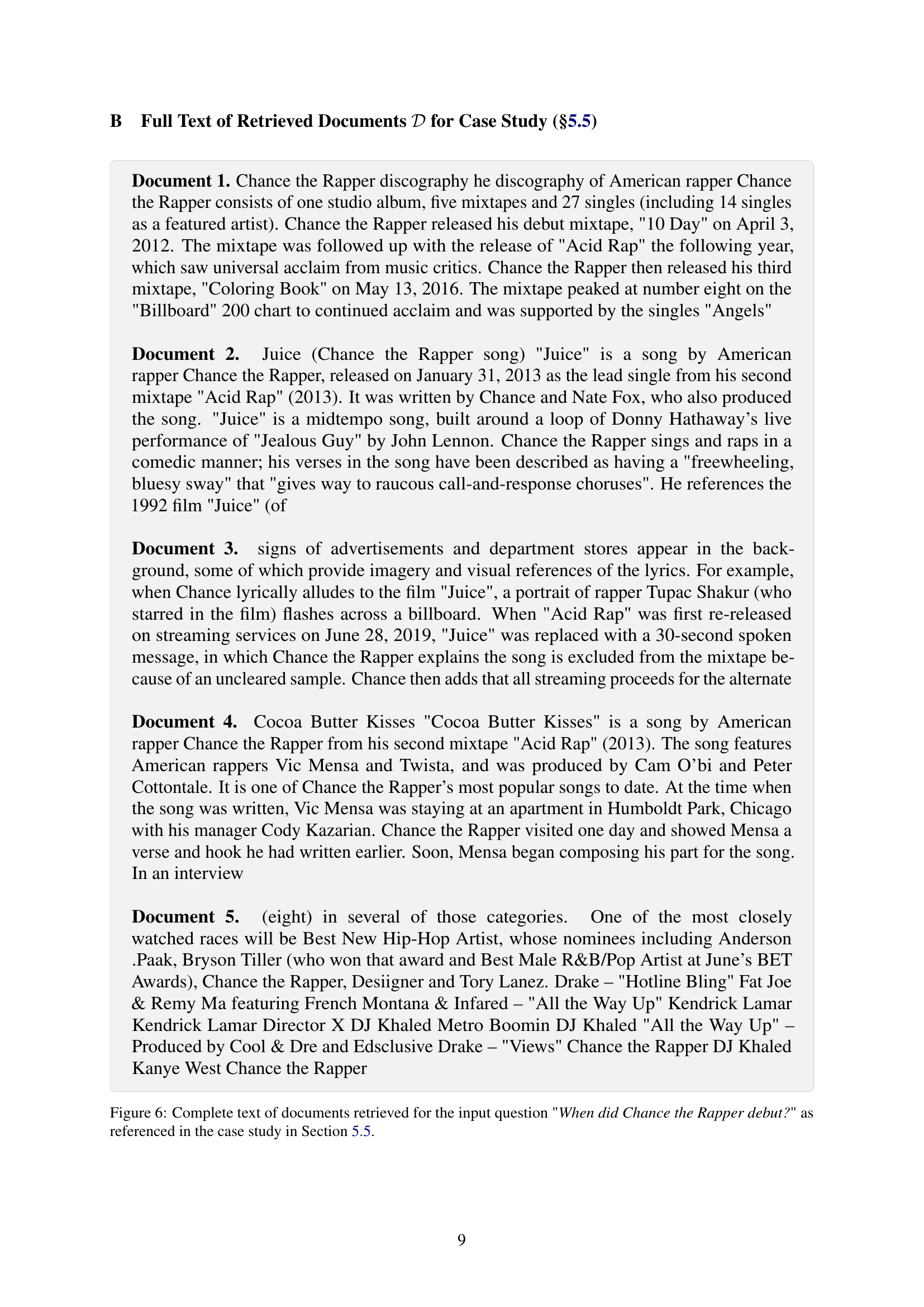

🔼 This table presents a quantitative comparison of the performance of three different methods for multilingual hallucination detection on the Mu-SHROOM dataset. The methods compared are REFIND (the proposed method), XLM-R (a token-level classifier), and FAVA (a retrieval-augmented approach). Performance is measured using the Intersection over Union (IoU) metric across eight languages: Arabic, Czech, German, English, Spanish, Basque, Finnish, French, and Italian. The results demonstrate REFIND’s superior performance across languages, particularly excelling in lower-resource languages, highlighting its effectiveness in multilingual settings.

read the caption

Table 1: Evaluation results on the Mu-SHROOM dataset Vázquez et al. (2025) using the IoU metric across eight languages: Arabic (AR), Czech (CS), German (DE), English (EN), Spanish (ES), Basque (EU), Finnish (FI), French (FR), and Italian (IT). The proposed method, REFIND, achieves the highest average IoU score, outperforming the baselines XLM-R and FAVA in most languages, demonstrating its effectiveness for multilingual hallucination detection.

Full paper#