TL;DR#

Diffusion Transformers (DiT) are powerful for text-to-image/video generation, but existing controllable-generation methods suffer from high computational costs due to inefficient resource allocation: they neglect the varying importance of control information across layers. This leads to redundant parameters and computations, hindering efficiency in training and inference.

To solve this, the paper introduces RelaCtrl, a framework that uses a “ControlNet Relevance Score” to evaluate each layer’s importance. It tailors control layer placement, parameter scale, and modeling capacity to that relevance, reducing unnecessary resources. The authors also propose a Two-Dimensional Shuffle Mixer (TDSM) in copy blocks for efficient token and channel mixing. Experiments show RelaCtrl achieves better HDD and FID than the PixArt-δ baseline while adding only 15.3% of its control parameters (Table 4).

Key Takeaways#

Why does it matter?#

This research introduces RelaCtrl, a method that optimizes resource allocation in diffusion transformers. By evaluating layer relevance and streamlining control mechanisms, it offers significant efficiency gains, guiding future research in controllable generation and efficient model design.

Visual Insights#

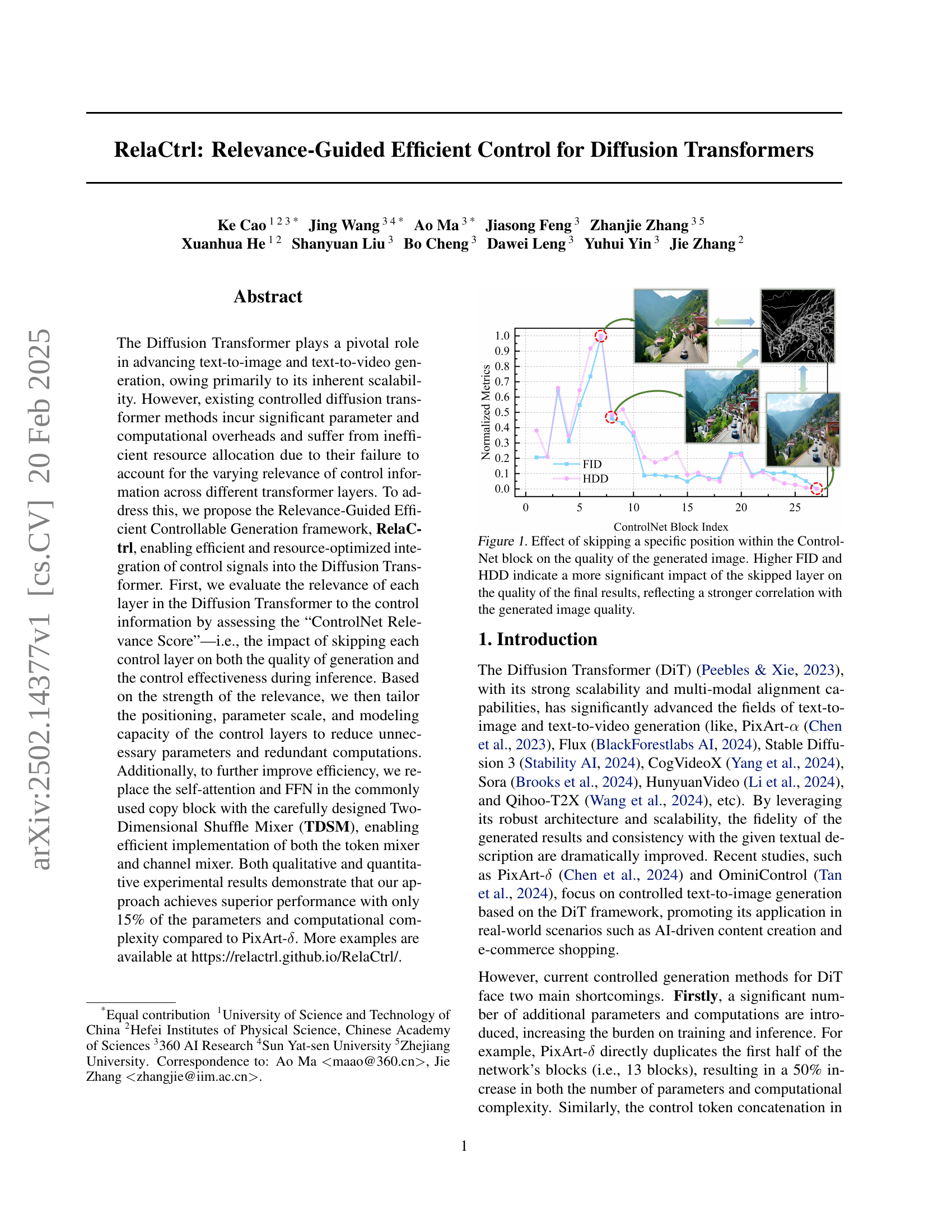

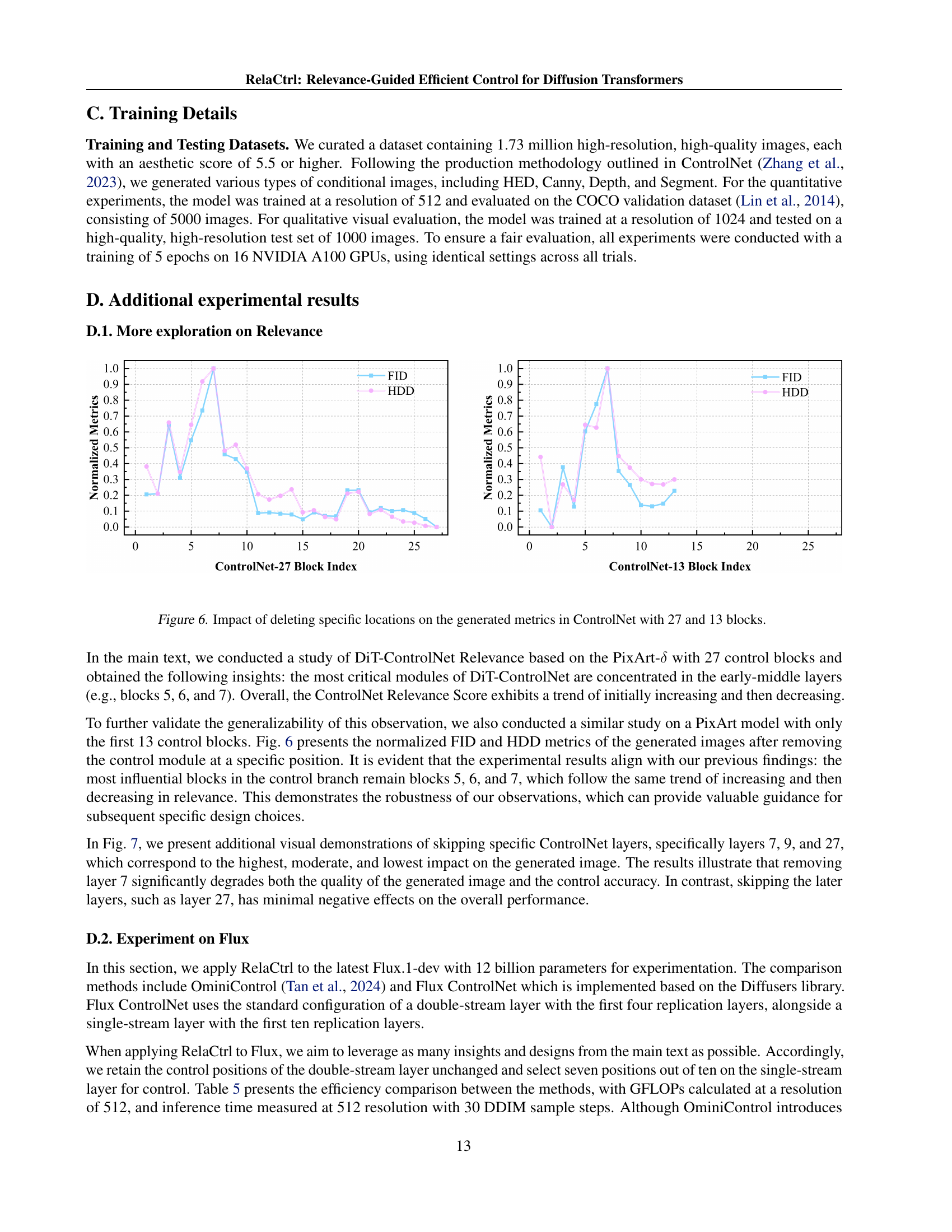

🔼 This figure shows the impact of removing individual ControlNet blocks on the quality of generated images. The x-axis represents the index of the ControlNet block, while the y-axis shows FID (Fréchet Inception Distance) and HDD (Hausdorff Distance) scores. Higher FID and HDD values indicate a greater negative impact on image quality and faithfulness to the control input, revealing a stronger correlation between that specific block and the final image generation. In essence, this plot visualizes the relevance of each ControlNet block to the overall quality and control effectiveness.

Figure 1: Effect of skipping a specific position within the ControlNet block on the quality of the generated image. Higher FID and HDD indicate a more significant impact of the skipped layer on the quality of the final results, reflecting a stronger correlation with the generated image quality.

Column groups: controllability (HDD, MSE-d, mIoU), quality (FID, CLIP-Ae), text consistency (CLIP-Score).

| Model | Method | Canny HDD | Canny FID | Canny CLIP-Ae | Canny CLIP-Score | Hed HDD | Hed FID | Hed CLIP-Ae | Hed CLIP-Score |
|---|---|---|---|---|---|---|---|---|---|
| SD1.5 | Uni-ControlNet | 95.40 | 33.81 | 5.207 | 0.259 | 98.78 | 59.72 | 5.086 | 0.252 |
| | Uni-Control | 97.90 | 91.29 | 4.965 | 0.249 | 100.52 | 91.94 | 4.819 | 0.251 |
| SDXL | ControlNet-XS | 101.34 | 21.57 | 5.134 | 0.286 | - | - | - | - |
| | ControlNext | 117.59 | 49.32 | 4.816 | 0.275 | - | - | - | - |
| PixArt-α | PixArt-δ | 96.26 | 21.38 | 5.508 | 0.279 | 98.91 | 29.22 | 5.243 | 0.275 |
| | RelaCtrl | 94.04 | 20.34 | 5.584 | 0.282 | 96.11 | 27.73 | 5.451 | 0.276 |

| Model | Method | Depth MSE-d | Depth FID | Depth CLIP-Ae | Depth CLIP-Score | Segmentation mIoU | Segmentation FID | Segmentation CLIP-Ae | Segmentation CLIP-Score |
|---|---|---|---|---|---|---|---|---|---|
| SD1.5 | Uni-ControlNet | 102.75 | 43.17 | 5.230 | 0.250 | 0.316 | 40.83 | 5.270 | 0.255 |
| | Uni-Control | 102.46 | 91.94 | 5.327 | 0.249 | 0.382 | 40.74 | 5.462 | 0.258 |
| SDXL | ControlNet-XS | 99.20 | 34.38 | 5.235 | 0.281 | - | - | - | - |
| | ControlNext | 101.63 | 73.26 | 4.919 | 0.253 | - | - | - | - |
| PixArt-α | PixArt-δ | 96.26 | 21.38 | 5.508 | 0.279 | 0.379 | 35.50 | 5.668 | 0.282 |
| | RelaCtrl | 99.11 | 33.93 | 5.887 | 0.285 | 0.405 | 33.76 | 5.702 | 0.287 |

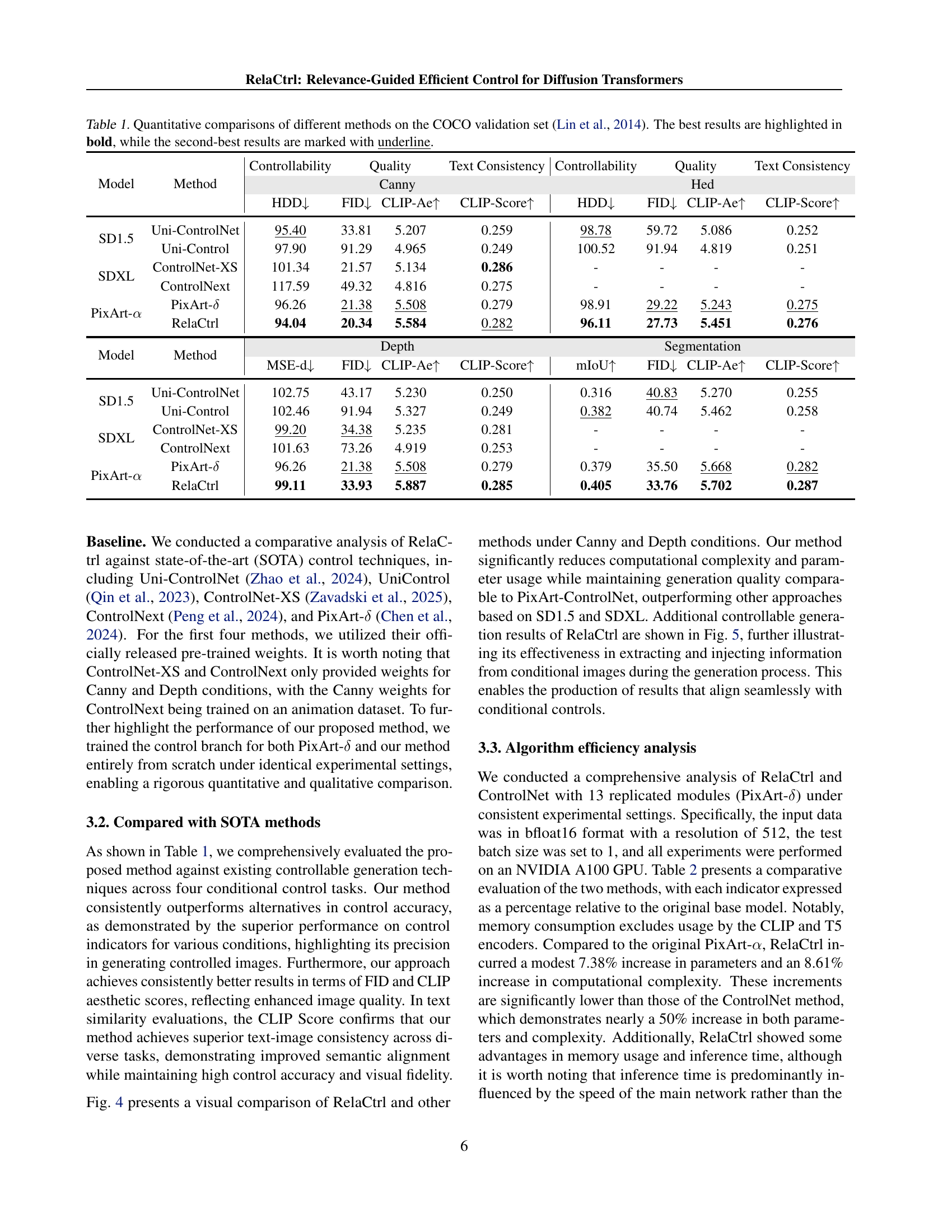

🔼 Table 1 presents a quantitative comparison of various controlled image generation methods on the COCO validation set. The table evaluates these methods across four control conditions: Canny edges, HED edges, depth maps, and segmentation maps. For each condition and method, the table reports metrics for the quality of the generated images (FID, CLIP-Aesthetic), their consistency with the text prompt (CLIP-Score), and how well the method follows the specified control (HDD, MSE-depth, mIoU). The best performing method for each metric is shown in bold, and the second-best is underlined. This allows a direct comparison of the methods across controlled generation scenarios.

Table 1: Quantitative comparisons of different methods on the COCO validation set (Lin et al., 2014). The best results are highlighted in bold, while the second-best results are marked with underline.

In-depth insights#

RelaCtrl’s Core#

RelaCtrl’s core innovation lies in its relevance-guided approach to efficient controllable generation within diffusion transformers. Unlike existing methods that apply uniform control settings across all layers, RelaCtrl allocates computational resources by analyzing the relevance of each transformer layer to the control information. This is achieved through the “ControlNet Relevance Score”, which quantifies the impact of each layer on both generation quality and control effectiveness. Control blocks are placed at positions with high control relevance, positions with weak relevance receive no control block, and the parameter scale and modeling capacity of each control block are tailored accordingly, which minimizes unnecessary parameters and redundant computations. In addition, the Two-Dimensional Shuffle Mixer (TDSM) replaces self-attention and the FFN in the copy block, mixing tokens and channels effectively at reduced complexity. Together, these two design choices let RelaCtrl outperform existing approaches such as PixArt-δ at a fraction of the added parameters and computation.

DiT Relevance#

Analyzing ‘DiT Relevance’ reveals that not all layers in Diffusion Transformers (DiTs) contribute equally to controlled generation. Early and middle layers often exhibit higher relevance to control signals, while deeper layers show diminished impact. This understanding challenges naive approaches like uniform copying of layers, highlighting the need for selective control integration. Resource allocation should prioritize layers with strong control relevance, leading to efficient and high-quality generation. Identifying crucial modules within DiTs enables targeted design and placement of control mechanisms, optimizing performance while minimizing computational overhead. This insight contrasts with observations in other models, emphasizing the unique control dynamics within DiTs.
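The ranking idea behind the relevance score can be sketched as follows. This is a minimal illustration, not the paper's formula: the summary only states that the score is derived from FID and HDD ranks, so summing the two ranks, the helper names, and the toy numbers below are all assumptions.

```python
def rank_descending(values):
    """Return ranks where rank 1 marks the largest value."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank
    return ranks


def relevance_scores(fid_when_skipped, hdd_when_skipped):
    """Higher score = skipping that block degraded FID and HDD the most.

    Assumption: the two ranks are combined by a plain sum.
    """
    n = len(fid_when_skipped)
    fid_rank = rank_descending(fid_when_skipped)
    hdd_rank = rank_descending(hdd_when_skipped)
    return [2 * n - (f + h) for f, h in zip(fid_rank, hdd_rank)]


def select_control_positions(scores, k):
    """Keep the k most relevant block indices, returned in layer order."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return sorted(top)


# Hypothetical per-block metrics measured with that block skipped.
fid = [21.0, 23.5, 26.0, 24.0, 21.5, 20.9]
hdd = [95.0, 97.0, 99.5, 98.0, 95.5, 94.8]

scores = relevance_scores(fid, hdd)
positions = select_control_positions(scores, k=3)
print(scores, positions)
```

With these toy numbers the middle blocks score highest, mirroring the rise-then-fall trend the summary describes.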

TDSM Efficiently#

The paper introduces a Two-Dimensional Shuffle Mixer (TDSM) to enhance efficiency in diffusion transformers, specifically within control blocks. The core idea is to replace the standard self-attention and FFN layers with a more streamlined operation. TDSM performs attention within randomly divided token and channel groups, which reduces computational complexity because each attention call covers only one group rather than the full sequence. The random shuffle partly disrupts the original token relationships, but it also lets each group contain non-adjacent elements, so non-local relationships can be modeled at both the token and channel levels. Each shuffle is paired with its inverse transformation so that the original ordering, and hence the information, is preserved.
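The shuffle, group, mix, and un-shuffle pattern described above can be sketched in plain Python. This is an illustration under stated assumptions, not the paper's implementation: the within-group attention is replaced by a simple mean-based residual mix (`mix_group`), and all function names, group counts, and seeds are hypothetical.

```python
import random


def make_permutation(n, seed):
    """Random ordering of n indices (the 'shuffle')."""
    perm = list(range(n))
    random.Random(seed).shuffle(perm)
    return perm


def invert(perm):
    """Inverse permutation, so shuffle followed by un-shuffle is the identity."""
    inv = [0] * len(perm)
    for new_pos, old_pos in enumerate(perm):
        inv[old_pos] = new_pos
    return inv


def permute(rows, perm):
    return [rows[i] for i in perm]


def mix_group(group):
    """Stand-in for within-group attention: add the group mean to each row."""
    width = len(group[0])
    mean = [sum(row[c] for row in group) / len(group) for c in range(width)]
    return [[row[c] + mean[c] for c in range(width)] for row in group]


def shuffle_mix(rows, num_groups, seed):
    """Shuffle rows, mix inside each group, then restore the original order."""
    assert len(rows) % num_groups == 0, "rows must split evenly into groups"
    perm = make_permutation(len(rows), seed)
    shuffled = permute(rows, perm)
    size = len(rows) // num_groups
    mixed = []
    for start in range(0, len(shuffled), size):
        mixed.extend(mix_group(shuffled[start:start + size]))
    return permute(mixed, invert(perm))


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def two_dim_shuffle_mix(x, token_groups, channel_groups, seed=0):
    """Apply the shuffle-mix along the token axis, then the channel axis."""
    x = shuffle_mix(x, token_groups, seed)
    x_t = shuffle_mix(transpose(x), channel_groups, seed + 1)
    return transpose(x_t)


# 8 tokens with 4 channels each.
x = [[float(t * 4 + c) for c in range(4)] for t in range(8)]
y = two_dim_shuffle_mix(x, token_groups=2, channel_groups=2)
print(len(y), len(y[0]))
```

The efficiency argument is that each mixing call sees only one group, so the cost of a quadratic operation such as attention shrinks as the number of groups grows.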

SOTA Killer#

While “SOTA Killer” isn’t explicitly present, the paper implicitly aims to outperform existing state-of-the-art (SOTA) methods. The core strategy involves analyzing the relevance of control information across different layers of a Diffusion Transformer, enabling more efficient resource allocation. This contrasts with existing methods that often uniformly apply control signals, leading to redundancy. By introducing the Relevance-Guided Efficient Controllable Generation framework (RelaCtrl), the paper seeks to minimize computational overhead and parameter increases, common drawbacks of current controlled generation techniques. Furthermore, the proposal of the Two-Dimensional Shuffle Mixer (TDSM) suggests a novel approach to token and channel mixing, potentially enhancing performance while reducing complexity. The ultimate goal is to achieve superior or comparable results with significantly fewer parameters and computations, effectively “killing” the SOTA by offering a more efficient and resource-optimized solution for controlled diffusion transformer-based image generation.

Flux’s Blessing#

While the heading ‘Flux’s Blessing’ isn’t explicitly present, we can discuss potential blessings stemming from ‘Flux,’ a flow-matching-based video generation model integrating Transformer architecture. A key advantage might be enhanced efficiency in video creation. Flux’s architecture could lead to more streamlined and faster generation processes, reducing the computational resources needed. The integration of flow-matching may result in improved consistency and coherence across video frames. This could address common issues in video generation, such as flickering or disjointed transitions. Another blessing could be the potential for greater control over the generated content. The Transformer architecture offers precise manipulation of video elements based on textual prompts or conditional inputs. It may also scale better.

More visual insights#

More on figures

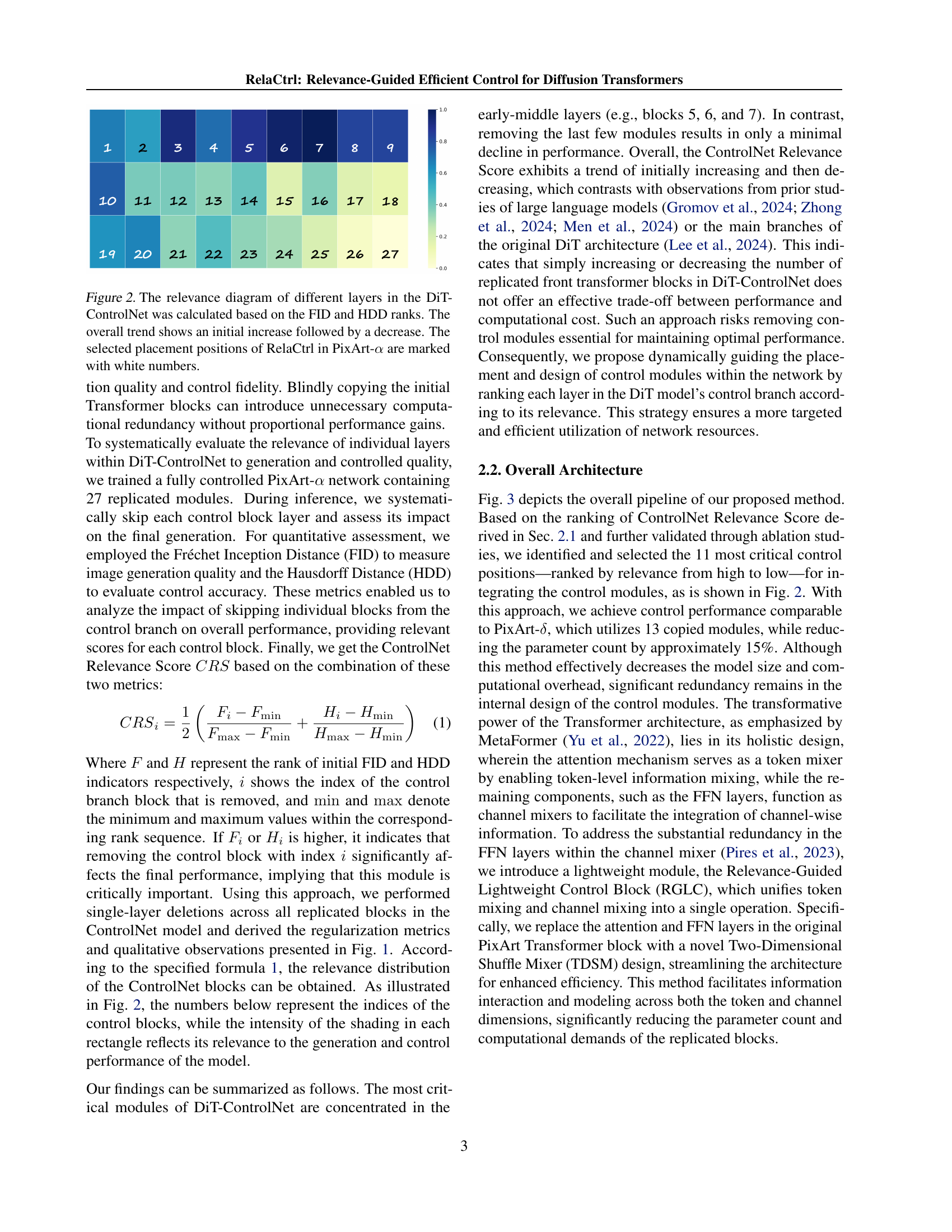

🔼 Figure 2 illustrates the relevance scores of different layers within the DiT-ControlNet architecture. These scores were computed using a combination of FID (Fréchet Inception Distance) and HDD (Hausdorff Distance) ranks, which measure image quality and control accuracy, respectively. A lower rank indicates higher quality or better control. The graph plots the relevance score for each layer, revealing a pattern of increasing relevance in the initial layers followed by a decline in deeper layers. The white numbers in the figure highlight the layers selected for RelaCtrl’s control mechanism in the PixArt-α model. This demonstrates that RelaCtrl strategically integrates control only into the most relevant layers, thereby increasing efficiency and reducing unnecessary computation.

Figure 2: The relevance diagram of different layers in the DiT-ControlNet was calculated based on the FID and HDD ranks. The overall trend shows an initial increase followed by a decrease. The selected placement positions of RelaCtrl in PixArt-α are marked with white numbers.

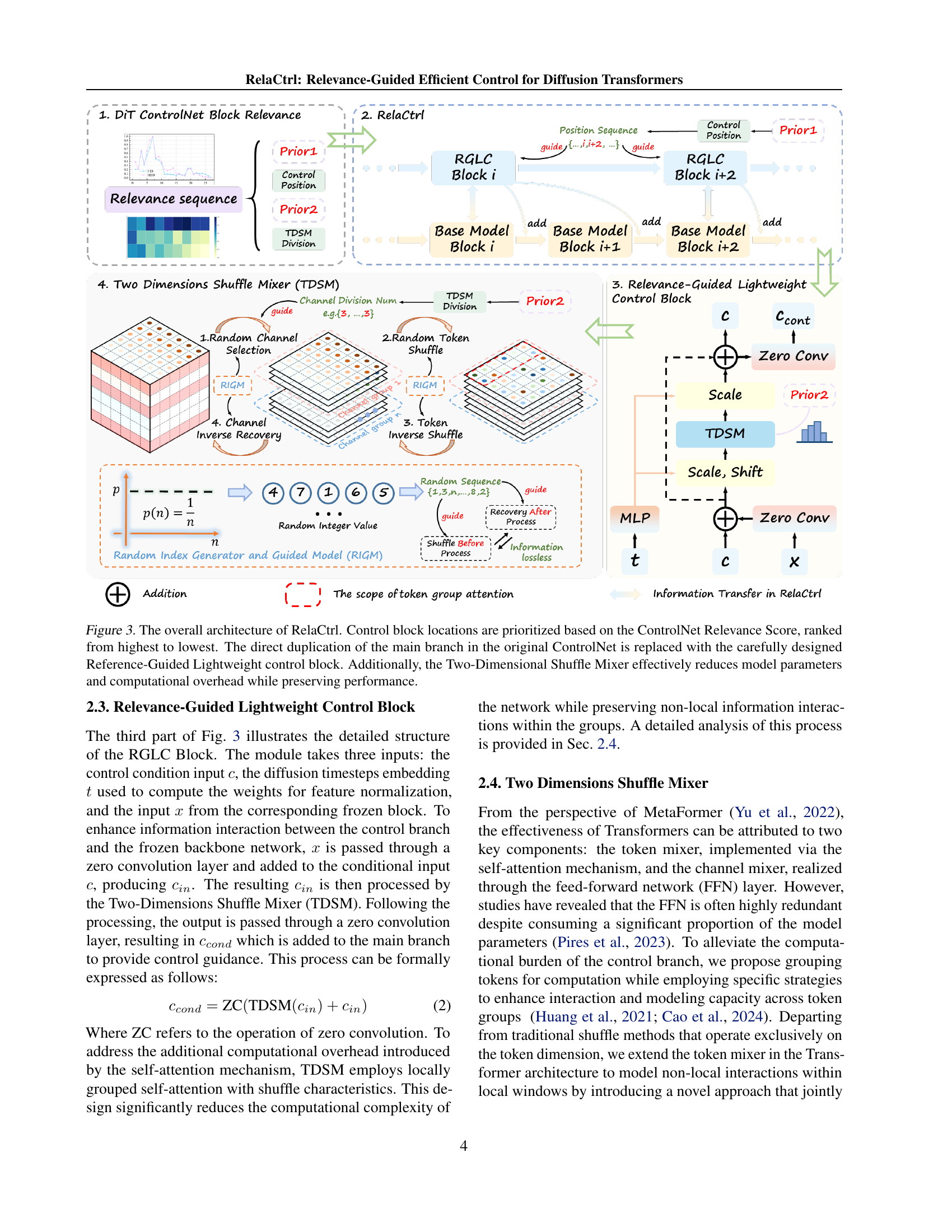

🔼 Figure 3 illustrates RelaCtrl’s architecture, which improves upon previous methods by prioritizing control block placement based on a relevance score. Instead of directly copying blocks from the main branch (as in prior ControlNet implementations), RelaCtrl uses a ‘Relevance-Guided Lightweight Control Block’ for efficiency. The figure highlights the use of a Two-Dimensional Shuffle Mixer (TDSM) within the control blocks. The TDSM replaces the computationally expensive self-attention and feed-forward network (FFN) layers in each control block, resulting in a reduced parameter count and computational overhead while maintaining performance.

Figure 3: The overall architecture of RelaCtrl. Control block locations are prioritized based on the ControlNet Relevance Score, ranked from highest to lowest. The direct duplication of the main branch in the original ControlNet is replaced with the carefully designed Relevance-Guided Lightweight Control Block. Additionally, the Two-Dimensional Shuffle Mixer effectively reduces model parameters and computational overhead while preserving performance.

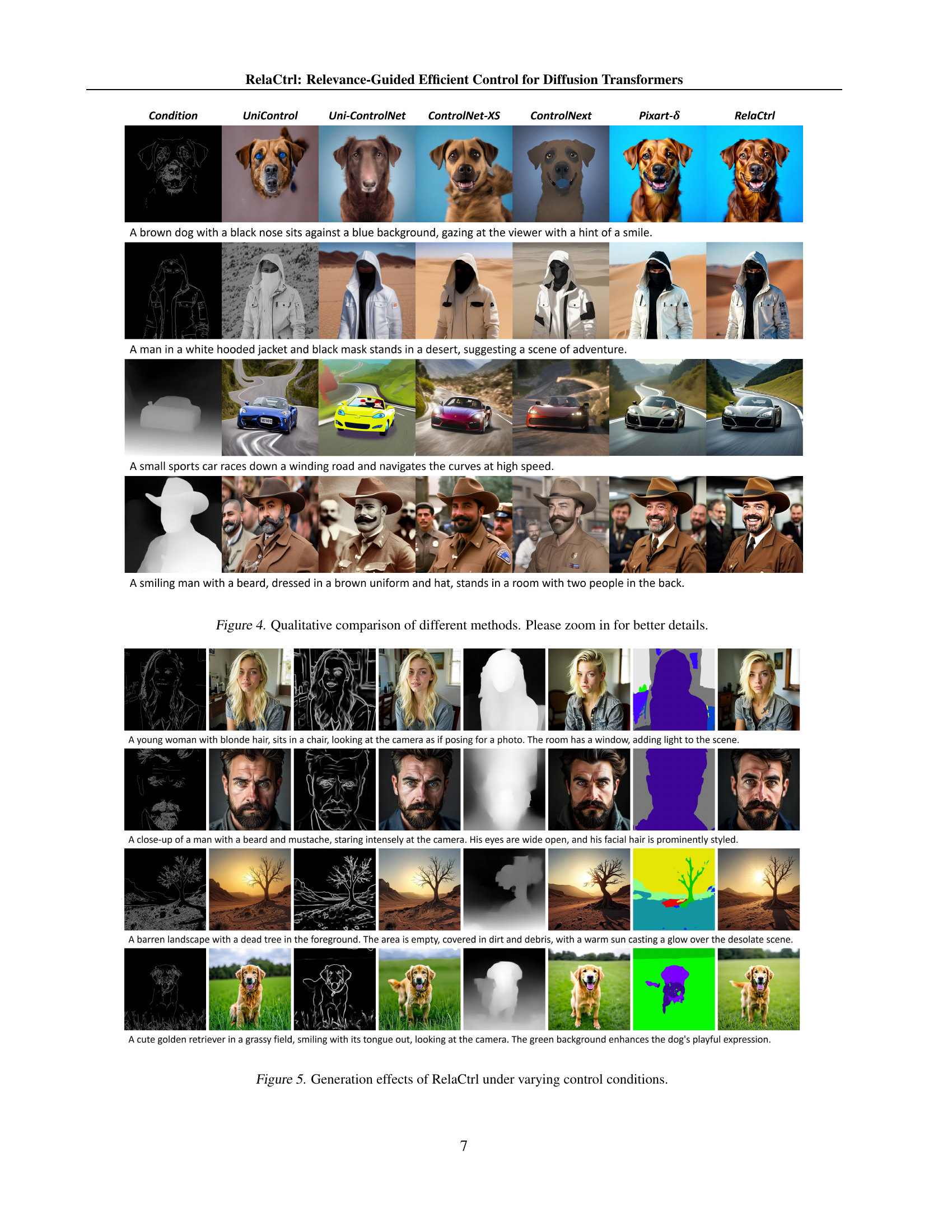

🔼 This figure shows a qualitative comparison of different image generation models’ performance on various control tasks. Each row represents a different image generation task with a text prompt, while each column displays the results of a different model. The models included are UniControl, Uni-ControlNet, ControlNet-XS, ControlNext, PixArt-δ, and RelaCtrl. The images visually demonstrate the quality, control accuracy, and overall fidelity achieved by each model for each task. Zooming in allows for detailed comparison of the generated images.

Figure 4: Qualitative comparison of different methods. Please zoom in for better details.

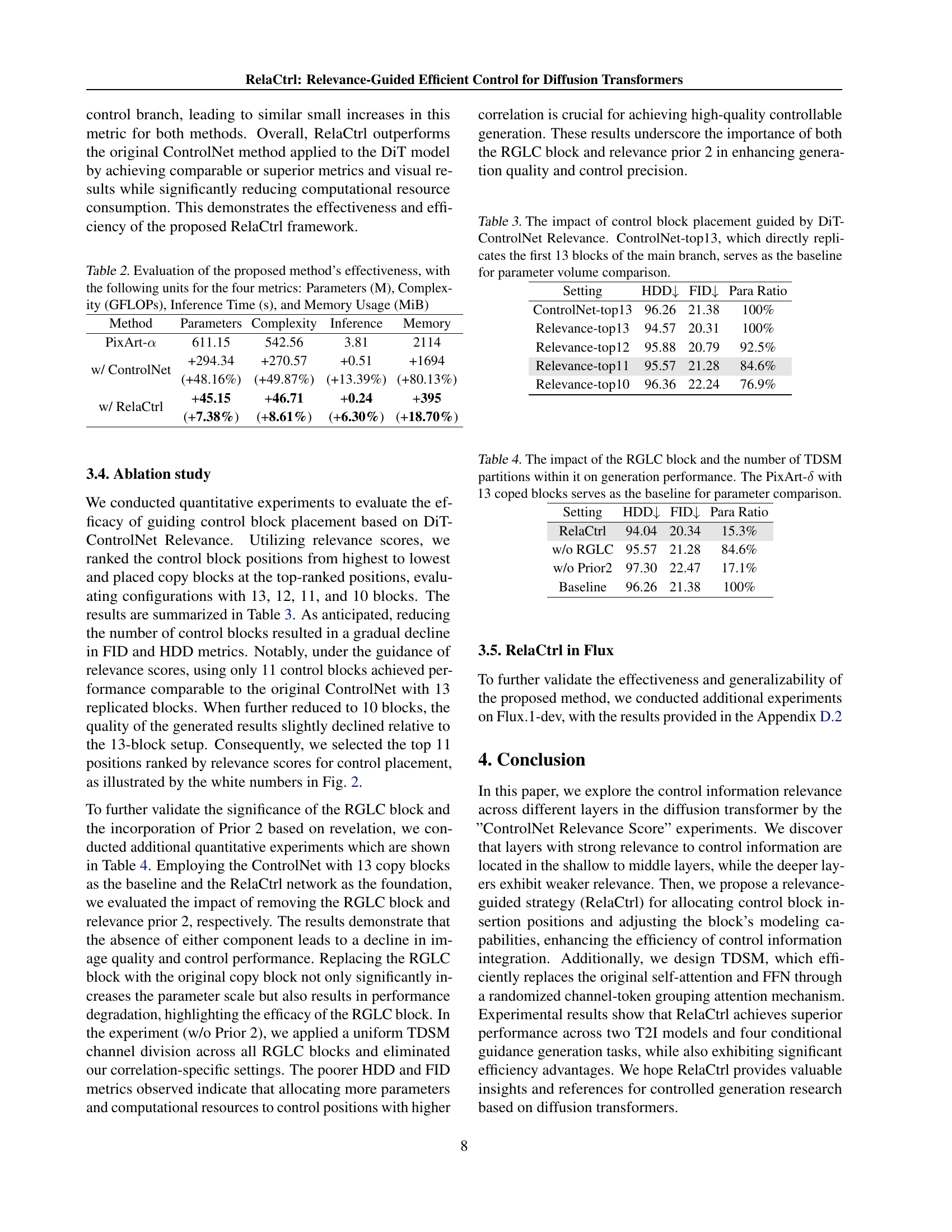

🔼 This figure shows several examples of images generated using the RelaCtrl model under different control conditions. Each row represents a different image generation task, with the control condition specified in text to the left of the corresponding images. The images generated with RelaCtrl exhibit various styles and features consistent with the provided control conditions. This demonstrates the model’s ability to produce high-quality and controlled images with a wide range of creative directions.

Figure 5: Generation effects of RelaCtrl under varying control conditions.

🔼 This figure shows the impact of removing individual control blocks from the ControlNet architecture on the quality and control fidelity of generated images. Two separate experiments are shown: one with a ControlNet consisting of 27 blocks and another with 13 blocks. For each experiment, the FID (Fréchet Inception Distance) and HDD (Hausdorff Distance) scores are plotted against the index of the removed control block. The plots illustrate the relative importance of different control blocks for both image generation quality and effectiveness of control. The results highlight that the effects of removing a block vary across the model, with some blocks showing a more significant impact than others.

Figure 6: Impact of deleting specific locations on the generated metrics in ControlNet with 27 and 13 blocks.

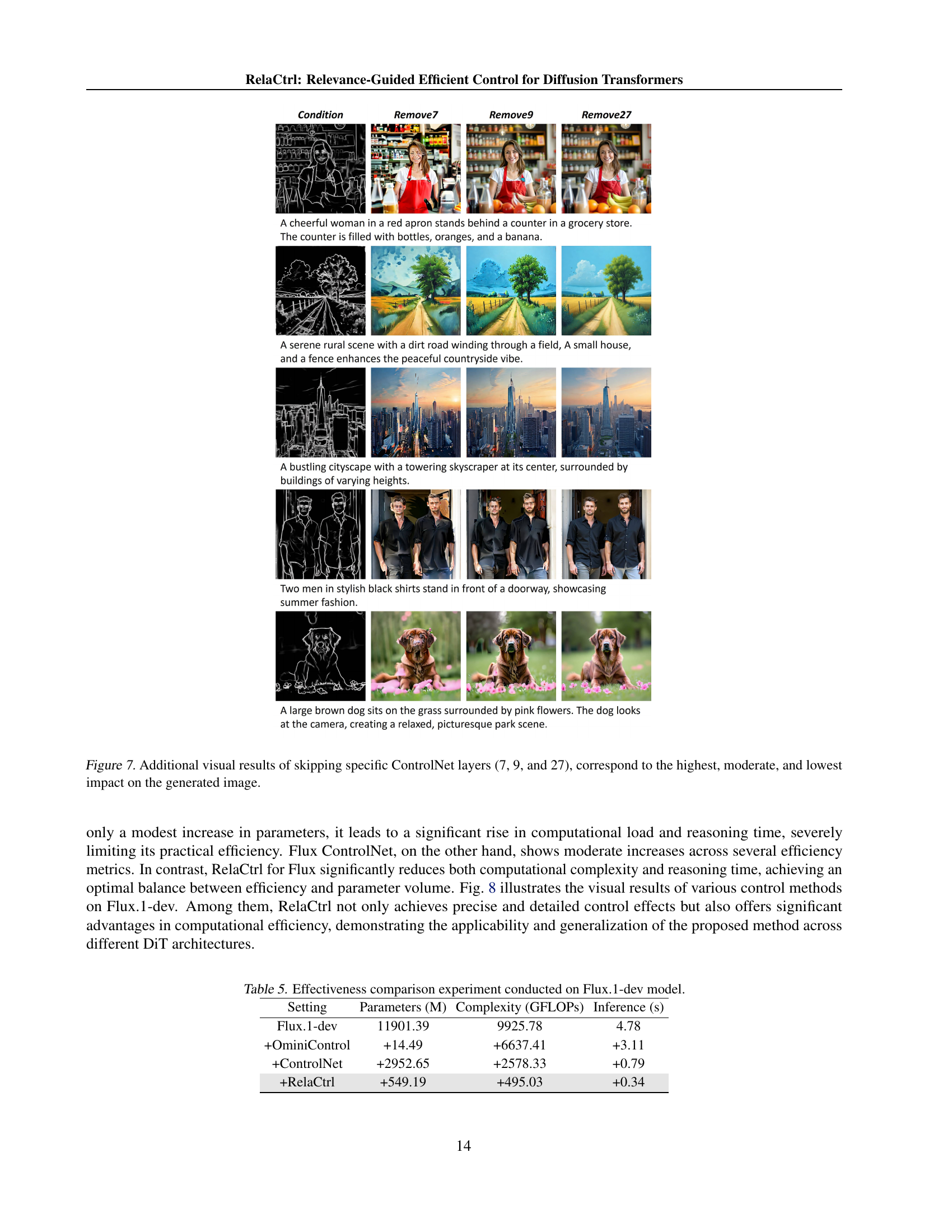

🔼 This figure shows additional examples illustrating the impact of removing different ControlNet layers on image generation quality. Specifically, it presents qualitative results for three scenarios: removing layer 7 (highest impact), layer 9 (moderate impact), and layer 27 (lowest impact). By comparing the generated images across these scenarios, the visualization emphasizes the varying degrees of influence that each layer exerts on both the generation quality and the accuracy of control.

Figure 7: Additional visual results of skipping specific ControlNet layers (7, 9, and 27), corresponding to the highest, moderate, and lowest impact on the generated image.

🔼 This figure shows a comparison of image generation results from three different methods: OminiControl, ControlNet, and RelaCtrl. Each method is applied to the Flux.1-dev model, demonstrating their respective capabilities in controlling the image generation process based on a given condition. The images show that each method produces different levels of control, with RelaCtrl achieving a better balance between high-quality generation and efficiency.

Figure 8: Visual comparison of different control methods on Flux.1-dev.

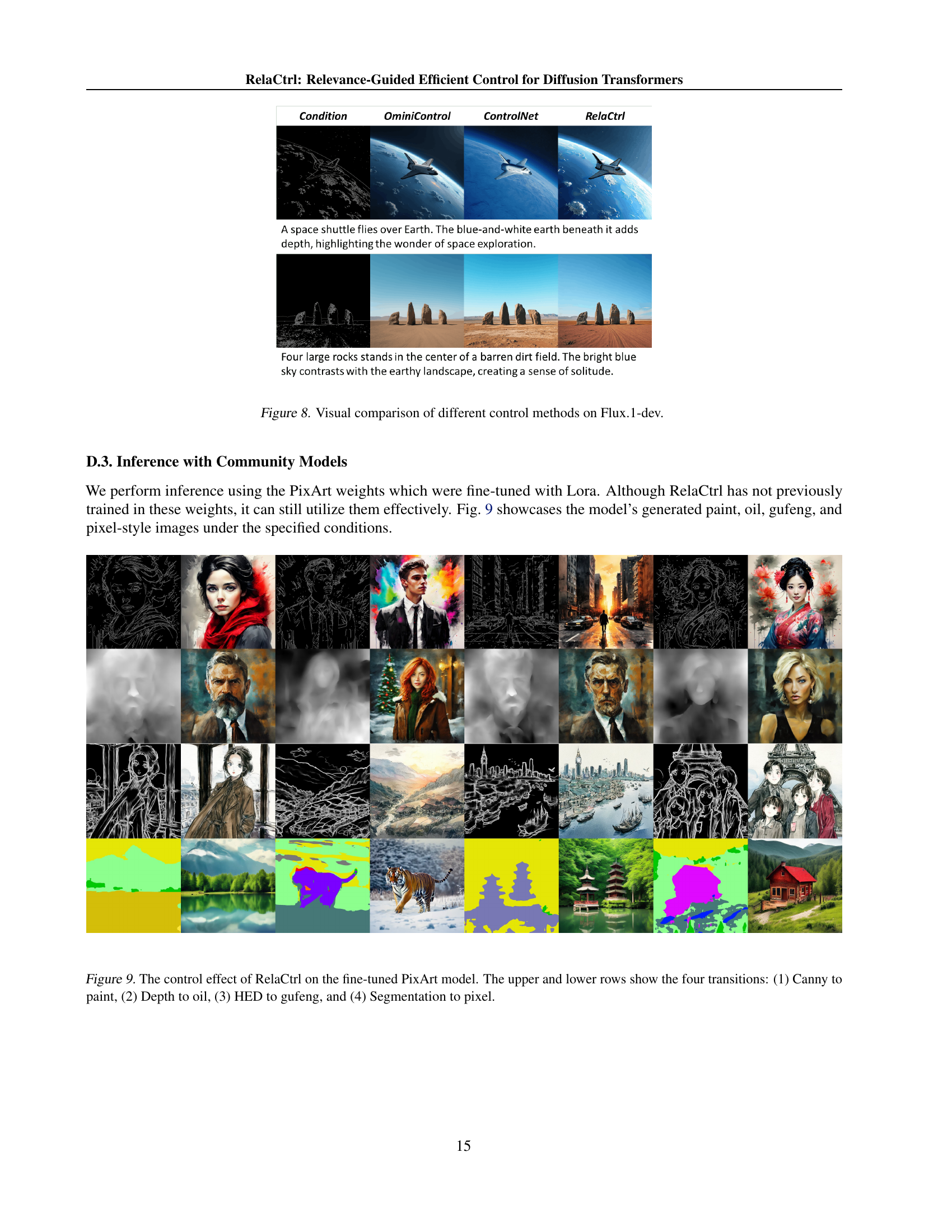

🔼 This figure showcases the results of applying the RelaCtrl method to a fine-tuned PixArt model. The images demonstrate the model’s ability to effectively translate different control inputs into various artistic styles. Four image transformation examples are presented: (1) A Canny edge detection input transformed into a painting style, (2) Depth information translated into an oil painting style, (3) HED edge detection transformed into a ‘gufeng’ (Chinese classical painting) style, and (4) A segmentation map resulting in a pixel art style image. Each row illustrates the input and output for a single transition, highlighting RelaCtrl’s capacity for diverse style transfer under different controlled conditions.

Figure 9: The control effect of RelaCtrl on the fine-tuned PixArt model. The upper and lower rows show the four transitions: (1) Canny to paint, (2) Depth to oil, (3) HED to gufeng, and (4) Segmentation to pixel.

More on tables

| Method | Parameters (M) | Complexity (GFLOPs) | Inference (s) | Memory (MiB) |
|---|---|---|---|---|
| PixArt-α | 611.15 | 542.56 | 3.81 | 2114 |
| w/ ControlNet | +294.34 (+48.16%) | +270.57 (+49.87%) | +0.51 (+13.39%) | +1694 (+80.13%) |
| w/ RelaCtrl | +45.15 (+7.38%) | +46.71 (+8.61%) | +0.24 (+6.30%) | +395 (+18.70%) |

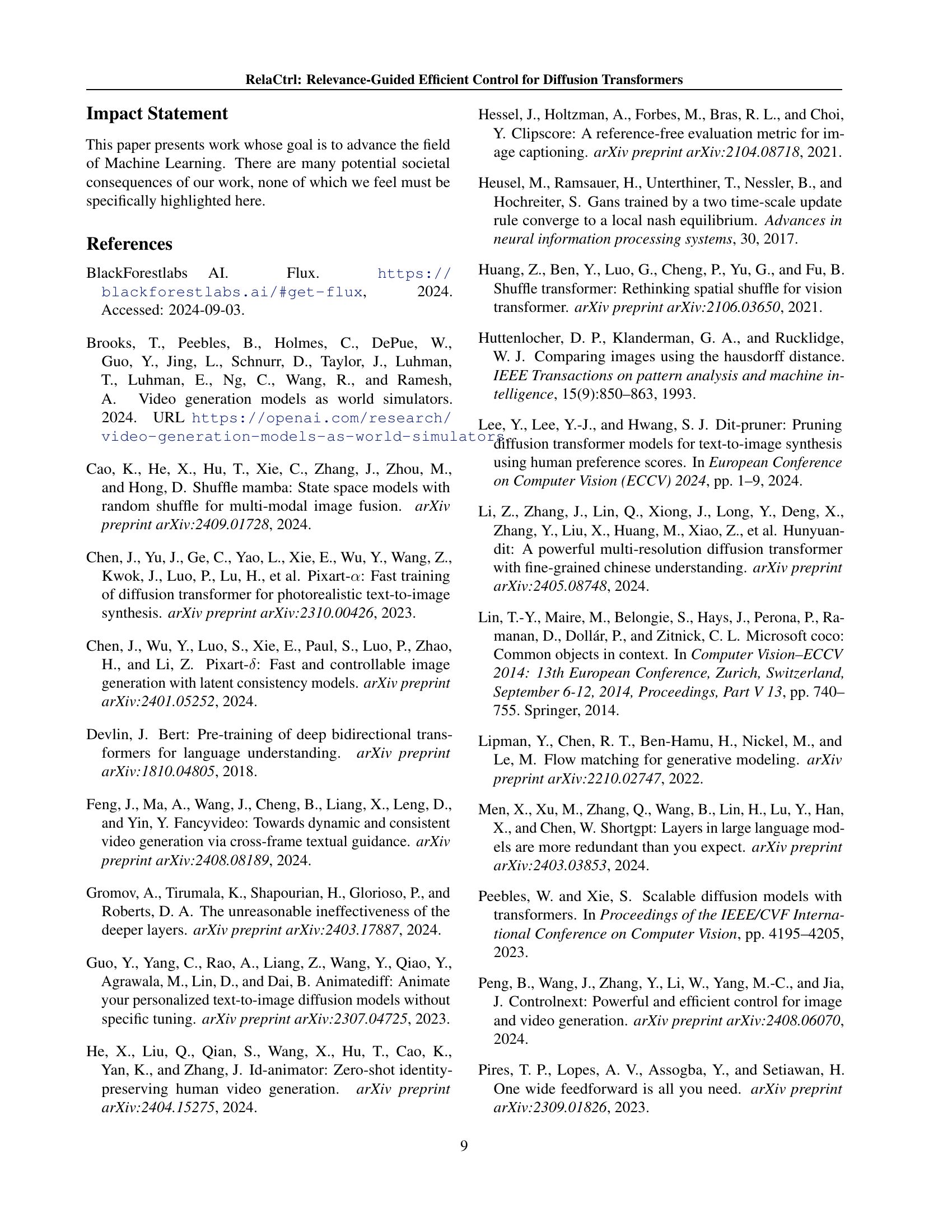

🔼 This table presents a quantitative comparison of the computational cost and resource usage that RelaCtrl and a standard ControlNet each add to the PixArt-α base model. It reports the number of parameters (in millions), computational complexity (in GFLOPs), inference time (in seconds), and memory usage (in MiB) for the base model, together with the increase each control method introduces. RelaCtrl adds 7.38% more parameters and 8.61% more GFLOPs, versus 48.16% and 49.87% for ControlNet.

Table 2: Evaluation of the proposed method’s effectiveness, with the following units for the four metrics: Parameters (M), Complexity (GFLOPs), Inference Time (s), and Memory Usage (MiB)
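The percentages in Table 2 are each method's added cost divided by the PixArt-α base value. The short check below recomputes them from the table's own numbers; the dictionary keys and the helper name `overhead_pct` are illustrative choices, not names from the paper.

```python
# Values copied from Table 2 (base model and the increments each method adds).
base = {"params_m": 611.15, "gflops": 542.56, "time_s": 3.81, "mem_mib": 2114}
controlnet = {"params_m": 294.34, "gflops": 270.57, "time_s": 0.51, "mem_mib": 1694}
relactrl = {"params_m": 45.15, "gflops": 46.71, "time_s": 0.24, "mem_mib": 395}


def overhead_pct(added, reference):
    """Added cost as a percentage of the reference model, per metric."""
    return {key: 100.0 * added[key] / reference[key] for key in reference}


controlnet_pct = overhead_pct(controlnet, base)
relactrl_pct = overhead_pct(relactrl, base)

# Parameters RelaCtrl adds, relative to what ControlNet adds.
param_share = 100.0 * relactrl["params_m"] / controlnet["params_m"]

for key in base:
    print(f"{key}: ControlNet +{controlnet_pct[key]:.2f}%, RelaCtrl +{relactrl_pct[key]:.2f}%")
print(f"RelaCtrl / ControlNet added parameters: {param_share:.1f}%")
```

The recomputed values agree with the table to within rounding of the reported increments, and the last ratio is consistent with the 15.3% parameter ratio listed for RelaCtrl in Table 4.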

| Setting | HDD | FID | Param. Ratio |
|---|---|---|---|
| ControlNet-top13 | 96.26 | 21.38 | 100% |
| Relevance-top13 | 94.57 | 20.31 | 100% |
| Relevance-top12 | 95.88 | 20.79 | 92.5% |
| Relevance-top11 | 95.57 | 21.28 | 84.6% |
| Relevance-top10 | 96.36 | 22.24 | 76.9% |

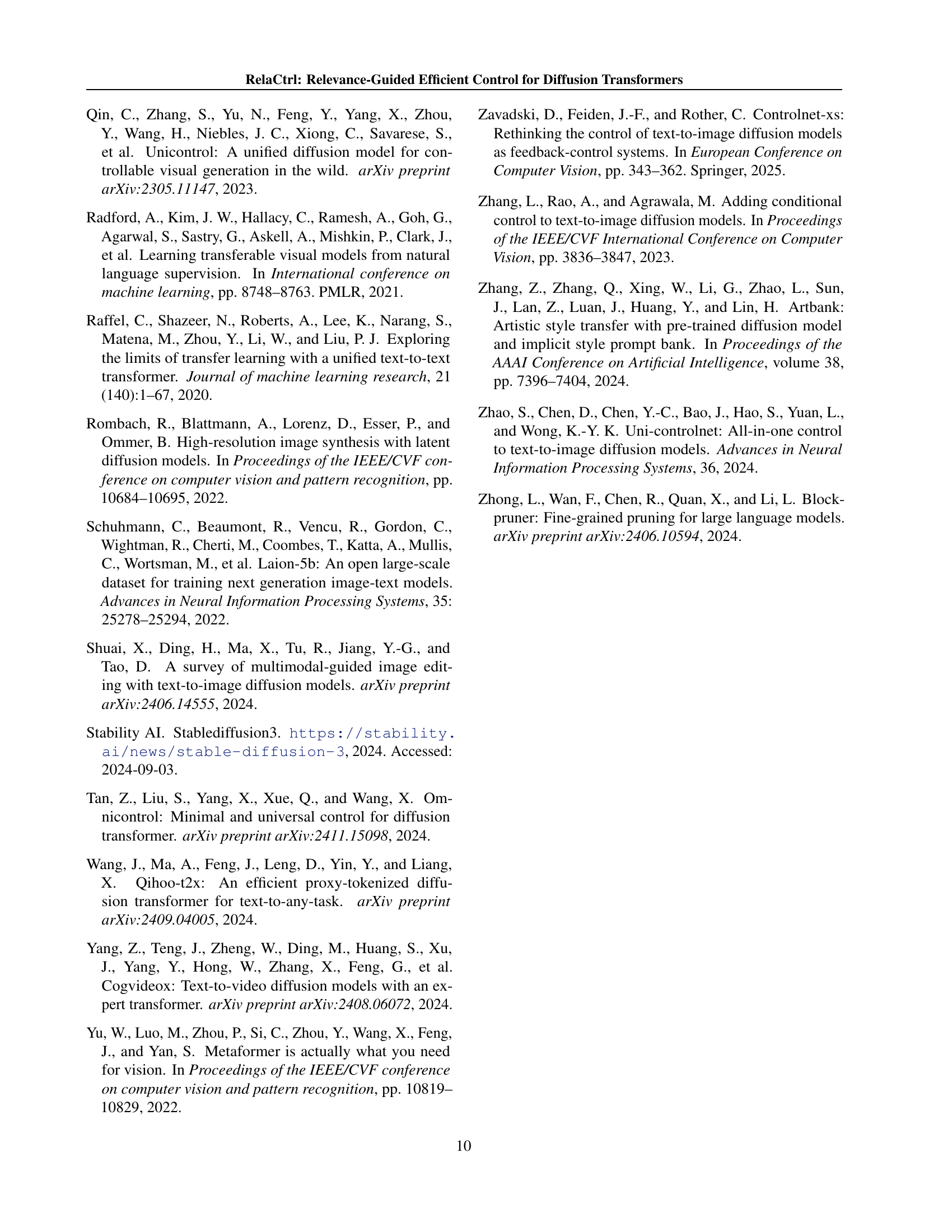

🔼 This table presents a quantitative analysis of the impact of using DiT-ControlNet Relevance scores to guide the placement of control blocks in a diffusion transformer model. It compares the performance of using different numbers of control blocks (10, 11, 12, and 13) in terms of FID (Fréchet Inception Distance) and HDD (Hausdorff Distance). The experiment is designed to evaluate the efficiency of the proposed Relevance-Guided approach. The baseline for comparison is ControlNet-top13, a method that directly replicates the first 13 blocks of the main branch, thus showcasing the relative efficiency gains from using fewer, more strategically placed control blocks.

Table 3: The impact of control block placement guided by DiT-ControlNet Relevance. ControlNet-top13, which directly replicates the first 13 blocks of the main branch, serves as the baseline for parameter volume comparison.
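The parameter ratios in Table 3 are roughly what one would expect if every copied block had the same size, so that keeping k of 13 blocks costs k/13 of the baseline. The sketch below works through that arithmetic; the equal-size assumption and the helper name are mine, not stated in the source.

```python
BASELINE_BLOCKS = 13  # ControlNet-top13 copies the first 13 blocks


def param_ratio_pct(num_blocks, baseline_blocks=BASELINE_BLOCKS):
    """Parameter ratio in percent, assuming all control blocks are equal in size."""
    return round(100.0 * num_blocks / baseline_blocks, 1)


ratios = {k: param_ratio_pct(k) for k in (13, 12, 11, 10)}
for k, pct in ratios.items():
    print(f"top{k}: {pct}%")
```

Under this assumption the 11- and 10-block settings reproduce the table's 84.6% and 76.9%. The 12-block setting comes to about 92.3% rather than the listed 92.5%, which may reflect rounding or blocks of slightly different size.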

| Setting | HDD | FID | Param. Ratio |
|---|---|---|---|
| RelaCtrl | 94.04 | 20.34 | 15.3% |
| w/o RGLC | 95.57 | 21.28 | 84.6% |
| w/o Prior2 | 97.30 | 22.47 | 17.1% |
| Baseline | 96.26 | 21.38 | 100% |

🔼 This table presents an ablation study analyzing the impact of the Relevance-Guided Lightweight Control Block (RGLC) and the Two-Dimensional Shuffle Mixer (TDSM) on the performance of the RelaCtrl model. It compares RelaCtrl’s performance against a baseline model (PixArt-δ with 13 copied blocks), showing the effects of removing the RGLC block and altering the number of TDSM partitions. The metrics evaluated are HDD (Hausdorff Distance), FID (Fréchet Inception Distance), and the relative parameter count compared to the baseline.

Table 4: The impact of the RGLC block and the number of TDSM partitions within it on generation performance. The PixArt-δ with 13 copied blocks serves as the baseline for parameter comparison.

| Setting | Parameters (M) | Complexity (GFLOPs) | Inference (s) |
|---|---|---|---|
| Flux.1-dev | 11901.39 | 9925.78 | 4.78 |
| +OminiControl | +14.49 | +6637.41 | +3.11 |
| +ControlNet | +2952.65 | +2578.33 | +0.79 |
| +RelaCtrl | +549.19 | +495.03 | +0.34 |

🔼 This table presents a comparison of the efficiency of different methods for controlled image generation using the Flux.1-dev model. It compares the number of parameters, computational complexity (GFLOPs), and inference time for four approaches: the base Flux.1-dev model, Flux.1-dev with OminiControl, Flux.1-dev with ControlNet, and Flux.1-dev with RelaCtrl. The results highlight the computational efficiency gains achieved by RelaCtrl.

Table 5: Effectiveness comparison experiment conducted on Flux.1-dev model.

Full paper#