TL;DR

Conversational assistants rely on question rewriting algorithms to answer context-dependent questions accurately. The most suitable rewriting approach depends on the use case and the application-specific task. The paper investigates two approaches: rewriting, which reformulates the current question into a self-contained one, and fusion, which combines the current question with elements of previous interactions. Both are evaluated on two fundamentally different generation tasks.

The paper systematically compares these approaches on text-to-text and multimodal generation tasks. The results show that the best approach depends on the underlying use case and generative task: query rewriting works best for conversational question answering, while query fusion works best for data analysis assistants that generate visualizations and data tables.

Why does it matter?

This paper informs the design of conversational assistants by systematically comparing query rewriting approaches. Its findings show that the rewriting strategy should match the context and the task, offering guidance for future research and development in dialogue systems and data analysis assistants.

Visual Insights

| Turn | Conversation | Rewritten Question |
|---|---|---|
| 1 | compare monthly revenue by country | compare monthly revenue by country |
| 2 | yearly | compare yearly revenue by country |
| 3 | show it as a line chart | compare yearly revenue by country as line chart |
| 4 | now change to marketing channel | compare yearly revenue by marketing channel as line chart |
| 5 | what about month over month as bar | compare month over month revenue by marketing channel as bar |
| 6 | replace with pageviews | compare month over month pageviews by marketing channel as bar |
| 7 | show top-3 | compare month over month pageviews by top-3 marketing channels as bar |
| 8 | what about top-5 | compare month over month pageviews by top-5 marketing channels as bar |
| 9 | show only this month | compare this month pageviews by top-5 marketing channels as bar |
| 10 | add revenue | compare this month pageviews and revenue by top-5 marketing channels as bar |

This table shows an example conversation between a user and a conversational AI assistant. Each row is one turn: the user's query (Conversation) and the corresponding rewritten question generated by the query fusion method (described in Section 3.3 of the paper) with a context window of the last query ($k=1$). The rewritten questions are more precise and complete than the user's original input, which helps the assistant respond accurately.

Table 1: Conversation that a user may have with an assistant and the corresponding rewritten question from our query fusion in Sec. 3.3 with $k=1$.

In-depth insights

LLM QR Adaptivity

The adaptivity of LLMs in query rewriting (QR) is a critical issue. LLMs, while powerful, do not always perform optimally across diverse tasks without careful adaptation, so specialized modules tailored to specific query types and use cases may be needed instead of a one-size-fits-all approach. Context-dependent strategies matter because generic terms can carry specific meanings in a given context. Practitioners should therefore design rewrite strategies around their specific use case and the nature of its queries. LLM-based query rewriting can also suffer from concept drift, where the rewritten query deviates from the user's original intention. A systematic evaluation across domains is needed to determine whether specialized modules are in fact necessary and whether a given assistant should make use of conversational context at all. The paper's evaluation covers short and long conversational data analysis tasks that generate visualizations, as well as text-based question answering tasks.

Rewrite vs. Fusion

The paper explores two distinct approaches to query modification for conversational AI: rewriting and fusion. Rewriting generates a more self-contained and explicit query from the context of past interactions, essentially reformulating the user's intent. Fusion instead combines elements from previous queries into a new query that synthesizes the ongoing conversation. According to the paper's results, the effectiveness of each approach depends heavily on the task. Rewriting excels when the user's intent needs clarification and a clear restatement of the question, particularly in question answering. Fusion shines when the task involves iteratively building on previous information, as in data analysis, where users progressively refine visualizations or data tables. In essence, rewriting offers clarity, while fusion provides a concise summary of the interaction; choosing between them is a trade-off between explicit clarification and contextual synthesis that depends on the nature of the task.
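A minimal sketch of how the two approaches differ in what they pass to the model. The prompt wording, the `Conversation` container, and the function names are hypothetical illustrations, not the paper's prompts, and the model call itself is omitted. Rewriting receives the last `k` raw questions, while fusion receives only the previous fused question and the new request.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Conversation:
    """Hypothetical container for previous user questions, oldest first."""
    questions: list[str] = field(default_factory=list)


def build_rewrite_prompt(conv: Conversation, current: str, k: int) -> str:
    """Query rewriting: include the last k previous questions verbatim."""
    history = conv.questions[-k:] if k > 0 else []
    lines = ["Rewrite the current question so it is self-contained."]
    lines += [f"Previous: {q}" for q in history]
    lines.append(f"Current: {current}")
    return "\n".join(lines)


def build_fusion_prompt(previous_fused: Optional[str], current: str) -> str:
    """Query fusion: include only the previous fused question and the new request."""
    lines = ["Merge the new request into the previous question."]
    if previous_fused:
        lines.append(f"Previous fused question: {previous_fused}")
    lines.append(f"New request: {current}")
    return "\n".join(lines)


if __name__ == "__main__":
    conv = Conversation(["compare monthly revenue by country", "yearly"])
    print(build_rewrite_prompt(conv, "show it as a line chart", k=2))
    print()
    print(build_fusion_prompt("compare yearly revenue by country", "show it as a line chart"))
```

The rewrite prompt grows with `k`, and `k` must be chosen in advance; the fusion prompt always contains exactly two inputs, however long the conversation runs.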

Context is key

In conversational AI, the context of a user's query is paramount for accurate and relevant responses. Context encompasses the conversation history, including previous questions, answers, and any implied information. Ignoring it leads to misinterpretations and irrelevant answers; for example, the follow-up "yearly" in Table 1 is meaningless without the preceding question. Effective context management tracks the dialogue history and uses it to resolve ambiguities and maintain coherence. Question rewriting (QR) techniques are a key mechanism for taking this context into account so that the assistant can respond accurately. Seamlessly integrating information from prior interactions is also essential for a satisfying user experience. Because conversations evolve, models must adapt their understanding as new information is revealed, and they must be able to weight recent history more strongly than older history. Finally, discerning user intent in complex, evolving dialogues requires careful contextual analysis.
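The summary does not say how, or whether, the paper weights history by recency. One generic way to express "recent turns matter more" is exponential decay; the sketch below, including the hypothetical `decay` parameter, illustrates that idea and is not the paper's mechanism.

```python
def recency_weights(num_turns: int, decay: float = 0.5) -> list[float]:
    """Return normalized weights for turns ordered oldest -> newest.

    Turn i (0 = oldest) gets decay ** (num_turns - 1 - i), so the newest
    turn receives the largest weight. Weights are normalized to sum to 1.
    decay = 1.0 gives uniform weights (no recency preference).
    """
    if num_turns <= 0:
        return []
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay must be in (0, 1]")
    raw = [decay ** (num_turns - 1 - i) for i in range(num_turns)]
    total = sum(raw)
    return [w / total for w in raw]
```

For example, `recency_weights(3, decay=0.5)` assigns 1/7, 2/7, and 4/7 to the oldest, middle, and newest turns. A smaller `decay` forgets older turns faster.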

QR: Text & Vis

Query rewriting (QR) for text and visualizations addresses the problem of adapting user queries in conversational interfaces that involve both textual and visual data. It is essential when users interact with systems that generate charts or visualizations from their input. The core challenge is maintaining context across turns so that the generated visualizations accurately reflect the user's evolving intent. Effective QR methods in this domain must handle ambiguity, resolve underspecification, and integrate new information into the existing query. LLM-based QR approaches can expand the query with relevant terms or rewrite it with similar phrasing. The ultimate goal is more accurate and relevant visualizations, and therefore more efficient and insightful data analysis.
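The paper uses an LLM to decide how each new turn changes the query. To make the "integrate new information into the existing query" step concrete, the deterministic sketch below keeps a hypothetical structured state (`VisQuery`) and overwrites only the slots a turn mentions. It sidesteps the hard part, mapping free-form utterances such as "now change to marketing channel" to slot updates, by passing those updates explicitly.

```python
from __future__ import annotations

from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class VisQuery:
    """Hypothetical structured state behind a data-analysis question."""
    metrics: tuple[str, ...] = ("revenue",)
    dimension: str = "country"
    period: str = "monthly"
    chart: Optional[str] = None
    top_n: Optional[int] = None

    def to_text(self) -> str:
        """Render the state as a self-contained question."""
        dim = f"top-{self.top_n} {self.dimension}s" if self.top_n else self.dimension
        text = f"compare {self.period} {' and '.join(self.metrics)} by {dim}"
        return f"{text} as {self.chart}" if self.chart else text


def apply_turn(state: VisQuery, **updates: object) -> VisQuery:
    """Overwrite only the slots mentioned in this turn; keep all others."""
    return replace(state, **updates)


if __name__ == "__main__":
    state = VisQuery()
    print(state.to_text())
    for updates in [
        {"period": "yearly"},
        {"chart": "line chart"},
        {"dimension": "marketing channel"},
        {"period": "month over month", "chart": "bar"},
        {"metrics": ("pageviews",)},
        {"top_n": 3},
    ]:
        state = apply_turn(state, **updates)
        print(state.to_text())
```

Starting from the default state and applying these six updates reproduces the rewritten questions for turns 1 to 7 of Table 1. An LLM-based rewriter has to infer the updates itself, which is where ambiguity and underspecification come in.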

Fusion for data

Query fusion combines the current user query with a summary of past interactions to create a more context-aware and effective query. This is particularly relevant in data analysis, where user questions often build on previous ones. The study finds that for conversational data analysis assistants generating visualizations, query fusion outperforms query rewriting. This suggests that summarizing the conversational history and integrating it directly into the query works better than rewriting the current query from a fixed window of raw history. Query fusion can also handle conversations of arbitrary length, because it recursively generates a rewritten question that summarizes the conversation up to that point. Query rewriting, in contrast, requires specifying a fixed length of chat history to consider, which is error-prone. Because it does not depend on a fixed history length, query fusion adapts naturally to conversations of different lengths.
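Structurally, recursive fusion is a left fold over the conversation: each step sees only the previous fused question and the new turn, which is why no fixed history length is needed. The sketch below shows that recursion with the fusion step left as a parameter. In the paper the step is performed by an LLM; the `Fuser` signature and `fuse_conversation` name are hypothetical.

```python
from __future__ import annotations

from typing import Callable, Optional

# Hypothetical signature: (previous fused question or None, new user turn) -> fused question.
Fuser = Callable[[Optional[str], str], str]


def fuse_conversation(turns: list[str], fuse: Fuser) -> list[str]:
    """Apply query fusion recursively and return the fused question after each turn."""
    fused_questions: list[str] = []
    previous: Optional[str] = None
    for turn in turns:
        # Each step receives exactly two inputs, the previous fused question and
        # the current turn, regardless of how many turns came before.
        previous = fuse(previous, turn)
        fused_questions.append(previous)
    return fused_questions
```

A fixed-window rewriter would instead slice `turns[-k:]` at every step, silently dropping anything older than `k` turns.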

More visual insights

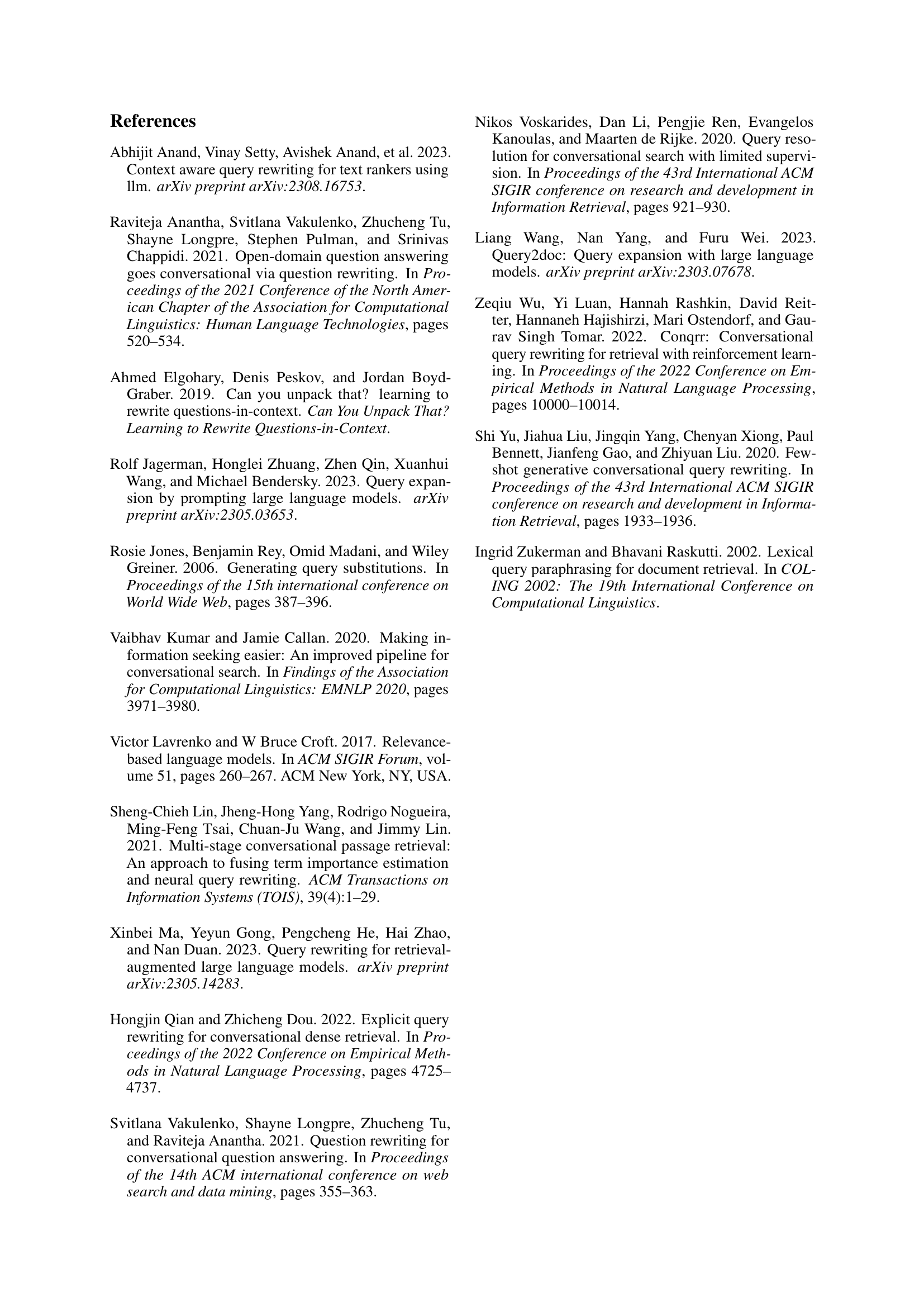

| Dataset | # Questions | # Questions with Chat History | Chat Length (turns) | # Question Types |
|---|---|---|---|---|
| Text-based Q&A | 179 | 136 | 2-5 | 3 |
| Text-to-Vis (long conv.) | 794 | 715 | 10 | 7 |
| Text-to-Vis (short conv.) | 171 | 161 | 2 | 3 |

This table summarizes the three datasets used in the paper's experiments: the number of questions in each dataset, how many of those questions have associated chat history (i.e., a conversational context), the chat length (number of turns per conversation), and the number of question types in each dataset.

Table 2: Summary of the datasets and their statistics

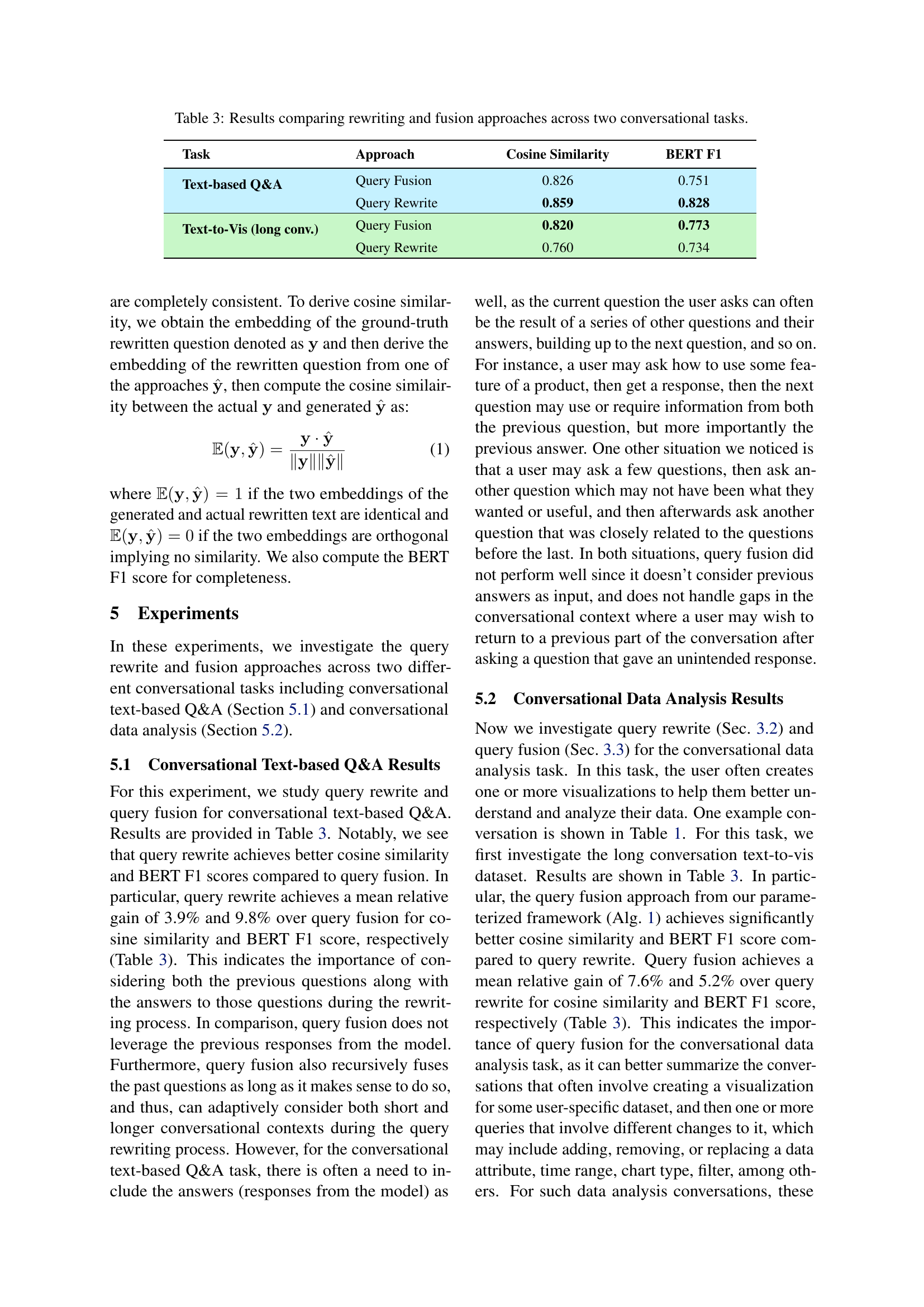

| Task | Approach | Cosine Similarity | BERT F1 |
|---|---|---|---|
| Text-based Q&A | Query Fusion | 0.826 | 0.751 |
| | Query Rewrite | 0.859 | 0.828 |
| Text-to-Vis (long conv.) | Query Fusion | 0.820 | 0.773 |
| | Query Rewrite | 0.760 | 0.734 |

This table compares two query modification approaches, rewriting and fusion, on two distinct conversational tasks: text-based question answering and text-to-visualization with long conversations. For each task it reports cosine similarity and BERT F1 score. Rewriting scores higher on text-based Q&A, while fusion scores higher on long text-to-vis conversations.

Table 3: Results comparing rewriting and fusion approaches across two conversational tasks.
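Table 3 reports cosine similarity, presumably between each generated question and a reference rewrite; the embedding model behind it is not given in this summary. The sketch below shows only the metric itself, using bag-of-words counts as a hypothetical stand-in for embeddings. BERT F1 (BERTScore) additionally requires a pretrained language model and is not reproduced here.

```python
import math
from collections import Counter


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity between bag-of-words count vectors of two strings.

    Stand-in only: an embedding-based evaluation would replace these sparse
    word counts with dense sentence embeddings.
    """
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    # Define similarity with an empty string as 0 to avoid division by zero.
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0
```

Word counts ignore synonyms and word order, which is why embedding-based scores are the usual choice for comparing paraphrased questions.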

| Approach | Cosine Sim. | BERT F1 |
|---|---|---|
| Query Fusion | 0.925 | 0.856 |
| Query Rewrite | 0.857 | 0.837 |

This table compares query rewriting and query fusion on the short text-to-visualization conversational task, reporting the cosine similarity and BERT F1 score each approach achieves for its rewritten questions. Fusion scores higher on both metrics.

Table 4: Results comparing rewriting and fusion approaches for short text-to-vis conversational task.
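The per-task gaps in Tables 3 and 4 can be computed directly. This small script copies the reported scores and subtracts rewrite from fusion; the function names are illustrative only.

```python
# (cosine similarity, BERT F1) per approach, copied from Tables 3 and 4.
RESULTS = {
    "Text-based Q&A": {"fusion": (0.826, 0.751), "rewrite": (0.859, 0.828)},
    "Text-to-Vis (long conv.)": {"fusion": (0.820, 0.773), "rewrite": (0.760, 0.734)},
    "Text-to-Vis (short conv.)": {"fusion": (0.925, 0.856), "rewrite": (0.857, 0.837)},
}


def fusion_minus_rewrite(results: dict) -> dict:
    """Per task, return (delta cosine, delta BERT F1) = fusion - rewrite, rounded to 3 decimals."""
    return {
        task: tuple(round(f - r, 3) for f, r in zip(scores["fusion"], scores["rewrite"]))
        for task, scores in results.items()
    }


def winner(deltas: tuple) -> str:
    """Name the approach that is ahead on both metrics, or 'mixed'."""
    if all(d > 0 for d in deltas):
        return "fusion"
    if all(d < 0 for d in deltas):
        return "rewrite"
    return "mixed"


if __name__ == "__main__":
    for task, deltas in fusion_minus_rewrite(RESULTS).items():
        print(f"{task}: dcos={deltas[0]:+.3f}, dBERT-F1={deltas[1]:+.3f} -> {winner(deltas)}")
```

Rewriting leads on text-based Q&A on both metrics (by 0.033 and 0.077), and fusion leads on both text-to-vis tasks, consistent with the TL;DR.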

| Task | Cosine Sim. | BERT F1 |
|---|---|---|
| Text-based Q&A | 0.871 | 0.859 |
| Text-to-Vis (long conv.) | 0.769 | 0.740 |

This table reports query rewriting combined with an ambiguity detection classifier on two conversational tasks, text-based question answering and long text-to-visualization conversations, using cosine similarity and BERT F1 score as evaluation metrics. The ambiguity detection step aims to improve query rewriting by addressing ambiguous queries; the scores can be compared with the Query Rewrite rows of Table 3, which use no classifier.

Table 5: Results comparing rewriting with an ambiguity detection classifier.
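Assuming Table 5 shares the evaluation setup of Table 3, the classifier's effect is the difference between the two rewrite rows. The script below copies the reported scores and also compares rewriting with the classifier against fusion; the variable and function names are illustrative only.

```python
# (cosine similarity, BERT F1), copied from Table 3 (rewrite, fusion)
# and Table 5 (rewrite with the ambiguity detection classifier).
REWRITE = {"Text-based Q&A": (0.859, 0.828), "Text-to-Vis (long conv.)": (0.760, 0.734)}
REWRITE_WITH_CLASSIFIER = {"Text-based Q&A": (0.871, 0.859), "Text-to-Vis (long conv.)": (0.769, 0.740)}
FUSION = {"Text-based Q&A": (0.826, 0.751), "Text-to-Vis (long conv.)": (0.820, 0.773)}


def gain(new: dict, base: dict) -> dict:
    """Per task, return new - base for each metric, rounded to 3 decimals."""
    return {task: tuple(round(n - b, 3) for n, b in zip(new[task], base[task])) for task in base}


if __name__ == "__main__":
    print("classifier gain over rewrite:", gain(REWRITE_WITH_CLASSIFIER, REWRITE))
    print("rewrite + classifier vs fusion:", gain(REWRITE_WITH_CLASSIFIER, FUSION))
```

The classifier adds 0.012 and 0.031 on text-based Q&A but less than 0.01 on long text-to-vis conversations, where rewriting with the classifier still trails fusion on both metrics.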