TL;DR#

Creating high-fidelity 3D meshes with arbitrary topology remains a big challenge. Existing implicit field methods often need costly conversions that degrade details, and other approaches struggle with high resolutions. To tackle these issues, this paper introduces a novel sparse-structured isosurface representation that enables differentiable mesh reconstruction at high resolutions directly from rendering losses.

The authors introduce SparseFlex, combining the accuracy of Flexicubes with a sparse voxel structure, focusing on surface-adjacent regions and efficiently handling open surfaces. A frustum-aware sectional voxel training strategy is introduced, activating only relevant voxels during rendering, dramatically reducing memory consumption and enabling high-resolution training. A variational autoencoder and a rectified flow transformer enable high-quality 3D shape generation with state-of-the-art reconstruction accuracy.

Key Takeaways#

Why does it matter?#

This paper introduces SparseFlex, a novel representation for high-fidelity 3D shape modeling. Its capacity to handle arbitrary topologies and complex geometries will be invaluable for researchers aiming to enhance 3D generative models and achieve more realistic and detailed 3D reconstructions.

Visual Insights#

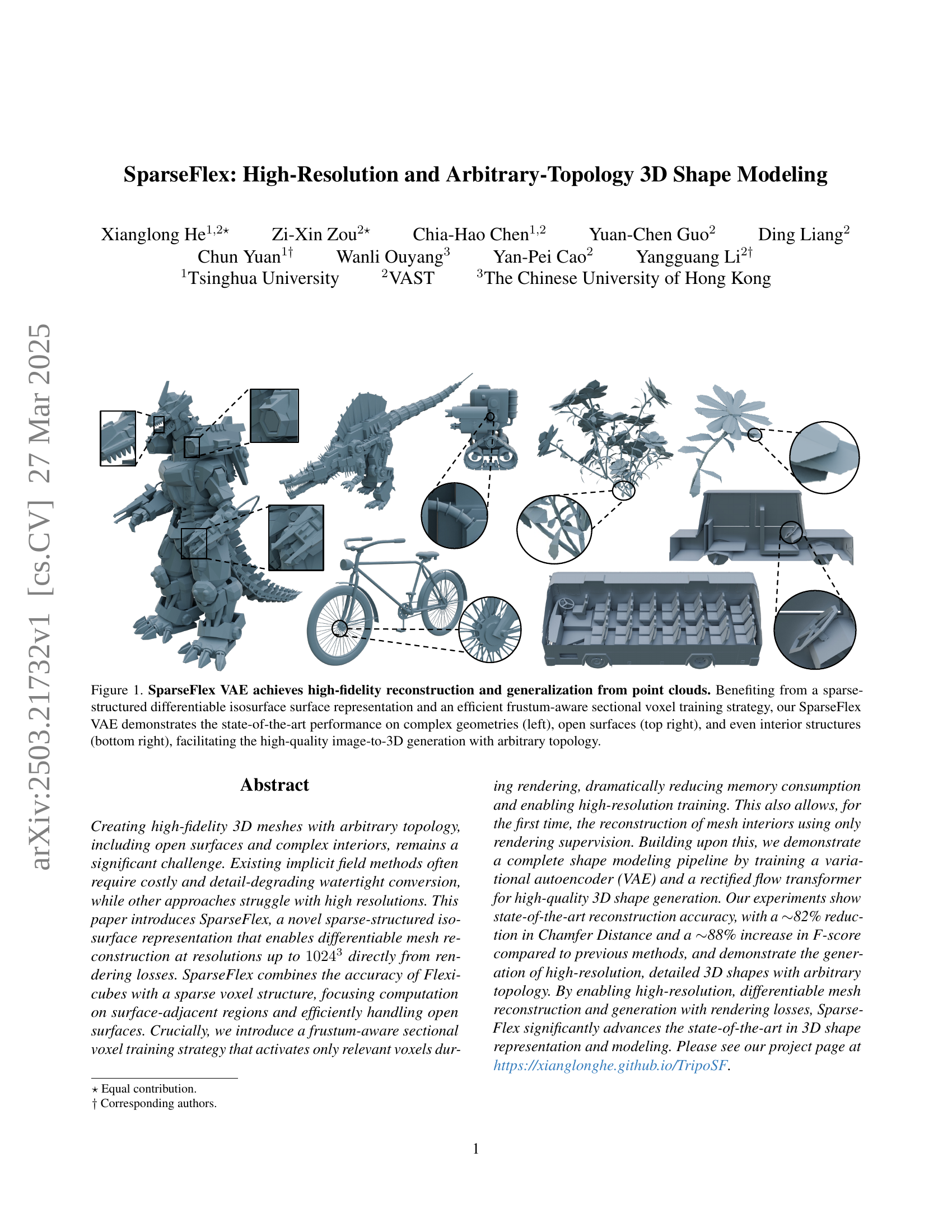

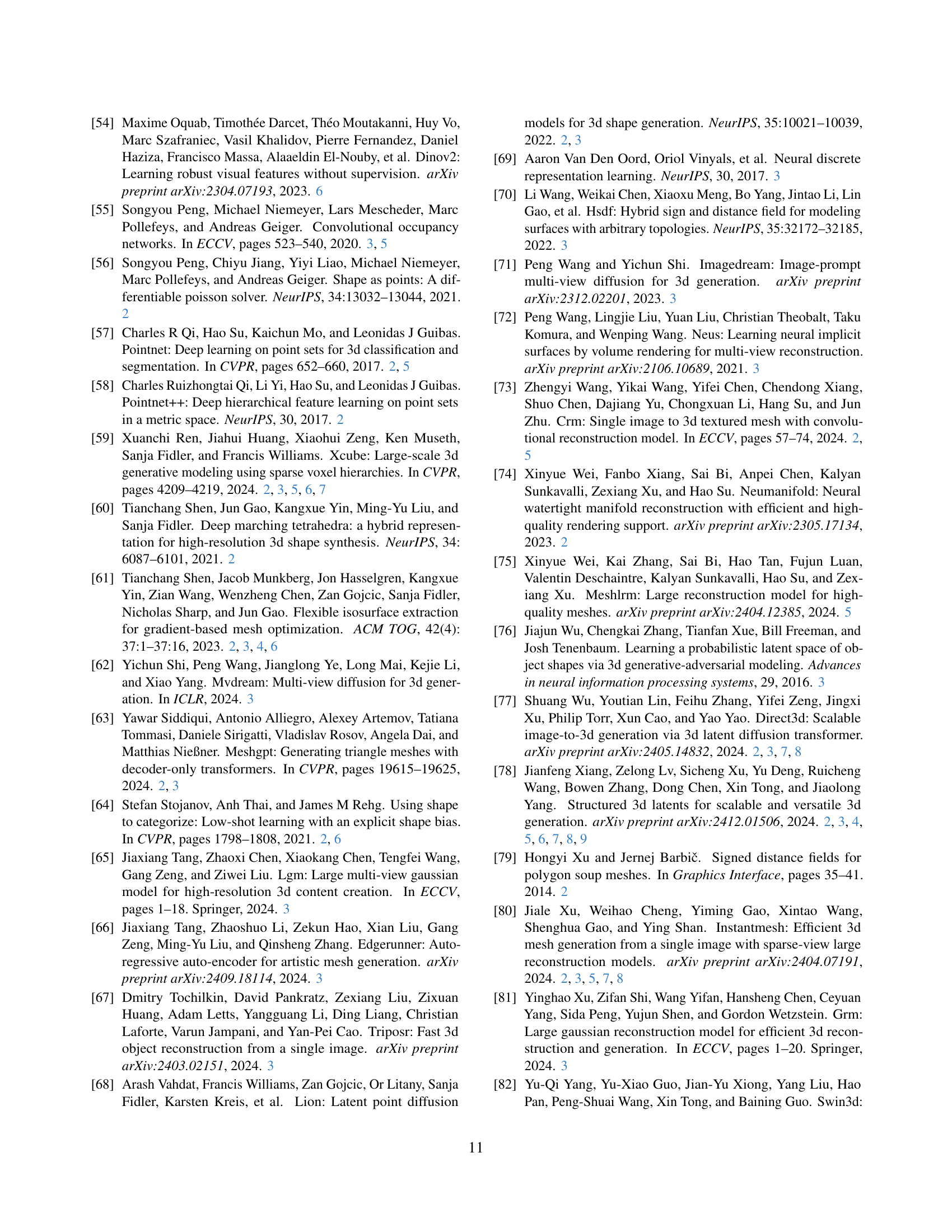

🔼 SparseFlex VAE, a novel variational autoencoder, achieves high-fidelity 3D shape reconstruction and generation from point cloud inputs. Its success stems from a sparse-structured, differentiable isosurface representation and an efficient training strategy (frustum-aware sectional voxel training). This allows it to surpass state-of-the-art performance on complex shapes with arbitrary topology, including intricate geometries, open surfaces, and even internal structures, paving the way for high-quality image-to-3D generation.

read the caption

Figure 1: SparseFlex VAE achieves high-fidelity reconstruction and generalization from point clouds. Benefiting from a sparse-structured differentiable isosurface surface representation and an efficient frustum-aware sectional voxel training strategy, our SparseFlex VAE demonstrates the state-of-the-art performance on complex geometries (left), open surfaces (top right), and even interior structures (bottom right), facilitating the high-quality image-to-3D generation with arbitrary topology.

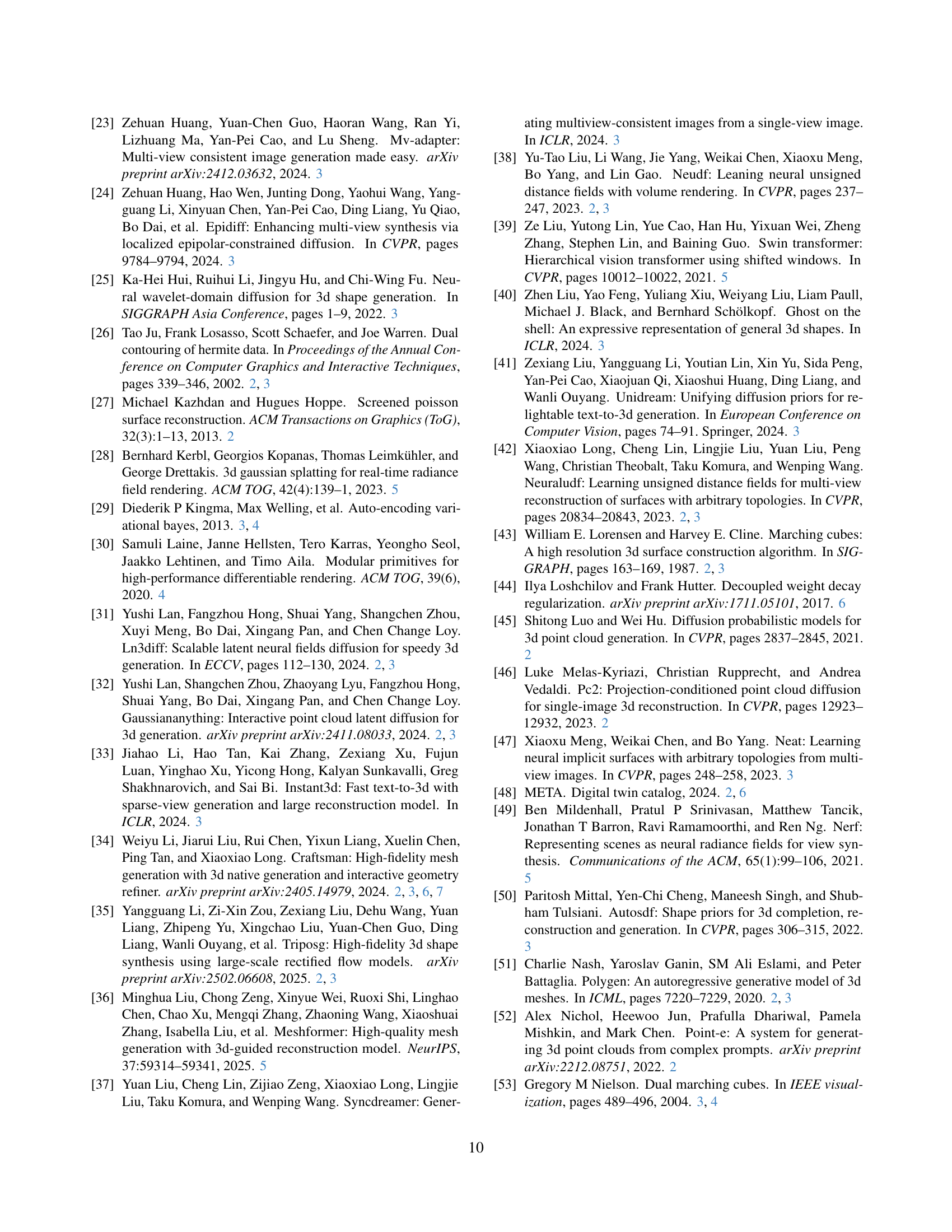

| Method | Toys4k | Dora Benchmark | ||||

|---|---|---|---|---|---|---|

| Craftsman [34] | 13.08/4.63 | 10.13/15.15 | 56.51/85.02 | 13.54/2.06 | 6.30/11.14 | 73.71/91.95 |

| Dora [5] | 11.15/2.13 | 17.29/26.55 | 81.54/93.84 | 16.61/1.08 | 13.65/25.78 | 78.73/96.40 |

| Trellis [78] | 12.90/11.89 | 4.05/4.93 | 59.65/64.05 | 17.42/9.83 | 3.81/6.20 | 62.70/71.95 |

| XCube [59] | 4.35/3.14 | 1.61/13.49 | 74.65/79.62 | 4.74/2.37 | 1.31/0.84 | 75.64/86.50 |

| 3PSDF∗ [6] | 4.51/3.69 | 11.33/14.10 | 81.70/86.13 | 7.45/1.68 | 7.52/12.50 | 79.43/91.17 |

| Ours256 | 2.56/1.25 | 18.31/27.23 | 85.35/92.01 | 1.93/0.53 | 16.24/28.37 | 88.76/97.31 |

| Ours512 | 1.67/0.84 | 23.74/34.10 | 90.39/95.60 | 1.36/0.23 | 21.85/36.03 | 91.55/98.51 |

| Ours1024 | 1.33/0.60 | 25.95/35.69 | 92.30/96.22 | 0.86/0.12 | 25.71/39.50 | 94.71/99.14 |

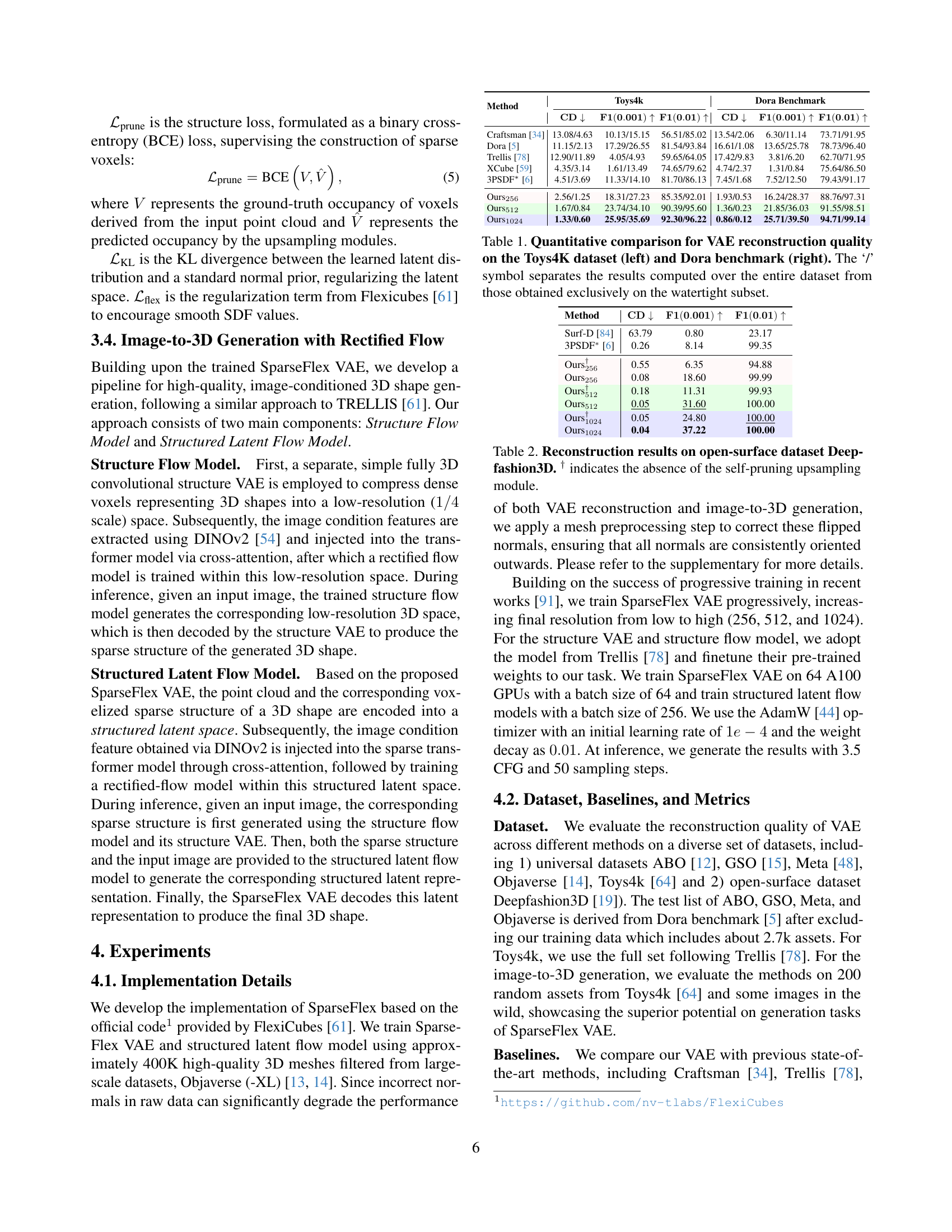

🔼 This table presents a quantitative comparison of the reconstruction performance of different Variational Autoencoders (VAEs) on two datasets: Toys4K and the Dora benchmark. For each dataset, the table shows the Chamfer Distance (CD) and F1-scores (at thresholds of 0.001 and 0.01). The results are broken down into two groups: performance on the entire dataset and performance only on the watertight subset of models (those without open surfaces). The ‘/’ symbol separates these two sets of results within each dataset’s columns. This allows for a direct comparison of how well each VAE handles both complete and incomplete (open-surface) 3D shapes.

read the caption

Table 1: Quantitative comparison for VAE reconstruction quality on the Toys4K dataset (left) and Dora benchmark (right). The ‘/’ symbol separates the results computed over the entire dataset from those obtained exclusively on the watertight subset.

In-depth insights#

SparseFlex Intro#

The paper introduces SparseFlex as a novel solution to address the challenges in creating high-fidelity 3D meshes with arbitrary topologies, including open surfaces and complex interiors. Existing implicit field methods often require costly, detail-degrading watertight conversion, while other approaches struggle with high resolutions. SparseFlex tackles these limitations with a sparse-structured isosurface representation, enabling differentiable mesh reconstruction at high resolutions directly from rendering losses. This representation combines the accuracy of Flexicubes with a sparse voxel structure, focusing computation on surface-adjacent regions and efficiently handling open surfaces. A key contribution is the frustum-aware sectional voxel training strategy, which activates only relevant voxels during rendering, dramatically reducing memory consumption and enabling high-resolution training. This enables the reconstruction of mesh interiors using only rendering supervision.

Frustum Voxel#

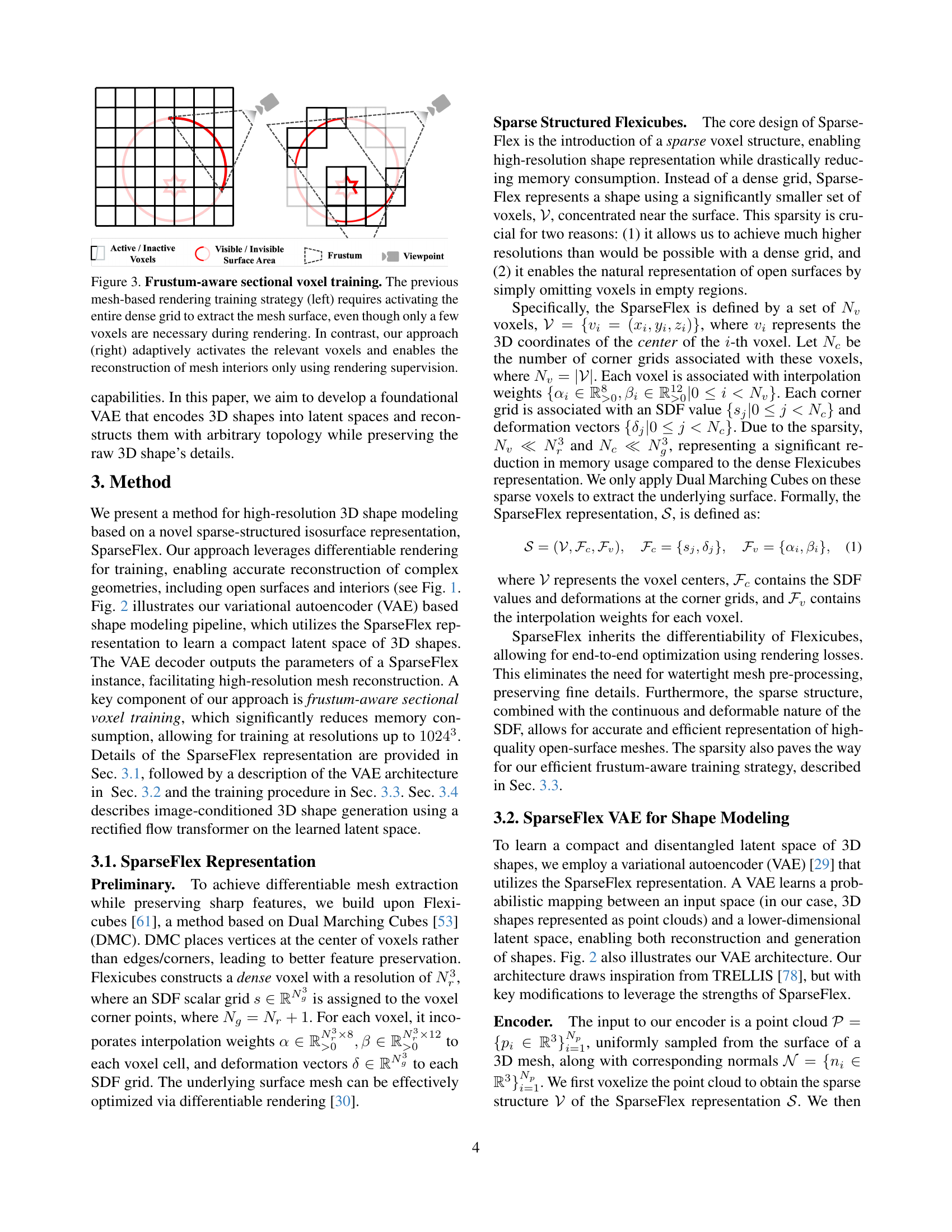

The frustum voxel approach represents a significant advancement in 3D scene processing. By focusing computation on the visible voxels within the camera’s frustum, it drastically reduces memory consumption, which is a major bottleneck in high-resolution 3D modeling. This selective activation allows for efficient rendering and manipulation of complex geometries. Furthermore, this technique enables the reconstruction of interior details by strategically positioning the camera. The adaptive nature of the frustum, adjusting its clipping planes based on voxel occupancy, further optimizes resource allocation. This results in a more efficient and scalable system for handling detailed 3D shapes.

VAE Pipeline#

VAE pipeline is used for high-resolution 3D shape modeling. The pipeline takes point clouds as input, voxelizes them, and uses a sparse transformer encoder-decoder to compress features. It employs a self-pruning upsampling module for higher resolution. The VAE is trained using rendering losses and frustum-aware sectional voxel training, improving efficiency by focusing on relevant voxels during training. This addresses limitations of implicit field methods by avoiding watertight conversion and enabling detail preservation. It achieves state-of-the-art reconstruction accuracy and generates high-resolution, detailed 3D shapes with arbitrary topology and open surfaces.

Open Surfaces#

Open surface modeling presents unique challenges in 3D geometry. Unlike closed, watertight meshes, open surfaces lack a defined interior, complicating tasks like inside/outside determination. Traditional methods often struggle, leading to artifacts or instabilities. The paper addresses this with SparseFlex, a novel approach designed to handle open surfaces efficiently. Unsigned Distance Fields (UDFs) are often used, but face inaccuracies in gradient estimation, hindering high-quality results. SparseFlex tackles these issues by focusing computation on surface-adjacent regions, crucial for defining open boundaries. The sparse voxel structure allows for efficient pruning of voxels near open boundaries, naturally representing these surfaces. By combining Flexicubes with this sparsity, SparseFlex achieves a more accurate and stable representation, a significant advancement for modeling complex, non-closed 3D shapes.

Image-to-3D#

Image-to-3D generation represents a significant leap in AI, bridging the gap between 2D visual understanding and 3D spatial reasoning. This field aims to create 3D models from single or multiple images, a task that requires overcoming challenges like inferring depth, handling occlusions, and generating consistent geometry and texture. Current approaches often combine deep learning techniques such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and diffusion models with neural rendering to produce high-quality 3D assets. The ability to generate 3D models from images has broad applications, including virtual reality, augmented reality, gaming, e-commerce, and robotics. Future research directions include improving the fidelity and realism of generated 3D models, reducing the computational cost of training and inference, and developing methods that can handle more complex and diverse input images, ultimately leading to more accessible and versatile 3D content creation.

More visual insights#

More on figures

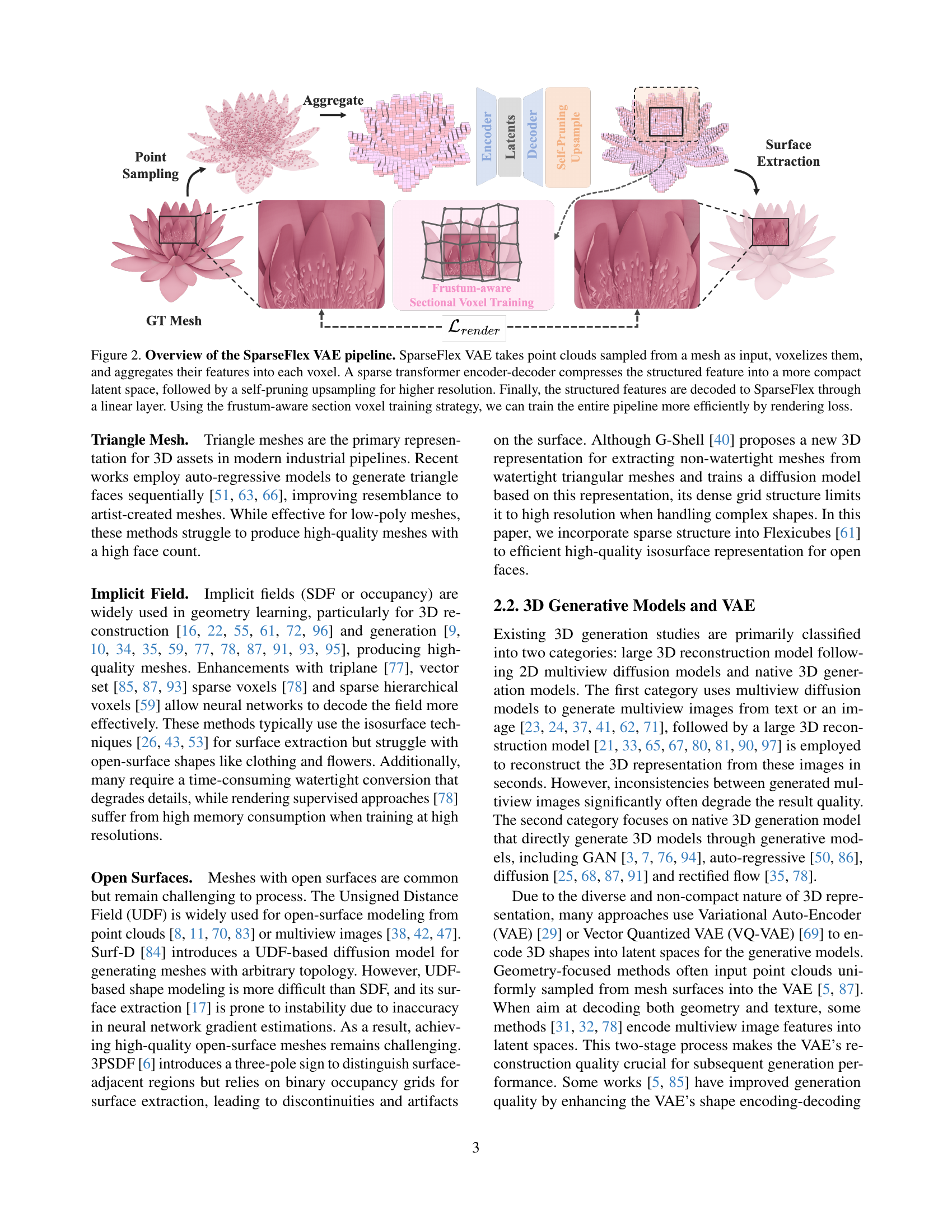

🔼 This figure illustrates the SparseFlex VAE pipeline. The process begins with point cloud data sampled from a 3D mesh. These points are voxelized, meaning they’re grouped into volumetric units (voxels), and their features are combined within each voxel. A sparse transformer network (encoder-decoder) then compresses these structured voxel features into a lower-dimensional latent space, which efficiently represents the 3D shape. The process then uses a self-pruning upsampling technique to increase the resolution of the representation. Finally, a linear layer decodes the latent space features back into the SparseFlex representation (a sparse collection of voxels representing the shape’s surface). Importantly, the entire pipeline uses a ‘frustum-aware sectional voxel training strategy,’ significantly increasing training efficiency by rendering losses (only calculating the loss for voxels currently visible from the camera viewpoint).

read the caption

Figure 2: Overview of the SparseFlex VAE pipeline. SparseFlex VAE takes point clouds sampled from a mesh as input, voxelizes them, and aggregates their features into each voxel. A sparse transformer encoder-decoder compresses the structured feature into a more compact latent space, followed by a self-pruning upsampling for higher resolution. Finally, the structured features are decoded to SparseFlex through a linear layer. Using the frustum-aware section voxel training strategy, we can train the entire pipeline more efficiently by rendering loss.

🔼 This figure illustrates the core concept of frustum-aware sectional voxel training. The left panel depicts the conventional method of mesh-based rendering, which necessitates activating every voxel in the dense grid to extract the mesh surface. This method is highly inefficient, especially when only a few voxels are essential for rendering. In contrast, the right panel demonstrates the proposed approach. This method selectively activates only the relevant voxels within the camera’s viewing frustum, resulting in significant computational and memory savings. Furthermore, this approach uniquely allows for the reconstruction of mesh interiors, using only rendering supervision, by strategically positioning the virtual camera. The figure highlights the superior efficiency and capabilities of the proposed method compared to the conventional dense grid approach.

read the caption

Figure 3: Frustum-aware sectional voxel training. The previous mesh-based rendering training strategy (left) requires activating the entire dense grid to extract the mesh surface, even though only a few voxels are necessary during rendering. In contrast, our approach (right) adaptively activates the relevant voxels and enables the reconstruction of mesh interiors only using rendering supervision.

🔼 Figure 4 presents a qualitative comparison of 3D shape reconstruction results from various state-of-the-art Variational Autoencoders (VAEs), including the proposed SparseFlex VAE. The figure showcases the superior performance of SparseFlex in handling complex geometries, open surfaces (shapes with incomplete boundaries), and even interior structures (reconstructing the insides of 3D objects). The comparison is visual, highlighting the detailed reconstruction capabilities of SparseFlex compared to other leading methods, demonstrating its ability to accurately capture fine details and complex topologies.

read the caption

Figure 4: Qualitative comparison of VAE reconstruction between ours and other state-of-the-art baselines. Our approach demonstrate superior performance in reconstructing complex shapes, open surfaces, and even interior structures.

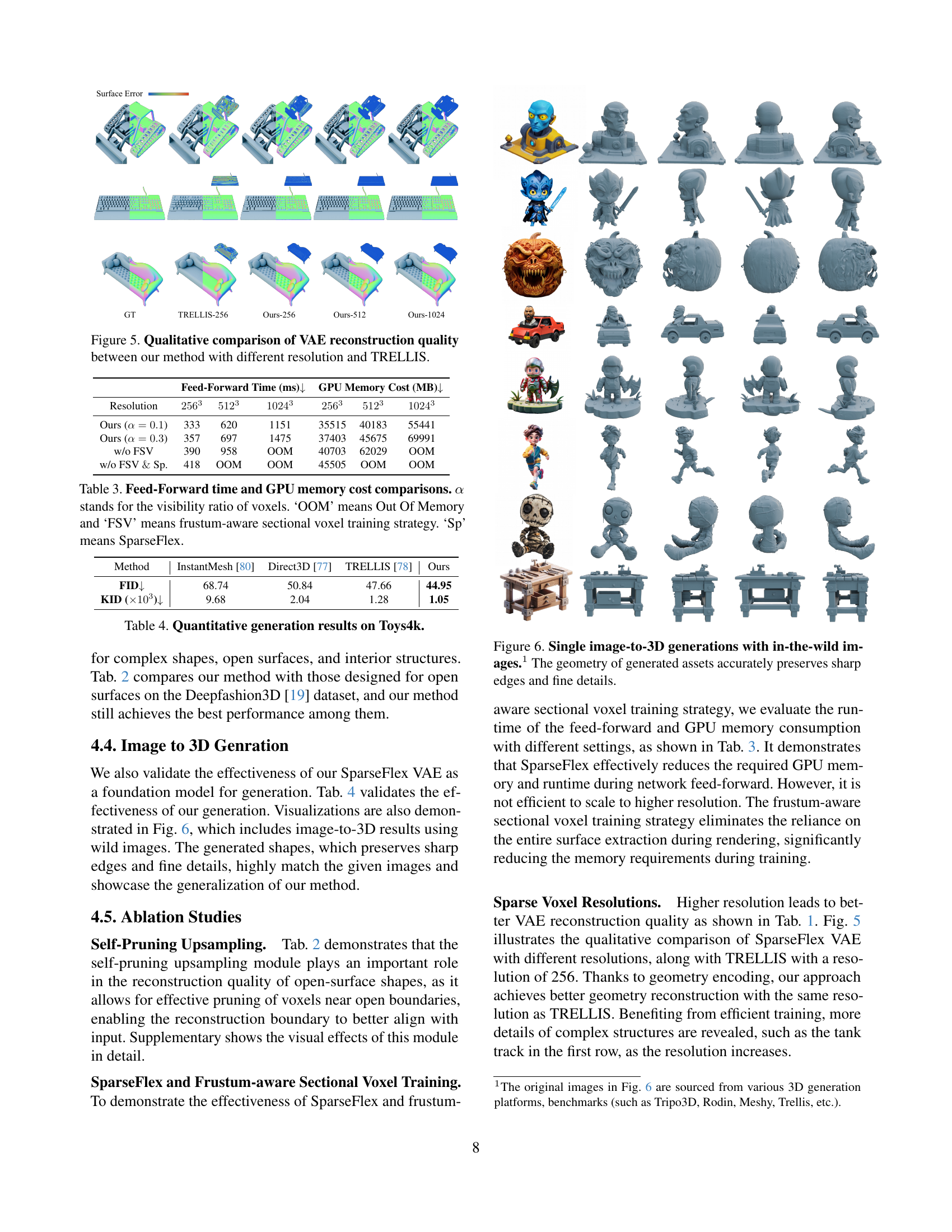

🔼 Figure 5 presents a qualitative comparison of 3D shape reconstruction results obtained using the SparseFlex VAE with different resolutions (256³, 512³, and 1024³) and the TRELLIS method. The figure visually showcases the impact of increasing resolution on the fidelity of reconstructed 3D shapes. By comparing the output of SparseFlex VAE at various resolutions to the TRELLIS results, the improvements in accuracy and detail preservation with higher resolutions are highlighted.

read the caption

Figure 5: Qualitative comparison of VAE reconstruction quality between our method with different resolution and TRELLIS.

More on tables

| Method | |||

|---|---|---|---|

| Surf-D [84] | 63.79 | 0.80 | 23.17 |

| 3PSDF∗ [6] | 0.26 | 8.14 | 99.35 |

| Ours | 0.55 | 6.35 | 94.88 |

| Ours256 | 0.08 | 18.60 | 99.99 |

| Ours | 0.18 | 11.31 | 99.93 |

| Ours512 | 0.05 | 31.60 | 100.00 |

| Ours | 0.05 | 24.80 | 100.00 |

| Ours1024 | 0.04 | 37.22 | 100.00 |

🔼 Table 2 presents a quantitative comparison of reconstruction performance on the DeepFashion3D dataset, focusing on open surfaces. It shows Chamfer Distance (CD) and F1-scores (at thresholds of 0.001 and 0.01) for different models, including variations of the proposed SparseFlex model with and without the self-pruning upsampling module. Lower CD values and higher F1-scores indicate better reconstruction accuracy.

read the caption

Table 2: Reconstruction results on open-surface dataset Deepfashion3D. † indicates the absence of the self-pruning upsampling module.

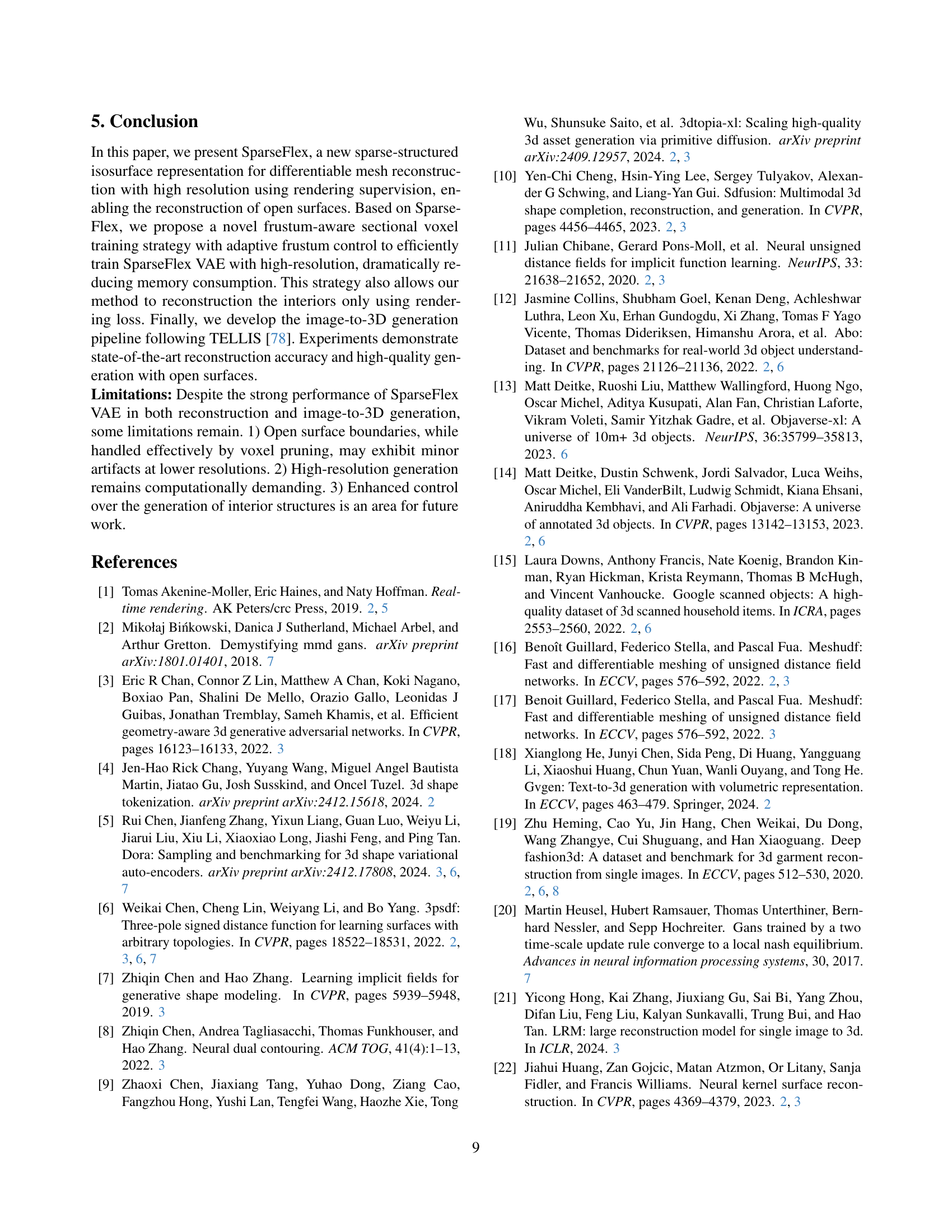

| Feed-Forward Time (ms) | GPU Memory Cost (MB) | |||||

|---|---|---|---|---|---|---|

| Resolution | ||||||

| Ours () | 333 | 620 | 1151 | 35515 | 40183 | 55441 |

| Ours () | 357 | 697 | 1475 | 37403 | 45675 | 69991 |

| w/o FSV | 390 | 958 | OOM | 40703 | 62029 | OOM |

| w/o FSV Sp. | 418 | OOM | OOM | 45505 | OOM | OOM |

🔼 This table compares the feed-forward time and GPU memory consumption of the SparseFlex VAE model with different settings. It shows the impact of the visibility ratio (α), which controls the fraction of voxels processed during each training iteration, and the effects of using the frustum-aware sectional voxel training strategy (FSV) and the SparseFlex representation (Sp). The table helps demonstrate the efficiency gains achieved by SparseFlex and the FSV strategy, especially at higher resolutions where traditional methods often run out of memory (OOM).

read the caption

Table 3: Feed-Forward time and GPU memory cost comparisons. α𝛼\alphaitalic_α stands for the visibility ratio of voxels. ‘OOM’ means Out Of Memory and ‘FSV’ means frustum-aware sectional voxel training strategy. ‘Sp’ means SparseFlex.

🔼 This table presents a quantitative comparison of the image-to-3D generation performance of different methods on the Toys4k dataset. The metrics used are the Fréchet Inception Distance (FID) and Kernel Inception Distance (KID), which are common measures for assessing the quality of generated images. Lower FID and KID scores indicate better generation quality, reflecting a closer match between the generated images and real images.

read the caption

Table 4: Quantitative generation results on Toys4k.

Full paper#