TL;DR#

Large language models (LLMs) have shown promise in various AI tasks, but they often struggle with reliable planning. Even with advanced prompting or fine-tuning, LLMs tend to produce incorrect plans and fail to generalize to larger tasks. Existing approaches often rely on manually crafted or learned heuristics for specific domains, incurring significant human effort or computational cost whenever new domains are introduced.

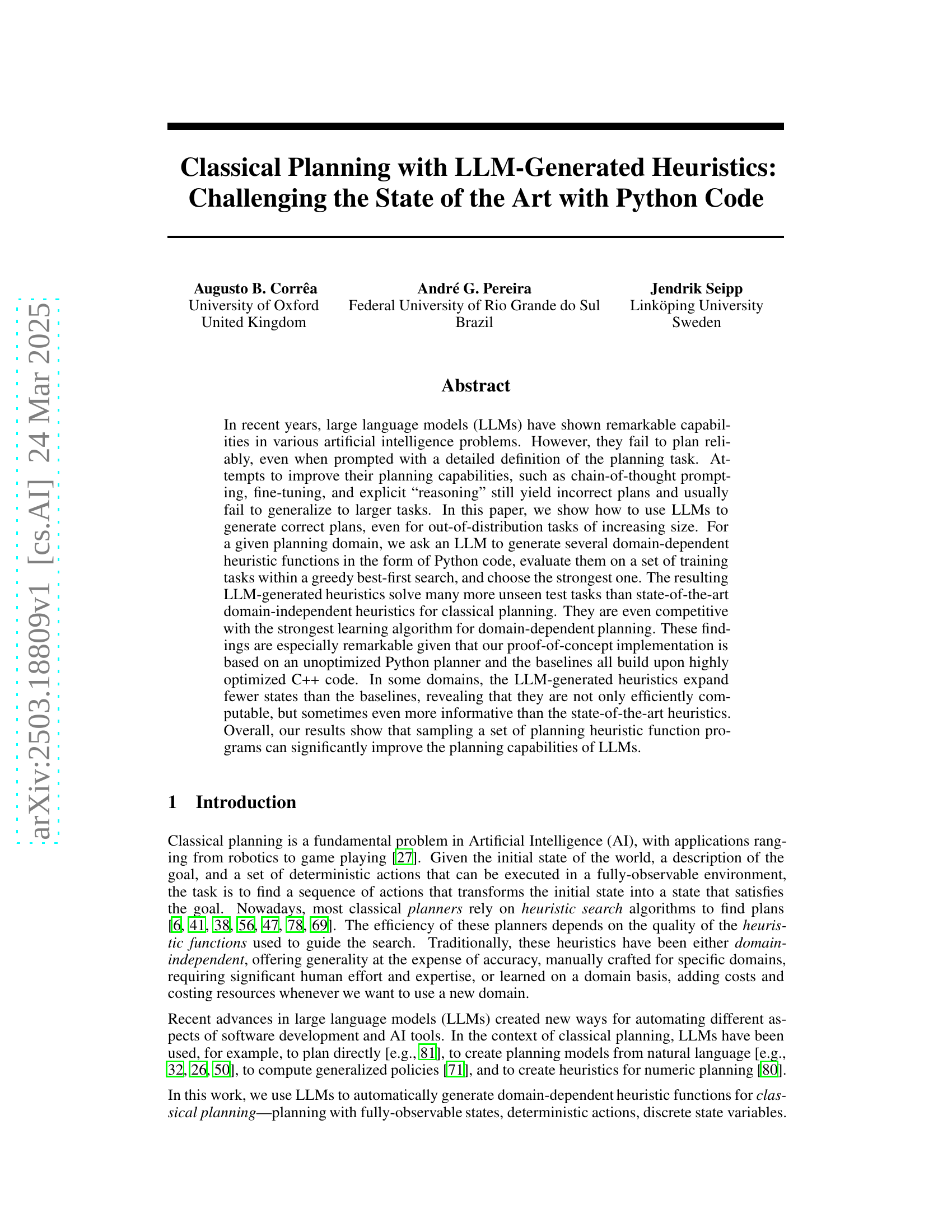

This paper introduces a novel approach: using LLMs to automatically generate domain-dependent heuristic functions in Python. This method involves prompting the LLM to generate multiple candidate heuristics, evaluating them on a training set, and selecting the strongest one. This process yielded heuristics that outperformed state-of-the-art domain-independent heuristics and were competitive with learning algorithms. The results showed LLM-generated heuristics sometimes expand fewer states than existing heuristics.

Key Takeaways#

Why does it matter?#

This work offers a new perspective on leveraging LLMs for automated planning, potentially shifting the focus from direct planning to heuristic generation. By demonstrating the effectiveness of LLM-generated heuristics, it opens new avenues for integrating LLMs into existing planning systems and improving their efficiency and scalability. It also highlights the importance of task-dependent heuristics.

Visual Insights#

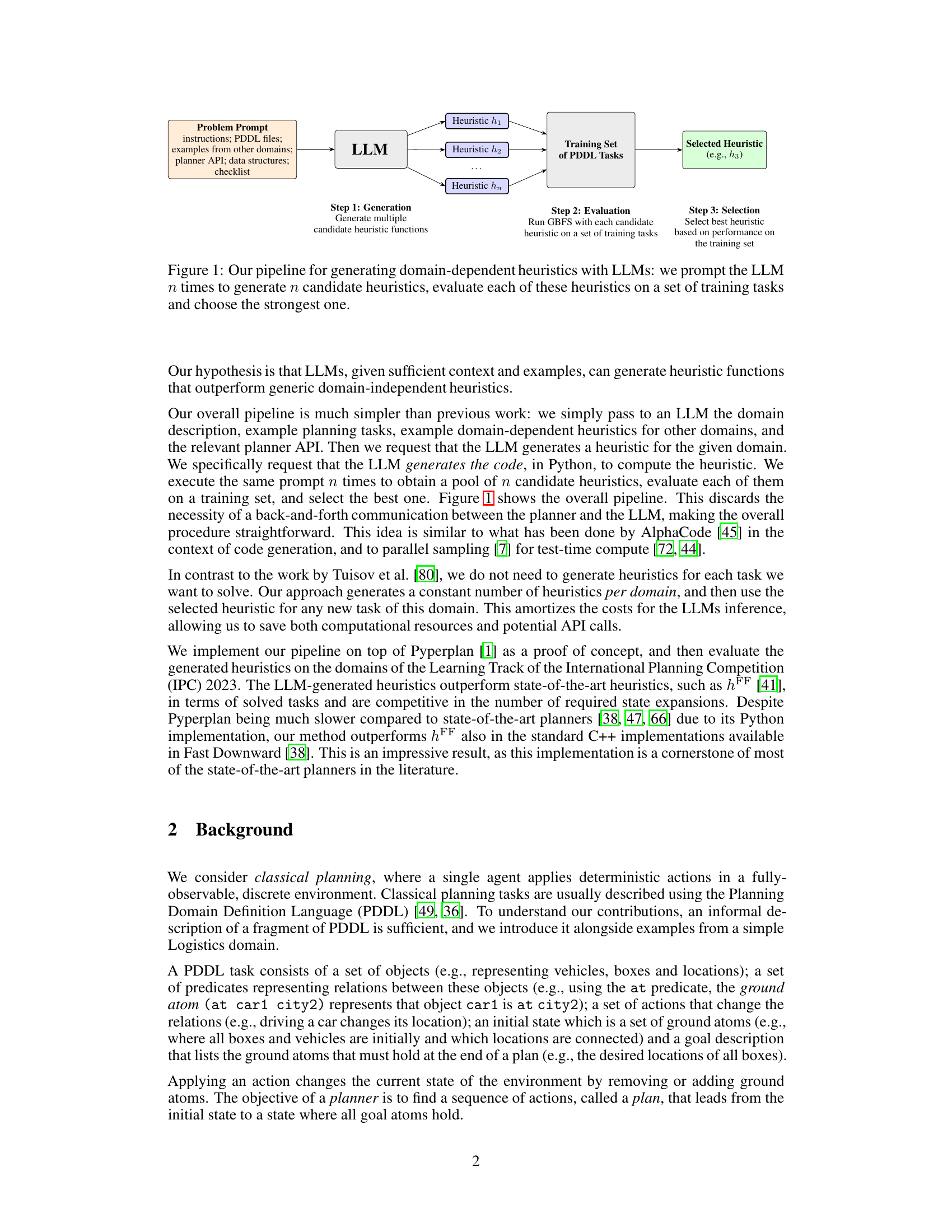

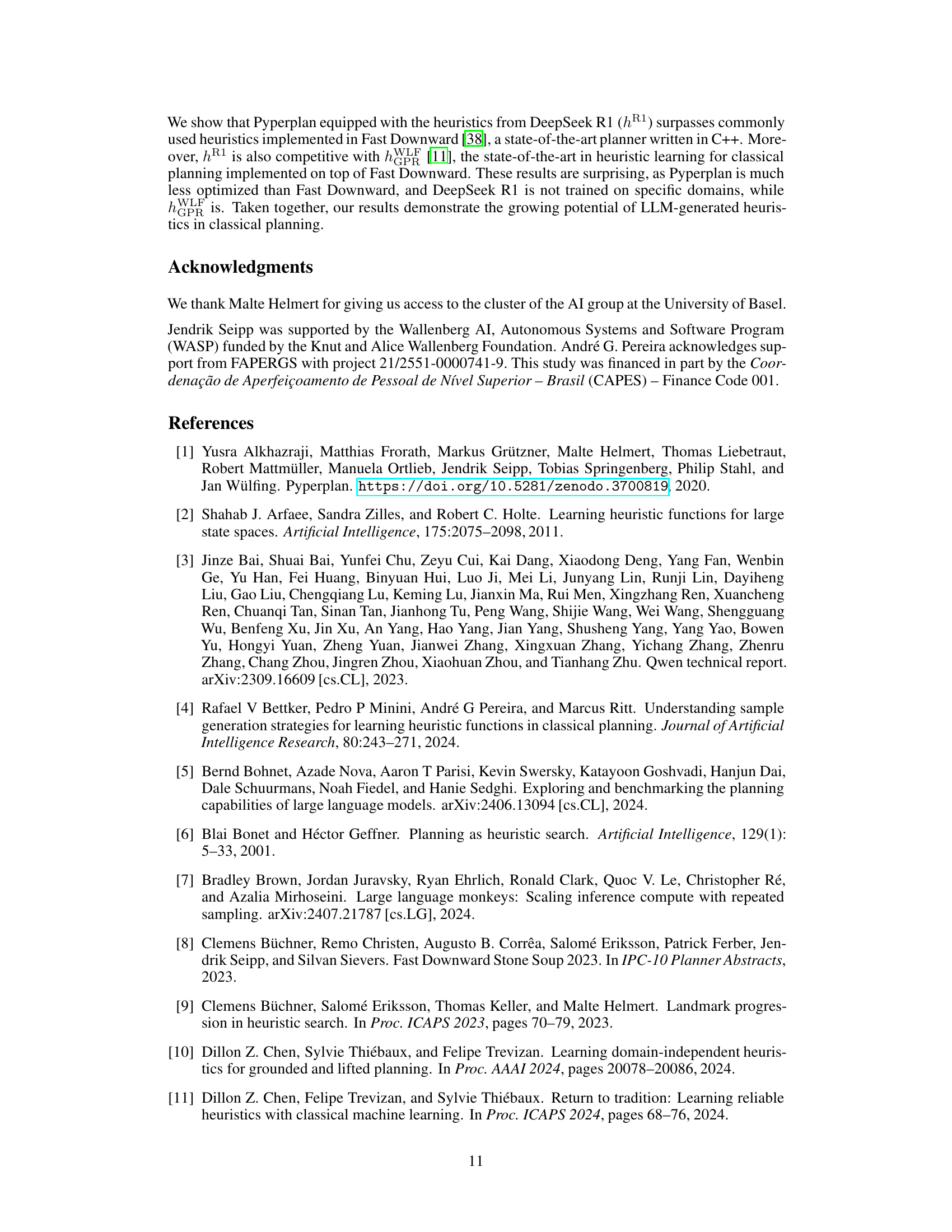

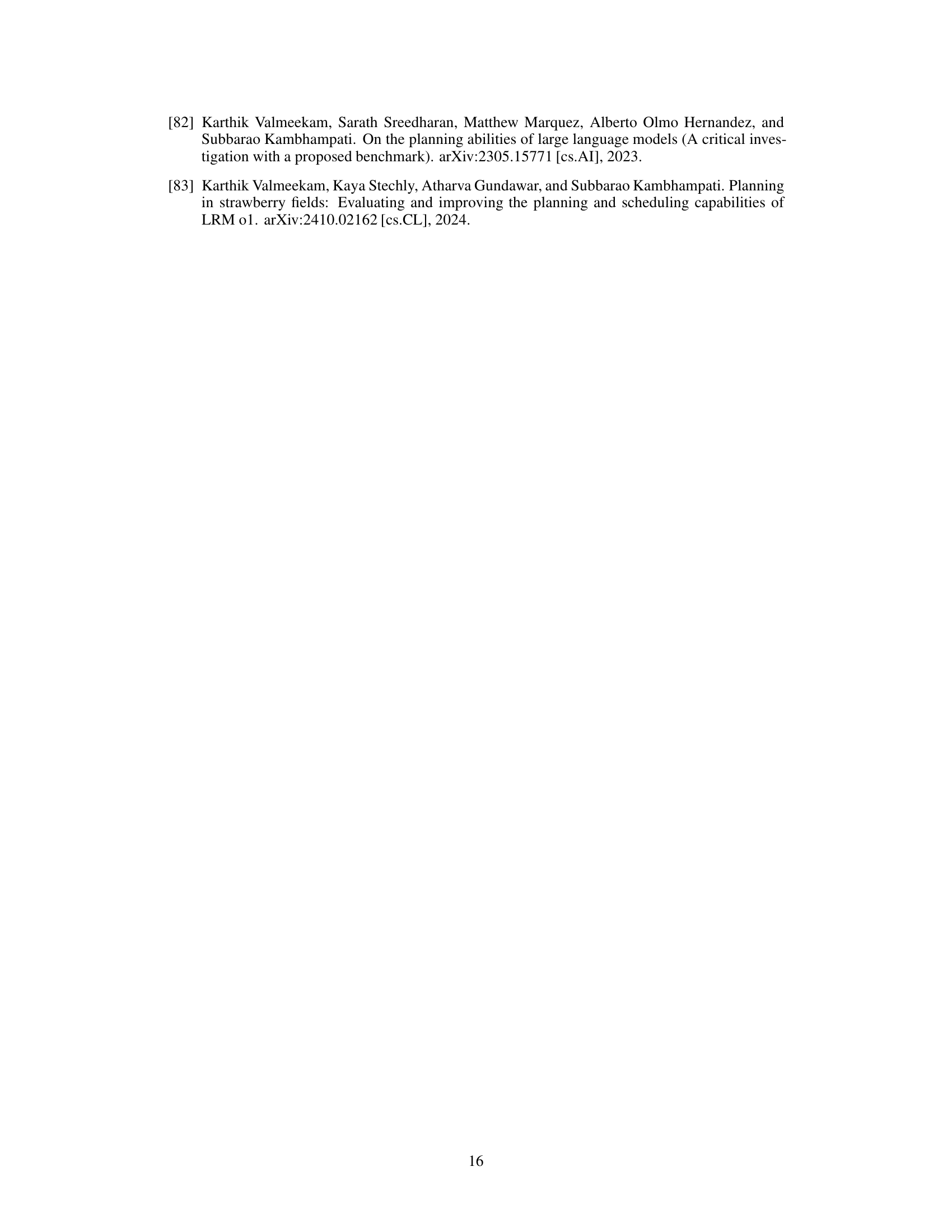

| Gemini 2.0 | DeepSeek | ||||

|---|---|---|---|---|---|

| Model | Flash | Flash Think. | V3 | R1 Dist. | R1 |

| Estimated Cost (USD) | $0.70 | – | $0.25 | – | $6.12 |

| Cost per Heuristic (USD) | $0.00350 | – | $0.00125 | – | $0.03060 |

| Cost per Domain (USD) | $0.08750 | – | $0.03125 | – | $0.76500 |

| Failure Rate (% heuristics) | 22.0% | 12.5% | 14.0% | 64.5% | 8.5% |

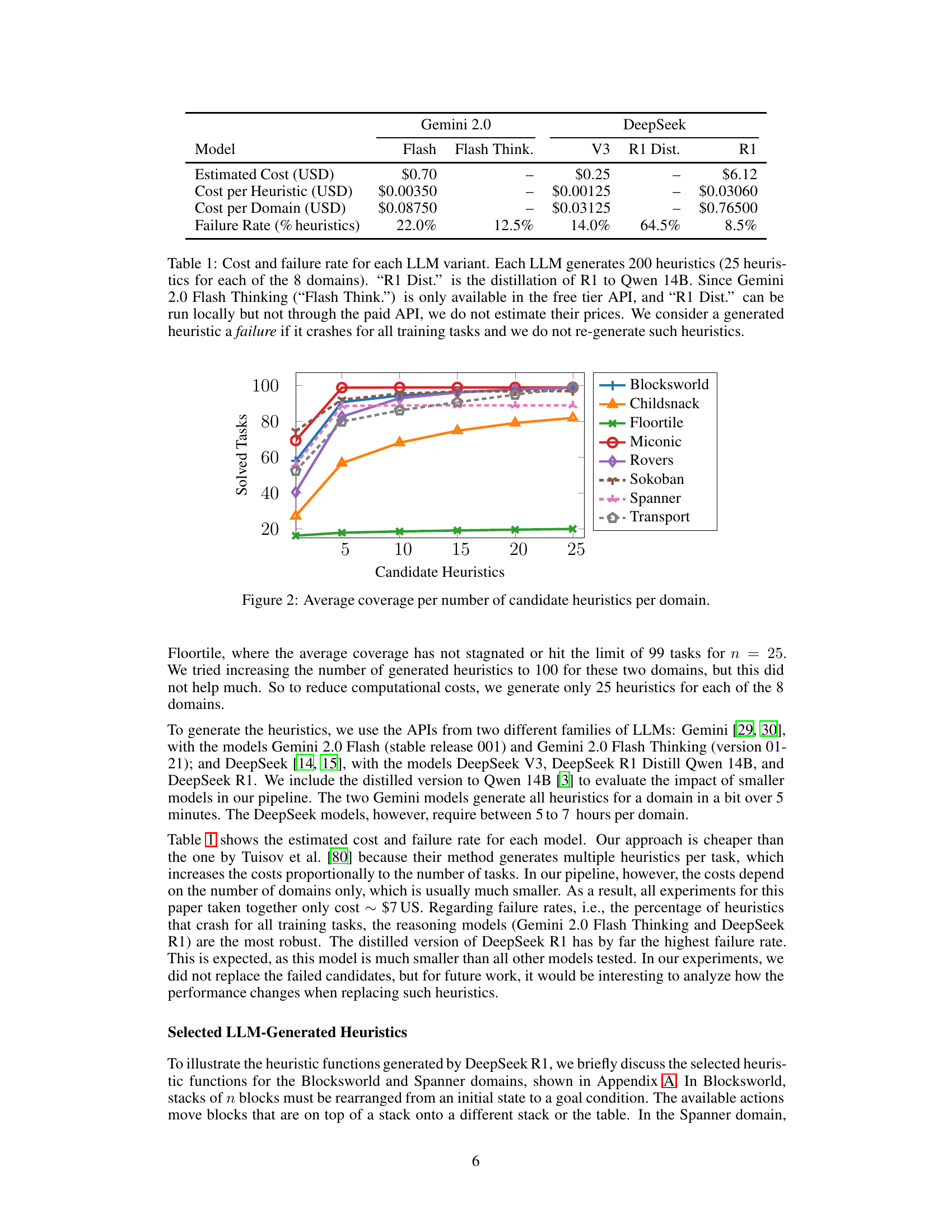

🔼 This table presents a cost-benefit analysis of using different Large Language Models (LLMs) to generate heuristics for classical planning. It shows the estimated cost (in USD) of generating 200 heuristics using each LLM (25 heuristics per domain, across 8 domains). The failure rate, representing the percentage of generated heuristics that failed to function correctly across all training tasks, is also reported. Note that pricing for Gemini 2.0 Flash Thinking and the distilled R1 model (R1 Dist.) is not provided due to their availability only through free tiers or local execution, respectively.

read the caption

Table 1: Cost and failure rate for each LLM variant. Each LLM generates 200 heuristics (25 heuristics for each of the 8 domains). “R1 Dist.” is the distillation of R1 to Qwen 14B. Since Gemini 2.0 Flash Thinking (“Flash Think.”) is only available in the free tier API, and “R1 Dist.” can be run locally but not through the paid API, we do not estimate their prices. We consider a generated heuristic a failure if it crashes for all training tasks and we do not re-generate such heuristics.

In-depth insights#

LLM Heuristic++#

While “LLM Heuristic++” isn’t a specific heading in the document, we can infer its meaning based on the paper’s content. It likely refers to using Large Language Models (LLMs) to generate heuristics for classical planning problems, going beyond existing LLM-based heuristic generation techniques. The ‘++’ suggests an enhancement or improvement. A LLM could automatically produce domain-dependent heuristic functions by sampling various candidate functions and selecting the best one, improving performance in unseen tasks. This could involve better prompt engineering, more efficient search algorithms, or even LLMs generating code for heuristic functions that are both accurate and computationally efficient. This approach challenges traditional domain-independent heuristics and potentially outperforms them. Furthermore, it hints at a future where LLMs can play a more significant role in solving complex AI problems by generating high-quality, domain-specific knowledge.

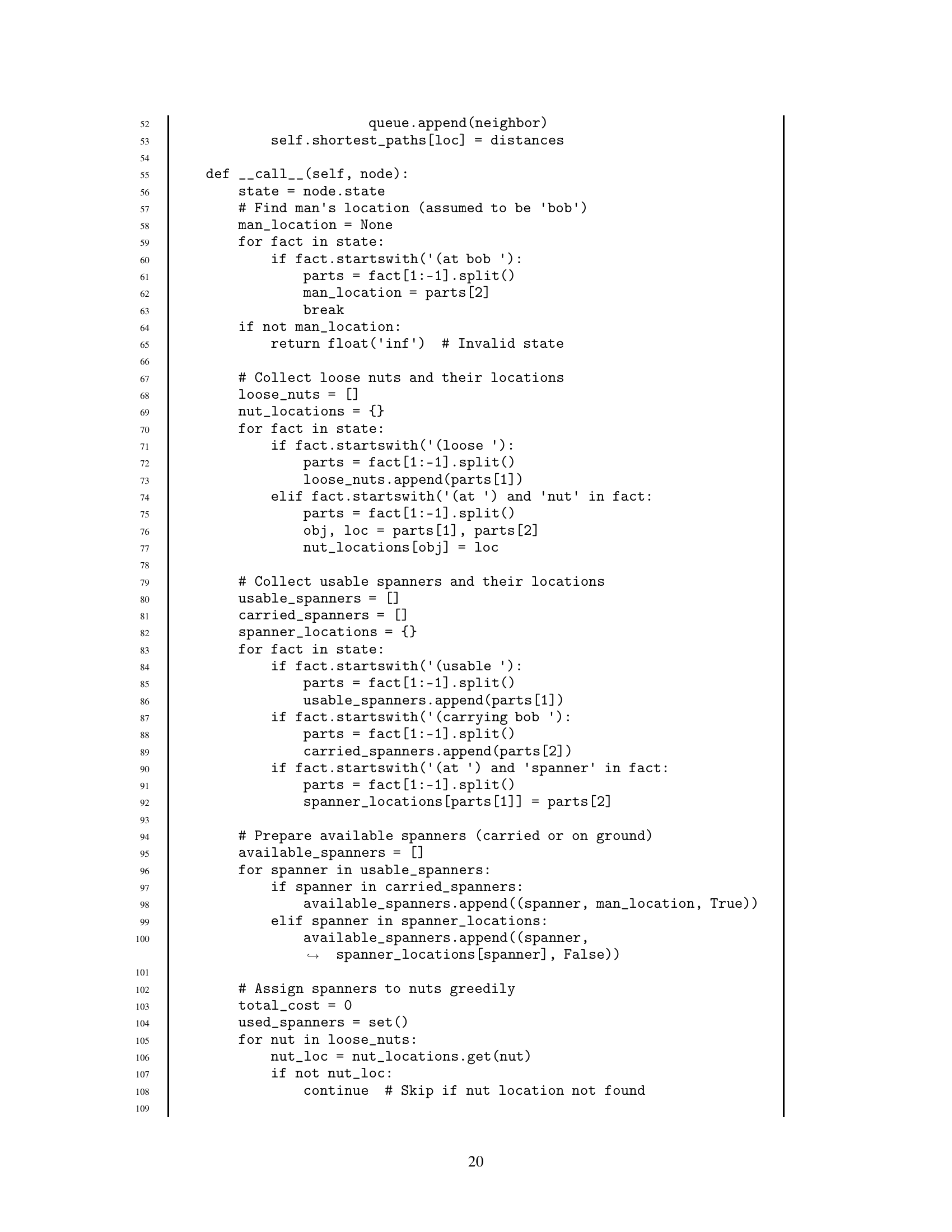

Python’s Edge#

Python’s edge in AI, particularly in planning, stems from its accessibility and rapid prototyping capabilities. The research paper cleverly leverages this by using Python-based Pyperplan, despite its performance limitations compared to C++ planners. This allows for easier integration with LLMs, as demonstrated by code injection. The unoptimized nature of Pyperplan emphasizes the strength of LLM-generated heuristics. LLMs demonstrate a proficiency in Python code generation, surpassing their capabilities in other languages. This facilitates code manipulation, making Python the ideal platform. The LLM’s capacity to generate effective Python code for heuristic functions allows for a modular approach to classical planning. This approach is competitive and can generate new powerful tools. Python acts as a bridge between high-level AI reasoning and concrete planning implementations.

Beyond HFF#

Beyond hFF signifies exploring heuristic functions for classical planning that surpass the limitations of the hFF heuristic. The hFF heuristic, while widely used, often suffers from inaccuracies due to its reliance on a relaxed plan. Consequently, future research should focus on alternative approaches that can provide more accurate and informative estimates of the distance to the goal. This includes incorporating domain knowledge through machine learning or other techniques to learn heuristics tailored to specific problem characteristics. Efforts should be directed towards creating heuristics that more effectively navigate the search space, leading to faster plan generation and improved overall performance. Such approaches might involve learning intricate relationships between state variables, extracting relevant features, and combining multiple heuristic estimates. By moving beyond hFF, the potential exists to develop planning systems capable of solving more complex problems with greater efficiency.

Scalable Plans#

While the paper does not explicitly discuss ‘Scalable Plans’, we can infer relevant insights from its focus on LLM-generated heuristics for classical planning. The success of LLMs in generating heuristics that outperform traditional methods suggests a pathway to addressing the scalability challenges inherent in classical planning. The LLM-generated heuristics allow plans to scale better as they capture domain-specific knowledge, enabling more efficient search. Traditional domain-independent heuristics often suffer in larger problem instances due to their lack of awareness of the specific problem structure. The LLMs, by being exposed to domain descriptions and examples, create heuristics that better guide the search, potentially leading to plans that scale more effectively. Future research is to explore how LLMs can be used to create hierarchical planning strategies or automatically decompose large problems into smaller, more manageable subproblems to generate scalable plans.

Prompt Strength#

The efficacy of a prompt in eliciting desired responses from Large Language Models (LLMs) hinges on several factors. A strong prompt provides clear instructions, a well-defined context, and relevant examples to guide the LLM’s generation process. It effectively communicates the task’s objective and constraints, leaving minimal room for ambiguity. Moreover, it can incorporate elements like chain-of-thought reasoning or step-by-step guidance to encourage more structured and logical outputs. The strength of a prompt is also related to how well it aligns with the LLM’s pre-training data and the degree to which it leverages the model’s inherent capabilities. A carefully crafted prompt can significantly enhance the quality, accuracy, and coherence of the generated content, enabling LLMs to tackle complex tasks with improved performance and reliability. Finding the balance between being overly prescriptive, which may stifle creativity, and too vague, which would lead to irrelevant responses, is crucial in building the best prompt. Furthermore, prompt engineering techniques such as iteratively refining and testing different prompt variations can help to identify the optimal prompt for a given task. A strong prompt can effectively unlock the full potential of LLMs, resulting in more valuable and insightful outcomes.

More visual insights#

More on tables

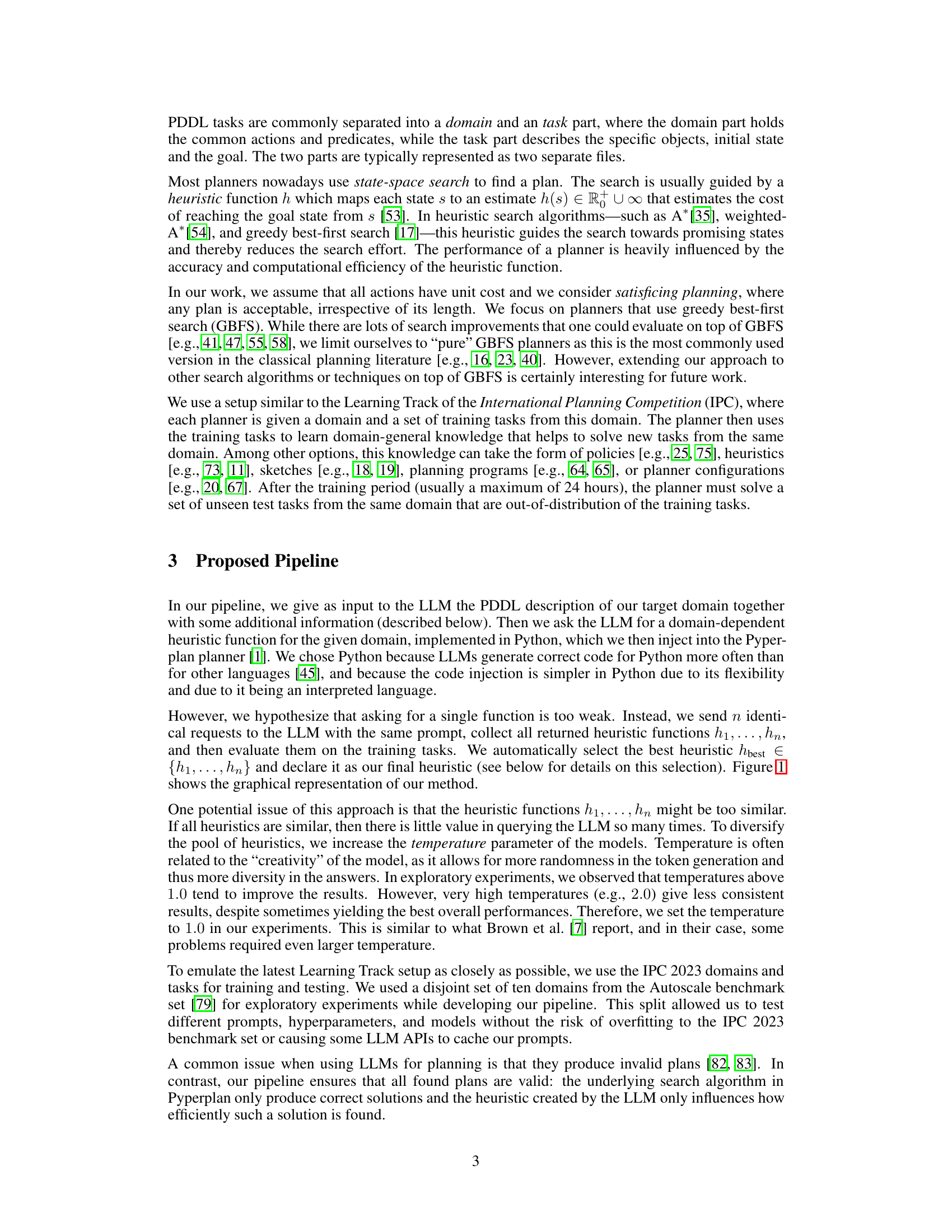

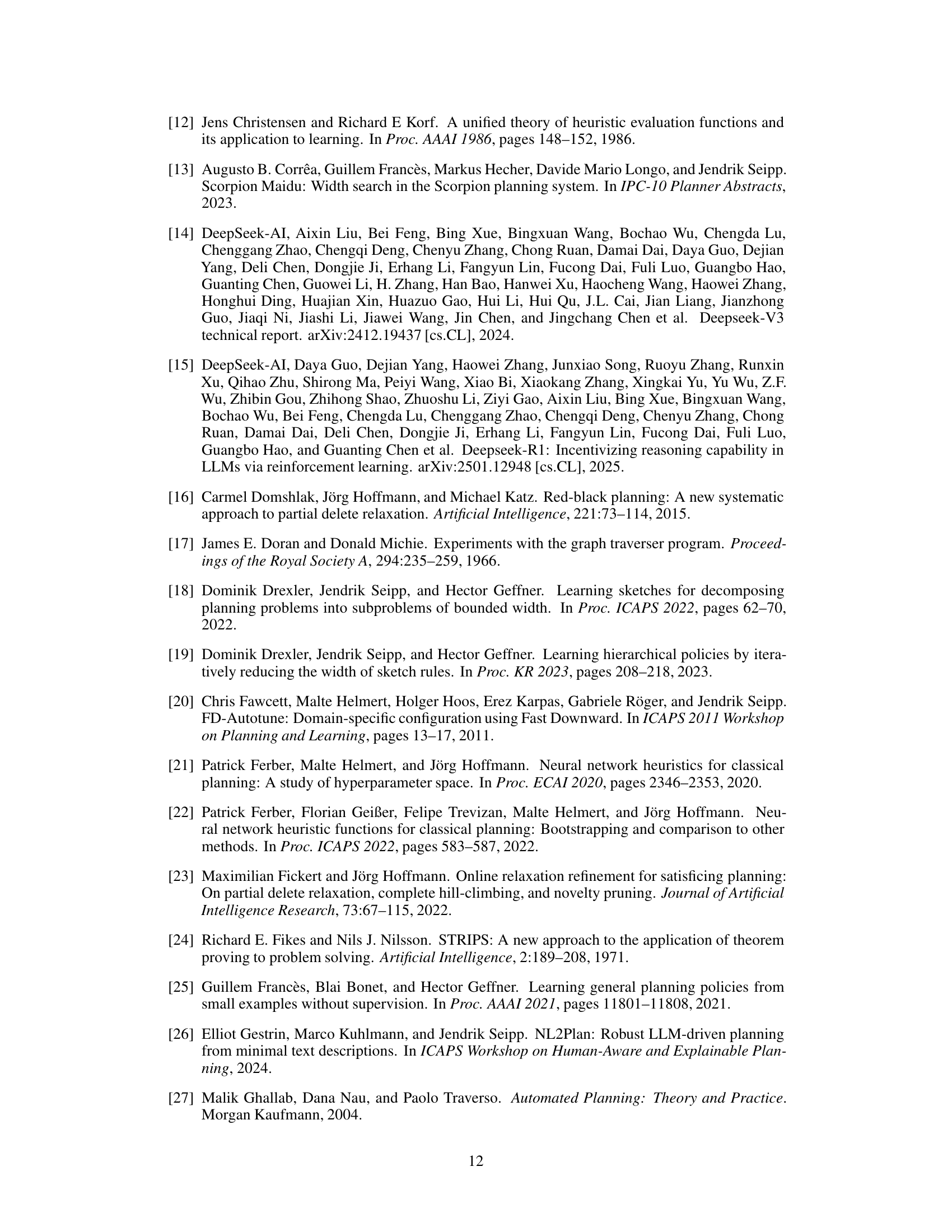

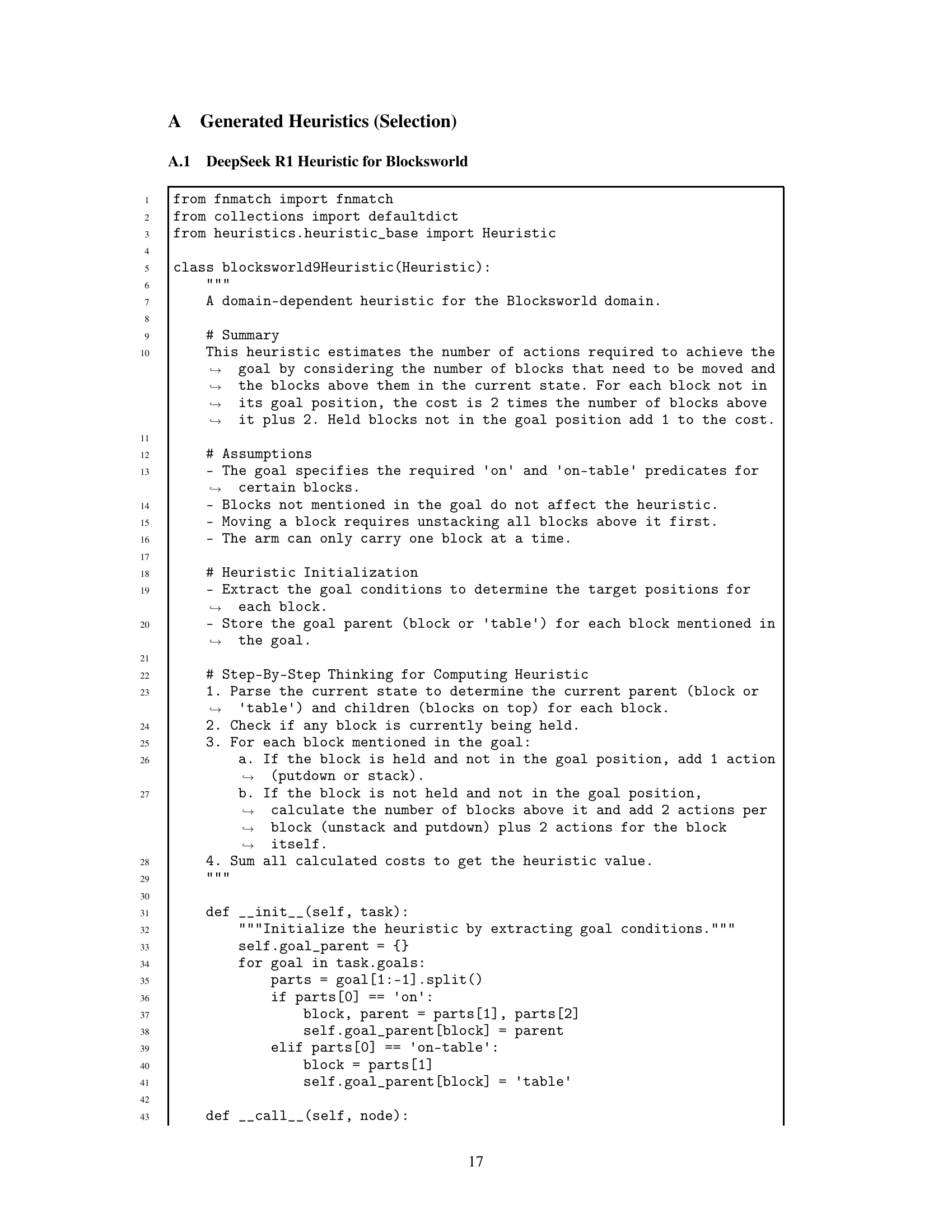

| Gemini 2.0 | DeepSeek | ||||||

|---|---|---|---|---|---|---|---|

| Domain | Flash | Flash Think. | V3 | R1 Dist. | R1 | ||

| Blocksworld (90) | 6 | 24 | 35 | 37 | 37 | 34 | 66 |

| Childsnack (90) | 9 | 17 | 32 | 14 | 32 | 16 | 22 |

| Floortile (90) | 1 | 10 | 4 | 8 | 4 | 3 | 4 |

| Miconic (90) | 30 | 74 | 90 | 88 | 74 | 30 | 90 |

| Rovers (90) | 12 | 28 | 32 | 39 | 32 | 32 | 32 |

| Sokoban (90) | 24 | 31 | 31 | 32 | 30 | 24 | 30 |

| Spanner (90) | 30 | 30 | 30 | 30 | 30 | 30 | 70 |

| Transport (90) | 8 | 29 | 42 | 57 | 44 | 45 | 59 |

| Sum (720) | 120 | 243 | 296 | 305 | 283 | 214 | 373 |

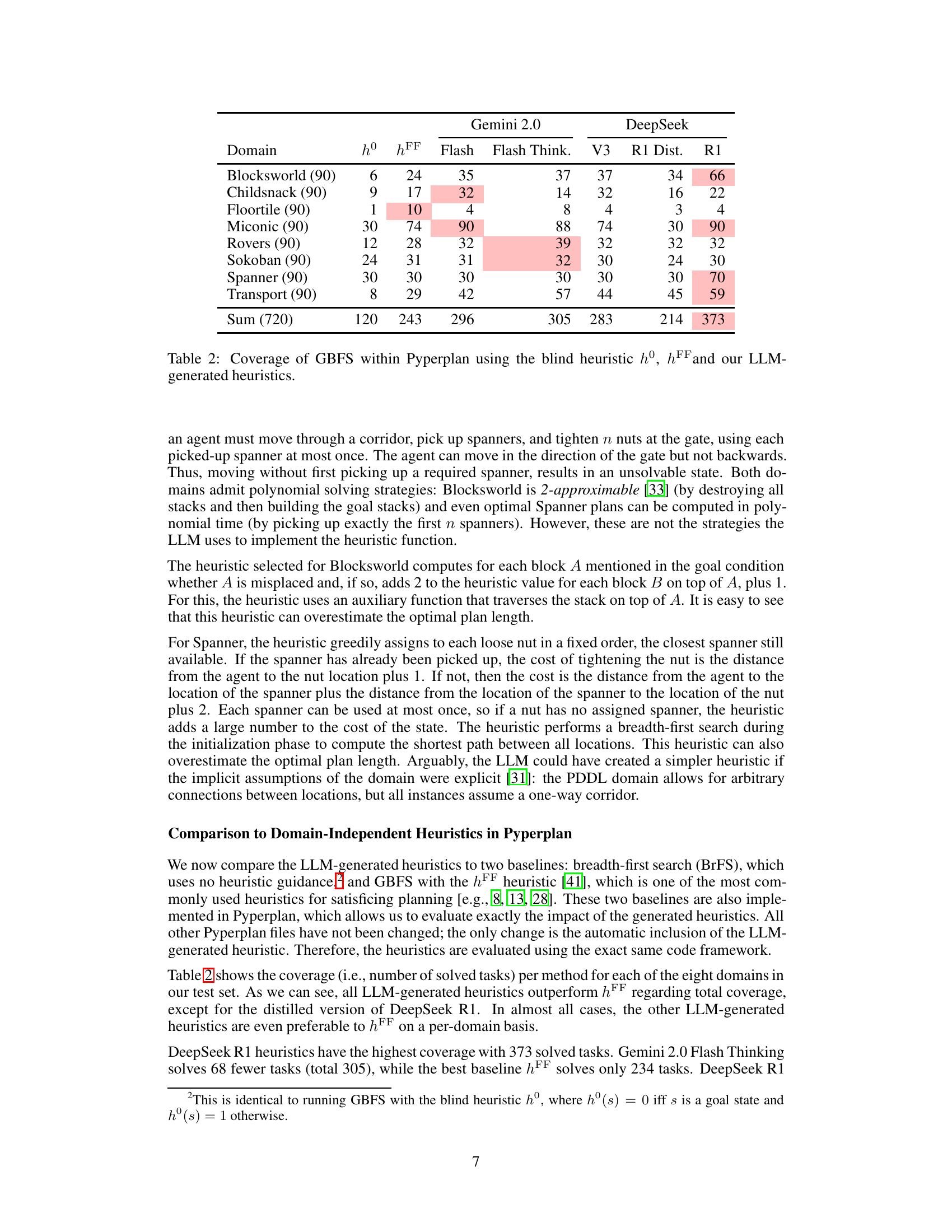

🔼 This table presents the performance comparison of different heuristic functions used within the Greedy Best-First Search (GBFS) algorithm implemented in the Pyperplan planner. It shows the number of tasks successfully solved (coverage) by three methods across eight different planning domains. The methods compared are: (1) a blind heuristic (h0), which doesn’t use any heuristic information; (2) the hFF heuristic, a commonly used domain-independent heuristic; and (3) several LLM-generated heuristics (one for each of eight planning domains). The results indicate how effectively the LLM-generated domain-dependent heuristics improve the planner’s ability to solve tasks compared to the baseline methods.

read the caption

Table 2: Coverage of GBFS within Pyperplan using the blind heuristic h0superscriptℎ0h^{0}italic_h start_POSTSUPERSCRIPT 0 end_POSTSUPERSCRIPT, hFFsuperscriptℎFFh^{\mathrm{FF}}italic_h start_POSTSUPERSCRIPT roman_FF end_POSTSUPERSCRIPTand our LLM-generated heuristics.

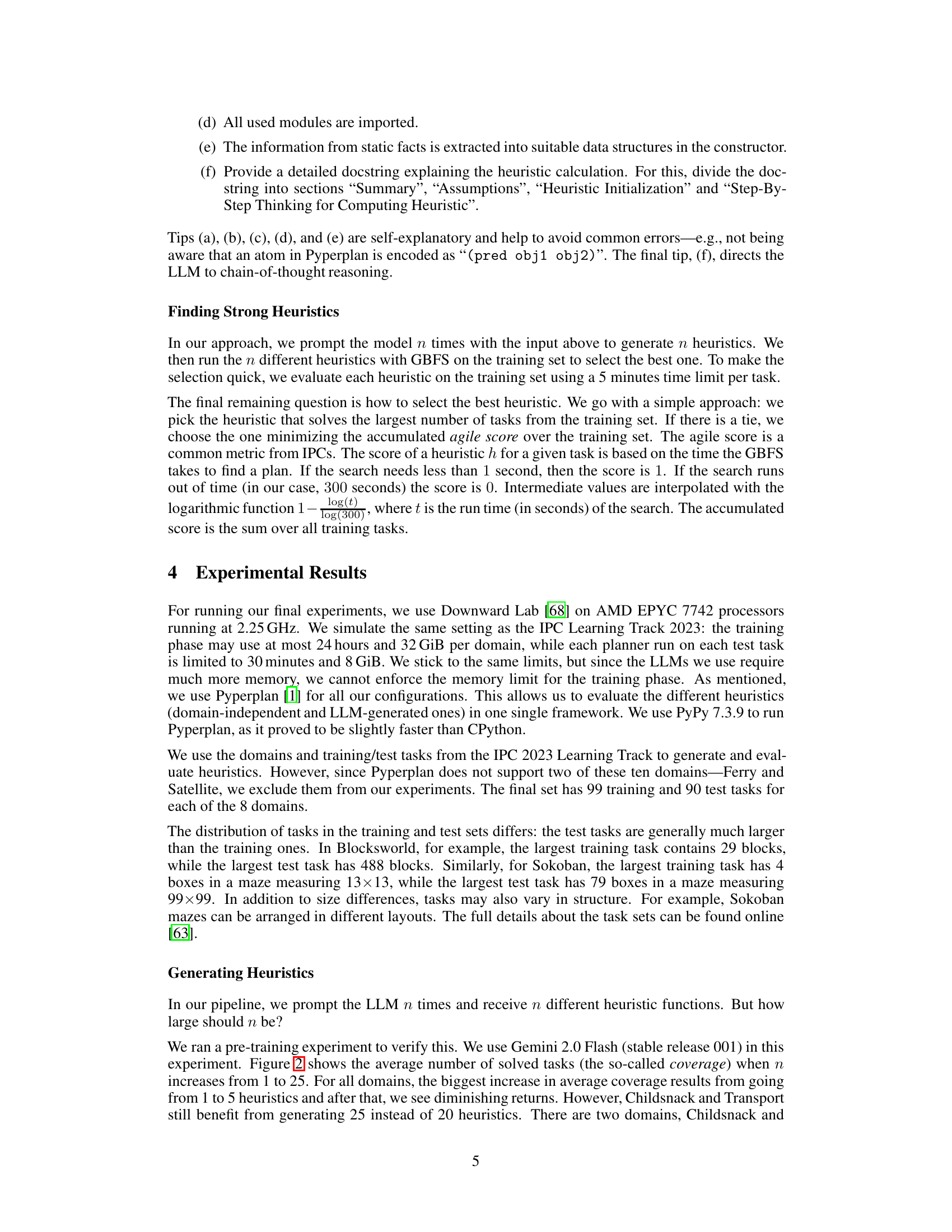

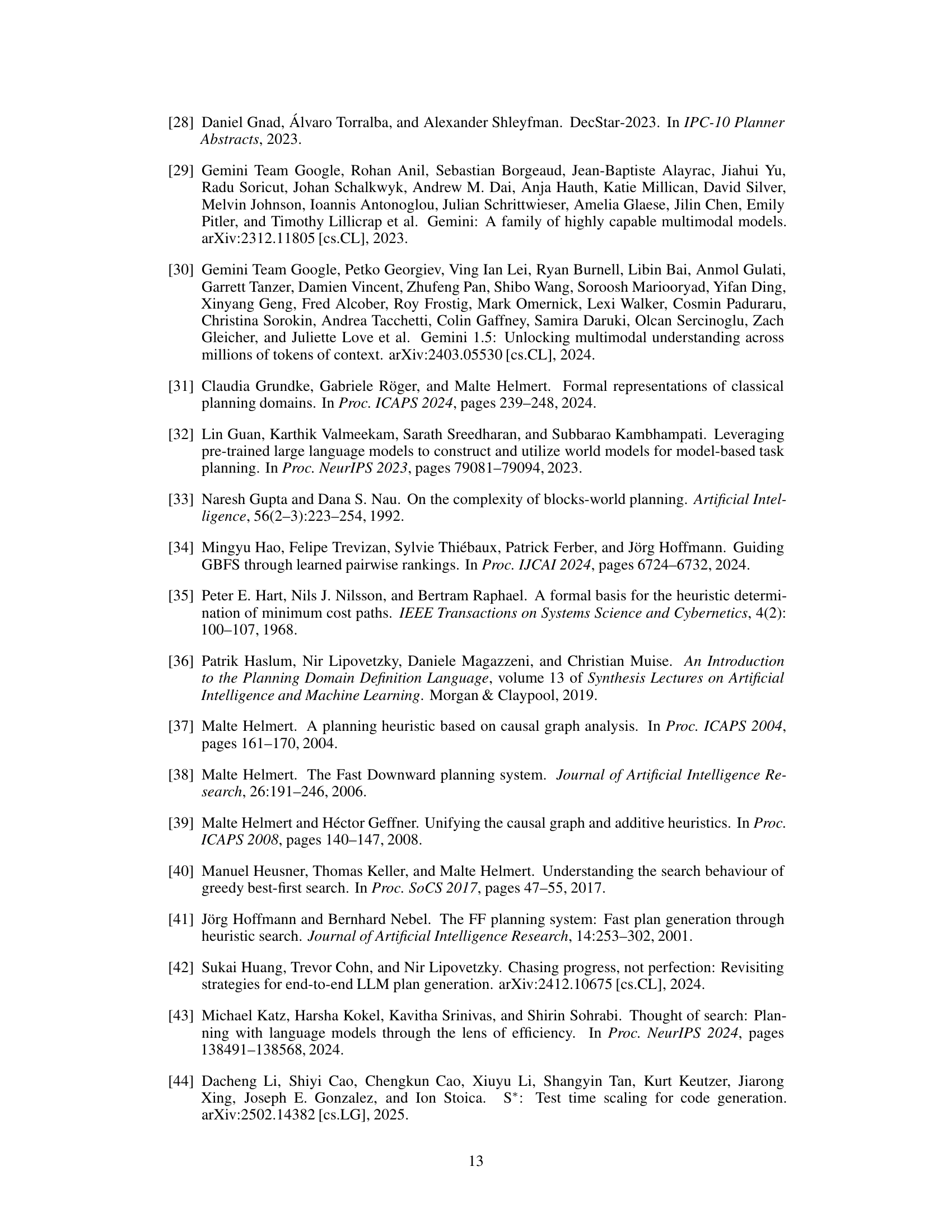

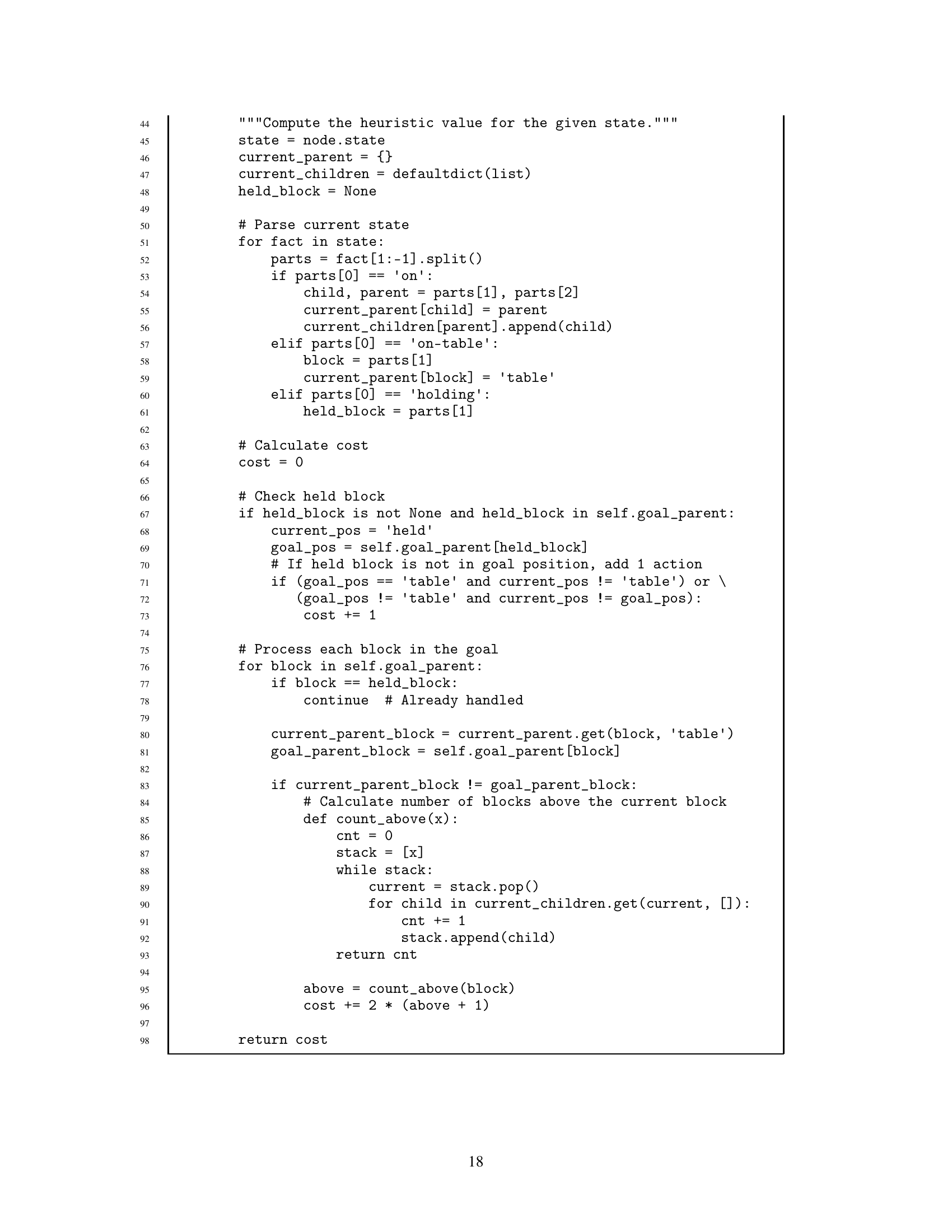

| Fast Downward (C++) | Pyperplan | ||||||||

| Domain | |||||||||

| Blocksworld (90) | 32 | 39 | 27 | 40 | 34 | 44 | 72 | 24 | 66 |

| Childsnack (90) | 23 | 13 | 25 | 29 | 29 | 29 | 31 | 17 | 22 |

| Floortile (90) | 3 | 3 | 12 | 10 | 7 | 14 | 2 | 10 | 4 |

| Miconic (90) | 90 | 90 | 90 | 79 | 90 | 90 | 90 | 74 | 90 |

| Rovers (90) | 38 | 41 | 34 | 36 | 39 | 33 | 37 | 28 | 32 |

| Sokoban (90) | 42 | 43 | 36 | 33 | 35 | 33 | 38 | 31 | 30 |

| Spanner (90) | 30 | 30 | 30 | 30 | 30 | 30 | 73 | 30 | 70 |

| Transport (90) | 36 | 36 | 41 | 49 | 54 | 51 | 28 | 29 | 59 |

| Sum (720) | 294 | 295 | 295 | 306 | 318 | 324 | 371 | 243 | 373 |

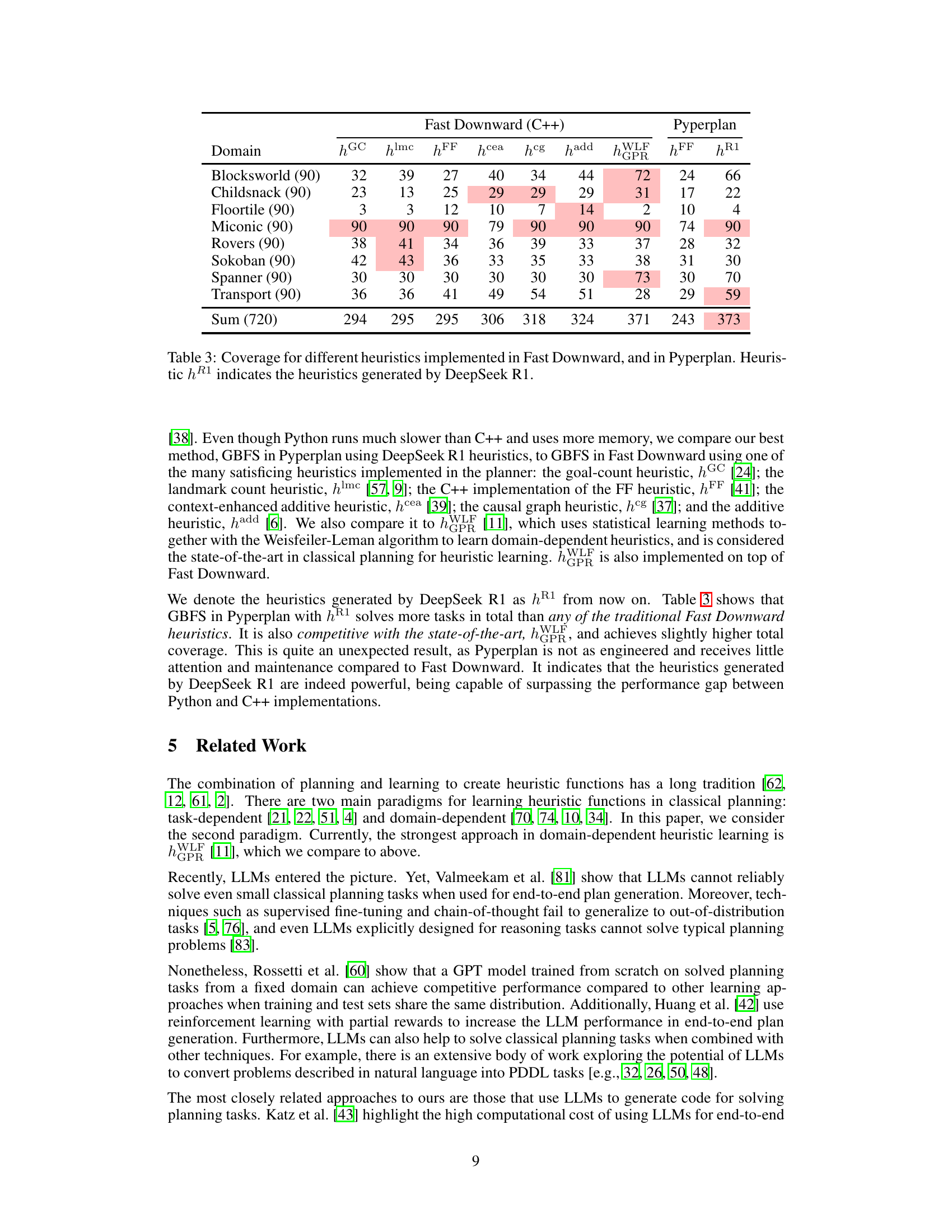

🔼 Table 3 presents a comparison of the performance of various heuristic functions in two different planning systems: Fast Downward and Pyperplan. Fast Downward is a highly optimized C++ planner, while Pyperplan is a Python-based planner, less optimized but easier to use for experimentation. The table shows the number of successfully solved tasks (coverage) out of a total of 720 tasks for each heuristic across different planning domains. Heuristics used include several state-of-the-art domain-independent heuristics such as hGC, hlmc, hFF, hcea, hcg, and hadd, as well as a domain-independent learned heuristic, hWLF. The table also includes the performance of the heuristics generated by the DeepSeek R1 large language model (LLM), denoted as hR1. This allows for a direct comparison of LLM-generated heuristics against standard domain-independent and learned heuristics, and a comparison between the performance of the heuristics in a highly optimized vs a less optimized planning system.

read the caption

Table 3: Coverage for different heuristics implemented in Fast Downward, and in Pyperplan. Heuristic hR1superscriptℎ𝑅1h^{R1}italic_h start_POSTSUPERSCRIPT italic_R 1 end_POSTSUPERSCRIPT indicates the heuristics generated by DeepSeek R1.

Full paper#