TL;DR#

Existing computer use agents face challenges in GUI grounding, long-horizon task planning, and performance bottlenecks due to reliance on single generalist models. These agents struggle with accurately interpreting GUI elements and handling tasks with distractions and evolving user contexts. Current methods often underperform compared to specialist models in domain-specific subtasks, limiting overall effectiveness.

This paper introduces Agent S2, a compositional framework that delegates cognitive responsibilities across generalist and specialist models. It uses a Mixture-of-Grounding technique for precise GUI localization and Proactive Hierarchical Planning for dynamic action plan refinement. Agent S2 achieves state-of-the-art performance on computer use benchmarks like OSWorld, WindowsAgentArena, and AndroidWorld, demonstrating its effectiveness and scalability.

Key Takeaways#

Why does it matter?#

This paper introduces Agent S2, a novel framework for computer use agents that surpasses existing methods on OSWorld, WindowsAgentArena, and AndroidWorld benchmarks. Agent S2 leverages a composition of generalist and specialist models, addressing key challenges such as GUI grounding and long-horizon task planning. This advancement promises to streamline human-computer interaction and opens avenues for more efficient and adaptable AI-driven automation. The modular approach allows for easier integration and optimization of specialized models, potentially revolutionizing how agents interact with digital environments.

Visual Insights#

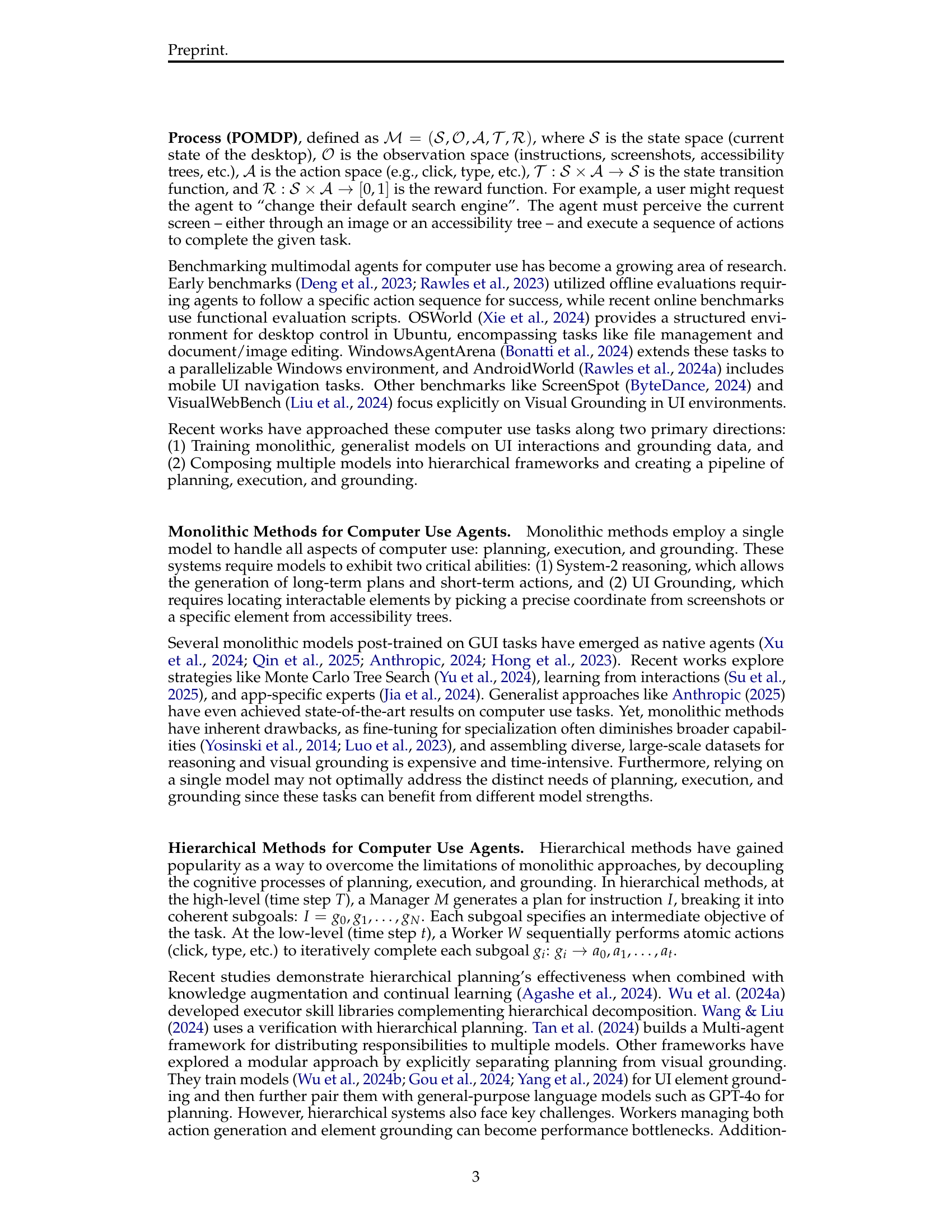

🔼 The bar chart compares the success rates of Agent S2 and several other state-of-the-art computer use agents on the OSWorld benchmark. The benchmark involves completing computer use tasks within 15 and 50 steps. Agent S2 significantly outperforms all other agents on both the 15-step and 50-step evaluations, highlighting its superior performance in automating digital tasks.

Figure 1: Agent S2 achieves new SOTA results (Success Rate) on computer use tasks on both 15-step and 50-step evaluation in OSWorld.

| Method | 15-step | 50-step |
|---|---|---|
| Aria-UI w/ GPT-4o (Yang et al., 2024) | 15.2 | – |
| Aguvis-72B w/ GPT-4o (Xu et al., 2024) | 17.0 | – |
| Agent S w/ GPT-4o (Agashe et al., 2024) | 20.6 | – |
| Agent S w/ Claude-3.5-Sonnet (Agashe et al., 2024) | 20.5 | – |
| UI-TARS-72B-SFT (Qin et al., 2025) | 18.7 | 18.8 |
| UI-TARS-72B-DPO (Qin et al., 2025) | 22.7 | 24.6 |
| OpenAI CUA (OpenAI, 2025) | 19.7 | 32.6 |
| CCU w/ Claude-3.5-Sonnet (new) (Anthropic, 2024) | 14.9 | 22.0 |
| CCU w/ Claude-3.7-Sonnet (Anthropic, 2025) | 15.5 | 26.0 |
| Ours | | |
| Agent S2 w/ Claude-3.5-Sonnet (new) | 24.5 | 33.7 |
| Agent S2 w/ Claude-3.7-Sonnet | 27.0 | 34.5 |

🔼 This table presents the success rates of various computer use agents on the OSWorld benchmark, a test of AI agents’ ability to complete complex tasks by interacting with a computer’s graphical user interface (GUI). The benchmark involves two evaluation settings: 15-step and 50-step, representing tasks requiring shorter or longer sequences of actions. The table compares the performance of Agent S2 (a new method proposed in the paper) against several existing state-of-the-art (SOTA) models, demonstrating Agent S2’s superior performance in both scenarios. Notably, all agents except Agent S rely solely on screenshots as input for the GUI, while Agent S uses both accessibility trees and screenshots. This comparison highlights the effectiveness of Agent S2’s approach.

Table 1: Success Rate (%) on OSWorld for different agents. Agent S2 achieves new state-of-the-art results on OSWorld for both 15 and 50-step evaluations. All Agents use only screenshots as input, except Agent S, which uses accessibility tree and screenshots.
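To make the margin in Table 1 concrete, the short sketch below computes Agent S2's gain over the strongest baseline in each column. The success rates are copied from the table; the names `baselines`, `agent_s2`, and `margin` are illustrative and do not come from the paper.

```python
# Success rates (%) copied from Table 1; None marks a result the table does not report.
baselines = {
    "Aria-UI w/ GPT-4o": (15.2, None),
    "Aguvis-72B w/ GPT-4o": (17.0, None),
    "Agent S w/ GPT-4o": (20.6, None),
    "Agent S w/ Claude-3.5-Sonnet": (20.5, None),
    "UI-TARS-72B-SFT": (18.7, 18.8),
    "UI-TARS-72B-DPO": (22.7, 24.6),
    "OpenAI CUA": (19.7, 32.6),
    "CCU w/ Claude-3.5-Sonnet (new)": (14.9, 22.0),
    "CCU w/ Claude-3.7-Sonnet": (15.5, 26.0),
}
agent_s2 = (27.0, 34.5)  # Agent S2 w/ Claude-3.7-Sonnet: (15-step, 50-step)


def margin(column):
    """Return (best baseline, absolute gain in points, relative gain in %)."""
    scores = {name: row[column] for name, row in baselines.items()
              if row[column] is not None}
    best = max(scores, key=scores.get)
    gain = agent_s2[column] - scores[best]
    return best, round(gain, 1), round(100 * gain / scores[best], 1)


print(margin(0))  # 15-step column
print(margin(1))  # 50-step column
```

With these values, the strongest 15-step baseline is UI-TARS-72B-DPO (a 4.3-point gap) and the strongest 50-step baseline is OpenAI CUA (a 1.9-point gap).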

In-depth insights#

S2: Compositional AI#

Agent S2 takes a compositional approach to building computer use agents. Instead of relying on a single, large generalist model, it delegates specific cognitive responsibilities to specialized modules, much as people break a complex task into smaller parts handled by experts. Dedicated components for grounding, planning, and execution aim for greater precision and efficiency in tasks that require intricate interaction with graphical user interfaces, and they target the grounding bottleneck in particular. By combining generalist and specialist modules, the system can outperform monolithic models. Dynamically refining the action plan adds robustness. The composition can also produce emergent behaviors, such as the self-correction between grounding experts shown in Figure 4.

Mixture of Grounding#

Mixture of Grounding addresses the challenge of precisely localizing GUI elements. Instead of relying on a single model, the agent uses a gating mechanism to route actions to specialized grounding experts (Visual, Textual, Structural). This approach improves grounding by distributing the cognitive load, allowing the primary model to focus on reasoning. The Visual Grounding Expert uses description-based visual grounding, eliminating the need for accessibility trees. The Textual Grounding Expert uses OCR for fine-grained text grounding, such as selecting a span of words. The Structural Grounding Expert handles structured data such as spreadsheet cells. By composing these diverse specialists, the agent improves the accuracy and robustness of GUI interactions.
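The routing idea can be sketched in a few lines. This is a minimal illustration rather than the paper's implementation: the action names come from Table 8, but the mapping from action to expert and the names `Action` and `select_expert` are assumptions inferred from the action descriptions.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    name: str
    args: dict = field(default_factory=dict)


# Assumed mapping, inferred from the action descriptions in Table 8.
TEXTUAL_ACTIONS = {"highlight_text_span"}   # word- or span-level text selection
STRUCTURAL_ACTIONS = {"set_cell_values"}    # spreadsheet cell operations


def select_expert(action: Action) -> str:
    """Pick which grounding expert, if any, should resolve this action."""
    if action.name in TEXTUAL_ACTIONS:
        return "textual"
    if action.name in STRUCTURAL_ACTIONS:
        return "structural"
    # Actions that describe an on-screen element need coordinates from a screenshot.
    if any(key.startswith("element_description") for key in action.args):
        return "visual"
    # Actions such as hotkey or wait name no element, so nothing is grounded.
    return "none"


print(select_expert(Action("click", {"element_description": "the Save button"})))
print(select_expert(Action("highlight_text_span",
                           {"starting_phrase": "In summary", "ending_phrase": "future work."})))
print(select_expert(Action("hotkey", {"keys": ["ctrl", "s"]})))
```

Keeping the router separate from the experts means a grounding model can be swapped without touching the planner, which is the kind of substitution compared in Figure 6.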

Proactive Planning#

Proactive planning refines task execution as new observations arrive. Unlike reactive planning, which revises the plan only after a subtask fails, proactive planning re-evaluates the remaining plan after every completed subtask. Because each update is grounded in the current state, stale assumptions from the initial plan are less likely to carry forward. The paper demonstrates the benefit on computer use tasks, where initial states vary and the interface changes as the agent acts. By re-evaluating subgoals and integrating observations, the agent improves its context awareness and reduces errors compared with a rigid, preset plan, and becomes more robust to change.
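The difference between the two planning styles can be shown with a toy control loop. This is a sketch under stated assumptions: `run`, `execute`, and `replan` are hypothetical stand-ins for the Worker and Manager, and the subgoal strings and observations are invented for illustration.

```python
from typing import Callable, List, Tuple


def run(plan: List[str],
        execute: Callable[[str], Tuple[bool, str]],
        replan: Callable[[str, List[str]], List[str]],
        proactive: bool,
        max_subgoals: int = 20) -> List[str]:
    """Execute subgoals in order and return the list of subgoals attempted."""
    remaining = list(plan)
    attempted = []
    while remaining and len(attempted) < max_subgoals:
        subgoal = remaining.pop(0)
        succeeded, observation = execute(subgoal)
        attempted.append(subgoal)
        if not succeeded:
            # Both styles revise after a failure; the failed subgoal goes back to the planner.
            remaining = replan(observation, [subgoal] + remaining)
        elif proactive:
            # Only proactive planning also revises after every successful subgoal.
            remaining = replan(observation, remaining)
    return attempted


# Toy environment: the option named in the instruction does not exist verbatim.
def execute(subgoal: str) -> Tuple[bool, str]:
    if subgoal == "toggle option by its verbatim name":
        return False, "verbatim name not found; an equivalent option is visible"
    if subgoal == "open settings":
        return True, "an equivalent option is visible"
    return True, "done"


def replan(observation: str, remaining: List[str]) -> List[str]:
    if remaining and "equivalent option is visible" in observation:
        return ["toggle the equivalent option"]
    return remaining


plan = ["open settings", "toggle option by its verbatim name"]
print(run(plan, execute, replan, proactive=False))
print(run(plan, execute, replan, proactive=True))
```

In this toy run the reactive agent spends one subgoal on the stale step before recovering, while the proactive agent replaces the stale step as soon as the settings screen is observed.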

SOTA on 3 OS#

Agent S2 achieves state-of-the-art results on OSWorld, WindowsAgentArena, and AndroidWorld, which suggests broad applicability. OSWorld tests desktop tasks in Ubuntu, WindowsAgentArena tests comparable tasks in Windows, and AndroidWorld tests mobile UI navigation. This multi-OS coverage matters because real-world agents must operate across diverse platforms. Leading on all three benchmarks indicates that Agent S2 can handle varied GUIs and task demands, and that its compositional design adapts to different environments.

Scaling Agent S2#

The paper reports that Agent S2 improves as it is given more compute and more time steps. On OSWorld, the success rate with Claude-3.7-Sonnet rises from 27.0% at 15 steps to 34.5% at 50 steps (Table 1). A larger step budget gives the agent more room to explore alternative navigation paths, try different interactions, and correct earlier mistakes (Figure 7). It also gives Proactive Hierarchical Planning more opportunities to revise the plan, and Mixture of Grounding more opportunities to route actions to the appropriate expert.

More visual insights#

More on figures

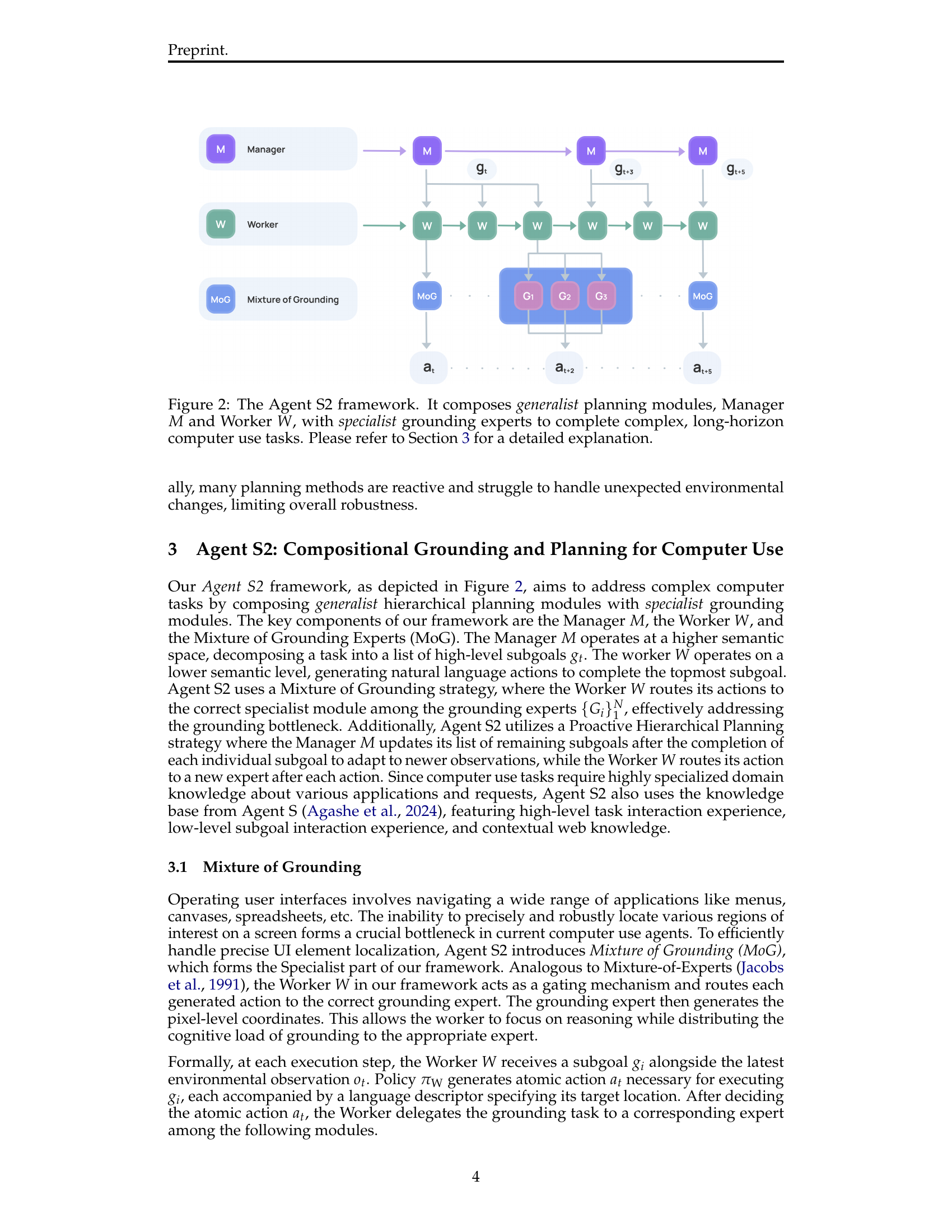

🔼 Agent S2 is a compositional framework that uses both generalist and specialist modules to handle complex computer tasks that require long-term planning. The figure shows the system’s architecture. The Manager (M) handles high-level planning, breaking down complex tasks into smaller sub-goals. The Worker (W) executes these sub-goals, using specialist grounding experts (MoG) to accurately locate and interact with GUI elements. The interaction between these modules is dynamic and iterative, allowing Agent S2 to adapt to changing circumstances and unexpected events.

Figure 2: The Agent S2 framework. It composes generalist planning modules, Manager $M$ and Worker $W$, with specialist grounding experts to complete complex, long-horizon computer use tasks. Please refer to Section 3 for a detailed explanation.

🔼 This figure illustrates the core difference between reactive and proactive planning approaches in the context of hierarchical planning for computer use agents. Reactive planning rigidly follows a pre-defined plan, only adjusting if a subtask fails. In contrast, proactive planning dynamically updates the remaining plan after each completed subtask, allowing for adaptation to evolving observations and increased robustness in response to unforeseen circumstances.

Figure 3: Comparison between Reactive and Proactive Planning. Proactive planning re-evaluates and updates the remainder of the plan after every subtask, while reactive planning adheres to a fixed plan and only revises it in response to subtask failures.

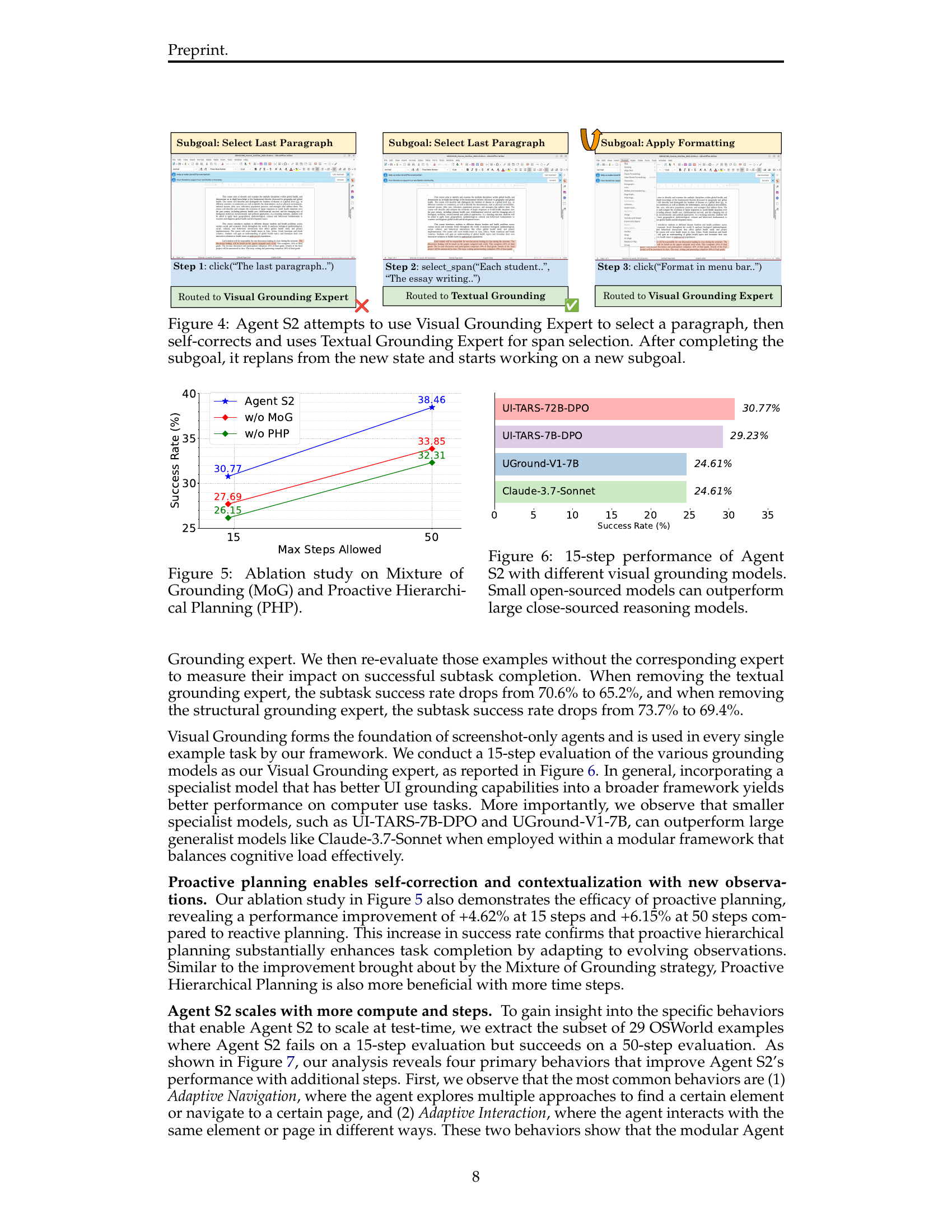

🔼 Agent S2 initially tries to select a paragraph using the Visual Grounding Expert, but this attempt fails. It then self-corrects by switching to the Textual Grounding Expert, which successfully selects the desired span of text. Once this subgoal is achieved, Agent S2 proactively replans its actions, updating its internal state to reflect the successful completion of the subgoal, and then proceeds to the next subgoal in its plan.

Figure 4: Agent S2 attempts to use Visual Grounding Expert to select a paragraph, then self-corrects and uses Textual Grounding Expert for span selection. After completing the subgoal, it replans from the new state and starts working on a new subgoal.

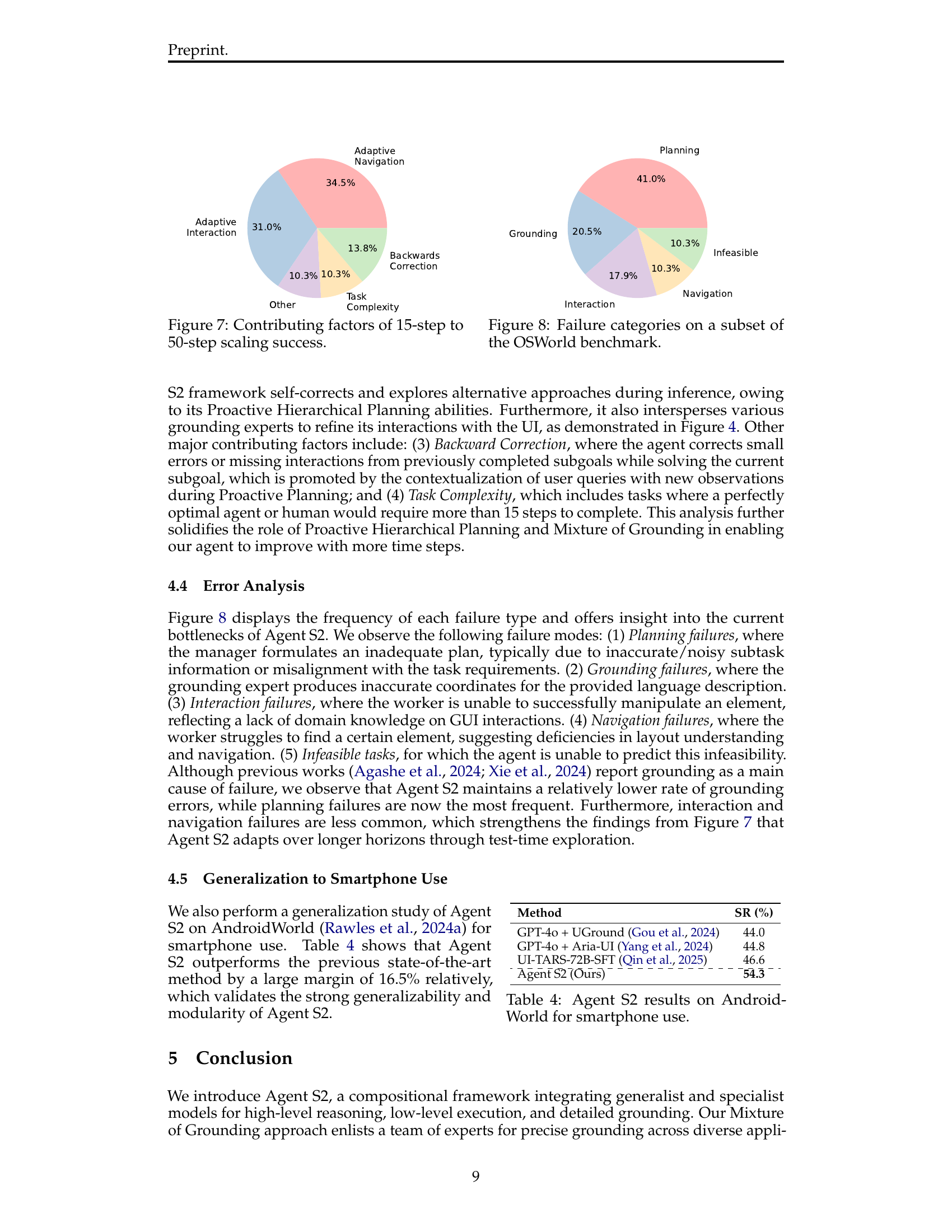

🔼 This ablation study investigates the individual and combined effects of Mixture of Grounding (MoG) and Proactive Hierarchical Planning (PHP) on the success rate of Agent S2. The graph displays success rates for different configurations: Agent S2 with both MoG and PHP, Agent S2 without MoG, Agent S2 without PHP, and several baseline agents (UI-TARS-72B-DPO, UI-TARS-7B-DPO, UGround-V1-7B, and Claude-3.7-Sonnet). The results show the performance gains achieved by incorporating both MoG and PHP, highlighting their importance for improved performance on 15-step and 50-step tasks.

Figure 5: Ablation study on Mixture of Grounding (MoG) and Proactive Hierarchical Planning (PHP).

🔼 This figure presents a bar chart comparing the 15-step success rates of Agent S2 using different visual grounding models. The results show that smaller, open-source models achieved higher success rates compared to larger, closed-source reasoning models. This highlights the potential advantage of leveraging smaller, more specialized models for specific tasks within a larger, compositional agent architecture.

Figure 6: 15-step performance of Agent S2 with different visual grounding models. Small open-source models can outperform large closed-source reasoning models.

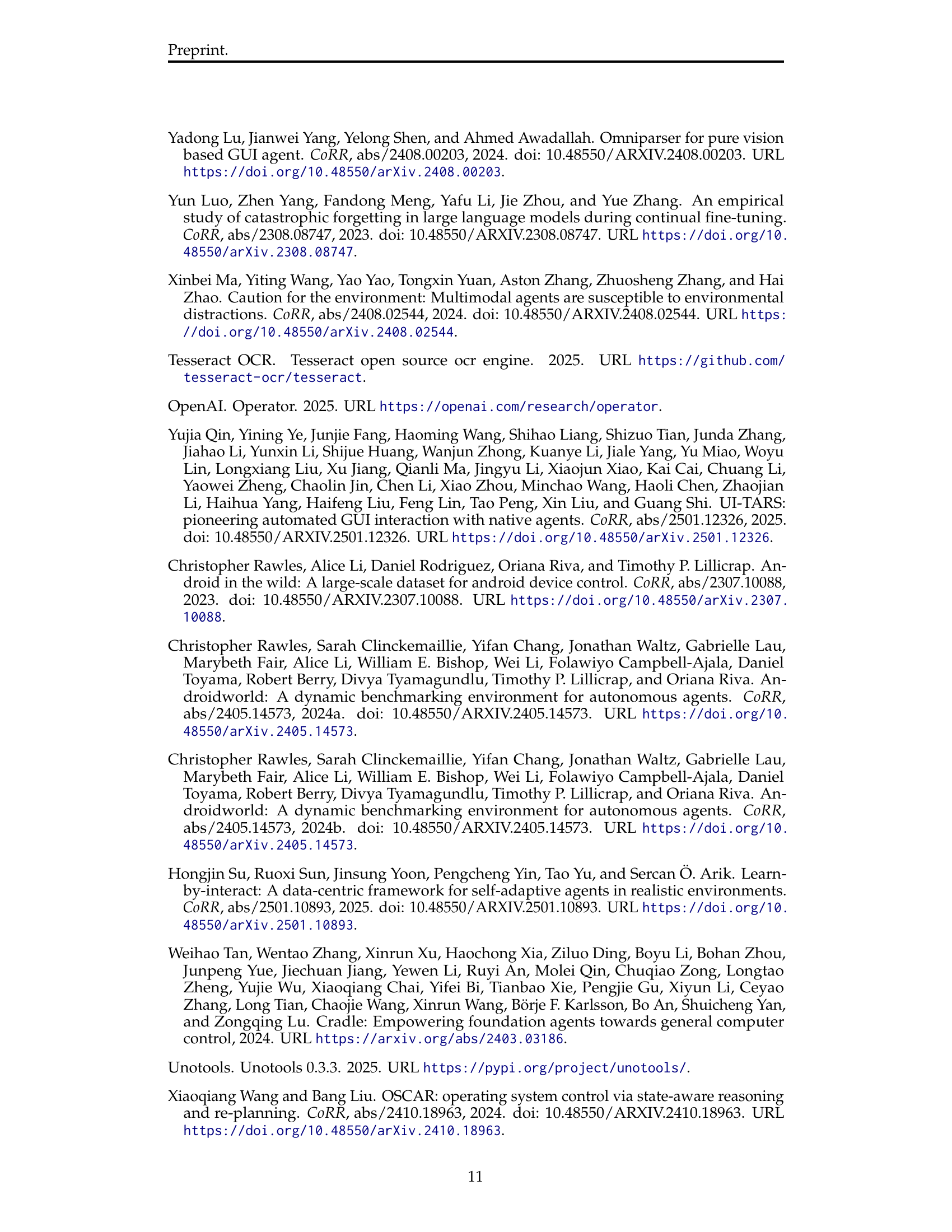

🔼 This figure shows the breakdown of reasons why Agent S2, a computer-use agent, improves its performance when given more time steps (from 15 to 50). The analysis focuses on successful cases and identifies four key contributing factors: Adaptive Navigation (exploring different ways to interact), Adaptive Interaction (trying different methods to achieve a goal), Backward Correction (correcting previous mistakes), and Task Complexity (inherent difficulty of tasks). The percentages indicate the contribution of each factor to the overall improvement in success rate.

Figure 7: Contributing factors of 15-step to 50-step scaling success.

🔼 This figure shows a breakdown of the reasons why Agent S2 failed on a subset of tasks from the OSWorld benchmark. It categorizes failures into several key areas, such as problems with the overall plan, issues with grounding (locating specific UI elements), difficulties interacting with elements, problems with navigation within the application, and tasks that were inherently infeasible to complete. The size of each category’s slice reflects the proportion of failures attributed to that particular cause. This helps to identify and analyze the main challenges faced by the agent.

Figure 8: Failure categories on a subset of the OSWorld benchmark.

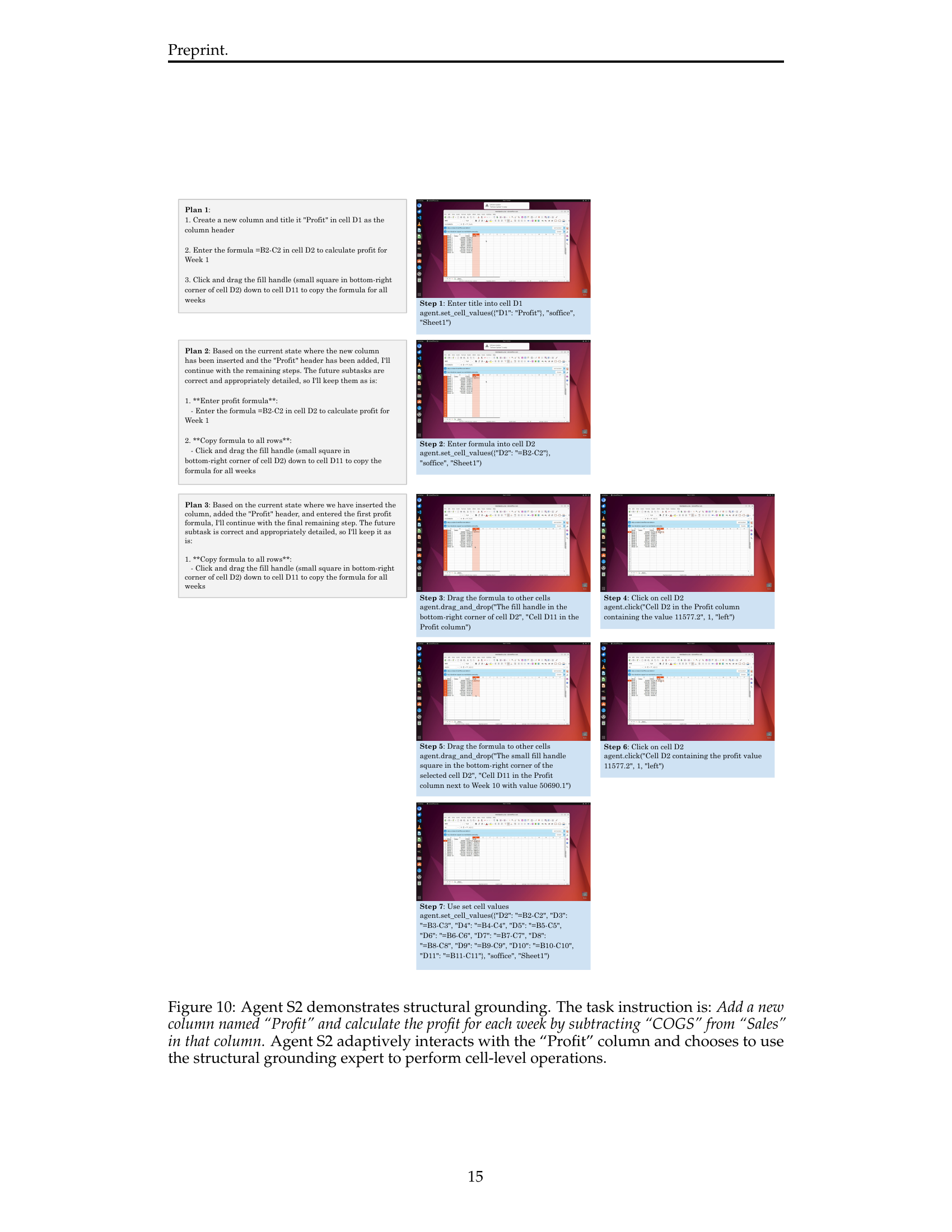

🔼 Agent S2 is tasked with adding strikethrough formatting to the last paragraph of a document. The figure shows the agent’s step-by-step process using textual grounding. Initially, it attempts visual grounding but self-corrects, demonstrating adaptive behavior. It then leverages the textual grounding expert to precisely select the target words before applying the formatting. This highlights the system’s ability to accurately identify and manipulate specific text within a document, showcasing its advanced grounding capabilities. The precise word selection is key, avoiding the limitations of selecting larger text blocks, demonstrating sophisticated text understanding.

Figure 9: Agent S2 demonstrates textual grounding. The task instruction is: I think the last paragraph is redundant so I want to add strike-through on words in the last paragraph. Can you do this for me? Agent S2 intelligently utilizes the textual grounding expert to perform a precise word-level selection of text.

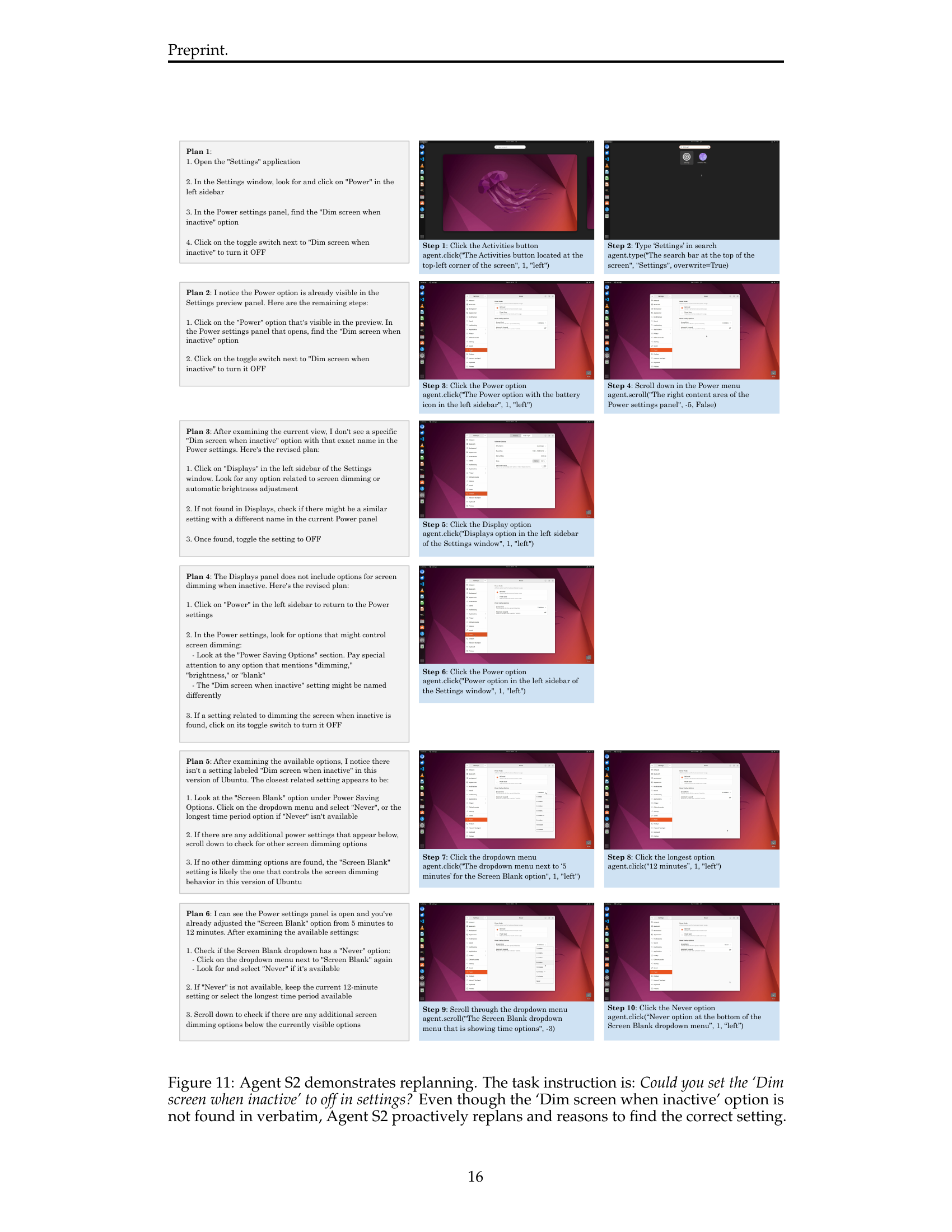

🔼 Agent S2 is shown using structural grounding to add a new column named ‘Profit’ to a spreadsheet. The goal is to calculate profit for each week by subtracting the COGS (cost of goods sold) from the Sales figures. The figure visually shows the steps involved, highlighting how Agent S2 interacts with the spreadsheet cells at a low level. This involves using a specialized ‘structural grounding expert’ that understands spreadsheet structure to perform fine-grained actions within cells, such as entering formulas and dragging to fill in values. The adaptive nature of Agent S2 is apparent, dynamically adjusting its actions based on the evolving state of the spreadsheet. This illustrates Agent S2’s ability to handle detailed, low-level operations within specific applications.

Figure 10: Agent S2 demonstrates structural grounding. The task instruction is: Add a new column named “Profit” and calculate the profit for each week by subtracting “COGS” from “Sales” in that column. Agent S2 adaptively interacts with the “Profit” column and chooses to use the structural grounding expert to perform cell-level operations.

🔼 Agent S2 receives the instruction to disable the ‘Dim screen when inactive’ setting in the system settings. However, this exact setting name is not present in the user interface. The figure shows how Agent S2, using its proactive hierarchical planning, dynamically adjusts its plan. Initially, it attempts to locate the setting directly. Upon failure to find the verbatim setting, it re-evaluates the situation, exploring related settings (like screen brightness or power saving modes). It revises its plan, identifying alternative options that achieve the user’s intent. This process of replanning and exploration, illustrated through screenshots of the user interface, demonstrates Agent S2’s adaptive behavior and problem-solving capabilities in uncertain environments.

Figure 11: Agent S2 demonstrates replanning. The task instruction is: Could you set the ‘Dim screen when inactive’ to off in settings? Even though the ‘Dim screen when inactive’ option is not found in verbatim, Agent S2 proactively replans and reasons to find the correct setting.

🔼 Agent S2 successfully completes a complex task on the WindowsAgentArena benchmark. The task involves creating a desktop shortcut for a folder, requiring multiple steps and interaction with the Windows GUI. The figure showcases Agent S2’s ability to dynamically adjust its plan, refining its actions based on the evolving state of the environment and feedback from the interface. This highlights the effectiveness of the Proactive Hierarchical Planning component of the Agent S2 framework.

Figure 12: Agent S2 on the WindowsAgentArena environment. The task instruction is: Create a shortcut on the Desktop for the folder named “Projects” that is located in the Documents folder. Name the shortcut “Projects - Shortcut”. Through consistent replanning, Agent S2 is able to dynamically update its plan and revise its current subtask in more detail.

🔼 Agent S2 successfully navigates the AndroidWorld environment to create a new contact. The figure displays a sequence of screenshots showing the agent’s interactions, starting with opening the Contacts app and culminating in entering all requested contact details (First Name, Last Name, Phone number, and Phone Label). This demonstrates Agent S2’s ability to handle touch, typing, and dropdown menu selection actions within a mobile GUI.

Figure 13: Agent S2 on the AndroidWorld mobile environment. It utilizes open, touch, and type interactions to complete the instruction “Go to the new contact screen and enter the following details: First Name: Grace, Last Name: Taylor, Phone: 799-802-XXXX, Phone Label: Work”.

More on tables

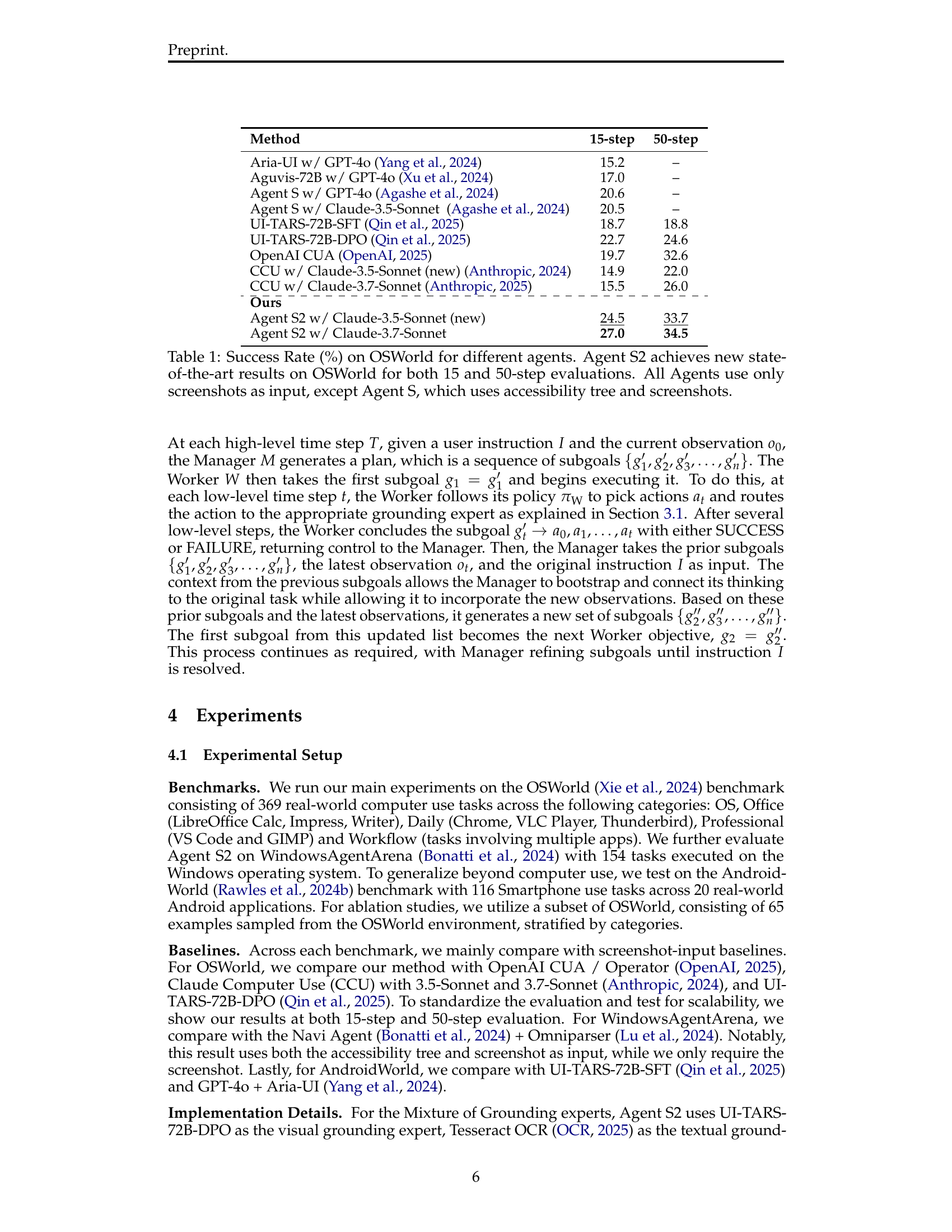

| Model | OS | Daily | Office | Professional | Workflow | Overall |
|---|---|---|---|---|---|---|
| GPT-4o | 50.00 | 30.70 | 18.97 | 51.02 | 14.93 | 26.62 |
| Claude-3.5-Sonnet (new) | 58.33 | 48.44 | 29.06 | 51.02 | 13.46 | 33.71 |
| Claude-3.7-Sonnet | 50.00 | 49.73 | 25.64 | 57.14 | 18.21 | 34.47 |

🔼 This table presents a breakdown of Agent S2’s performance on the OSWorld 50-step benchmark, categorized by task type (OS, Daily, Office, Professional, Workflow). Success rates are shown, illustrating the model’s effectiveness across various task domains. The results are further broken down to show the impact of using different multimodal large language models (MLLMs) as the Manager and Worker components within the Agent S2 framework.

Table 2: Categorized Success Rate (%) of Agent S2 on the OSWorld 50-step evaluation. We report results with various MLLMs as Manager and Worker.

| Method | Office | Web | Windows System | Coding | Media & Video | Windows Utils | Overall |
|---|---|---|---|---|---|---|---|
| Agent S (Agashe et al., 2024) | 0.0 | 13.3 | 45.8 | 29.2 | 19.1 | 22.2 | 18.2 |
| NAVI (Bonatti et al., 2024) | 0.0 | 27.3 | 33.3 | 27.3 | 30.3 | 8.3 | 19.5 |
| Agent S2 (Ours) | 7.0 | 16.4 | 54.2 | 62.5 | 28.6 | 33.3 | 29.8 |

🔼 This table presents the success rates of different computer use agents on the WindowsAgentArena benchmark, focusing on the performance within 15 steps. It highlights Agent S2’s state-of-the-art (SOTA) achievement by comparing its performance against baselines like Agent S and NAVI. A key differentiator is that Agent S2 uniquely relies only on screenshots as input, unlike Agent S and NAVI, which also utilize accessibility trees. This comparison underscores Agent S2’s superior performance in achieving the SOTA despite its use of less information.

Table 3: Success Rate (%) on the WindowsAgentArena test set (within 15 steps). Note that both Agent S and NAVI use screenshots and accessibility trees, while our agent only takes screenshots as the input. Agent S2 sets new SOTA on WindowsAgentArena.

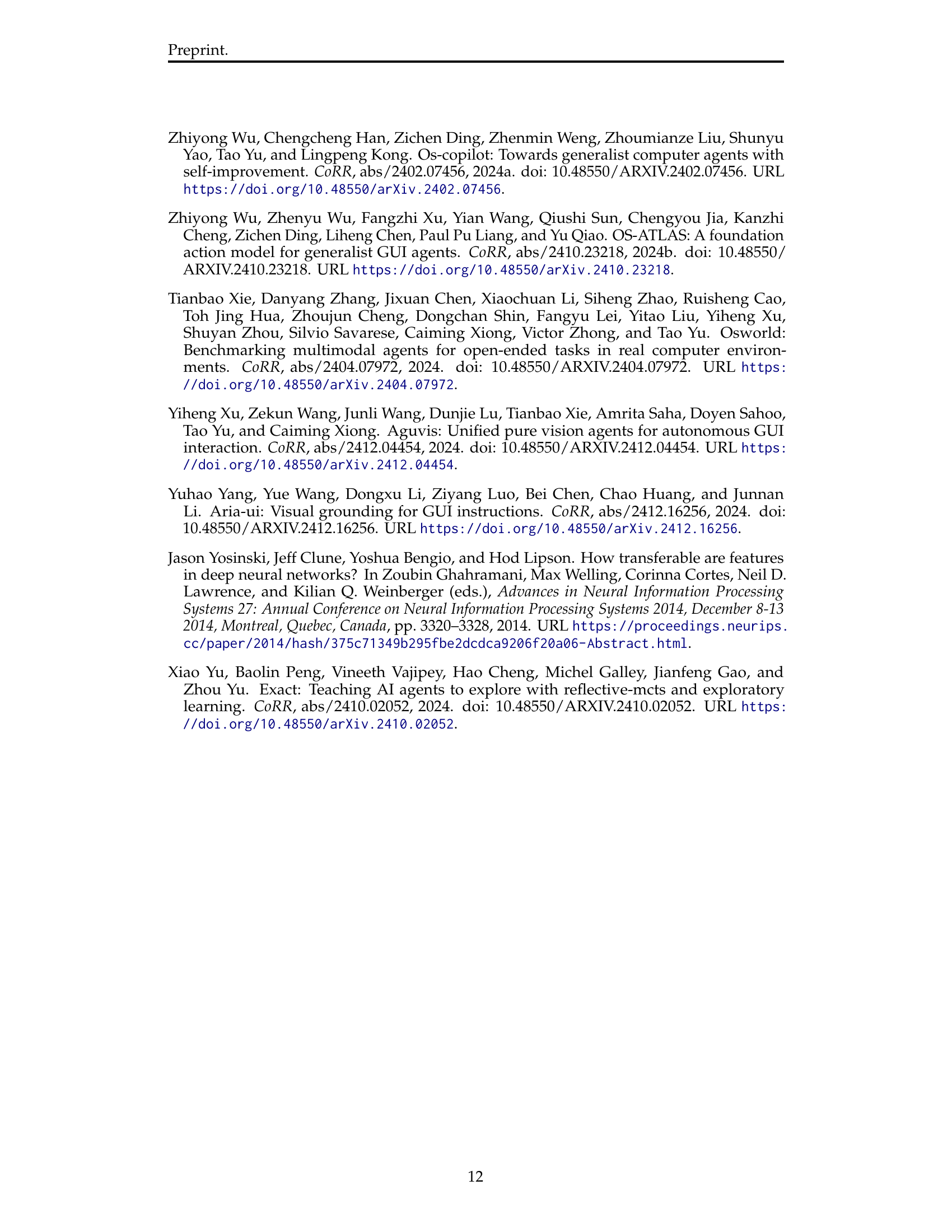

| Method | SR (%) |
|---|---|
| GPT-4o + UGround (Gou et al., 2024) | 44.0 |
| GPT-4o + Aria-UI (Yang et al., 2024) | 44.8 |
| UI-TARS-72B-SFT (Qin et al., 2025) | 46.6 |
| Agent S2 (Ours) | 54.3 |

🔼 This table presents the results of Agent S2’s performance on the AndroidWorld benchmark, specifically focusing on tasks involving smartphone use. It compares Agent S2’s success rate to other state-of-the-art methods.

Table 4: Agent S2 results on AndroidWorld for smartphone use.

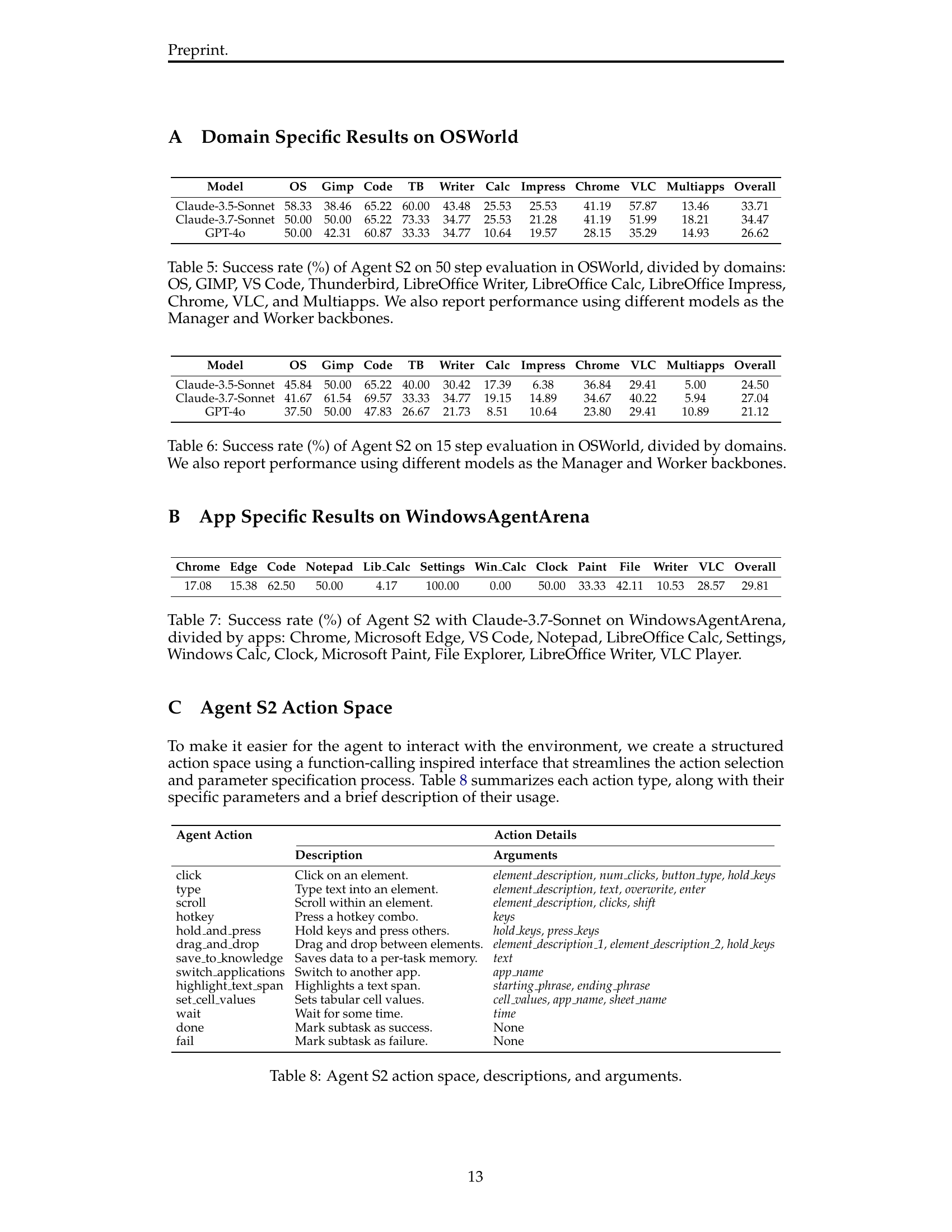

| Model | OS | Gimp | Code | TB | Writer | Calc | Impress | Chrome | VLC | Multiapps | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Claude-3.5-Sonnet | 58.33 | 38.46 | 65.22 | 60.00 | 43.48 | 25.53 | 25.53 | 41.19 | 57.87 | 13.46 | 33.71 |
| Claude-3.7-Sonnet | 50.00 | 50.00 | 65.22 | 73.33 | 34.77 | 25.53 | 21.28 | 41.19 | 51.99 | 18.21 | 34.47 |
| GPT-4o | 50.00 | 42.31 | 60.87 | 33.33 | 34.77 | 10.64 | 19.57 | 28.15 | 35.29 | 14.93 | 26.62 |

🔼 This table presents a detailed breakdown of Agent S2’s performance on the OSWorld benchmark’s 50-step evaluation. It shows success rates across different application domains (OS, GIMP, VS Code, Thunderbird, LibreOffice Writer, Calc, Impress, Chrome, VLC, and Multiapps). Importantly, it also demonstrates how performance varies depending on the specific large language models (LLMs) used as the ‘Manager’ and ‘Worker’ components within the Agent S2 framework.

Table 5: Success rate (%) of Agent S2 on 50 step evaluation in OSWorld, divided by domains: OS, GIMP, VS Code, Thunderbird, LibreOffice Writer, LibreOffice Calc, LibreOffice Impress, Chrome, VLC, and Multiapps. We also report performance using different models as the Manager and Worker backbones.

| Model | OS | Gimp | Code | TB | Writer | Calc | Impress | Chrome | VLC | Multiapps | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Claude-3.5-Sonnet | 45.84 | 50.00 | 65.22 | 40.00 | 30.42 | 17.39 | 6.38 | 36.84 | 29.41 | 5.00 | 24.50 |
| Claude-3.7-Sonnet | 41.67 | 61.54 | 69.57 | 33.33 | 34.77 | 19.15 | 14.89 | 34.67 | 40.22 | 5.94 | 27.04 |
| GPT-4o | 37.50 | 50.00 | 47.83 | 26.67 | 21.73 | 8.51 | 10.64 | 23.80 | 29.41 | 10.89 | 21.12 |

🔼 This table presents the success rate of Agent S2, a computer use agent, on the OSWorld benchmark. The success rate is broken down by specific application domains (OS, GIMP, Code, Thunderbird, LibreOffice Writer, LibreOffice Calc, LibreOffice Impress, Chrome, VLC, Multiapps), providing a granular view of performance across different task types. The results are shown for a 15-step evaluation, and the table also includes comparative results using different models (Claude-3.5-Sonnet, Claude-3.7-Sonnet, GPT-4o) as the Manager and Worker components within the Agent S2 framework.

Table 6: Success rate (%) of Agent S2 on 15 step evaluation in OSWorld, divided by domains. We also report performance using different models as the Manager and Worker backbones.
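Tables 5 and 6 can be read together to see where the extra steps help. The sketch below subtracts the 15-step row from the 50-step row for Claude-3.7-Sonnet; the values are transcribed from the two tables as shown here, and the variable names are illustrative.

```python
# Claude-3.7-Sonnet rows transcribed from Table 5 (50-step) and Table 6 (15-step).
domains = ["OS", "Gimp", "Code", "TB", "Writer", "Calc",
           "Impress", "Chrome", "VLC", "Multiapps", "Overall"]
steps_50 = [50.00, 50.00, 65.22, 73.33, 34.77, 25.53, 21.28, 41.19, 51.99, 18.21, 34.47]
steps_15 = [41.67, 61.54, 69.57, 33.33, 34.77, 19.15, 14.89, 34.67, 40.22, 5.94, 27.04]

# Change in success rate (percentage points) when moving from 15 to 50 steps.
delta = {d: round(hi - lo, 2) for d, hi, lo in zip(domains, steps_50, steps_15)}

for domain, change in sorted(delta.items(), key=lambda item: item[1], reverse=True):
    print(f"{domain:10s} {change:+.2f}")
```

With the values as transcribed, Thunderbird (TB) shows the largest gain and the overall rate rises by 7.43 points, while the GIMP and VS Code entries are lower at 50 steps than at 15.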

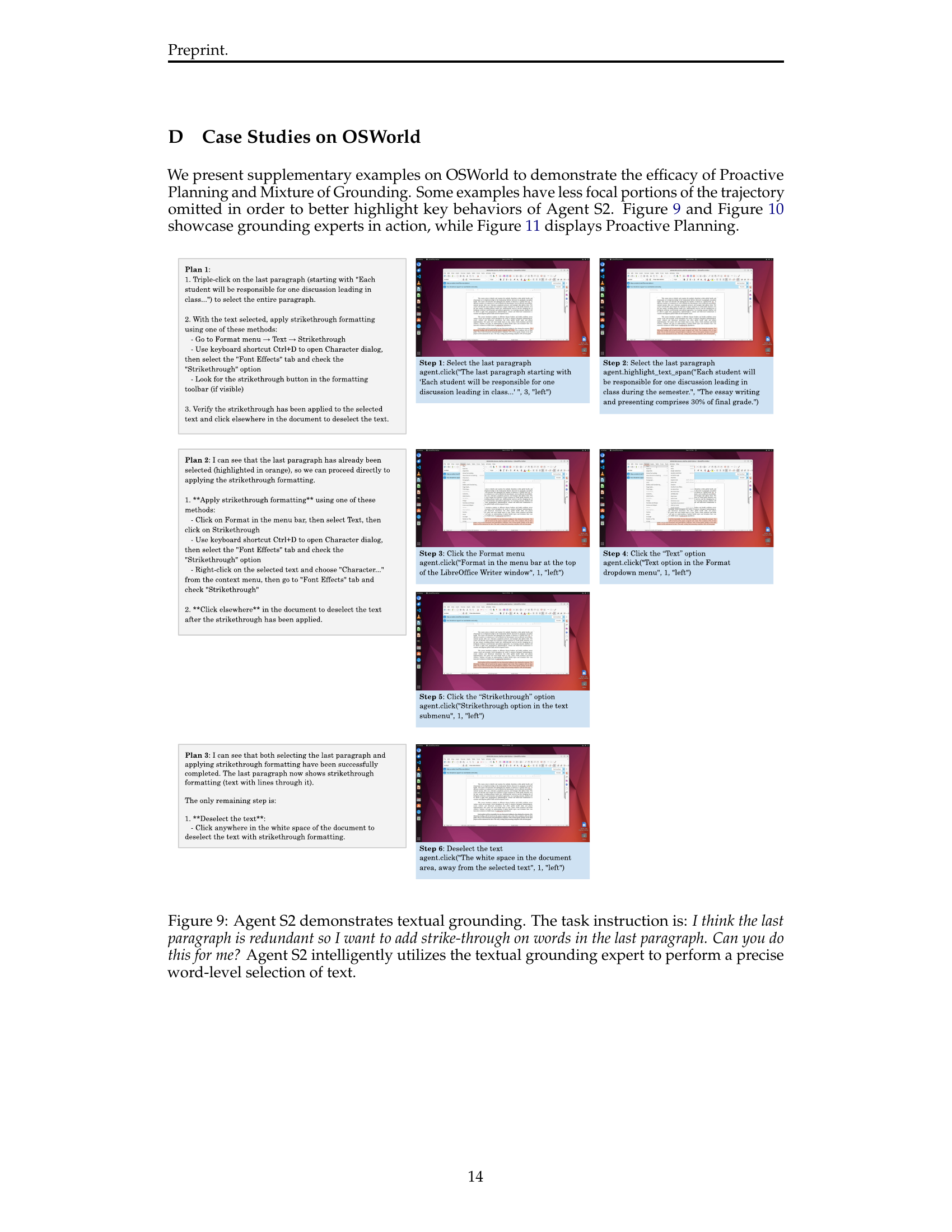

| Chrome | Edge | Code | Notepad | Lib_Calc | Settings | Win_Calc | Clock | Paint | File | Writer | VLC | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 17.08 | 15.38 | 62.50 | 50.00 | 4.17 | 100.00 | 0.00 | 50.00 | 33.33 | 42.11 | 10.53 | 28.57 | 29.81 |

🔼 This table presents a detailed breakdown of Agent S2’s performance on the WindowsAgentArena benchmark. It shows the success rate achieved for each of 12 different applications, categorized by application type (browser, code editor, office suite, etc.). Agent S2, using the Claude-3.7-Sonnet model, is evaluated on tasks within these applications. The success rate is expressed as a percentage and provides a granular view of the agent’s capabilities across various software types.

Table 7: Success rate (%) of Agent S2 with Claude-3.7-Sonnet on WindowsAgentArena, divided by apps: Chrome, Microsoft Edge, VS Code, Notepad, LibreOffice Calc, Settings, Windows Calc, Clock, Microsoft Paint, File Explorer, LibreOffice Writer, VLC Player.

| Agent Action | Description | Arguments |
|---|---|---|
| click | Click on an element. | element_description, num_clicks, button_type, hold_keys |
| type | Type text into an element. | element_description, text, overwrite, enter |
| scroll | Scroll within an element. | element_description, clicks, shift |
| hotkey | Press a hotkey combo. | keys |
| hold_and_press | Hold keys and press others. | hold_keys, press_keys |
| drag_and_drop | Drag and drop between elements. | element_description_1, element_description_2, hold_keys |
| save_to_knowledge | Saves data to a per-task memory. | text |
| switch_applications | Switch to another app. | app_name |
| highlight_text_span | Highlights a text span. | starting_phrase, ending_phrase |
| set_cell_values | Sets tabular cell values. | cell_values, app_name, sheet_name |
| wait | Wait for some time. | time |
| done | Mark subtask as success. | None |
| fail | Mark subtask as failure. | None |

🔼 This table details the actions Agent S2 can perform when interacting with a graphical user interface (GUI). For each action (e.g., click, type, scroll), it lists the action type, a description of its purpose, and the arguments required to specify the action’s parameters. This provides a comprehensive overview of Agent S2’s capabilities in manipulating GUI elements.

Table 8: Agent S2 action space, descriptions, and arguments.
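One way to use Table 8 in practice is as a schema that checks an action before it is executed. The sketch below is illustrative only: the action and argument names are taken from the table, while `ACTION_SPACE`, `validate`, and the choice to reject only unknown arguments (the table does not say which arguments are required) are assumptions.

```python
# Action names and argument names copied from Table 8.
ACTION_SPACE = {
    "click": ("element_description", "num_clicks", "button_type", "hold_keys"),
    "type": ("element_description", "text", "overwrite", "enter"),
    "scroll": ("element_description", "clicks", "shift"),
    "hotkey": ("keys",),
    "hold_and_press": ("hold_keys", "press_keys"),
    "drag_and_drop": ("element_description_1", "element_description_2", "hold_keys"),
    "save_to_knowledge": ("text",),
    "switch_applications": ("app_name",),
    "highlight_text_span": ("starting_phrase", "ending_phrase"),
    "set_cell_values": ("cell_values", "app_name", "sheet_name"),
    "wait": ("time",),
    "done": (),
    "fail": (),
}


def validate(name, **kwargs):
    """Return a plain action record, rejecting unknown actions and arguments."""
    if name not in ACTION_SPACE:
        raise ValueError(f"unknown action: {name}")
    unknown = set(kwargs) - set(ACTION_SPACE[name])
    if unknown:
        raise TypeError(f"{name} does not accept: {sorted(unknown)}")
    return {"action": name, "args": kwargs}


print(validate("click", element_description="the Save button", num_clicks=1))
print(validate("done"))
```

Validating against the table before dispatch catches a malformed action, for example a `click` with a `text` argument, before it reaches the GUI.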

Full paper#