↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Many multilingual communities, especially in India, use code-mixed languages in online social media groups. This presents a challenge for information retrieval systems, which often struggle with the unstructured and informal nature of this type of text. Extracting relevant information from such conversations is difficult because of variations in spelling and grammar as well as the complex interplay of different languages.

RetrieveGPT directly addresses this challenge. It uses a novel combination of prompt engineering with GPT-3.5 Turbo and a mathematical model to analyze the relevance of documents in a sequence. This approach outperforms traditional methods by considering the contextual relationship between documents. The effectiveness of the method is validated through experiments on a dataset of Facebook conversations, demonstrating that the system can extract relevant information from complex code-mixed conversations more accurately.

Key Takeaways#

Why does it matter?#

This paper is important because it tackles the challenging problem of information retrieval in code-mixed social media conversations, a significant issue in multilingual societies. The proposed method using GPT-3.5 Turbo and a mathematical model offers a novel approach to improve accuracy and efficiency, opening avenues for enhancing information accessibility in diverse online communities. This research is particularly relevant to the growing field of multilingual NLP and contributes to the development of effective IR systems for complex, real-world scenarios.

Visual Insights#

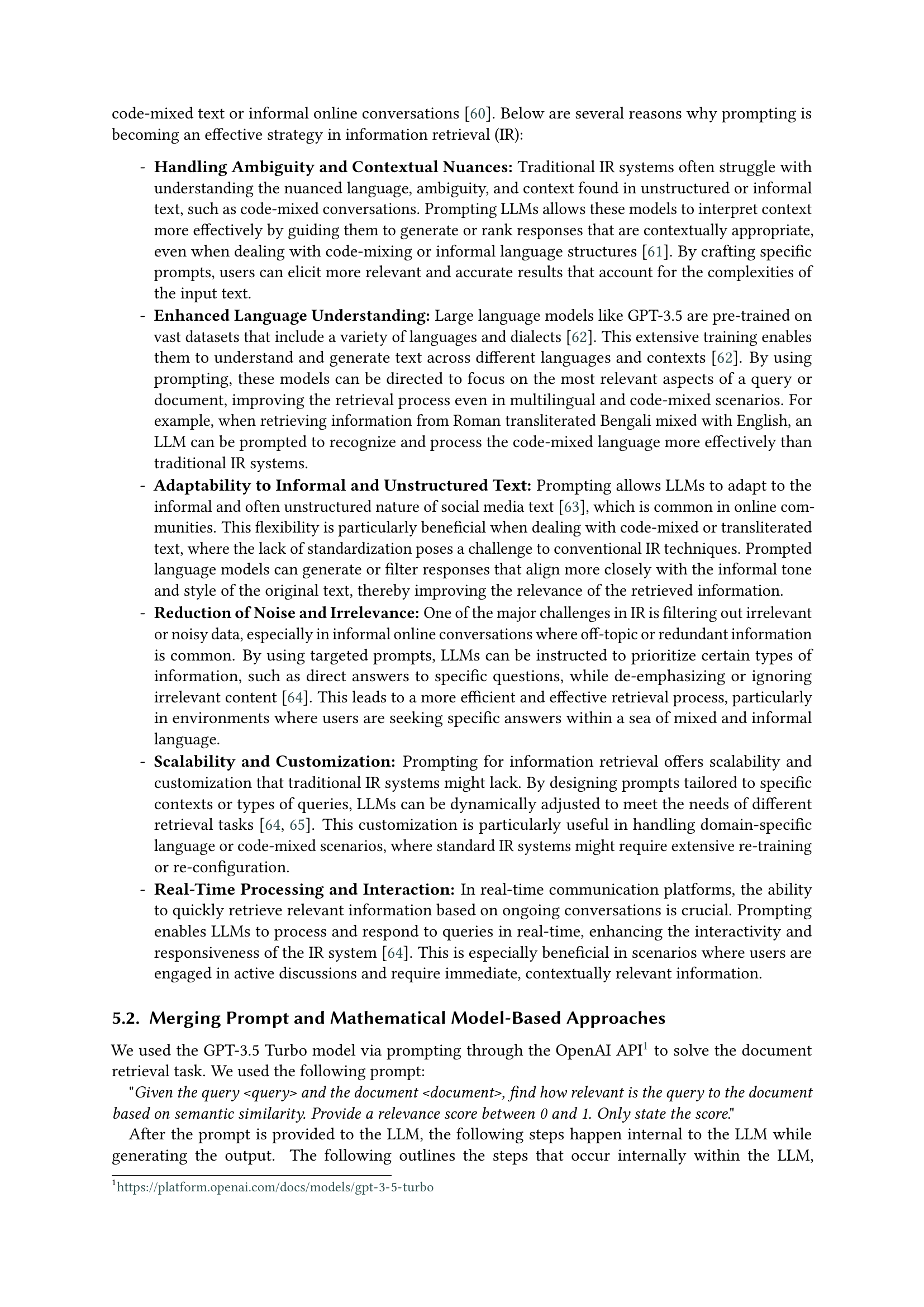

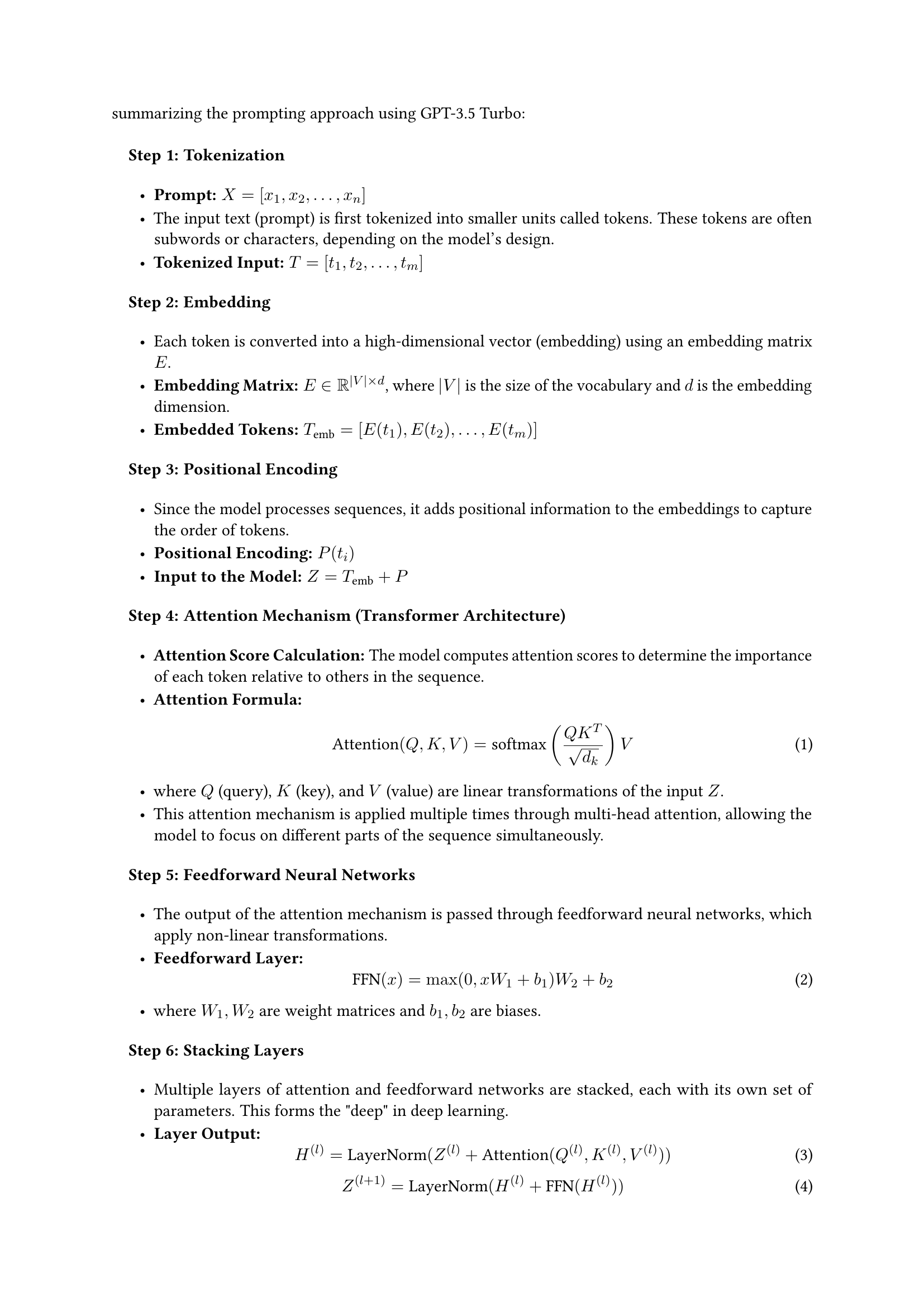

🔼 This figure illustrates the architecture of the GPT-3.5 Turbo model, highlighting the key components involved in processing text input and generating output. It shows the flow of information from tokenization and embedding to attention mechanisms (transformer architecture), feedforward neural networks, and finally, output generation through a softmax layer. The layered structure of the model, including multiple decoder blocks stacked together to achieve a deeper understanding of the input sequence, is also visualized. The diagram shows the different stages of processing: tokenization, embedding, positional encoding, attention mechanisms, feedforward neural networks, and output generation via a softmax layer.

read the caption

Figure 1: An overview of the GPT-3.5 Turbo architecture.

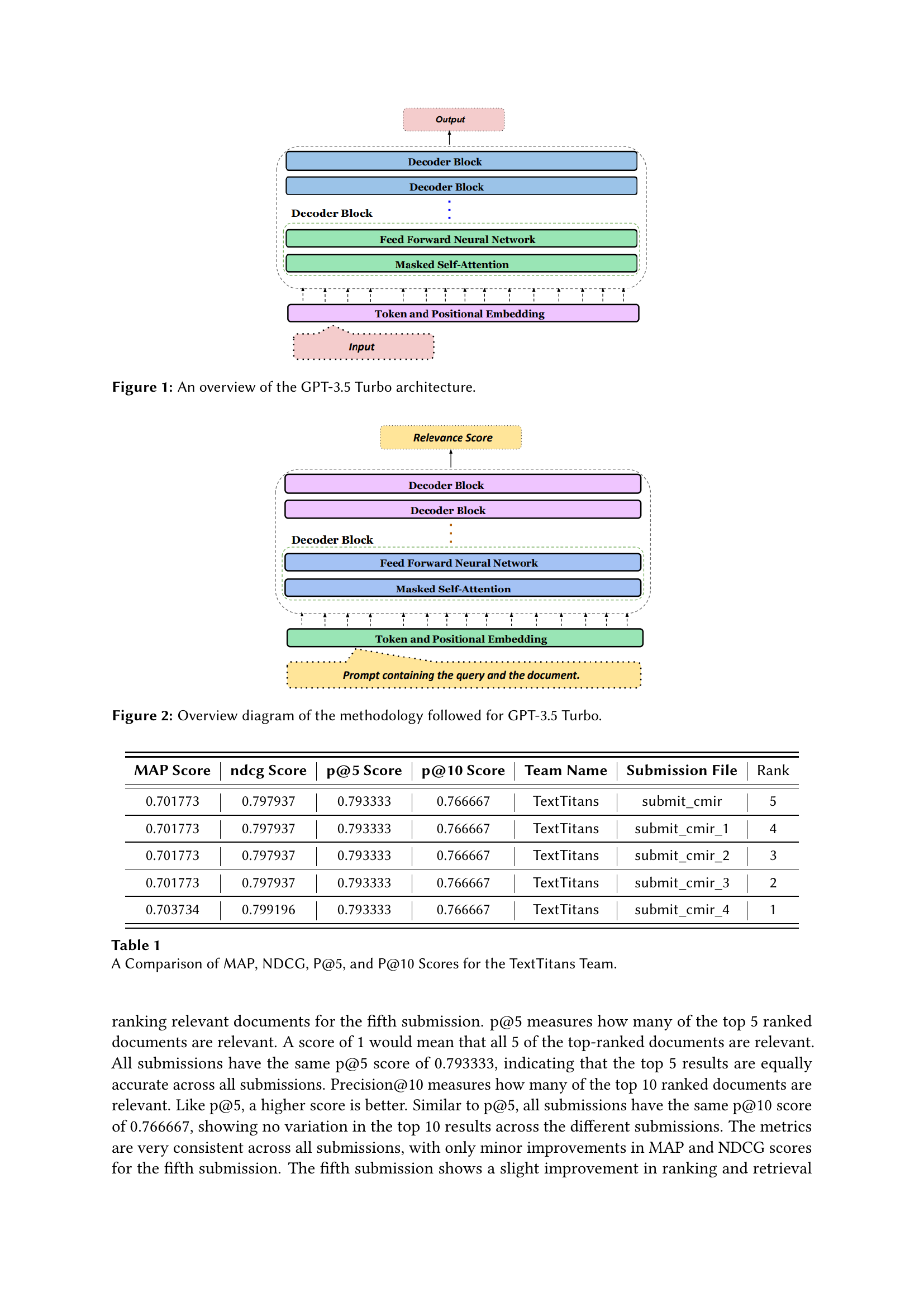

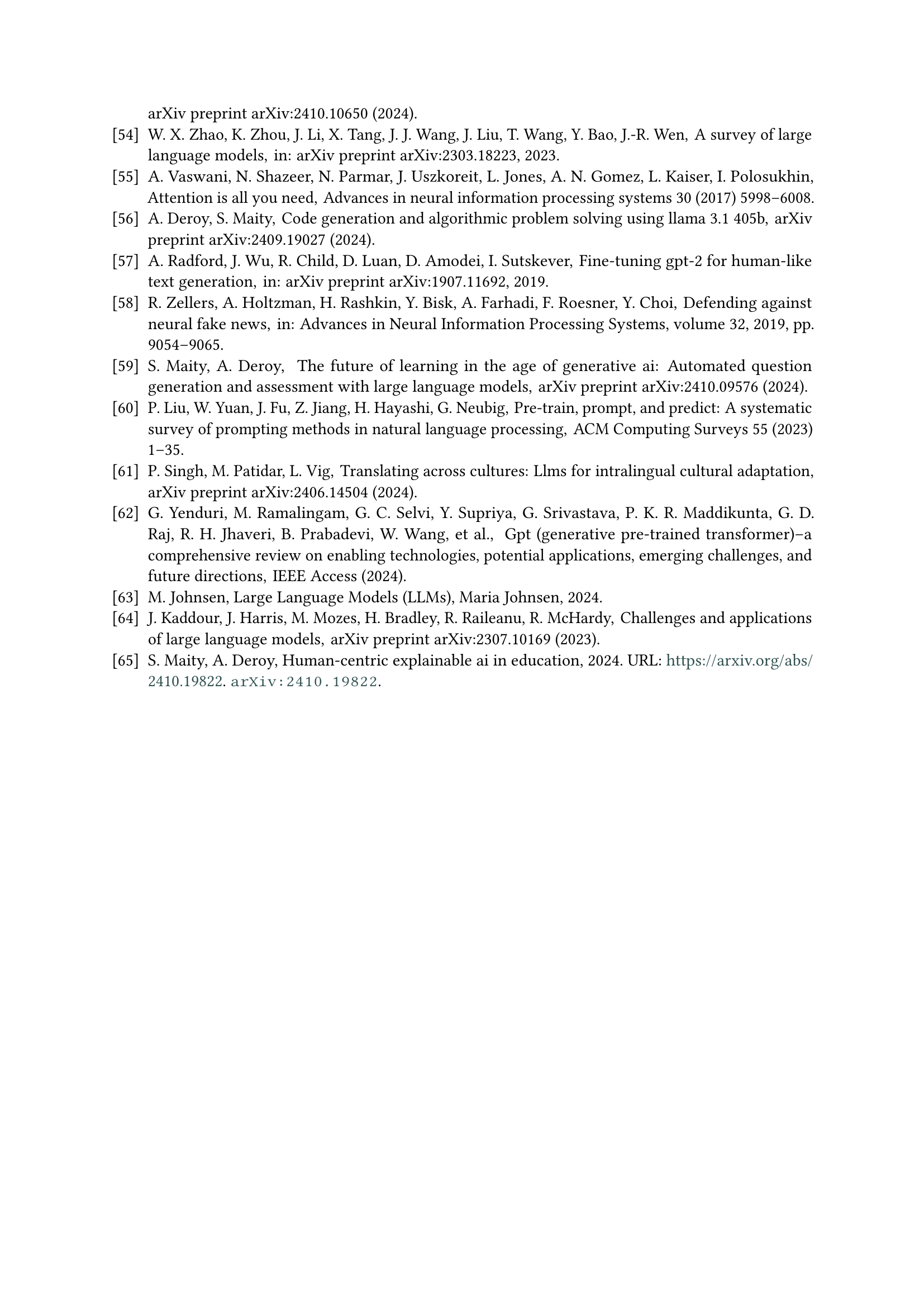

| MAP Score | ndcg Score | p@5 Score | p@10 Score | Team Name | Submission File | Rank |

|---|---|---|---|---|---|---|

| 0.701773 | 0.797937 | 0.793333 | 0.766667 | TextTitans | submit_cmir | 5 |

| 0.701773 | 0.797937 | 0.793333 | 0.766667 | TextTitans | submit_cmir_1 | 4 |

| 0.701773 | 0.797937 | 0.793333 | 0.766667 | TextTitans | submit_cmir_2 | 3 |

| 0.701773 | 0.797937 | 0.793333 | 0.766667 | TextTitans | submit_cmir_3 | 2 |

| 0.703734 | 0.799196 | 0.793333 | 0.766667 | TextTitans | submit_cmir_4 | 1 |

🔼 Table 1 presents the evaluation metrics for five different submissions from the team named ‘TextTitans’ for a code-mixed information retrieval task. The metrics used include Mean Average Precision (MAP), Normalized Discounted Cumulative Gain (NDCG), Precision at 5 (P@5), and Precision at 10 (P@10). These metrics assess the ranking quality of the retrieved documents. The table shows consistent performance across the first four submissions, with a slight improvement observed in the fifth submission, indicating minor gains in retrieval accuracy. The identical P@5 and P@10 scores across all submissions suggest consistent top-k retrieval performance.

read the caption

Table 1: A Comparison of MAP, NDCG, P@5, and P@10 Scores for the TextTitans Team.

Full paper#