↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Many recent advancements in video generation allow for controllable camera trajectories, but these methods are limited to videos generated by the model itself and cannot be directly applied to user-provided videos. This is a significant issue because it prevents users from easily generating videos with custom camera perspectives from their own video footage. Existing methods either require synchronized multi-view videos or accurate camera pose and depth estimation, which is not always practical or feasible for real-world applications.

To tackle these issues, the paper introduces ReCapture, a novel method that effectively reangles videos by first creating a noisy anchor video from user-provided footage and a new camera trajectory using either multiview diffusion models or depth-based point cloud rendering. This noisy video is then refined into a temporally consistent video using a masked video fine-tuning technique with spatial and temporal LoRAs. This approach avoids the need for paired video data or accurate depth estimation, enabling realistic re-angling of user-provided videos with complex scene motion and dynamic content. The results demonstrate that ReCapture outperforms other methods in both qualitative and quantitative evaluations.

Key Takeaways#

Why does it matter?#

This paper is important because it presents a novel approach to generating videos with customized camera trajectories from user-provided videos. This addresses a significant limitation of existing methods that struggle to handle user-provided videos with complex scene motion and dynamic content. The proposed method opens up new avenues for video editing, digital content creation, and immersive experiences, offering significant advancements in video generation and manipulation.

Visual Insights#

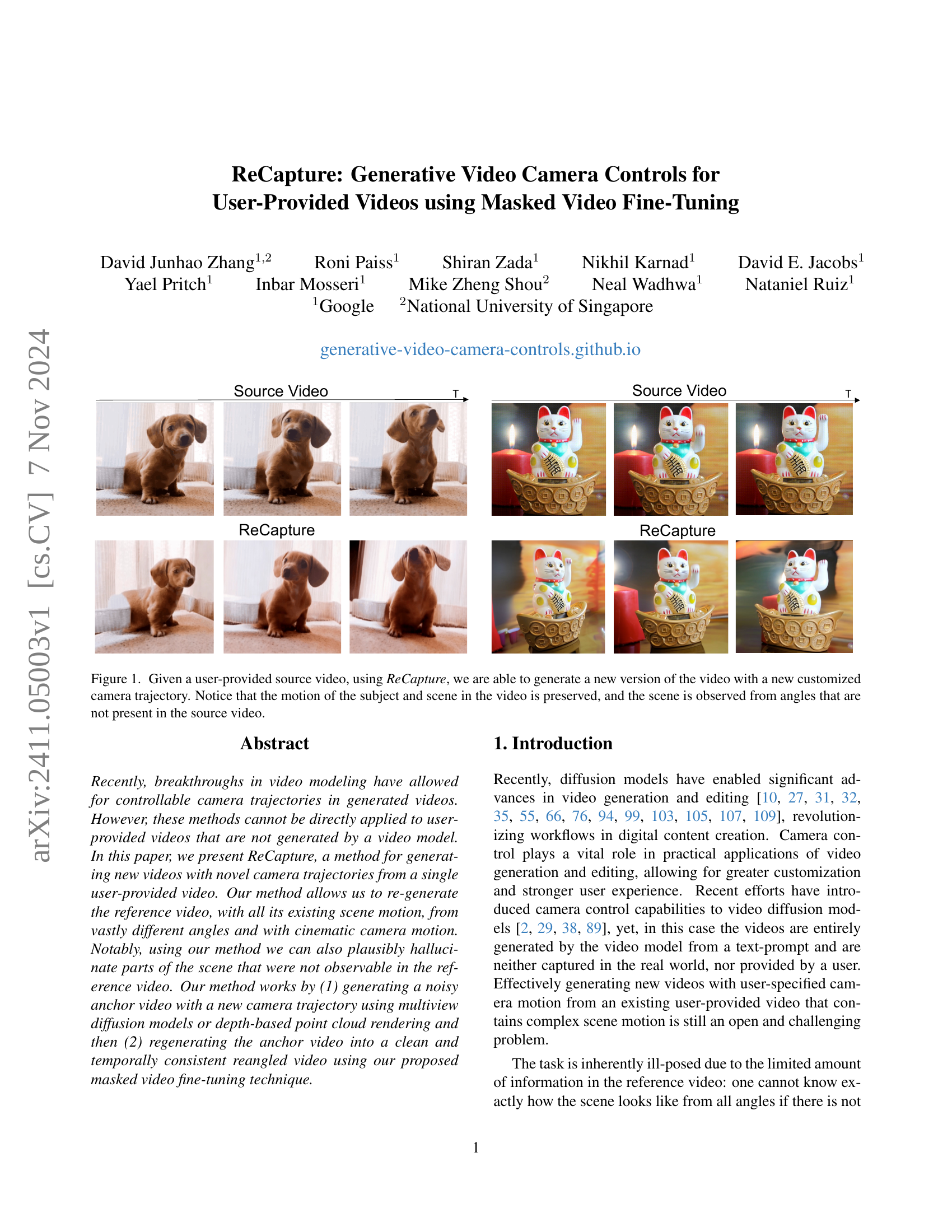

🔼 ReCapture takes a user-provided video as input and generates a new video with a different camera trajectory. The generated video maintains the original video’s scene motion and subject movements, but shows the scene from novel viewpoints not present in the original.

read the caption

Figure 1: Given a user-provided source video, using ReCapture, we are able to generate a new version of the video with a new customized camera trajectory. Notice that the motion of the subject and scene in the video is preserved, and the scene is observed from angles that are not present in the source video.

| Models | Subject | |||||||

|---|---|---|---|---|---|---|---|---|

| Consistency | Background | |||||||

| Consistency | Temporal | |||||||

| Flickering | Motion | |||||||

| Smoothness | Dynamic | |||||||

| Degree | Aesthetic | |||||||

| Quality | Imaging | |||||||

| Quality | Object | |||||||

| Class | ||||||||

| Generative Camera Dolly [82] | 83.02% | 80.42% | 74.64% | 82.33% | 51.24% | 38.67% | 58.62% | 76.46% |

| Ours | 88.53% | 92.02% | 91.12% | 98.24% | 49.03% | 57.35% | 64.75% | 82.07% |

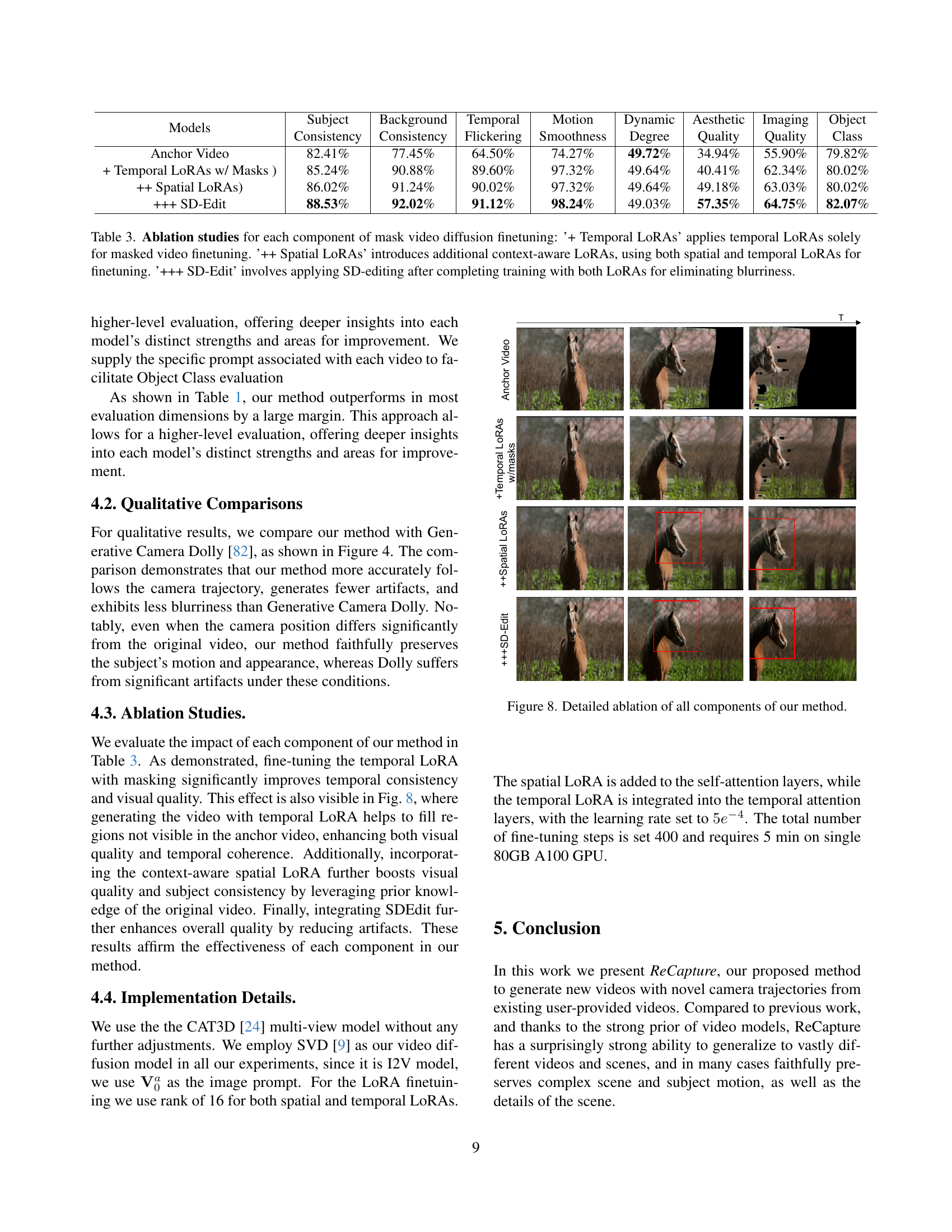

🔼 This table presents a quantitative comparison of the proposed ReCapture method and the Generative Camera Dolly method on the VBench benchmark. It evaluates several aspects of video generation quality including subject and background consistency, the presence of flickering or motion smoothness issues, the dynamic range of the generated videos, the aesthetic quality, image quality, and object class consistency. The results are presented as percentages, allowing for a direct comparison of the two methods across these key dimensions.

read the caption

Table 1: Quantitative comparisons with Generative Camera Dolly on VBench.

In-depth insights#

Masked Video Tuning#

Masked video fine-tuning, as a novel technique, tackles the challenge of generating high-quality videos from noisy, incomplete anchor videos produced in the first stage of video re-angling. By employing a masked loss function, the model focuses solely on the reliable regions of the anchor video, effectively mitigating the impact of artifacts and missing data. This clever approach leverages the strong prior knowledge of the video diffusion model and avoids overfitting to the corrupted parts of the input. Further enhancing the method, a context-aware spatial LoRA is introduced to inject visual context from the original video, ensuring seamless integration and fixing structural inconsistencies. The spatial LoRA, trained on the source video data, enhances the realism and coherence of the output. Coupled with a temporal motion LoRA that refines temporal consistency, this masked video fine-tuning approach proves exceptionally effective in producing clean, temporally consistent re-angled videos with novel camera trajectories, while preserving the original video content and scene dynamics. This two-pronged LoRA approach significantly improves the quality of the final video compared to using only one. The synergy between masked loss and LoRA adaptation allows for a more efficient and accurate completion of the video content.

Novel View Synthesis#

Novel view synthesis, a core problem in computer vision and graphics, aims to generate realistic views of a scene from viewpoints not present in the original observations. Traditional methods often rely on multi-view stereo or depth estimation, limiting their applicability to scenarios with multiple cameras or accurate depth data. Recent advances leverage deep learning, particularly diffusion models, to address these limitations. These models learn complex relationships between different views, enabling the generation of novel viewpoints even from a single input video. However, challenges remain in handling dynamic scenes, temporal consistency, and hallucination of occluded regions. Successful methods require careful consideration of scene motion, potentially combining 3D representations with generative models to synthesize consistent video sequences. The trade-off between realism, efficiency, and the need for training data is also a significant consideration. Future research may focus on improving generalization to diverse scenes and enhancing the controllability and efficiency of these sophisticated synthesis techniques.

Diffusion Model Advances#

Diffusion models have significantly advanced video generation and editing. Early methods focused on image diffusion and adapting them to the temporal domain, often using 3D U-Net architectures or transformers. Recent breakthroughs, however, have yielded more sophisticated models capable of generating high-fidelity videos directly, leveraging advancements in attention mechanisms and training techniques. A key area of progress lies in the incorporation of camera control, enabling users to specify desired trajectories during video generation. While some approaches require paired video data for training, others use novel techniques to generate new views from a single user-provided video, often employing 4D scene reconstruction or multi-view techniques. Despite this progress, challenges remain, including handling complex scene motion in user-provided videos, accurately predicting occluded regions, and ensuring temporal consistency in the generated output. Future research will likely focus on improving efficiency, reducing artifact generation, and enhancing control over finer aspects of the generated content.

4D Video Generation#

4D video generation aims to create videos that are not only temporally consistent but also spatially rich, capturing the scene from multiple viewpoints and enabling novel view synthesis. This goes beyond traditional video generation which focuses mainly on temporal consistency. The challenge lies in representing and manipulating the spatiotemporal information of a scene, especially when dealing with complex dynamic scenes. Current methods often rely on multi-view data for training, which limits applicability to real-world scenarios where acquiring such data is impractical. Recent breakthroughs use diffusion models, leveraging their ability to generate realistic content from noise, to address the limitations of earlier approaches. However, these models often struggle with the ill-posed nature of the task, needing to infer unseen aspects of the scene. Advancements using masked video fine-tuning techniques attempt to ameliorate this by focusing on known regions, leaving the model to fill in plausible details for unseen parts. Future research should focus on improving efficiency, handling more complex scenarios with fewer input views, and potentially exploring new representational paradigms beyond explicit 4D models.

Camera Control Methods#

Camera control in video generation is a rapidly evolving field. Early methods often relied on pre-defined trajectories or simplistic manipulation of existing video frames, limiting creativity and realism. Recent breakthroughs utilize diffusion models, offering more sophisticated control over camera movement. These models learn complex relationships between camera parameters and video content, enabling generation of novel viewpoints and camera paths. However, challenges remain, particularly in handling dynamic scenes and ensuring temporal consistency. Methods that can process user-provided videos, rather than relying solely on model-generated data, are crucial advancements. Achieving seamless integration of novel camera movements while maintaining scene integrity and coherence remains a significant technical hurdle. Future research should focus on more robust techniques for dynamic scene handling, improved temporal consistency, and extension to various video formats and resolutions. This will allow for more versatile, efficient, and creative camera manipulation in video generation and editing.

More visual insights#

More on figures

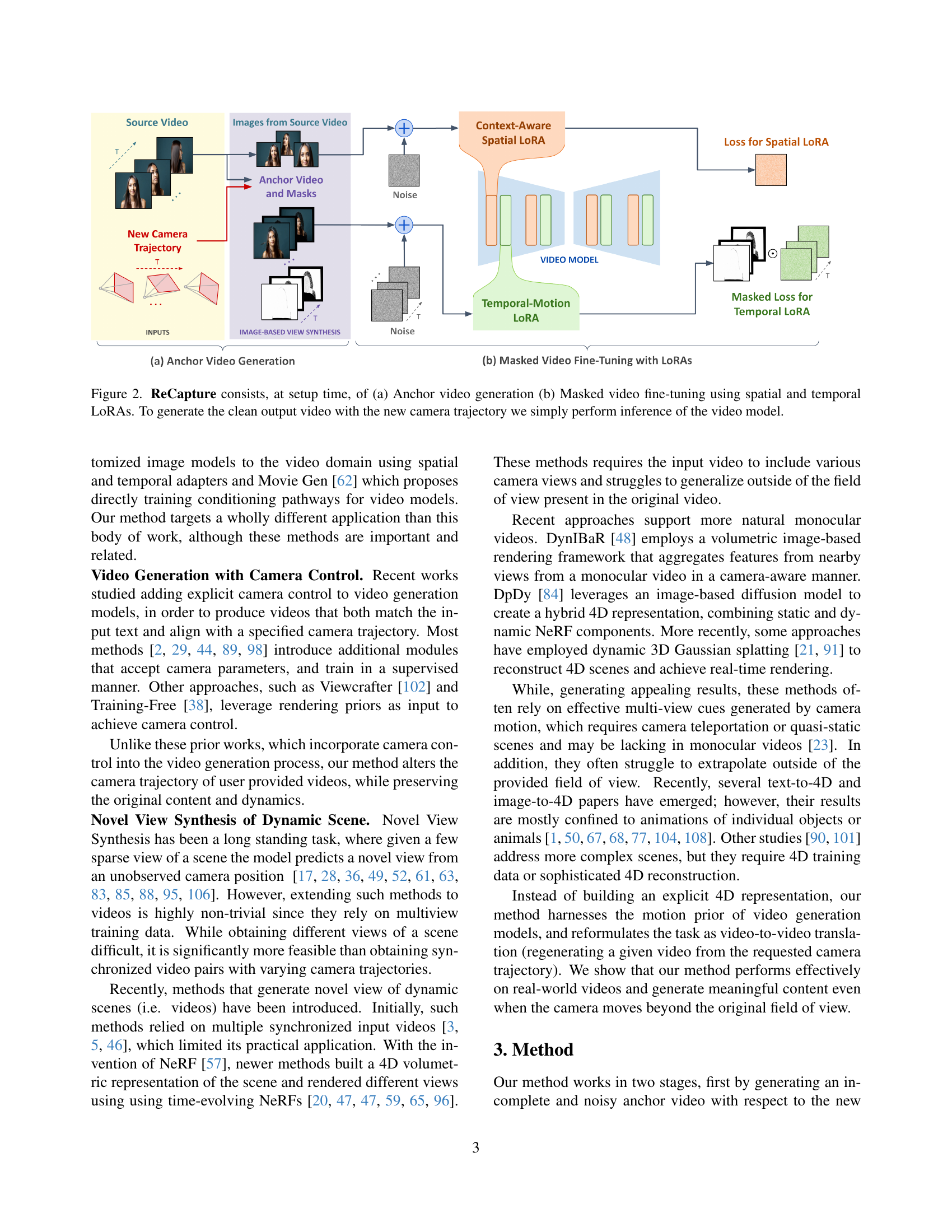

🔼 This figure illustrates the two-stage ReCapture process. Stage (a) shows the generation of a noisy ‘anchor’ video using either point cloud rendering or multiview diffusion modeling. This anchor video incorporates the desired new camera trajectory but contains artifacts and inconsistencies. Stage (b) depicts the masked video fine-tuning stage. Here, spatial and temporal Low-Rank Adaptation (LoRA) modules are trained on the known parts of the anchor video and source video. The spatial LoRA learns spatial context from the source video, while the temporal LoRA learns temporal consistency from the anchor video. During inference, only the fine-tuned model is used to generate a temporally consistent, clean video with the new camera path, filling in any missing information from the anchor video. The masked loss ensures that the model primarily focuses on the known areas during the fine-tuning process. The final output is a clean re-angled video.

read the caption

Figure 2: ReCapture consists, at setup time, of (a) Anchor video generation (b) Masked video fine-tuning using spatial and temporal LoRAs. To generate the clean output video with the new camera trajectory we simply perform inference of the video model.

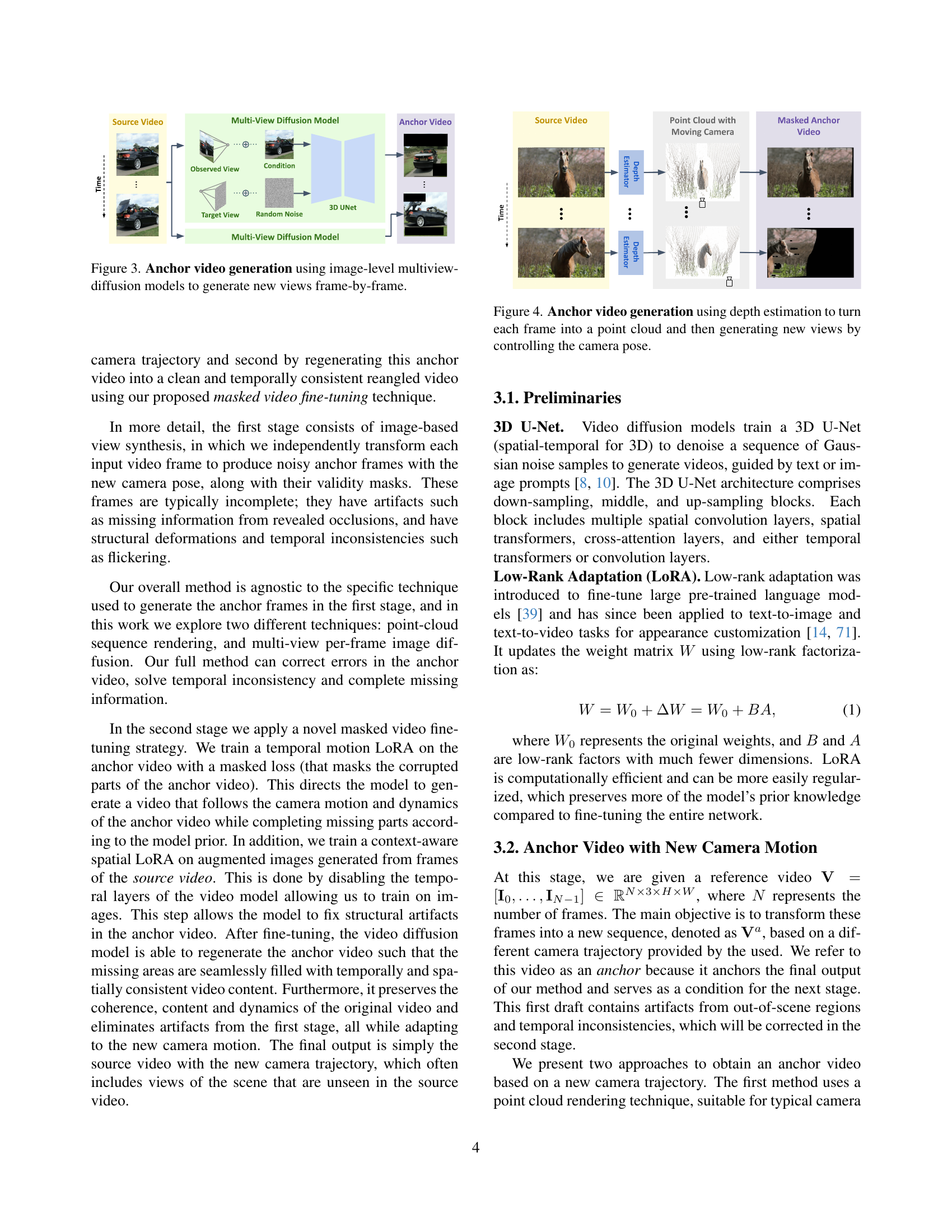

🔼 This figure illustrates the process of creating an ‘anchor video’ which is a noisy intermediate video that serves as the input for the next stage of the ReCapture method. It uses a multiview image diffusion model to generate new views frame by frame. The model takes a source video frame and its corresponding camera parameters as input and produces a new view based on a novel camera trajectory. The process is repeated for every frame to create a complete anchor video. The anchor video will have artifacts (missing information) due to the new camera viewpoints, and it’s not temporally consistent; these artifacts will be corrected in a later stage.

read the caption

Figure 3: Anchor video generation using image-level multiview-diffusion models to generate new views frame-by-frame.

🔼 This figure illustrates the first stage of the ReCapture method, specifically the point cloud approach for generating anchor videos. Depth estimation is first performed on each frame of the input video to create a 3D point cloud representation of the scene. The user-specified camera trajectory (including zoom, pan, tilt, etc.) is then applied to these point clouds. Finally, the modified point clouds are projected back onto the image plane from the new camera viewpoints to generate the anchor video. This process produces a noisy anchor video containing missing information, artifacts, and inconsistencies, which will be refined in the subsequent masked video fine-tuning stage.

read the caption

Figure 4: Anchor video generation using depth estimation to turn each frame into a point cloud and then generating new views by controlling the camera pose.

🔼 Figure 5 displays a qualitative comparison between ReCapture and Generative Camera Dolly [82], a prior method, using an orbit camera trajectory. The comparison focuses on the visual quality of videos generated using both methods. The figure shows source videos with different subjects, videos generated by Generative Camera Dolly, and videos generated by ReCapture. The results demonstrate that ReCapture produces sharper and clearer results than Generative Camera Dolly, especially concerning motion blur, and more accurately follows the requested orbit camera trajectory.

read the caption

Figure 5: Comparisons with generative camera dolly [82] using an orbit camera trajectory.

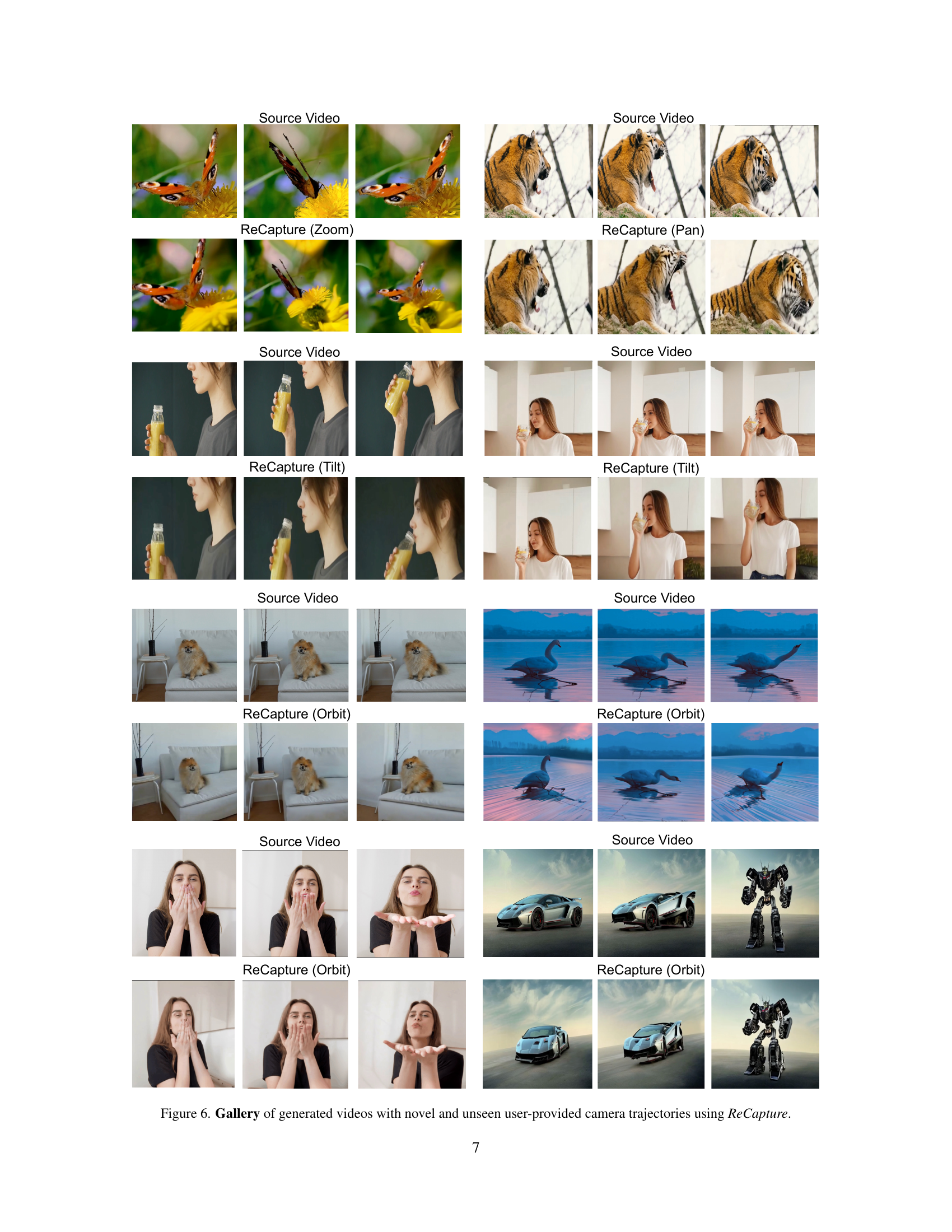

🔼 This figure showcases several example videos generated using the ReCapture model. Each row presents a source video alongside its corresponding ReCapture outputs under various novel camera trajectories. These trajectories include zooming, panning, tilting, and orbiting, demonstrating ReCapture’s ability to generate new video perspectives while maintaining the original scene’s content and subject motion. The examples highlight ReCapture’s capability to generate plausible views even from angles that were not originally captured.

read the caption

Figure 6: Gallery of generated videos with novel and unseen user-provided camera trajectories using ReCapture.

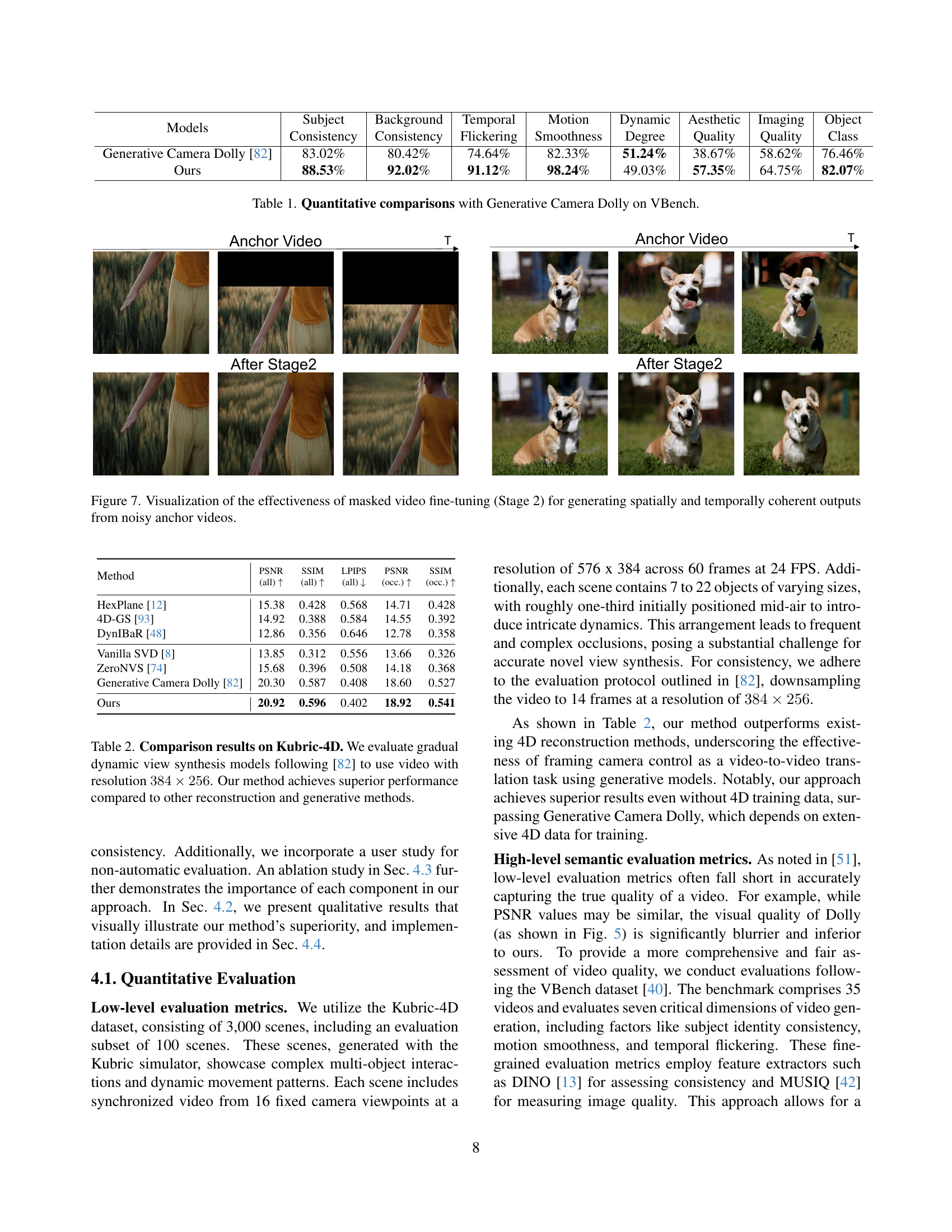

🔼 Figure 7 shows the effectiveness of the masked video fine-tuning stage (Stage 2) in ReCapture. The top row displays noisy anchor videos, which contain artifacts and are incomplete due to the camera movement. The bottom row shows the results after masked video fine-tuning. The masked video fine-tuning process effectively cleans and completes the noisy anchor videos. This results in a spatially and temporally coherent output video, demonstrating the effectiveness of the method in removing artifacts and ensuring consistency.

read the caption

Figure 7: Visualization of the effectiveness of masked video fine-tuning (Stage 2) for generating spatially and temporally coherent outputs from noisy anchor videos.

More on tables

| Method | PSNR (all) ↑ | SSIM (all) ↑ | LPIPS (all) ↓ | PSNR (occ.) ↑ | SSIM (occ.) ↑ |

|---|---|---|---|---|---|

| HexPlane [12] | 15.38 | 0.428 | 0.568 | 14.71 | 0.428 |

| 4D-GS [93] | 14.92 | 0.388 | 0.584 | 14.55 | 0.392 |

| DynIBaR [48] | 12.86 | 0.356 | 0.646 | 12.78 | 0.358 |

| Vanilla SVD [8] | 13.85 | 0.312 | 0.556 | 13.66 | 0.326 |

| ZeroNVS [74] | 15.68 | 0.396 | 0.508 | 14.18 | 0.368 |

| Generative Camera Dolly [82] | 20.30 | 0.587 | 0.408 | 18.60 | 0.527 |

| Ours | 20.92 | 0.596 | 0.402 | 18.92 | 0.541 |

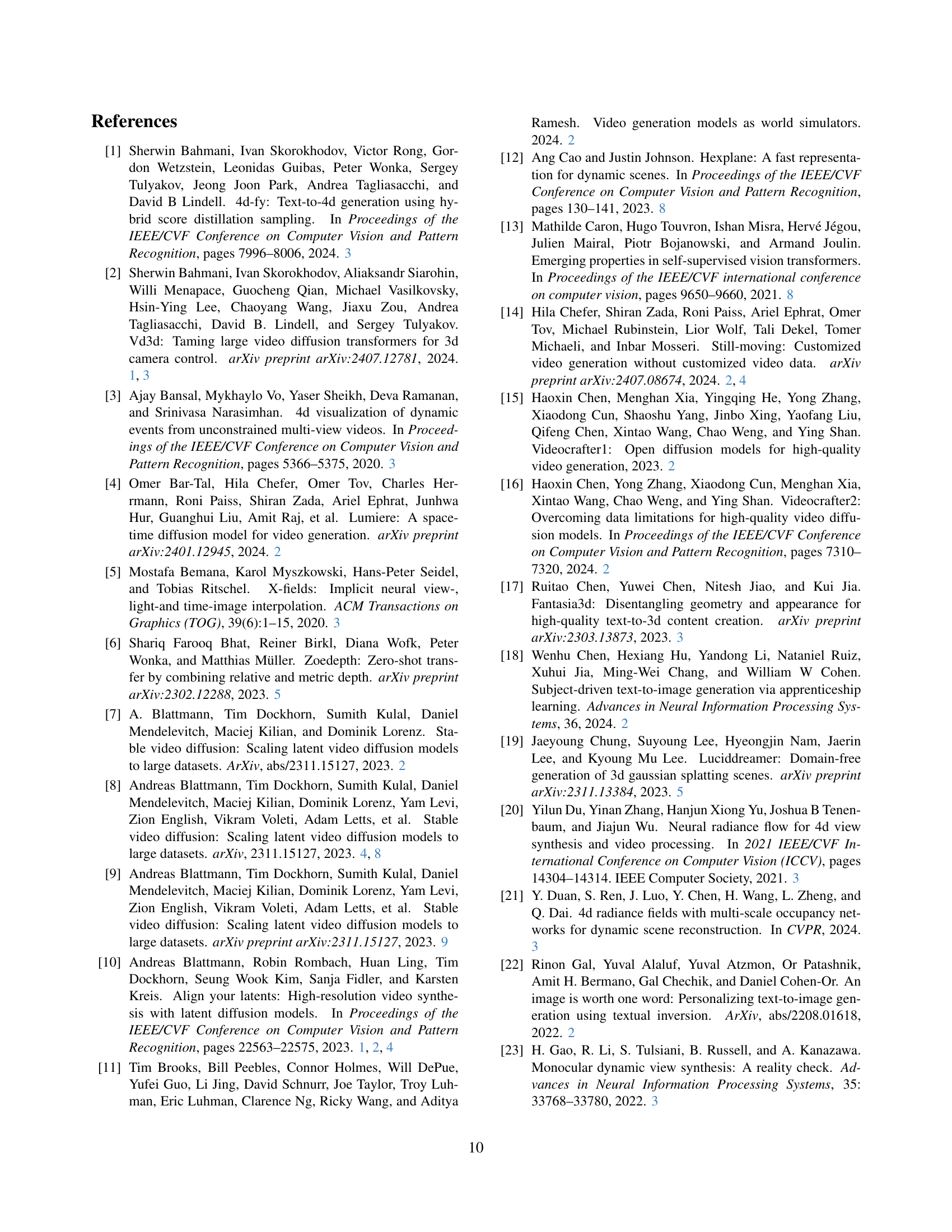

🔼 Table 2 presents a quantitative comparison of different methods for gradual dynamic view synthesis on the Kubric-4D dataset. The evaluation uses videos downsampled to a resolution of 384x256 pixels. The table compares the Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), and Learned Perceptual Image Patch Similarity (LPIPS) metrics. The results show that the proposed method outperforms existing reconstruction and generative methods in terms of these metrics, demonstrating its superior performance in generating high-quality videos with novel viewpoints.

read the caption

Table 2: Comparison results on Kubric-4D. We evaluate gradual dynamic view synthesis models following [82] to use video with resolution 384×256384256384\times 256384 × 256. Our method achieves superior performance compared to other reconstruction and generative methods.

| Models | Subject | |||||||

|---|---|---|---|---|---|---|---|---|

| Consistency | Background | |||||||

| Consistency | Temporal | |||||||

| Flickering | Motion | |||||||

| Smoothness | Dynamic | |||||||

| Degree | Aesthetic | |||||||

| Quality | Imaging | |||||||

| Quality | Object | |||||||

| Class | ||||||||

| Anchor Video | 82.41% | 77.45% | 64.50% | 74.27% | 49.72% | 34.94% | 55.90% | 79.82% |

| + Temporal LoRAs w/ Masks ) | 85.24% | 90.88% | 89.60% | 97.32% | 49.64% | 40.41% | 62.34% | 80.02% |

| ++ Spatial LoRAs) | 86.02% | 91.24% | 90.02% | 97.32% | 49.64% | 49.18% | 63.03% | 80.02% |

| +++ SD-Edit | 88.53% | 92.02% | 91.12% | 98.24% | 49.03% | 57.35% | 64.75% | 82.07% |

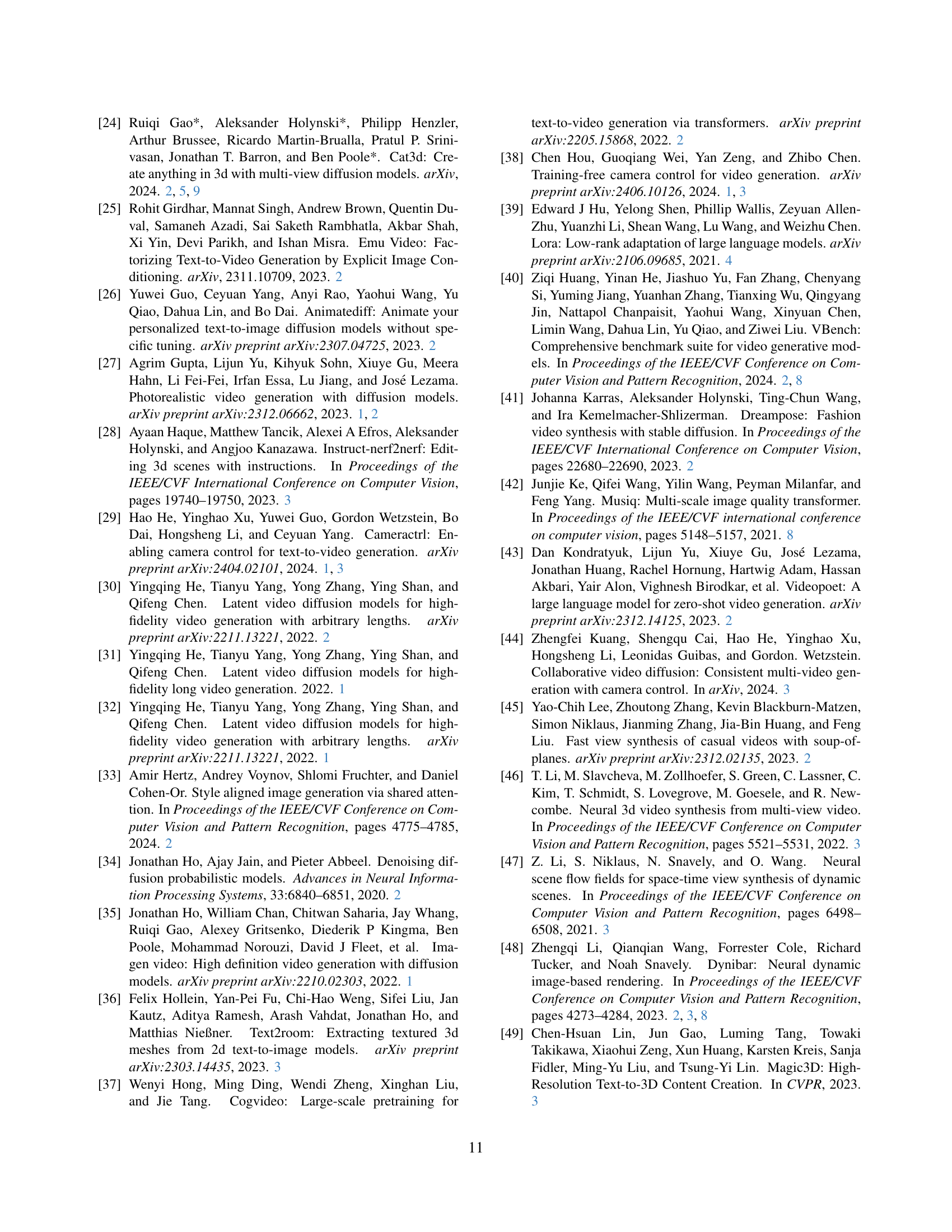

🔼 This table presents the results of ablation studies evaluating the impact of different components in the masked video fine-tuning stage of the ReCapture model. Three variations are compared: using only temporal LoRAs, adding spatial LoRAs to the temporal ones, and finally, applying SD-Edit post-processing to further reduce blurriness. The quantitative results are presented for various aspects of video quality, showing the cumulative improvement brought by each added component.

read the caption

Table 3: Ablation studies for each component of mask video diffusion finetuning: ’+ Temporal LoRAs’ applies temporal LoRAs solely for masked video finetuning. ’++ Spatial LoRAs’ introduces additional context-aware LoRAs, using both spatial and temporal LoRAs for finetuning. ’+++ SD-Edit’ involves applying SD-editing after completing training with both LoRAs for eliminating blurriness.

Full paper#