TL;DR

Controlling the style of generated images remains a challenge. Existing methods, such as example images or style-reference codes, are often cumbersome or limited in flexibility and shareability, and relying on text prompts alone for stylistic control can be inaccurate or restrictive.

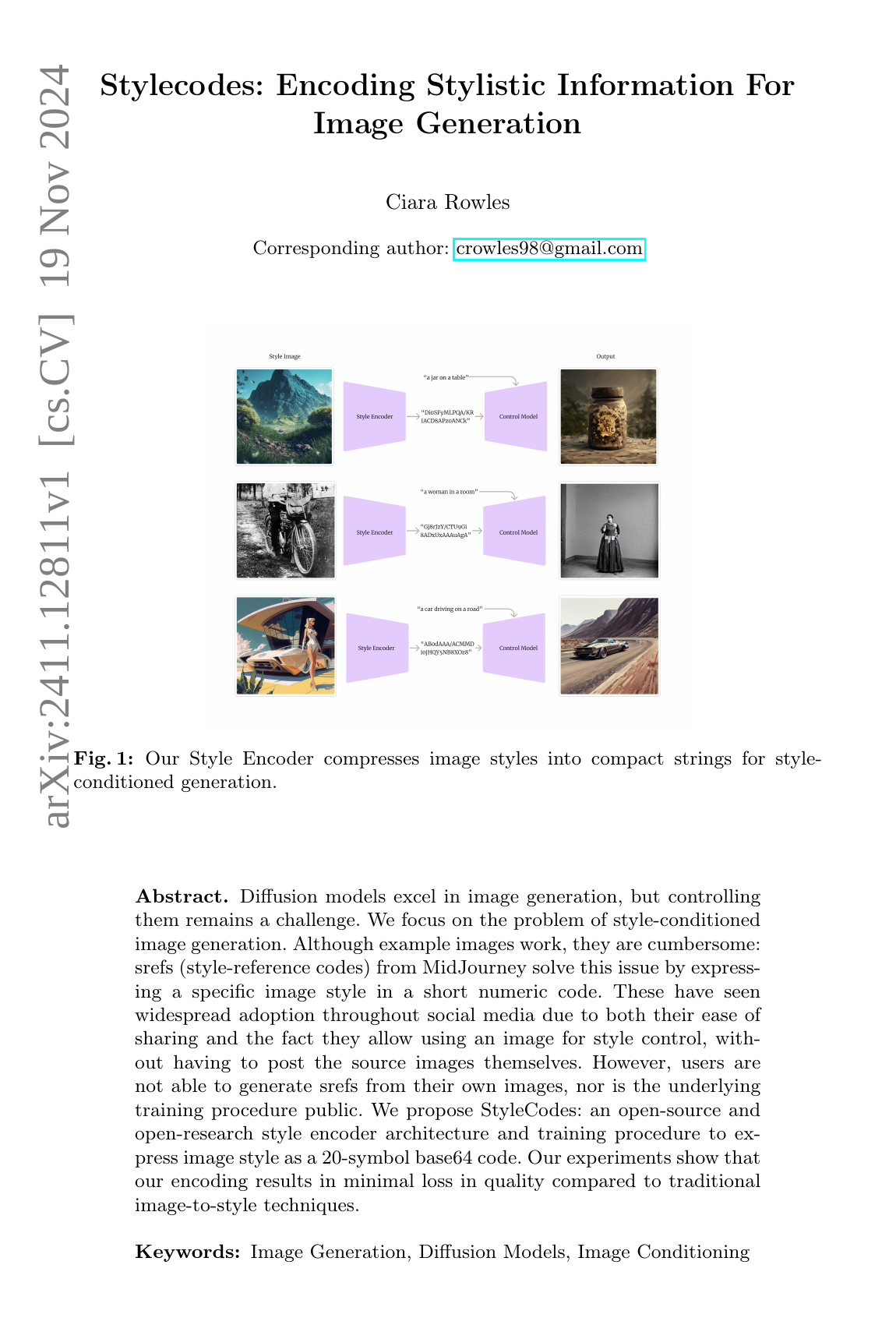

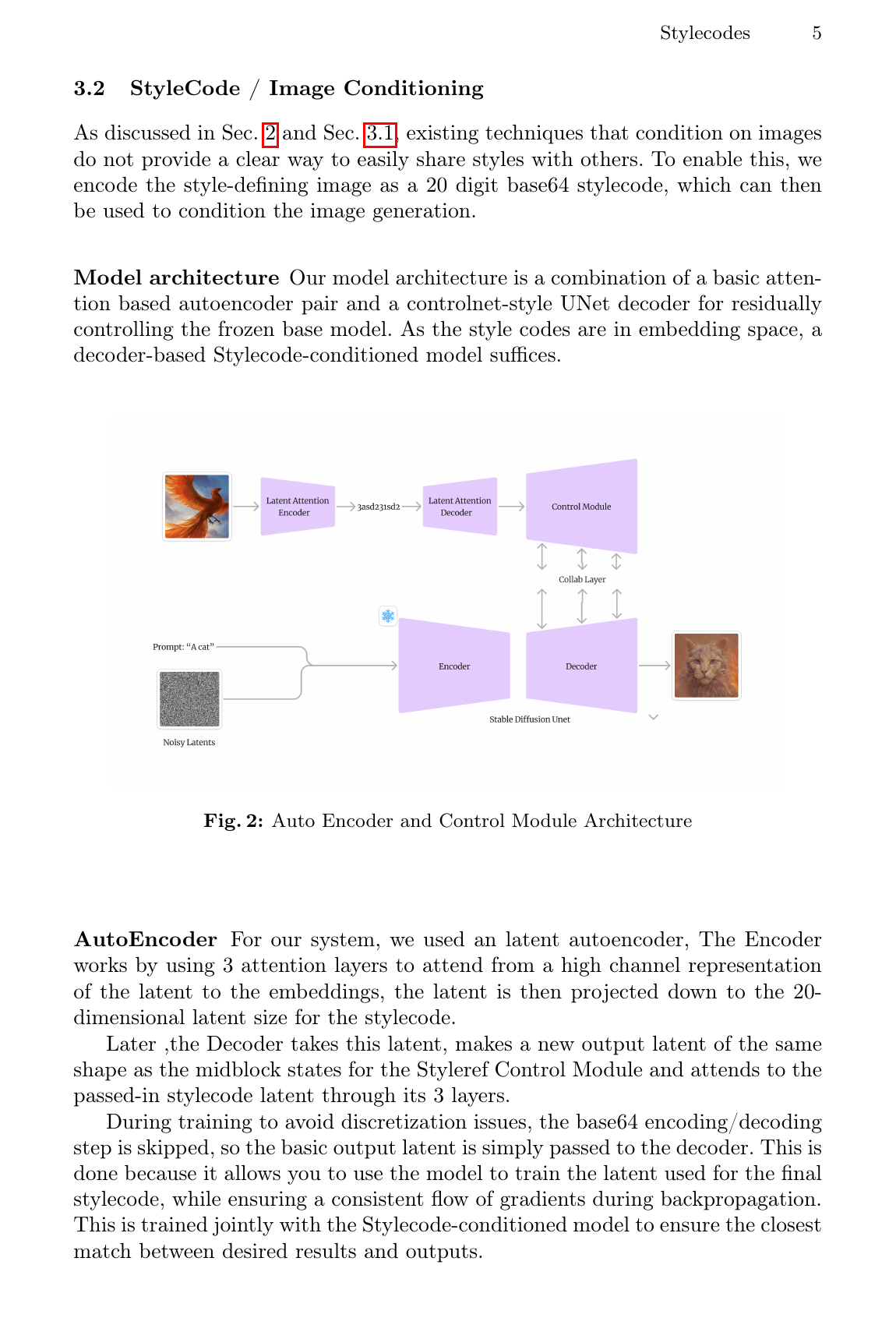

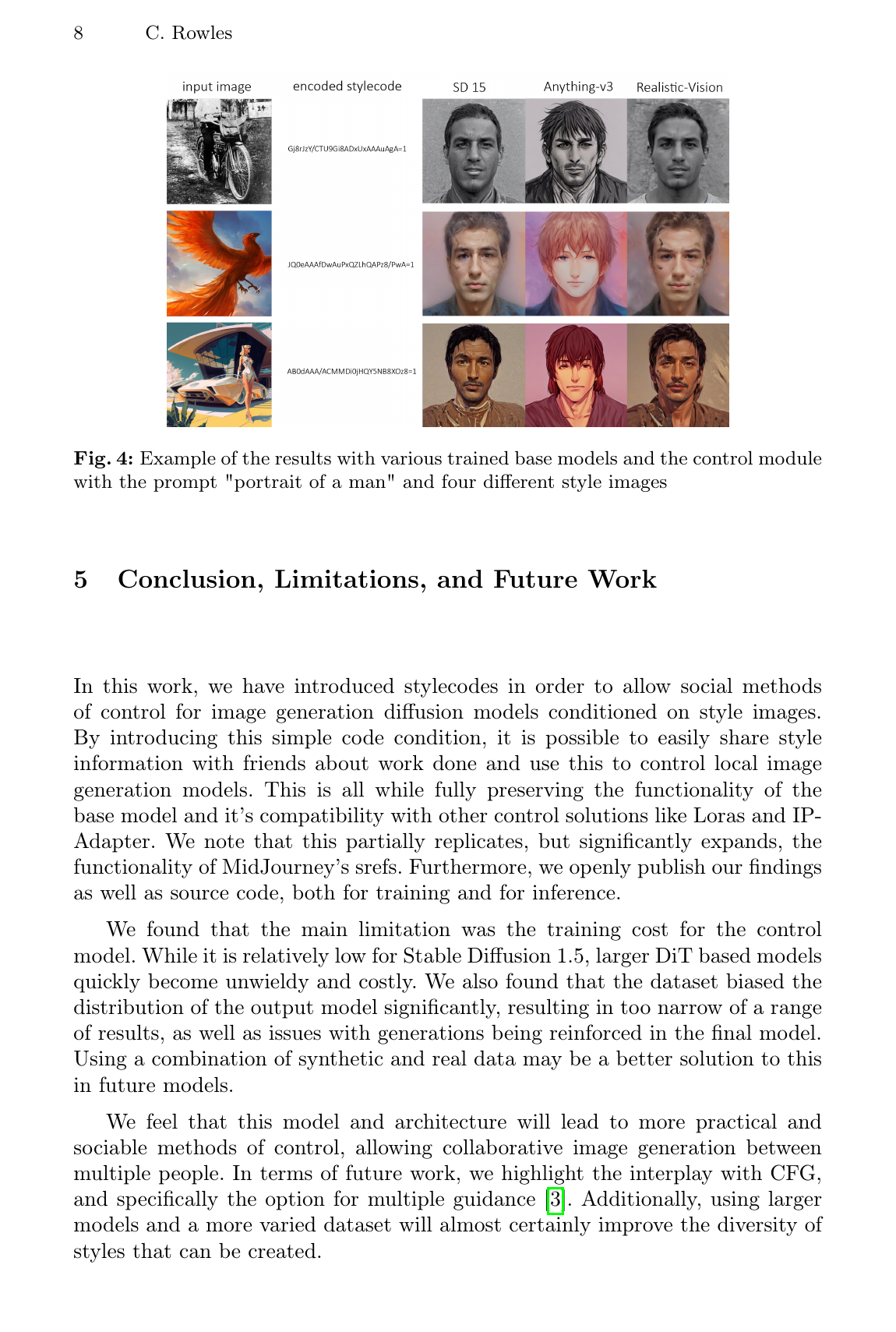

StyleCodes addresses this with a new style encoding method. It compresses an image's style into a short, shareable string (a 20-symbol base64 code), so a style can be shared and reused simply by passing the code along. The approach builds on an open-source autoencoder architecture and a modified UNet for style-conditioned image generation, and the authors show that the encoding incurs minimal quality loss compared to other techniques. The result is better control over style and easier collaboration in image generation.
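To make the size of such a code concrete: a base64 symbol carries 6 bits, so a 20-symbol code holds 20 × 6 = 120 bits. The sketch below is only an illustration of how a small style vector could be packed into a string of that length. It is not the paper's encoder: the 20-dimensional latent, the `[-1, 1]` value range, the uniform 64-level quantization, and the names `encode_style` and `decode_style` are all assumptions made for this example.

```python
import string

# Standard base64 alphabet: 64 symbols, so each symbol carries 6 bits.
ALPHABET = string.ascii_uppercase + string.ascii_lowercase + string.digits + "+/"
CODE_LENGTH = 20         # from the text: codes are 20 symbols long
LEVELS = len(ALPHABET)   # 64 quantization levels per symbol (assumption)


def encode_style(latent):
    """Map 20 floats in [-1, 1] to a 20-symbol string, one symbol per value.

    Hypothetical helper: the real StyleCodes encoder is a learned model.
    """
    if len(latent) != CODE_LENGTH:
        raise ValueError(f"expected {CODE_LENGTH} values, got {len(latent)}")
    symbols = []
    for value in latent:
        clipped = max(-1.0, min(1.0, value))
        # Rescale [-1, 1] to [0, 63] and snap to the nearest level.
        index = round((clipped + 1.0) / 2.0 * (LEVELS - 1))
        symbols.append(ALPHABET[index])
    return "".join(symbols)


def decode_style(code):
    """Invert encode_style up to quantization error (at most 1/63 per value)."""
    if len(code) != CODE_LENGTH:
        raise ValueError(f"expected {CODE_LENGTH} symbols, got {len(code)}")
    # str.index raises ValueError for symbols outside the alphabet.
    return [ALPHABET.index(ch) / (LEVELS - 1) * 2.0 - 1.0 for ch in code]


if __name__ == "__main__":
    latent = [0.1 * i - 1.0 for i in range(CODE_LENGTH)]
    code = encode_style(latent)
    print(code, len(code))
    print(decode_style(code)[:3])
```

In this toy scheme the string is all a recipient needs: decoding recovers an approximation of the latent, which a style-conditioned generator could then consume. The lossy step is the quantization, which is why the question of how much quality a short code costs matters.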

Key Takeaways

Why does it matter?

This paper introduces StyleCodes, a novel, open-source method for controlling the style of generated images. It addresses the limitations of existing image-based conditioning techniques by offering a simple, shareable way to represent and apply image styles, which opens new avenues for collaborative image generation and social sharing of style information. The work is relevant to current trends in AI art and style transfer, and its simplicity may lead to wider adoption by artists and researchers.

Visual Insights

Full paper