↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Large Language Models (LLMs) often prioritize speed over accuracy, especially in complex tasks like mathematical problem-solving. Existing methods to improve reasoning usually involve extensive and costly training data. This limits their practical application. The current research identifies the importance of ‘patient reasoning’ - allowing more time for the model to carefully consider a problem before giving a response - as a key factor impacting LLM performance.

The study proposes a novel training method to address this. Instead of creating new complex datasets, it uses a simple preference optimization approach. This involves training the model to favor more detailed reasoning steps by presenting examples of thorough solutions as ‘positive’ and concise solutions as ’negative’. The result demonstrates a substantial increase in performance in math problem-solving benchmarks, showing that the ‘patient’ LLM strategy is effective. This offers a more cost-effective way to improve LLM reasoning.

Key Takeaways#

Why does it matter?#

This paper is important because it offers a simple yet effective solution to improve large language models’ reasoning abilities. It challenges the common practice of prioritizing speed over accuracy in LLM inference, demonstrating that encouraging ‘patient’ reasoning through a novel training approach significantly enhances performance. This work opens new avenues for enhancing LLMs without relying on expensive, large-scale datasets, making it highly relevant to current research trends focusing on improving LLM reasoning and efficiency.

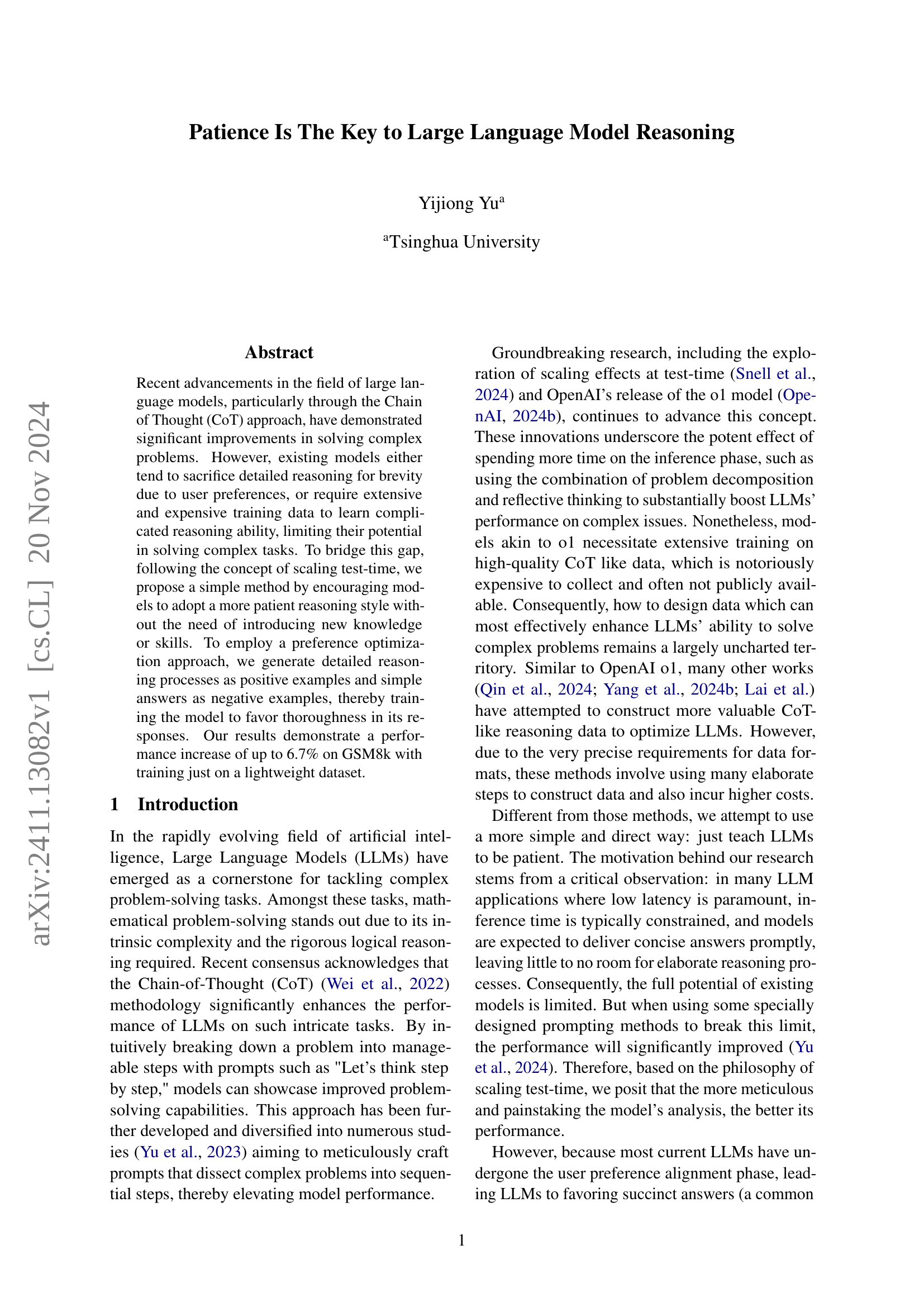

Visual Insights#

🔼 This figure illustrates the workflow of the proposed method. It starts with collecting mathematical problems and generating initial solutions using an LLM. These solutions are then refined by prompting the LLM to provide more detailed and patient reasoning steps, creating positive examples. Concurrently, the concise original solutions serve as negative examples. These positive and negative examples are used in a preference optimization process (DPO) to fine-tune a base language model. The ultimate goal is to train the model to favor more thorough and elaborate reasoning processes when solving problems.

read the caption

Figure 1: The overall process of our methods.

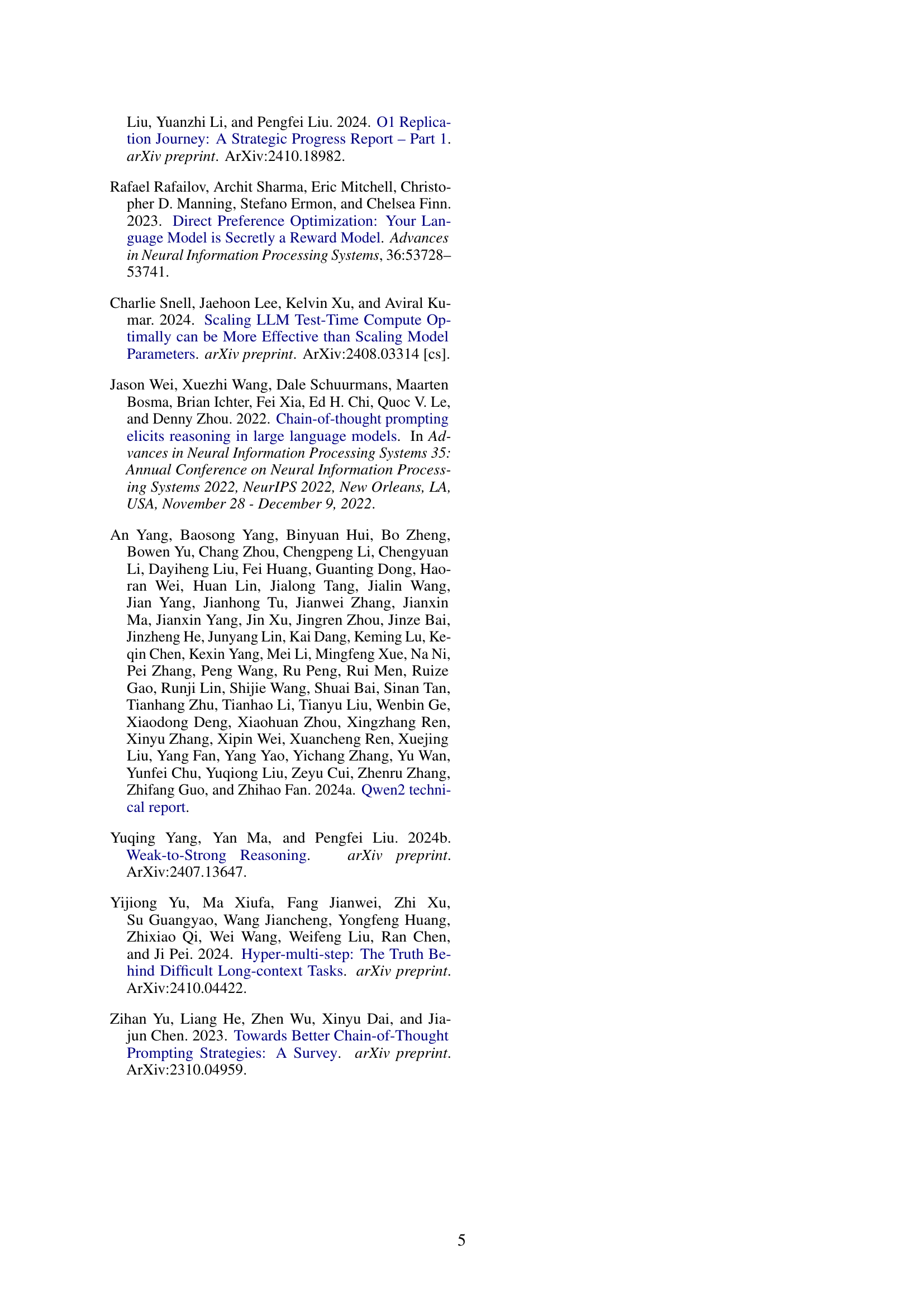

| Method | gsm8k | math | time |

|---|---|---|---|

| baseline | 81.2 | 48.8 | 7.2 |

| ours | 87.9 | 49.0 | 10.9 |

🔼 This table presents a comparison of the performance of a baseline language model and a model enhanced by the proposed method. The comparison is done across two benchmark datasets (gsm8k and MATH), showing both accuracy percentages and average inference time (in seconds). This allows for an evaluation of the trade-off between improved accuracy and increased processing time resulting from the optimization technique.

read the caption

Table 1: The accuracy (%) and the average time consumption (seconds) of the based model and the model optimized by out methods on gsm8k and MATH.

Full paper#