↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Current large language models (LLMs) struggle with open-ended reasoning tasks that lack clear evaluation standards. Many focus on domains with easily quantifiable success (math, coding), neglecting real-world applications. Existing LLMs often produce inaccurate or illogical answers when tackling complex, open-ended questions.

Marco-01 improves upon these limitations. It uses Chain-of-Thought (CoT) fine-tuning to enhance reasoning abilities. Monte Carlo Tree Search (MCTS) helps explore multiple reasoning paths, increasing accuracy. A novel reflection mechanism allows Marco-01 to self-correct errors and improve reasoning process. Experimental results on various datasets demonstrate Marco-01’s improved performance in open-ended reasoning and translation tasks, including handling of colloquial expressions.

Key Takeaways#

Why does it matter?#

This paper is important because it introduces Marco-01, a novel large reasoning model that significantly advances the state-of-the-art in open-ended problem-solving. Its innovative use of Chain-of-Thought prompting, Monte Carlo Tree Search, and a reflection mechanism addresses limitations of existing models in handling complex, real-world tasks. This work will inspire further research into more robust and versatile LLM architectures, especially those focused on complex reasoning and open-domain question answering.

Visual Insights#

🔼 This figure demonstrates the reasoning process of the Marco-01 model on a simple word problem: counting the occurrences of the letter ‘r’ in the word ‘strawberry’. The figure shows a four-step reasoning chain that starts with the question and ends with the correct answer. Each step involves a step-by-step analysis of the word to count and verify the number of ‘r’s. This example showcases the model’s ability to break down a problem into smaller, manageable steps before arriving at a solution.

read the caption

Figure 1: A classic ‘strawberry’ question reasoned by our Marco-o1 model: “How many ‘r’s are in ‘strawberry’.”

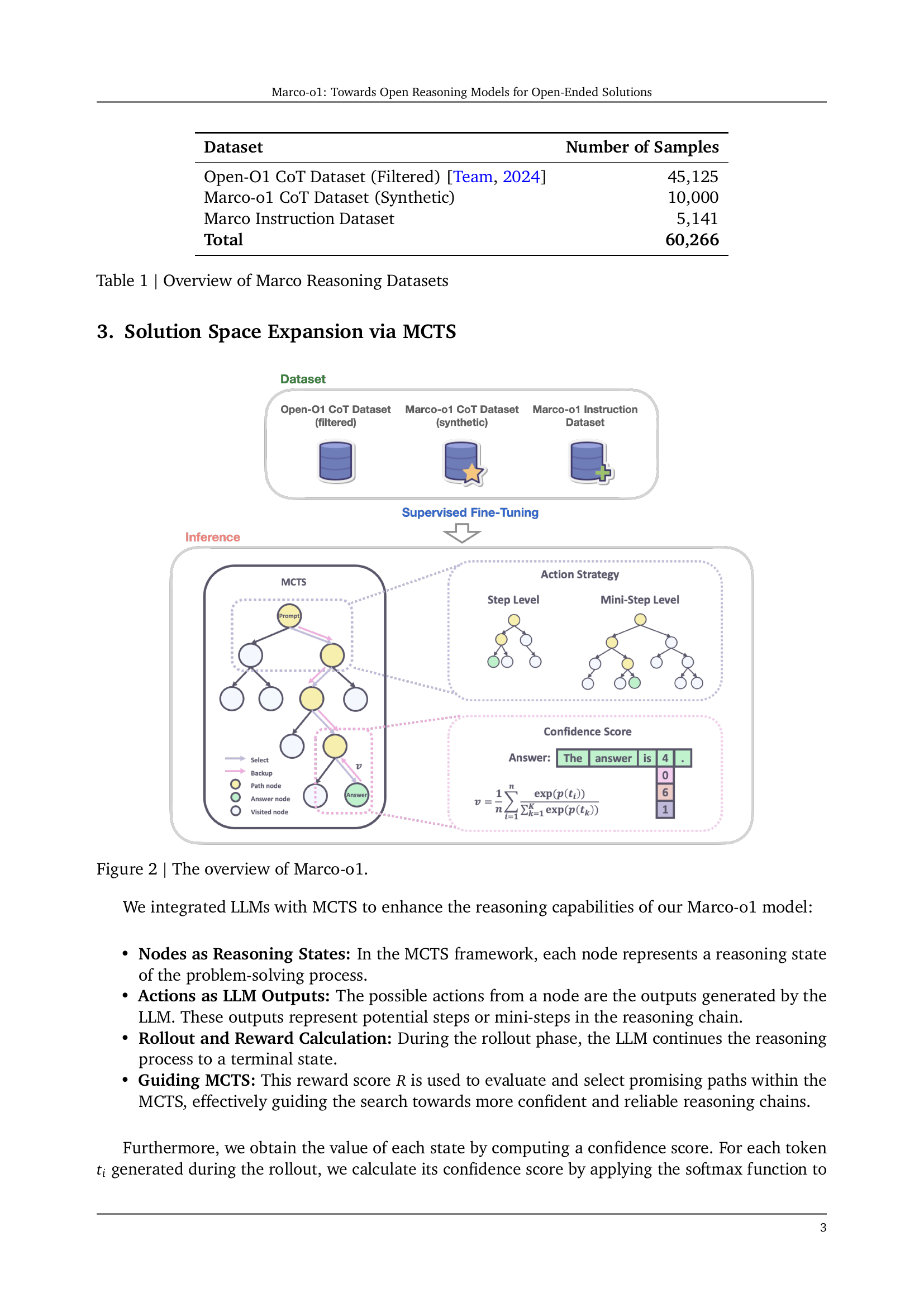

| Dataset | Number of Samples |

|---|---|

| Open-O1 CoT Dataset (Filtered) [Team, 2024] | 45,125 |

| Marco-o1 CoT Dataset (Synthetic) | 10,000 |

| Marco Instruction Dataset | 5,141 |

| Total | 60,266 |

🔼 This table presents a summary of the datasets used to train and enhance the Marco-01 reasoning model. It details the name of each dataset, its source (including citations where applicable), and the number of samples contained within. The datasets are categorized to highlight the various aspects of reasoning they focus on, such as Chain-of-Thought (CoT) reasoning, instructions, and synthetically generated data.

read the caption

Table 1: Overview of Marco Reasoning Datasets

In-depth insights#

Open-Ended Reasoning#

Open-ended reasoning presents a significant challenge in artificial intelligence, moving beyond the limitations of closed-domain question-answering systems. It necessitates models capable of handling ambiguity, uncertainty, and diverse solution paths, rather than converging on a single predetermined answer. This requires a paradigm shift from traditional methods reliant on pre-defined knowledge bases and rule-based systems to more flexible and adaptable approaches. Successful open-ended reasoning systems need to exhibit strong commonsense reasoning skills, the capacity for creative problem-solving, and robust self-reflection capabilities. They should also be able to handle nuanced language, manage conflicting information, and learn from experience to continually improve their performance. The development of such systems is crucial for advancing AI towards more human-like cognitive abilities and tackling complex real-world problems that demand creative and flexible solutions. Furthermore, a key challenge lies in evaluating the success of open-ended reasoning, as the absence of pre-defined ‘correct’ answers demands novel metrics and evaluation strategies. This demands the development of sophisticated benchmark datasets reflecting the nuances of open-ended tasks. Overall, open-ended reasoning represents a key frontier in AI research, requiring significant advancements in model architecture, training methodologies, and evaluation techniques.

MCTS Enhanced Search#

MCTS (Monte Carlo Tree Search) enhanced search, as described in the paper, significantly improves the reasoning capabilities of large language models (LLMs) by expanding the solution space. MCTS guides the search process by evaluating multiple reasoning paths using confidence scores derived from the LLM’s probability predictions. This allows the model to explore a wider range of possibilities and select the most promising paths, ultimately leading to more accurate and reliable solutions, particularly for complex or open-ended problems. The integration of MCTS with LLMs showcases a powerful synergy between probabilistic reasoning and directed search. The action strategy used within MCTS, whether at the step-level or finer mini-step level, critically impacts the effectiveness of the search. While the optimal granularity of actions might depend on problem complexity, the adoption of mini-steps demonstrates a capacity to find solutions previously missed by coarser-grained search. This adaptability is crucial for handling real-world scenarios, which are often characterized by multifaceted reasoning needs. In essence, MCTS enhanced search provides a powerful framework for augmenting LLMs’ inherent probabilistic nature with a goal-directed exploration mechanism, pushing the boundaries of what LLMs can achieve in complex reasoning tasks.

Action Granularity#

Action granularity, in the context of Monte Carlo Tree Search (MCTS) for large language models (LLMs), significantly impacts the efficiency and effectiveness of reasoning. Coarser granularity, such as using entire reasoning steps as actions, might overlook nuanced pathways crucial for complex problem-solving. Finer granularity, such as using smaller units (mini-steps) of tokens, allows for more detailed exploration of solution space, potentially leading to better accuracy. However, excessive fineness, like token-level granularity, becomes computationally expensive and might require complex reward models, thus hindering practical implementation. The optimal granularity is problem-dependent; simple problems may benefit from coarse-grained actions, while complex problems demand a finer approach. The trade-off between computational cost and the richness of the explored solution space necessitates careful consideration when determining the most suitable action granularity for an LLM’s MCTS-based reasoning system.

Translation Tasks#

The section on ‘Translation Tasks’ would explore the application of large reasoning models (LRMs) to machine translation, a novel area of research. It would likely demonstrate enhanced capabilities in handling nuanced language, such as slang and colloquialisms, going beyond the limitations of standard translation tools. The experiments might involve comparing the LRM’s performance against existing state-of-the-art translation models on benchmark datasets containing such challenging linguistic elements. A key finding could be the superior accuracy and naturalness of the LRM’s translations, especially for informal or idiomatic language. The discussion might delve into the model’s ability to understand context and cultural nuances to produce more faithful translations. Additionally, this section could analyze the inference-time scaling laws in multilingual and translation domains, exploring the trade-off between computational cost and translation quality. This analysis would be critical for determining the practicality and scalability of using LRMs for real-world translation applications. Finally, the section would likely conclude by highlighting the potential of LRMs to revolutionize machine translation by tackling its long-standing challenges related to handling complex linguistic phenomena and preserving subtle meanings.

Future of Marco-01#

The future of Marco-01 hinges on addressing its current limitations and capitalizing on its strengths. Improving reward modeling within the Monte Carlo Tree Search (MCTS) framework is crucial. More sophisticated reward functions, potentially incorporating aspects of both outcome and process, could significantly reduce the randomness observed in action selection. Integrating reinforcement learning techniques will be essential for further refining decision-making processes and enhancing Marco-01’s ability to handle complex, real-world problems. Expanding the datasets used for fine-tuning, particularly with more diverse and nuanced examples of reasoning, will broaden its generalizability. Exploring different model architectures, perhaps incorporating advanced techniques from other areas of AI, could yield substantial improvements. Finally, careful consideration of the ethical implications of increasingly powerful reasoning models, ensuring fairness and safety, will be paramount as Marco-01’s capabilities advance.

More visual insights#

More on figures

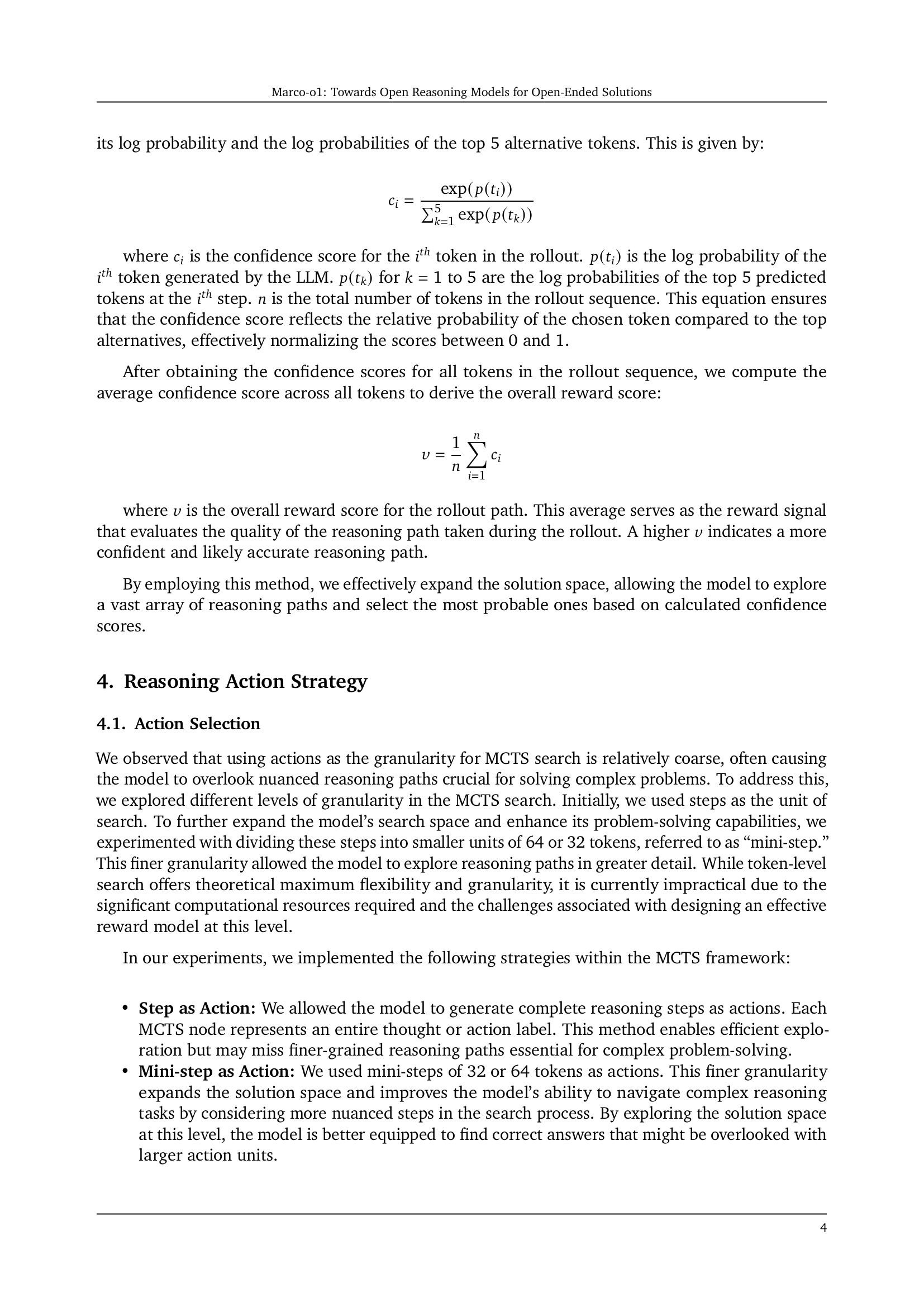

🔼 This figure illustrates the architecture of Marco-01, a reasoning model that enhances its capabilities by integrating LLMs with Monte Carlo Tree Search (MCTS). It shows how the model uses supervised fine-tuning on datasets including the filtered Open-01 CoT dataset, synthetic Marco-01 CoT data, and the Marco Instruction dataset. The MCTS component is highlighted, showing how it explores multiple reasoning paths using confidence scores derived from LLM outputs. The reasoning action strategy, employing both step-level and mini-step-level actions, is also depicted, along with the calculation of confidence scores to guide the search towards more effective and reliable reasoning chains.

read the caption

Figure 2: The overview of Marco-o1.

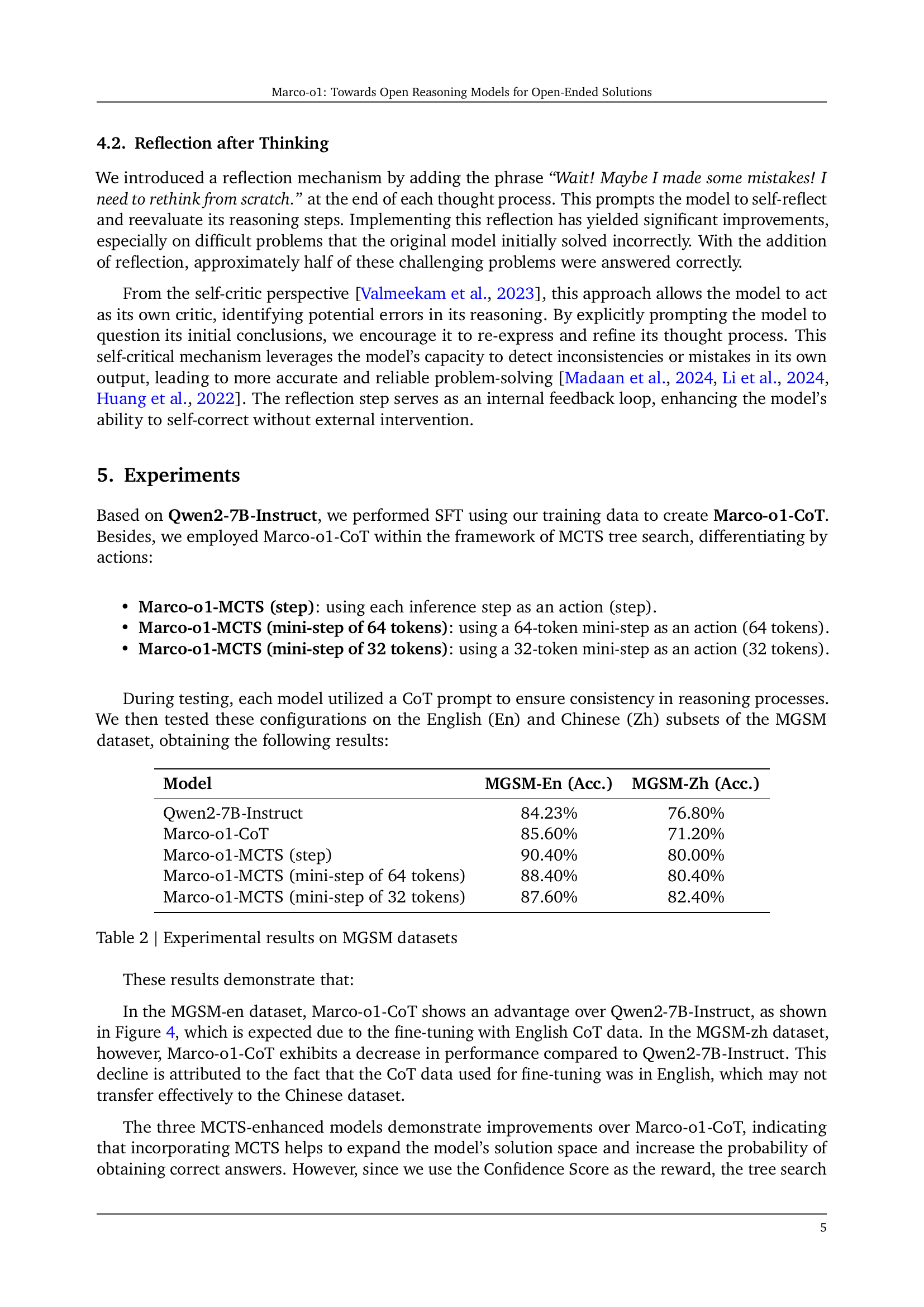

🔼 Figure 3 presents a bar chart comparison of the performance of different models on the MGSM benchmark dataset (English and Chinese). The models compared include Qwen2-7B-Instruct, Marco-01-CoT, Marco-01-MCTS (step), Marco-01-MCTS (mini-step of 64 tokens), and Marco-01-MCTS (mini-step of 32 tokens). The chart visually demonstrates the accuracy improvements achieved by incorporating various techniques such as Chain-of-Thought (CoT) fine-tuning and Monte Carlo Tree Search (MCTS) into the base model, Qwen2-7B-Instruct. The results highlight the effectiveness of the proposed methods in enhancing the reasoning capabilities of the Marco-01 model.

read the caption

Figure 3: The main results of Marco-o1.

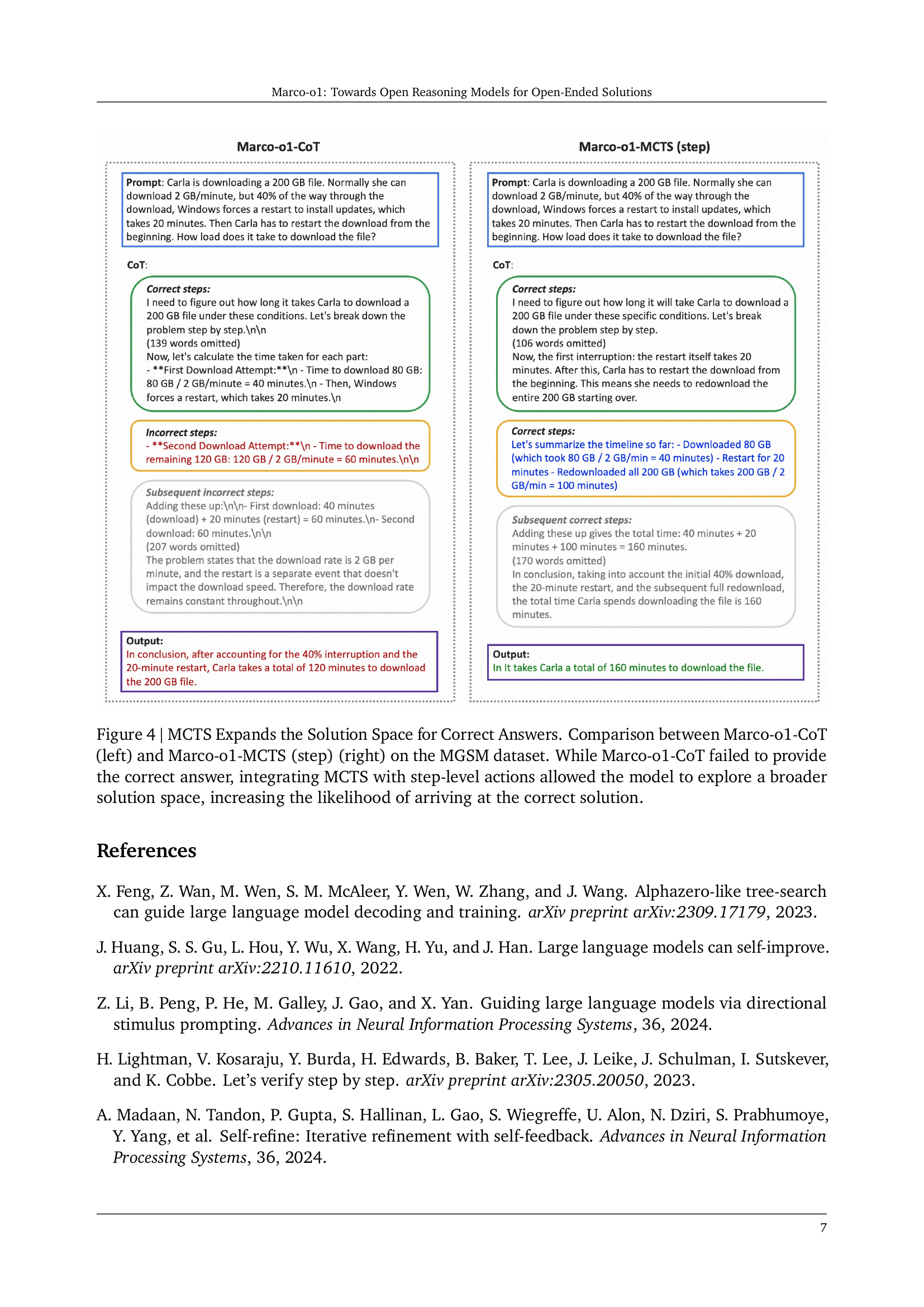

🔼 Figure 4 presents a comparative analysis of two model variations: Marco-01-CoT (without MCTS) and Marco-01-MCTS (with step-level MCTS integration), both applied to the MGSM dataset. The left side shows the reasoning process of Marco-01-CoT, which fails to reach the correct solution. The right side showcases Marco-01-MCTS successfully finding the correct answer. By using MCTS with step-level actions, the model effectively explores a much wider range of potential solution paths, significantly enhancing its chances of arriving at the correct solution, illustrating the benefit of MCTS in expanding the search space for complex problem-solving.

read the caption

Figure 4: MCTS Expands the Solution Space for Correct Answers. Comparison between Marco-o1-CoT (left) and Marco-o1-MCTS (step) (right) on the MGSM dataset. While Marco-o1-CoT failed to provide the correct answer, integrating MCTS with step-level actions allowed the model to explore a broader solution space, increasing the likelihood of arriving at the correct solution.

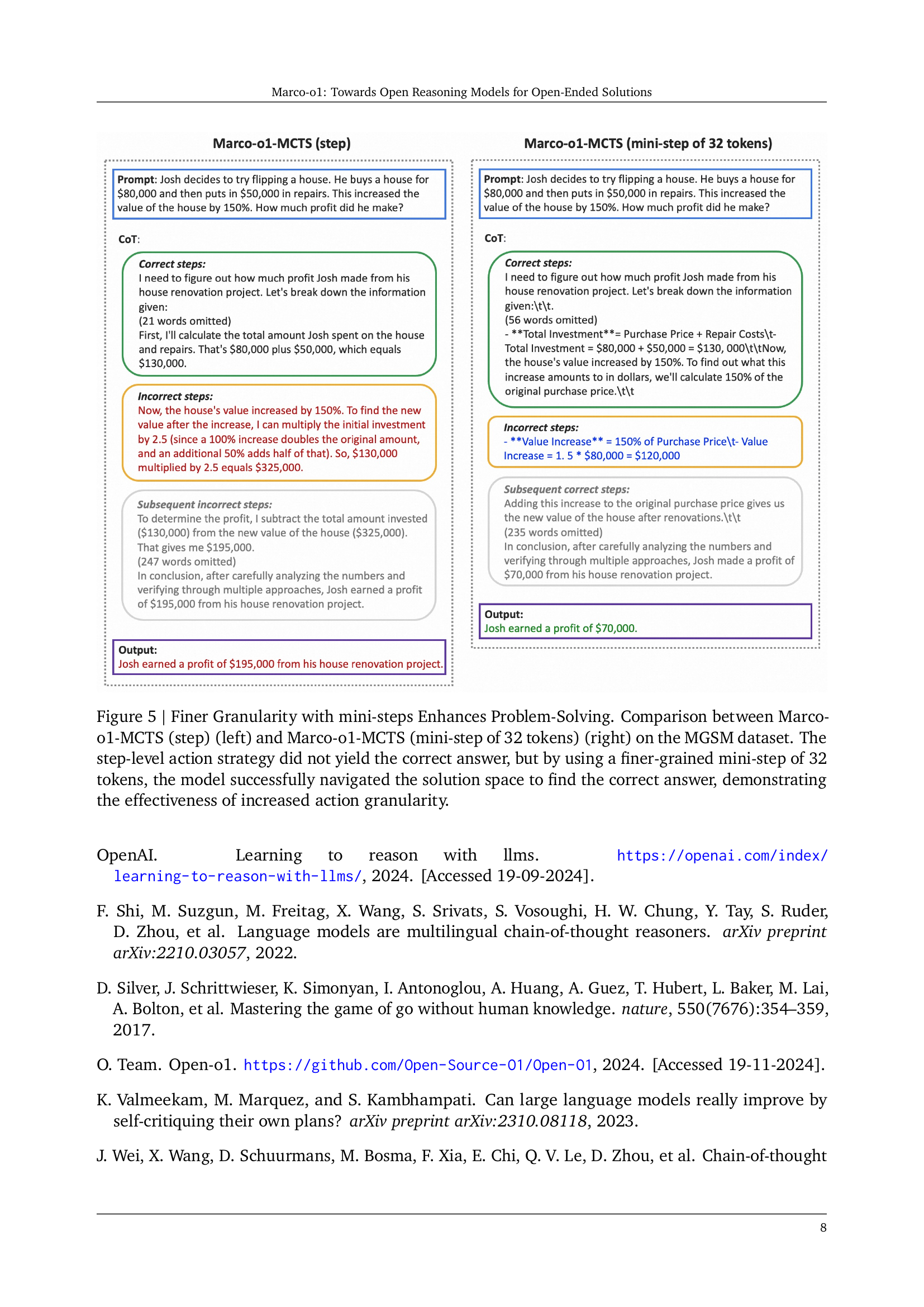

🔼 Figure 5 presents a detailed comparison of two approaches within the Marco-01-MCTS model, using different action granularities to solve problems in the MGSM dataset. The left side shows the results using a step-level action strategy (a coarser granularity), where the model failed to arrive at the correct solution. In contrast, the right side displays the results when employing a finer-grained mini-step strategy of 32 tokens, which successfully led to the correct answer. This visualization effectively demonstrates how increasing the action granularity (using smaller steps) significantly improves the model’s ability to navigate the solution space and find accurate solutions.

read the caption

Figure 5: Finer Granularity with mini-steps Enhances Problem-Solving. Comparison between Marco-o1-MCTS (step) (left) and Marco-o1-MCTS (mini-step of 32 tokens) (right) on the MGSM dataset. The step-level action strategy did not yield the correct answer, but by using a finer-grained mini-step of 32 tokens, the model successfully navigated the solution space to find the correct answer, demonstrating the effectiveness of increased action granularity.

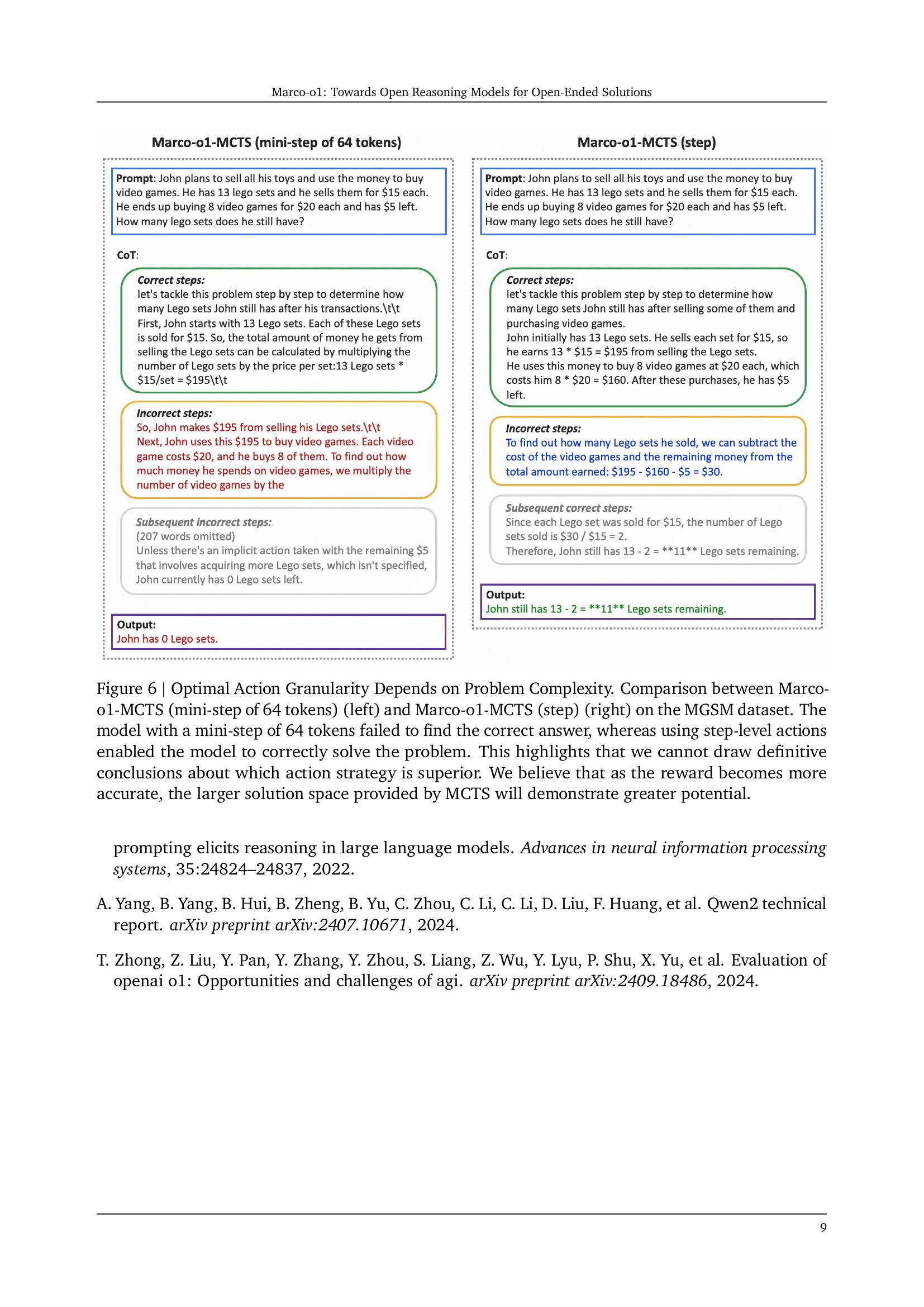

🔼 Figure 6 demonstrates how the optimal granularity of actions within the Monte Carlo Tree Search (MCTS) algorithm depends on the complexity of the problem. The figure presents a comparison between two versions of the Marco-01 model using MCTS. One version uses mini-steps of 64 tokens as actions, while the other uses steps as actions. The results on the MGSM dataset show that for this particular problem, the step-level actions led to the correct answer, while the mini-step approach failed. This highlights that there’s no universally superior action granularity; the best choice depends on the problem’s complexity. The authors suggest that more accurate reward signals within MCTS would likely lead to better performance with the finer-grained mini-step approach, due to the larger solution space that it explores.

read the caption

Figure 6: Optimal Action Granularity Depends on Problem Complexity. Comparison between Marco-o1-MCTS (mini-step of 64 tokens) (left) and Marco-o1-MCTS (step) (right) on the MGSM dataset. The model with a mini-step of 64 tokens failed to find the correct answer, whereas using step-level actions enabled the model to correctly solve the problem. This highlights that we cannot draw definitive conclusions about which action strategy is superior. We believe that as the reward becomes more accurate, the larger solution space provided by MCTS will demonstrate greater potential.

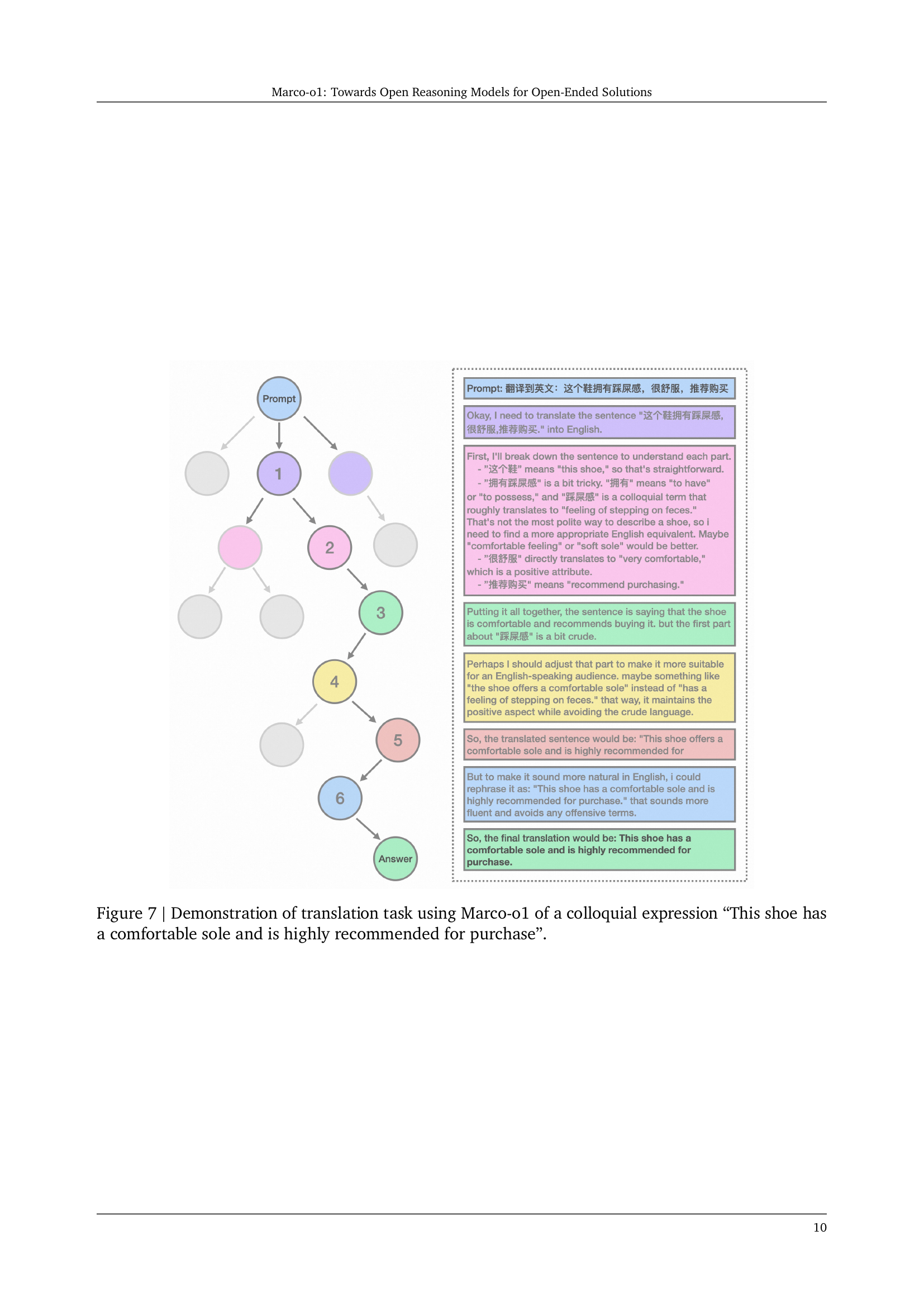

🔼 Figure 7 showcases Marco-01’s translation capabilities by translating the Chinese colloquial expression ‘这个鞋拥有踩屎感,很舒服,推荐购买’ which literally translates to ‘This shoe has a feeling of stepping on feces, very comfortable, recommended to buy.’ The model demonstrates its ability to understand nuanced language and cultural context, translating the phrase into the more natural and appropriate English equivalent: ‘This shoe has a comfortable sole and is highly recommended for purchase.’ This highlights Marco-01’s superior grasp of colloquialisms and its ability to produce accurate and fluent translations.

read the caption

Figure 7: Demonstration of translation task using Marco-o1 of a colloquial expression “This shoe has a comfortable sole and is highly recommended for purchase”.

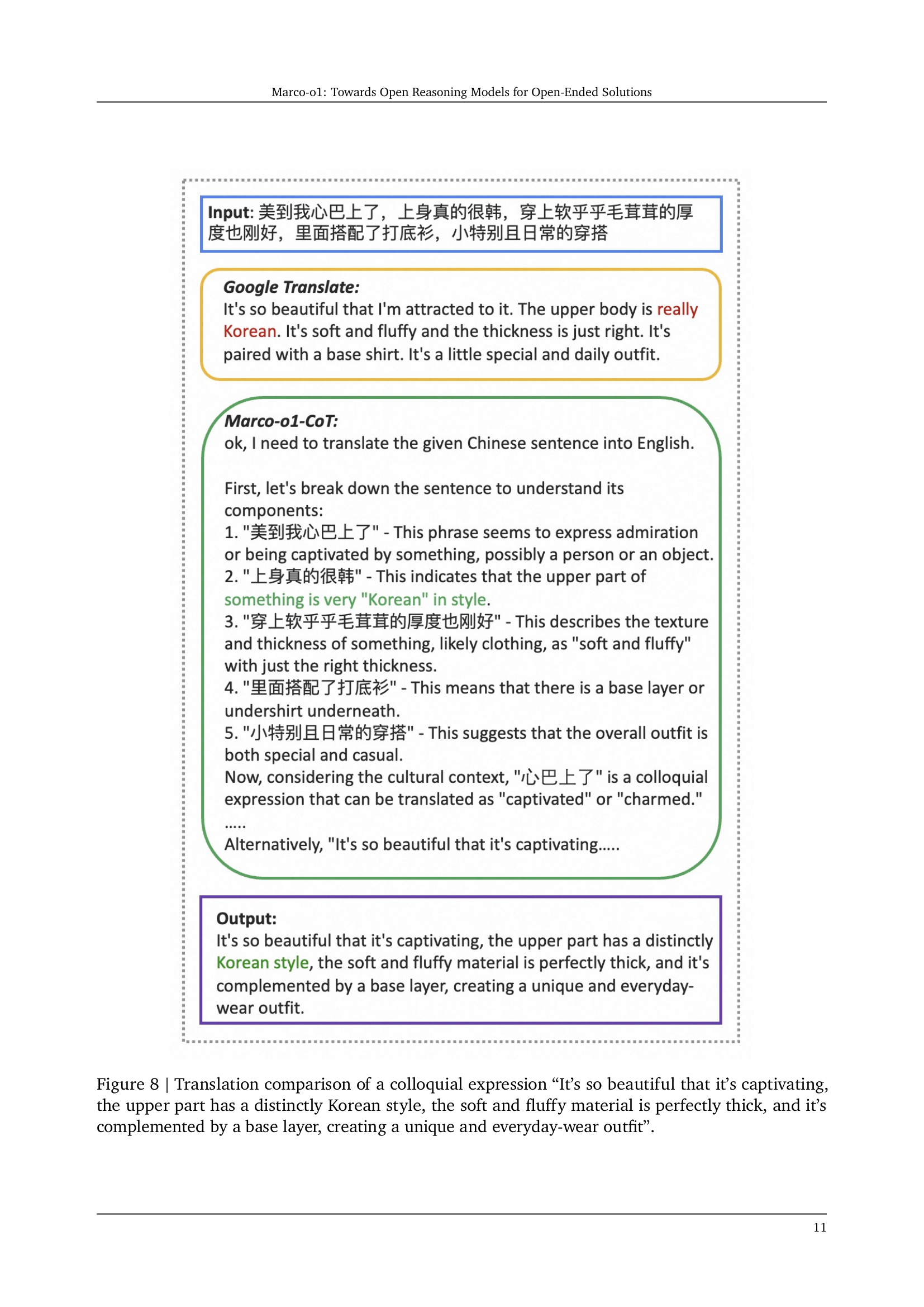

🔼 Figure 8 presents a comparison of how Google Translate and the Marco-01 model translate a colloquial Chinese sentence. The Chinese sentence describes clothing, highlighting its beauty, Korean style, soft texture, appropriate thickness, and layering. The comparison shows Marco-01’s more nuanced translation, capturing the original text’s descriptive style and cultural references better than Google Translate’s more literal approach.

read the caption

Figure 8: Translation comparison of a colloquial expression “It’s so beautiful that it’s captivating, the upper part has a distinctly Korean style, the soft and fluffy material is perfectly thick, and it’s complemented by a base layer, creating a unique and everyday-wear outfit”.

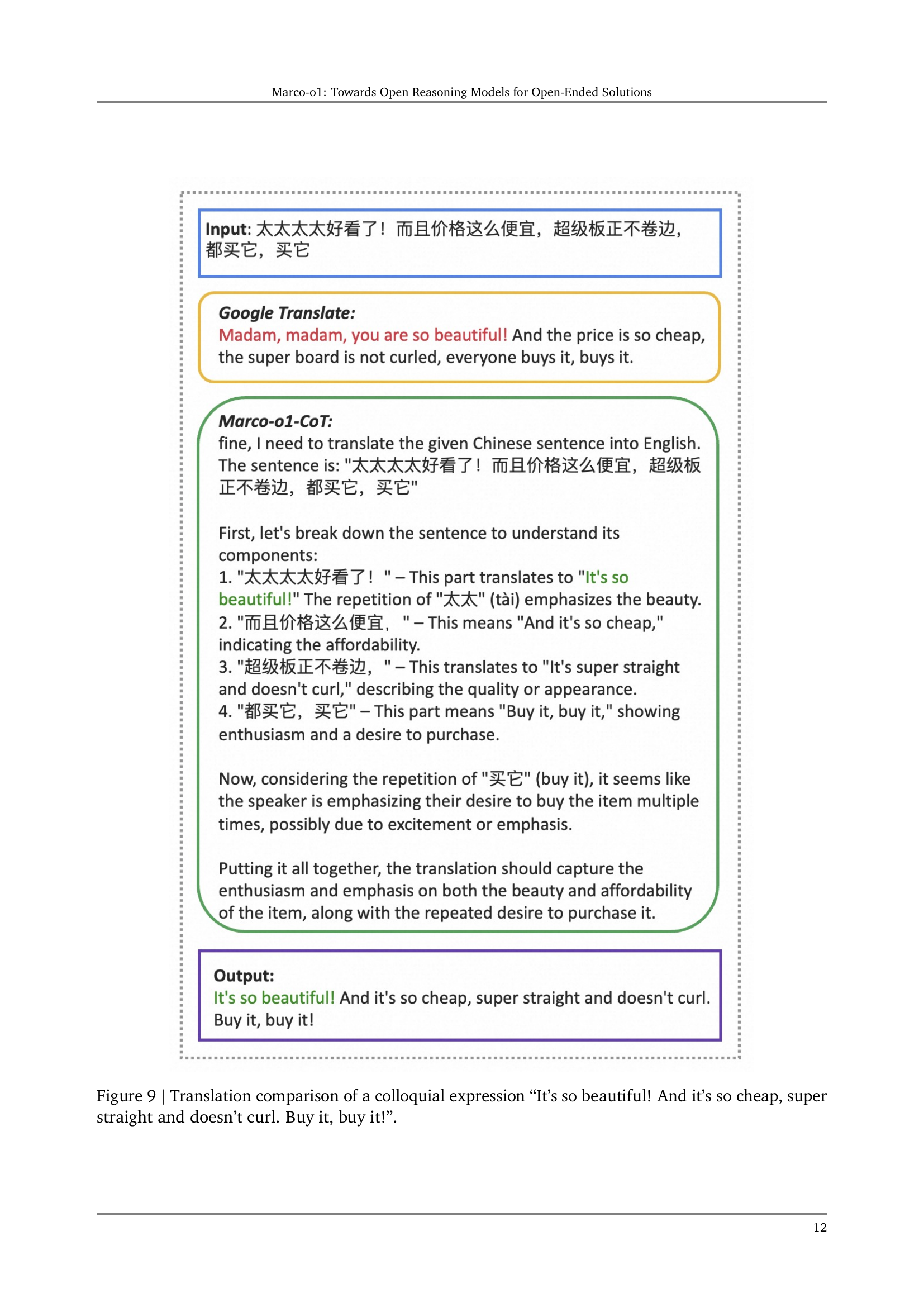

🔼 Figure 9 shows a comparison of how Google Translate and the Marco-01 model translate the Chinese colloquial expression ‘太太太太好看了!而且价格这么便宜,超级板正不卷边,都买它,买它.’ The Marco-01 model’s translation, ‘It’s so beautiful! And it’s so cheap, super straight and doesn’t curl. Buy it, buy it!’ more accurately captures the enthusiastic and colloquial tone of the original Chinese.

read the caption

Figure 9: Translation comparison of a colloquial expression “It’s so beautiful! And it’s so cheap, super straight and doesn’t curl. Buy it, buy it!”.

Full paper#