
TL;DR#

Accurately predicting how a person’s face ages is difficult because aging results from a complex interplay of genetic, lifestyle, and environmental factors. Current global aging methods often produce plausible but inaccurate results: they capture average aging trends but fail to reproduce an individual’s unique aging pattern. This paper addresses these shortcomings with MyTimeMachine, a method that learns an accurate personalized aging model from a limited number of personal photos.

MyTimeMachine combines a global aging prior with a novel adapter network that refines the global age transformation using personalized characteristics learned from the user’s photos. Three custom loss functions improve the realism, accuracy, and consistency of the results. Experiments show that MyTimeMachine outperforms competing methods, especially in reproducing a person’s actual appearance at different ages, and that the re-aging effect extends to videos. The technique is effective with only about 50 photos, which makes it practical for real-world applications.


Why does it matter?#

This paper matters because it presents a novel approach to personalized facial age transformation, a challenging problem with applications in entertainment, forensics, and social media. Its ability to produce high-quality results from a limited number of personal photos opens new research directions in personalized image generation and manipulation. Its evaluation against a broad set of baselines, including personalized variants of existing methods, also provides useful insights for future work in this area.

Visual Insights#

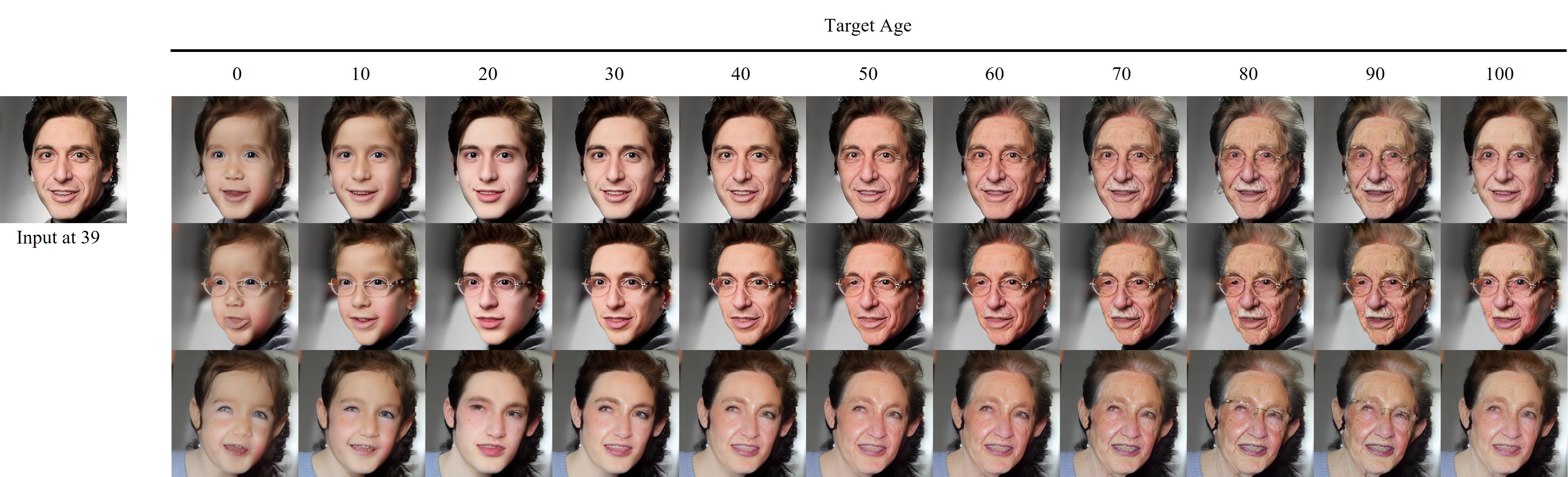

This figure demonstrates the MyTimeMachine model’s ability to perform both age regression and progression using a personalized approach. The top row showcases the model’s age regression capabilities, taking an older image as input and generating a younger, realistic version while preserving the individual’s identity. The bottom row illustrates the age progression function, starting with a younger image and producing an older, realistic representation. In both cases, the model is trained on a relatively small number of personal photos (approximately 50) spanning 20-40 years. The re-aged faces generated by MyTimeMachine are designed to closely resemble the individual’s actual appearance at the target age, representing an improvement over existing general-purpose age transformation techniques.


Figure 1: We introduce MyTimeMachine to perform personalized age regression (top) and progression (bottom) by training a person-specific aging model from a few (∼50) personal photos spanning a 20-40 year range. Our method outperforms existing age transformation techniques to generate re-aged faces that closely resemble the characteristic facial appearance of the user at the target age.

| Method | AgeMAE (↓) | IDsim (↑), a_tgt ≤ 70 | IDsim (↑), a_tgt ∈ 50~70 | IDsim (↑), a_tgt ∈ 30~70 |
|---|---|---|---|---|
| SAM [2] | 8.1 | 0.49 | 0.58 | 0.53 |
| + Pers. f.t. (50~70) | 8.2 | 0.48 | 0.58 | - |
| + Pers. f.t. (30~70) | 9.2 | 0.49 | - | 0.53 |
| CUSP [14] | 11.0 | 0.39 | 0.44 | 0.42 |
| AgeTransGAN [17] | 11.1 | 0.53 | 0.65 | 0.58 |
| FADING [7] | 8.9 | 0.60 | 0.72 | 0.66 |
| + Dreambooth [49] (50~70) | 25.9 | 0.63 | 0.78 | - |
| + Dreambooth [49] (30~70) | 23.0 | 0.64 | - | 0.70 |
| Ours (50~70) | 7.7 | 0.65 | 0.76 | - |
| Ours (30~70) | 7.8 | 0.67 | - | 0.72 |

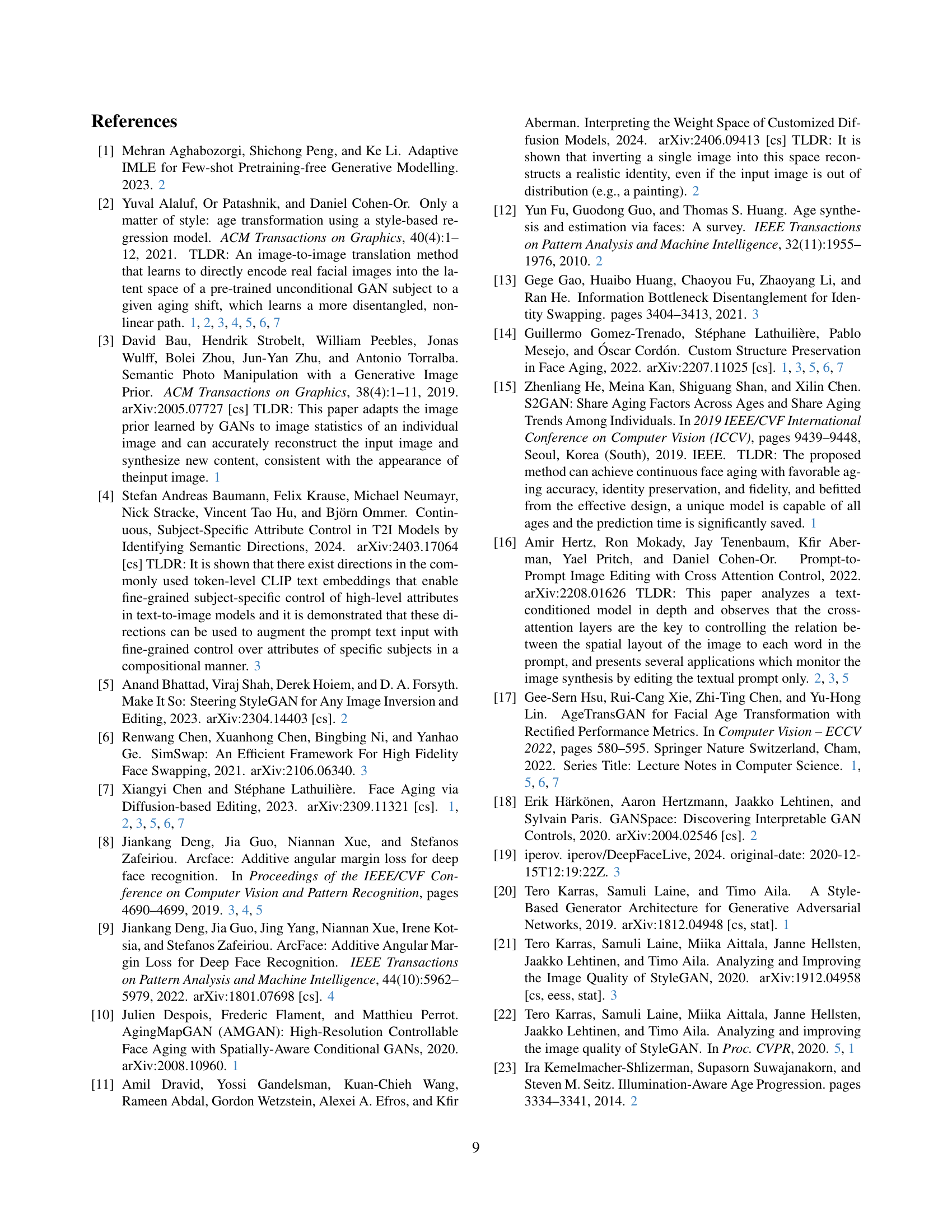

This table presents a quantitative comparison of facial age transformation methods on an age regression task: de-aging a 70-year-old input face to various target ages of 70 or below. The comparison covers state-of-the-art methods (SAM, CUSP, AgeTransGAN, FADING), personalized variants of two of them (SAM with personalized fine-tuning and FADING with Dreambooth), and two versions of the proposed MyTimeMachine trained on personal photo collections spanning 20-year and 40-year ranges. The metrics are Age Mean Absolute Error (AgeMAE), the average gap between the estimated age of the re-aged face and the target age, and Identity Similarity (IDsim), which indicates how well the re-aged face retains the identity of the original individual. The best result for each metric is shown in bold and the second-best is underlined.


Table 1: Performance of age regression where an input test image at 70 years old is de-aged to a target age $a_{\text{tgt}} \leq 70$. We also evaluate MyTM (Ours) using 20-year ($a_{\text{tgt}} \in 50 \sim 70$) and 40-year ($a_{\text{tgt}} \in 30 \sim 70$) age ranges in the training data. Bold indicates the best results, while underlined denotes the second-best.
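The two metrics in Table 1 can be sketched as follows. This is a minimal illustration that assumes an age estimator and a face-recognition network have already produced per-image age predictions and identity embeddings; which networks the paper uses is not stated in this summary, and the function names are hypothetical. It also assumes IDsim is a cosine similarity between identity embeddings, the usual choice for this kind of metric.

```python
import numpy as np


def age_mae(estimated_ages, target_ages):
    """Mean absolute error (years) between the age estimated on each re-aged face and its target age."""
    est = np.asarray(estimated_ages, dtype=float)
    tgt = np.asarray(target_ages, dtype=float)
    return float(np.mean(np.abs(est - tgt)))


def id_similarity(output_embeddings, reference_embeddings):
    """Mean cosine similarity between paired identity embeddings (row i of each array is one pair)."""
    a = np.asarray(output_embeddings, dtype=float)
    b = np.asarray(reference_embeddings, dtype=float)
    # Normalize each embedding to unit length so the row-wise dot product is a cosine.
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return float(np.mean(np.sum(a * b, axis=1)))


# Toy numbers for illustration only; these are not results from the paper.
print(age_mae([32.0, 48.0, 61.0], [30, 50, 60]))  # ~1.67
print(id_similarity([[1.0, 0.0], [0.6, 0.8]], [[1.0, 0.0], [1.0, 0.0]]))  # ~0.8
```

Lower AgeMAE means the output looks closer to the requested age; higher IDsim means the output still looks like the same person. The two can pull against each other, which is why Table 1 reports both.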

In-depth insights#

Personalized Aging#

“Personalized aging” is a significant advance over traditional facial age transformation methods. Existing techniques typically rely on global aging models trained on large datasets, so the re-aged faces they produce lack individual-specific characteristics. Personalized aging addresses this limitation by incorporating individual-specific data, typically a collection of personal photographs spanning a wide age range, to build an aging model tailored to one person. The challenge lies in combining this personal data with global aging knowledge without overfitting, so that the model also generalizes to ages outside the training data. MyTimeMachine does this with an adapter network that modifies global features with personalized adjustments, together with loss functions chosen to balance identity preservation and age realism. Realistic, identity-preserving re-aging across many ages has applications in film and television, forensic analysis, and personal reminiscence tools. Its success depends on the availability of enough high-quality personal photos and on algorithms robust to the complexity of individual aging patterns.
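Assembling the personal photo collection is the first practical step. The helper below is hypothetical and is not the paper’s procedure, which this summary does not describe: it draws about 50 photos (the default training size reported in Table 3) spread across 5-year age bins using round-robin sampling, so that no single period of life dominates the training set. The bin width and random seed are arbitrary assumptions.

```python
import random
from collections import defaultdict


def select_training_photos(photos, n_total=50, bin_width=5, seed=0):
    """Pick up to n_total (path, age) pairs spread evenly across age bins.

    photos: list of (path, age_in_years) tuples. Hypothetical helper for illustration.
    """
    rng = random.Random(seed)
    bins = defaultdict(list)
    for path, age in photos:
        bins[int(age) // bin_width].append((path, age))
    for items in bins.values():
        rng.shuffle(items)  # random order within each age bin

    selected = []
    keys = sorted(bins)
    # Round-robin over age bins: take one photo per non-empty bin per pass,
    # so sparse periods of life are fully represented before dense ones fill up.
    while len(selected) < n_total and any(bins[k] for k in keys):
        for k in keys:
            if bins[k] and len(selected) < n_total:
                selected.append(bins[k].pop())
    return selected


# 200 synthetic photos with ages 30-69 (placeholder file names).
photos = [(f"img_{i:03d}.jpg", 30 + i % 40) for i in range(200)]
subset = select_training_photos(photos)
print(len(subset))  # 50
```

If one age bin has few photos, all of them are kept and the remaining budget goes to better-covered bins, which keeps the collection spanning the widest possible age range.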

Adapter Network#

The core idea of the adapter network is to personalize a pre-trained global aging model. Instead of training a new model from scratch for each individual, which would be data-intensive and computationally expensive, the adapter acts as a personalization layer on top of the global model. It takes the latent code produced by the global age encoder (SAM) and adjusts it using information learned from the person’s own photos. As a result, the output reflects the individual’s unique aging characteristics and is more accurate and realistic than the output of a generic aging model alone. The adapter is trained with custom loss functions designed to maintain identity consistency, to reflect age progression accurately across a range of years, and to prevent overfitting to the limited personal data. Combining a global model with a personalized adapter is a novel approach to personalized facial age transformation: it keeps the benefits of large-scale training data while producing highly individual results.
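A minimal NumPy sketch of a residual latent adapter follows, assuming the adapter maps a W+ code of shape (layers, 512) plus a target age to a corrected code. The class name, the two-layer MLP, the age normalization, and the zero-initialized output layer are all illustrative assumptions, not the paper’s architecture. Zero initialization makes the untrained adapter an identity map, so training starts from the global prior and only has to learn a personal correction.

```python
import numpy as np


class LatentAdapter:
    """Hypothetical residual adapter on W+ latent codes: w_pers = w_global + f([w_global, age]).

    The same small MLP is applied to every row (style layer) of the W+ code.
    """

    def __init__(self, latent_dim=512, hidden_dim=256, seed=0):
        rng = np.random.default_rng(seed)
        in_dim = latent_dim + 1  # latent vector plus the normalized target age
        self.W1 = rng.normal(0.0, 1.0 / np.sqrt(in_dim), size=(in_dim, hidden_dim))
        self.b1 = np.zeros(hidden_dim)
        # Zero-initialized output layer: the adapter starts as the identity map,
        # so personalization begins exactly at the global aging prior.
        self.W2 = np.zeros((hidden_dim, latent_dim))
        self.b2 = np.zeros(latent_dim)

    def __call__(self, w_global, target_age):
        """w_global: (n_layers, latent_dim) code from the global age encoder."""
        n_layers = w_global.shape[0]
        age = np.full((n_layers, 1), target_age / 100.0)  # crude age normalization
        x = np.concatenate([w_global, age], axis=1)
        h = np.maximum(x @ self.W1 + self.b1, 0.0)  # ReLU hidden layer
        delta = h @ self.W2 + self.b2  # personalized residual correction
        return w_global + delta


# Random stand-in for a W+ code (18 x 512 matches a 1024x1024 StyleGAN2 generator).
w_global = np.random.default_rng(1).normal(size=(18, 512))
adapter = LatentAdapter()
print(np.allclose(adapter(w_global, 30), w_global))  # True before any training
```

Predicting a residual rather than a new code keeps the adapter’s job small: when personal data says nothing useful, the output stays close to what the global encoder produced.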

Loss Function Design#

The authors address personalized facial age transformation with a three-part loss. The personalized aging loss targets the core problem of reflecting an individual’s actual appearance at a given age: rather than relying on a global average aging model, it compares generated images with real images from the individual’s photo collection at a similar age. This term prioritizes identity preservation and accuracy at the target age. To counter overfitting to limited personal data and to generalize to unseen ages, the authors add extrapolation regularization, which encourages the output to stay aligned with the pre-trained global aging model when the target age lies outside the training range, balancing personalized and global aging. Finally, adaptive w-norm regularization addresses the trade-off between the fidelity of generated images and their editability, a well-known tension when inverting into and editing StyleGAN2’s latent space. By adjusting the regularization strength according to the age difference between the input and target ages, the model avoids overfitting to the training data while keeping the expressiveness needed for convincing age transformations.
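The three terms can be sketched in NumPy as below. These are simplified, hypothetical versions written for illustration: the personalized aging loss uses identity-embedding similarity only, the ±3-year matching window and all weights are assumptions, and the linear age-gap schedule in `adaptive_w_norm` is a placeholder, since this summary does not state the paper’s exact schedule or even its direction (a negative `scale` reverses it).

```python
import numpy as np


def _cosine(a, b):
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def personalized_aging_loss(gen_emb, personal_embs, personal_ages, target_age, window=3):
    """1 - mean identity similarity to personal photos within +/- window years of target_age."""
    refs = [e for e, a in zip(personal_embs, personal_ages) if abs(a - target_age) <= window]
    if not refs:
        return 0.0  # no personal photo near this age, so the term is inactive
    return 1.0 - float(np.mean([_cosine(gen_emb, e) for e in refs]))


def extrapolation_regularization(w_pers, w_global, target_age, train_range):
    """Penalize drift from the global prior's latent when target_age is outside the training range."""
    lo, hi = train_range
    if lo <= target_age <= hi:
        return 0.0
    return float(np.mean((np.asarray(w_pers) - np.asarray(w_global)) ** 2))


def adaptive_w_norm(w, w_avg, input_age, target_age, base_weight=1.0, scale=0.01):
    """Squared-L2 w-norm penalty whose weight depends on the age gap (assumed linear schedule)."""
    gap = abs(target_age - input_age)
    weight = base_weight * max(0.0, 1.0 + scale * gap)  # scale < 0 gives the opposite schedule
    diff = np.asarray(w) - np.asarray(w_avg)
    return weight * float(np.mean(np.sum(diff ** 2, axis=-1)))


def total_loss(terms, lambdas):
    """Weighted sum of named loss terms (the weights are hypothetical hyperparameters)."""
    return sum(lambdas[name] * value for name, value in terms.items())
```

In training, the personalized term would apply to target ages covered by the personal photos, the extrapolation term to ages outside that range, and the w-norm term to every sample.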

Video Age Transfer#

Extending personalized re-aging from single images to video adds a new requirement: temporal consistency. Re-aging each frame independently can cause flicker, because nothing forces the per-frame outputs to agree with one another. A robust video age transfer method must therefore produce smooth, identity-preserving results across frames. The approach used here re-ages a single keyframe and transfers the result to the remaining frames by face-swapping; this works well for transferring aged features but requires careful keyframe selection and robust alignment to prevent artifacts. Because the video result is only as good as the single-image re-aging of the keyframe, improvements to single-image methods carry over directly. Further research could learn the temporal dynamics of aging directly, for example with recurrent neural networks or other temporal models, for more natural results. The quality and quantity of training data, particularly longitudinal video with consistent identity and lighting, also play a vital role. Handling changes in facial features, hair, and pose over time will further improve the realism of video age transfer. Ethical considerations remain paramount, as video age transfer can have significant social implications.
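The keyframe-plus-face-swap pipeline can be sketched as a higher-order function. Here `reage_fn`, `face_swap_fn`, and `keyframe_score_fn` are hypothetical callables standing in for the personalized re-aging model, a face-swapping model, and a keyframe-selection heuristic; choosing the most frontal face is an assumed criterion, not one stated in the source.

```python
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")
Face = TypeVar("Face")


def reage_video(
    frames: Sequence[Frame],
    reage_fn: Callable[[Frame], Face],
    face_swap_fn: Callable[[Face, Frame], Frame],
    keyframe_score_fn: Callable[[Frame], float],
) -> List[Frame]:
    """Re-age one keyframe, then face-swap the result onto every frame."""
    if not frames:
        return []
    # Pick the highest-scoring frame (e.g. the most frontal, well-lit face) as the keyframe.
    key_idx = max(range(len(frames)), key=lambda i: keyframe_score_fn(frames[i]))
    aged_face = reage_fn(frames[key_idx])  # the personalized re-aging model runs only once
    # Every output frame receives the same re-aged appearance; per-frame pose and
    # expression are left to the face-swapping model.
    return [face_swap_fn(aged_face, frame) for frame in frames]


# Toy frames: "yaw" is head rotation in degrees; the most frontal frame (t=1) becomes the keyframe.
frames = [{"t": 0, "yaw": 25.0}, {"t": 1, "yaw": 3.0}, {"t": 2, "yaw": -12.0}]
out = reage_video(
    frames,
    reage_fn=lambda f: f"aged_face_from_t{f['t']}",
    face_swap_fn=lambda face, f: {**f, "face": face},
    keyframe_score_fn=lambda f: -abs(f["yaw"]),
)
print([o["face"] for o in out])
```

Because the re-aging model runs once, all frames share one re-aged identity, and temporal smoothness then depends on the face-swapping step rather than on the re-aging model.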

Future Directions#

Future research could improve the model’s robustness to imaging conditions such as lighting and pose, and its generalization across ethnicities, improving overall accuracy. Better handling of accessories such as glasses and of changes in hairstyle would also help. Investigating ways to mitigate bias and the ethical concerns raised by age transformation is crucial. Other important directions include fine-grained control over specific aging features and better temporal consistency in video re-aging. Exploring architectures beyond StyleGAN2, such as diffusion models with improved inversion and editability, could unlock new capabilities. Finally, larger and more diverse high-quality longitudinal datasets spanning multiple ethnicities and genders would improve training data availability and model performance.

More visual insights#

More on figures

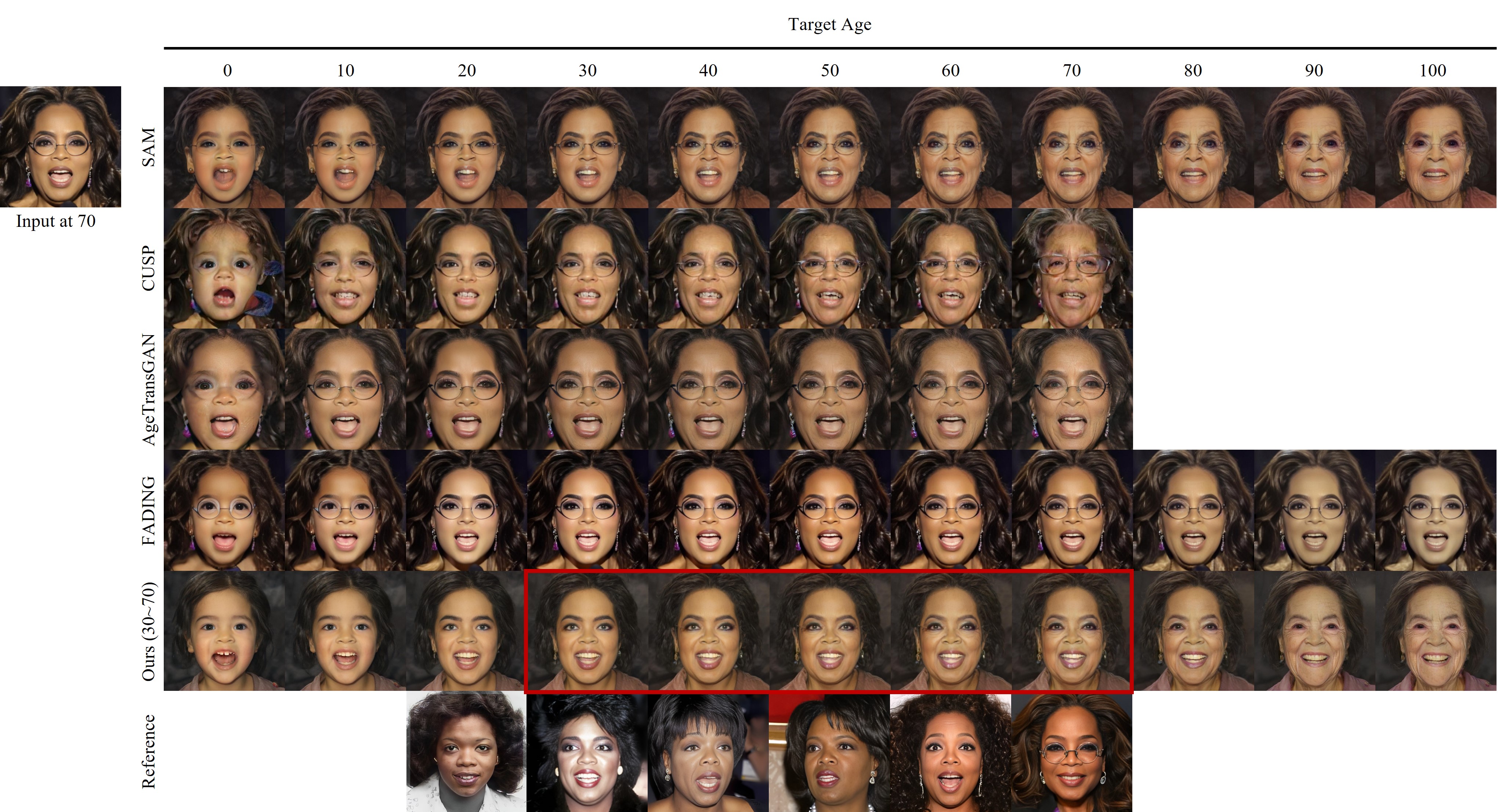

This figure shows the results of the MyTimeMachine model on an image of Oprah Winfrey. The input is a 70-year-old image. The model de-ages the image so that Oprah appears to be approximately 30, while maintaining the style and identity of the original image. This is achieved by training a personalized model on around 50 images of the same person at different ages, combined with a pre-trained global aging model (SAM). The adapter network adjusts the latent code produced by SAM using personalized aging features, resulting in accurate and consistent re-aging across a range of target ages, both within and outside the training age range.


Figure 2: Given an input face of Oprah Winfrey at 70 years old, our adapter re-ages her face to resemble her appearance at 30, while preserving the style of the input image. To achieve personalized re-aging, we collect ∼50 images of an individual across different ages and train an adapter network that updates the latent code generated by the global age encoder SAM. Our adapter preserves identity during interpolation when the target age falls within the range of ages seen in the training data, while also extrapolating well to unseen ages.

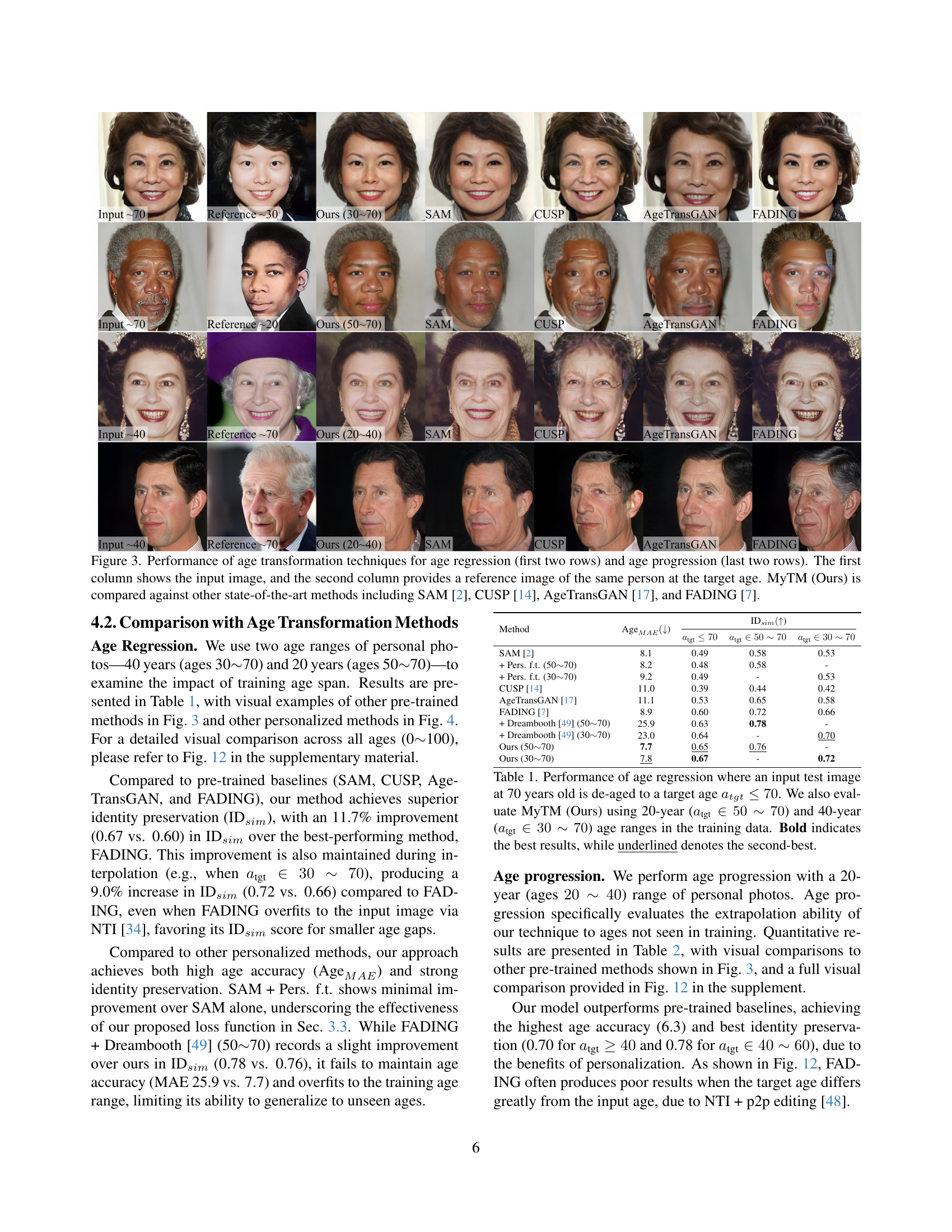

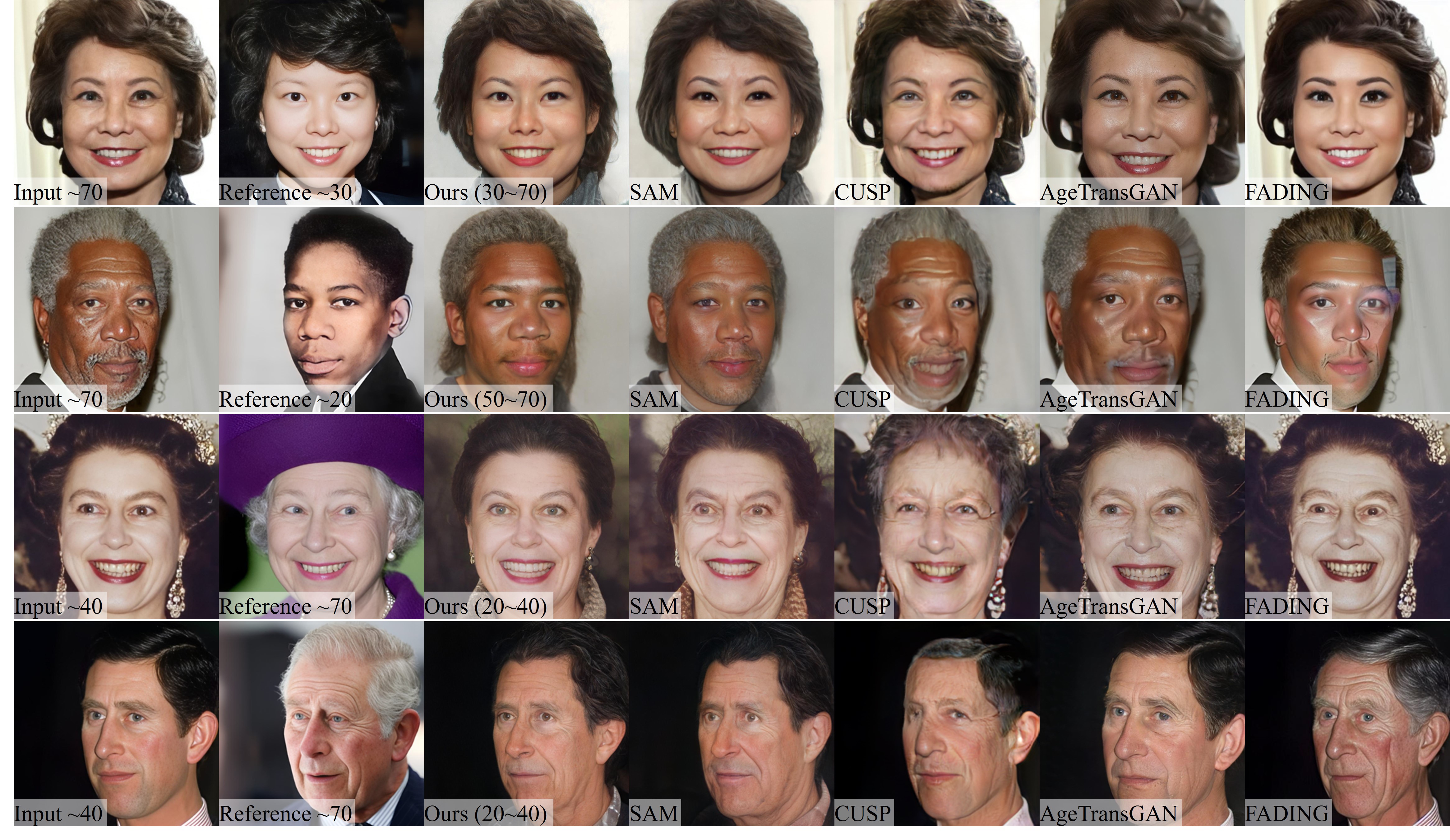

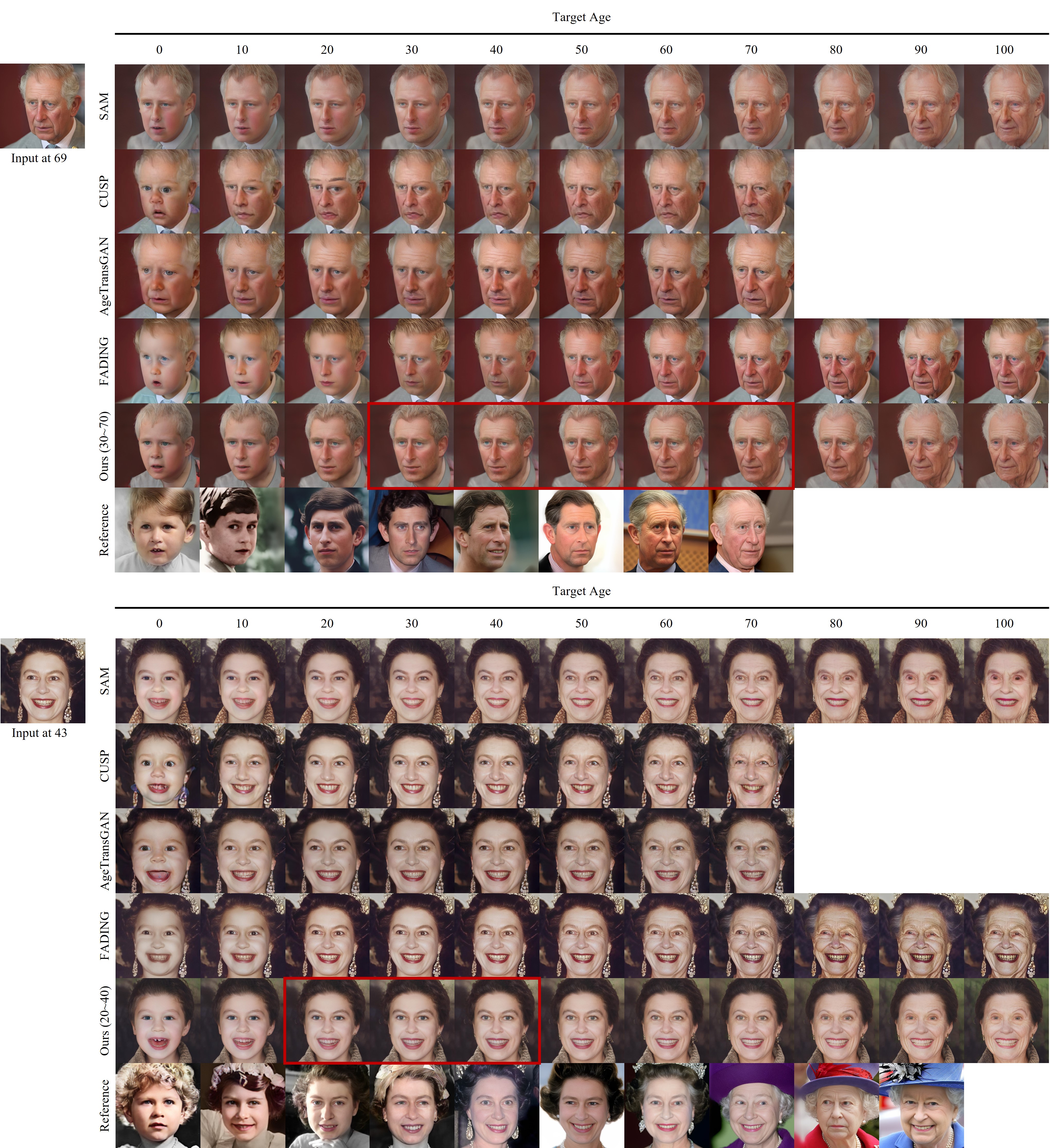

Figure 3 presents a comparison of facial age transformation results using different techniques. The top two rows illustrate age regression, where an older image is transformed to appear younger, while the bottom two rows show age progression, where a younger image is transformed to appear older. Each set of rows shows results for a different individual. The leftmost column of each row displays the original input image of a particular individual. The second column provides a reference image of the same individual at the desired target age. The remaining columns display the results obtained using different age transformation techniques: MyTimeMachine (the authors’ proposed method), SAM, CUSP, AgeTransGAN, and FADING. This allows for a visual comparison of the quality and accuracy of each method in achieving realistic and identity-preserving transformations.


Figure 3: Performance of age transformation techniques for age regression (first two rows) and age progression (last two rows). The first column shows the input image, and the second column provides a reference image of the same person at the target age. MyTM (Ours) is compared against other state-of-the-art methods including SAM [2], CUSP [14], AgeTransGAN [17], and FADING [7].

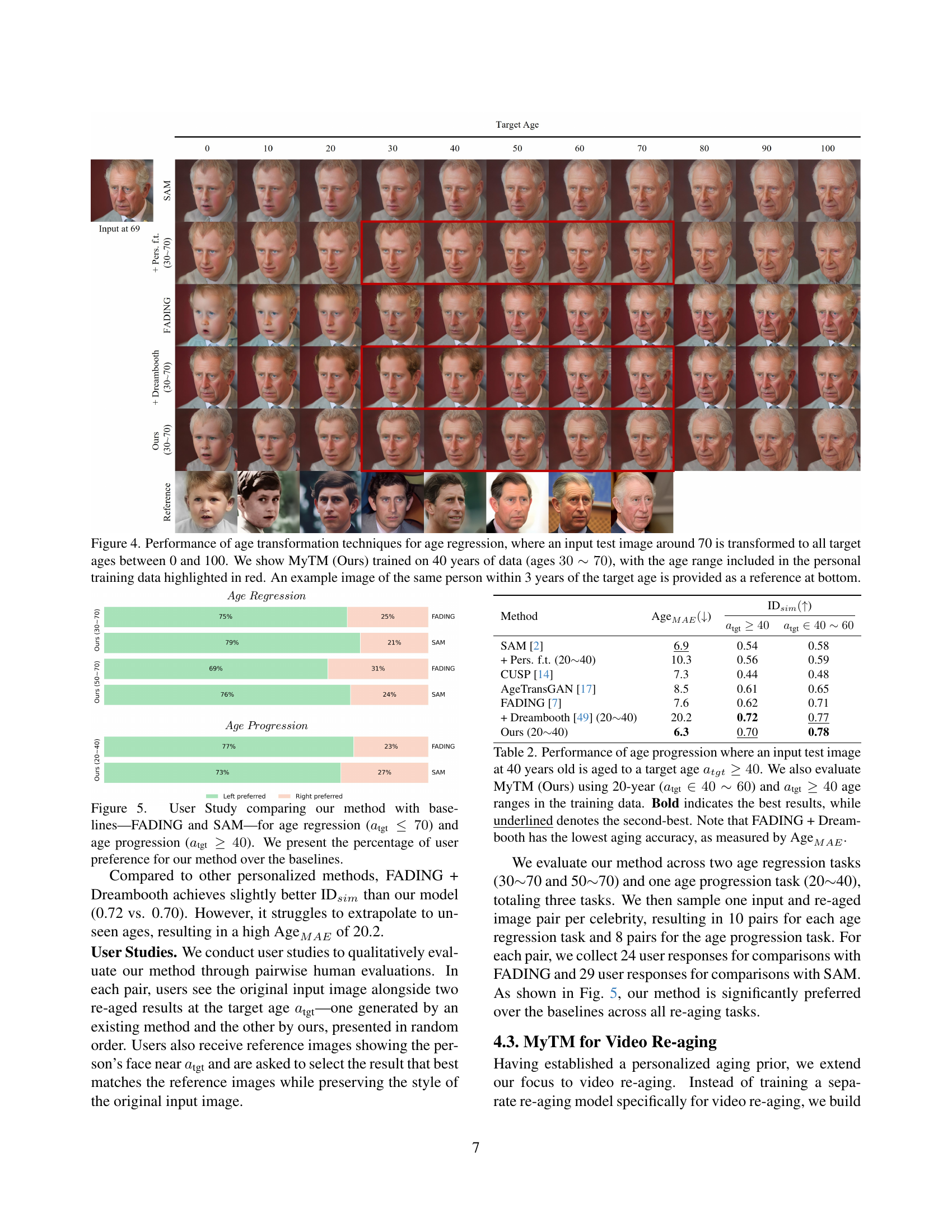

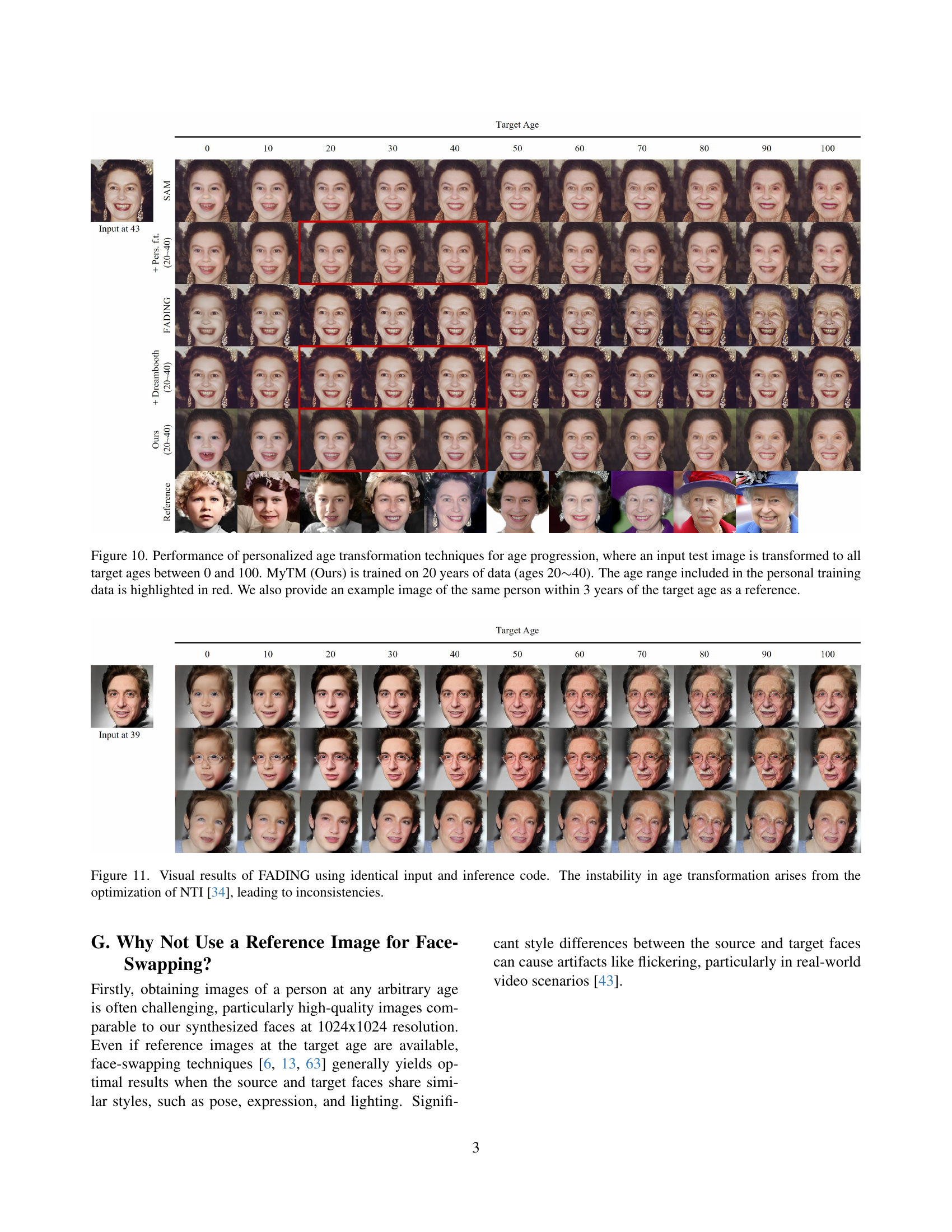

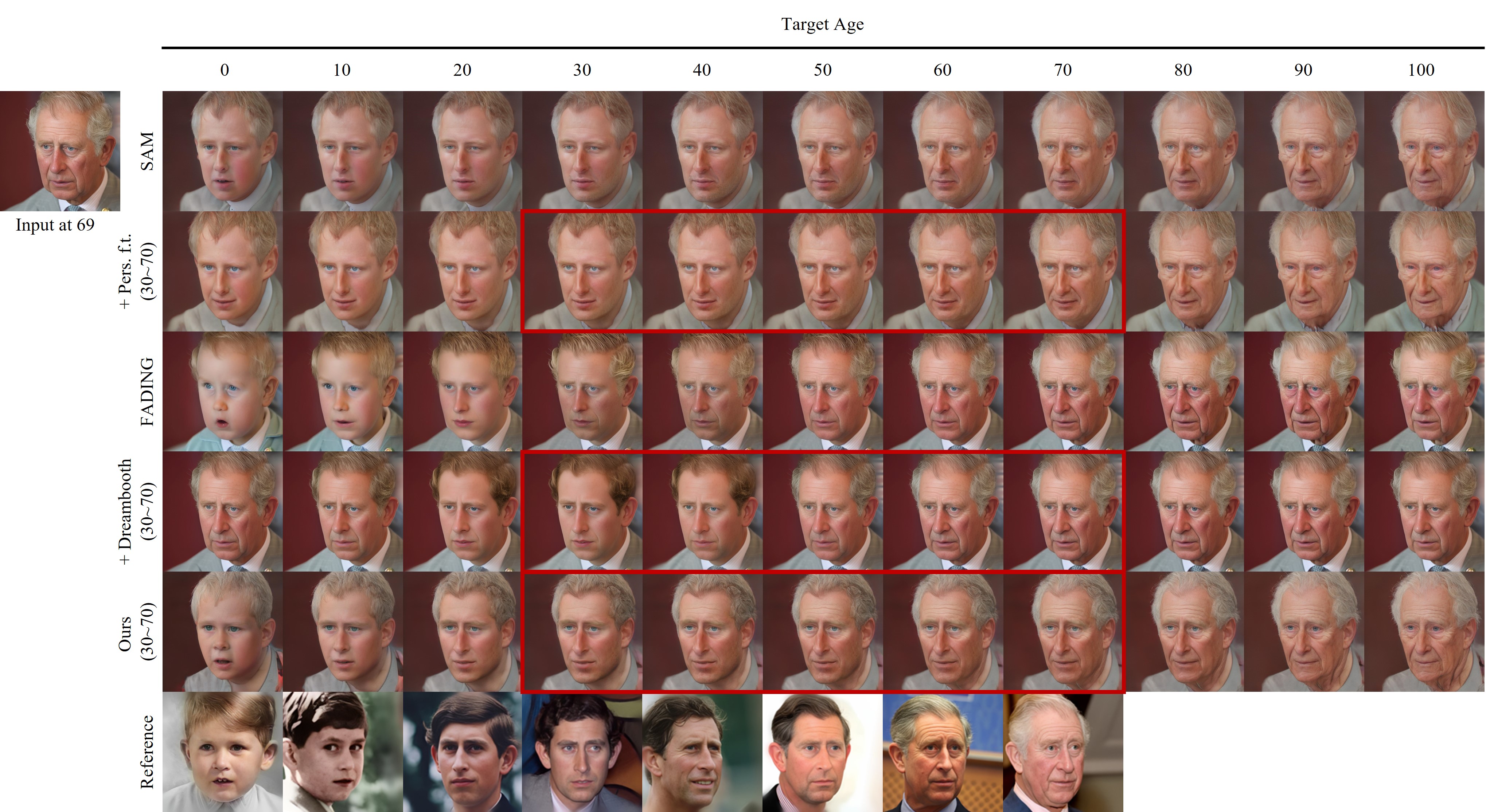

Figure 4 presents a comparison of different facial age transformation methods, focusing on age regression. The input is a single image of a person around age 70. Each method is used to generate images of the same person at various ages ranging from 0 to 100. The results from MyTimeMachine (MyTM), the proposed method, are shown along with results from several existing methods. The MyTM model was trained on a dataset spanning 40 years (ages 30-70), and the age range used in the training set is highlighted in red for each individual’s results. A reference image of the person at an age close to the target age (±3 years) is included at the bottom of the figure for comparison, helping to visually assess the accuracy of the different techniques in generating realistic-looking faces.


Figure 4: Performance of age transformation techniques for age regression, where an input test image around 70 is transformed to all target ages between 0 and 100. We show MyTM (Ours) trained on 40 years of data (ages 30∼70), with the age range included in the personal training data highlighted in red. An example image of the same person within 3 years of the target age is provided as a reference at bottom.

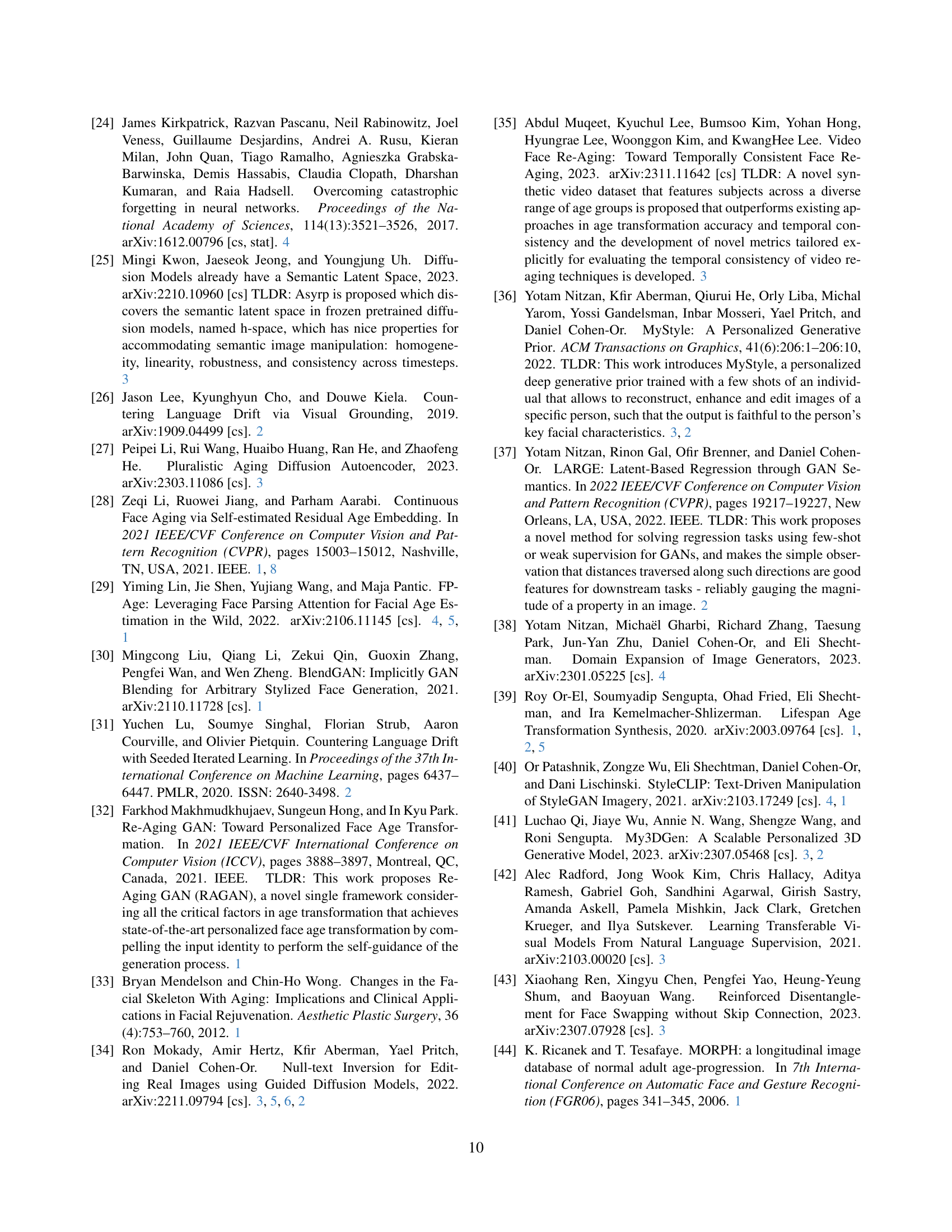

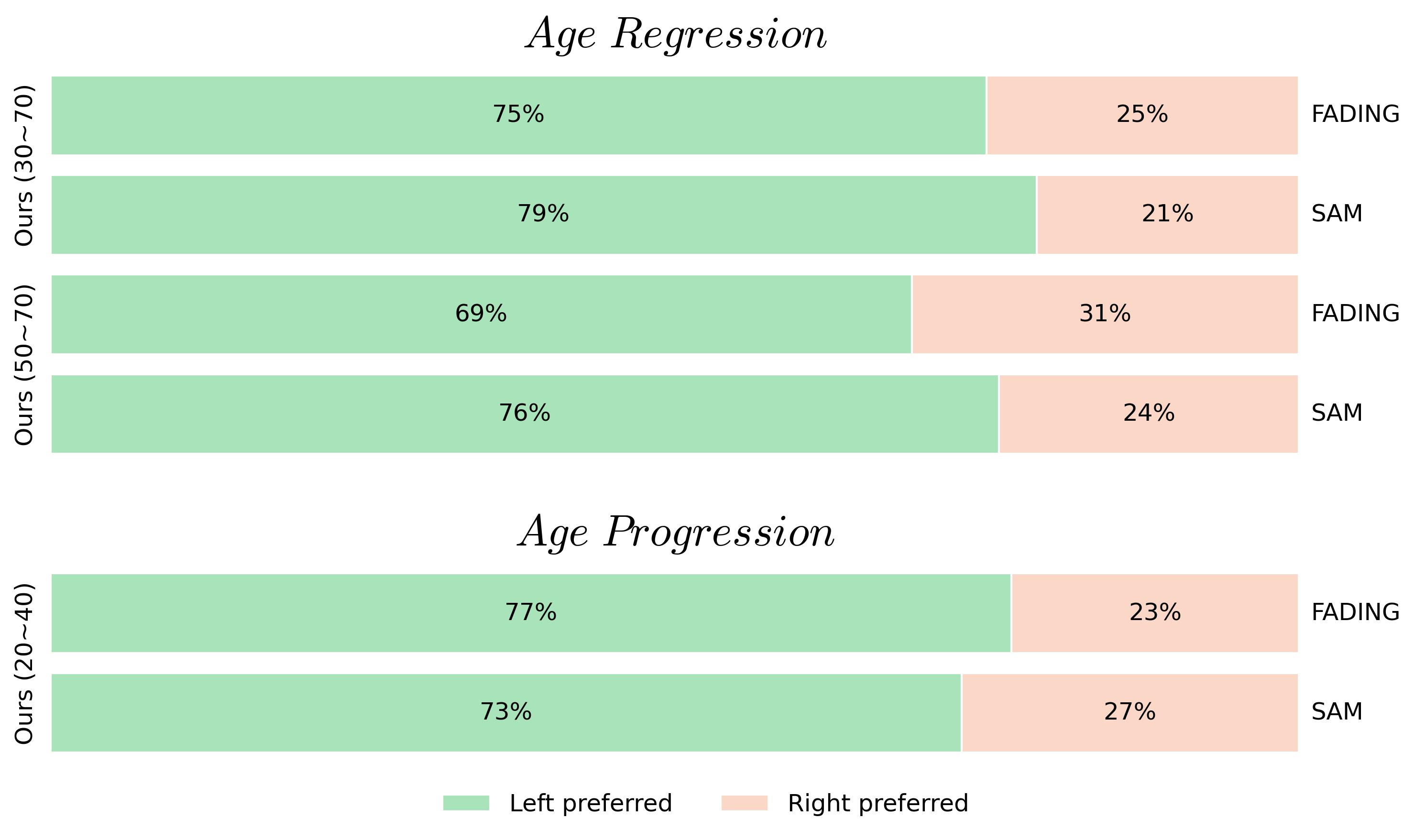

This figure presents the results of a user study comparing the authors’ proposed method for age transformation against two state-of-the-art baselines: FADING and SAM. The study focused on two scenarios: age regression (making someone look younger, where the target age is less than or equal to 70) and age progression (making someone look older, where the target age is greater than or equal to 40). The figure shows the percentage of times users preferred the authors’ method over each baseline in each scenario, indicating the relative preference for the proposed method in terms of visual quality and accuracy of age transformation.


Figure 5: User study comparing our method with the baselines FADING and SAM for age regression ($a_{\text{tgt}} \leq 70$) and age progression ($a_{\text{tgt}} \geq 40$). We present the percentage of user preference for our method over the baselines.

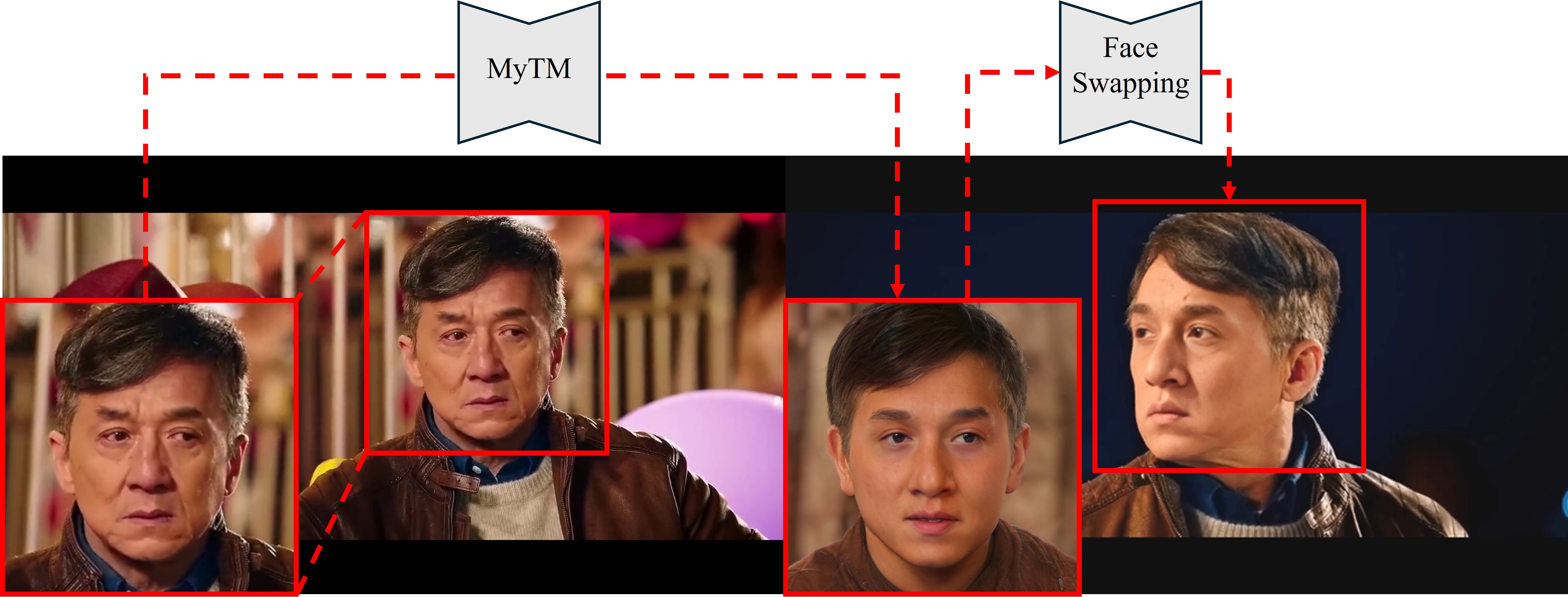

This figure demonstrates the application of the MyTimeMachine (MyTM) model to video re-aging. A keyframe from a video of Jackie Chan in the movie Bleeding Steel is selected. The MyTM model is used to re-age Jackie Chan’s face in this keyframe. Then, using face-swapping techniques, this re-aged face is integrated into all the other frames of the original video, resulting in a temporally consistent video where Jackie Chan appears younger.


Figure 6: We apply video re-aging on a video of Jackie Chan from the movie Bleeding Steel. Left: The keyframe from the source video that we re-age with MyTM. Right: The re-aged face is mapped onto other frames of the source video via face-swapping.

This figure demonstrates the impact of the training dataset size on the performance of the MyTimeMachine model. The experiment focuses on age regression, where the model de-ages a 70-year-old face to a younger age ($a_{\text{tgt}} \leq 70$). The model was trained on ages from 30 to 70. The figure displays examples of the results generated from training datasets of various sizes (10, 50, and 100 images) for Robert De Niro. The quantitative results, shown below the images, demonstrate the improvement in the quality of age transformation as the dataset size increases, indicating that larger amounts of training data lead to better personalization and generalization.


Figure 7: Effect of training dataset size $\mathcal{D}$ on personalization. MyTM is trained on ages 30∼70 and tested for $a_{\text{tgt}} \leq 70$. Visual examples of Robert De Niro are shown at the top, with quantitative results displayed below.

This figure demonstrates the impact of each component of the proposed personalized age transformation network, MyTimeMachine, on age regression performance. The experiments use Al Pacino’s images, with training data spanning ages 30 to 70 and testing on target ages of 70 or below. The ablation study starts from the baseline SAM model and progressively adds the adapter network and the three proposed loss terms (personalized aging loss, extrapolation regularization, and adaptive w-norm regularization) to show each one’s contribution. The results show that identity preservation (IDsim) improves as each component is added.


Figure 8: Contributions of our proposed loss functions and the adapter network for the age regression task, trained on ages 30∼70 and tested for $a_{\text{tgt}} \leq 70$ on Al Pacino.

More on tables

| Method | AgeMAE (↓) | IDsim (↑), a_tgt ≥ 40 | IDsim (↑), a_tgt ∈ 40~60 |
|---|---|---|---|
| SAM [2] | 6.9 | 0.54 | 0.58 |
| + Pers. f.t. (20~40) | 10.3 | 0.56 | 0.59 |
| CUSP [14] | 7.3 | 0.44 | 0.48 |
| AgeTransGAN [17] | 8.5 | 0.61 | 0.65 |
| FADING [7] | 7.6 | 0.62 | 0.71 |
| + Dreambooth [49] (20~40) | 20.2 | 0.72 | 0.77 |
| Ours (20~40) | 6.3 | 0.70 | 0.78 |

Table 2 presents the performance of different age transformation methods on an age progression task: given an input image of a person at age 40, generate images of the same person at target ages of 40 and above. The table compares the proposed method (MyTimeMachine) with SAM, CUSP, AgeTransGAN, and FADING, as well as personalized variants (SAM with personalized fine-tuning and FADING with Dreambooth). The evaluation considers both age accuracy (AgeMAE) and identity preservation (IDsim). Results are reported for two training-data scenarios: a 20-year range (ages 40-60) and a range covering all ages from 40 upward. The best-performing method for each metric is highlighted in bold, and the second-best is underlined.


Table 2: Performance of age progression where an input test image at 40 years old is aged to a target age $a_{\text{tgt}} \geq 40$. We also evaluate MyTM (Ours) using 20-year ($a_{\text{tgt}} \in 40 \sim 60$) and $a_{\text{tgt}} \geq 40$ age ranges in the training data. Bold indicates the best results, while underlined denotes the second-best. Note that FADING + Dreambooth has the lowest aging accuracy, as measured by $\text{Age}_{\text{MAE}}$.

| Dataset size ablation | SAM | | |
|---|---|---|---|
| AgeMAE (↓) | 8.1 | 8.5 | 7.8 |
| IDsim (↑) | 0.49 | 0.58 | 0.67 |

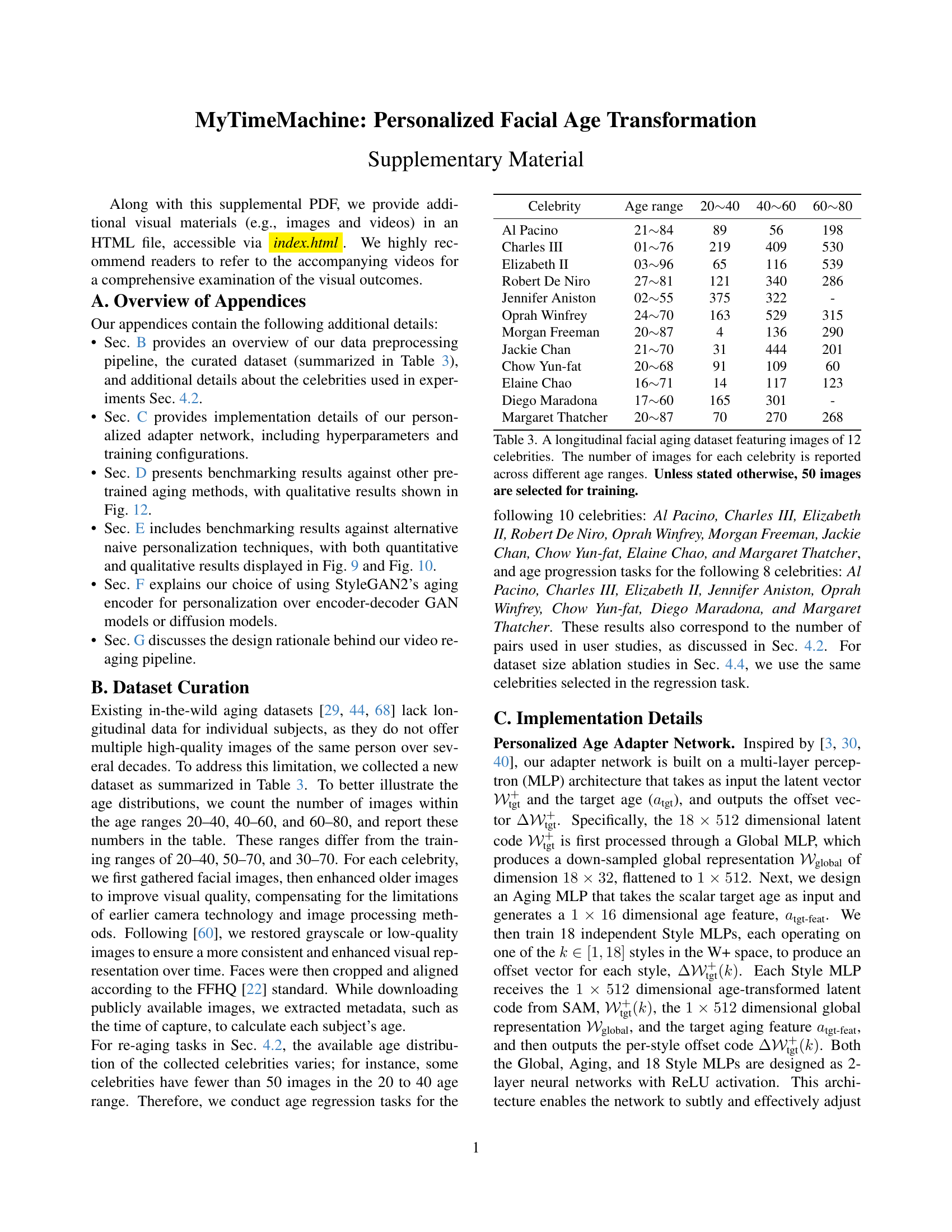

This table describes a longitudinal facial aging dataset containing images of 12 celebrities. For each celebrity, it reports the number of images in each age range (20-40, 40-60, and 60-80 years old). It summarizes the data used to train the MyTimeMachine model and shows the dataset’s coverage across individuals and age ranges. Unless otherwise specified, 50 images per celebrity are selected for training.


Table 3: A longitudinal facial aging dataset featuring images of 12 celebrities. The number of images for each celebrity is reported across different age ranges. Unless stated otherwise, 50 images are selected for training.
