↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Traditional robotic systems struggle with adaptability in dynamic, real-world scenarios due to their reliance on pre-programmed instructions. Human-robot collaboration is hampered because of the limitations on knowledge representation and error handling, as well as the challenges of interpreting complex human instructions. This limits their applications in complex environments.

This research introduces a novel system that uses LLMs and a modified ReAct framework to overcome these limitations. The system leverages LLMs to understand natural language commands, integrates real-time perception and feedback to handle unexpected changes, and employs robust error handling mechanisms to ensure seamless task execution. The results show promising adaptability to dynamic environments and improve interactions with human users. The architecture utilizes a scene graph for environmental representation and employs continuous feedback loops to enable dynamic plan adjustment.

Key Takeaways#

Why does it matter?#

This paper is important because it presents a novel architecture for robotic action planning that uses large language models (LLMs) to integrate communication, perception, and planning. This is highly relevant to current research trends in human-robot interaction and autonomous robotics. The proposed system demonstrates significant improvements in adaptability and robustness, particularly in dynamic environments. It opens up new avenues for research, such as exploring the use of LLMs for adaptive planning, the development of more robust error handling mechanisms, and improving the ability of robots to collaborate seamlessly with humans. The findings could significantly impact the development of next-generation robots capable of performing complex tasks in real-world settings. The integration of LLMs with real-time feedback loops is a particularly significant contribution that has the potential to advance the field considerably.

Visual Insights#

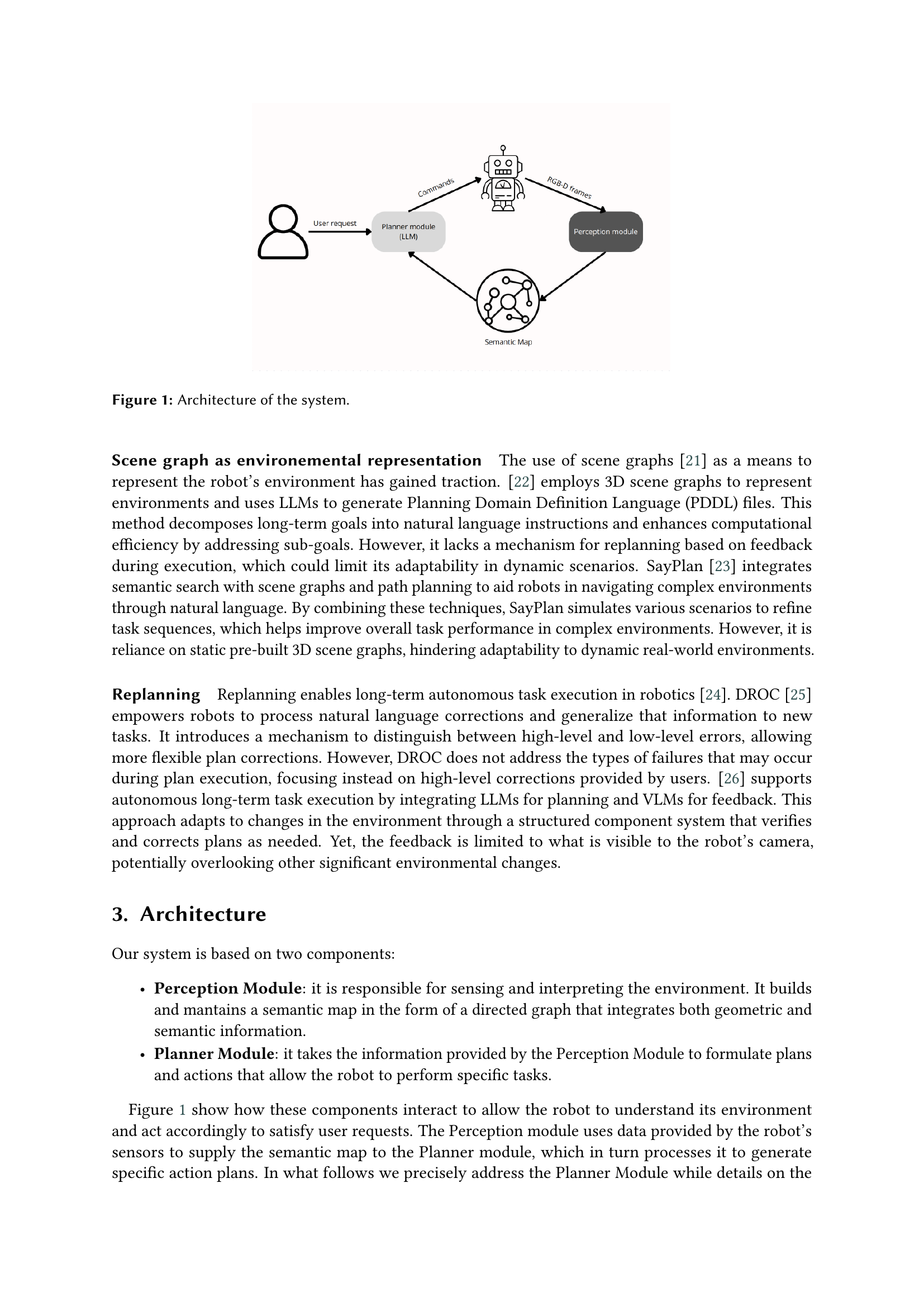

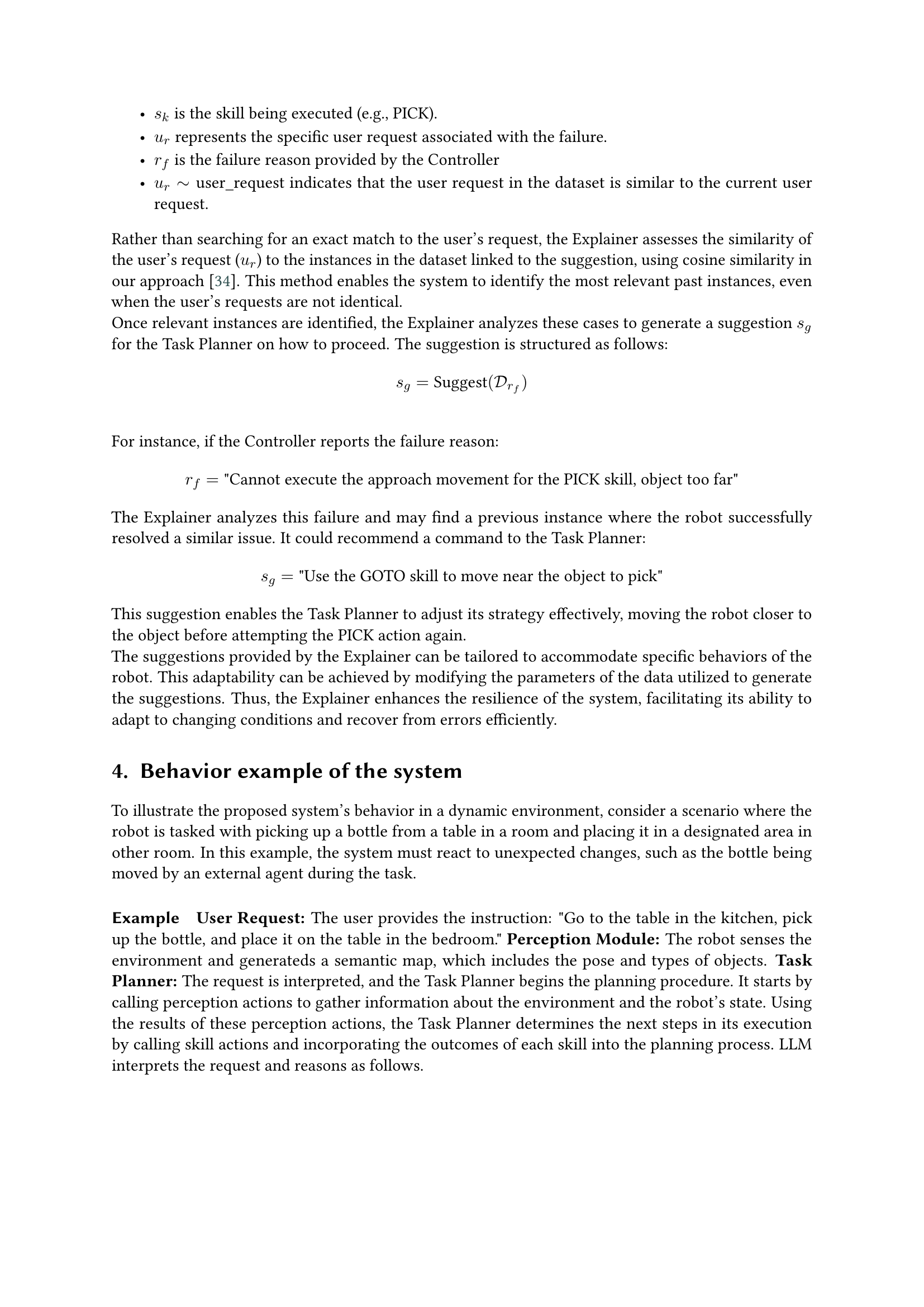

🔼 This figure illustrates the system’s architecture, which consists of two main modules: the Perception Module and the Planner Module. The Perception Module is responsible for acquiring and interpreting environmental information using RGB-D cameras and building a semantic map (a directed graph representing both geometric and semantic features). This information is then passed to the Planner Module. The Planner Module leverages a modified ReAct framework with Large Language Models (LLMs) to translate user commands (in natural language) into executable robot actions. The Planner Module incorporates real-time environmental feedback and dynamically updates plans based on this feedback.

read the caption

Figure 1: Architecture of the system.

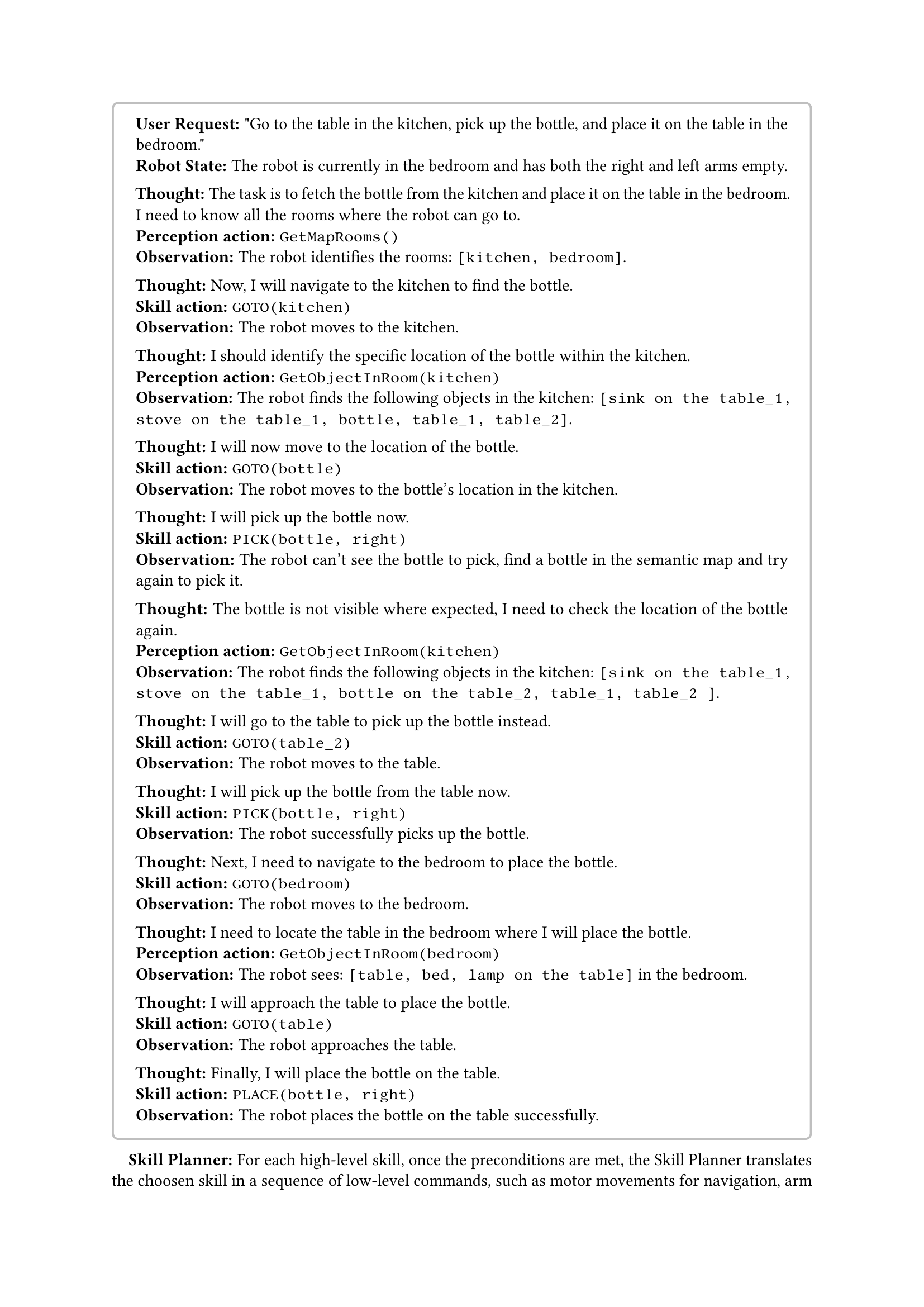

| Request type | Number of attempts | Success rate |

|---|---|---|

| Simple requests | 30 | 90% |

| Moderately complex requests | 20 | 75% |

| Complex requests | 10 | 25% |

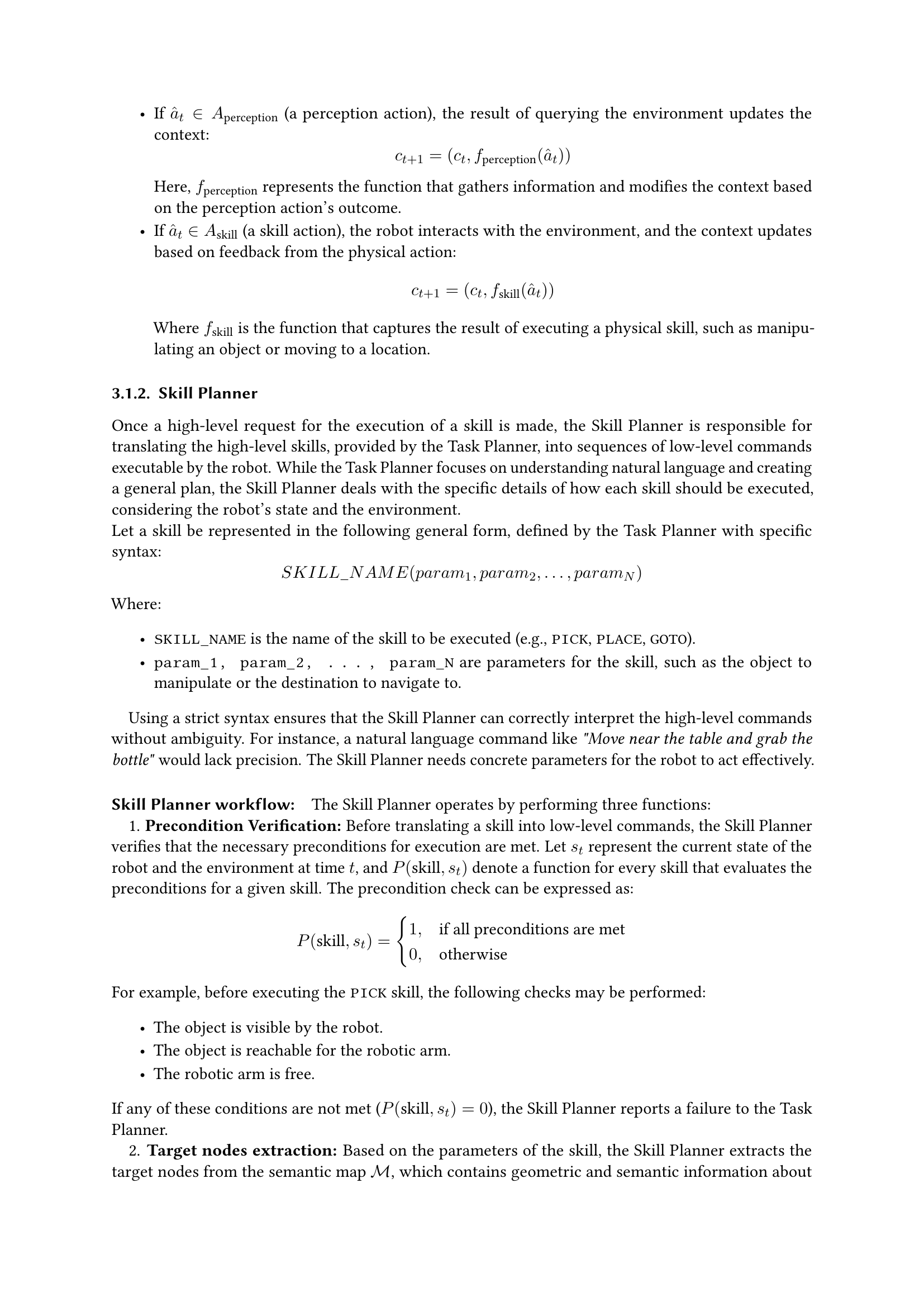

🔼 This table presents the results of experiments conducted to evaluate the performance of the proposed robotic system on three types of user requests: simple, moderately complex, and complex. For each request type, it indicates the number of attempts made and the corresponding success rate, showcasing the system’s effectiveness across different levels of task complexity. The results highlight the varying success rates for different task complexities.

read the caption

Table 1: Number of attempts and success rate for each request type

In-depth insights#

LLM-Based Robot Control#

LLM-based robot control represents a significant advancement in robotics, leveraging the power of large language models (LLMs) for more natural and flexible human-robot interaction. LLMs enable robots to understand complex instructions expressed in natural language, moving beyond pre-programmed commands. This opens possibilities for robots to operate in dynamic, unstructured environments and adapt to unexpected situations. A key challenge is bridging the gap between the abstract reasoning of LLMs and the physical reality of robot control. This requires integrating LLMs with perception systems, robust planning algorithms, and mechanisms for handling uncertainties and errors. Scene graphs and other semantic mapping techniques are crucial for representing the robot’s environment and enabling LLMs to reason effectively in context. The successful integration of LLMs demands careful consideration of safety, reliability, and the potential limitations of LLMs, such as hallucinations and inconsistencies. Continuous feedback loops and adaptive planning strategies are vital for ensuring robust and reliable execution in real-world scenarios.

ReAct Framework Extension#

The core idea behind extending the ReAct framework for robotic control is to enhance the robot’s ability to interact with dynamic environments and execute complex tasks expressed in natural language. A vanilla ReAct framework relies on alternating between reasoning (language processing) and action phases. An extension would likely incorporate real-time environmental perception through sensory data, feeding this into the LLM’s context alongside the language input. This allows the LLM to ground its reasoning in the current state of the world. Furthermore, feedback from the robot’s actions, including successes and failures, is crucial for effective replanning. The integration of a controller and an explainer module is vital for monitoring task execution and handling unforeseen situations, This expanded framework would allow the LLM to adapt dynamically, generate more robust plans, and handle unexpected changes and errors by providing context-aware suggestions for plan modification, thereby increasing autonomy and efficiency.

Semantic Scene Graphs#

Semantic scene graphs offer a powerful mechanism for representing and reasoning about environments in robotics. By integrating semantic information with spatial relationships, they move beyond simple geometric maps to enable richer understanding of a scene. This richer understanding is crucial for robots to effectively interpret natural language instructions and execute complex tasks. Scene graphs explicitly encode the relationships between objects and their attributes, providing context that goes beyond individual object recognition. This contextual information is vital for disambiguation, especially when dealing with nuanced language or unpredictable scenarios. For example, a command like “pick up the blue bottle” requires a robot to not only identify the bottle but also to understand its location relative to other objects and the robot itself. Semantic scene graphs thus form a key component in enabling robots to move from simple action execution towards true intelligence. They support more advanced planning and reasoning capabilities, especially in dynamic or uncertain environments. Effective integration of scene graphs with natural language processing and large language models (LLMs) is a key area of current research, promising significant advancement in human-robot interaction and robotic autonomy.

Adaptive Replanning#

Adaptive replanning in robotics is crucial for handling dynamic environments and unexpected events. Effective replanning requires a system capable of real-time perception and understanding of both the robot’s capabilities and the environment’s state. This necessitates the integration of robust sensory feedback loops that continuously update the system’s knowledge, allowing it to identify deviations from the planned trajectory. The system must also employ flexible planning algorithms that can quickly adapt to these changes, generating new plans to address unforeseen obstacles or failures. Successful adaptive replanning often involves the integration of AI techniques, such as machine learning, to enable more robust and efficient decision-making. This may involve predictive modeling to anticipate potential issues or reinforcement learning to optimize the replanning process. A key challenge in adaptive replanning is the trade-off between planning time and plan quality. While rapid replanning is essential in dynamic situations, a poorly constructed plan may lead to further failures and inefficiencies. Therefore, finding the right balance between speed and accuracy is critical to the success of adaptive replanning systems. The ability to learn from past failures is also crucial for improving the system’s overall performance. Sophisticated failure analysis mechanisms are needed to understand the root causes of deviations and improve the system’s resilience to future events. Ultimately, adaptive replanning is a key enabling technology for creating truly robust and autonomous robotic systems.

RoBee System Integration#

Integrating the LLM-based planning system with the RoBee humanoid robot presents a significant challenge and opportunity. RoBee’s unique physical capabilities and sensor suite necessitate careful consideration during system integration. The architecture requires robust error handling and adaptable planning strategies, particularly given RoBee’s operation in dynamic environments. Seamless communication between the LLM, perception modules, and RoBee’s hardware is critical. This requires precise translation of high-level skills (e.g., ‘pick up the bottle’) into low-level commands compatible with RoBee’s actuators. Success hinges on real-time feedback loops, allowing the system to adjust plans based on sensory input and actual execution outcomes. The integration process must also address issues of latency and computational cost associated with LLM processing. Ultimately, the success of RoBee system integration will depend on the robustness of the overall architecture in handling unexpected situations and ensuring reliable, safe operation in real-world settings. Thorough testing and evaluation will be key to validate the system’s performance and identify areas for further improvement.

More visual insights#

More on figures

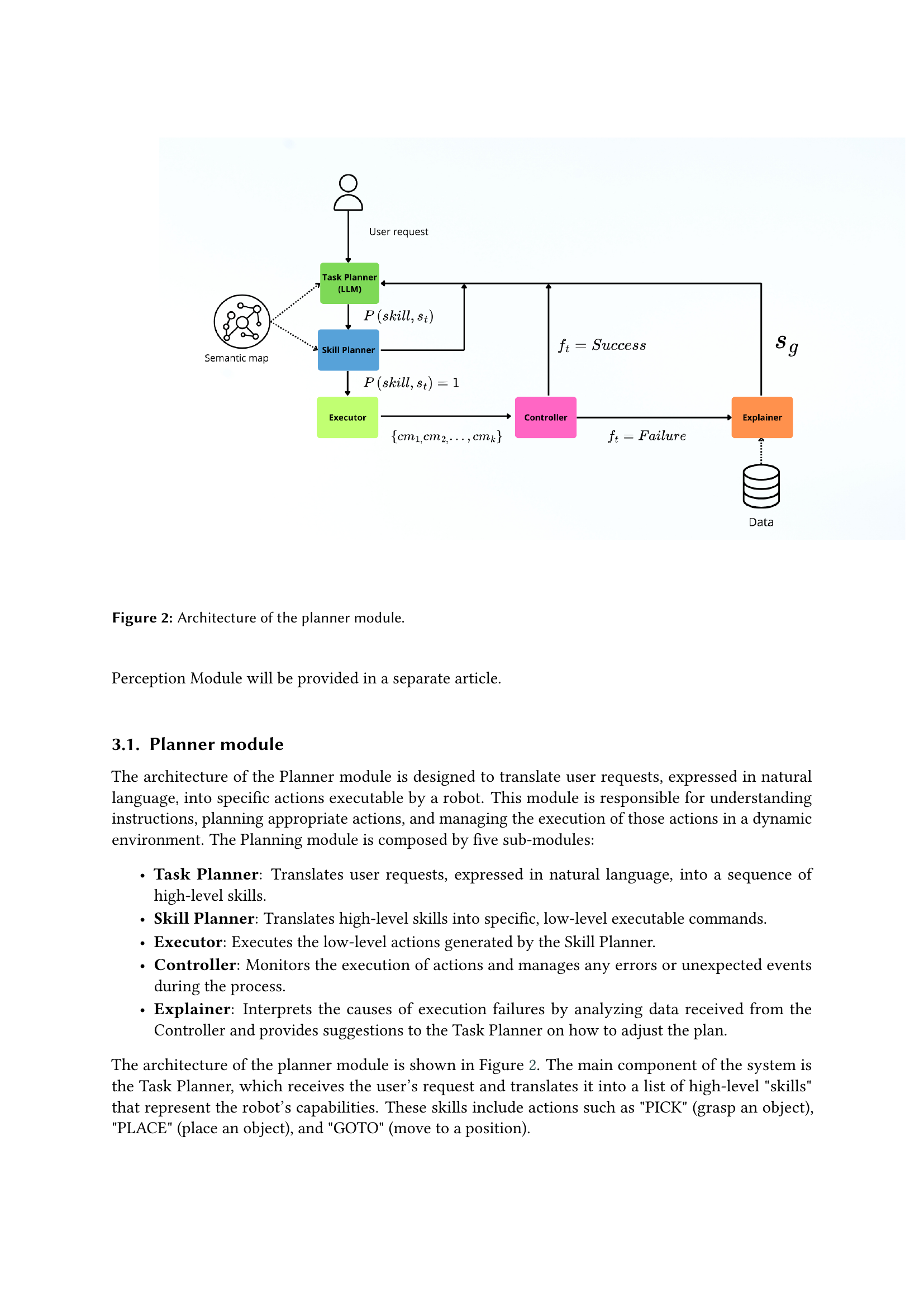

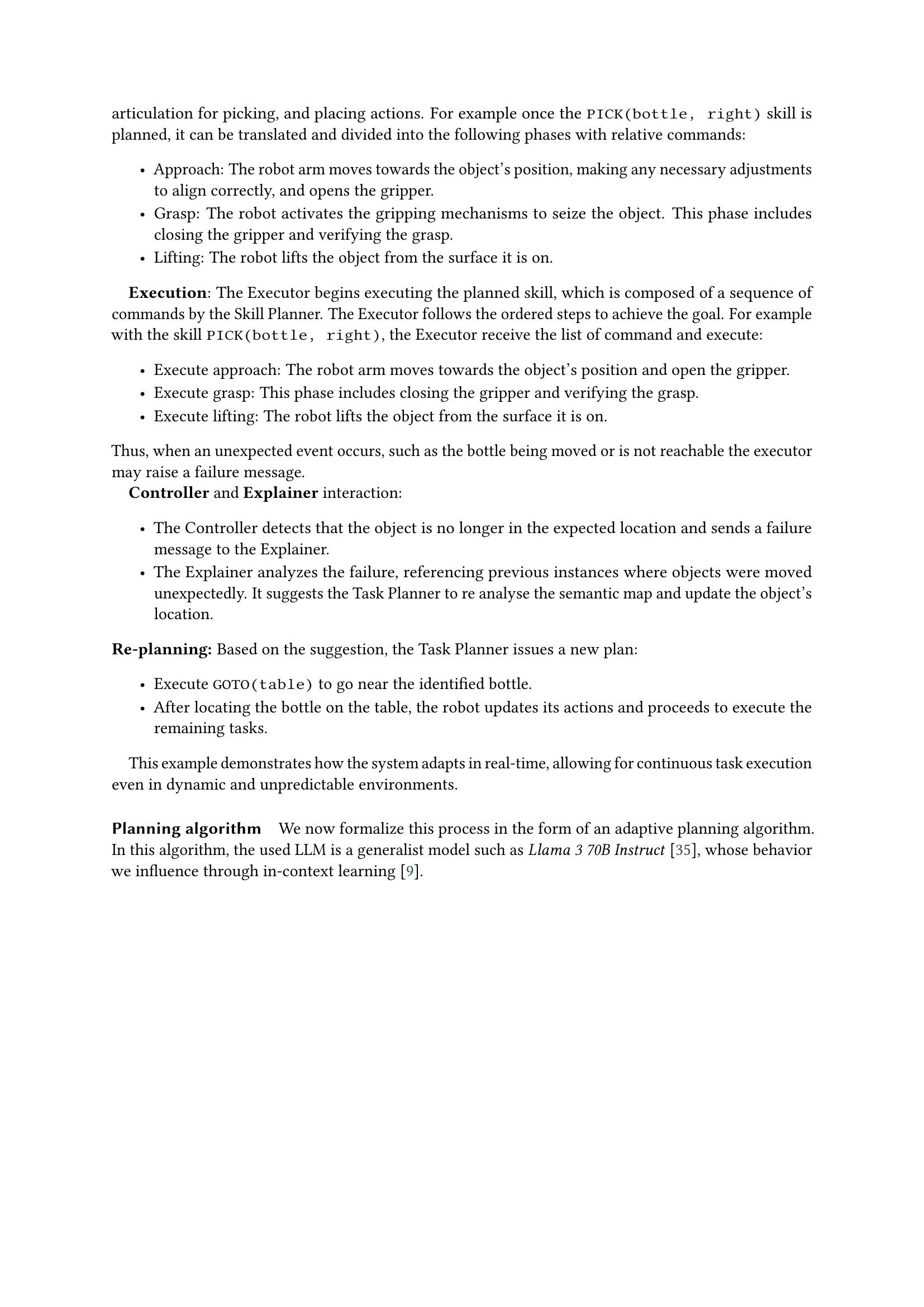

🔼 This figure illustrates the architecture of the Planner module, a key component of a robotic system designed to translate natural language commands into executable robot actions. The module consists of five sub-modules: Task Planner, which translates high-level user requests into sequences of skills; Skill Planner, which converts these skills into low-level commands executable by the robot; Executor, which executes these commands; Controller, which monitors the execution and handles errors; and Explainer, which analyzes failures and provides suggestions for corrective actions. The figure shows how these modules interact to translate user input (commands), incorporating feedback loops for dynamic plan adjustments. It shows the flow of information and the decision-making process for executing robotic actions based on user input and real-time feedback from the robot’s environment.

read the caption

Figure 2: Architecture of the planner module.

🔼 This image shows RoBee, a humanoid robot developed by Oversonic Robotics. RoBee is used in the experiments described in the paper to test the proposed natural language-based robotic task planning system. Its features, relevant to the research, include 32 degrees of freedom, allowing for flexible movement; multiple sensors (cameras, microphones, force sensors, LIDAR) providing data for perception; and two arms capable of bimanual manipulation.

read the caption

Figure 3: Robee, humaniod robot developed by Oversonic Robotics.

Full paper#