↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Visual tokenizers are fundamental to image generation, converting images into discrete tokens for processing by transformer-based models. Existing VQ-based methods, however, struggle with large codebooks, leading to instability and performance limitations. Simply increasing codebook size often worsens the issue.

FQGAN introduces a novel solution, Factorized Quantization (FQ), which decomposes a large codebook into smaller, independent sub-codebooks. This reduces complexity and improves stability. FQGAN further incorporates disentanglement regularization to ensure sub-codebooks learn diverse and complementary features, and leverages pretrained vision models for semantic richness. The results show significant improvements in reconstruction quality, exceeding the performance of existing methods, and demonstrate effective adaptation to auto-regressive image generation.

Key Takeaways#

Why does it matter?#

This paper is important because it significantly advances visual tokenization, a crucial step in image generation. Its novel Factorized Quantization (FQ) method addresses limitations of existing VQ-based approaches, improving scalability and stability. The results are impactful, achieving state-of-the-art performance in image reconstruction and generation, and opening new research avenues in autoregressive models and semantic feature learning.

Visual Insights#

🔼 The figure showcases a comparison of reconstruction performance (measured by rFID) across several popular visual tokenizers as their codebook size varies. The tokenizers compared are VQ (from the Taming-Transformers paper), VQ (from LlamaGen), VQ-LC, LFQ (from OpenMAGVIT2), and the novel FQGAN model introduced in this paper. Lower rFID values represent better performance, indicating a higher quality of image reconstruction from the discrete tokens produced by the tokenizer. The graph illustrates how the reconstruction quality changes as the codebook size increases, highlighting the relative strengths and weaknesses of each tokenizer in terms of scalability and accuracy.

read the caption

Figure 1: Performance comparison of popular tokenizers at various codebook sizes, including VQ (Taming) [6], VQ (LlamaGen) [25], VQ-LC [41], LFQ (OpenMAGVIT2) [15], and FQGAN. Lower rFID values indicate better performance.

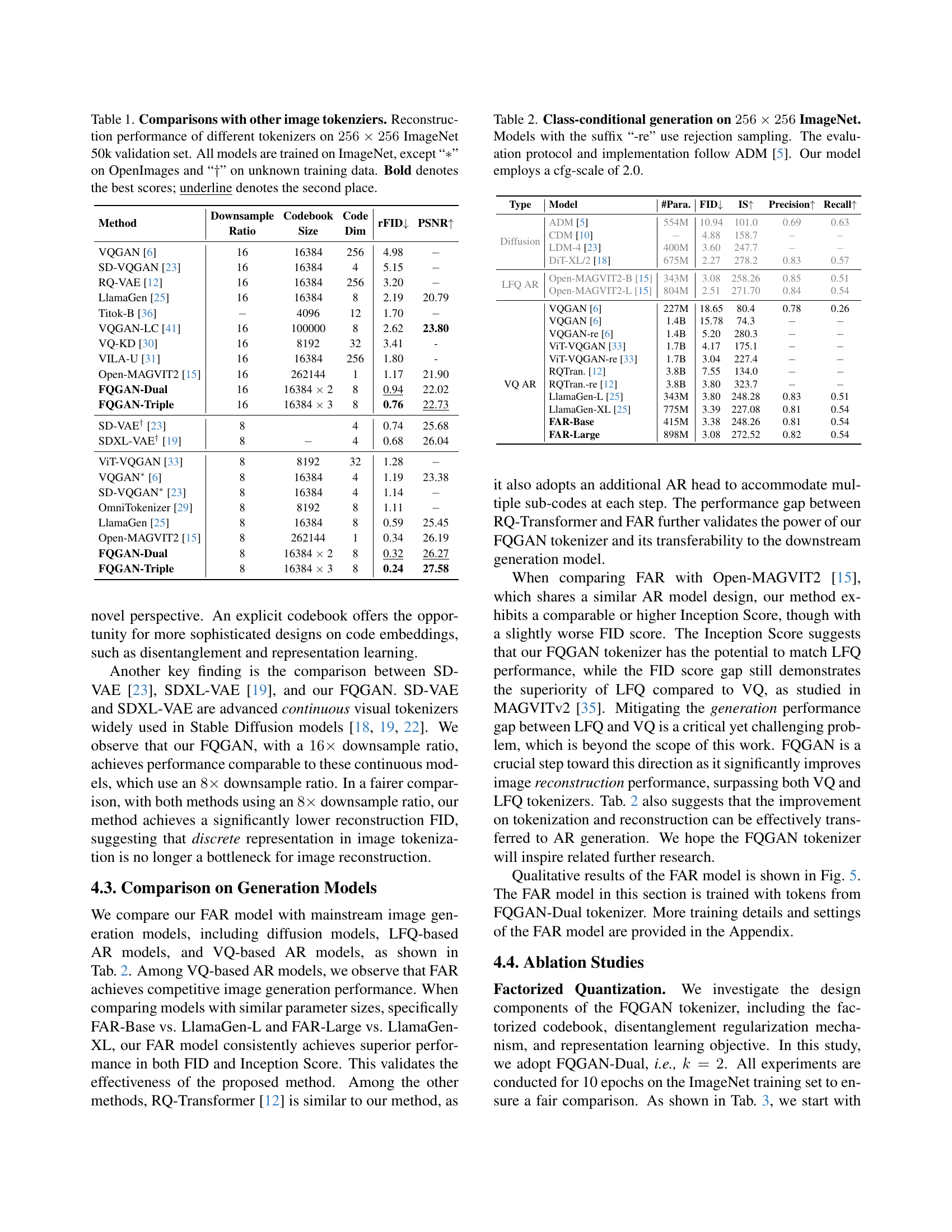

| Method | Downsample | Codebook Size | Code Dim | rFID ↓ | PSNR ↑ |

|---|---|---|---|---|---|

| VQGAN [6] | 16 | 16384 | 256 | 4.98 | - |

| SD-VQGAN [23] | 16 | 16384 | 4 | 5.15 | - |

| RQ-VAE [12] | 16 | 16384 | 256 | 3.20 | - |

| LlamaGen [25] | 16 | 16384 | 8 | 2.19 | 20.79 |

| Titok-B [36] | - | 4096 | 12 | 1.70 | - |

| VQGAN-LC [41] | 16 | 100000 | 8 | 2.62 | 23.80 |

| VQ-KD [30] | 16 | 8192 | 32 | 3.41 | - |

| VILA-U [31] | 16 | 16384 | 256 | 1.80 | - |

| Open-MAGVIT2 [15] | 16 | 262144 | 1 | 1.17 | 21.90 |

| FQGAN-Dual | 16 | 16384 × 2 | 8 | 0.94 | 22.02 |

| FQGAN-Triple | 16 | 16384 × 3 | 8 | 0.76 | 22.73 |

| SD-VAE † [23] | 8 | 4 | 0.74 | 25.68 | |

| SDXL-VAE † [19] | 8 | - | 4 | 0.68 | 26.04 |

| ViT-VQGAN [33] | 8 | 8192 | 32 | 1.28 | - |

| VQGAN * [6] | 8 | 16384 | 4 | 1.19 | 23.38 |

| SD-VQGAN * [23] | 8 | 16384 | 4 | 1.14 | - |

| OmniTokenizer [29] | 8 | 8192 | 8 | 1.11 | - |

| LlamaGen [25] | 8 | 16384 | 8 | 0.59 | 25.45 |

| Open-MAGVIT2 [15] | 8 | 262144 | 1 | 0.34 | 26.19 |

| FQGAN-Dual | 8 | 16384 × 2 | 8 | 0.32 | 26.27 |

| FQGAN-Triple | 8 | 16384 × 3 | 8 | 0.24 | 27.58 |

🔼 This table compares the reconstruction performance of various visual tokenizers on the ImageNet 50k validation set. The models were evaluated using 256x256 pixel images. The table shows the reconstruction performance metrics (rFID, PSNR) for each model, along with relevant hyperparameters, like codebook size and dimensionality, downsampling ratio, and the number of parameters. Note that some models were trained on datasets other than ImageNet (indicated by * and †), and the best and second-best results are highlighted in bold and underlined, respectively.

read the caption

Table 1: Comparisons with other image tokenziers. Reconstruction performance of different tokenizers on 256×256256256256\times 256256 × 256 ImageNet 50k validation set. All models are trained on ImageNet, except “∗*∗” on OpenImages and “††\dagger†” on unknown training data. Bold denotes the best scores; underline denotes the second place.

In-depth insights#

Factorized Quantization#

The core concept of “Factorized Quantization” revolves around decomposing a large codebook into multiple smaller, independent sub-codebooks. This addresses the instability and computational challenges associated with using massive codebooks in vector quantization (VQ) for image tokenization. By factorizing, the authors aim to reduce the complexity of the lookup process, making visual tokenization more efficient and scalable. A key innovation is the introduction of disentanglement regularization, which explicitly reduces redundancy between sub-codebooks, ensuring each captures unique and complementary information. The integration of representation learning, using pretrained vision models like CLIP and DINO, further enhances the quality of the learned representations. This combined approach improves reconstruction quality, leading to more expressive and disentangled visual tokens, ultimately benefiting both image reconstruction and autoregressive image generation tasks. The effectiveness of this method is demonstrated through experimental results, showcasing superior performance compared to existing state-of-the-art visual tokenizers.

Disentanglement Reg.#

The concept of ‘Disentanglement Reg.’ within the context of factorized visual tokenization is crucial for achieving high-quality image generation. The core idea is to prevent the sub-codebooks from learning redundant or overlapping information, ensuring that each sub-codebook specializes in capturing unique aspects of an image. Without disentanglement, the sub-codebooks might learn similar features, leading to a less expressive and less efficient representation. This regularization encourages diversity and promotes complementary feature learning across the sub-codebooks, enabling the model to capture a richer set of visual information. The method’s effectiveness hinges on the careful design of a regularization mechanism that explicitly measures and minimizes redundancy between the sub-codebooks. This might involve techniques like orthogonality constraints or other similarity metrics to ensure independence. The success of ‘Disentanglement Reg.’ is also interconnected with the representation learning objective; both work together to produce semantically meaningful features, resulting in improved reconstruction quality and generalization capabilities. Overall, the effectiveness of ‘Disentanglement Reg.’ is not just about preventing redundancy; it’s about guiding the learning process to produce a more comprehensive, balanced, and interpretable representation of the visual data.

Rep. Learning#

The heading ‘Rep. Learning’, likely short for ‘Representation Learning’, highlights a crucial aspect of the research. It addresses the inherent limitation of traditional visual tokenizers, which primarily focus on pixel-level reconstruction and often fail to capture the semantic meaning of images. By integrating representation learning, the model is guided to learn richer, more semantically meaningful features. This is achieved by leveraging pre-trained vision models like CLIP and DINOv2, which are already equipped with substantial semantic understanding. This integration ensures the tokenizer learns features beyond superficial details, capturing diverse semantic levels from low-level structures to high-level concepts. This approach not only improves reconstruction quality but also enhances the tokenizer’s ability to generalize and perform well on downstream tasks like image generation. The inclusion of representation learning is key to disentangling the features learned by different sub-codebooks, ensuring each specializes in unique aspects of the image, thus creating a comprehensive and diverse representation. This multifaceted approach addresses the challenge of representing the complex and nuanced nature of visual data.

Autoregressive Gen.#

Autoregressive generative models, as discussed in the context of image generation, represent a powerful approach that sequentially predicts tokens to construct images or videos. Unlike other methods that produce complete images in one go, autoregressive models build the output step-by-step, offering finer control and often leading to high-quality results. This approach typically involves a transformer network that learns the dependencies between tokens, predicting the next token based on previously generated ones. However, a key challenge lies in efficiently handling a very large codebook, as the size of this codebook directly impacts computational cost and the stability of training. The paper highlights the significant impact of the chosen visual tokenizer on the success of the overall autoregressive generation process. Effective tokenization is crucial because it directly influences the quality and efficiency of the subsequent generation task. The methods proposed in the paper aim to improve both quality and scalability by addressing the limitations of existing visual tokenizers, ultimately enhancing the overall performance of autoregressive image generation models.

VQGAN Enhancements#

The core of this research lies in enhancing VQGAN (Vector Quantized Generative Adversarial Network), a foundational model for image generation. VQGAN’s limitation stems from its reliance on a fixed-size codebook, which restricts its capacity to represent diverse image features. This paper tackles this challenge by introducing Factorized Quantization (FQ), a novel technique to decompose a large codebook into multiple smaller, independent sub-codebooks. This approach cleverly reduces computational complexity while enhancing the expressiveness of the model. Further enhancing VQGAN, the research integrates disentanglement regularization to reduce redundancy and promote diversity between sub-codebooks. Representation learning is incorporated by leveraging pre-trained models like CLIP and DINO to infuse semantic richness into the generated features, leading to improved reconstruction quality and downstream application capabilities. Overall, the improvements described significantly enhance the scalability, stability, and semantic expressiveness of VQGAN.

More visual insights#

More on figures

🔼 Figure 2 illustrates the architecture of the proposed Factorized Quantization (FQ) method. The left side shows the FQGAN-Dual model, a specific example of FQ with two sub-codebooks (k=2). It demonstrates how a large codebook is broken down into smaller, independent sub-codebooks, reducing the computational complexity of quantization and improving stability. Each sub-codebook uses its own encoder and quantizer to process the input features and generate sub-tokens. These sub-tokens are concatenated before being fed to the decoder for image reconstruction. The right side of the figure shows how the factorized tokens are handled in an autoregressive (AR) image generation model. A factorized AR head is added that predicts multiple sub-tokens simultaneously for each image patch, utilizing the output of an AR transformer backbone.

read the caption

Figure 2: Illustration of the our method. The left part shows FQGAN-Dual, the factorized tokenizer design in an example scenario when k=2𝑘2k=2italic_k = 2. This framework is extendable to factorization of more codebooks. The right part demonstrate how we leverage an additional AR head to accommodate the factorized sub-codes based on standard AR generative transformer.

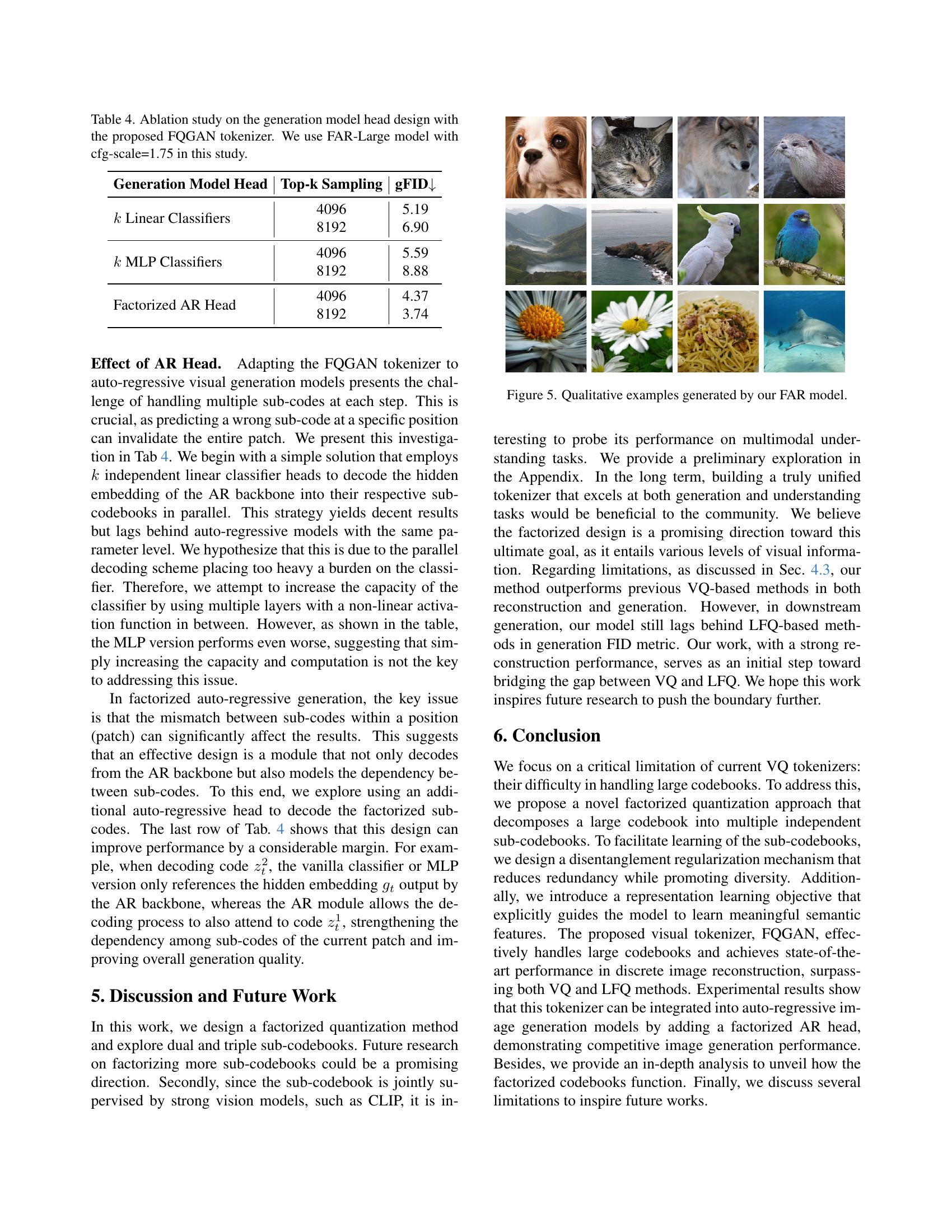

🔼 This figure shows a comparison of image reconstructions. The leftmost image is the original image. The next three images show reconstructions using the FQGAN-Dual model, which uses two sub-codebooks. The first reconstruction uses both sub-codebooks. The second and third use only one sub-codebook each, highlighting the individual contributions of each sub-codebook to the overall reconstruction quality. The results visually demonstrate the effectiveness of the factorized approach in capturing diverse visual features.

read the caption

Figure 3: Visualization of standard reconstruction by FQGAN-Dual and reconstruction using only a single sub-codebook.

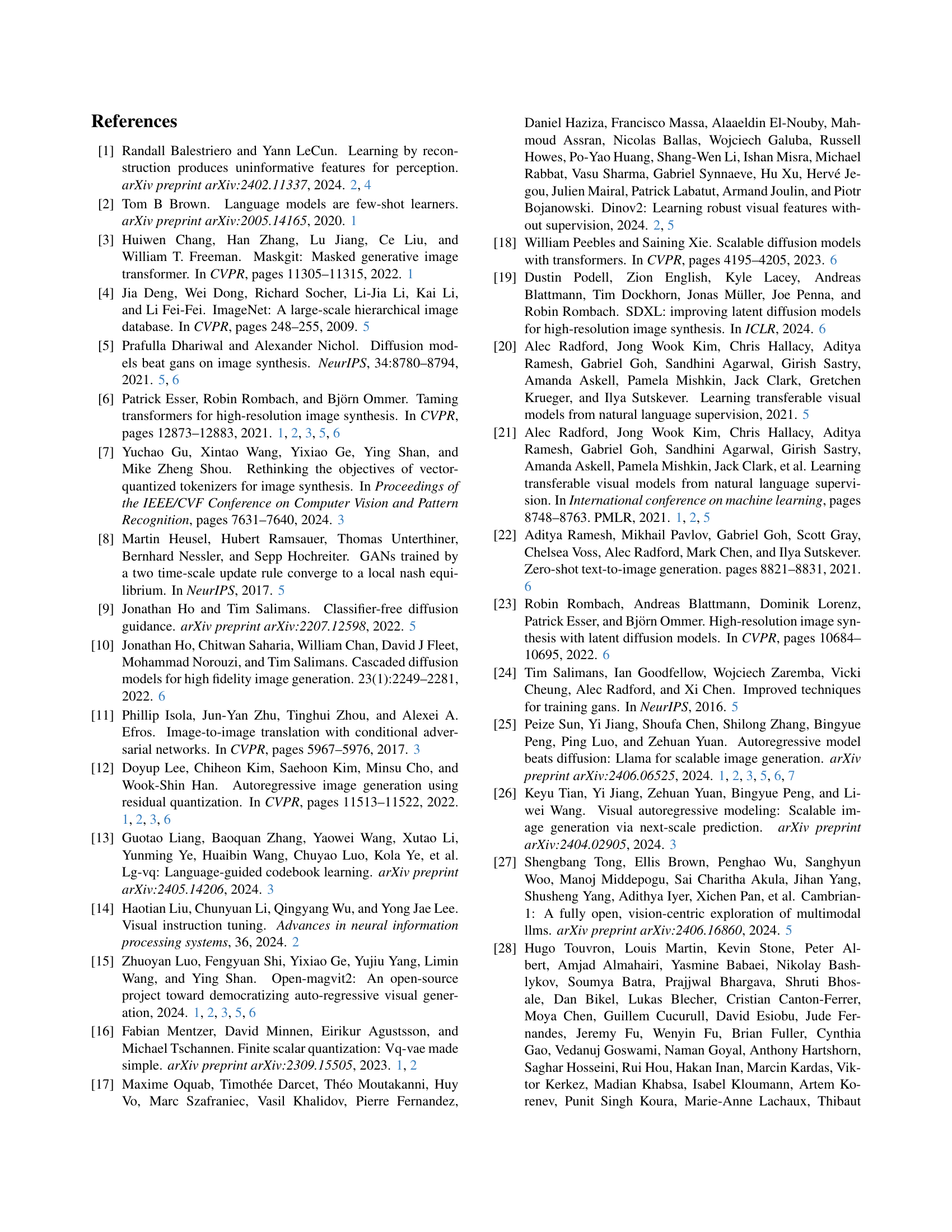

🔼 This figure visualizes the distribution of vector quantized (VQ) codes learned by the two sub-codebooks in the Factorized Quantization Generative Adversarial Network (FQGAN-Dual) model. t-SNE (t-distributed Stochastic Neighbor Embedding) is used to reduce the dimensionality of the VQ code representations for visualization in a 2D space. The plot shows how the VQ codes from each sub-codebook cluster based on their semantic content. One sub-codebook focuses on low-level visual features while the other, guided by CLIP embeddings, focuses on higher-level semantic information. This visualization demonstrates the effectiveness of the disentanglement regularization in FQGAN-Dual, showing that each sub-codebook learns distinct and complementary image features.

read the caption

Figure 4: T-SNE visualization of VQ codes from different sub-codebooks in FQGAN-Dual.

🔼 Figure 5 showcases several example images generated by the Factorized Auto-Regressive (FAR) model. These examples highlight the model’s ability to produce high-quality and diverse images, demonstrating its effectiveness in autoregressive visual generation tasks. The images likely represent a range of different classes or objects, showcasing the breadth of the model’s capabilities.

read the caption

Figure 5: Qualitative examples generated by our FAR model.

More on tables

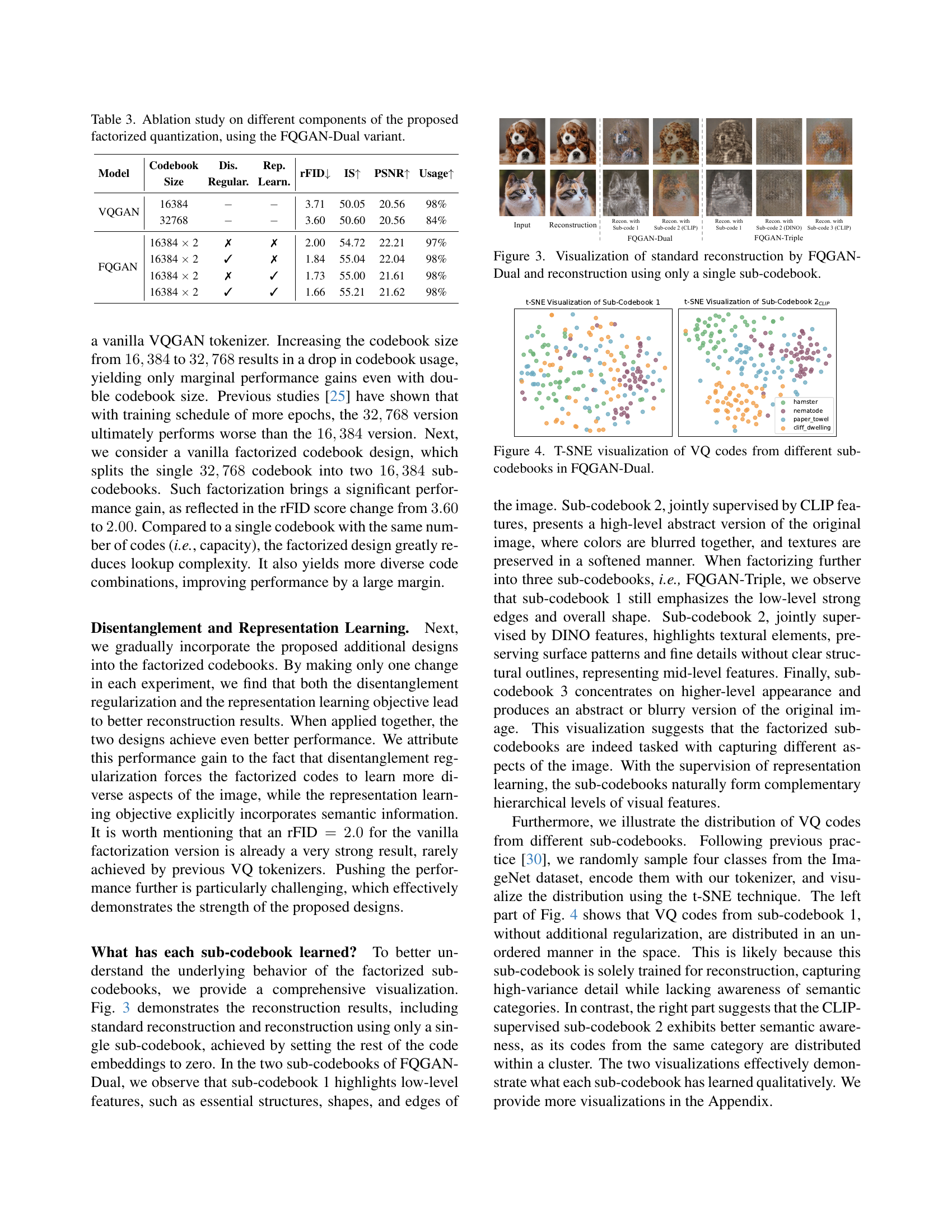

| Type | Model | #Para. | FID↓ | IS↑ | Precision↑ | Recall↑ |

|---|---|---|---|---|---|---|

| Diffusion | ADM [5] | 554M | 10.94 | 101.0 | 0.69 | 0.63 |

| CDM [10] | - | 4.88 | 158.7 | - | - | |

| LDM-4 [23] | 400M | 3.60 | 247.7 | - | - | |

| DiT-XL/2 [18] | 675M | 2.27 | 278.2 | 0.83 | 0.57 | |

| LFQ AR | Open-MAGVIT2-B [15] | 343M | 3.08 | 258.26 | 0.85 | 0.51 |

| Open-MAGVIT2-L [15] | 804M | 2.51 | 271.70 | 0.84 | 0.54 | |

| VQ AR | VQGAN [6] | 227M | 18.65 | 80.4 | 0.78 | 0.26 |

| VQGAN [6] | 1.4B | 15.78 | 74.3 | - | - | |

| VQGAN-re [6] | 1.4B | 5.20 | 280.3 | - | - | |

| ViT-VQGAN [33] | 1.7B | 4.17 | 175.1 | - | - | |

| ViT-VQGAN-re [33] | 1.7B | 3.04 | 227.4 | - | - | |

| RQTran. [12] | 3.8B | 7.55 | 134.0 | - | - | |

| RQTran.-re [12] | 3.8B | 3.80 | 323.7 | - | - | |

| LlamaGen-L [25] | 343M | 3.80 | 248.28 | 0.83 | 0.51 | |

| LlamaGen-XL [25] | 775M | 3.39 | 227.08 | 0.81 | 0.54 | |

| FAR-Base | 415M | 3.38 | 248.26 | 0.81 | 0.54 | |

| FAR-Large | 898M | 3.08 | 272.52 | 0.82 | 0.54 |

🔼 Table 2 presents a comparison of class-conditional image generation results on the 256x256 ImageNet dataset. It shows various metrics, including FID (Fréchet Inception Distance) which measures the quality of generated images by comparing them to real images, IS (Inception Score) indicating the diversity and quality of generated samples, Precision, and Recall. Models are categorized based on their architecture and whether or not they utilize rejection sampling, indicated by the “-re” suffix. The evaluation methodology is consistent with the ADM [5] approach and a cfg-scale of 2.0 is used for our model.

read the caption

Table 2: Class-conditional generation on 256×256256256256\times 256256 × 256 ImageNet. Models with the suffix “-re” use rejection sampling. The evaluation protocol and implementation follow ADM [5]. Our model employs a cfg-scale of 2.0.

| Model | Codebook Size | Dis. | Rep. | rFID ↓ | IS ↑ | PSNR ↑ | Usage ↑ |

|---|---|---|---|---|---|---|---|

| VQGAN | 16384 | - | - | 3.71 | 50.05 | 20.56 | 98% |

| 32768 | - | - | 3.60 | 50.60 | 20.56 | 84% | |

| FQGAN | 16384 × 2 | ✗ | ✗ | 2.00 | 54.72 | 22.21 | 97% |

| 16384 × 2 | ✓ | ✗ | 1.84 | 55.04 | 22.04 | 98% | |

| 16384 × 2 | ✗ | ✓ | 1.73 | 55.00 | 21.61 | 98% | |

| 16384 × 2 | ✓ | ✓ | 1.66 | 55.21 | 21.62 | 98% |

🔼 This table presents an ablation study analyzing the impact of different components of the proposed factorized quantization method on the performance of the FQGAN-Dual model. It compares the reconstruction quality (measured by rFID, IS, PSNR) and codebook usage rate across various configurations, including the use of a single codebook (as in standard VQGAN), a factorized codebook without disentanglement or representation learning, and finally the full FQGAN-Dual model which incorporates both disentanglement regularization and representation learning. This allows for a quantitative assessment of the contribution of each proposed technique to the overall improvement in performance.

read the caption

Table 3: Ablation study on different components of the proposed factorized quantization, using the FQGAN-Dual variant.

| Generation Model Head | Top-k Sampling | gFID↓ |

|---|---|---|

| k Linear Classifiers | 4096 | 5.19 |

| 8192 | 6.90 | |

| k MLP Classifiers | 4096 | 5.59 |

| 8192 | 8.88 | |

| Factorized AR Head | 4096 | 4.37 |

| 8192 | 3.74 |

🔼 This table presents an ablation study on different design choices for the autoregressive (AR) head in the context of the proposed Factorized Quantization Generative Adversarial Network (FQGAN). Specifically, it investigates the impact of various AR head architectures on the quality of image generation. The study uses the FAR-Large model with a classifier-free guidance (CFG) scale of 1.75, comparing different head designs: multiple linear classifiers, multiple multi-layer perceptrons (MLPs), and the proposed factorized AR head. The results are evaluated using the Fréchet Inception Distance (FID) metric, a lower FID indicating better image generation quality. The experiment helps determine the optimal design for the AR head that effectively handles multiple sub-tokens produced by the factorized tokenizer in FQGAN.

read the caption

Table 4: Ablation study on the generation model head design with the proposed FQGAN tokenizer. We use FAR-Large model with cfg-scale=1.75 in this study.

Full paper#