
TL;DR

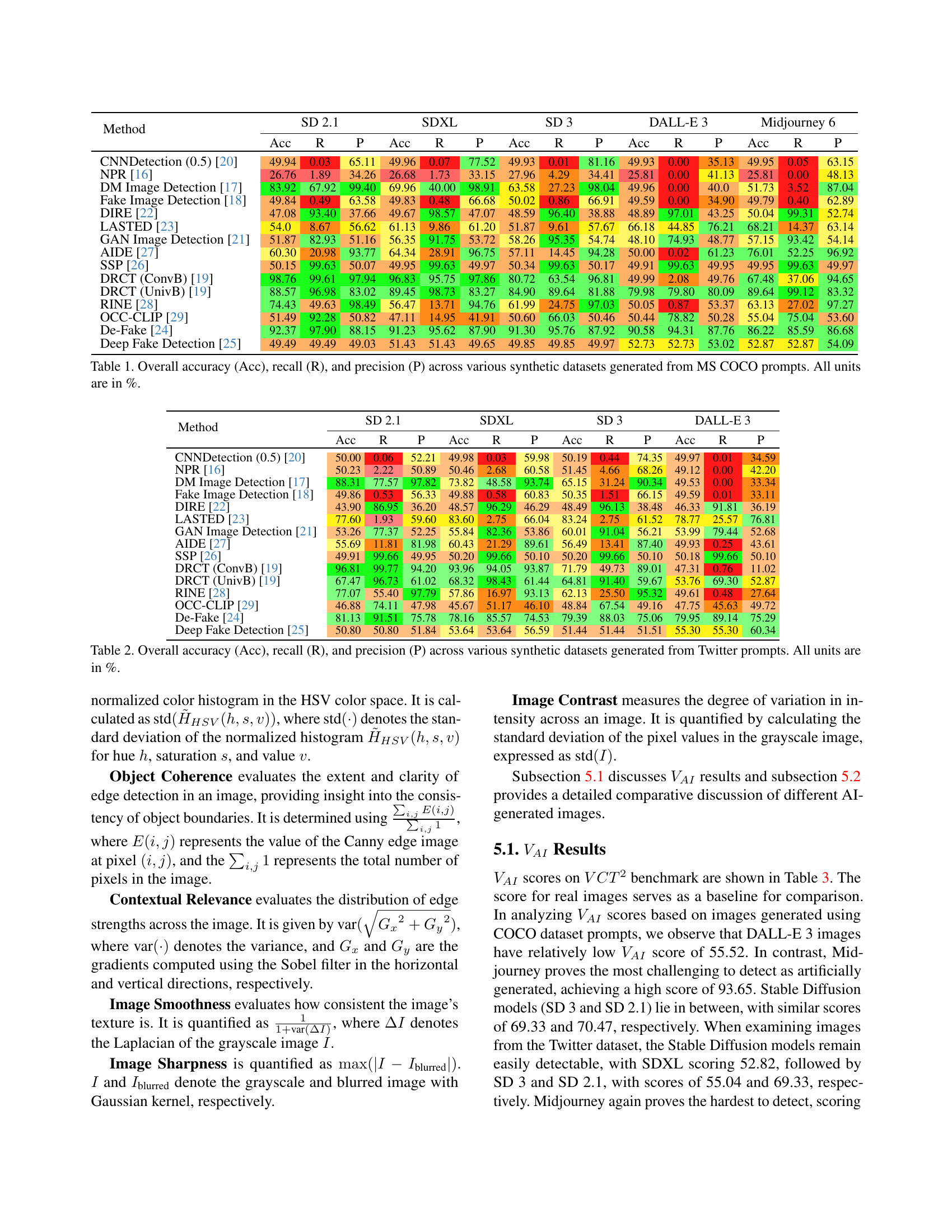

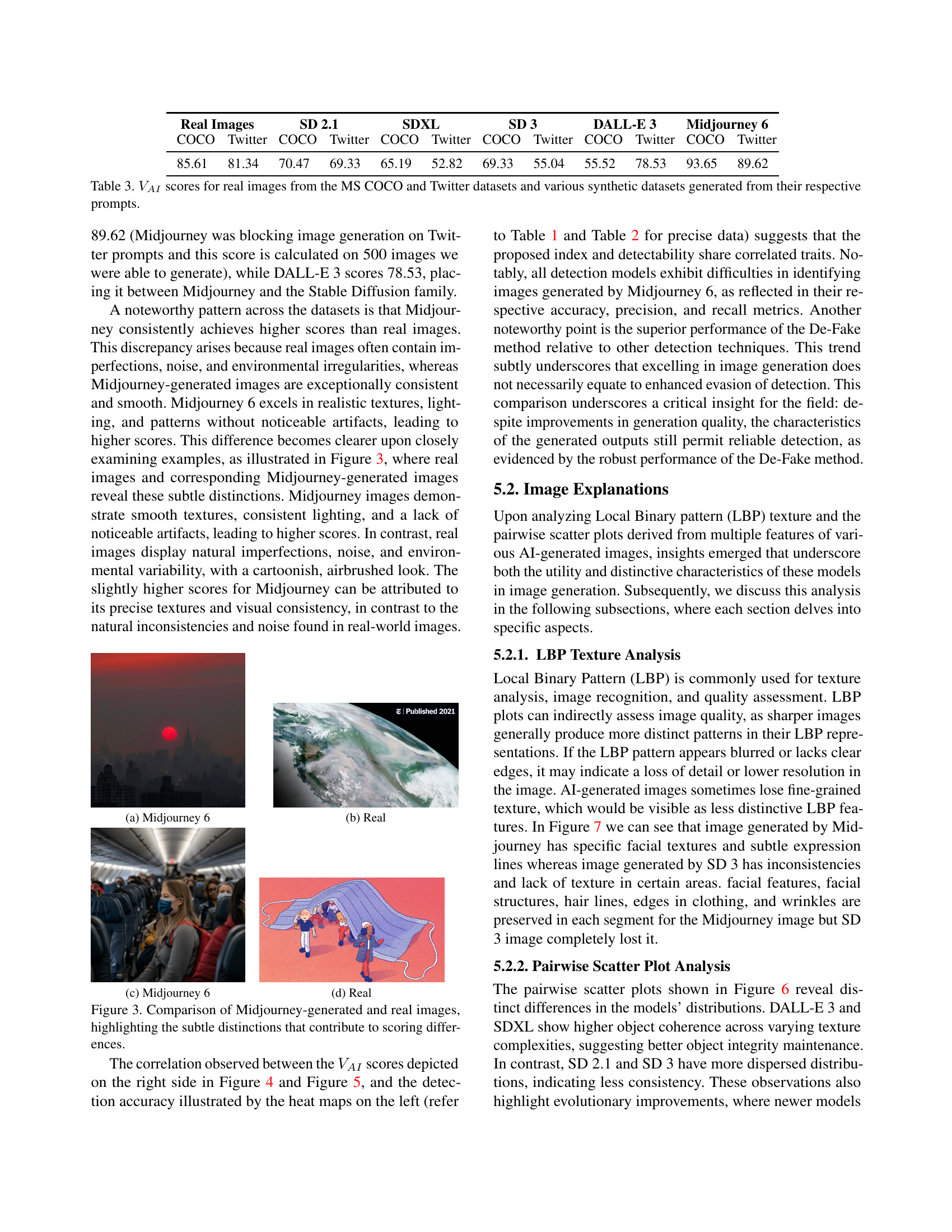

The surge in AI-generated images calls for robust detection methods. Existing detection techniques struggle with images produced by sophisticated generative models, raising concerns about misinformation and malicious use. This research underscores the need for more advanced detection methods and a more comprehensive evaluation framework.

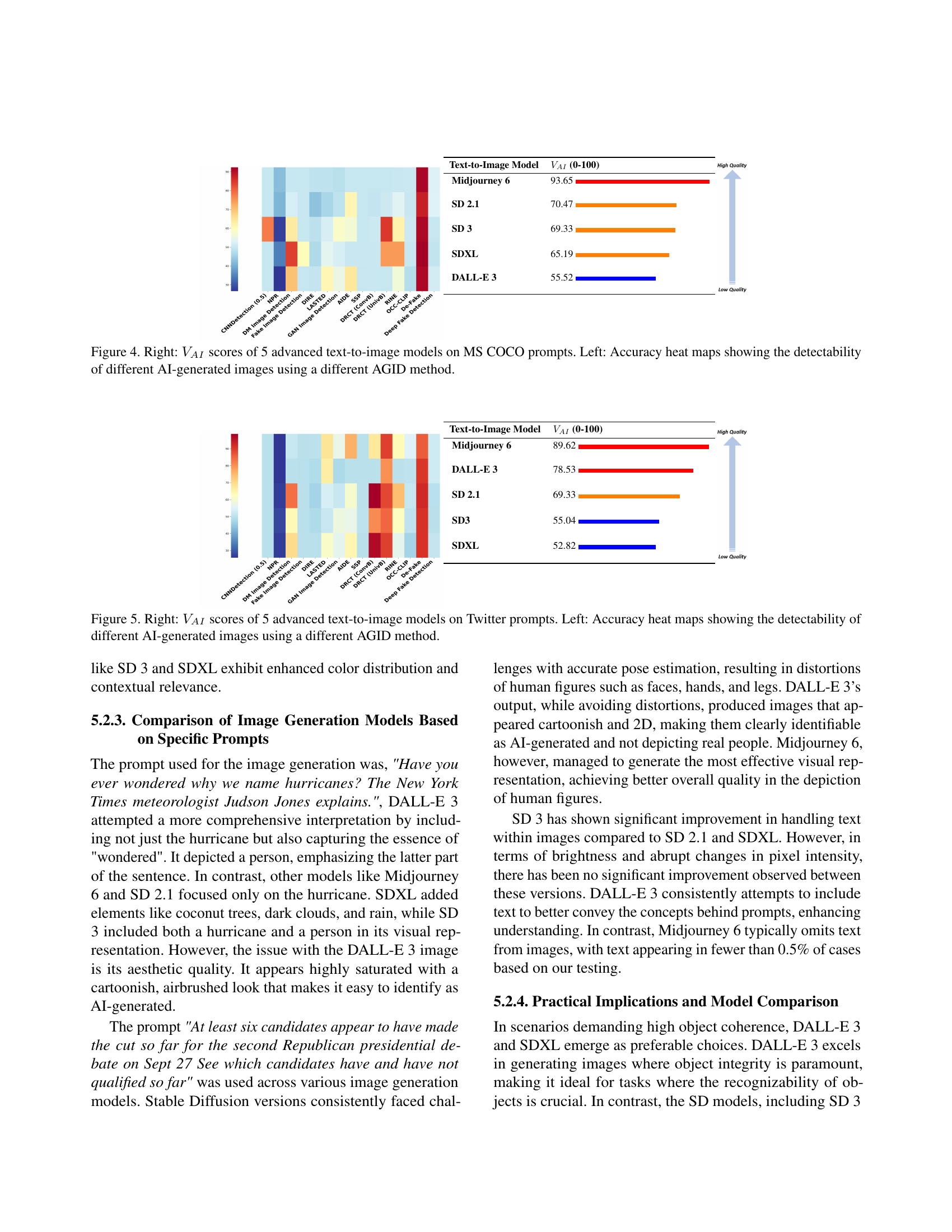

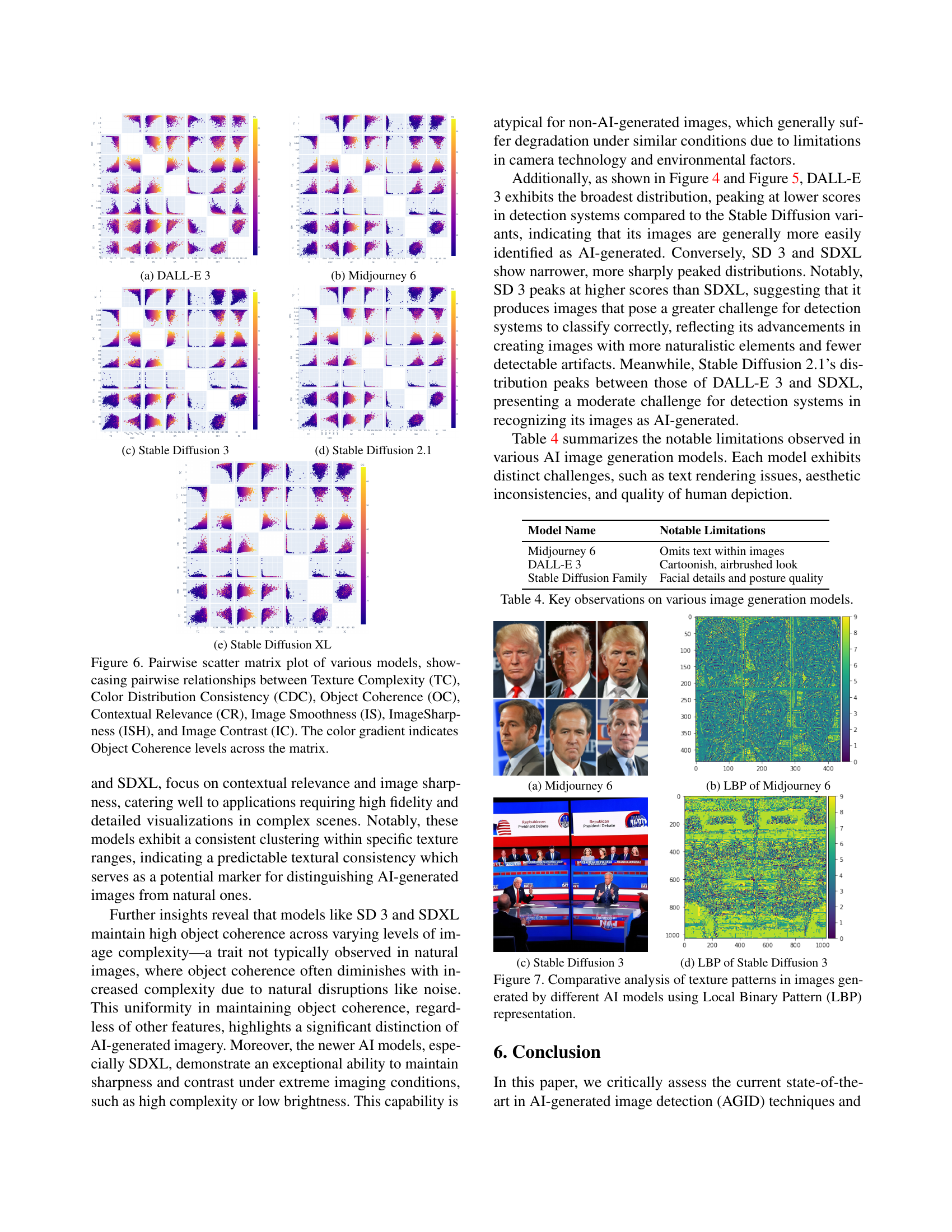

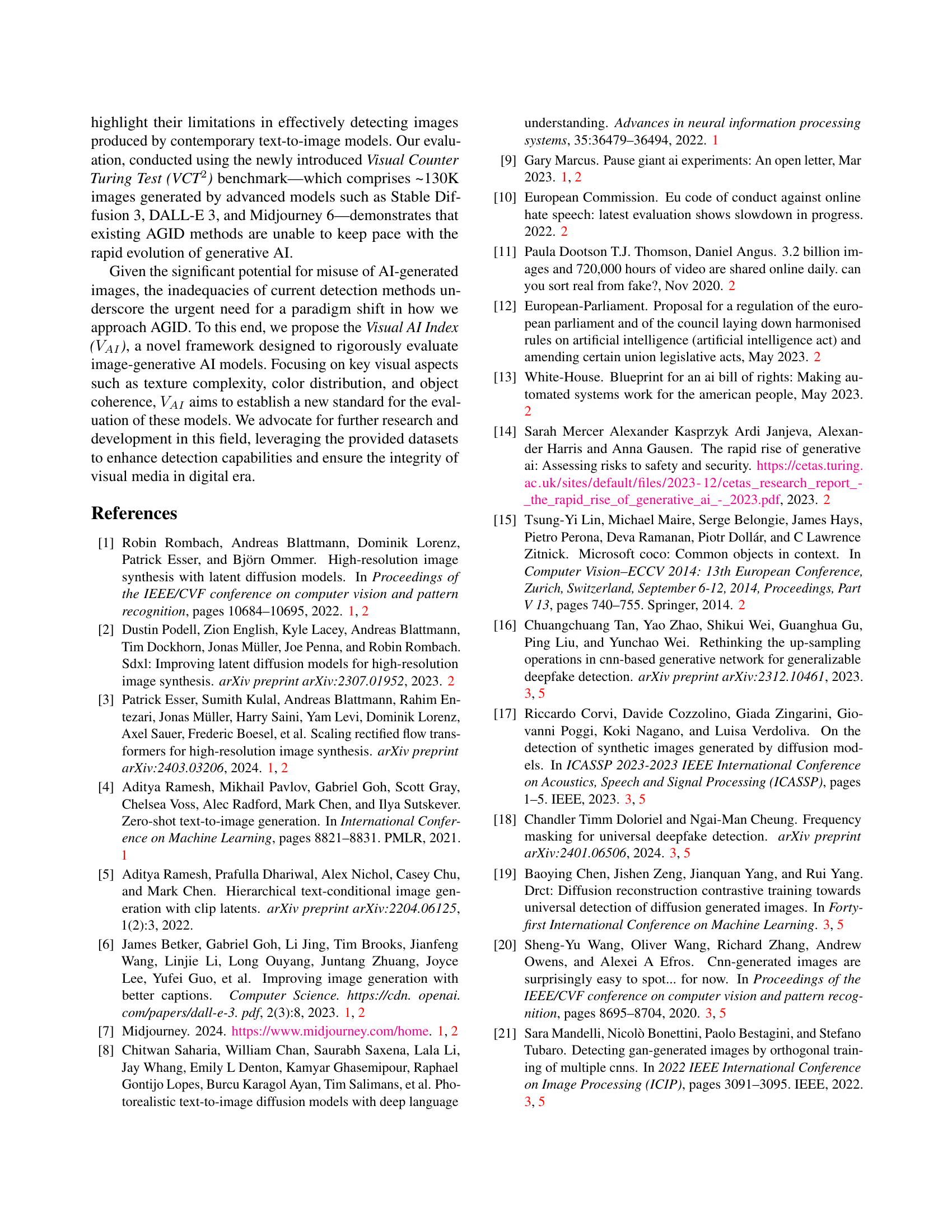

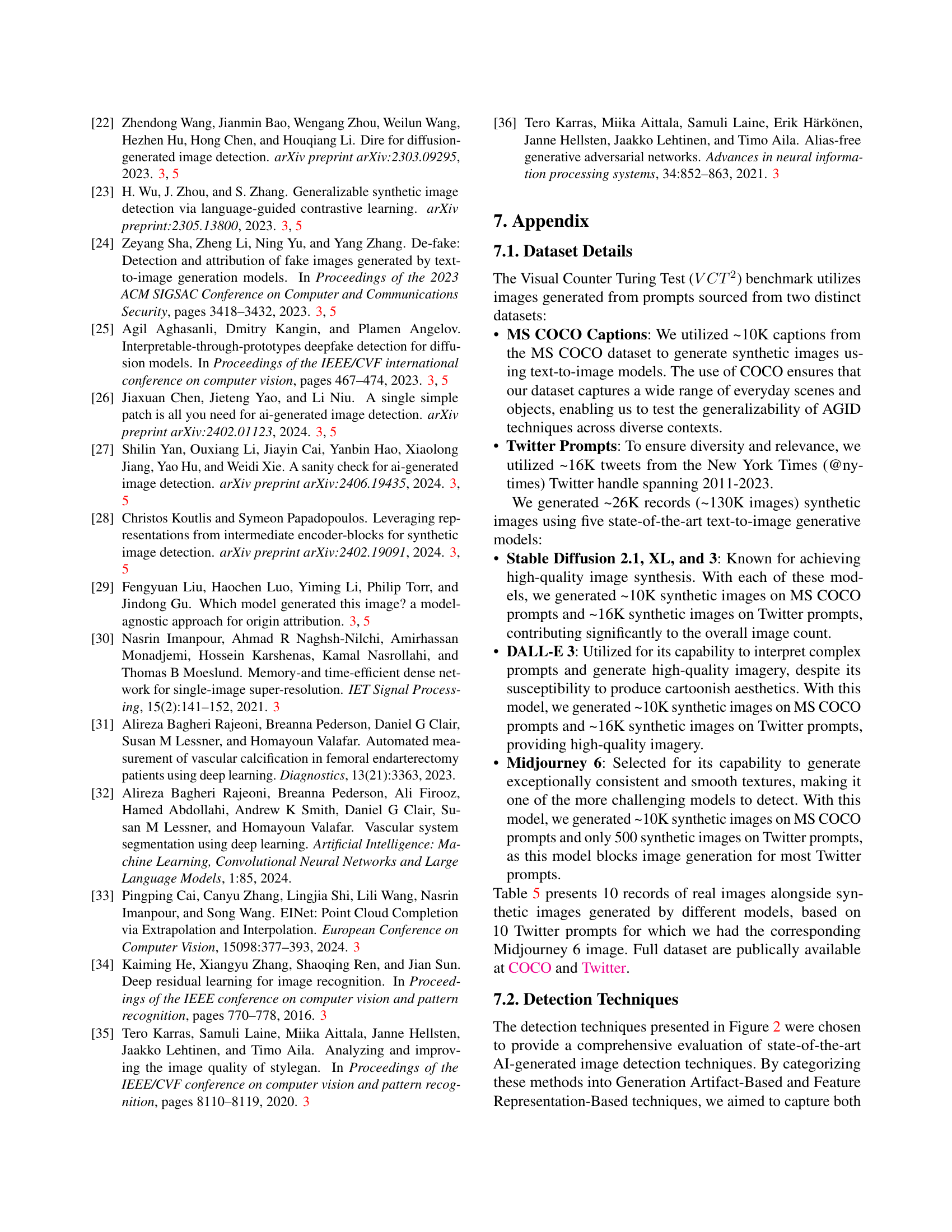

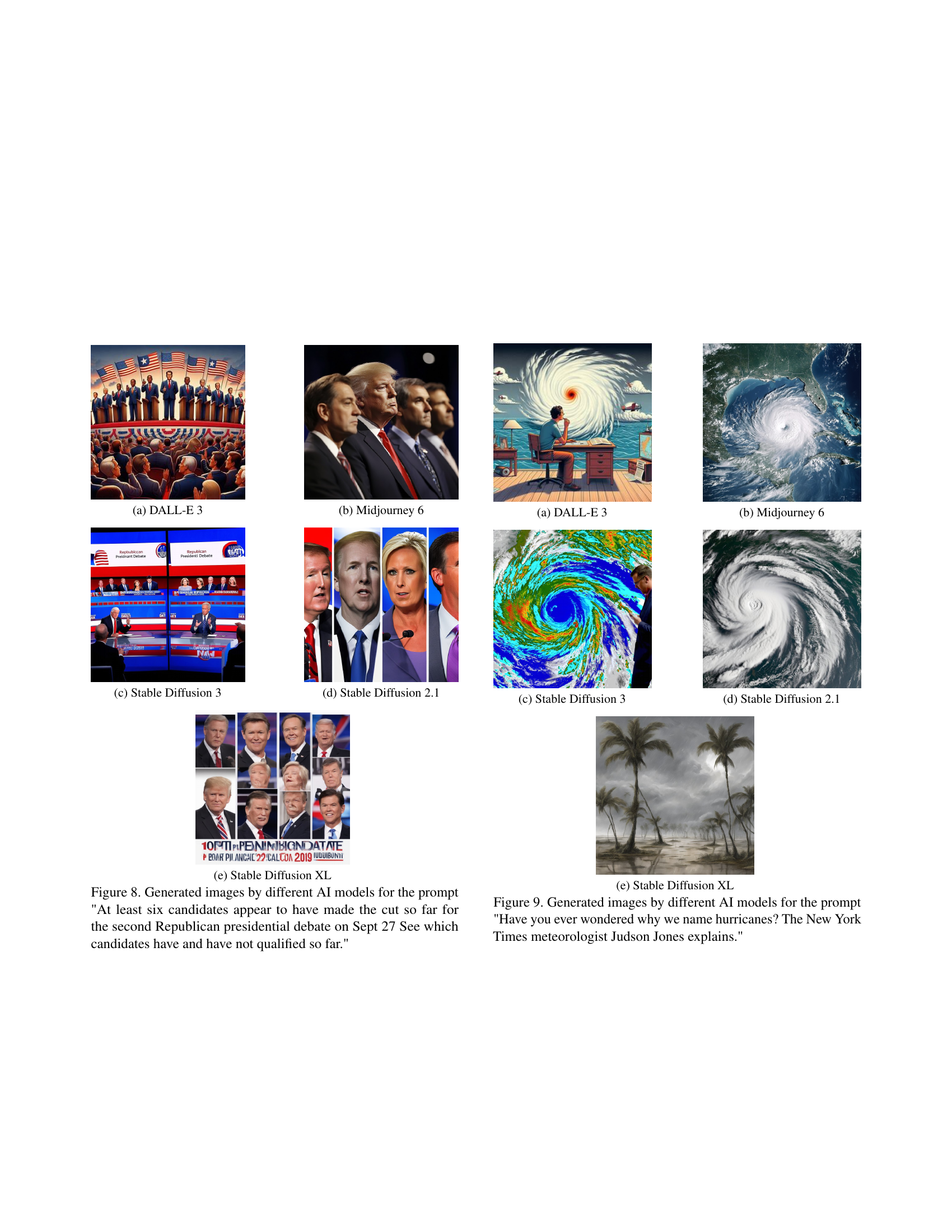

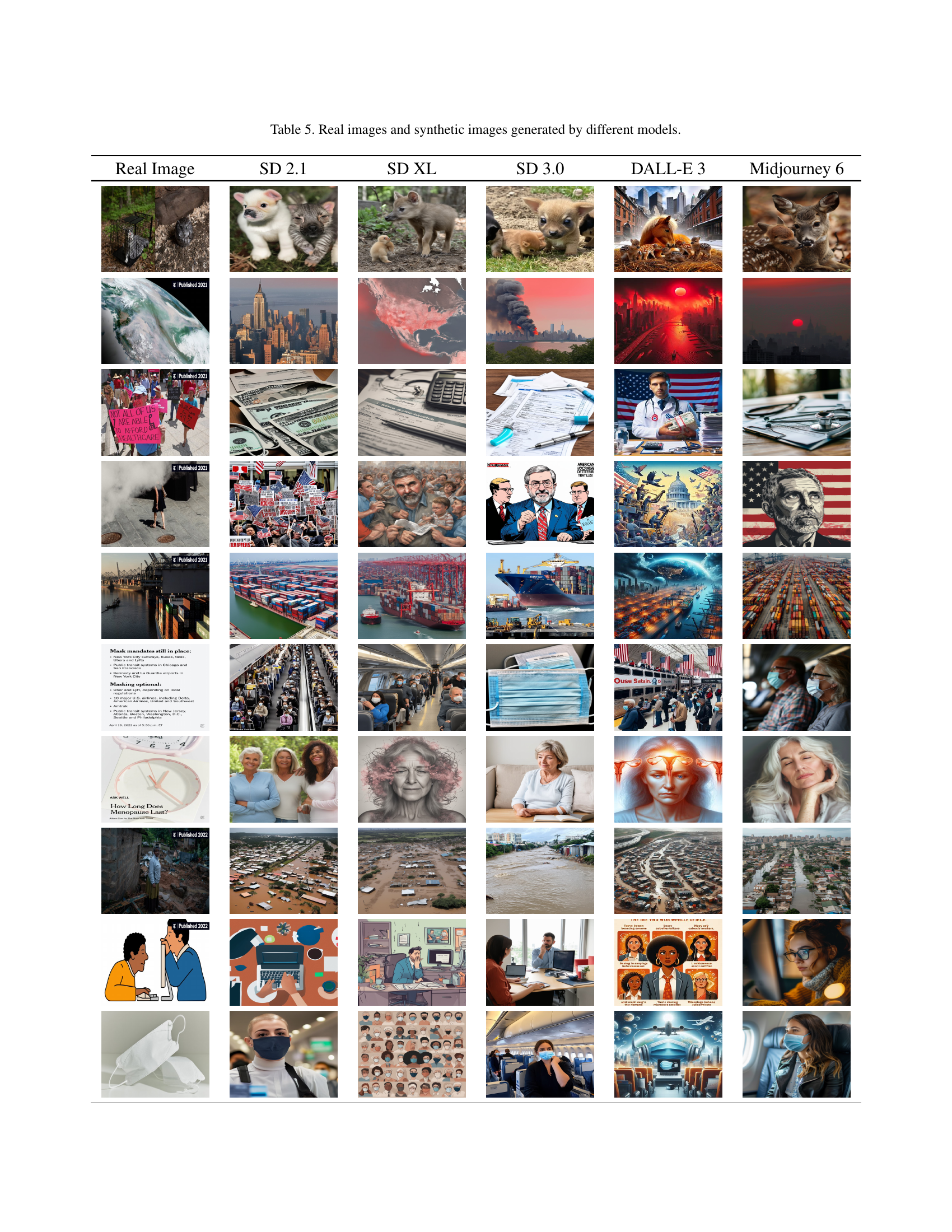

The paper introduces the Visual Counter Turing Test (VCT²) benchmark, which comprises roughly 130,000 images generated by various AI models. It also proposes the Visual AI Index (VAI), a new metric that evaluates the visual quality of generated images across seven key aspects. The results reveal significant shortcomings in existing detectors, underscoring the need for improved methods and showing the value of VAI for evaluating generative AI models.

Key Takeaways

Why does it matter?

This paper matters because it shows that current methods for detecting AI-generated images are inadequate and proposes ways to address the problem. It introduces a new benchmark and a new evaluation metric that can guide future research in this rapidly evolving field.

Visual Insights

Full paper