↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Large Language Models (LLMs) struggle with complex reasoning tasks, particularly in mathematics, due to a lack of high-quality training data. Existing datasets are limited in size and diversity, hindering the development of sophisticated mathematical reasoning abilities in AI systems. This creates a significant obstacle for advancing AI’s capacity for problem-solving in mathematical domains.

This paper introduces Template-based Data Generation (TDG), a novel method that uses LLMs (specifically GPT-4) to automatically generate meta-templates for creating a vast array of high-quality mathematical problems and their solutions. The researchers used TDG to generate the TemplateGSM dataset comprising over 7 million problems, which are publicly available. This addresses the data scarcity problem and significantly improves the ability to train and evaluate LLMs for mathematical reasoning. The method also incorporates a verification process to ensure data accuracy.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in NLP and AI because it introduces a novel method for generating high-quality mathematical datasets. The scarcity of such datasets has been a major bottleneck in developing LLMs capable of complex reasoning. This research directly addresses that limitation, opening new avenues for improving the mathematical problem-solving abilities of AI systems. The publicly available dataset and code also facilitate reproducibility and further research.

Visual Insights#

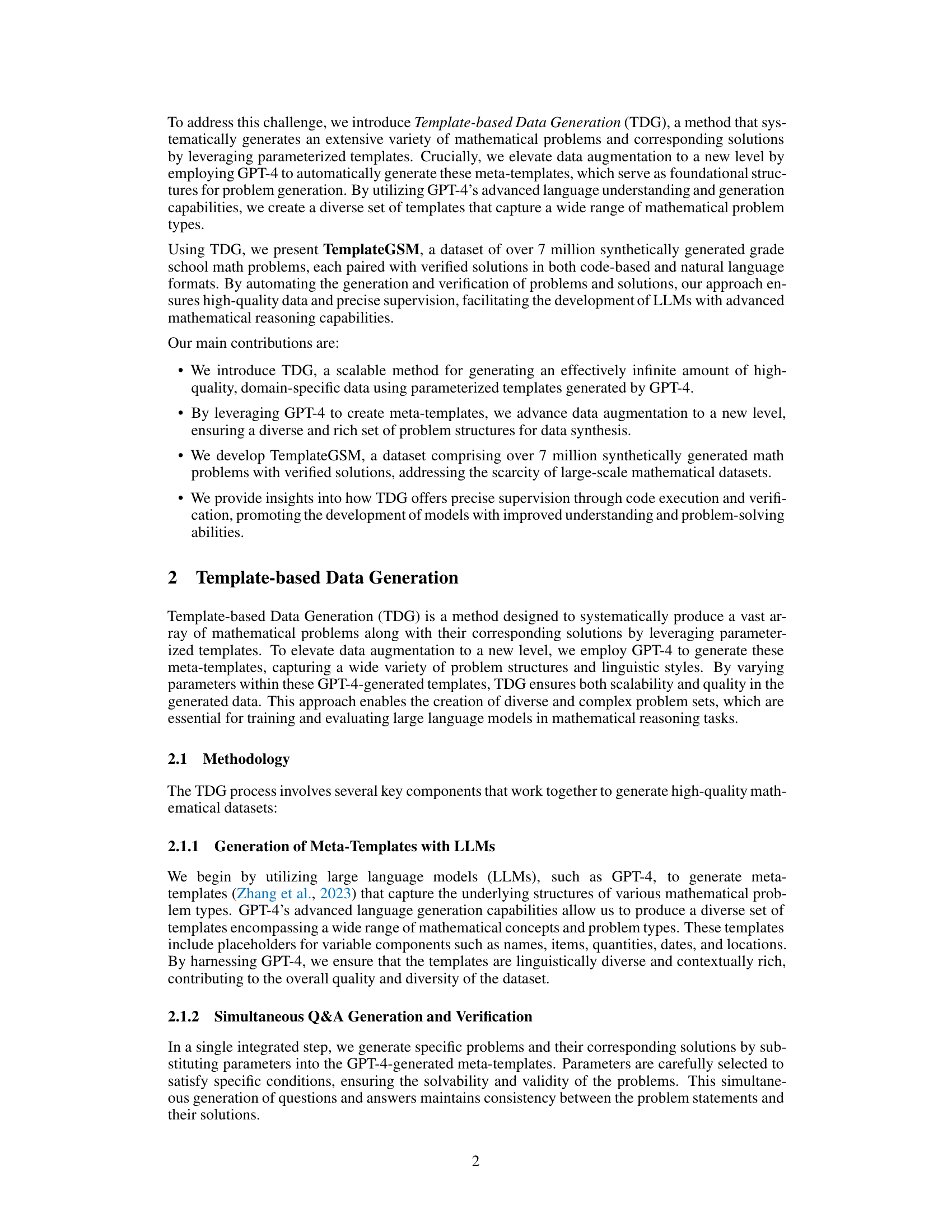

| Metric | Value |

|---|---|

| Number of source templates | 7,473 |

| Total number of problems | 7,473,000 |

| Problem length range (tokens) | [18, 636] |

| Code solution length range (tokens) | [30, 513] |

| Code solution length average (tokens) | 123.43 ± 40.82 |

| Natural language solution length range (tokens) | [1, 1024] |

| Natural language solution length average (tokens) | 77.87 ± 33.03 |

🔼 This table presents a statistical overview of the TemplateGSM dataset, which contains synthetically generated grade school math problems. It details the number of unique templates used to generate the problems, the total count of problems in the dataset, and the ranges and averages of the lengths (measured in tokens) of both the problem statements and their corresponding solutions (in code and natural language formats). The tokenizer used is specified as ‘o-200k’. This information is crucial for understanding the dataset’s scale and characteristics, particularly for researchers working with large language models (LLMs) in the context of mathematical problem-solving.

read the caption

Table 1: Statistics of the TemplateGSM Dataset (o-200k tokenizer)

Full paper#