
TL;DR

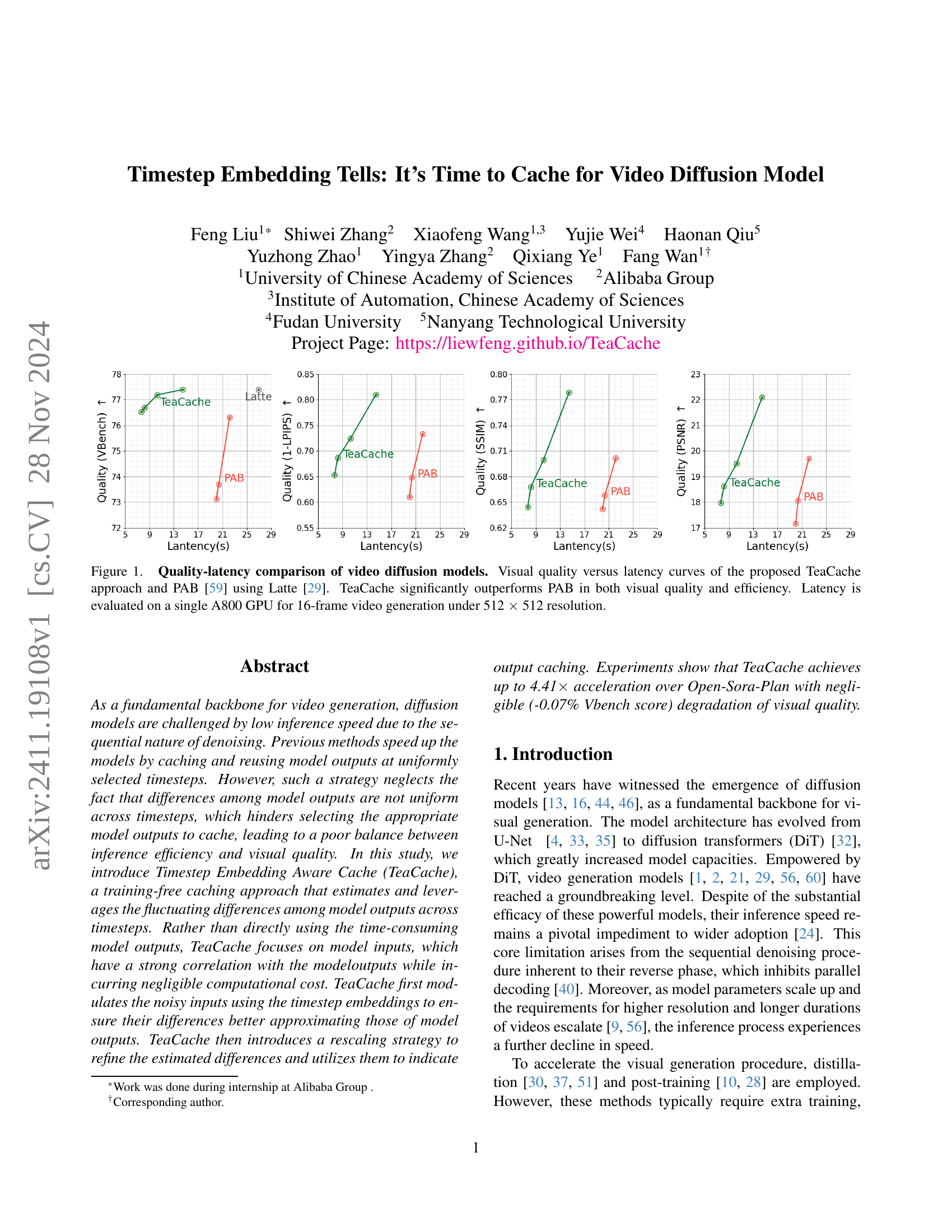

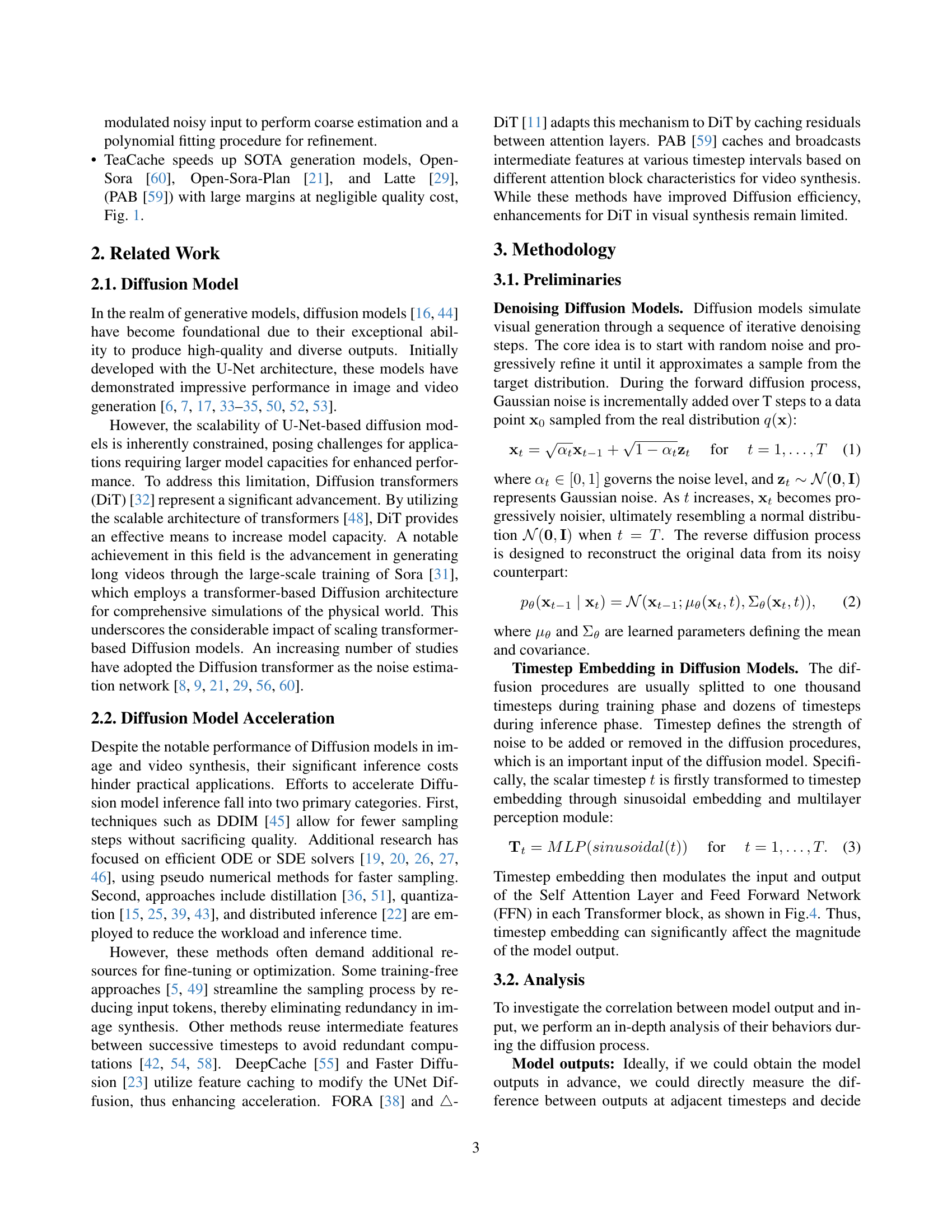

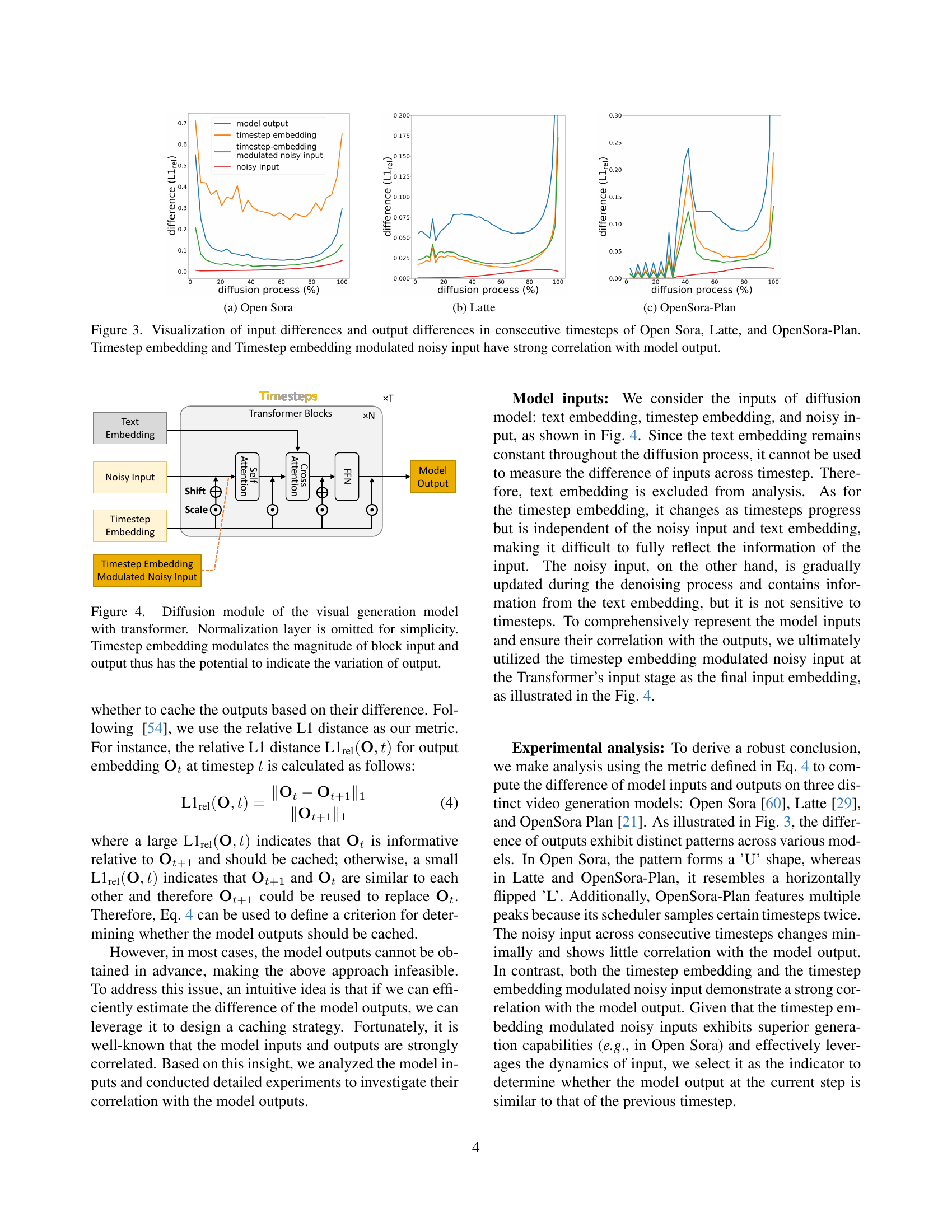

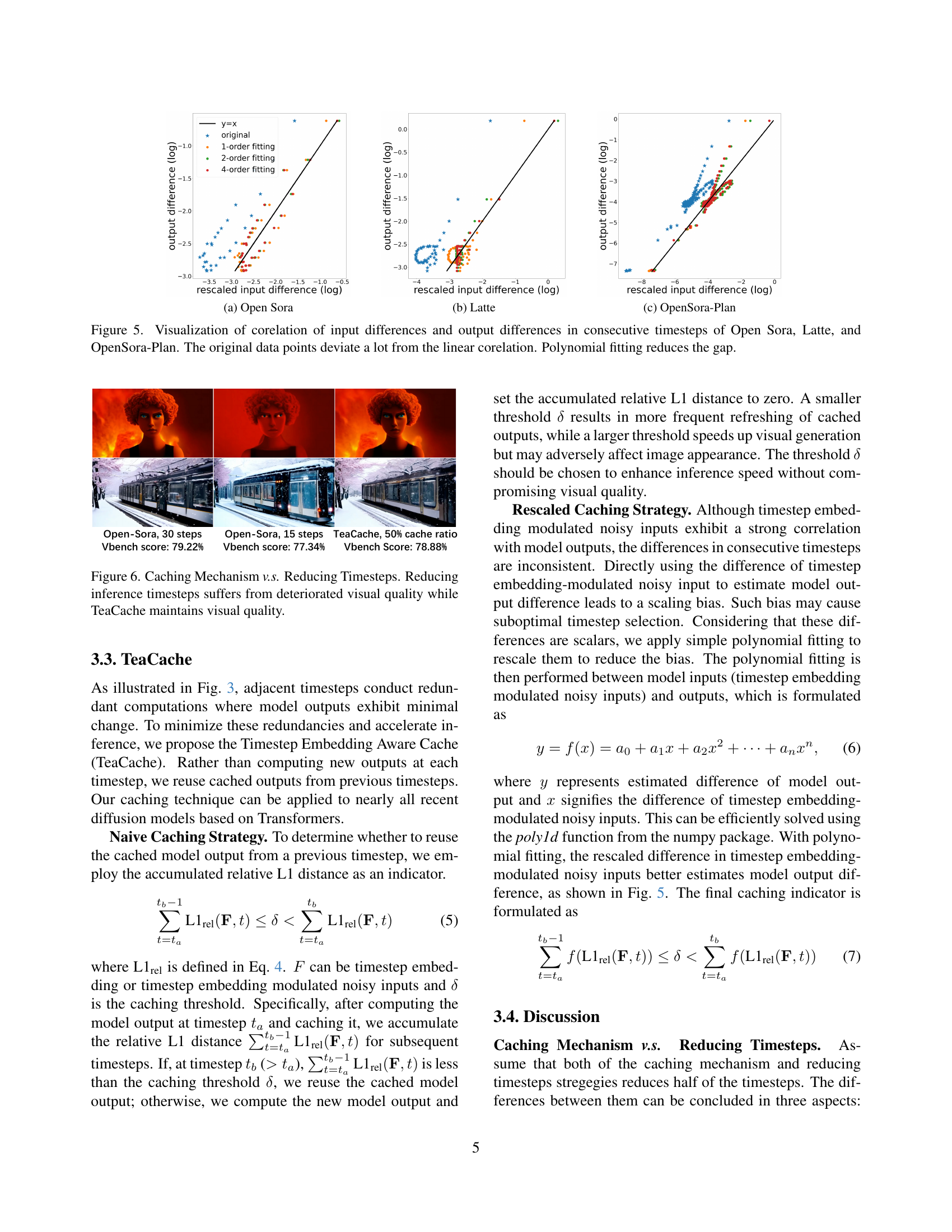

Video generation with diffusion models is slow because denoising runs sequentially over many timesteps, and each timestep requires a full forward pass of the model. Existing acceleration methods cache model outputs and reuse them at uniformly spaced timesteps, but this is suboptimal because the difference between outputs at consecutive timesteps is not uniform across the denoising process. This paper introduces TeaCache (Timestep Embedding Aware Cache), a training-free method that addresses this problem.
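To make the uniform-interval baseline concrete, here is a minimal illustration of such a schedule. The function name `uniform_cache_schedule` and its `interval` parameter are hypothetical and not taken from any specific method; the point is only that which steps get recomputed is fixed in advance, regardless of how much the output actually changes.

```python
def uniform_cache_schedule(num_steps: int, interval: int) -> list[bool]:
    """Return one flag per denoising step: True means the model is evaluated,
    False means the cached output from the last evaluated step is reused.

    Uniform-interval caching (hypothetical illustration): evaluate every
    `interval` steps, independent of how much the outputs differ.
    """
    if interval < 1:
        raise ValueError("interval must be >= 1")
    return [step % interval == 0 for step in range(num_steps)]


schedule = uniform_cache_schedule(num_steps=10, interval=3)
print(schedule)
# [True, False, False, True, False, False, True, False, False, True]
```

Because the schedule ignores the model's behavior, it recomputes too often where outputs barely change and too rarely where they change quickly, which is the inefficiency TeaCache targets.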

Instead of measuring differences between model outputs, which would require computing those outputs, TeaCache estimates them from the model inputs, which are strongly correlated with the outputs and available at negligible cost. It modulates the noisy inputs with the timestep embeddings so that differences between the modulated inputs better approximate differences between the outputs. A rescaling strategy then refines these estimates, letting TeaCache decide more accurately when a cached output can be reused, which improves both caching efficiency and visual quality. Experiments show that TeaCache significantly outperforms existing caching methods in both speed and visual quality.
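The caching decision described above can be sketched as follows. This is a simplified, hypothetical illustration under stated assumptions, not the paper's implementation: it assumes the per-step difference is a relative L1 distance between consecutive modulated inputs, that the rescaling is a polynomial applied to that distance, and that rescaled distances are accumulated until they reach a threshold, at which point the model is recomputed. The polynomial coefficients and threshold used here are made-up placeholders, not fitted values.

```python
from typing import Sequence


def relative_l1(curr: Sequence[float], prev: Sequence[float]) -> float:
    """Relative L1 distance ||curr - prev||_1 / ||prev||_1."""
    num = sum(abs(c - p) for c, p in zip(curr, prev))
    den = sum(abs(p) for p in prev)
    return num / den if den > 0 else float("inf")


def rescale(x: float, coeffs: Sequence[float]) -> float:
    """Evaluate a polynomial at x (coefficients ordered highest degree first).
    Assumption: stands in for the paper's rescaling step."""
    result = 0.0
    for c in coeffs:
        result = result * x + c
    return result


def teacache_style_schedule(
    modulated_inputs: Sequence[Sequence[float]],
    threshold: float,
    coeffs: Sequence[float],
) -> list[bool]:
    """Return one flag per step: True = run the model, False = reuse cache."""
    flags: list[bool] = []
    accumulated = 0.0
    prev = None
    for x in modulated_inputs:
        if prev is None:
            flags.append(True)  # nothing cached yet, so the first step is computed
        else:
            # Estimate the output change from the cheap input-side difference.
            accumulated += rescale(relative_l1(x, prev), coeffs)
            if accumulated >= threshold:
                flags.append(True)  # estimated change is large: recompute
                accumulated = 0.0  # restart accumulation after a fresh output
            else:
                flags.append(False)  # small estimated change: reuse cached output
        prev = x  # always compare against the previous step's modulated input
    return flags


# Toy modulated inputs; coeffs [1.0, 0.0] make the rescaling the identity.
inputs = [[1.0, 1.0], [1.1, 1.0], [1.2, 1.0], [2.0, 1.0], [2.05, 1.0]]
print(teacache_style_schedule(inputs, threshold=0.1, coeffs=[1.0, 0.0]))
# [True, False, False, True, False]
```

Unlike the uniform schedule, the steps that get recomputed here depend on how much the (modulated) inputs actually change, so slowly varying stretches reuse the cache longer.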

Key Takeaways

Why does it matter?

This paper is important because it presents TeaCache, a novel training-free method that significantly accelerates video generation by deciding adaptively when model outputs can be cached and reused. It addresses a critical bottleneck in current diffusion models, namely the cost of running many sequential denoising steps, and improves inference speed without noticeably sacrificing visual quality. Because it requires no retraining, the approach is broadly applicable and opens avenues for research into more efficient inference strategies for other generative models. It offers a practical solution to a prevalent problem in the field and is directly relevant to ongoing efforts to improve the efficiency and scalability of visual generative models.

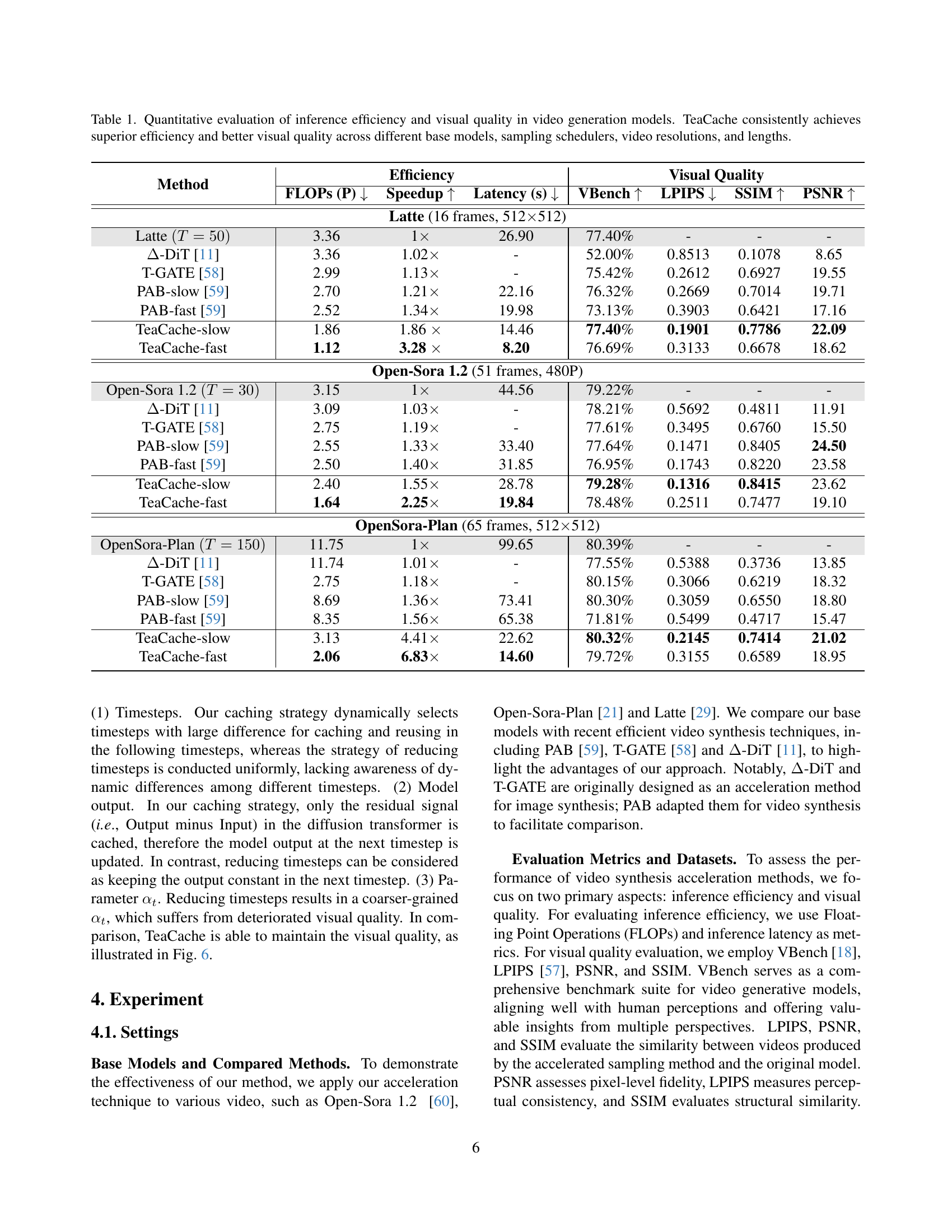

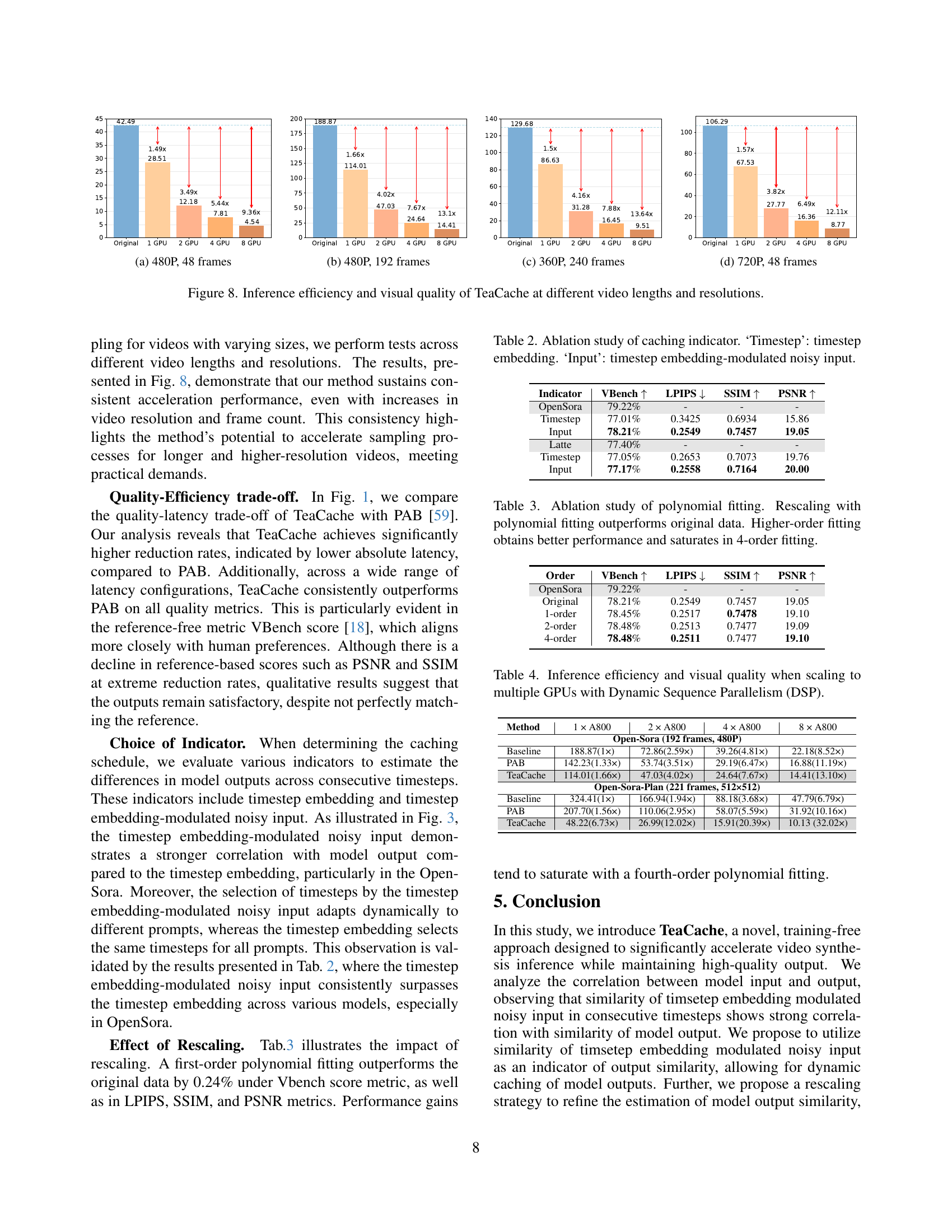

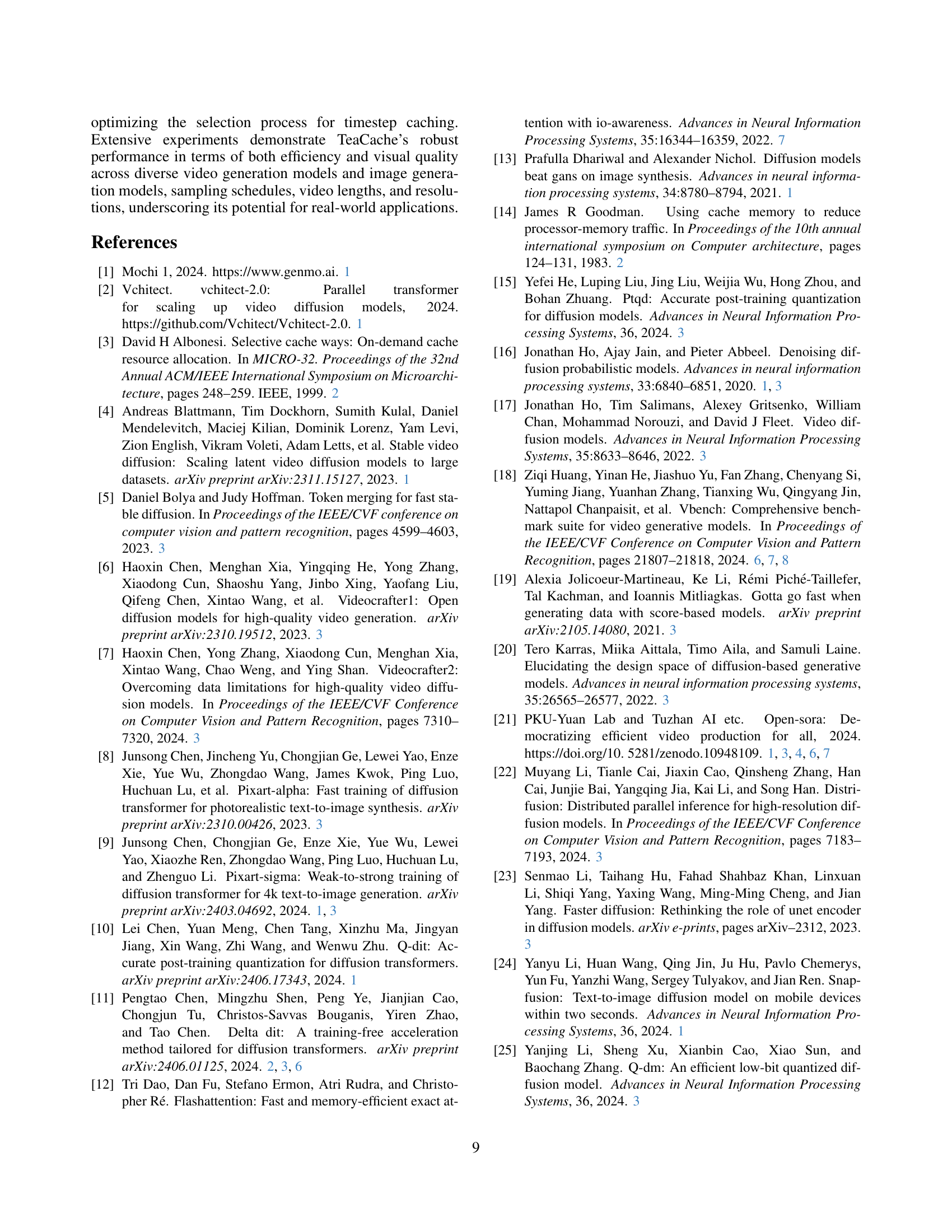

Visual Insights

Full paper