TL;DR#

Large language models (LLMs) have shown impressive capabilities but often lack strong reasoning skills. OpenAI’s o1 model demonstrated significant advancements in this area, spurring multiple replication attempts. However, replicating and improving upon the o1 model for specialized tasks like code generation presents significant challenges, particularly concerning code evaluation and defining appropriate reward functions for the reasoning process.

This paper introduces O1-CODER, a system designed to replicate the o1 model specifically for coding. It employs a unique approach, combining reinforcement learning with Monte Carlo Tree Search. This framework includes a Test Case Generator for automated code testing and iterative fine-tuning of the model to generate pseudocode initially, followed by the complete code. The result is a system that demonstrates improved System-2 thinking capabilities for generating code, which is evaluated based on both the quality of the reasoning steps and the correctness of the final code.

Key Takeaways#

Why does it matter?#

This paper is important because it presents a novel approach to replicating and improving upon OpenAI’s o1 model, focusing on coding tasks. It introduces a novel framework integrating reinforcement learning and Monte Carlo Tree Search, addressing key challenges in code generation evaluation and reasoning process design. This work significantly contributes to the ongoing efforts in creating more advanced AI models capable of complex reasoning, and opens up new avenues for research in reinforcement learning and code generation.

Visual Insights#

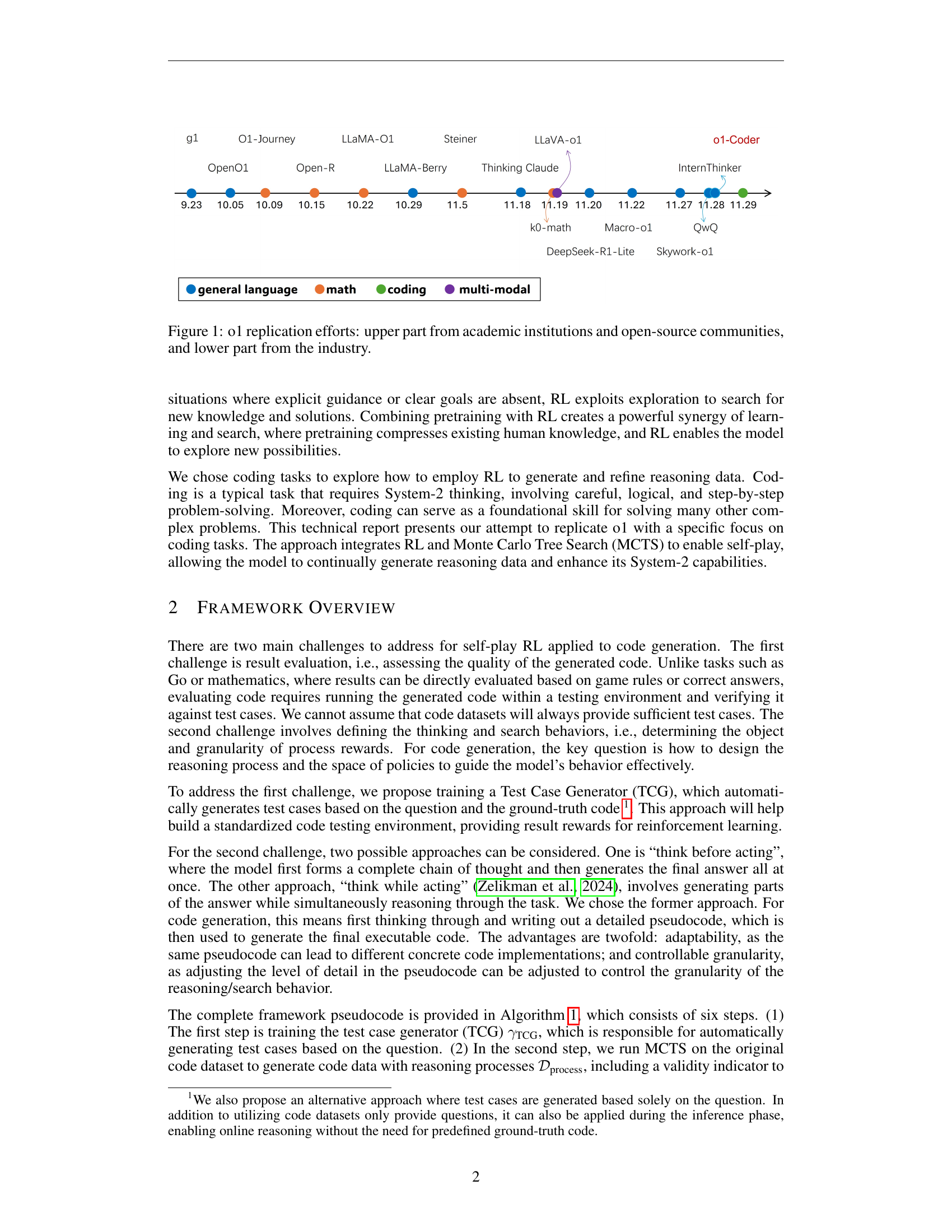

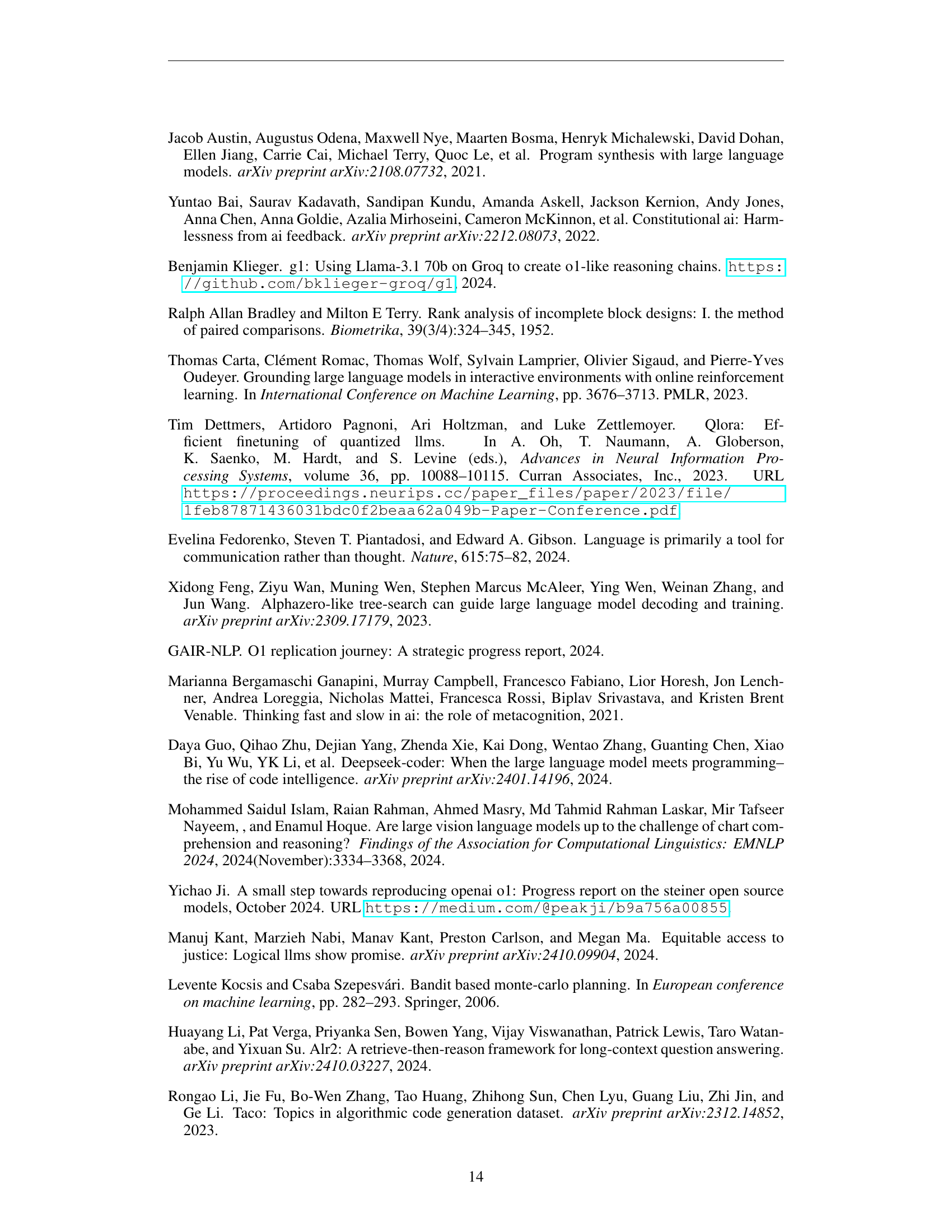

🔼 This figure illustrates the timeline and various efforts to replicate OpenAI’s o1 model. The upper part showcases contributions from academic research institutions and open-source communities, while the lower part highlights those from industry players. Each entry is marked with its name and the date of its release, providing a visual overview of the rapid development and wide interest in replicating and extending the o1 model.

read the caption

Figure 1: o1 replication efforts: upper part from academic institutions and open-source communities, and lower part from the industry.

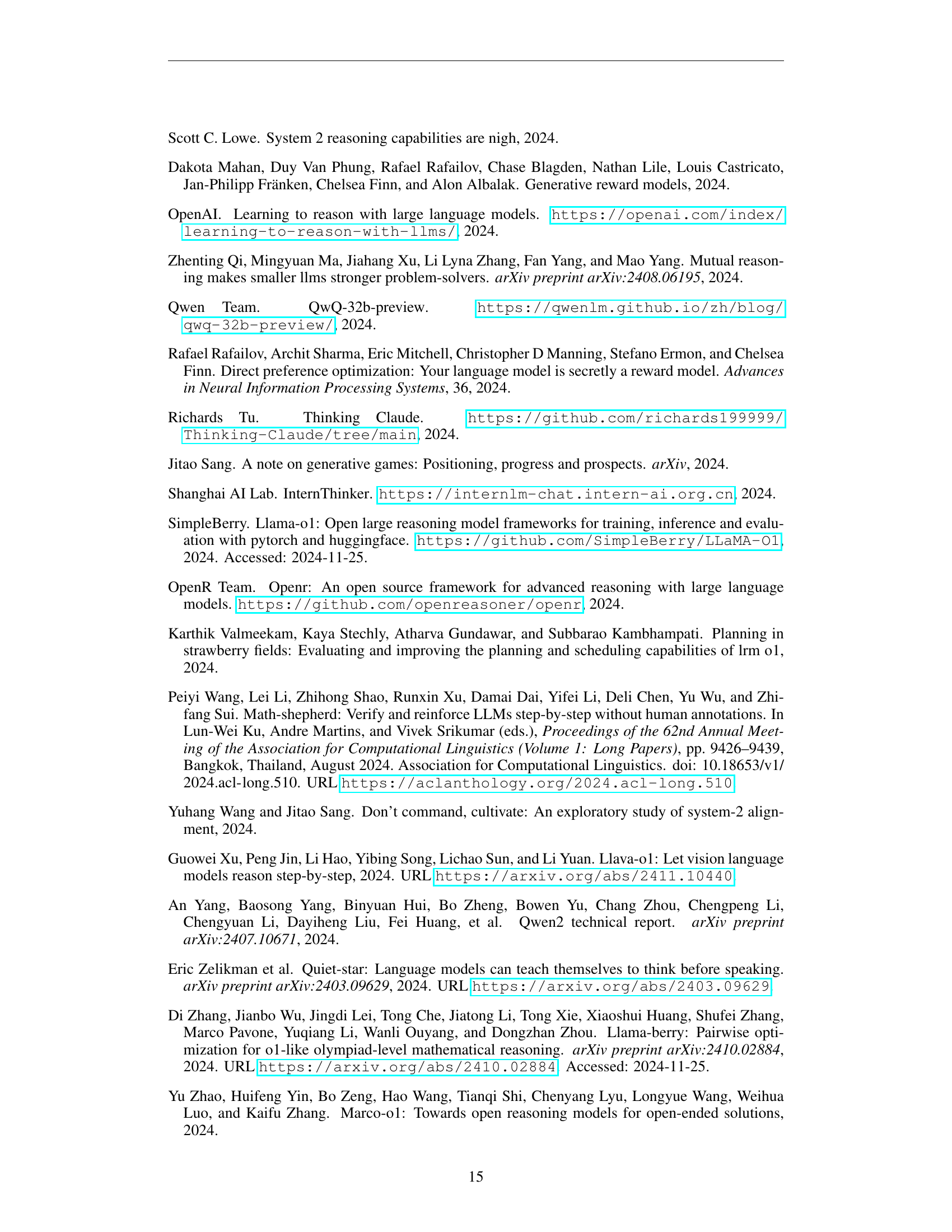

| Model | Qwen2.5-1.5B | Qwen2.5-3B | Qwen2.5-7B | Qwen2.5-Coder-7B | ||||

|---|---|---|---|---|---|---|---|---|

| Vanilla | Pseudocode | Vanilla | Pseudocode | Vanilla | Pseudocode | Vanilla | Pseudocode | |

| Pass@1(%) | 55.8 | 46.7**(-9.1)** | 56.3 | 51.3**(-5.0)** | 59.8 | 50.1**(-9.7)** | 57.7 | 58.2(+0.5) |

| ASPR(%) | 49.9 | 54.5(+4.6) | 52.0 | 70.6(+18.6) | 66.4 | 78.1(+11.7) | 49.3 | 74.9(+25.6) |

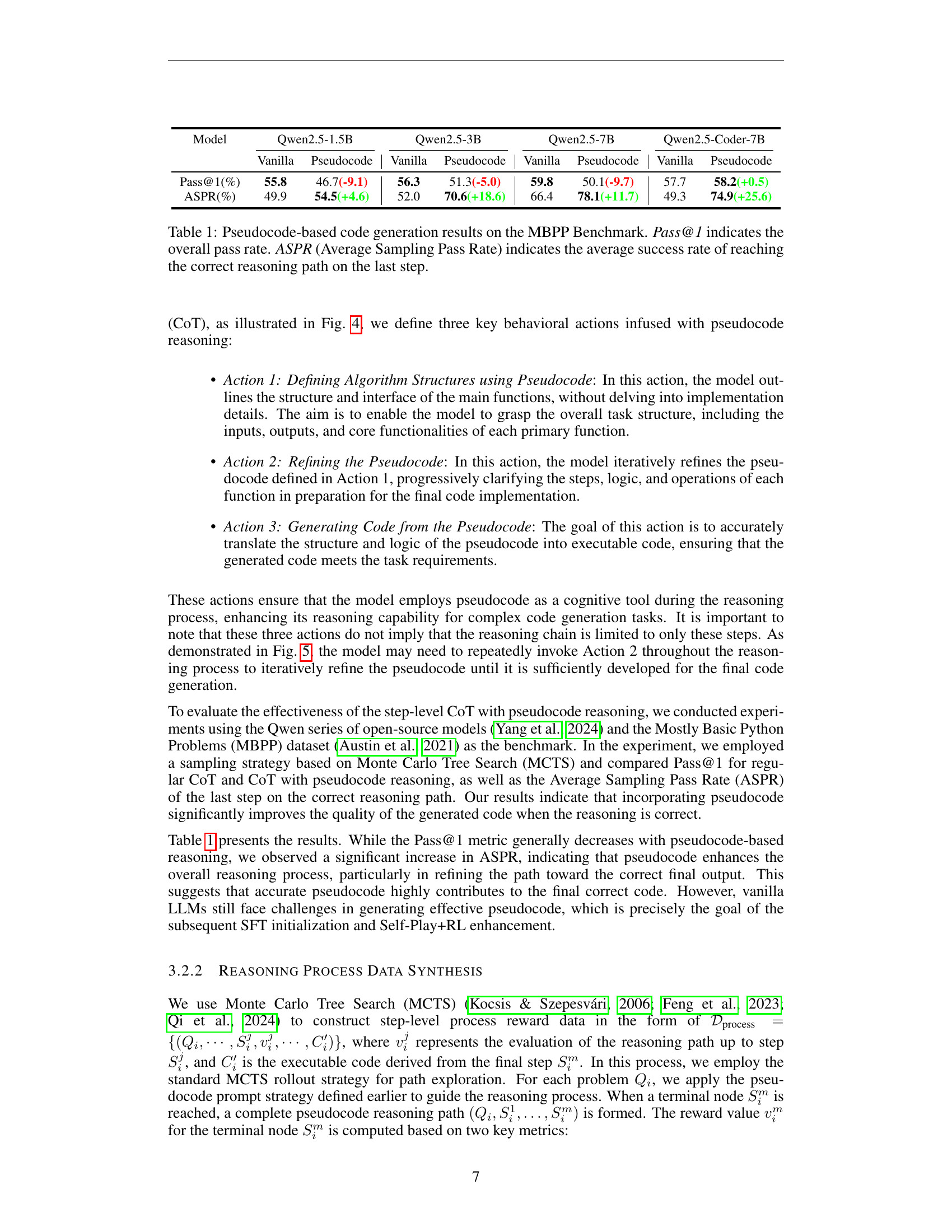

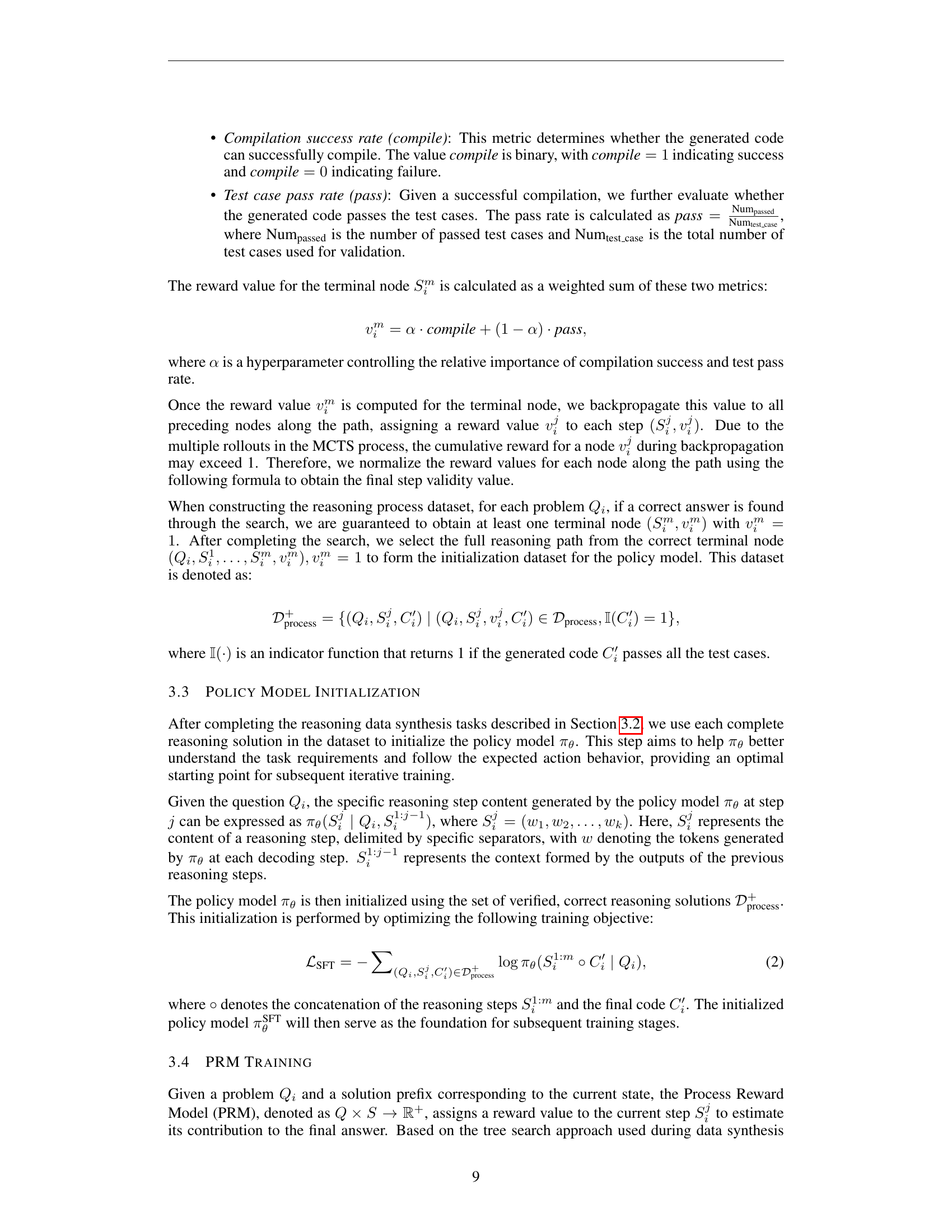

🔼 This table presents the performance of different language models on the Mostly Basic Python Problems (MBPP) benchmark when generating code based on pseudocode. The ‘Pass@1’ metric shows the percentage of times the model successfully generated correct code on its first attempt. The ‘ASPR’ (Average Sampling Pass Rate) metric is more nuanced; it indicates the average success rate of the model in arriving at the correct reasoning path when generating code. A higher ASPR value suggests a more effective reasoning process, even if the final code might not be correct. The models used include several variants of the Qwen model family.

read the caption

Table 1: Pseudocode-based code generation results on the MBPP Benchmark. Pass@1 indicates the overall pass rate. ASPR (Average Sampling Pass Rate) indicates the average success rate of reaching the correct reasoning path on the last step.

In-depth insights#

O1 Replication for Code#

The concept of “O1 Replication for Code” involves recreating or approximating OpenAI’s advanced language model, known as ‘o1’, specifically tailored for code generation tasks. This is a significant undertaking because ‘o1’ showcases impressive system-2 thinking, going beyond simple pattern recognition to true reasoning and complex problem-solving. A successful replication would require understanding and implementing ‘o1’’s underlying architecture, which likely incorporates reinforcement learning and techniques like Monte Carlo Tree Search (MCTS) to guide the model’s decision-making process. The challenges are considerable, encompassing the need for large-scale datasets of code, sophisticated evaluation methods to assess code correctness and reasoning quality, and the ability to efficiently train and fine-tune such a large model. A key focus should be on the methodology used to generate and refine reasoning data, potentially through self-play mechanisms or other techniques that encourage the model to learn from its own code generation attempts and errors. The ultimate goal is not just to replicate ‘o1’ but also to improve upon its capabilities, potentially pushing the boundaries of automated code generation and making it more accessible and beneficial to developers.

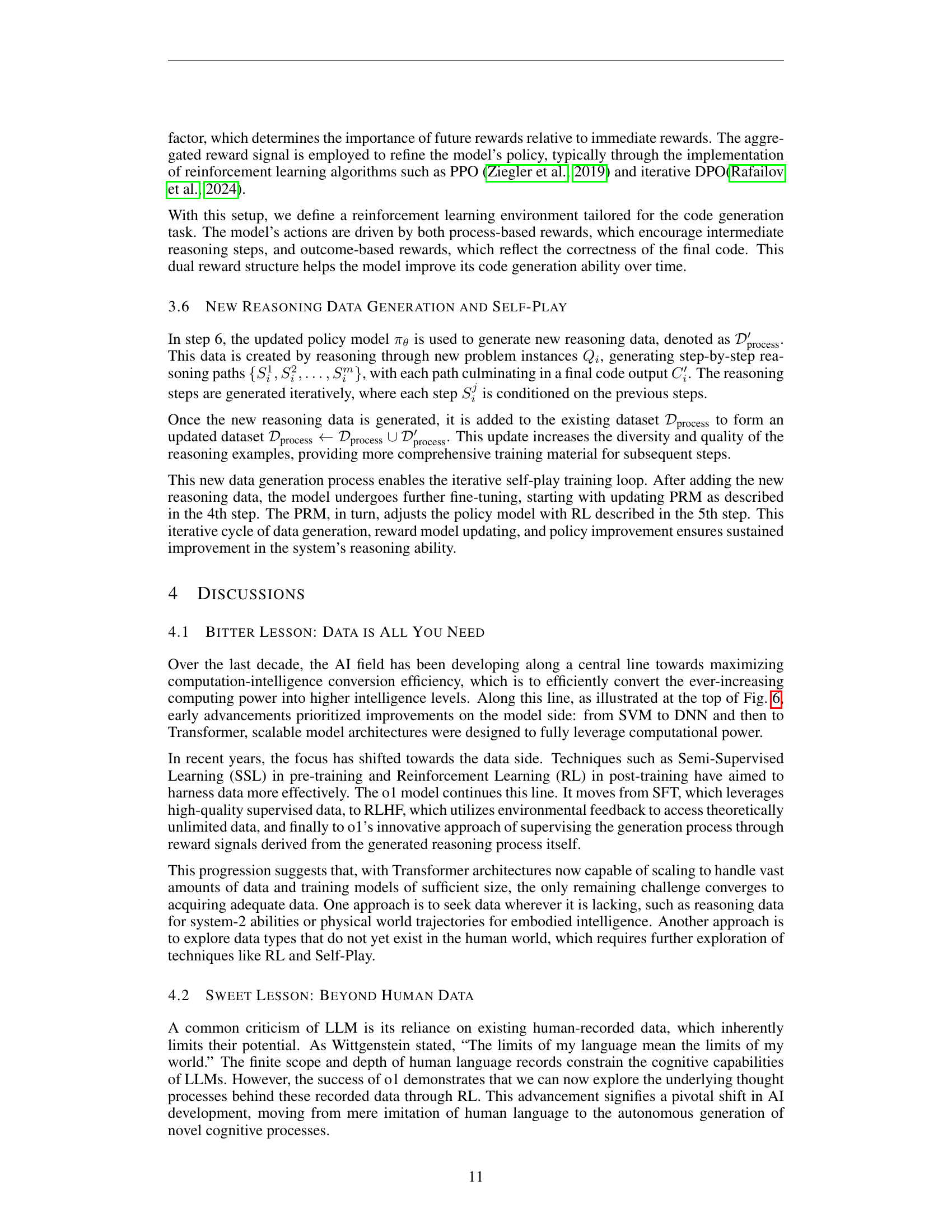

Self-Play RL Framework#

A self-play reinforcement learning framework is a powerful paradigm for training advanced AI models, especially in complex domains like code generation. It leverages the model’s ability to play against itself, generating diverse data and refining its own strategies without the need for extensive human-labeled datasets. The core idea is to have the model generate code, evaluate it via automatic testing (potentially using a separate test case generation module), and use the results to improve the model’s code generation capabilities. This iterative process of self-play, evaluation, and refinement allows the AI to learn complex reasoning patterns and improve its system-2 thinking abilities. Crucially, such a framework needs a robust reward mechanism to effectively guide the learning process. This reward often incorporates both code correctness and the quality of the reasoning steps leading to the code, encouraging more structured and explainable problem-solving approaches. The challenge lies in designing effective reward functions and efficient mechanisms for generating diverse and challenging test cases. The complexity of the code generation task necessitates a system that is both rigorous and adaptable, constantly improving through self-interaction and feedback.

Test Case Generation#

The paper delves into a crucial aspect of automated code generation: robust test case generation. It highlights the challenge of evaluating code quality without readily available test cases, a limitation often encountered in real-world scenarios. The authors propose an innovative solution: a Test Case Generator (TCG). This TCG is trained to automatically generate input-output test cases, significantly improving the efficiency and reliability of code assessment. The approach leverages a two-phased training process: Supervised Fine-Tuning (SFT), to ensure the generator adheres to a specific format and Direct Preference Optimization (DPO), to align the generated cases with desired preferences. This sophisticated methodology is pivotal in creating a standardized testing environment for reinforcement learning, thereby optimizing the process of creating and refining reasoning data, which is central to the overall goal of replicating and enhancing system-2 capabilities in AI.

Pseudocode Reasoning#

The concept of “Pseudocode Reasoning” in AI models represents a significant advancement in bridging the gap between human-like reasoning and machine-generated code. It leverages the abstract nature of pseudocode to guide the model through a step-by-step reasoning process, making the code generation process more transparent and interpretable. This approach contrasts with the “black box” nature of many LLMs, which often produce code without revealing the underlying rationale. By having the model first construct a detailed pseudocode representation, before translating it into executable code, the reasoning process becomes more structured and verifiable. This allows for easier debugging and modification, and also facilitates better understanding of the model’s decision-making. Furthermore, the use of pseudocode enables finer-grained control over the reasoning process since pseudocode is a more flexible format than full code and can be adjusted more easily. Self-play and reinforcement learning can then be used to improve the model’s ability to generate high-quality pseudocode, thus driving advancements in its overall code generation capabilities. However, challenges remain in designing effective reward mechanisms for evaluating pseudocode quality and in scaling this approach to larger and more complex coding tasks.

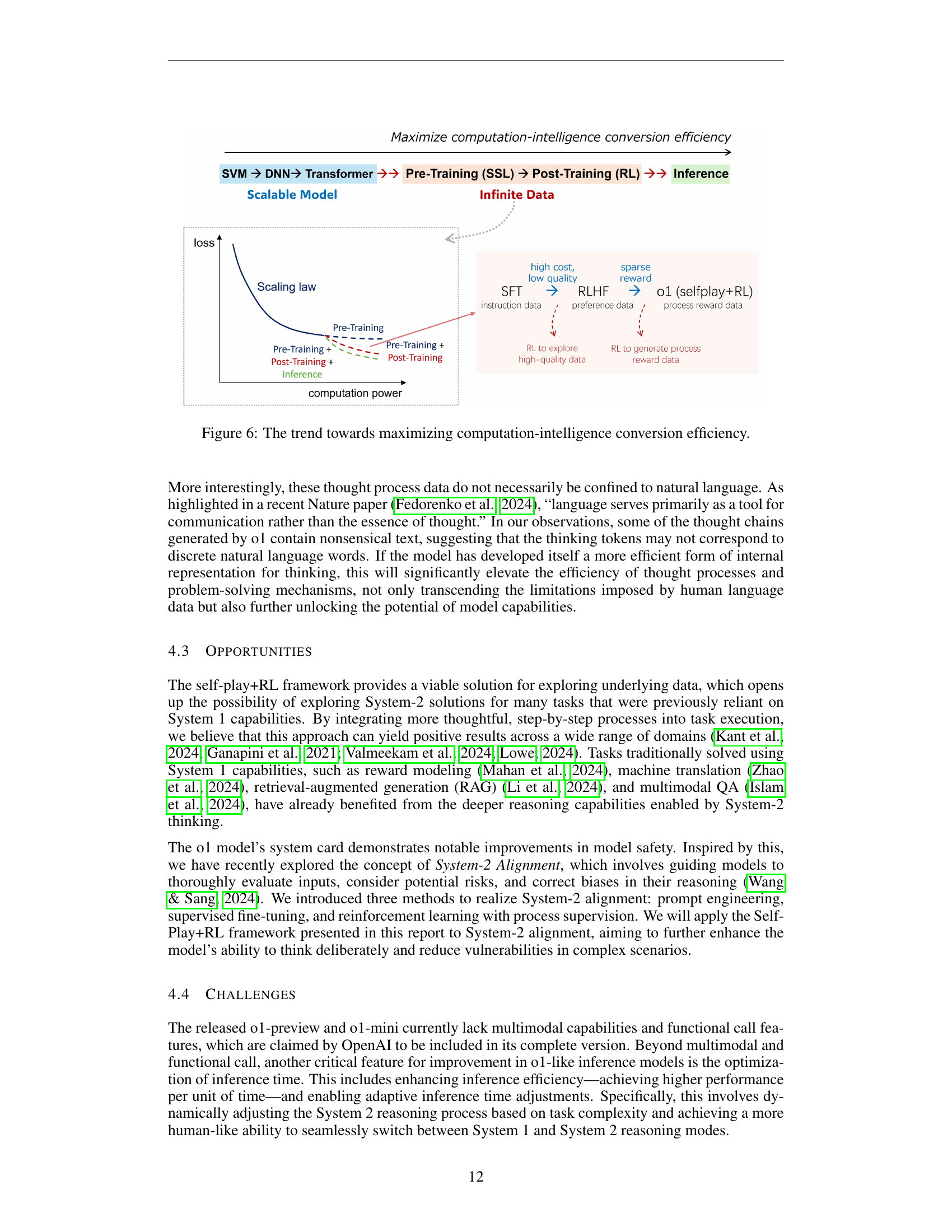

Future of System-2#

The “Future of System-2” in AI hinges on overcoming current limitations in data acquisition and model architecture. While System-2 models like OpenAI’s o1 demonstrate impressive reasoning capabilities, their reliance on extensive, curated datasets poses a significant hurdle to broader application. Future advancements will likely involve more efficient data generation techniques, such as self-play and reinforcement learning, to move beyond human-annotated data. Integrating world models will be crucial for applying these models to complex, dynamic real-world scenarios, where state updates are not readily available. Furthermore, research into alternative representations of thought processes, potentially moving beyond natural language as the sole basis for reasoning, may unlock new levels of efficiency and capability. Ultimately, the future of System-2 AI relies on a synergistic approach that combines advances in both data generation and model architecture, leading to more robust, adaptable, and efficient reasoning systems.

More visual insights#

More on figures

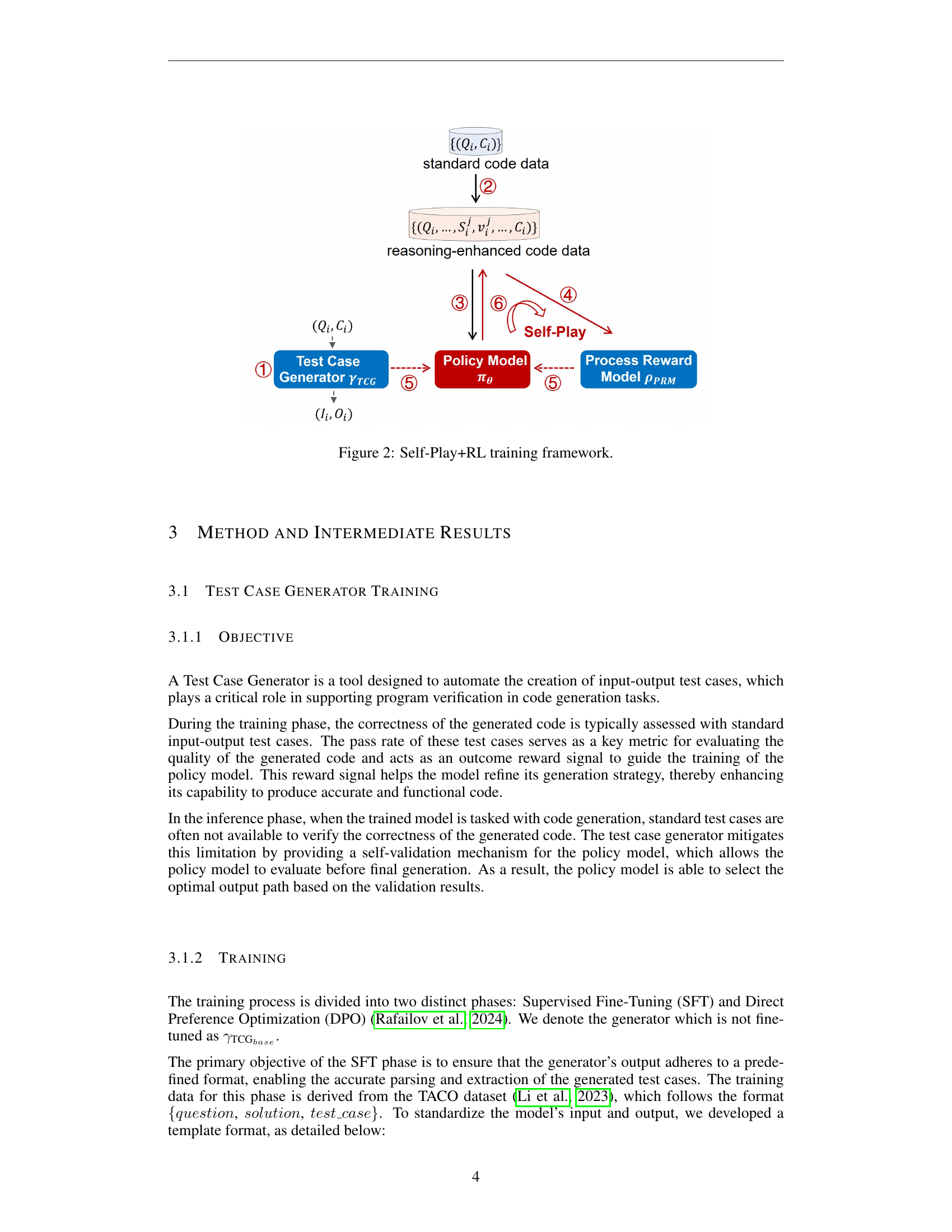

🔼 This figure illustrates the iterative training process of the O1-CODER model. It shows the interaction between the Test Case Generator (TCG), which creates test cases for evaluating the code generated by the model, the Policy Model (πθ), which generates the code, and the Process Reward Model (PPRM), which evaluates the quality of reasoning steps during code generation. The training process is based on self-play, where the model iteratively generates reasoning-enhanced code data, which is then used to train the models and improve their performance. The figure details the flow of data and feedback between the models, illustrating how the training process works in a cycle of improvement.

read the caption

Figure 2: Self-Play+RL training framework.

🔼 This figure shows the template used for training the Test Case Generator (TCG) during the Supervised Fine-Tuning (SFT) phase. The template guides the model to generate test cases by providing a clear instruction, a problem statement, a code section (where a solution is randomly selected from existing solutions), and a test section where the model should generate multiple test cases (input and expected output) to validate the code provided. This structured approach is essential for ensuring consistency and quality in the generated test cases for subsequent steps.

read the caption

Figure 3: Template format for TCG SFT

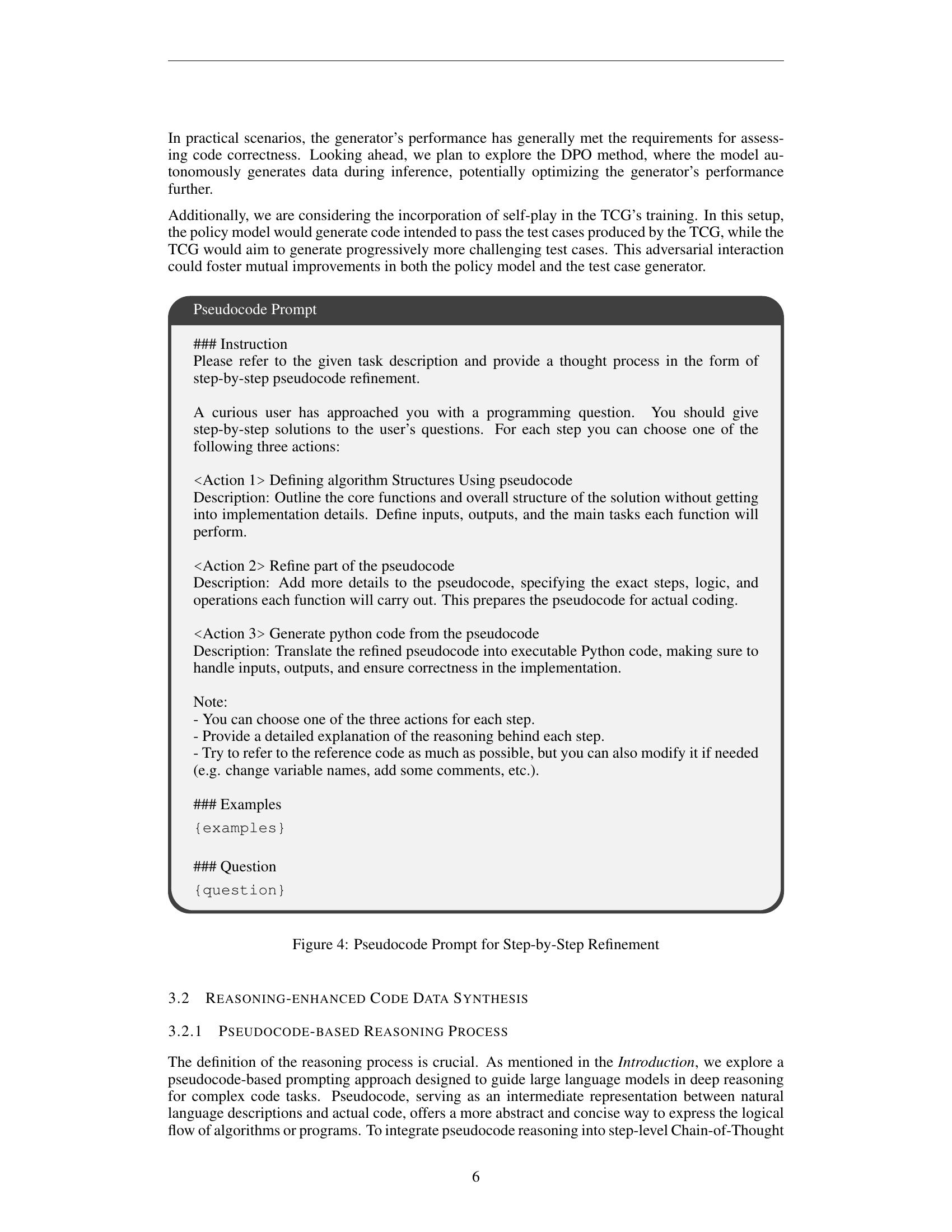

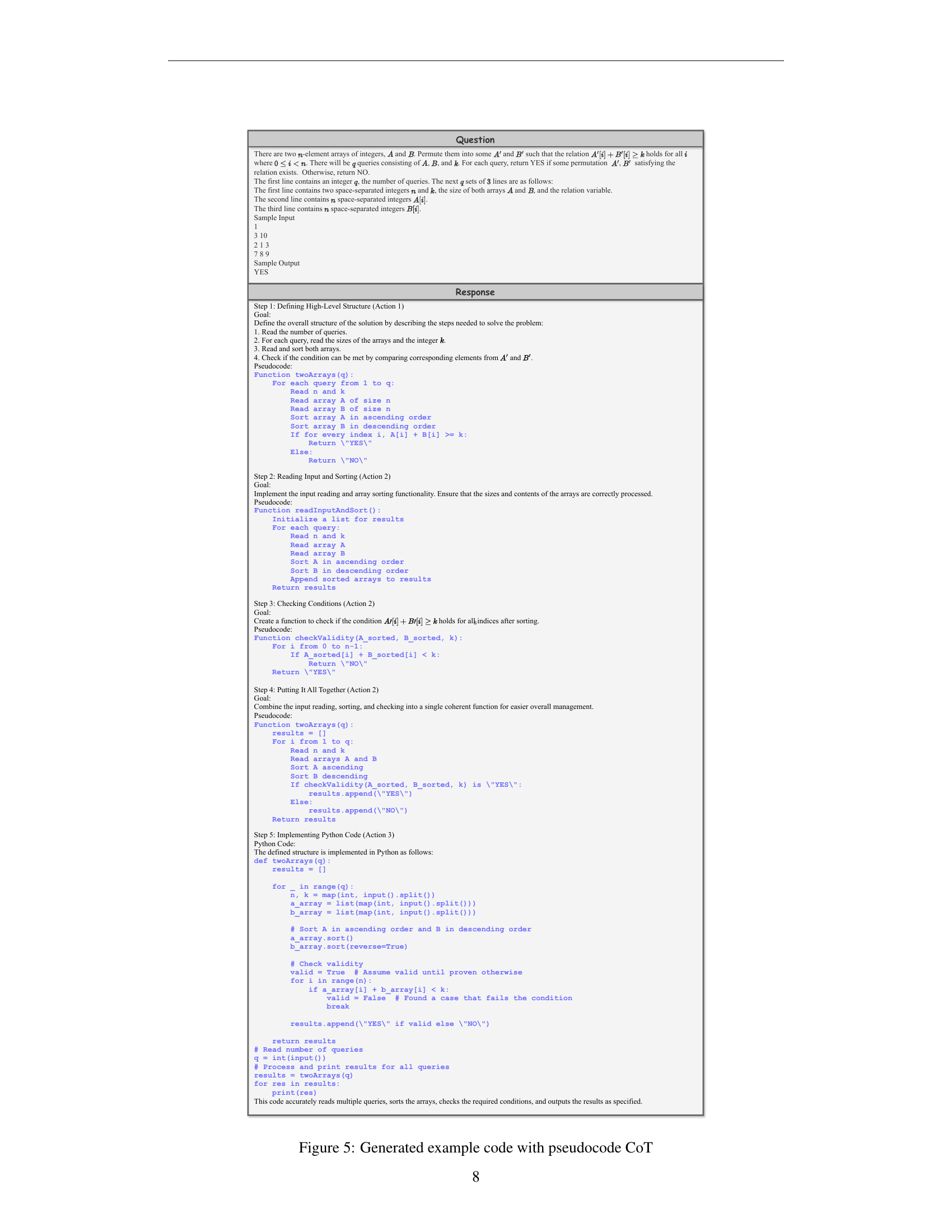

🔼 This figure shows a detailed prompt used to guide a large language model in generating pseudocode. The prompt provides step-by-step instructions, outlining three distinct actions: defining the algorithm’s structure using pseudocode, refining the pseudocode by adding more detail, and translating the refined pseudocode into Python code. This approach is designed to enhance the model’s reasoning capabilities by encouraging a structured thought process during code generation.

read the caption

Figure 4: Pseudocode Prompt for Step-by-Step Refinement

Full paper#