↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Reconstructing indoor scenes is challenging due to complex spatial structures and textureless regions. Existing methods like multi-view stereo and neural radiance fields have limitations in accuracy and efficiency, particularly for detailed areas and surfaces. 3D Gaussian splatting has shown promise but still suffers from artifacts and incompleteness.

This paper introduces 2DGS-Room, a novel method that uses seed-guided 2D Gaussian splatting to address these limitations. It improves reconstruction quality by strategically placing Gaussians based on seed points, incorporates depth and normal priors, and enforces multi-view consistency. Extensive experiments demonstrate superior performance compared to existing techniques across multiple metrics on ScanNet and ScanNet++ datasets.

Key Takeaways#

Why does it matter?#

This paper is important because it significantly advances high-fidelity indoor scene reconstruction. Its novel approach using seed-guided 2D Gaussian splatting with geometric constraints addresses limitations of existing methods, leading to more accurate and complete 3D models. This is highly relevant to researchers in computer vision, 3D reconstruction, and related fields. The improved reconstruction quality has implications for various applications, including robotics, virtual reality, and augmented reality. The study’s open-source nature also facilitates further research and development.

Visual Insights#

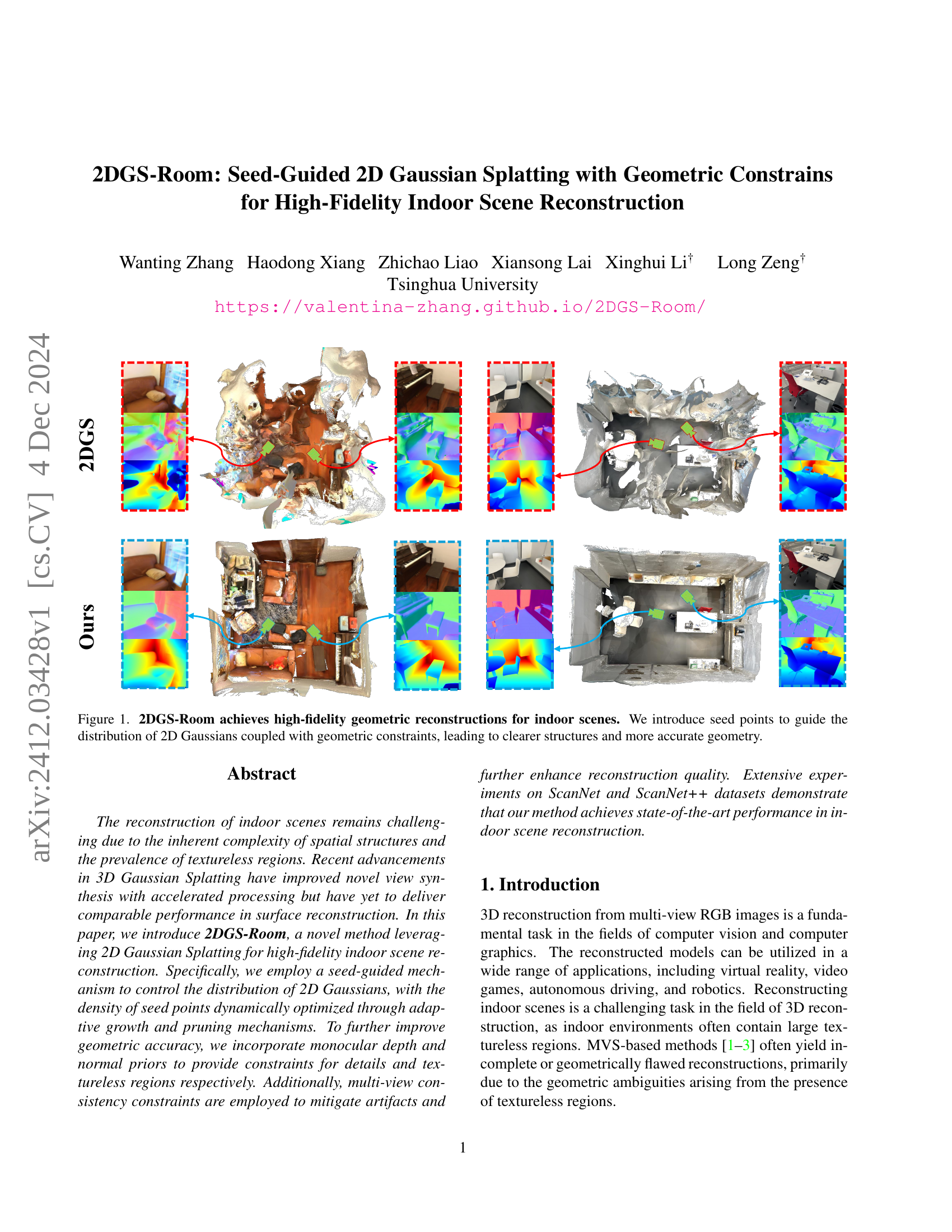

🔼 This figure showcases the high-fidelity indoor scene reconstruction capabilities of the 2DGS-Room method. The method uses seed points to guide the placement of 2D Gaussian primitives. These seed points, coupled with geometric constraints, improve the accuracy and clarity of the reconstructed scene geometry, resulting in more defined structures and improved overall accuracy compared to methods that don’t utilize seed points. The images in the figure compare reconstructions from the proposed method to results from other methods, highlighting the improved results.

read the caption

Figure 1: 2DGS-Room achieves high-fidelity geometric reconstructions for indoor scenes. We introduce seed points to guide the distribution of 2D Gaussians coupled with geometric constraints, leading to clearer structures and more accurate geometry.

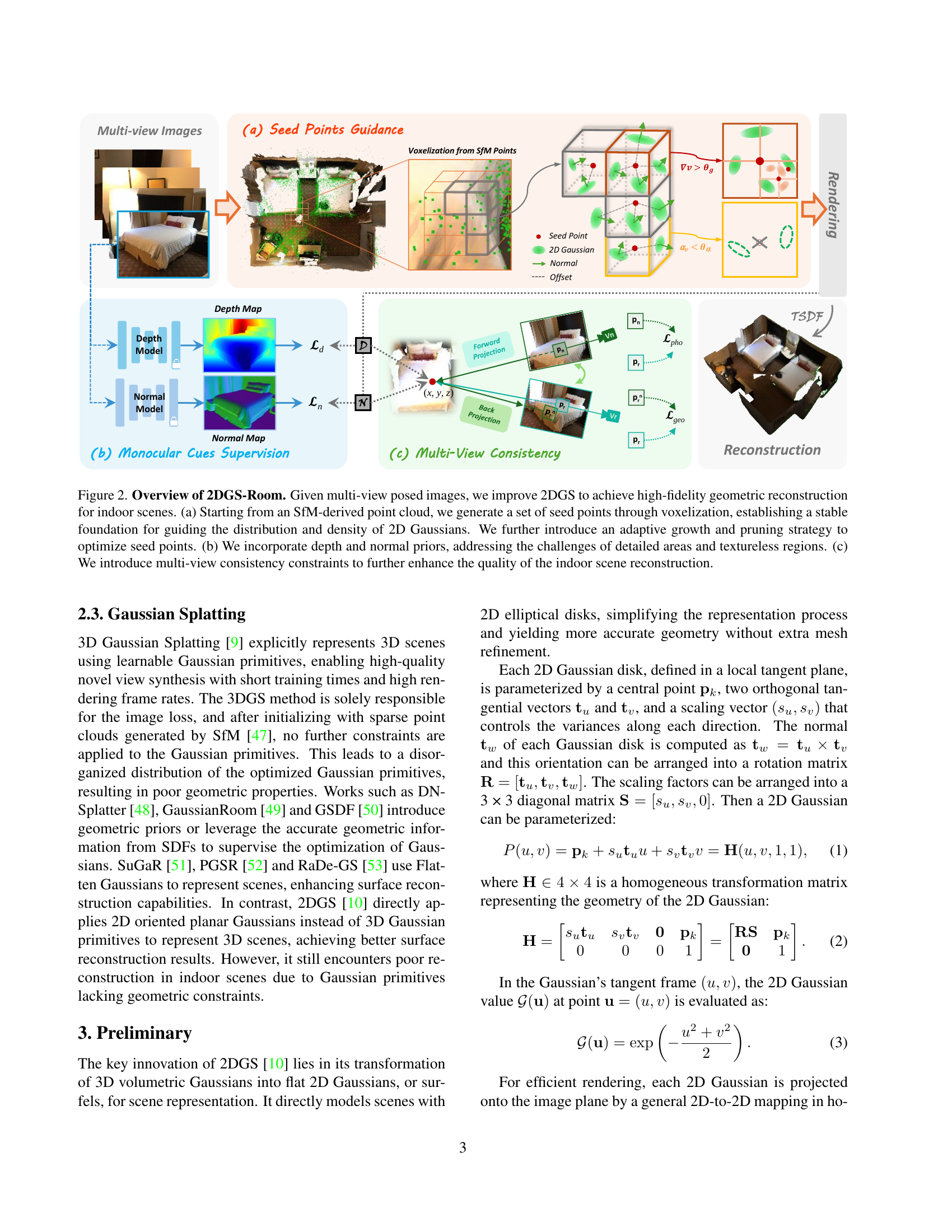

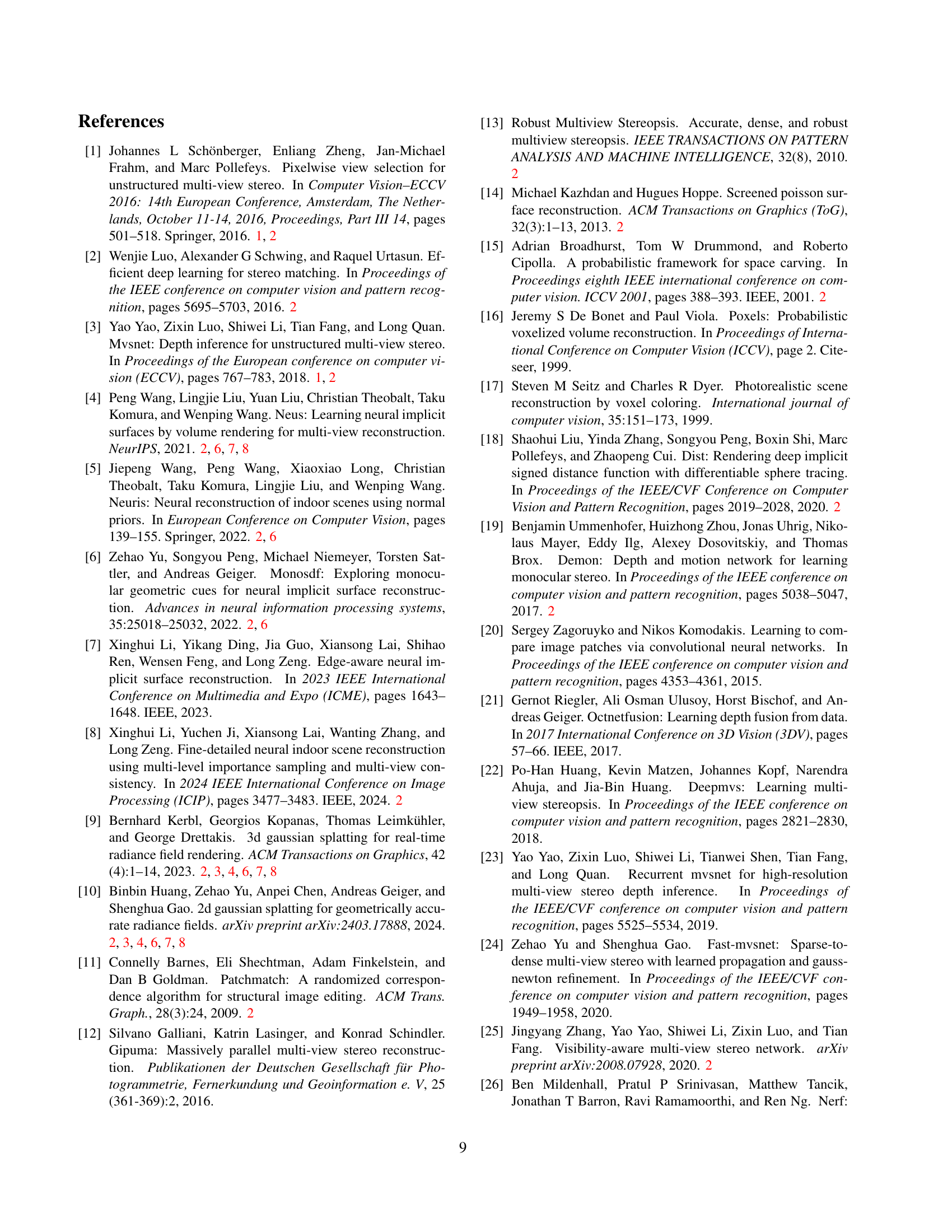

| Method | Acc. ↓ | Comp. ↓ | Prec. ↑ | Recall ↑ | F-score ↑ | Acc. ↓ | Comp. ↓ | Prec. ↑ | Recall ↑ | F-score ↑ |

|---|---|---|---|---|---|---|---|---|---|---|

| NeuS [4] | 0.105 | 0.124 | 0.448 | 0.378 | 0.409 | 0.160 | 0.224 | 0.294 | 0.221 | 0.251 |

| Neuralangelo [34] | 0.185 | 0.223 | 0.252 | 0.260 | 0.255 | 0.363 | 0.264 | 0.172 | 0.120 | 0.141 |

| 3DGS [9] | 0.338 | 0.406 | 0.129 | 0.067 | 0.085 | 0.144 | 0.990 | 0.322 | 0.066 | 0.104 |

| SuGaR [51] | 0.167 | 0.148 | 0.361 | 0.373 | 0.366 | 0.158 | 0.178 | 0.383 | 0.349 | 0.361 |

| 2DGS [10] | 0.157 | 0.151 | 0.336 | 0.347 | 0.341 | 0.359 | 0.228 | 0.230 | 0.160 | 0.183 |

| PGSR [52] | 0.125 | 0.117 | 0.420 | 0.433 | 0.426 | 0.204 | 0.202 | 0.353 | 0.217 | 0.249 |

| RaDe-GS [53] | 0.167 | 0.205 | 0.309 | 0.307 | 0.306 | 0.284 | 0.252 | 0.171 | 0.179 | 0.166 |

| 2DGS-Room (Ours) | 0.055 | 0.092 | 0.648 | 0.518 | 0.575 | 0.262 | 0.112 | 0.450 | 0.498 | 0.464 |

🔼 This table presents a quantitative comparison of the proposed 2DGS-Room method against several state-of-the-art techniques for 3D indoor scene reconstruction. The comparison uses five standard metrics: Accuracy, Completion, Precision, Recall, and F-score. These metrics assess the geometric accuracy and completeness of the reconstructed 3D models. Results are averaged across eight scenes from the ScanNet dataset and four scenes from the ScanNet++ dataset. The table highlights that 2DGS-Room achieves the best F-score among all compared methods, indicating superior performance in balancing precision and recall in the 3D reconstruction task. The metrics provide a comprehensive evaluation of the reconstruction quality, considering both geometric accuracy and completeness.

read the caption

Table 1: Quantitative reconstruction comparison on ScanNet and ScanNet++ dataset. Averaged results are reported over 8 scenes and 4 scenes, respectively. 2DGS-Room achieves the best F-score.

In-depth insights#

Seed-Guided Splats#

The concept of “Seed-Guided Splats” in the context of 3D scene reconstruction using Gaussian splatting represents a significant advancement. It addresses the limitations of previous Gaussian splatting methods that often result in an uneven distribution of Gaussian primitives, leading to inaccurate and incomplete surface reconstructions, particularly in complex indoor scenes. The introduction of seed points acts as a guiding mechanism, controlling the density and placement of the Gaussian splats. This ensures that they align with actual surfaces within the scene. The method is further enhanced by employing an adaptive growth and pruning strategy, dynamically adjusting the density of seed points based on the complexity of the local geometry, thereby providing a more efficient representation. In essence, seed-guided splats offer a structured approach to Gaussian splatting, effectively bridging the gap between the efficiency of the splatting technique and the accuracy required for high-fidelity indoor scene reconstruction. This targeted approach offers a considerable improvement over unstructured Gaussian splatting, leading to more accurate and detailed 3D models.

Depth Priors’ Impact#

The integration of depth priors significantly enhances the accuracy and detail of the 3D reconstruction, particularly in areas with complex geometries or textureless regions. Depth priors act as a powerful constraint, guiding the model to generate more realistic depth maps and reducing ambiguities. The use of a scale-and-shift-invariant loss further improves the robustness of the depth estimation by accounting for potential discrepancies between predicted and reference depths. This approach not only improves the geometric accuracy of the reconstruction but also reduces the presence of artifacts such as floating points or inaccurate depth estimations in challenging areas. The combination of depth priors with other components such as seed point guidance and multi-view consistency constraints leads to a synergistic improvement in overall reconstruction quality. The impact is especially pronounced in indoor scenes where textureless surfaces and complex geometries are prevalent.

Multi-View Fusion#

Multi-view fusion, in the context of 3D scene reconstruction, is a crucial step that integrates information from multiple viewpoints to create a coherent and complete 3D model. The core challenge lies in resolving inconsistencies and ambiguities arising from different camera perspectives, occlusions, and varying lighting conditions. Effective fusion strategies must address these issues by intelligently combining data from multiple views. This often involves techniques such as depth map fusion, which combines depth estimates from different cameras to produce a more comprehensive depth map, or point cloud fusion, which merges point clouds generated from individual views, resolving overlaps and inconsistencies. Advanced algorithms often leverage probabilistic frameworks or deep learning models to achieve robust and accurate fusion. These approaches allow for handling uncertainty inherent in individual views and produce high-quality reconstructions, often superior to those created using a single-view approach. Successfully addressing the challenges of multi-view fusion is paramount for achieving high-fidelity 3D scene reconstruction in complex environments.

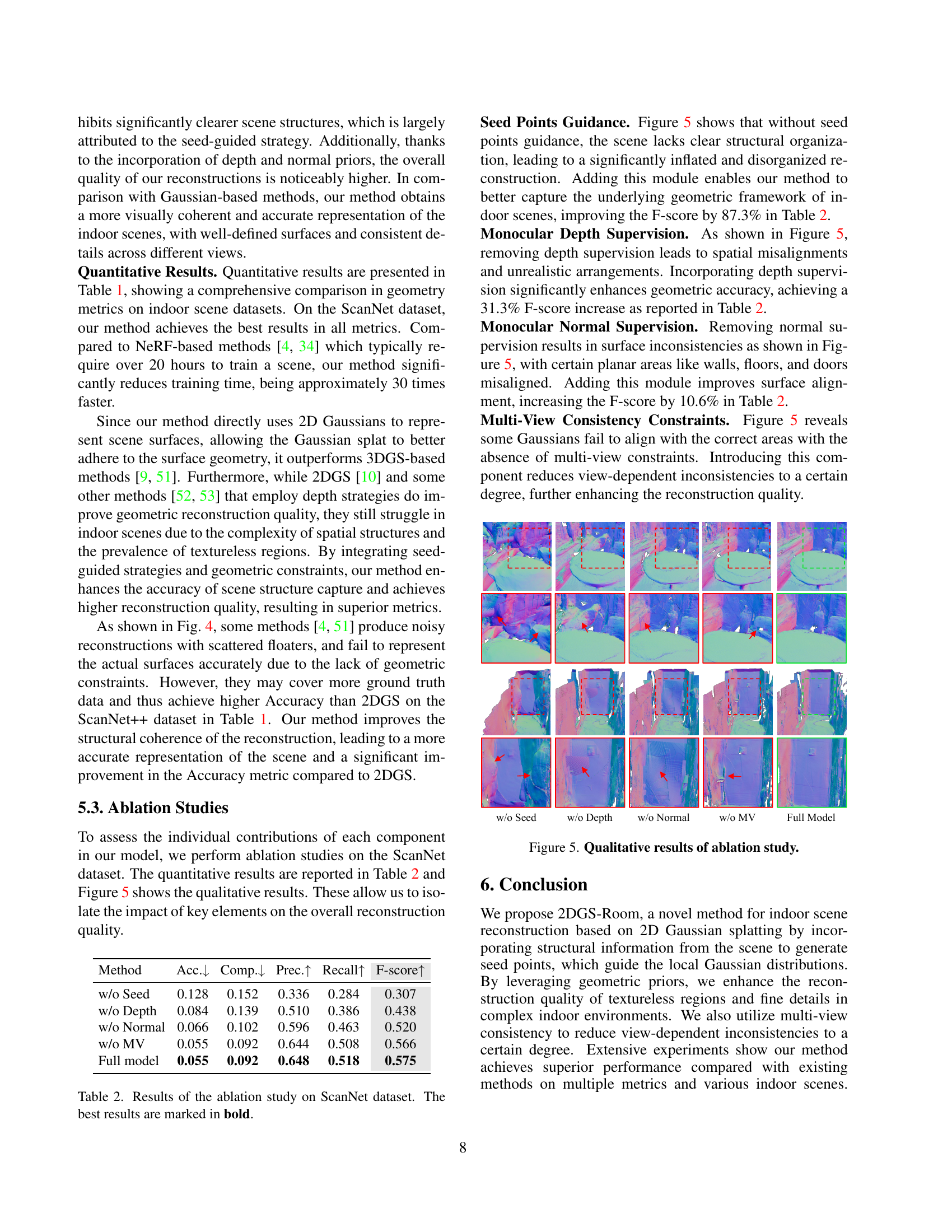

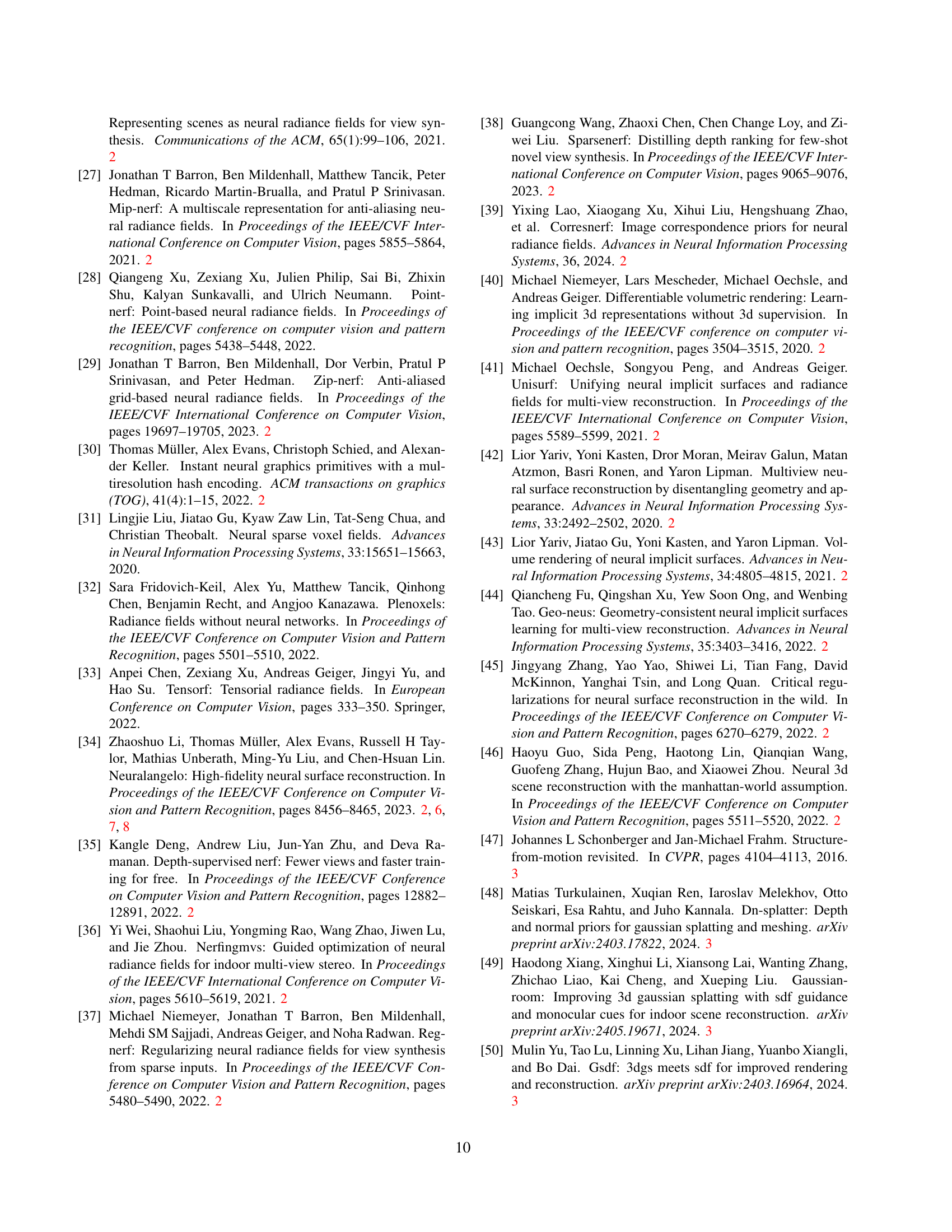

Ablation Study#

An ablation study systematically evaluates the contribution of individual components within a machine learning model. In the context of this research paper, it likely investigates the impact of specific design choices such as seed point guidance, monocular depth supervision, monocular normal supervision, and multi-view consistency constraints. By removing each component in turn and measuring the resulting performance changes, the study reveals which elements are essential for the model’s success and which are less important or even detrimental. The results from an ablation study guide future model improvements and help researchers understand the underlying mechanisms and strengths of their model. It can also reveal unexpected interactions between different components, providing valuable insights into model design and future research directions. A well-executed ablation study is crucial for building robust and reliable 3D reconstruction models and advancing the state-of-the-art in computer vision.

Future Enhancements#

Future work could explore several promising avenues to enhance 2DGS-Room. Improving the efficiency of the seed-guided optimization is crucial, perhaps through more sophisticated methods for adaptive growth and pruning. Investigating different seed point generation strategies beyond simple voxelization, potentially leveraging semantic information or learned representations, could also yield improvements. Incorporating more robust and diverse geometric priors is another key area; exploring alternative depth and normal estimation techniques or incorporating other geometric cues like surface curvature could enhance accuracy. Addressing the limitations in handling highly dynamic scenes or scenes with significant occlusion would also require further research. Finally, while multi-view consistency constraints were introduced, more sophisticated techniques could be developed to enforce consistency, especially in challenging lighting conditions. Ultimately, a combination of algorithmic improvements and the exploration of new data augmentation strategies will be necessary to reach a greater level of robustness and accuracy.

More visual insights#

More on figures

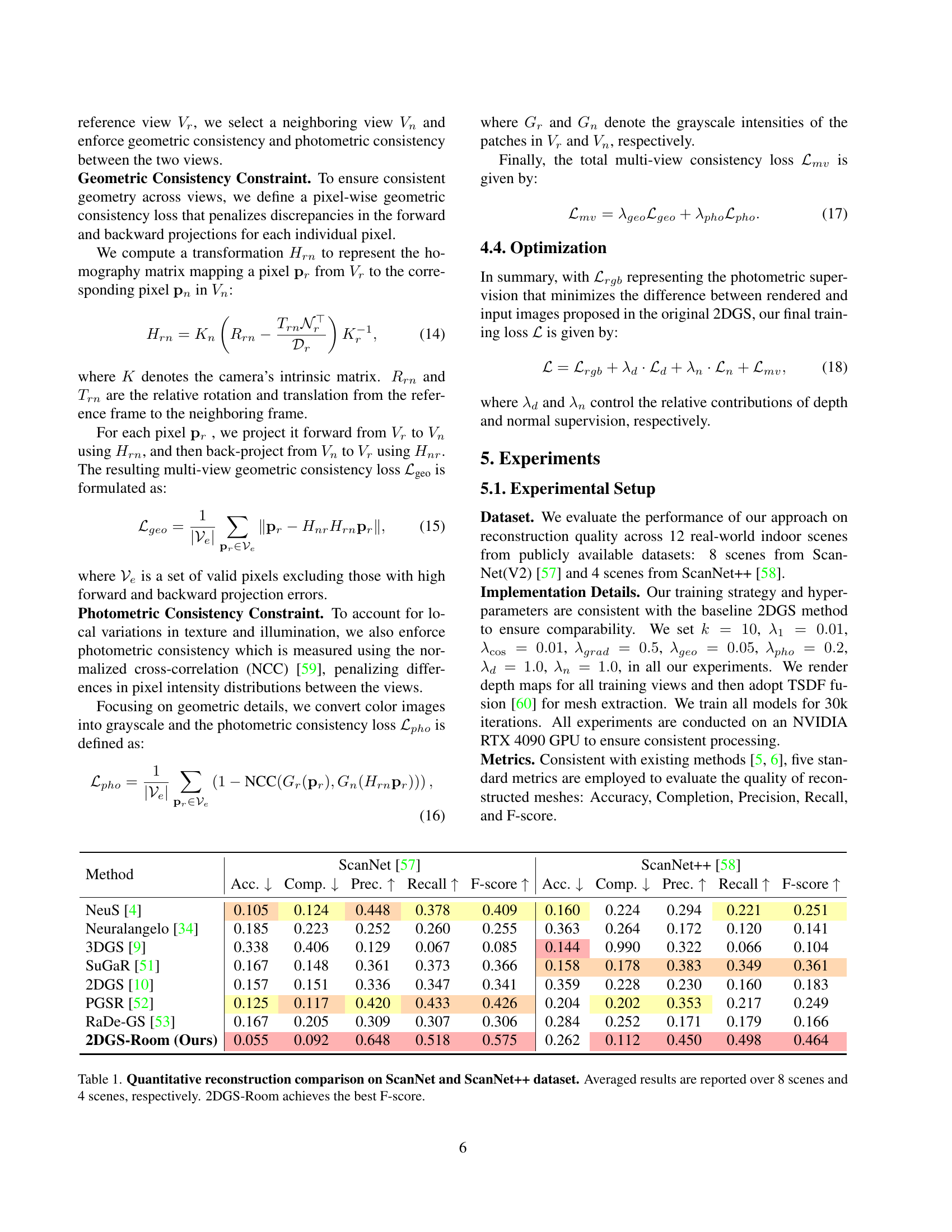

🔼 Figure 2 illustrates the 2DGS-Room framework for high-fidelity indoor scene reconstruction. It starts with multi-view images. (a) Seed points are generated from an SfM point cloud via voxelization, acting as a foundation to guide the distribution and density of 2D Gaussians. An adaptive growth and pruning strategy further optimizes these seed points. (b) Monocular depth and normal priors are integrated to improve accuracy in areas with detailed features or lacking texture. (c) Lastly, multi-view consistency constraints are added to address any remaining reconstruction artifacts, resulting in a refined final reconstruction.

read the caption

Figure 2: Overview of 2DGS-Room. Given multi-view posed images, we improve 2DGS to achieve high-fidelity geometric reconstruction for indoor scenes. (a) Starting from an SfM-derived point cloud, we generate a set of seed points through voxelization, establishing a stable foundation for guiding the distribution and density of 2D Gaussians. We further introduce an adaptive growth and pruning strategy to optimize seed points. (b) We incorporate depth and normal priors, addressing the challenges of detailed areas and textureless regions. (c) We introduce multi-view consistency constraints to further enhance the quality of the indoor scene reconstruction.

🔼 Figure 3 visualizes the differences in Gaussian primitive distributions between ground truth, 3DGS, 2DGS, and the proposed 2DGS-Room method. It highlights how 2DGS-Room, through its structured geometric constraints, effectively minimizes the number of scattered Gaussian primitives that do not align with the actual scene surface, resulting in a cleaner and more accurate reconstruction compared to the baseline methods.

read the caption

Figure 3: Ground truth scene surface and Gaussian primitives distribution. Compared with 3DGS and 2DGS, our method significantly reduces scattered floaters in the non-surface areas, benefitting from our designed structured geometric constraints.

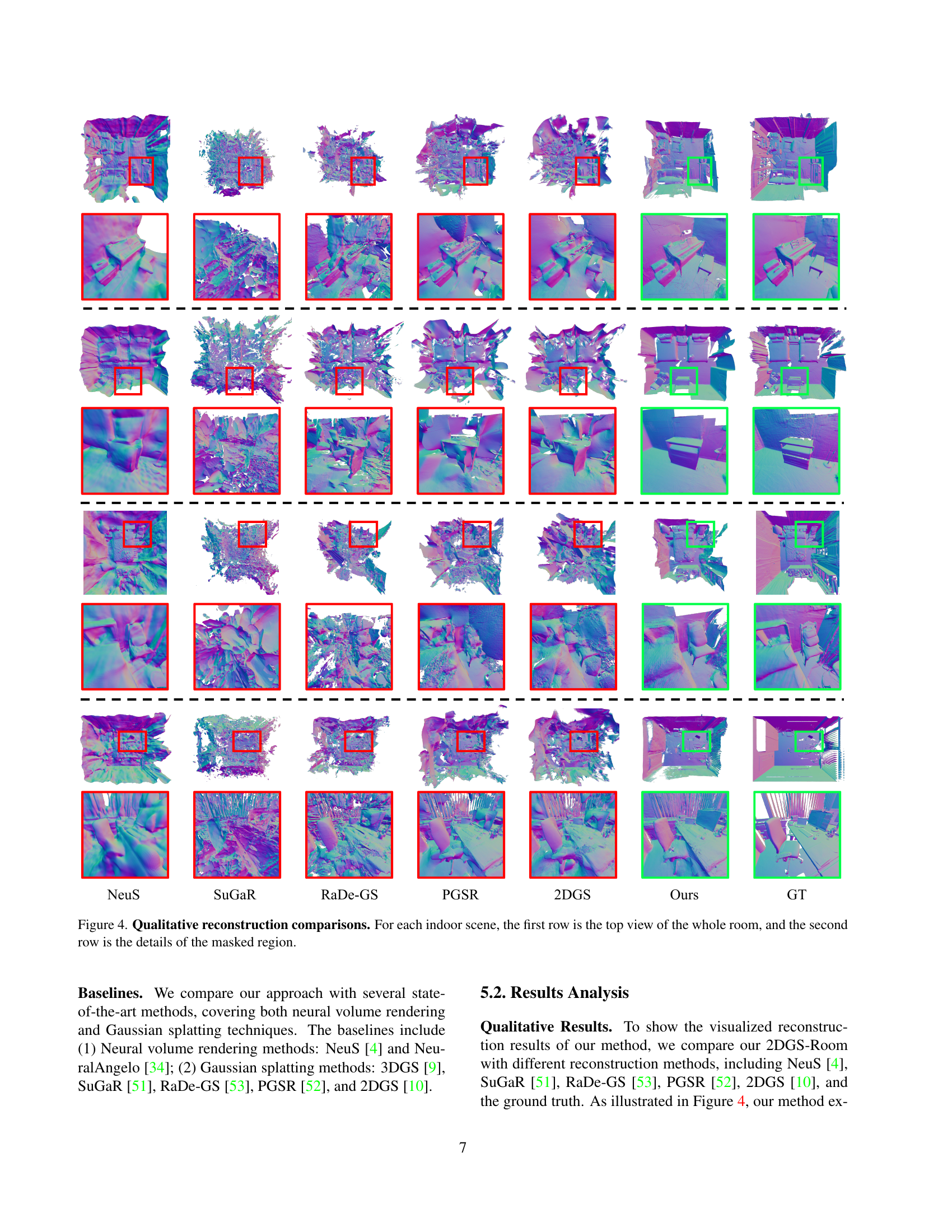

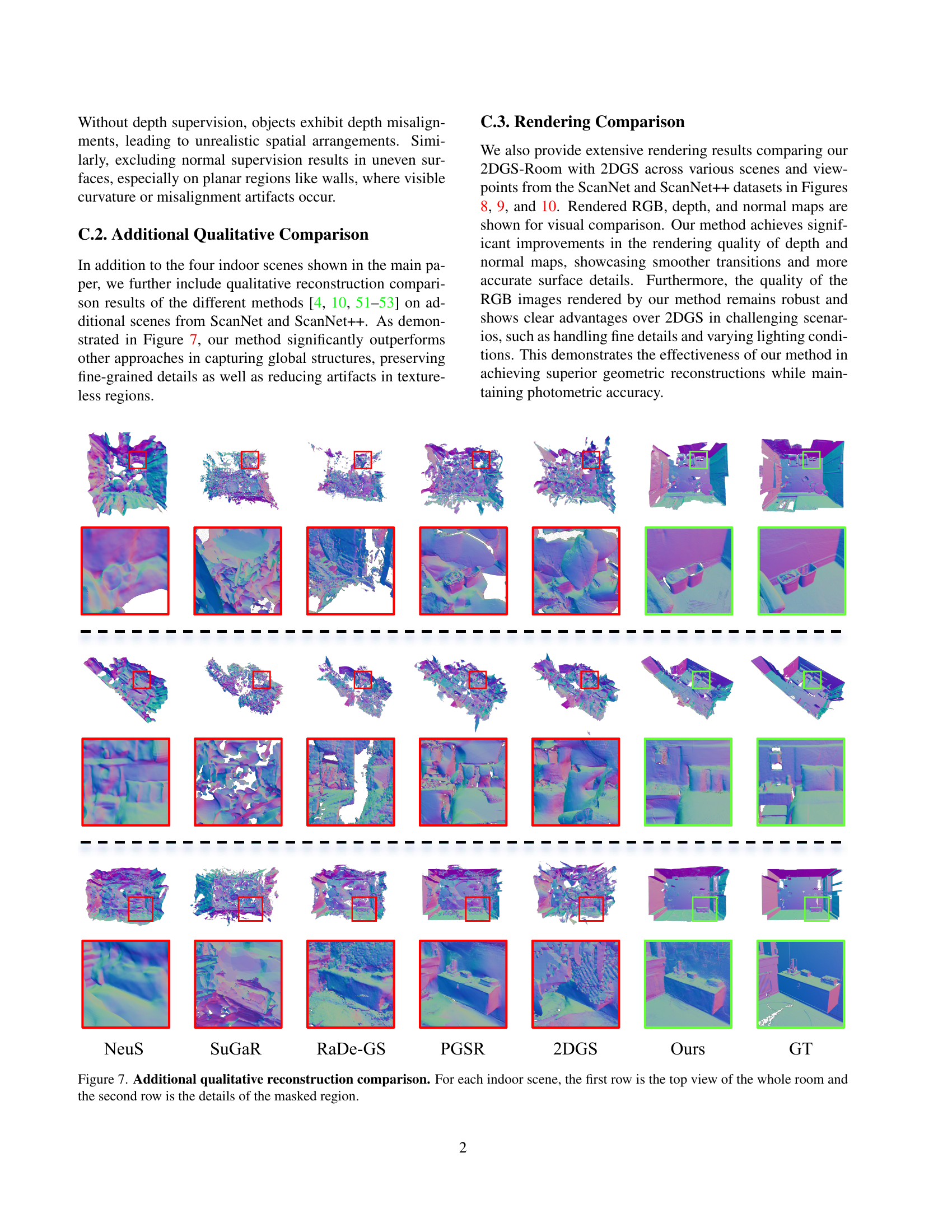

🔼 This figure displays a qualitative comparison of several state-of-the-art 3D reconstruction methods on indoor scenes. For each scene, two rows of images are shown. The first row provides a top-down view of the entire reconstructed room, offering a comprehensive overview of the scene’s overall structure and geometry. The second row zooms in on a specific masked region within the scene, highlighting the fine details and accuracy of the reconstruction methods. This detailed view allows for a more thorough comparison of the methods’ performance in capturing intricate geometric features and textures, such as walls, furniture, and other objects. The figure allows the reader to visualize the strengths and weaknesses of each reconstruction method in various aspects.

read the caption

Figure 4: Qualitative reconstruction comparisons. For each indoor scene, the first row is the top view of the whole room, and the second row is the details of the masked region.

🔼 This figure presents a qualitative comparison of the results obtained with different configurations of the 2DGS-Room model. By removing components such as seed points, depth supervision, normal supervision, or multi-view consistency, the impact of each component on the final reconstruction quality is shown. The images demonstrate how the absence of each component leads to artifacts and inaccuracies in the reconstruction, highlighting the importance of each element in achieving high-fidelity geometric reconstruction of indoor scenes.

read the caption

Figure 5: Qualitative results of ablation study.

🔼 Figure 6 presents a qualitative comparison of ablation studies, focusing on the overall scene structure rather than just local details. It visually demonstrates the impact of removing key components (seed points guidance, depth supervision, and normal supervision) from the 2DGS-Room model on the resulting 3D reconstruction. The comparison reveals the crucial role each component plays in achieving high-fidelity, structurally accurate reconstructions. The figure highlights how removing each component results in significant degradation of scene structure, with objects becoming fused or showing misalignments.

read the caption

Figure 6: Additional qualitative results of ablation study.

🔼 This figure presents a qualitative comparison of various methods for indoor scene reconstruction. It shows top-down views of entire rooms, followed by zoomed-in views of highlighted regions within those rooms for more detailed analysis. Multiple methods are compared, including the authors’ proposed method (2DGS-Room), to demonstrate the relative quality and completeness of each technique in reconstructing indoor scenes with varying levels of complexity and detail. The ground truth is also provided as a visual reference for comparison.

read the caption

Figure 7: Additional qualitative reconstruction comparison. For each indoor scene, the first row is the top view of the whole room and the second row is the details of the masked region.

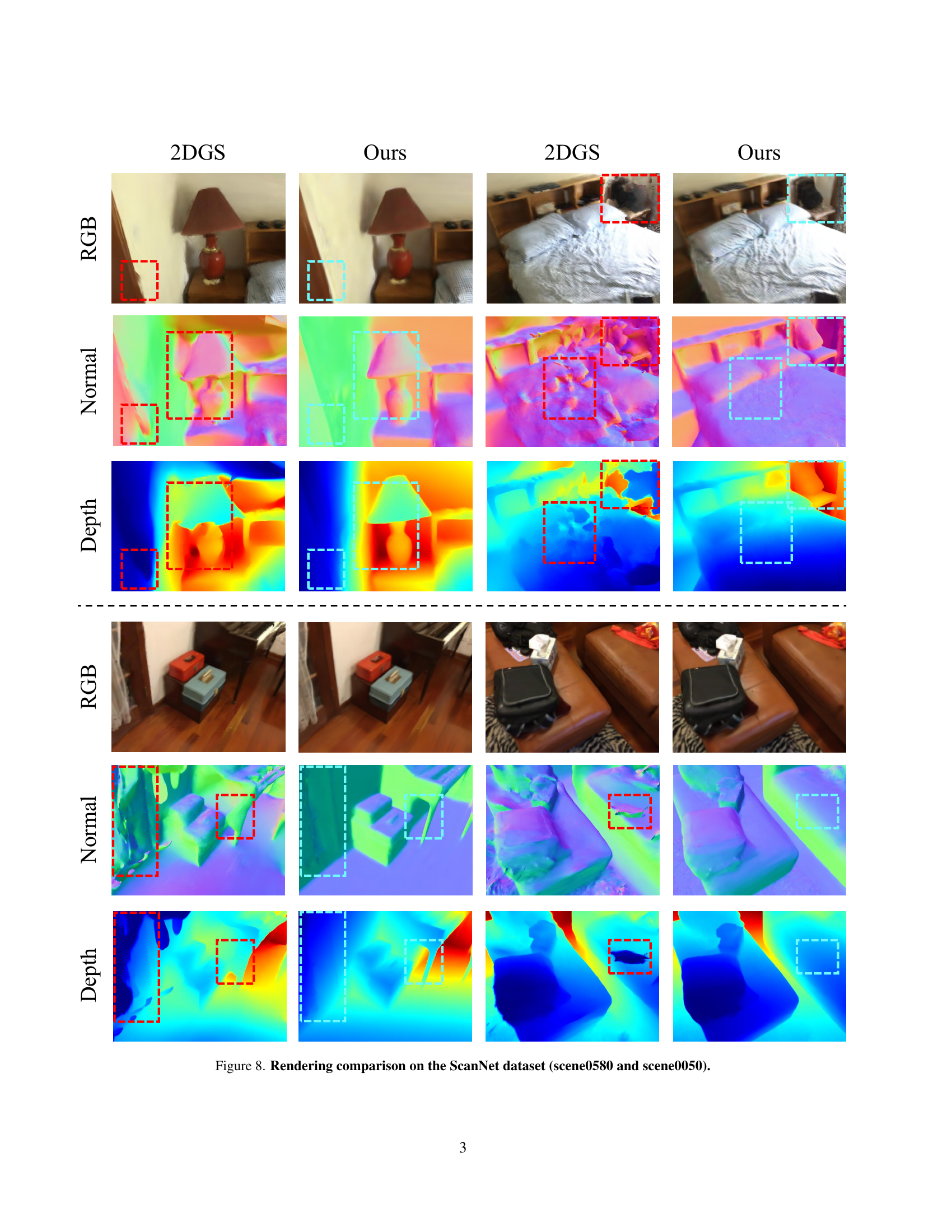

🔼 This figure shows a comparison of rendering results between the original 2DGS method and the proposed 2DGS-Room method on two scenes from the ScanNet dataset (scene0580 and scene0050). For each scene, RGB images, depth maps, and normal maps are displayed for both methods. The comparison highlights the improvements achieved by 2DGS-Room in terms of rendering quality, particularly in terms of depth and normal map accuracy and smoothness.

read the caption

Figure 8: Rendering comparison on the ScanNet dataset (scene0580 and scene0050).

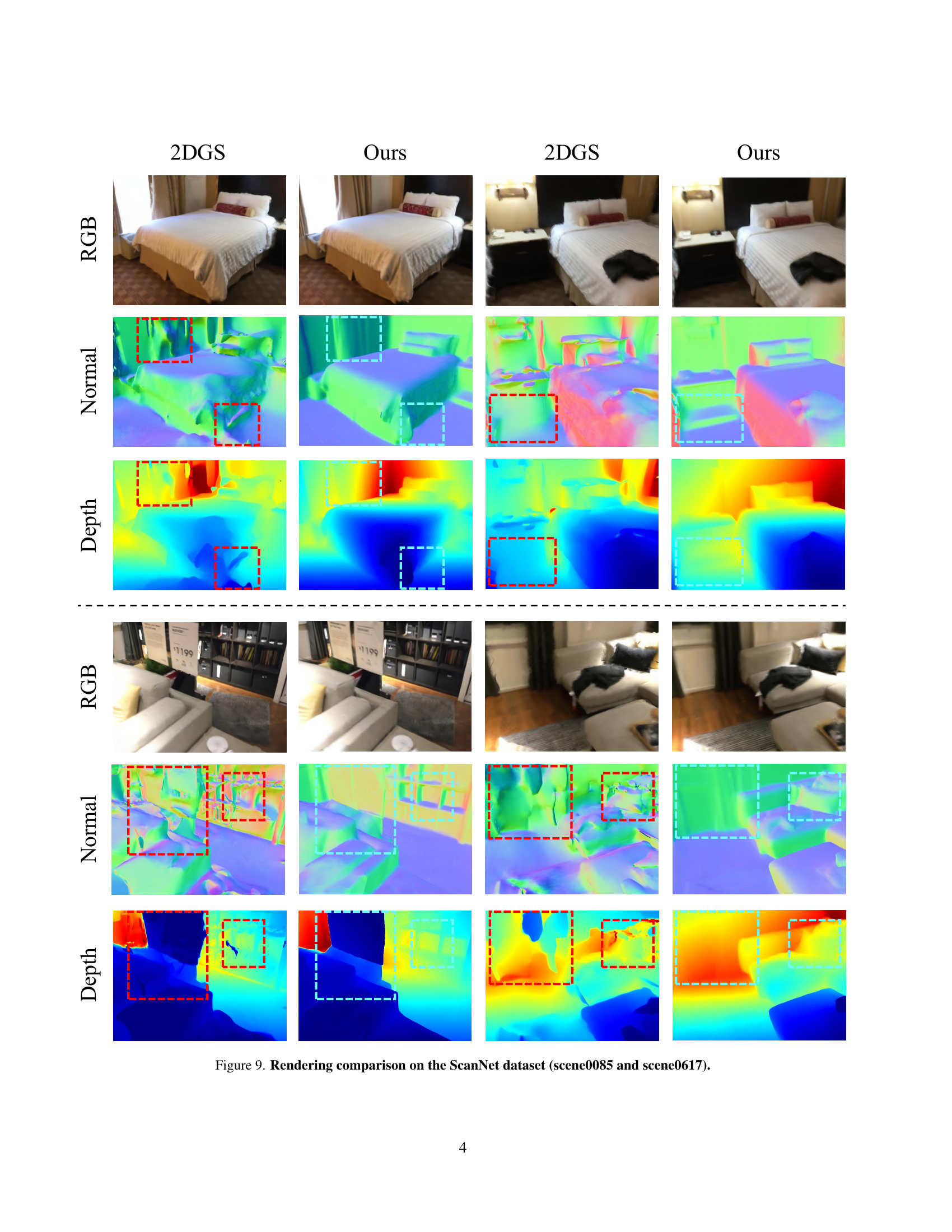

🔼 This figure presents a qualitative comparison of rendering results between the proposed 2DGS-Room method and the baseline 2DGS method. It showcases the RGB images, depth maps, and normal maps generated by each method for two indoor scenes from the ScanNet dataset (scene0085 and scene0617). The comparison highlights the improvements in rendering quality achieved by 2DGS-Room, particularly in terms of smoother transitions and more accurate surface details in the depth and normal maps, as well as more robust and visually appealing RGB images.

read the caption

Figure 9: Rendering comparison on the ScanNet dataset (scene0085 and scene0617).

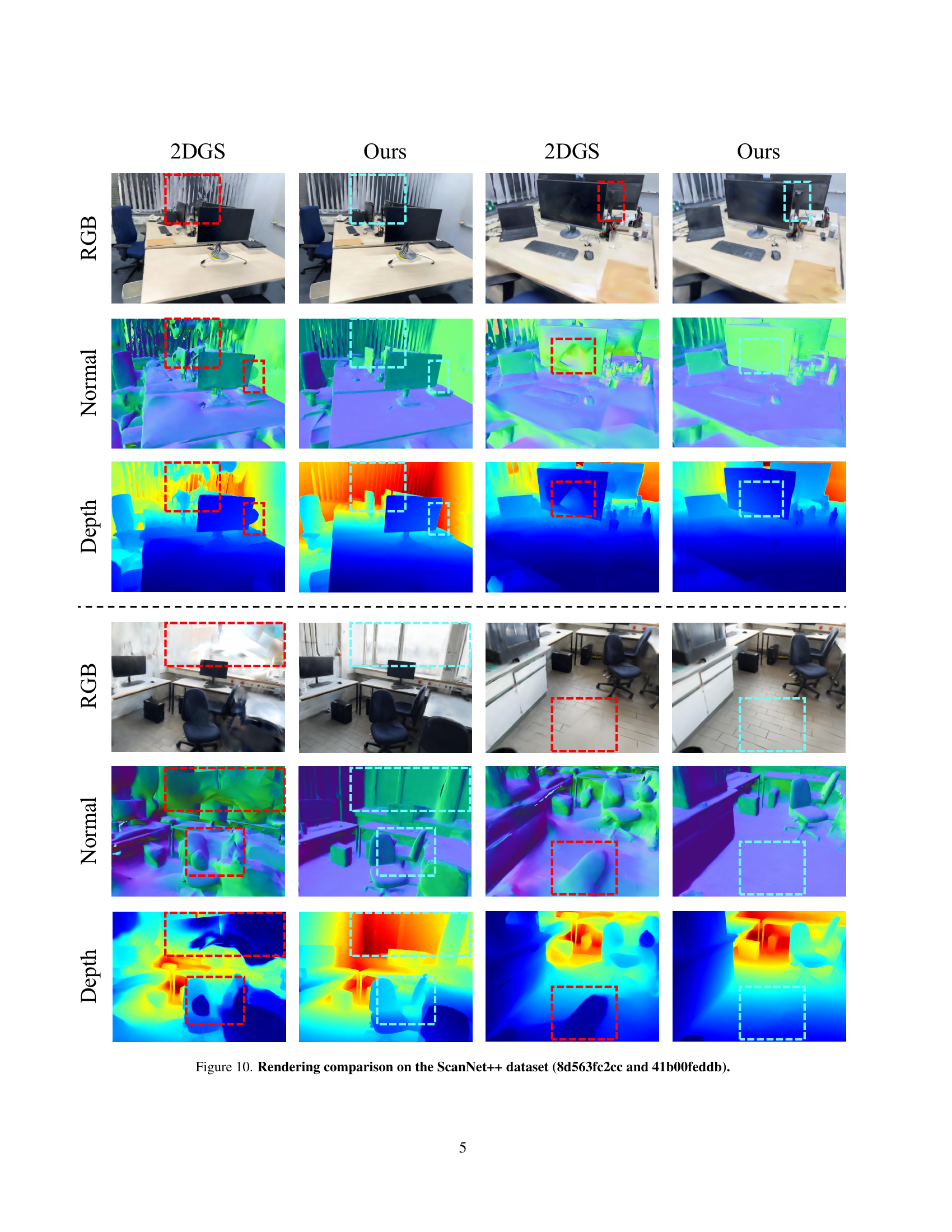

🔼 Figure 10 presents a detailed comparison of rendering results between the 2DGS method and the proposed 2DGS-Room method. It focuses on two scenes from the ScanNet++ dataset: 8d563fc2cc and 41b00feddb. The comparison includes RGB images, depth maps, and normal maps for each method and scene, allowing for a visual assessment of the differences in rendering quality, particularly highlighting improvements in accuracy and detail achieved by 2DGS-Room.

read the caption

Figure 10: Rendering comparison on the ScanNet++ dataset (8d563fc2cc and 41b00feddb).

More on tables

| Method | Acc.↓ | Comp.↓ | Prec.↑ | Recall↑ | F-score↑ |

|---|---|---|---|---|---|

| w/o Seed | 0.128 | 0.152 | 0.336 | 0.284 | 0.307 |

| w/o Depth | 0.084 | 0.139 | 0.510 | 0.386 | 0.438 |

| w/o Normal | 0.066 | 0.102 | 0.596 | 0.463 | 0.520 |

| w/o MV | 0.055 | 0.092 | 0.644 | 0.508 | 0.566 |

| Full model | 0.055 | 0.092 | 0.648 | 0.518 | 0.575 |

🔼 This table presents the results of an ablation study conducted on the ScanNet dataset to evaluate the individual contributions of different components in the proposed 2DGS-Room model. It shows the performance metrics (Accuracy, Completion, Precision, Recall, and F-score) achieved by the full model and variations of the model where specific components (seed points, depth supervision, normal supervision, and multi-view consistency constraints) were removed. The best performing model variation for each metric is highlighted in bold, illustrating the relative importance of each component in achieving high reconstruction quality.

read the caption

Table 2: Results of the ablation study on ScanNet dataset. The best results are marked in bold.

| Metric | Definition |

|---|---|

| Acc. | \mbox{mean}{c\in C}(\min{c^{}\in C^{}} |

| Comp. | \mbox{mean}{c^{}\in C^{}}(\min{c\in C} |

| Prec. | \mbox{mean}{c\in C}(\min{c^{}\in C^{}} |

| Recall | \mbox{mean}{c^{}\in C^{}}(\min{c\in C} |

| zoF-score | \frac{2\times\text{Prec}\times\text{Recall}}{\text{Prec}+\text{Recall}} |

🔼 This table defines the five 3D geometry metrics used to evaluate the quality of the reconstructed point clouds. These metrics assess the geometric fidelity by comparing the predicted point cloud (c) to the ground truth point cloud (c*). Specifically, Accuracy measures the average distance between corresponding points; Completion assesses how well the reconstruction covers the ground truth; Precision and Recall measure the proportion of points within a certain threshold; and F-score provides a balanced measure combining Precision and Recall. Lower values for Accuracy and Completion are better, while higher values for Precision, Recall, and F-score indicate better performance.

read the caption

Table 3: Definitions of 3D metrics. c𝑐citalic_c and c∗superscript𝑐c^{*}italic_c start_POSTSUPERSCRIPT ∗ end_POSTSUPERSCRIPT are the predicted and ground truth point clouds.

Full paper#