↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Generating realistic 3D scenes from just a single image is a tough challenge in computer vision. Current methods either struggle with accuracy, especially when dealing with multiple objects, or are too slow and complex. Many approaches rely on reconstructing scenes from existing 3D models which hinders creativity and limits the ability to generate novel scenes. This makes producing natural-looking, composite 3D scenes from a single input picture very hard.

The researchers present MIDI, a new method that tackles this problem. MIDI uses multi-instance diffusion models, which means it can generate many 3D objects simultaneously. This is done by leveraging pre-trained object generation models and adding a new attention mechanism that helps the model understand how different objects relate to each other in space. Experiments show that MIDI outperforms existing methods, creating high-quality 3D scenes efficiently and exhibiting strong generalization across different types of input images. This opens up exciting possibilities in 3D scene generation and computer vision.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in 3D scene generation and computer vision due to its novel approach to multi-instance diffusion. It addresses limitations of existing methods by enabling simultaneous generation of multiple 3D objects with accurate spatial relationships, thus advancing the field significantly. Its strong generalization ability and high-quality results make it a valuable contribution, opening avenues for new research on compositional 3D scene generation techniques and multi-instance attention mechanisms. The code release further enhances its impact by allowing other researchers to build upon this work.

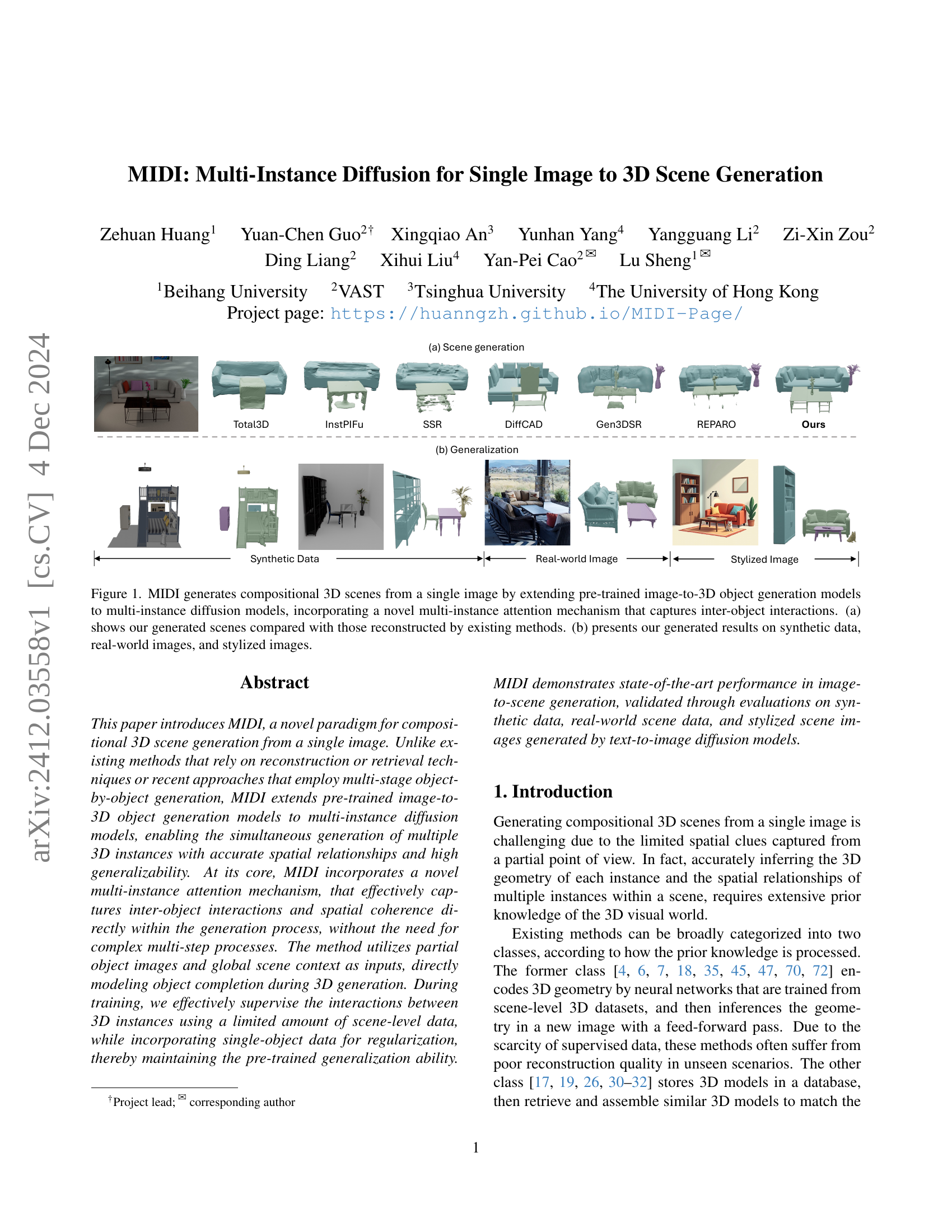

Visual Insights#

🔼 This figure demonstrates the capabilities of the MIDI model in generating compositional 3D scenes from a single input image. It achieves this by extending pre-trained image-to-3D object generation models and incorporating a novel multi-instance attention mechanism to effectively capture interactions between multiple objects within the scene. Panel (a) provides a visual comparison of 3D scenes generated by MIDI against those generated by several existing methods. Panel (b) showcases MIDI’s ability to generalize across different types of input images, including synthetic data, real-world photographs, and stylized images created by a text-to-image diffusion model.

read the caption

Figure 1: MIDI generates compositional 3D scenes from a single image by extending pre-trained image-to-3D object generation models to multi-instance diffusion models, incorporating a novel multi-instance attention mechanism that captures inter-object interactions. (a) shows our generated scenes compared with those reconstructed by existing methods. (b) presents our generated results on synthetic data, real-world images, and stylized images.

| Method | 3D-Front CD-S ↓ | 3D-Front F-Score-S ↑ | 3D-Front CD-O ↓ | 3D-Front F-Score-O ↑ | 3D-Front IoU-B ↑ | BlendSwap CD-S ↓ | BlendSwap F-Score-S ↑ | BlendSwap CD-O ↓ | BlendSwap F-Score-O ↑ | BlendSwap IoU-B ↑ | Runtime ↓ |

|---|---|---|---|---|---|---|---|---|---|---|---|

| PanoRecon [7] | 0.150 | 40.65 | 0.211 | 35.05 | 0.240 | 0.427 | 19.11 | 0.713 | 13.06 | 0.119 | 32s |

| Total3D [45] | 0.270 | 32.90 | 0.179 | 36.38 | 0.238 | 0.258 | 37.93 | 0.168 | 38.14 | 0.328 | 39s |

| InstPIFu [35] | 0.138 | 39.99 | 0.165 | 38.11 | 0.299 | 0.129 | 50.28 | 0.167 | 38.42 | 0.340 | 32s |

| SSR [4] | 0.140 | 39.76 | 0.170 | 37.79 | 0.311 | 0.132 | 48.72 | 0.173 | 38.11 | 0.336 | 32s |

| DiffCAD [17] | 0.117 | 43.58 | 0.190 | 37.45 | 0.392 | 0.110 | 52.83 | 0.169 | 38.98 | 0.457 | 64s |

| Gen3DSR [11] | 0.123 | 40.07 | 0.157 | 38.11 | 0.363 | 0.107 | 60.17 | 0.148 | 40.76 | 0.449 | 9min |

| REPARO [20] | 0.129 | 41.68 | 0.160 | 40.85 | 0.339 | 0.115 | 62.39 | 0.151 | 42.84 | 0.410 | 4min |

| Ours | 0.080 | 50.19 | 0.103 | 53.58 | 0.518 | 0.077 | 78.21 | 0.090 | 62.94 | 0.663 | 40s |

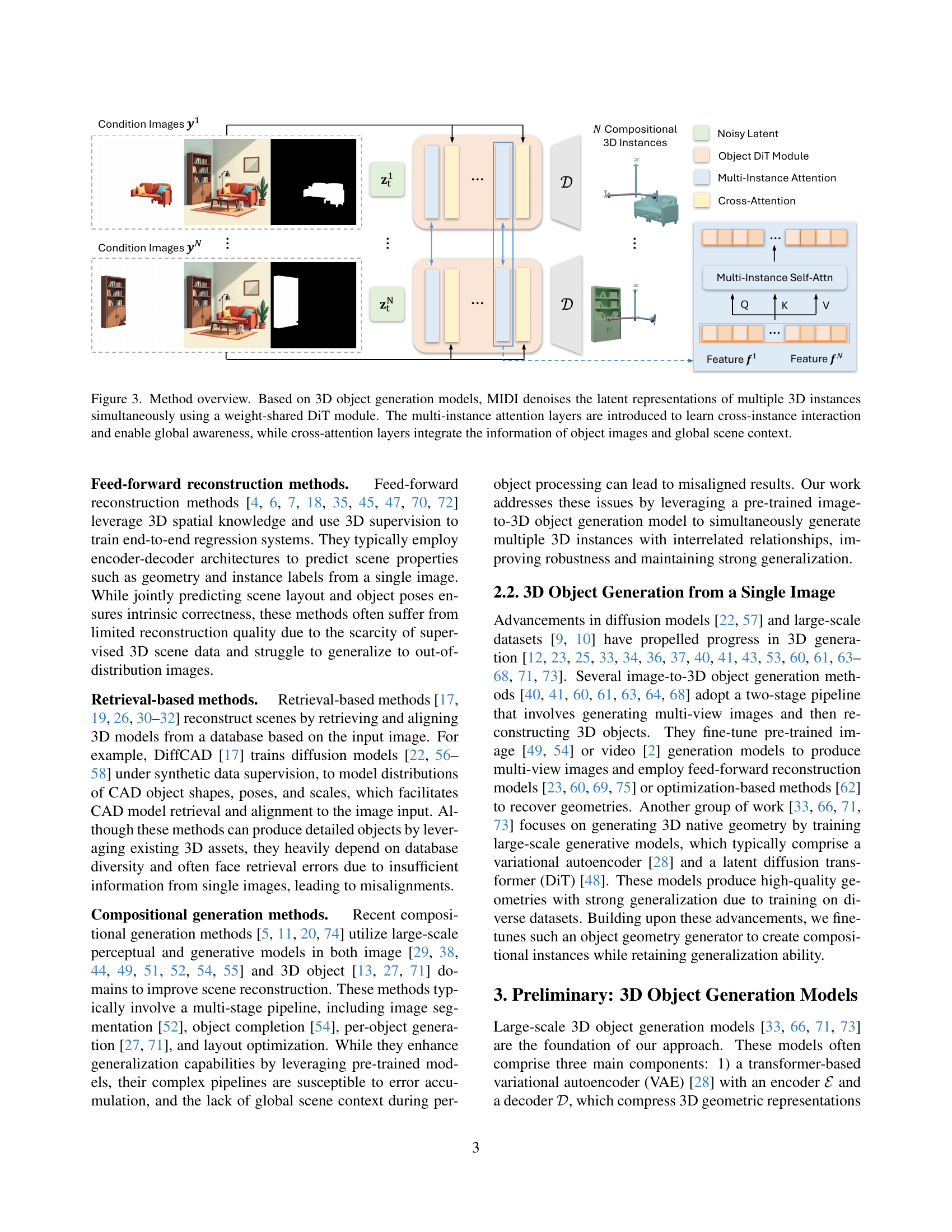

🔼 This table presents a quantitative comparison of different methods for 3D scene generation on synthetic datasets (3D-Front [15] and BlendSwap [1]). The comparison uses four metrics: scene-level Chamfer Distance (CD-S) and F-score (F-Score-S), object-level Chamfer Distance (CD-O) and F-score (F-Score-O), and the Volume IoU of object bounding boxes (IoU-B). Lower values for CD-S and CD-O and higher values for F-Score-S, F-Score-O, and IoU-B indicate better performance. The table also includes the runtime for each method.

read the caption

Table 1: Quantitative comparisons on synthetic datasets [15, 1] in scene-level Chamfer Distance (CD-S) and F-Score (F-Score-S), object-level Chamfer Distance (CD-O) and F-Score (F-Score-O), and Volume IoU of object bounding boxes (IoU-B).

In-depth insights#

Multi-instance Diffusion#

The concept of “Multi-instance Diffusion” in the context of 3D scene generation represents a significant advancement. Instead of generating objects individually and then painstakingly arranging them, this approach models the generation of multiple 3D instances simultaneously. This is achieved by extending pre-trained image-to-3D object generation models into a multi-instance diffusion framework. The key innovation lies in the integration of a novel multi-instance attention mechanism. This mechanism allows the model to directly capture inter-object interactions and spatial relationships during the generation process, eliminating the need for complex multi-step procedures and improving coherence. By modeling object completion directly within the generation, the approach also addresses limitations associated with staged object-by-object methods, which are prone to error accumulation and misalignments. The use of both scene-level data and single-object data during training ensures that the model retains the generalization ability of the original object generation model, while enhancing its ability to model compositional scenes. This holistic approach to scene generation is expected to significantly improve accuracy and efficiency in generating realistic and coherent 3D scenes from a single image.

3D Scene Synthesis#

3D scene synthesis, as explored in the context of the provided research paper, represents a significant challenge in computer vision. The core difficulty lies in accurately reconstructing the 3D geometry of individual objects within a scene and, crucially, representing their spatial relationships. Existing methods often struggle with this, either through limitations in their ability to handle multiple objects simultaneously or due to inherent inaccuracies in reconstruction and retrieval processes. The research highlights the potential of multi-instance diffusion models as a powerful approach, enabling simultaneous generation of multiple 3D instances while capturing complex inter-object interactions. The integration of a novel multi-instance attention mechanism is a key innovation, allowing the model to directly learn these relationships within the generation process, overcoming limitations of stepwise compositional approaches. The emphasis on generalization, through the utilization of pre-trained object generation models and the incorporation of single-object data for regularization, addresses previous shortcomings observed in existing models. This strategy ensures high-quality results even on unseen data, paving the way for significant advancements in the field of 3D scene synthesis.

Attention Mechanism#

The core of the paper revolves around a novel multi-instance attention mechanism, designed to address the limitations of previous methods in 3D scene generation. Existing techniques often struggle with accurately capturing inter-object relationships due to the sequential nature of processing. The proposed attention mechanism tackles this by enabling simultaneous generation of multiple 3D instances, allowing for the direct modeling of spatial coherence and inter-object interactions within a single generation process. This differs significantly from traditional methods where these interactions are dealt with as post-processing steps. The mechanism’s effectiveness is evident in the paper’s results, showcasing improved accuracy in spatial arrangements and enhanced scene composition compared to baseline methods. This highlights the critical role of incorporating spatial awareness directly into the diffusion process, rather than relying on sequential object generation and subsequent optimization. The ability to capture complex interplay between objects sets this approach apart and contributes to the advancement of image-to-3D scene generation.

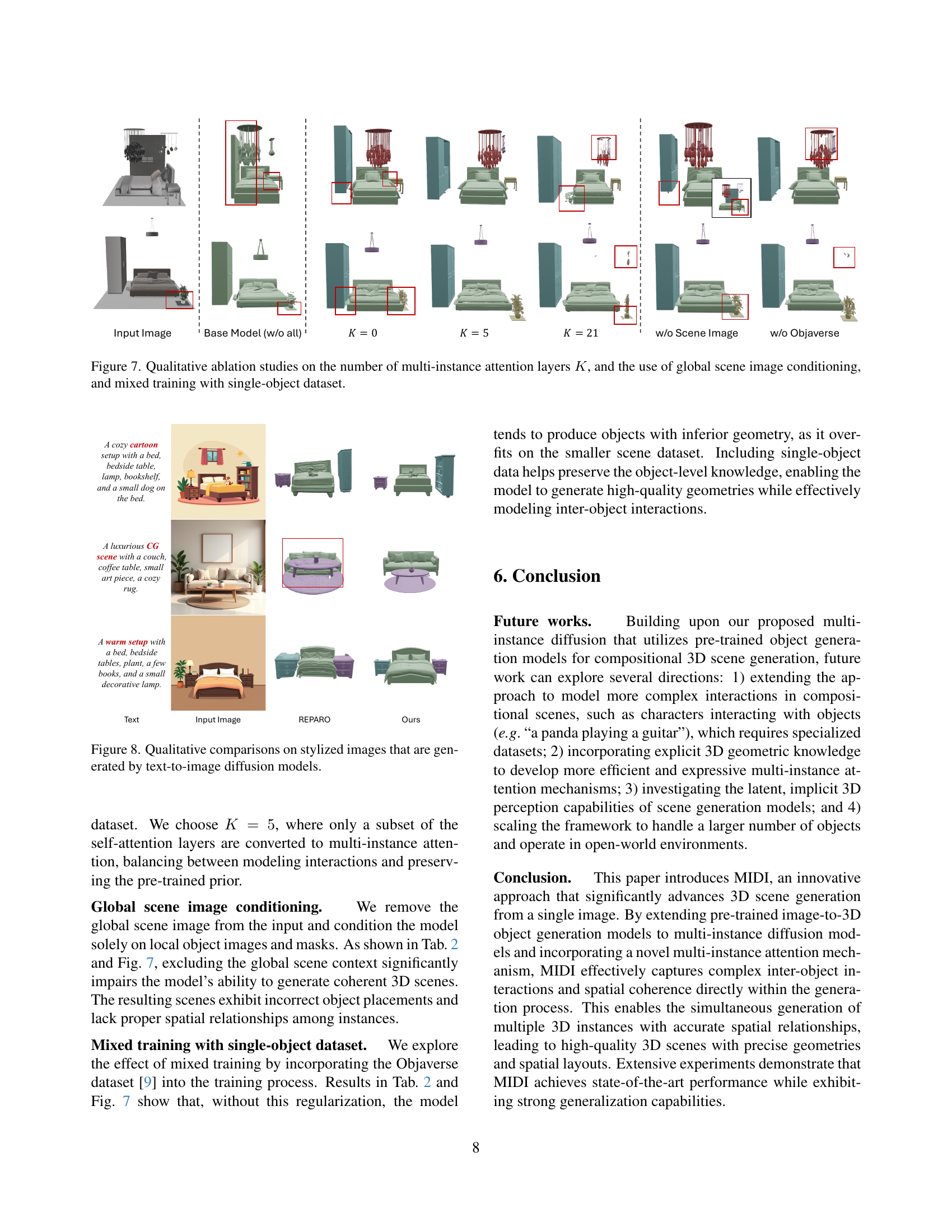

Ablation Experiments#

Ablation experiments systematically remove components of a model to assess their individual contributions. In this context, the researchers would likely disable features such as multi-instance attention, global scene conditioning, or the use of single-object datasets during training, and compare the performance of the resulting models to the full model. This approach is vital to isolate the impact of each component and validate their hypotheses. For instance, removing multi-instance attention should negatively affect the spatial coherence and relationships between generated 3D objects. Similarly, disabling global scene conditioning would likely impair the model’s ability to capture the overall scene context and integrate objects appropriately. The results of these ablation studies would provide strong evidence for the necessity and efficacy of each architectural choice, strengthening the overall conclusions and understanding of the model’s performance.

Future Directions#

The paper’s “Future Directions” section suggests several promising avenues for extending the presented multi-instance diffusion model. Extending the model to handle more complex interactions between objects, such as characters interacting with objects, is crucial. This would require specialized datasets capturing these dynamic relationships. Incorporating explicit 3D geometric knowledge into the model could improve efficiency and expressiveness, possibly via integrating explicit geometric priors or constraints during the diffusion process. The authors also highlight the need for investigating the latent, implicit 3D perception capabilities within the scene generation models. Finally, scaling the framework to handle a larger number of objects and open-world environments is vital for real-world applicability. Addressing these future directions would advance the field significantly, making scene generation more robust, accurate, and applicable to complex and dynamic scenarios.

More visual insights#

More on figures

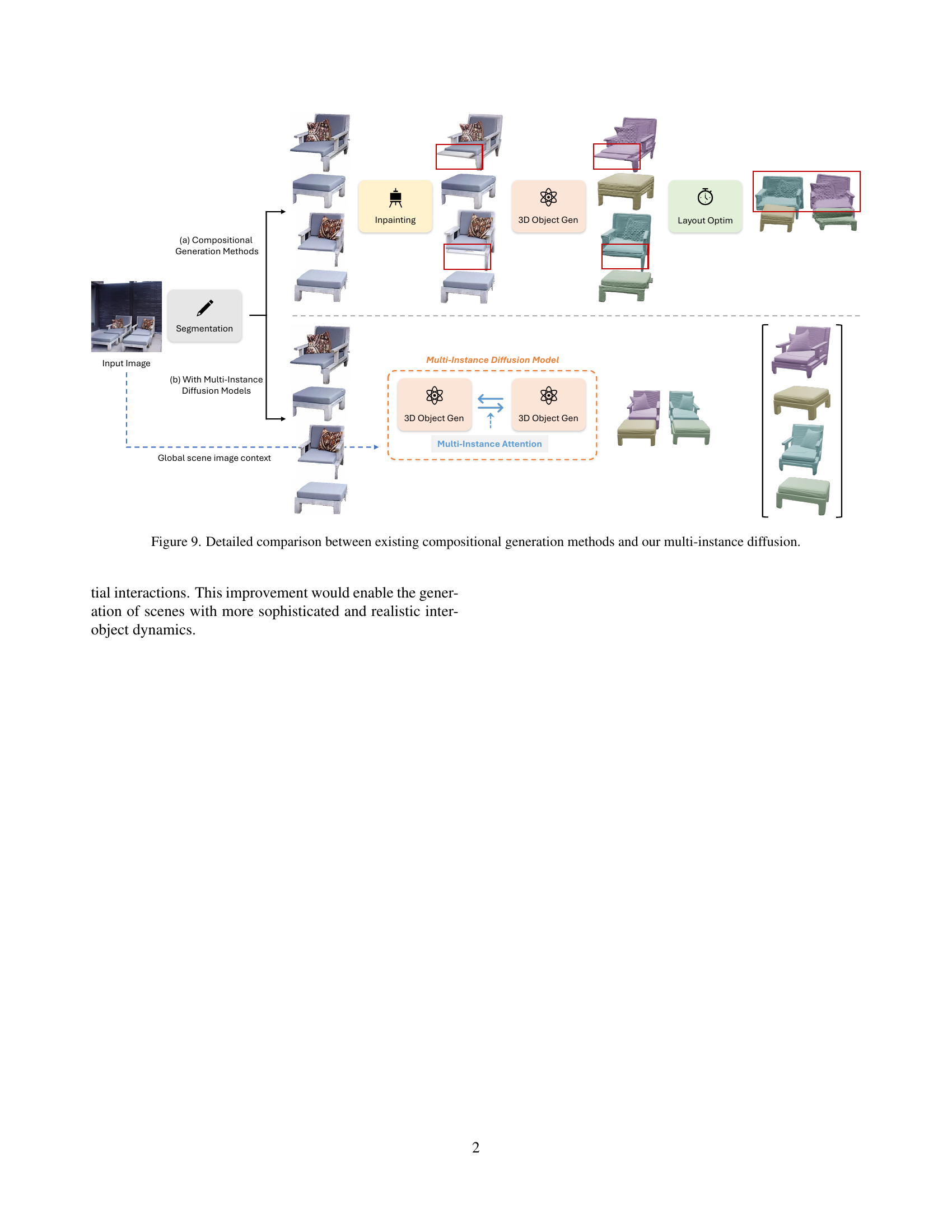

🔼 Figure 2 illustrates the difference between traditional compositional 3D scene generation methods and the proposed MIDI approach. Traditional methods (a) involve multiple sequential steps: image segmentation, individual object image inpainting, individual 3D object generation for each object, and finally, layout optimization to arrange the generated objects in a scene. This multi-step process is prone to error accumulation, making it difficult to ensure the final scene is coherent. In contrast, the MIDI pipeline (b) utilizes multi-instance diffusion, directly generating multiple 3D objects simultaneously with a novel multi-instance attention mechanism that captures spatial relationships, thus creating a more efficient and accurate scene generation process.

read the caption

Figure 2: Comparison between our scene generation pipeline with multi-instance diffusion and existing compositional generation methods.

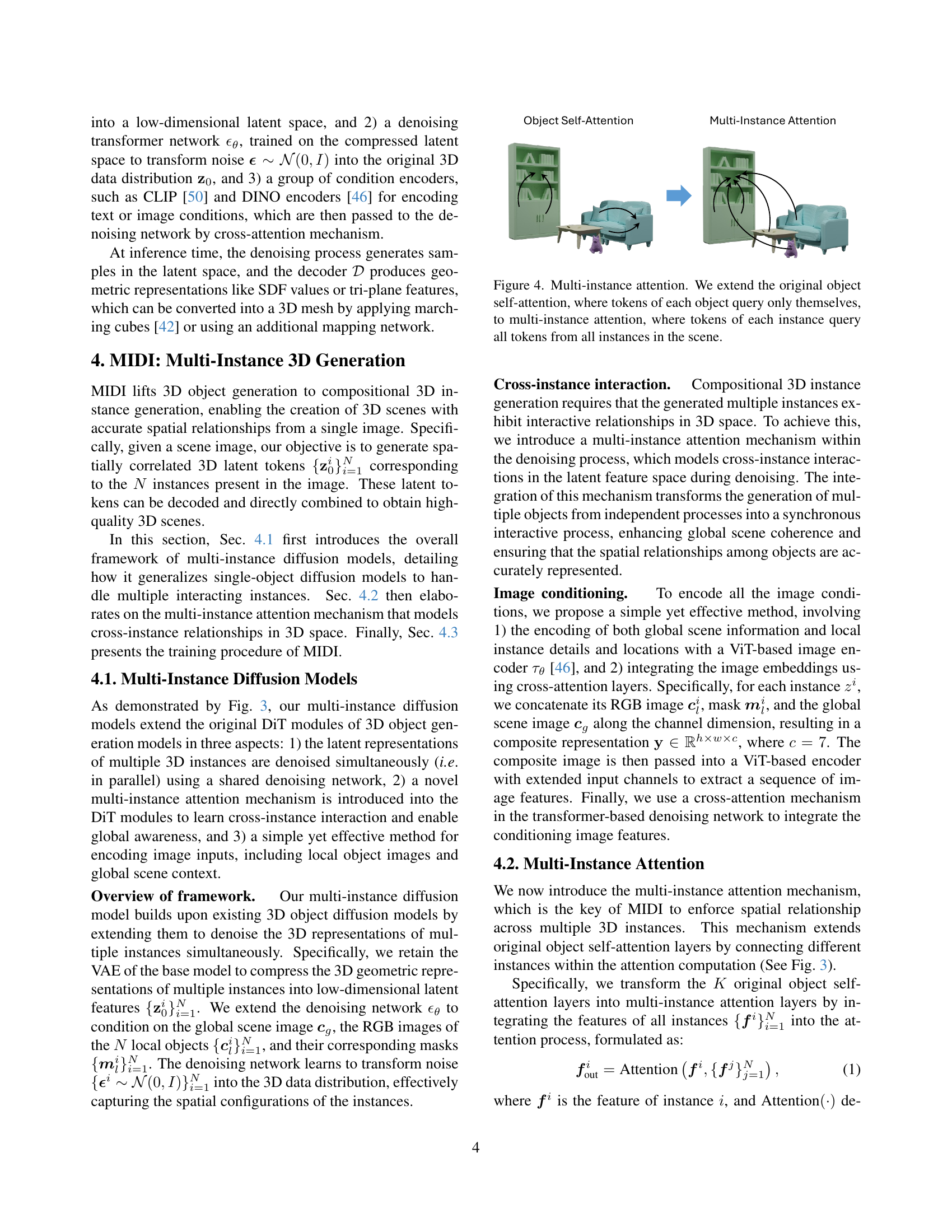

🔼 This figure illustrates the architecture of the MIDI model. It starts with multiple input images, each representing a single object in a scene. These images are pre-processed by a 3D object generation model to extract latent representations (features encoding the objects’ 3D structure). Then, a shared diffusion model, called a DiT module (denoising transformer), processes these latent representations simultaneously. Key to the model are multi-instance attention layers which enable the different object representations to interact, effectively modeling relationships between them (e.g., spatial arrangement, occlusion). Finally, cross-attention layers integrate information from both the object-specific image features and a global scene context image into the diffusion process. The output is a set of denoised latent representations corresponding to multiple 3D instances that are spatially coherent and consistent with the global scene context.

read the caption

Figure 3: Method overview. Based on 3D object generation models, MIDI denoises the latent representations of multiple 3D instances simultaneously using a weight-shared DiT module. The multi-instance attention layers are introduced to learn cross-instance interaction and enable global awareness, while cross-attention layers integrate the information of object images and global scene context.

🔼 This figure illustrates the mechanism of multi-instance attention used in the MIDI model. The original object self-attention mechanism only allows tokens within a single object to interact. In contrast, the enhanced multi-instance attention allows tokens from each object to attend to (query) tokens from all other objects in the scene. This crucial modification enables the model to capture inter-object relationships and spatial coherence during 3D scene generation, leading to more realistic and accurate results.

read the caption

Figure 4: Multi-instance attention. We extend the original object self-attention, where tokens of each object query only themselves, to multi-instance attention, where tokens of each instance query all tokens from all instances in the scene.

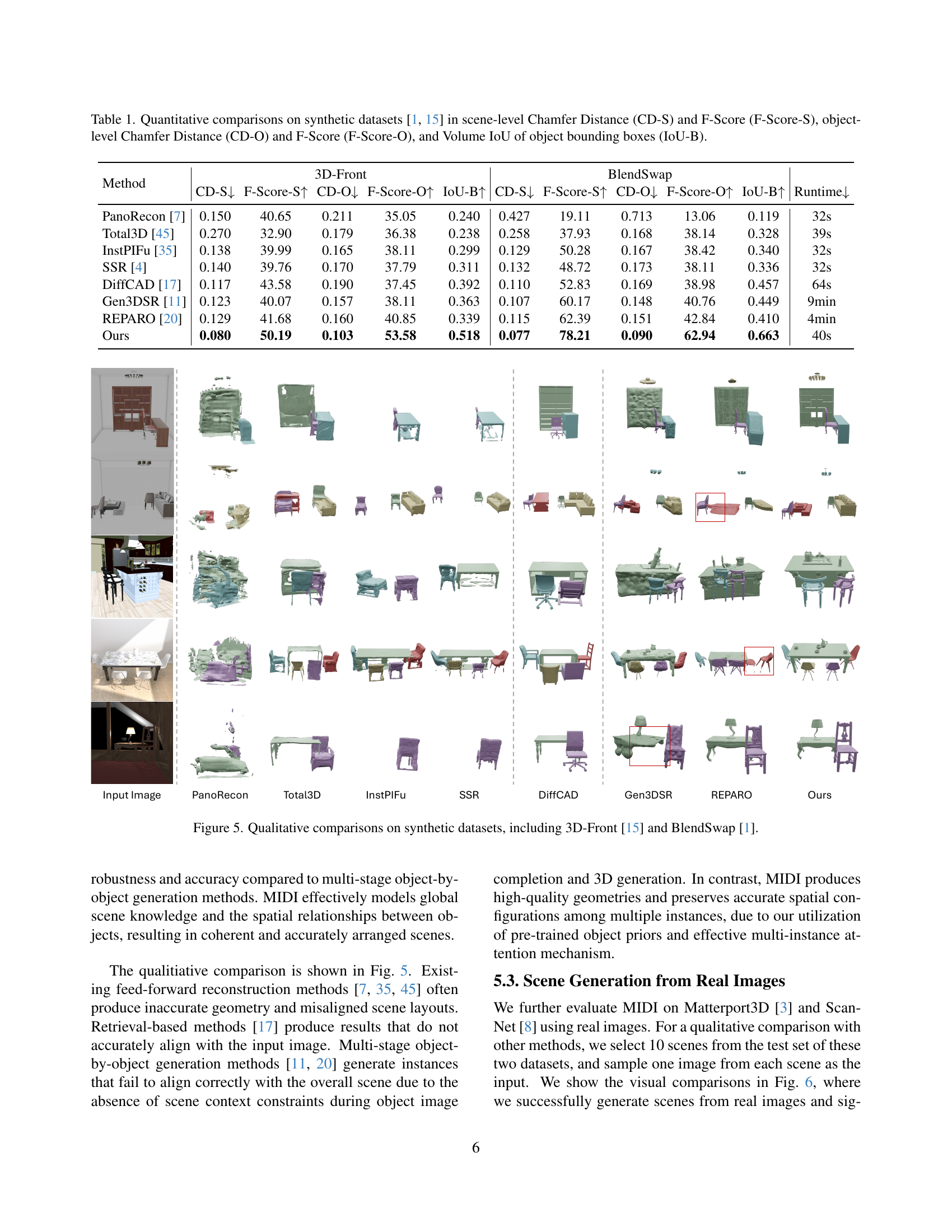

🔼 Figure 5 presents a qualitative comparison of 3D scene generation results from different methods on synthetic datasets, namely 3D-Front and BlendSwap. It visually demonstrates the strengths and weaknesses of various approaches, including the proposed MIDI method, by showing the generated 3D scenes side-by-side with the input images. This allows for a direct visual assessment of the accuracy, completeness, and coherence of generated scenes, particularly regarding object shapes, spatial relationships, and overall scene quality.

read the caption

Figure 5: Qualitative comparisons on synthetic datasets, including 3D-Front [15] and BlendSwap [1].

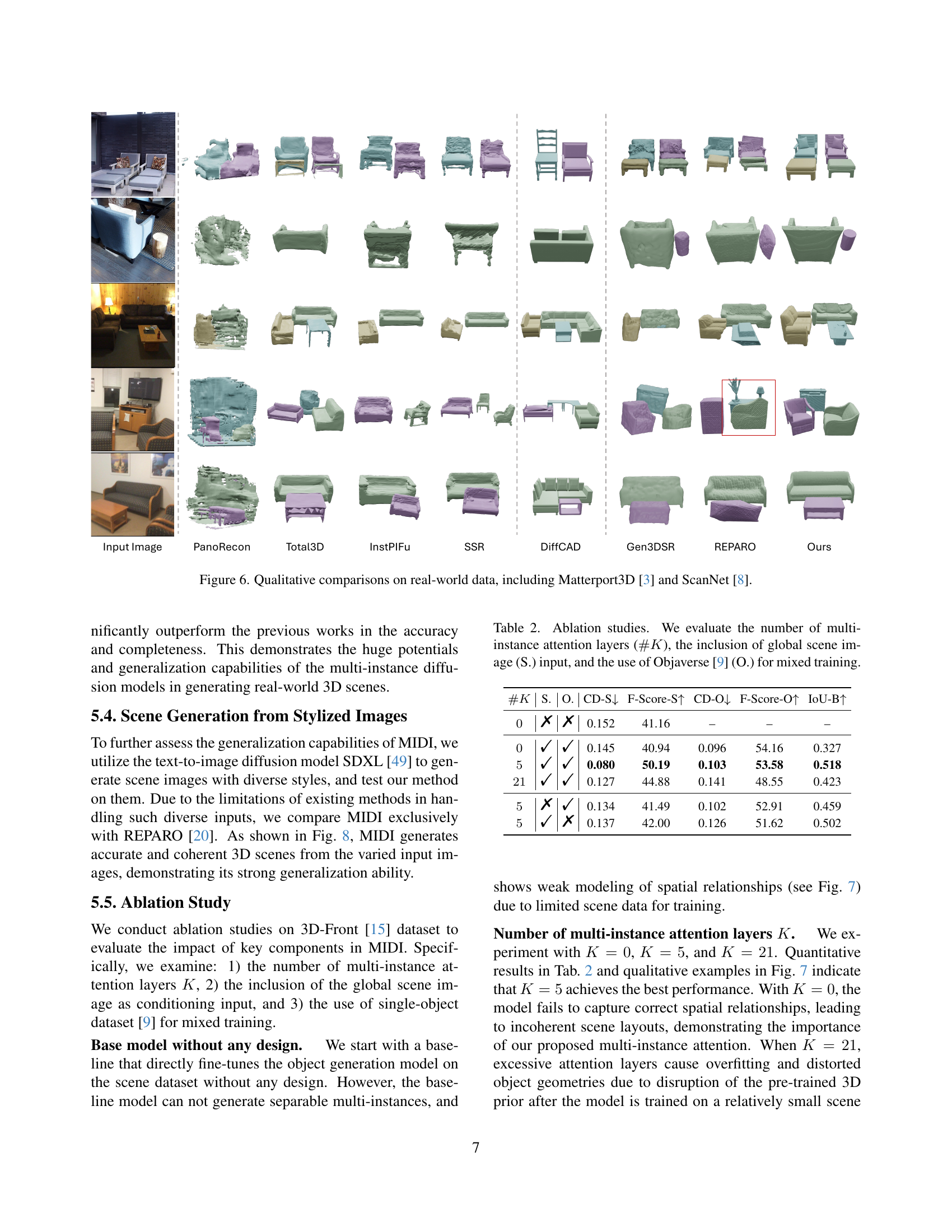

🔼 Figure 6 presents a qualitative comparison of 3D scene generation results from real-world images using different methods. It shows input images from Matterport3D and ScanNet datasets alongside the 3D reconstructions produced by various methods, including the proposed MIDI model. This allows for a visual assessment of the accuracy, completeness, and overall quality of the 3D scenes generated by each technique.

read the caption

Figure 6: Qualitative comparisons on real-world data, including Matterport3D [3] and ScanNet [8].

🔼 This figure presents a qualitative comparison of ablation studies conducted on the MIDI model. It shows the impact of varying the number of multi-instance attention layers (K), the inclusion or exclusion of global scene image conditioning, and the use of mixed training with a single-object dataset. By comparing the generated 3D scenes under different conditions, the figure illustrates how these factors affect the model’s ability to generate accurate and coherent 3D scenes with correct spatial relationships between objects.

read the caption

Figure 7: Qualitative ablation studies on the number of multi-instance attention layers K𝐾Kitalic_K, and the use of global scene image conditioning, and mixed training with single-object dataset.

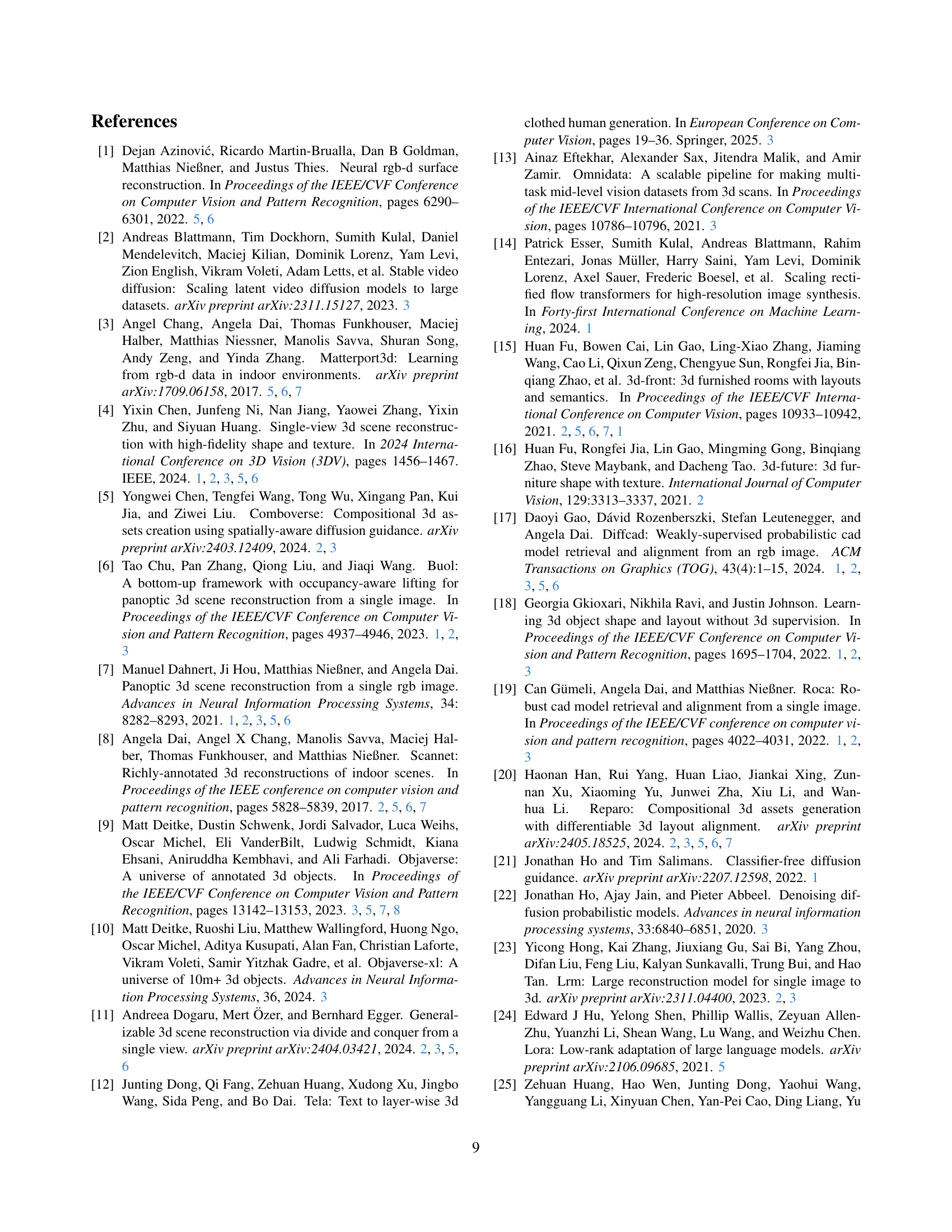

🔼 This figure displays a qualitative comparison of 3D scene generation results from stylized images. Stylized images, generated using a text-to-image diffusion model, are used as input to different scene generation methods. The comparison highlights the ability of the proposed MIDI model to generate more coherent and accurate 3D scenes compared to existing methods, particularly when dealing with diverse image styles.

read the caption

Figure 8: Qualitative comparisons on stylized images that are generated by text-to-image diffusion models.

Full paper#