↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Current text-to-video models struggle with generating complex scenes from detailed text descriptions, particularly those involving multiple objects, dynamic interactions, and temporal relationships. Single-agent approaches often lead to inaccuracies and hallucinations. This limitation necessitates innovative solutions capable of handling the intricate complexities inherent in compositional video generation.

This paper introduces GENMAC, an innovative framework that tackles the challenge by employing a multi-agent collaborative approach. GENMAC decomposes the complex generation task into simpler sub-tasks, each handled by a specialized agent. This allows for iterative refinement and correction, significantly improving the accuracy and quality of the generated videos. The adaptive self-routing mechanism ensures the selection of appropriate agents for various scenarios, resulting in state-of-the-art performance on compositional video generation benchmarks.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working on text-to-video generation because it introduces a novel multi-agent framework, GENMAC, that significantly improves the generation of complex, dynamic scenes. This addresses a key limitation of existing single-agent models and opens new avenues for research into multi-agent collaboration and compositional video generation. It also provides a benchmark for evaluating compositional generation that facilitates future research and development.

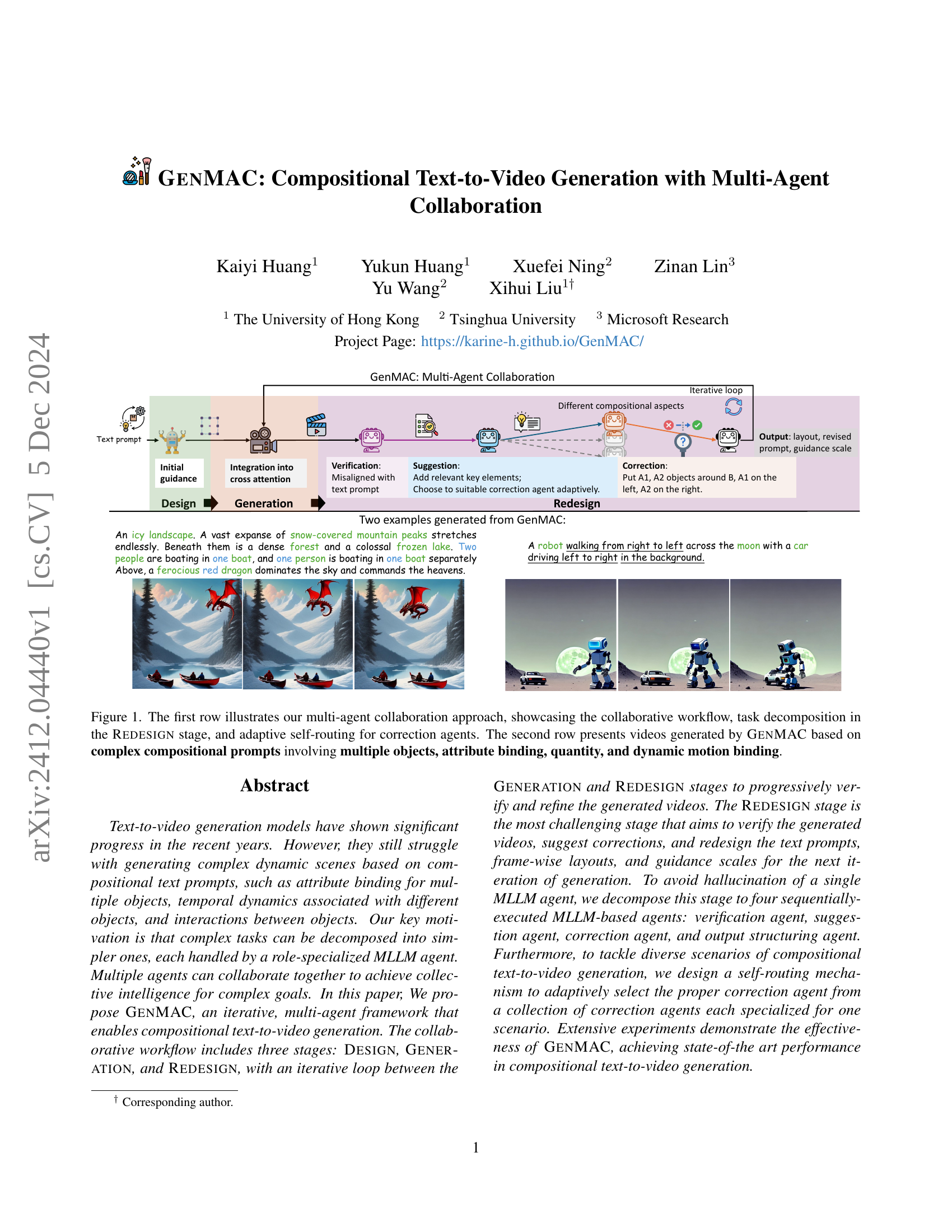

Visual Insights#

🔼 Figure 1 demonstrates GenMAC’s multi-agent collaborative text-to-video generation process. The top row illustrates the workflow’s three stages: Design, Generation, and Redesign. The Redesign stage is broken down into four sub-tasks handled by specialized agents (verification, suggestion, correction, and output structuring), each responsible for a specific aspect of refining the generated video. The adaptive self-routing mechanism ensures that the appropriate agent is chosen for the task. The bottom row displays example videos produced by GenMAC showcasing its ability to handle complex prompts involving multiple objects, their attributes, quantities, and dynamic interactions.

read the caption

Figure 1: The first row illustrates our multi-agent collaboration approach, showcasing the collaborative workflow, task decomposition in the Redesign stage, and adaptive self-routing for correction agents. The second row presents videos generated by GenMAC based on complex compositional prompts involving multiple objects, attribute binding, quantity, and dynamic motion binding.

| Model | Consist-attr | Dynamic-attr | Spatial | Motion | Action | Interaction | Numeracy |

|---|---|---|---|---|---|---|---|

| Metric | Grid-LLaVA ↑ | D-LLaVA ↑ | G-Dino ↑ | DOT ↑ | Grid-LLaVA ↑ | Grid-LLaVA ↑ | G-Dino ↑ |

| ModelScope [54] | 0.5483 | 0.1654 | 0.4220 | 0.2552 | 0.4880 | 0.7075 | 0.2066 |

| ZeroScope [1] | 0.4495 | 0.1086 | 0.4073 | 0.2319 | 0.4620 | 0.5550 | 0.2378 |

| Latte [34] | 0.5325 | 0.1598 | 0.4476 | 0.2187 | 0.5200 | 0.6625 | 0.2187 |

| Show-1 [72] | 0.6388 | 0.1828 | 0.4649 | 0.2316 | 0.4940 | 0.7700 | 0.1644 |

| VideoCrafter2 [8] | 0.6750 | 0.1850 | 0.4891 | 0.2233 | 0.5800 | 0.7600 | 0.2041 |

| Open-Sora 1.1 [21] | 0.6370 | 0.1762 | 0.5671 | 0.2317 | 0.5480 | 0.7625 | 0.2363 |

| Open-Sora 1.2 [21] | 0.6600 | 0.1714 | 0.5406 | 0.2388 | 0.5717 | 0.7400 | 0.2556 |

| Open-Sora-Plan v1.0.0 [26] | 0.5088 | 0.1562 | 0.4481 | 0.2147 | 0.5120 | 0.6275 | 0.1650 |

| Open-Sora-Plan v1.1.0 [26] | 0.7413 | 0.1770 | 0.5587 | 0.2187 | 0.6780 | 0.7275 | 0.2928 |

| CogVideoX-5B [66] | 0.7220 | 0.2334 | 0.5461 | 0.2943 | 0.5960 | 0.7950 | 0.2603 |

| AnimateDiff [15] | 0.4883 | 0.1764 | 0.3883 | 0.2236 | 0.4140 | 0.6550 | 0.0884 |

| VideoTetris [51] | 0.7125 | 0.2066 | 0.5148 | 0.2204 | 0.5280 | 0.7600 | 0.2609 |

| Vico [65] | 0.7025 | 0.2376 | 0.4952 | 0.2225 | 0.5480 | 0.7775 | 0.2116 |

| LVD [29] | 0.5595 | 0.1499 | 0.5469 | 0.2699 | 0.4960 | 0.6100 | 0.0991 |

| MagicTime [70] | - | 0.1834 | - | - | - | - | - |

| Pika [2] (Commercial) | 0.6513 | 0.1744 | 0.5043 | 0.2221 | 0.5380 | 0.6625 | 0.2613 |

| Gen-3 [42] (Commercial) | 0.7045 | 0.2078 | 0.5533 | 0.3111 | 0.6280 | 0.7900 | 0.2169 |

| GenMAC (Ours) | 0.7875 | 0.2498 | 0.7461 | 0.3623 | 0.7273 | 0.8250 | 0.5166 |

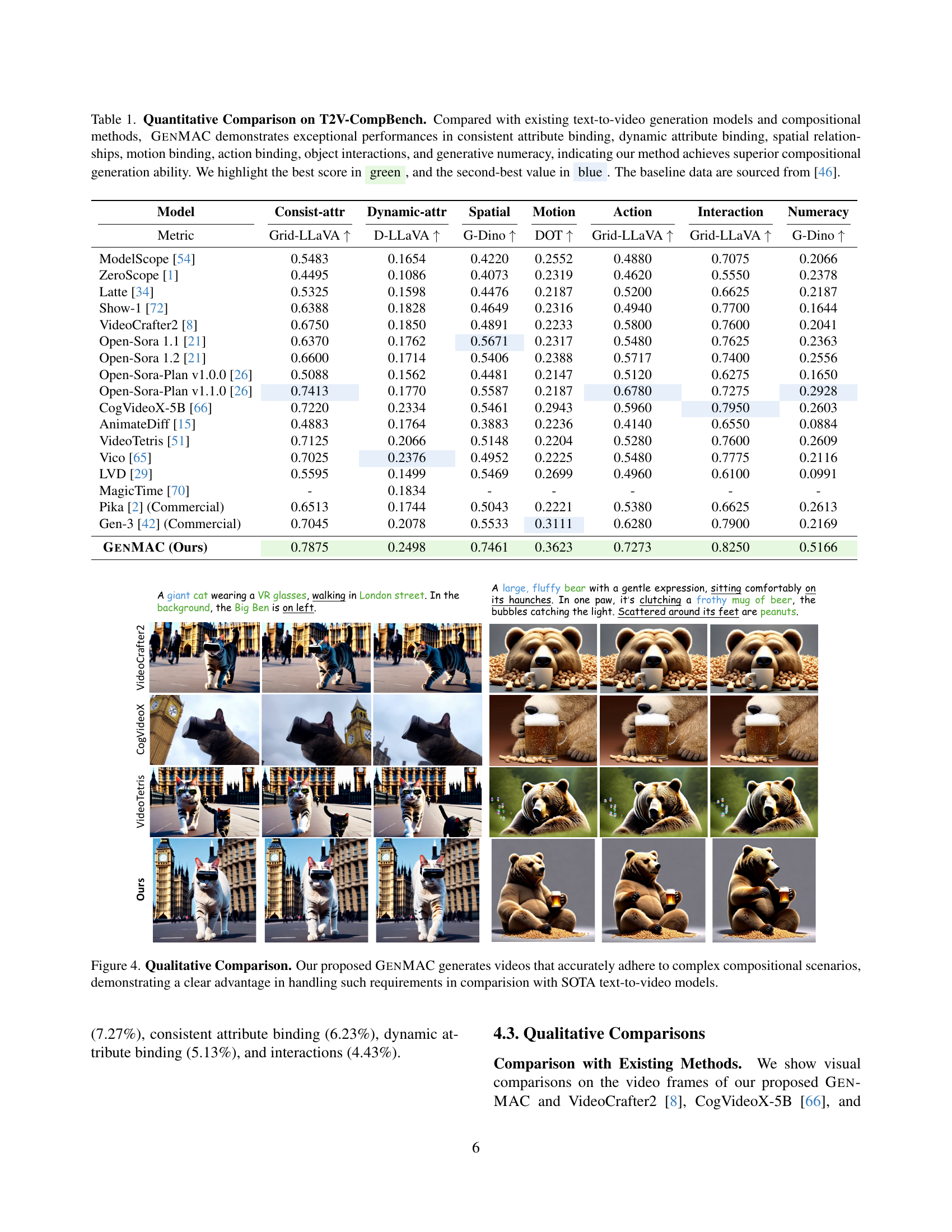

🔼 This table presents a quantitative comparison of GenMAC against 17 existing text-to-video generation models and compositional methods on the T2V-CompBench benchmark. The comparison focuses on seven key aspects of compositional video generation: consistent attribute binding, dynamic attribute binding, spatial relationships, motion binding, action binding, object interactions, and generative numeracy. GenMAC demonstrates superior performance across all seven aspects, with the best scores highlighted in green and the second-best in blue. The baseline data for comparison is sourced from reference [46] in the paper.

read the caption

Table 1: Quantitative Comparison on T2V-CompBench. Compared with existing text-to-video generation models and compositional methods, GenMAC demonstrates exceptional performances in consistent attribute binding, dynamic attribute binding, spatial relationships, motion binding, action binding, object interactions, and generative numeracy, indicating our method achieves superior compositional generation ability. We highlight the best score in green, and the second-best value in blue. The baseline data are sourced from [46].

In-depth insights#

Multi-agent Collab#

The concept of “Multi-agent Collab” in a text-to-video generation model presents a compelling approach to tackling the complexity inherent in creating dynamic and nuanced video content from textual descriptions. The core idea is to decompose the complex task of video generation into smaller, more manageable sub-tasks, each handled by a specialized agent. This allows for a more efficient and robust system, as each agent can focus on its area of expertise, leading to improved accuracy and reduced hallucination. The collaborative aspect is key, enabling agents to interact and refine the generated video progressively through iterative feedback loops. This iterative refinement ensures that the final video adheres closely to the textual prompt, overcoming limitations of single-agent methods. The choice of using multiple Large Language Models (LLMs) as agents is particularly interesting, as LLMs possess the capacity for high-level reasoning, understanding context, and generating natural language descriptions, making them well-suited to the tasks involved. The effectiveness of this approach will depend heavily on the design of the individual agents, their communication protocols, and the overall workflow. Adaptive self-routing mechanisms, capable of selecting the most appropriate agent for a given sub-task based on the specific needs of a situation, will greatly enhance the system’s flexibility and robustness.

Iterative Refinement#

The concept of “Iterative Refinement” in the context of compositional text-to-video generation is crucial. It highlights the model’s ability to progressively improve the generated video through multiple iterations, rather than relying on a single-pass generation. Each iteration involves a redesign phase where the model analyzes the previous output, identifies misalignments with the given text prompt, and suggests corrections and refinements. This iterative process is key to achieving high-quality results, especially when dealing with complex prompts involving multiple objects, dynamic interactions, and temporal changes. The self-routing mechanism, which intelligently selects the most suitable correction agent based on the specific needs of each iteration, plays an important role in enhancing the effectiveness of the iterative process. Task decomposition further improves efficiency by breaking down the complex redesign stage into smaller, manageable subtasks handled by specialized agents, minimizing errors and improving overall accuracy. The iterative refinement loop demonstrates the power of multi-agent collaboration in achieving a level of compositional understanding and generation that surpasses single-agent approaches. This is pivotal for addressing the limitations of current models and pushing the boundaries of AI-driven video generation.

Agent Specialization#

Agent specialization in compositional text-to-video generation is a crucial technique for effectively handling the complexity of diverse tasks. By decomposing complex tasks into smaller, more manageable subtasks, each assigned to a specialized agent, the system leverages the strengths of individual agents, exceeding the limitations of single-agent approaches. This modularity is especially beneficial in the redesign phase, which requires nuanced understanding of video contents and prompt alignment. Specialized agents, such as verification, suggestion, correction, and output structuring agents, each focus on a specific aspect, leading to more accurate and efficient results. The selection of the appropriate agent is also vital and can be made adaptively, based on the detected video-text discrepancies. This approach promotes efficient resource allocation and superior performance compared to using a single, general-purpose agent that struggles with the diverse demands of compositional video generation.

Compositional Bench#

A hypothetical “Compositional Bench” in a research paper would likely involve a standardized evaluation framework for assessing the capabilities of text-to-video generation models on complex, multi-faceted prompts. Such a benchmark should go beyond simple scene generation and test the model’s ability to handle compositional aspects. This might include evaluating the model’s understanding of attribute binding (e.g., a red car), spatial relationships (e.g., a car parked next to a house), temporal dynamics (e.g., a car driving), and object interactions (e.g., a person getting into a car). A robust compositional benchmark necessitates diverse and intricate scenarios, going beyond simple descriptive sentences and including more abstract or nuanced prompts. Quantitative metrics would be essential to objectively compare different models, potentially measuring the accuracy with which compositional elements are rendered and the coherence and plausibility of the generated videos. The benchmark’s value would be greatly enhanced by publicly available datasets and a clearly defined evaluation protocol, facilitating broader research and comparison within the field of text-to-video generation.

Future Directions#

Future research directions for compositional text-to-video generation could focus on several key areas. Improving the efficiency and scalability of multi-agent systems is crucial, potentially through exploring more efficient communication protocols or hierarchical agent architectures. Addressing the limitations of current LLMs in understanding nuanced temporal dynamics and complex interactions is vital; advancements in multimodal LLMs specifically designed for video understanding would significantly benefit this area. Furthermore, developing more robust evaluation metrics that capture the subtleties of compositional video generation is necessary to objectively measure progress. Finally, investigating the ethical implications of this technology, including potential biases and misuse, should be a central focus to ensure responsible development and deployment. Exploring techniques to mitigate these risks and promote fair and equitable use are critical for the long-term success of compositional text-to-video generation.

More visual insights#

More on figures

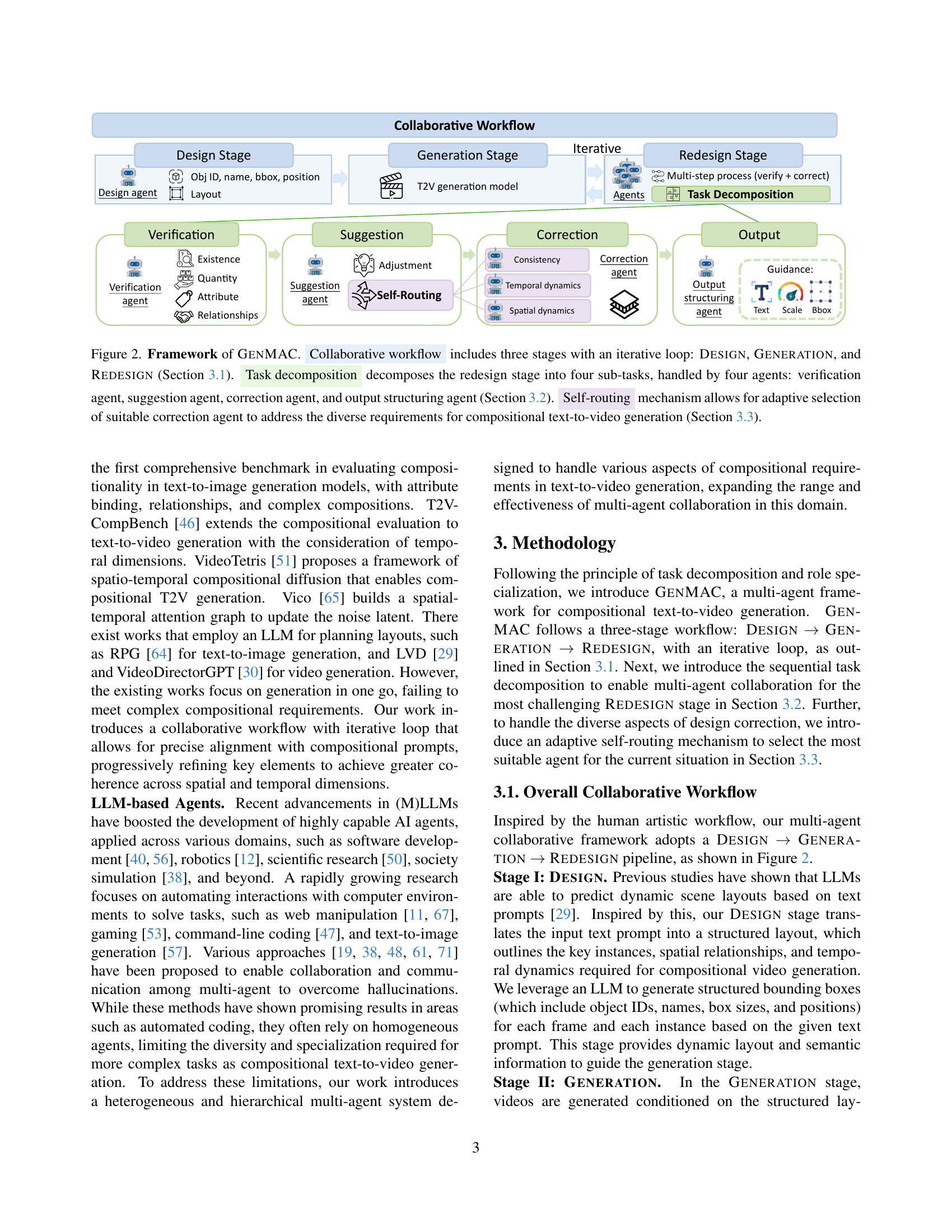

🔼 GenMAC’s framework consists of three stages: Design, Generation, and Redesign. The Design stage uses an LLM to create a structured layout based on the text prompt. The Generation stage uses a text-to-video model to create a video based on this layout. The Redesign stage is iterative and crucial; it verifies if the generated video matches the prompt, and if not, it uses four specialized agents (verification, suggestion, correction, and output structuring agents) to iteratively refine the layout, prompt, and other parameters for subsequent generation attempts. A self-routing mechanism intelligently selects the most suitable correction agent depending on the specific issues detected.

read the caption

Figure 2: Framework of GenMAC. Collaborative workflow includes three stages with an iterative loop: Design, Generation, and Redesign (Section 3.1). Task decomposition decomposes the redesign stage into four sub-tasks, handled by four agents: verification agent, suggestion agent, correction agent, and output structuring agent (Section 3.2). Self-routing mechanism allows for adaptive selection of suitable correction agent to address the diverse requirements for compositional text-to-video generation (Section 3.3).

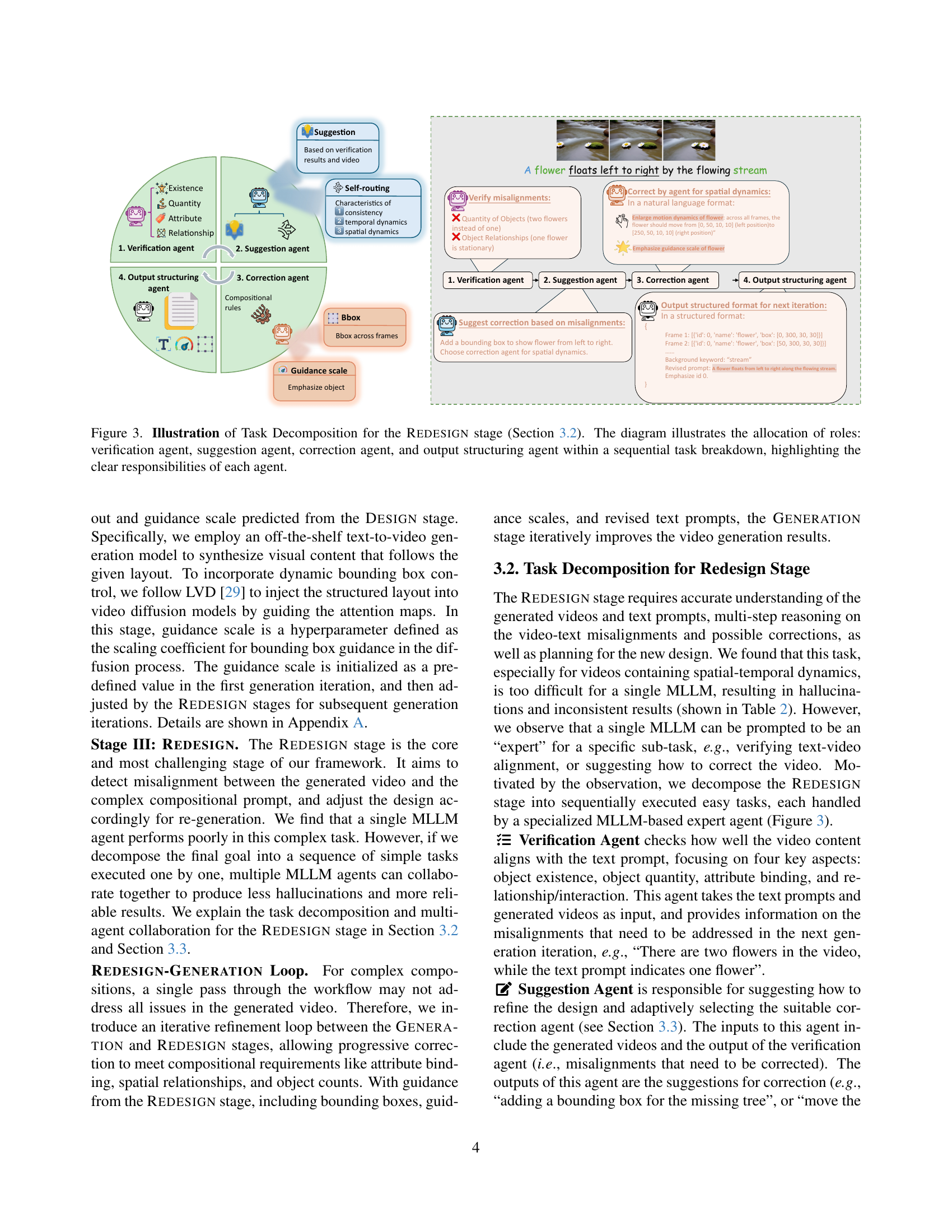

🔼 The figure illustrates the workflow of the REDESIGN stage in the GENMAC framework. The REDESIGN stage is broken down into four sequential sub-tasks, each handled by a specialized agent: a verification agent (checks for misalignments between the generated video and the text prompt), a suggestion agent (suggests corrections based on the verification results), a correction agent (makes the corrections based on the suggestions, choosing an appropriate agent based on the scenario), and an output structuring agent (formats the suggestions for the next iteration of the generation stage). The diagram clearly shows the flow of information and responsibilities between these four agents.

read the caption

Figure 3: Illustration of Task Decomposition for the Redesign stage (Section 3.2). The diagram illustrates the allocation of roles: verification agent, suggestion agent, correction agent, and output structuring agent within a sequential task breakdown, highlighting the clear responsibilities of each agent.

🔼 Figure 4 presents a qualitative comparison of video generation results between the proposed GenMAC model and state-of-the-art (SOTA) text-to-video generation models. The figure showcases several example video prompts requiring complex compositional elements such as multiple objects with specific attributes, spatial relationships, and dynamic actions. For each prompt, GenMAC’s generated video is compared alongside the outputs from other SOTA models, highlighting GenMAC’s superior ability to accurately capture and represent the intricate details and relationships specified in the complex prompts. This demonstrates GenMAC’s clear advantage in handling compositional text-to-video generation tasks compared to existing models.

read the caption

Figure 4: Qualitative Comparison. Our proposed GenMAC generates videos that accurately adhere to complex compositional scenarios, demonstrating a clear advantage in handling such requirements in comparision with SOTA text-to-video models.

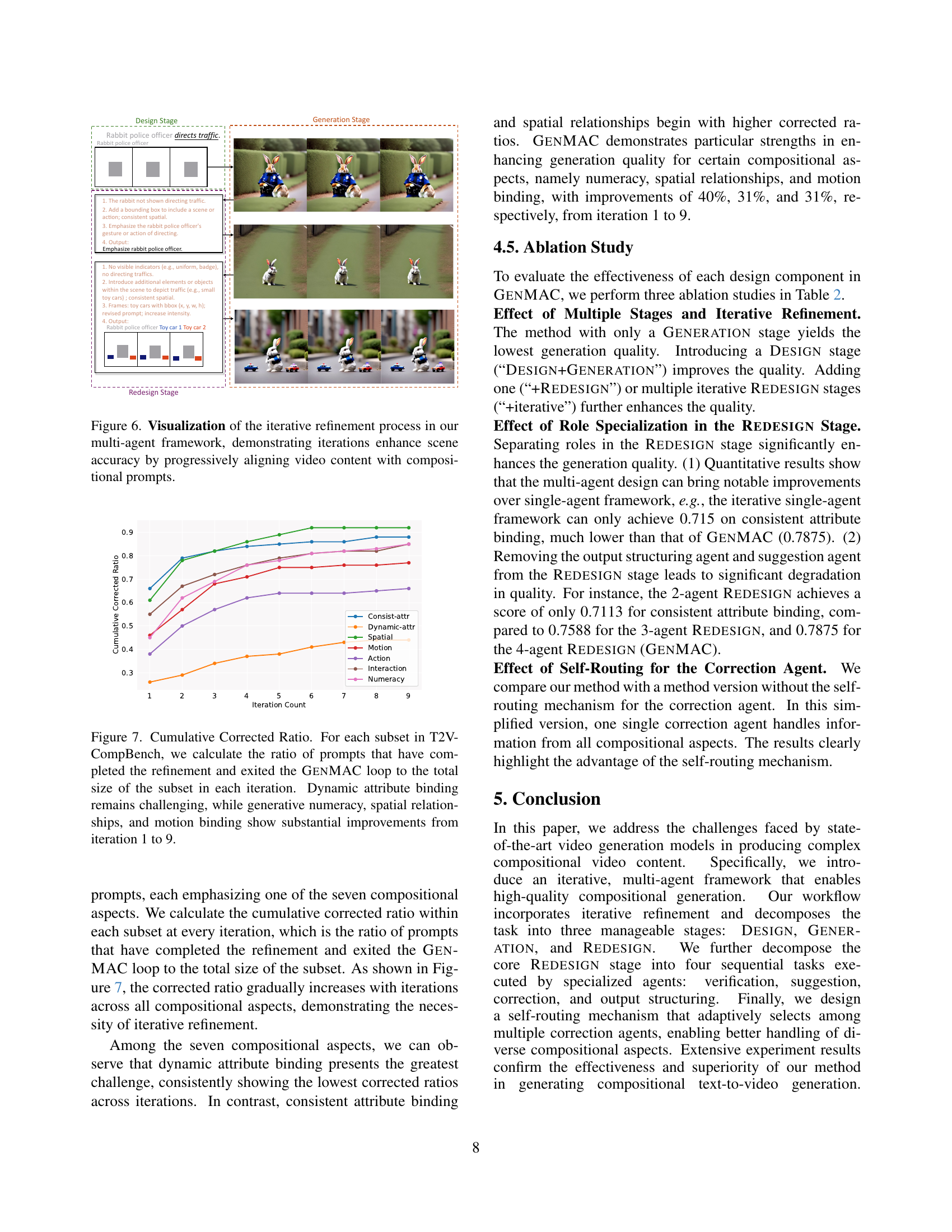

🔼 Figure 5 showcases example videos generated by GenMAC, highlighting its ability to handle complex and detailed text prompts. These examples demonstrate GenMAC’s capacity to accurately represent various compositional elements within a video, including the correct attributes of multiple objects, the specified number of objects, and dynamic movements as described in the text prompt. This figure visually supports the claims made in the paper regarding GenMAC’s superior compositional capabilities in text-to-video generation.

read the caption

Figure 5: Qualitative Results. Our proposed GenMAC generates videos that highly aligned with complex compositional prompts, including attribute binding, multiple objects, quantity, and dynamic motion binding.

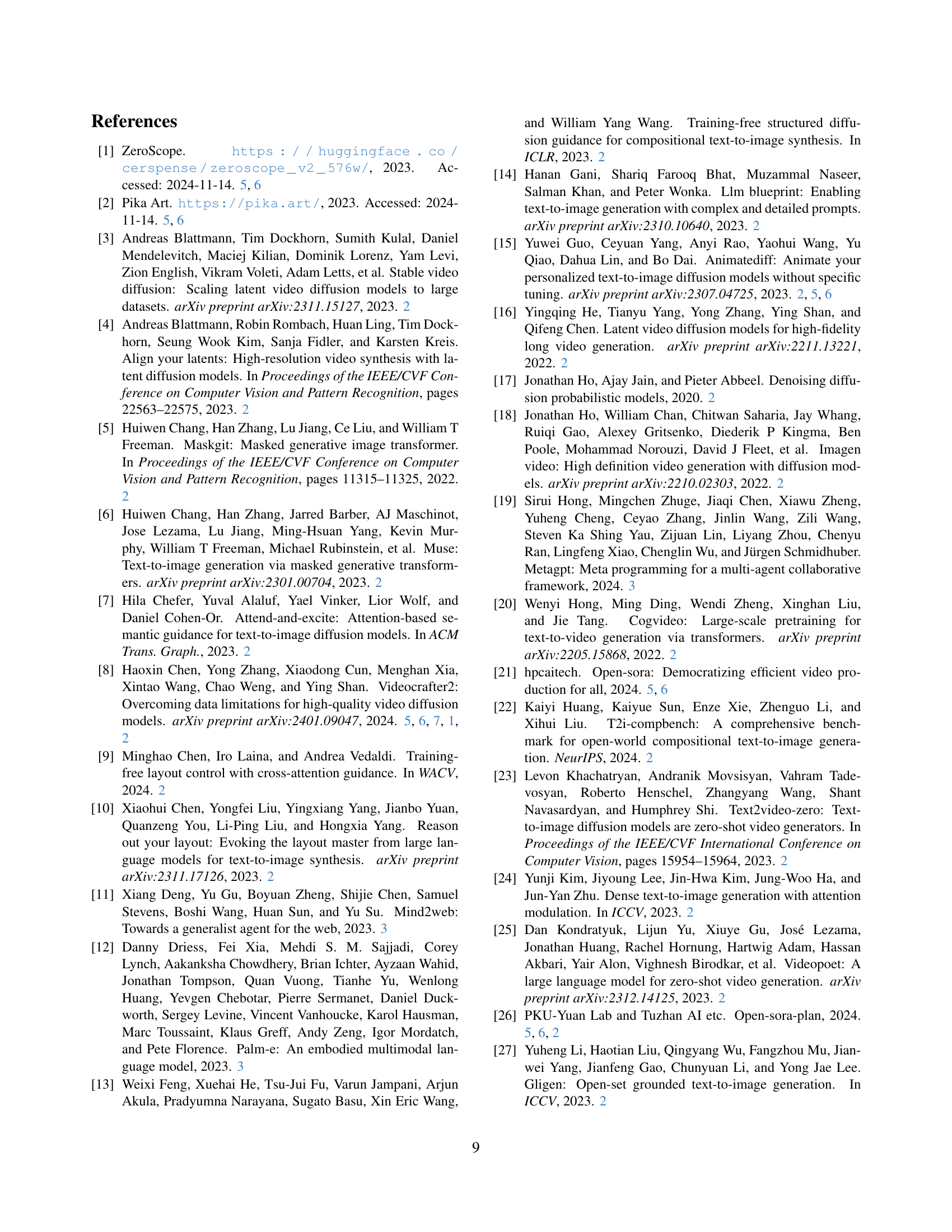

🔼 This figure visualizes how GENMAC iteratively refines video generation through multi-agent collaboration. It shows an example where the initial generated video doesn’t fully capture the prompt’s instructions. The subsequent iterations, facilitated by the REDESIGN stage agents, progressively improve the video by correcting misalignments and introducing missing elements. This iterative process demonstrates how GENMAC aligns the video content with complex compositional aspects outlined in the text prompt, enhancing scene accuracy step-by-step.

read the caption

Figure 6: Visualization of the iterative refinement process in our multi-agent framework, demonstrating iterations enhance scene accuracy by progressively aligning video content with compositional prompts.

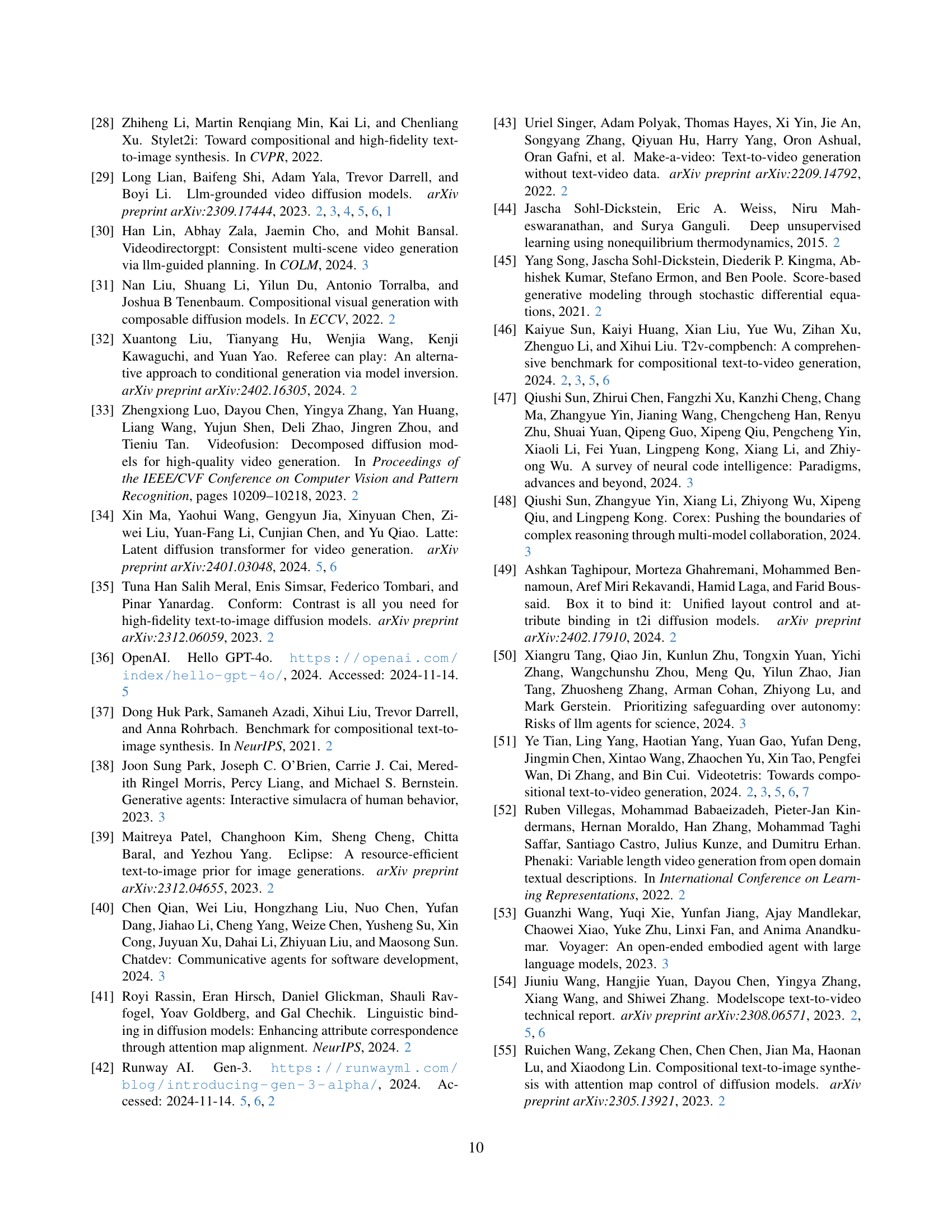

🔼 This figure displays the cumulative corrected ratio for each subset within the T2V-CompBench benchmark across nine iterations of the GenMAC model. The cumulative corrected ratio represents the proportion of prompts successfully refined and completed within the GenMAC loop relative to the total number of prompts in each subset. The graph reveals that while ‘dynamic attribute binding’ consistently proves challenging, notable improvements are observed across multiple iterations for ‘generative numeracy,’ ‘spatial relationships,’ and ‘motion binding.’

read the caption

Figure 7: Cumulative Corrected Ratio. For each subset in T2V-CompBench, we calculate the ratio of prompts that have completed the refinement and exited the GenMAC loop to the total size of the subset in each iteration. Dynamic attribute binding remains challenging, while generative numeracy, spatial relationships, and motion binding show substantial improvements from iteration 1 to 9.

🔼 This figure visualizes the iterative refinement process in the GENMAC framework using a multi-agent approach. The top row depicts the workflow. The initial video generation (left) fails to capture the ’tug’ motion between the rope and boat specified in the text prompt. The Redesign stage (middle) uses multiple specialized agents to detect the issue and suggest corrections, such as refining bounding boxes and visual cues to better represent the interaction. The final generated video (right) successfully incorporates the intended ’tug’ motion, aligning the output with the compositional prompt requirements. The bottom row shows frames from the video at different stages of refinement.

read the caption

(a) Visualization of multi-agent collaboration. Initial generation lacks “tug” motion between the rope and boat; Redesign agents adjust spatial alignment and visual tension, leading to a final video that aligns with the prompt’s interaction requirements.

🔼 This figure shows how the iterative refinement process in the REDESIGN stage corrects errors in object quantity and motion direction. The REDESIGN agents use multiple iterations to adjust the guidance scales and alignment of objects, improving the generated video’s adherence to the text prompt. The figure visualizes this process and highlights the collaborative and iterative nature of the approach.

read the caption

(b) Visualization of the iterative refinement in correcting object quantity and motion direction. The Redesign agents adjust guidance scale and alignment over successive iterations, progressively enhancing adherence to the prompt.

🔼 This figure visualizes the multi-agent collaboration process in GENMAC through two examples. (a) shows how the agents iteratively refine a video of a rope pulling a boat, correcting initial misalignments in the interaction between the rope and boat. The agents iteratively refine the video through adjusting the bounding boxes to reflect the tension between the objects, achieving a final version that accurately reflects the prompt. (b) illustrates the iterative correction of object quantity and motion direction in a video of a car on the moon. The agents detect the mismatch, and through successive iterations of adjustment (bounding box position and guidance scale) refine the video to match the direction and quantity specified in the prompt.

read the caption

Figure A8: Visualization of the multi-agent collaboration.

🔼 The bar chart visualizes the number of corrections needed in each iteration of the GENMAC’s iterative refinement loop for different compositional aspects. It shows how many times adjustments were required to the generated video across various aspects of composition like attribute consistency, dynamic attributes, spatial relationships, etc., across multiple iterations.

read the caption

(a) The number of corrections.

🔼 This figure shows a breakdown of how different types of guidance (structured layout, guidance scale, and new text prompt) contribute to the overall video quality scores. The analysis is performed after both the Design and Redesign stages of the generation process. It illustrates the relative importance of each guidance type in achieving the final video quality, showing their respective percentage contributions.

read the caption

(b) The contribution (%) of different guidance types to the video scores with Design and Redesign stages.

🔼 This figure shows a pie chart that breaks down the contributions of different types of guidance (structured layout, guidance scale, and new text prompt) to the overall quality of generated videos, specifically focusing on the results achieved during the REDESIGN stage of the GENMAC process. It quantifies the influence each guidance type had on the video scores while only using the REDESIGN stage; excluding the DESIGN and GENERATION stages.

read the caption

(c) The contribution (%) of different guidance types to the video scores with only the Redesign stage.

🔼 Figure A9 shows the number of corrections made during each iteration of the GENMAC model’s refinement process, broken down by the type of guidance used (structured layout, guidance scale, new text prompt). It also displays the percentage contribution of each guidance type to the overall video quality scores. This allows for analysis of which guidance method is most effective and how their impact changes across the iterative refinement process.

read the caption

Figure A9: Illustration of the number of corrections and contributions (%) in T2V-CompBench of different guidance types: structured layout, guidance scale, and new text prompt.

🔼 This figure provides a qualitative comparison of video frames generated by different models (GENMAC, VideoCrafter2, CogVideoX, Gen-3, VideoTetris, Open-Sora-Plan) for three complex compositional prompts. Each row represents a different prompt and shows the outputs of each model for comparison. This illustrates the ability of the proposed method GENMAC to generate videos that accurately match the complex composition and dynamics specified in the prompts, outperforming other existing methods.

read the caption

Figure A10: More qualitative comparisons.

More on tables

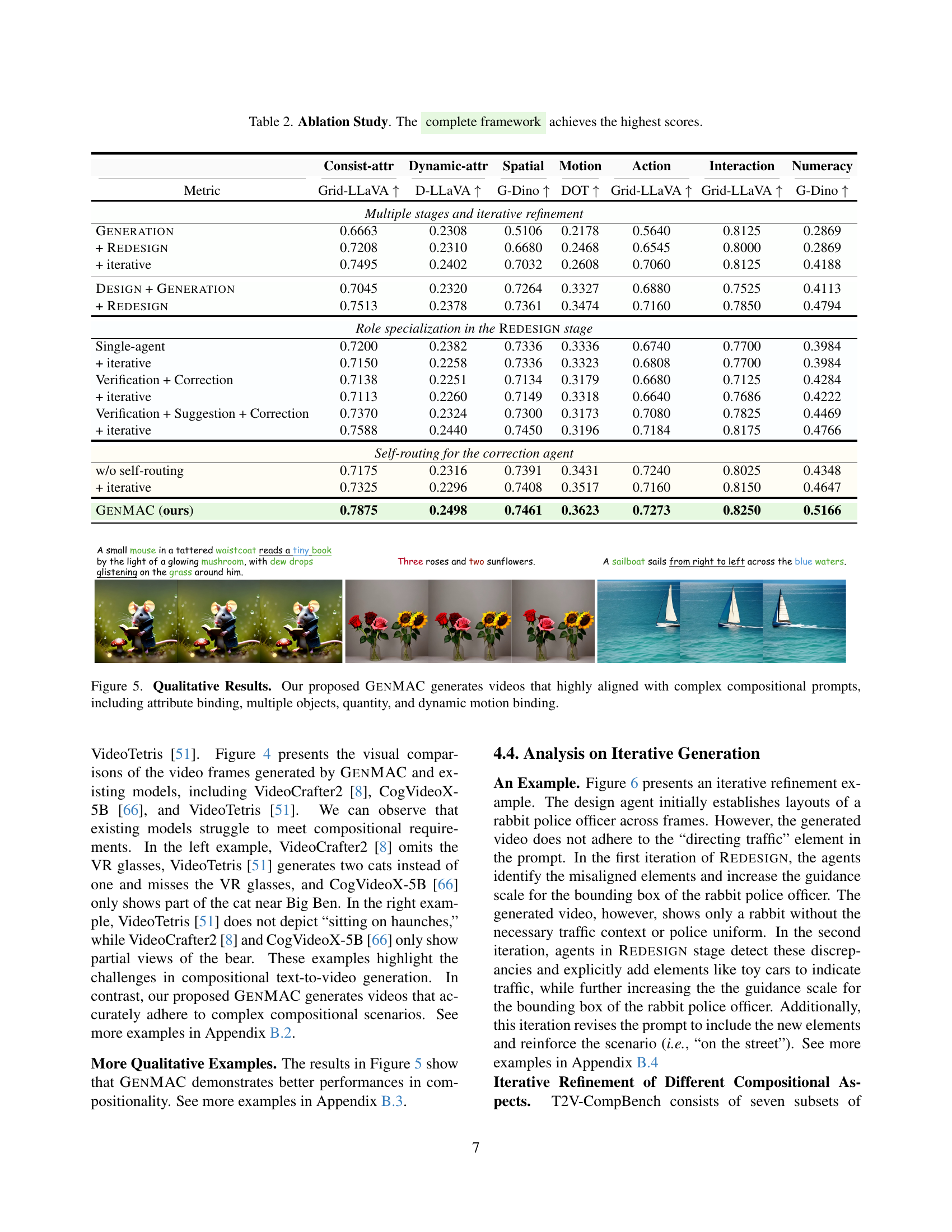

| Consist-attr | Dynamic-attr | Spatial | Motion | Action | Interaction | Numeracy | |

|---|---|---|---|---|---|---|---|

| Metric | Grid-LLaVA ↑ | D-LLaVA ↑ | G-Dino ↑ | DOT ↑ | Grid-LLaVA ↑ | Grid-LLaVA ↑ | G-Dino ↑ |

| Multiple stages and iterative refinement | |||||||

| Generation | 0.6663 | 0.2308 | 0.5106 | 0.2178 | 0.5640 | 0.8125 | 0.2869 |

| + Redesign | 0.7208 | 0.2310 | 0.6680 | 0.2468 | 0.6545 | 0.8000 | 0.2869 |

| + iterative | 0.7495 | 0.2402 | 0.7032 | 0.2608 | 0.7060 | 0.8125 | 0.4188 |

| Design + Generation | 0.7045 | 0.2320 | 0.7264 | 0.3327 | 0.6880 | 0.7525 | 0.4113 |

| + Redesign | 0.7513 | 0.2378 | 0.7361 | 0.3474 | 0.7160 | 0.7850 | 0.4794 |

| Role specialization in the Redesign stage | |||||||

| Single-agent | 0.7200 | 0.2382 | 0.7336 | 0.3336 | 0.6740 | 0.7700 | 0.3984 |

| + iterative | 0.7150 | 0.2258 | 0.7336 | 0.3323 | 0.6808 | 0.7700 | 0.3984 |

| Verification + Correction | 0.7138 | 0.2251 | 0.7134 | 0.3179 | 0.6680 | 0.7125 | 0.4284 |

| + iterative | 0.7113 | 0.2260 | 0.7149 | 0.3318 | 0.6640 | 0.7686 | 0.4222 |

| Verification + Suggestion + Correction | 0.7370 | 0.2324 | 0.7300 | 0.3173 | 0.7080 | 0.7825 | 0.4469 |

| + iterative | 0.7588 | 0.2440 | 0.7450 | 0.3196 | 0.7184 | 0.8175 | 0.4766 |

| Self-routing for the correction agent | |||||||

| w/o self-routing | 0.7175 | 0.2316 | 0.7391 | 0.3431 | 0.7240 | 0.8025 | 0.4348 |

| + iterative | 0.7325 | 0.2296 | 0.7408 | 0.3517 | 0.7160 | 0.8150 | 0.4647 |

| GenMAC (ours) | 0.7875 | 0.2498 | 0.7461 | 0.3623 | 0.7273 | 0.8250 | 0.5166 |

🔼 This ablation study analyzes the contribution of different components of the GENMAC framework to its overall performance on the T2V-CompBench benchmark. It compares the full model against versions with fewer components or stages (e.g., removing the REDESIGN stage, removing role specialization, or removing the self-routing mechanism) to demonstrate the effectiveness of each part of the proposed architecture. The results, measured across seven compositional aspects (consistent and dynamic attribute binding, spatial relationships, motion binding, action binding, object interactions, and generative numeracy), show that the complete GENMAC framework achieves the highest scores, highlighting the synergistic effects of the multiple stages and agents.

read the caption

Table 2: Ablation Study. The complete framework achieves the highest scores.

| Design Stage | |

|---|---|

| Design | Reasoning: The car is driving from right to left, so its x-coordinate should decrease while its y-coordinate remains constant. The moon’s surface is flat and has low gravity, so the car’s movement will be smooth and consistent. Frame 1: [ {’id’: 0, ’name’: ’car’, ’box’: [400, 350, 100, 50]}] Frame 2: [ {’id’: 0, ’name’: ’car’, ’box’: [320, 350, 100, 50]}] Frame 3: [ {’id’: 0, ’name’: ’car’, ’box’: [240, 350, 100, 50]}] Frame 4: [ {’id’: 0, ’name’: ’car’, ’box’: [160, 350, 100, 50]}] Frame 5: [ {’id’: 0, ’name’: ’car’, ’box’: [80, 350, 100, 50]}] Frame 6: [ {’id’: 0, ’name’: ’car’, ’box’: [0, 350, 100, 50]}] Background keyword: moon Generation suggestion: None New prompt: A car drives from right to left on the moon’s surface. |

| Redesign Stage | iter 1 |

| verification agent | |

| Verification | The alignment check reveals the following issues: 1. Quantity of Objects: There are two cars present in the video instead of one. 2. Motion Direction: The cars are not clearly shown moving from right to left. 3. Correctness of Attributes: The cars appear to be on the moon, which aligns with the prompt. Overall, there is a mismatch in the quantity of objects and the motion direction. |

| suggestion agent | |

| Suggestion | 1. Suggest corrections for the bounding boxes: - Remove one of the cars to correct the quantity issue. - Ensure the remaining car is clearly shown moving from right to left by adjusting its position across frames to depict motion. 2. Choose the suitable correction agent: B1. (correction agent for spatial dynamics) |

| correction agent | |

| Correction | The correction suggestion indicates that one of the cars should be removed to correct the quantity issue. The remaining car should be shown moving from right to left, which aligns with the previous bounding boxes. Here are the corrected bounding boxes: Corrected Bounding Boxes: - Frame 1: [’id’: 0, ’name’: ’car’, ’box’: [400, 350, 100, 50]] - Frame 2: [’id’: 0, ’name’: ’car’, ’box’: [320, 350, 100, 50]] - Frame 3: [’id’: 0, ’name’: ’car’, ’box’: [240, 350, 100, 50]] - Frame 4: [’id’: 0, ’name’: ’car’, ’box’: [160, 350, 100, 50]] - Frame 5: [’id’: 0, ’name’: ’car’, ’box’: [80, 350, 100, 50]] - Frame 6: [’id’: 0, ’name’: ’car’, ’box’: [0, 350, 100, 50]] Explanation: The bounding boxes remain the same as the previous ones, as they already depict the car moving from right to left. The suggestion to generation is to emphasize the movement of the single car across the frames. |

| output structuring agent | |

| Output | Reasoning: The sequence depicts a single car moving from right to left across the frames. Initially, the car is positioned on the right side of the frame and gradually moves to the left, maintaining a consistent y-coordinate, which aligns with the prompt of a car driving on the moon. Frame 1: [ {’id’: 0, ’name’: ’car’, ’box’: [400, 350, 100, 50]}] Frame 2: [ {’id’: 0, ’name’: ’car’, ’box’: [320, 350, 100, 50]}] Frame 3: [ {’id’: 0, ’name’: ’car’, ’box’: [240, 350, 100, 50]}] Frame 4: [ {’id’: 0, ’name’: ’car’, ’box’: [160, 350, 100, 50]}] Frame 5: [ {’id’: 0, ’name’: ’car’, ’box’: [80, 350, 100, 50]}] Frame 6: [ {’id’: 0, ’name’: ’car’, ’box’: [0, 350, 100, 50]}] Background keyword: moon Generation suggestion: emphasize id 0 New prompt: A car driving right to left on the moon. |

| iter 2 | |

| verification agent | |

| Verification | The alignment check reveals the following issues: Direction of Motion: The car is moving from left to right, which is opposite to the prompt’s description of right to left. Existence of Specified Objects: The car and the moon surface are present, which aligns with the prompt. Quantity of Objects: The single car is correctly depicted. Correctness of Object Attributes: The car and the lunar surface appear as expected. Overall, the main issue is the direction of the car’s movement. Alignment Check Result: The video does not fully align with the prompt due to the incorrect motion direction of the car. |

| suggestion agent | |

| Suggestion | 1. Suggest corrections for the bounding boxes: Adjust the motion path of the car to reflect a right-to-left direction instead of left-to-right. 2. Choose the suitable correction agent: B1. (correction agent for spatial dynamics) |

| correction agent | |

| Correction | Based on the correction suggestion, the bounding boxes need to be adjusted to reflect the car moving from right to left. Here are the corrected bounding boxes: Corrected Bounding Boxes: - Frame 1: [’id’: 0, ’name’: ’car’, ’box’: [400, 350, 100, 50]] - Frame 2: [’id’: 0, ’name’: ’car’, ’box’: [320, 350, 100, 50]] - Frame 3: [’id’: 0, ’name’: ’car’, ’box’: [240, 350, 100, 50]]- Frame 4: [’id’: 0, ’name’: ’car’, ’box’: [160, 350, 100, 50]] - Frame 5: [’id’: 0, ’name’: ’car’, ’box’: [80, 350, 100, 50]]- Frame 6: [’id’: 0, ’name’: ’car’, ’box’: [0, 350, 100, 50]] Comparison and Suggestion: The corrected bounding boxes are the same as the previous ones, indicating that the car is already moving from right to left as intended. Suggestion to Generation: Emphasize the car (id 0) to ensure it stands out against the moon background. |

| output structuring agent | |

| Output | Reasoning: The sequence depicts a car moving from right to left across the frames. The car’s x-coordinate decreases consistently, indicating its movement from right to left as described in the prompt. The background is the moon, providing a unique setting for the car’s journey. Frame 1: [ {’id’: 0, ’name’: ’car’, ’box’: [400, 350, 100, 50]}] Frame 2: [ {’id’: 0, ’name’: ’car’, ’box’: [320, 350, 100, 50]}] Frame 3: [ {’id’: 0, ’name’: ’car’, ’box’: [240, 350, 100, 50]}] Frame 4: [ {’id’: 0, ’name’: ’car’, ’box’: [160, 350, 100, 50]}] Frame 5: [ {’id’: 0, ’name’: ’car’, ’box’: [80, 350, 100, 50]}] Frame 6: [ {’id’: 0, ’name’: ’car’, ’box’: [0, 350, 100, 50]}] Background keyword: moon Generation Suggestion : emphasize id 0. New Prompt: A car driving right to left on the moon. |

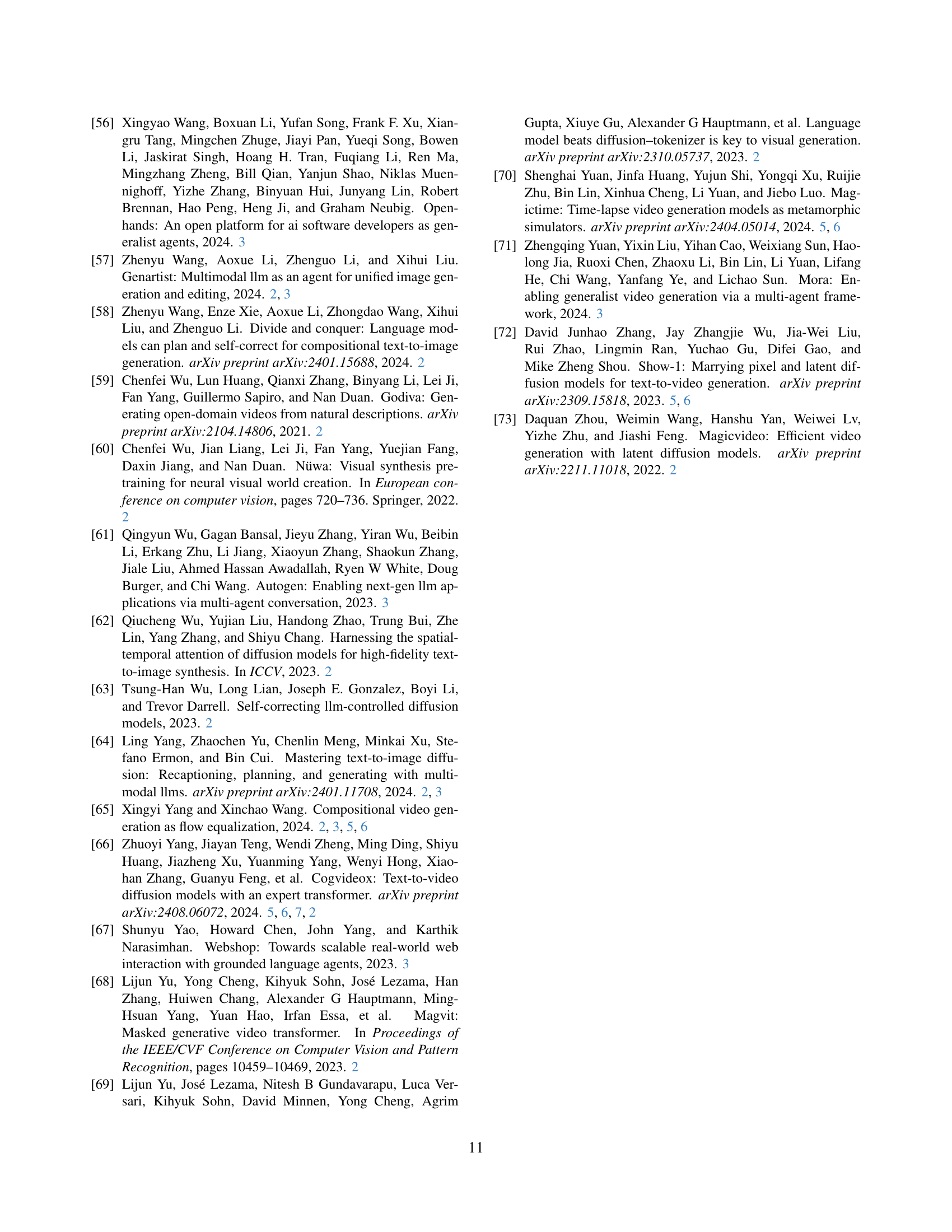

🔼 This table details the iterative process of the GENMAC model generating a video based on the prompt ‘A car driving right to left on the moon.’ It shows the workflow across three stages (DESIGN, GENERATION, REDESIGN), illustrating how different agents collaborate to refine the video based on verification and suggestion steps. Each stage and sub-task within the REDESIGN stage are documented with reasoning texts, execution instructions, and structured outputs in JSON format for each iteration. It demonstrates the process of correcting misalignments in the video, such as issues with the number of cars and their movement direction, using bounding box adjustments and prompt revisions.

read the caption

Table A3: Our full example for Figure A13, with the prompt “A car driving right to left on the moon”.

| Reasoning | Description |

|---|---|

| The car is driving from right to left, so its x-coordinate should decrease while its y-coordinate remains constant. | |

| The moon’s surface is flat and has low gravity, so the car’s movement will be smooth and consistent. | |

| Frame 1: [{’id’: 0, ’name’: ’car’, ’box’: [400, 350, 100, 50]}] | |

| Frame 2: [{’id’: 0, ’name’: ’car’, ’box’: [320, 350, 100, 50]}] | |

| Frame 3: [{’id’: 0, ’name’: ’car’, ’box’: [240, 350, 100, 50]}] | |

| Frame 4: [{’id’: 0, ’name’: ’car’, ’box’: [160, 350, 100, 50]}] | |

| Frame 5: [{’id’: 0, ’name’: ’car’, ’box’: [80, 350, 100, 50]}] | |

| Frame 6: [{’id’: 0, ’name’: ’car’, ’box’: [0, 350, 100, 50]}] | |

| Background keyword: moon | |

| Generation suggestion: None | |

| New prompt: A car drives from right to left on the moon’s surface. |

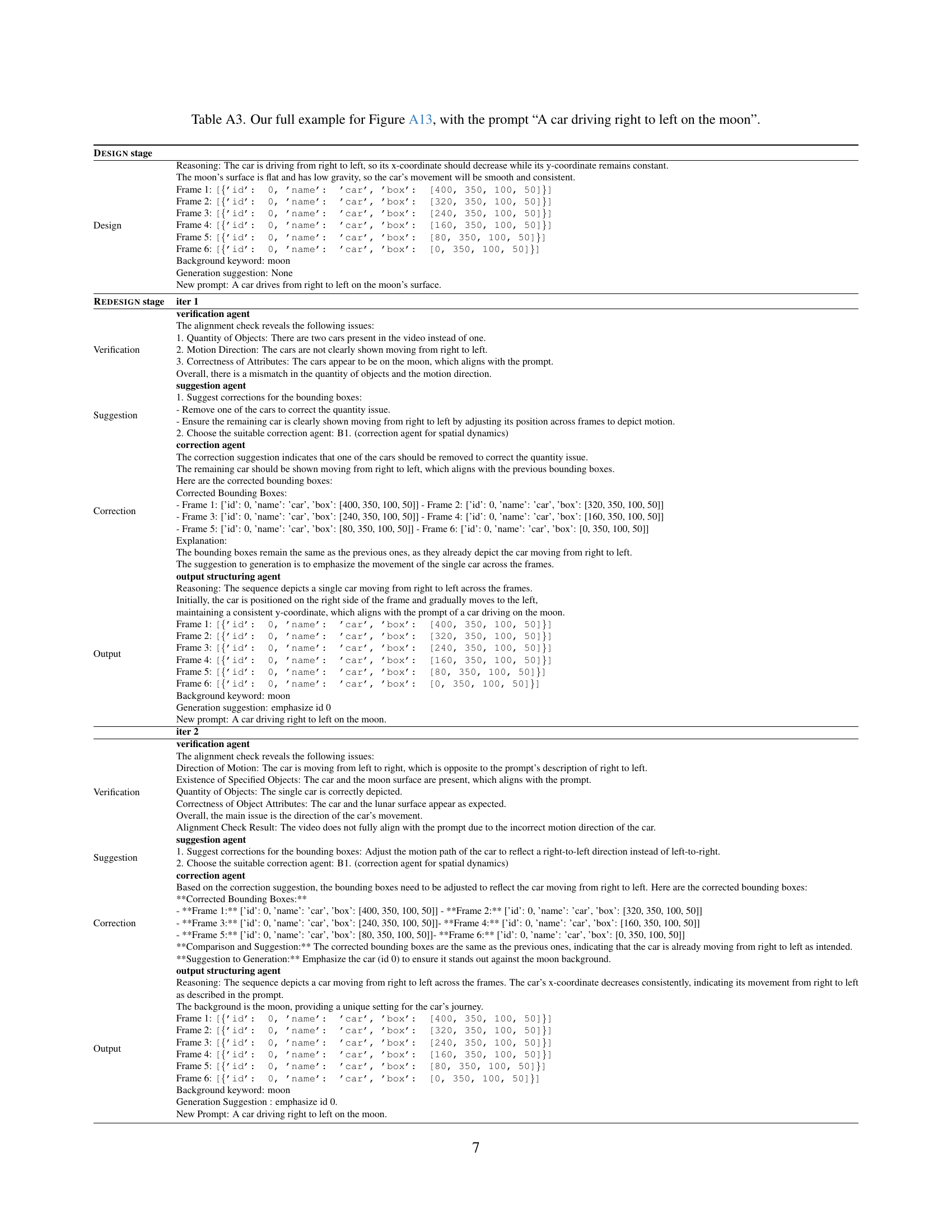

🔼 This table details the iterative process of the GENMAC model generating a video based on the prompt ‘Rabbit police officer directs traffic.’ It breaks down the stages (DESIGN, REDESIGN iterations 1 and 2) and shows the reasoning, verification, suggestions, corrections, and output of each agent (verification, suggestion, correction, output structuring) at each step. The table displays how the model progressively refines the video by adjusting bounding boxes, adding elements (toy cars), and clarifying prompts to ensure alignment with the initial request. This showcases the multi-agent collaborative approach and iterative refinement process central to the GENMAC framework.

read the caption

Table A4: Our full example for Figure 6 in the main paper, with the prompt “Rabbit police officer directs traffic”.

Full paper#