↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Current dialogue systems lack comprehensive evaluation due to limited benchmarks focusing on a few aspects of dialogues. Existing datasets inadequately cover the complete dialogue lifecycle (Prelude, Interlocution, Epilogue) and neglect crucial elements like goals, personas, and scenes. This hinders precise modeling and systematic evaluation.

To address this, the paper proposes a novel benchmark, DEMO, with a new research task: Dialogue Element Modeling. This includes Element Awareness (reverse-engineering dialogue elements) and Dialogue Agent Interaction (goal-directed multi-turn dialogue). The researchers also develop a DEMO agent using imitation learning, achieving superior performance to existing LLMs, especially in modeling nuanced dialogue elements. DEMO provides a more complete evaluation framework, moving the field towards more comprehensive dialogue models.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in dialogue systems because it introduces a novel benchmark, DEMO, and a new research task, Dialogue Element Modeling. This addresses the current limitations of existing datasets and models by providing a more comprehensive evaluation framework. The DEMO agent, trained using imitation learning, shows significant improvement, highlighting the potential for enhancing LLMs’ dialogue capabilities. This opens avenues for further research into more nuanced and human-like dialogue systems.

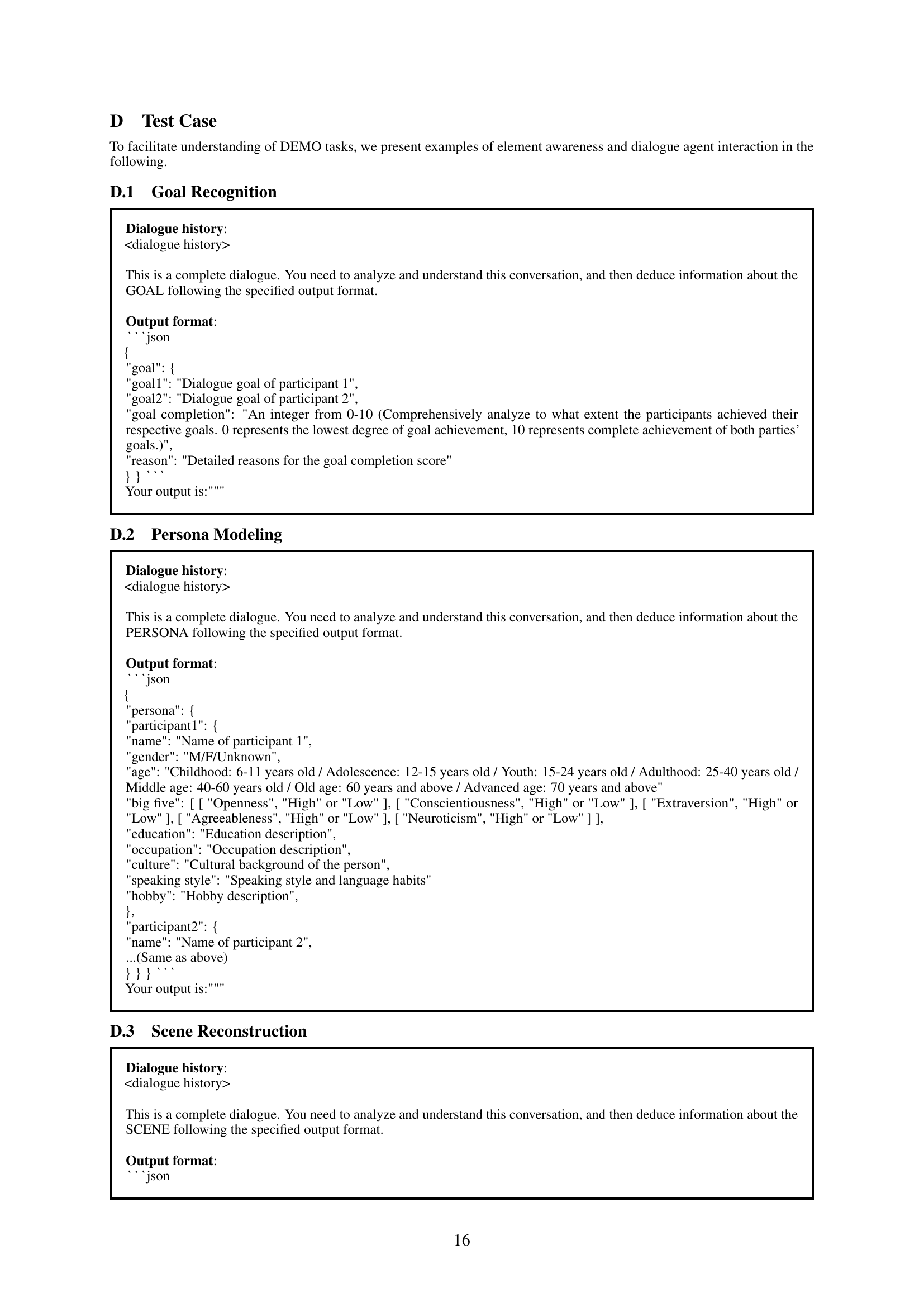

Visual Insights#

🔼 This figure illustrates the Dialogue Element Modeling task, which consists of two main parts: Element Awareness and Dialogue Agent Interaction. Element Awareness focuses on identifying key elements within a dialogue, such as the goal, persona, scene, and utterance. Dialogue Agent Interaction involves simulating a multi-turn dialogue between agents, where each agent’s actions are guided by their understanding of these elements. The figure shows how these elements are structured across the three stages of a complete dialogue: the Prelude (setting the scene and goals), the Interlocution (the main exchange), and the Epilogue (summarizing and concluding). The interplay between the dialogue and its constituent elements is also visually represented.

read the caption

Figure 1: Overview of task: Dialogue Element Modeling, which focuses on two main aspects: Element Awareness and Dialogue Agent Interaction. We have formulated the comprehensive elements of the Prelude, Interlocution, and Epilogue stages of a complete dialogue.

| Data | Goal | Scene | Persona | Utterance | Dialogue | Analysis | Generation | Multilingual |

|---|---|---|---|---|---|---|---|---|

| DialyDialog [Li et al., 2017] | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ |

| DialogSum [Chen et al., 2021] | ✗ | ✗ | ✗ | ✗ | ✓ | ✓ | ✗ | ✗ |

| SODA [Kim et al., 2023a] | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ |

| CharacterGLM [Zhou et al., 2023a] | ✗ | ✓ | ✓ | ✗ | ✓ | ✗ | ✓ | ✗ |

| Persona-Chat [Jandaghi et al., 2023] | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✓ | ✗ |

| SOTOPIA [Zhou et al., 2024c] | ✓ | ✓ | ✓ | ✗ | ✗ | ✗ | ✓ | ✗ |

| Ditto [Lu et al., 2024] | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ | ✓ | ✓ |

| DEMO (Ours) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

🔼 This table compares the characteristics of the proposed DEMO benchmark with existing dialogue datasets. It highlights DEMO’s comprehensiveness in covering various dialogue elements (goal, scene, persona, utterance) and tasks (dialogue analysis, generation, multilingual support). Unlike many existing datasets that focus on a subset of these aspects, DEMO offers a more holistic approach, including both English and Chinese languages.

read the caption

Table 1: The overview of our DEMO’s characteristics in comparison to those in the related work. DEMO encompasses comprehensive dialogue elements and tasks applicable to both English and Chinese.

In-depth insights#

Dialogue Element Modeling#

The concept of “Dialogue Element Modeling” presents a novel approach to understanding and improving dialogue systems. It moves beyond simply generating coherent text by focusing on the fine-grained elements that constitute a conversation, such as goals, personas, scenes, and utterances. This granular analysis allows for a more nuanced understanding of dialogue dynamics. By explicitly modeling these elements, systems can become more contextually aware and generate responses that are not only relevant but also reflect an understanding of the conversational participants and their objectives. A key strength is the ability to assess model performance through the lens of these individual elements, providing more precise feedback for model improvement. The task’s multifaceted nature addresses significant challenges in current dialogue systems, setting a higher standard for evaluation and ultimately leading to more natural and engaging human-computer interactions. Comprehensive benchmarking through a well-defined dataset and evaluation metrics is crucial for assessing progress in this field. The research promises significant advances in the development of sophisticated, context-aware conversational agents.

DEMO Benchmark#

The heading ‘DEMO Benchmark’ suggests a comprehensive evaluation framework for dialogue element modeling. It implies a new benchmark dataset, DEMO, designed to thoroughly assess the capabilities of Large Language Models (LLMs) in understanding and generating dialogue. The benchmark likely evaluates LLMs across various dimensions: element awareness (identifying goals, personas, scenes, and utterances) and dialogue agent interaction (multi-turn dialogue modeling driven by elements). This indicates that DEMO likely goes beyond simple dialogue generation and focuses on nuances within interactions. A successful benchmark would provide quantitative metrics for performance evaluation and reveal the strengths and weaknesses of current LLMs. Furthermore, it could highlight opportunities for future improvements in dialogue systems by identifying areas where models struggle to grasp complex conversational elements. Ultimately, DEMO’s value lies in its potential to advance dialogue system research, helping researchers to develop more sophisticated, robust, and human-like conversational AI.

Agent Training#

The agent training methodology detailed in this research is noteworthy for its reliance on imitation learning, a technique that leverages expert demonstrations to train a model. This approach is particularly relevant given the complexity of dialogue element modeling, where nuanced understanding and effective interaction are crucial. By utilizing expert LLMs, the researchers effectively bypass the need for extensive manual annotation, a significant advantage in terms of efficiency and scalability. The use of a multi-dimensional reward framework, combined with rigorous quality control measures, further enhances the effectiveness of the training process, ensuring that the resulting agent possesses a robust and nuanced understanding of dialogue elements. The evaluation across in-domain and out-of-domain tasks showcases the agent’s superior generalization abilities, adding to the practical implications of the developed methodology. The method’s effectiveness, as demonstrated by outperforming existing LLMs, highlights its potential as a valuable contribution for future research in dialogue system development.

Catastrophic Forgetting#

Catastrophic forgetting, a significant challenge in machine learning, is the tendency of a model to forget previously learned information when trained on new data. This is particularly relevant in the context of lifelong learning or incremental learning, where models are expected to continuously learn and adapt throughout their lifespan. In the research paper’s context of Dialogue Element Modeling, catastrophic forgetting would mean that as the model learns new dialogue elements or interaction strategies, it might lose its ability to accurately model previously learned ones. This is critical because dialogue is inherently sequential and context-dependent. A system unable to retain previous information loses its ability to understand and generate contextually appropriate responses. Therefore, mitigating catastrophic forgetting is essential to developing robust and effective dialogue systems that can continually adapt to new conversational situations and user interaction patterns without losing performance on earlier learned tasks. Strategies for addressing this could include techniques like regularization, rehearsal (re-introducing old data during new training), or employing more sophisticated memory mechanisms in the model architecture to retain past knowledge. Further research is needed to investigate the extent of catastrophic forgetting in dialogue models and to develop effective mitigation techniques to enhance their robustness and ability to engage in meaningful and evolving conversations.

Future Work#

Future work in dialogue element modeling should prioritize expanding the DEMO benchmark to encompass a wider range of dialogue scenarios and languages. Improving the robustness and generalizability of the models is critical, particularly in handling noisy or ambiguous data. Addressing the limitations of current LLMs in accurately modeling complex social dynamics and nuanced elements within dialogues remains vital. Investigating novel training methodologies is key. Exploring the use of reinforcement learning or more sophisticated imitation learning techniques could significantly enhance performance. Furthermore, research into more efficient and explainable dialogue element modeling approaches is needed. Developing methods to better assess the social and ethical implications of such models, as well as ensuring fairness and inclusivity, will be essential for their responsible deployment. Finally, exploring the integration of multi-modal inputs (e.g., incorporating visual or audio information) could create more immersive and realistic dialogue systems.

More visual insights#

More on figures

🔼 This figure illustrates the five-step synthesis framework used to create the DEMO benchmark dataset for dialogue element modeling. The steps are: 1) Goal and Scene Distillation: extracts goals and scenes from a large dialogue corpus. 2) Persona Design: generates diverse personas with detailed attributes. 3) Conflict Assessment: evaluates the coherence of goal, scene, and persona combinations. 4) Dialogue Generation: generates dialogues based on the approved combinations. 5) Quality Control: ensures the quality of the generated data through multiple checks. The diagram also shows how the DEMO agent is trained using imitation learning from an expert LLM.

read the caption

Figure 2: Overview of our DEMO synthesis framework, which consists of five steps: (1) Goal and Scene Distillation, (2) Persona Design, (3) Conflict Assessment, (4) Dialogue Generation, (5) Quality Control. The process of DEMO Agent train is also shown in this figure.

🔼 This figure presents a comprehensive overview of the dialogue elements considered in the DEMO benchmark. It details the structure of a complete dialogue, dividing it into three key stages: Prelude, Interlocution, and Epilogue. Each stage comprises various elements such as goal, scene, persona, and utterance, which are meticulously analyzed in the DEMO task. The Prelude sets the context with elements like the goal and persona of each participant, along with the overall scene. Interlocution focuses on the exchange of utterances, incorporating the intent and sentiment expressed. Finally, the Epilogue summarizes the entire dialogue, assessing goal achievement and the overall information flow. This figure serves to illustrate the complexity of dialogue and the comprehensive nature of the proposed DEMO evaluation framework.

read the caption

Figure 3: Overview of the system of dialogue elements.

More on tables

| Goal |

|---|

🔼 Table 2 presents a comprehensive evaluation of various Large Language Models (LLMs) on the Dialogue Element Modeling benchmark (DEMO). The table shows each model’s performance across four Element Awareness subtasks (Goal Recognition, Persona Modeling, Scene Reconstruction, and Utterance Mining), and four Dialogue Agent Interaction dimensions (Goal Achievement, Believability, Skillfulness, and Realism). The highest score for each task/dimension is shown in bold, the second-highest is underlined. Improvements over a baseline model are indicated using Δ values. The overall score is the average of all eight tasks/dimensions, providing a holistic view of each LLM’s performance on the DEMO benchmark.

read the caption

Table 2: The results of various LLMs on the DEMO. The highest score among different LLMs is highlighted in bold, and the second highest is underlined. And ΔΔ\Deltaroman_Δ values represent the improvement over the baseline. Element Awareness has four tasks:(1) Goa: Goal Recognition, (2) Per: Persona Modeling, (3) Sce: Scene Reconstruction, and (4) Utt: Utterance Mining. Dialogue Agent Interaction assesses the performance from four dimensions: (1) Goa: Goal Achievement, (2) Bel: Believability,(3) Ski: Skillfulness,(4) Rea: Realistic. Overall: The overall score is the average of the element awareness and dialogue agent interaction.

| Scene |

|---|

🔼 This table presents the inter-annotator agreement results, calculated using Cohen’s Kappa statistic, to evaluate the consistency between LLM-generated annotations and human annotations for various dialogue elements. The elements assessed include Goal, Persona, Scene, and Utterance, reflecting different aspects of dialogue understanding. The Kappa values quantify the level of agreement between the automated LLM assessment and manual human evaluation for each element, providing a measure of the reliability of the LLM-generated annotations.

read the caption

Table 3: The Kappa consistency results between LLM annotations and two human raters on different elements

| Persona |

|---|

🔼 Table 4 presents the performance of different models on SOTOPIA’s challenging scenarios. SOTOPIA assesses social intelligence across seven dimensions: believability, relationship management, knowledge application, handling secrets, adherence to social rules, understanding financial and material benefits, and goal achievement. Each model’s performance is evaluated on each of these seven dimensions, with GPT-4o providing the scores. The overall score represents the average performance across all dimensions, providing a comprehensive measure of social intelligence. Higher scores indicate better performance in socially intelligent interactions.

read the caption

Table 4: Evaluation results on SOTOPIA hard episodes, which scored from seven social dimensions: believability (BEL), relationship (REL), knowledge (KNO), secret (SEC), social rules (SOC), financial and material benefits (FIN), and goal completion (GOA). The overall score is the average of the seven social dimensions reflecting the overall social intelligence. GPT-4o rates each dimension.

| Utterance |

|---|

🔼 This table presents the results of evaluating the general capabilities and alignment of several large language models (LLMs). The evaluation uses accuracy scores derived from the MMLU and HHH benchmarks, which test the models’ performance across various tasks and their adherence to safety guidelines, respectively. This allows for comparison of the models’ overall capabilities beyond the specific dialogue modeling tasks explored elsewhere in the paper. Higher accuracy scores indicate better performance on these general language understanding and alignment assessments.

read the caption

Table 5: The evaluation results of the LLM’s general capability and alignment performance, using the accuracy score.

| Dialogue |

|---|

🔼 This table lists the specific versions of the large language models (LLMs) used in the experiments. For each model (e.g., GPT-4, Claude, Llama, Qwen), the exact version number and whether it was accessed via an API or a local version (VLLM) are provided. This detail is crucial for reproducibility of the experiments.

read the caption

Table 6: The detailed versions of our used LLMs.

Full paper#