TL;DR

The development of large language models (LLMs) has been dominated by proprietary models, raising concerns about transparency and access. Open-source LLMs exist, but many omit crucial components such as training data and code, which hinders further research and innovation. This limits researchers' ability to build on existing work and slows progress in the field.

To address this, the authors developed Moxin 7B, a fully open-source LLM that adheres to the Model Openness Framework (MOF). All components are publicly available, including code, data, and intermediate checkpoints. In benchmark evaluations, Moxin 7B outperforms existing open-source models in the zero-shot setting and performs competitively in the few-shot setting. These results make it a useful contribution to reproducible research and to collaboration within the open-source AI community.

Key Takeaways

Why does it matter?

This paper introduces Moxin 7B, a fully open-source LLM that addresses the lack of transparency and reproducibility in LLM research. Because it adheres to the Model Openness Framework (MOF), it gives researchers a model they can inspect, customize, and deploy more easily. Its superior zero-shot performance compared with other 7B models adds to its significance. The work also promotes open-science practices and encourages further innovation within the open-source LLM community.

Visual Insights

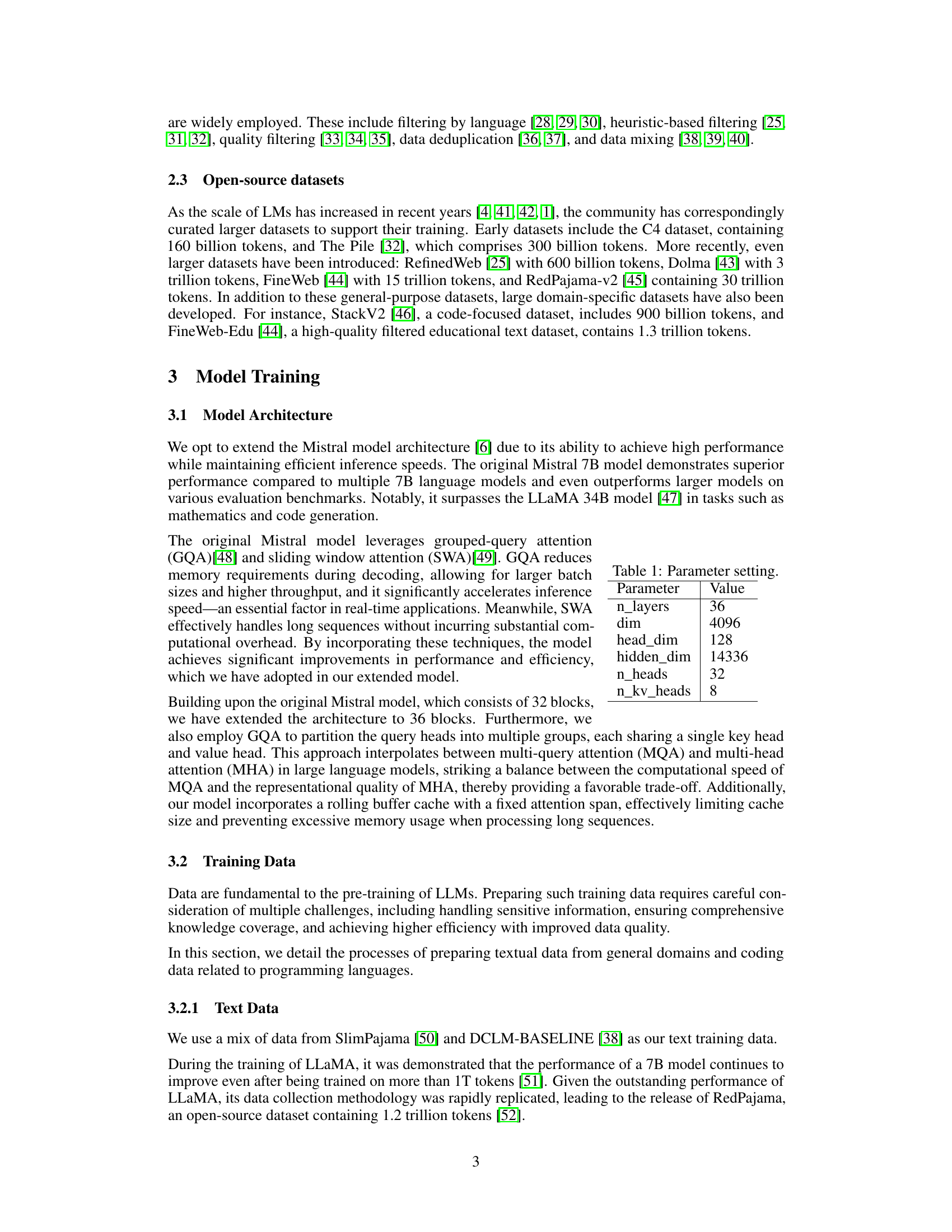

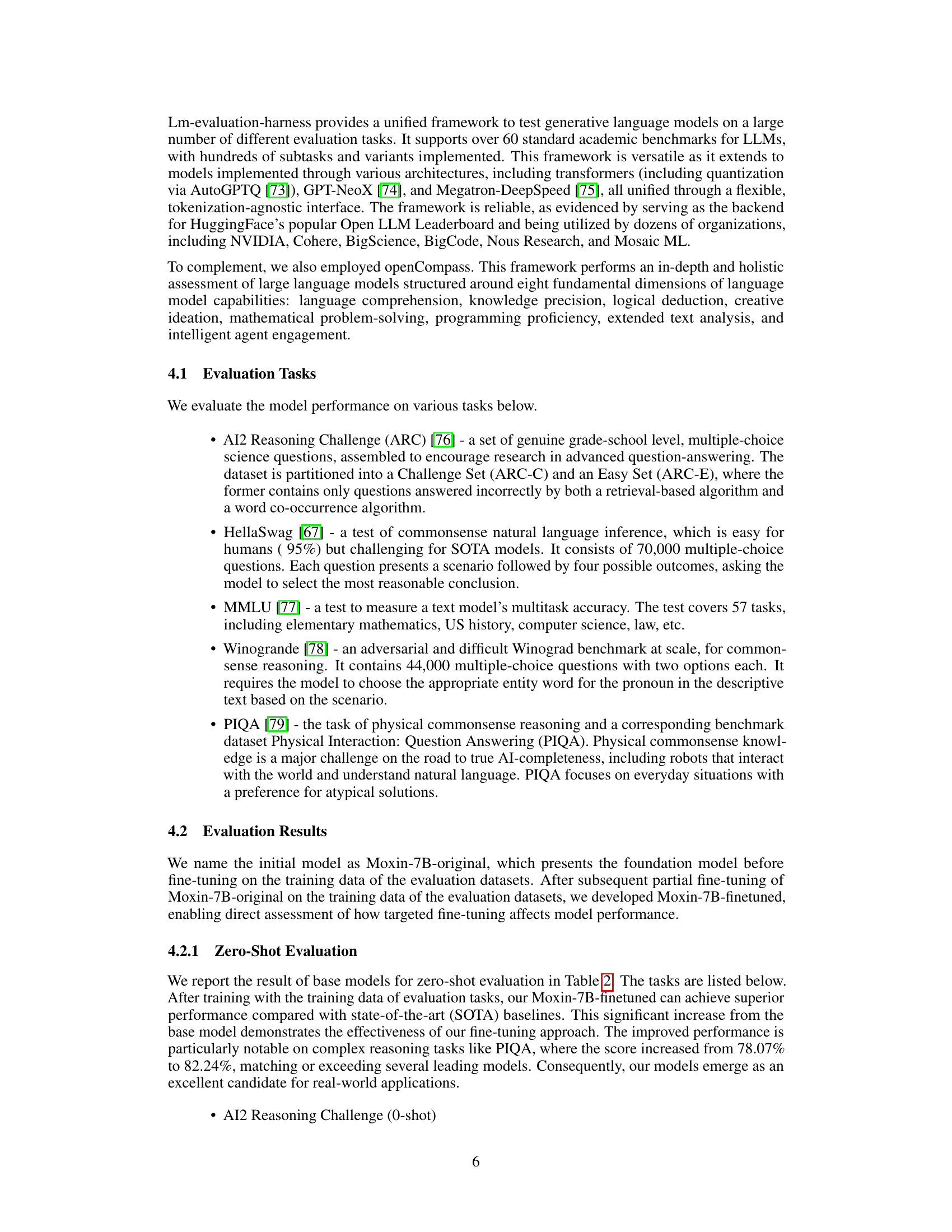

| Parameter | Value |
|---|---|
| n_layers | 36 |
| dim | 4096 |
| head_dim | 128 |
| hidden_dim | 14336 |
| n_heads | 32 |
| n_kv_heads | 8 |

This table lists the architectural hyperparameters of the Moxin 7B model: the number of layers (n_layers), the model dimension (dim), the per-head dimension (head_dim), the hidden dimension (hidden_dim), the number of attention heads (n_heads), and the number of key-value heads (n_kv_heads). Together these values determine the model's size and computational requirements.

Table 1: Parameter setting.
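To make the link between Table 1 and model size concrete, here is a minimal sketch that estimates a parameter count from these hyperparameters. It rests on assumptions that the table does not state: a decoder-only transformer with grouped-query attention, a three-matrix gated feed-forward block, bias-free linear layers, two normalization weight vectors per layer, untied input and output embeddings, and a vocabulary size of 32,000. The class and function names (`ModelArgs`, `attention_params`, `mlp_params`, `total_params`) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelArgs:
    # Values taken from Table 1.
    n_layers: int = 36
    dim: int = 4096
    head_dim: int = 128
    hidden_dim: int = 14336
    n_heads: int = 32
    n_kv_heads: int = 8
    # ASSUMPTION: vocabulary size is not given in Table 1.
    vocab_size: int = 32000


def attention_params(a: ModelArgs) -> int:
    """Weights of one attention block, assuming grouped-query attention, no biases."""
    q = a.dim * a.n_heads * a.head_dim      # query projection
    k = a.dim * a.n_kv_heads * a.head_dim   # key projection (shared across head groups)
    v = a.dim * a.n_kv_heads * a.head_dim   # value projection
    o = a.n_heads * a.head_dim * a.dim      # output projection
    return q + k + v + o


def mlp_params(a: ModelArgs) -> int:
    """Weights of one feed-forward block, assuming a gated design with three matrices."""
    return 3 * a.dim * a.hidden_dim


def total_params(a: ModelArgs) -> int:
    """Estimated total, assuming untied embeddings and two norm vectors per layer."""
    per_layer = attention_params(a) + mlp_params(a) + 2 * a.dim
    embeddings = 2 * a.vocab_size * a.dim   # input embedding + output head
    final_norm = a.dim
    return a.n_layers * per_layer + embeddings + final_norm


if __name__ == "__main__":
    args = ModelArgs()
    print(f"query heads per key-value head: {args.n_heads // args.n_kv_heads}")
    print(f"attention params per layer:     {attention_params(args):,}")
    print(f"feed-forward params per layer:  {mlp_params(args):,}")
    print(f"estimated total params:         {total_params(args):,}")
```

Under these assumptions the estimate comes to roughly 8.1 billion weights, most of them in the feed-forward blocks. With 32 query heads and 8 key-value heads, each key-value head would serve 4 query heads, which shrinks the key and value projections relative to full multi-head attention.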

Full paper