↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Current text-to-image models, while impressive, often require extensive task-specific training and struggle with complex, multi-stage visual generation tasks like creating picture books or interleaved text-image articles. Existing solutions, like adapters or unified models, haven’t fully cracked the code for stable, general-purpose zero-shot generation and often falter with unseen tasks. These approaches also often underutilize the wealth of publicly available task-agnostic data.

This paper introduces ChatDiT, a novel framework leveraging the inherent zero-shot capabilities of pre-trained diffusion transformers (DiTs). It requires no additional training or modifications. Instead, it uses a clever multi-agent system: an Instruction-Parsing agent to understand user instructions and images, a Strategy-Planning agent to devise generation steps, and an Execution agent to perform actions using a toolkit of DiTs. This enables users to interact with the system via natural language for tasks ranging from image edits to multi-page illustrations. Evaluation on IDEA-Bench reveals ChatDiT outperforms existing models in zero-shot generation, showcasing DiTs’ potential.

Key Takeaways#

Why does it matter?#

ChatDiT introduces a novel approach to zero-shot image generation, pushing boundaries in leveraging pre-trained DiTs. Its multi-agent, conversational design offers valuable insights into building interactive visual generation systems. By releasing their code and results, the researchers promote further study of DiTs’ capabilities, particularly for complex tasks where current models fall short. This research opens avenues for creating more flexible and user-friendly image generation tools.

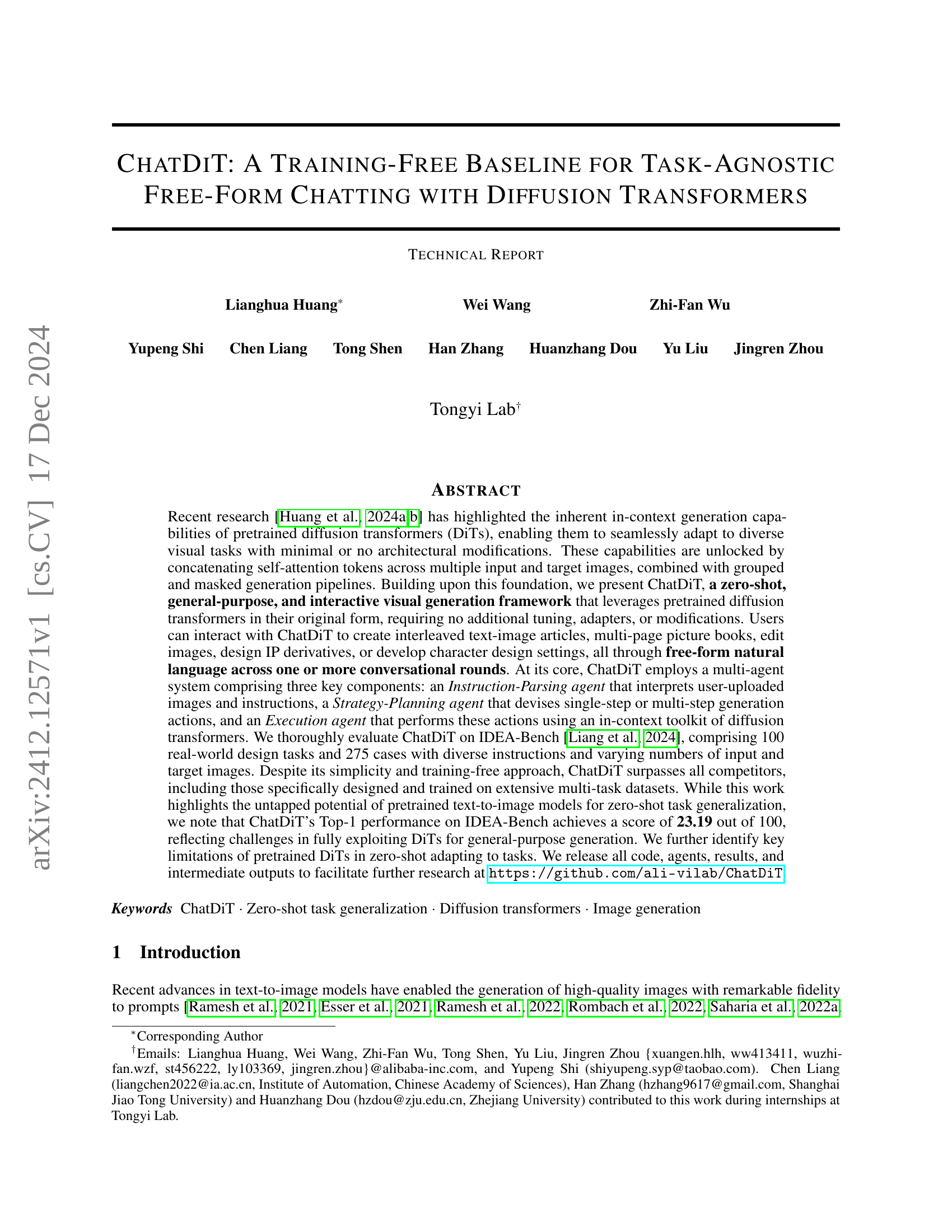

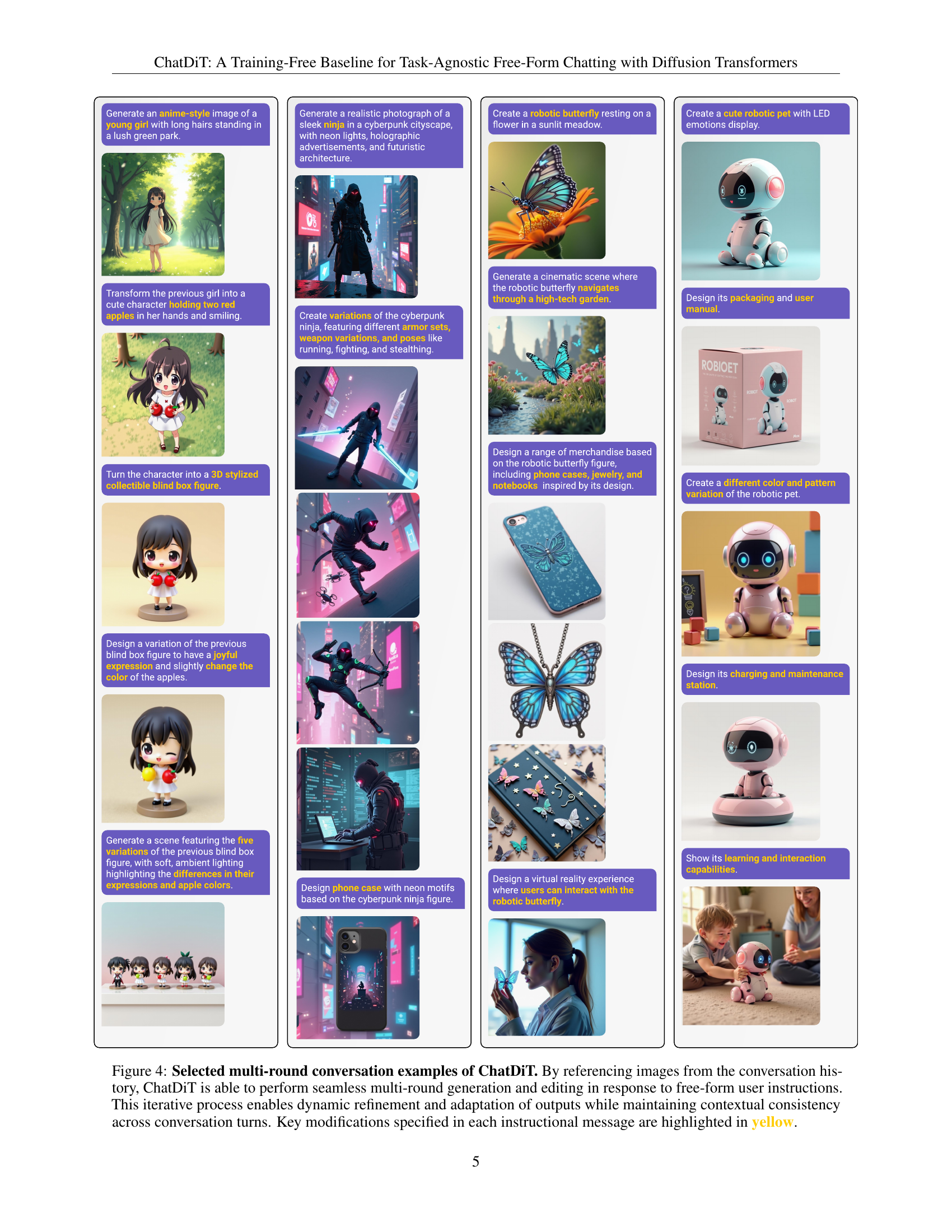

Visual Insights#

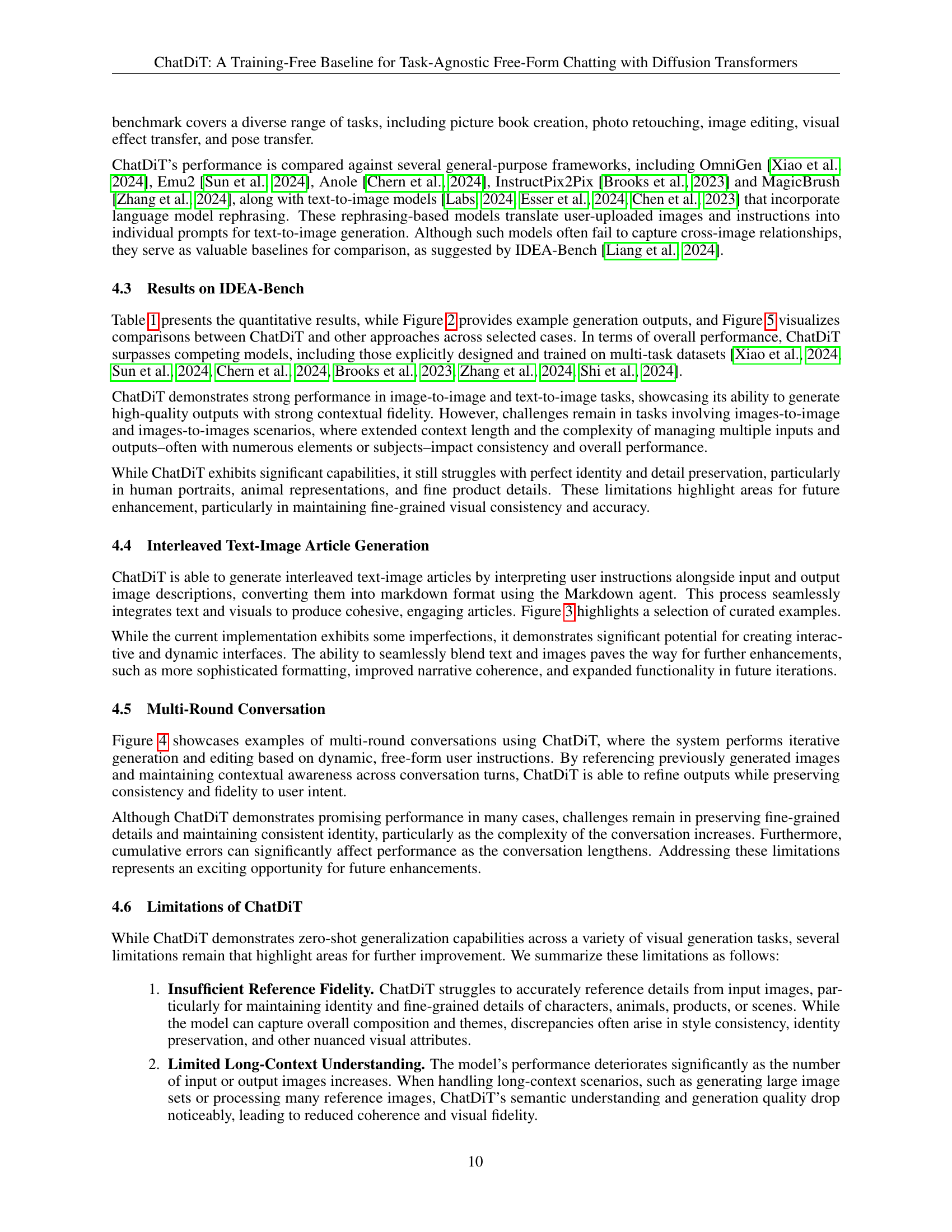

🔼 The ChatDiT framework employs a multi-agent system for image generation. It has three core agents: Instruction-Parsing, Strategy-Planning, and Execution. The Instruction-Parsing agent analyzes user instructions and input images; the Strategy-Planning agent formulates generation strategies; and the Execution agent uses pretrained diffusion transformers to generate images. An optional Markdown agent can create illustrated articles from the output. Sub-agents within each core agent handle specific tasks.

read the caption

Figure 1: Overview of the ChatDiT multi-agent framework. The framework consists of three core agents operating sequentially: the Instruction-Parsing Agent interprets user instructions and analyzes inputs, the Strategy-Planning Agent formulates in-context generation strategies, and the Execution Agent performs the planned actions using pretrained diffusion transformers. An optional Markdown Agent integrates the outputs into cohesive, illustrated articles. Sub-agents handle specialized tasks within each core agent, ensuring flexibility and precision in generation.

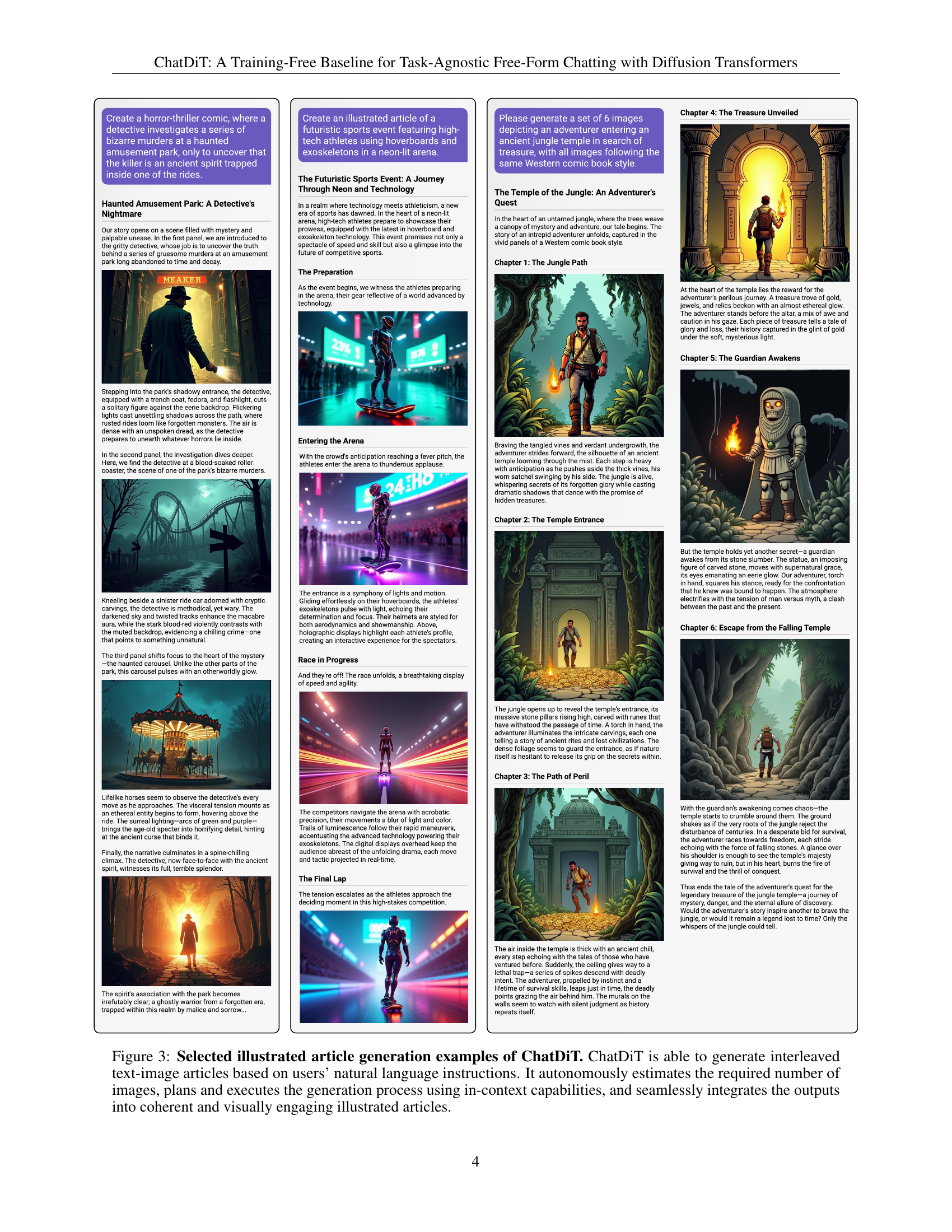

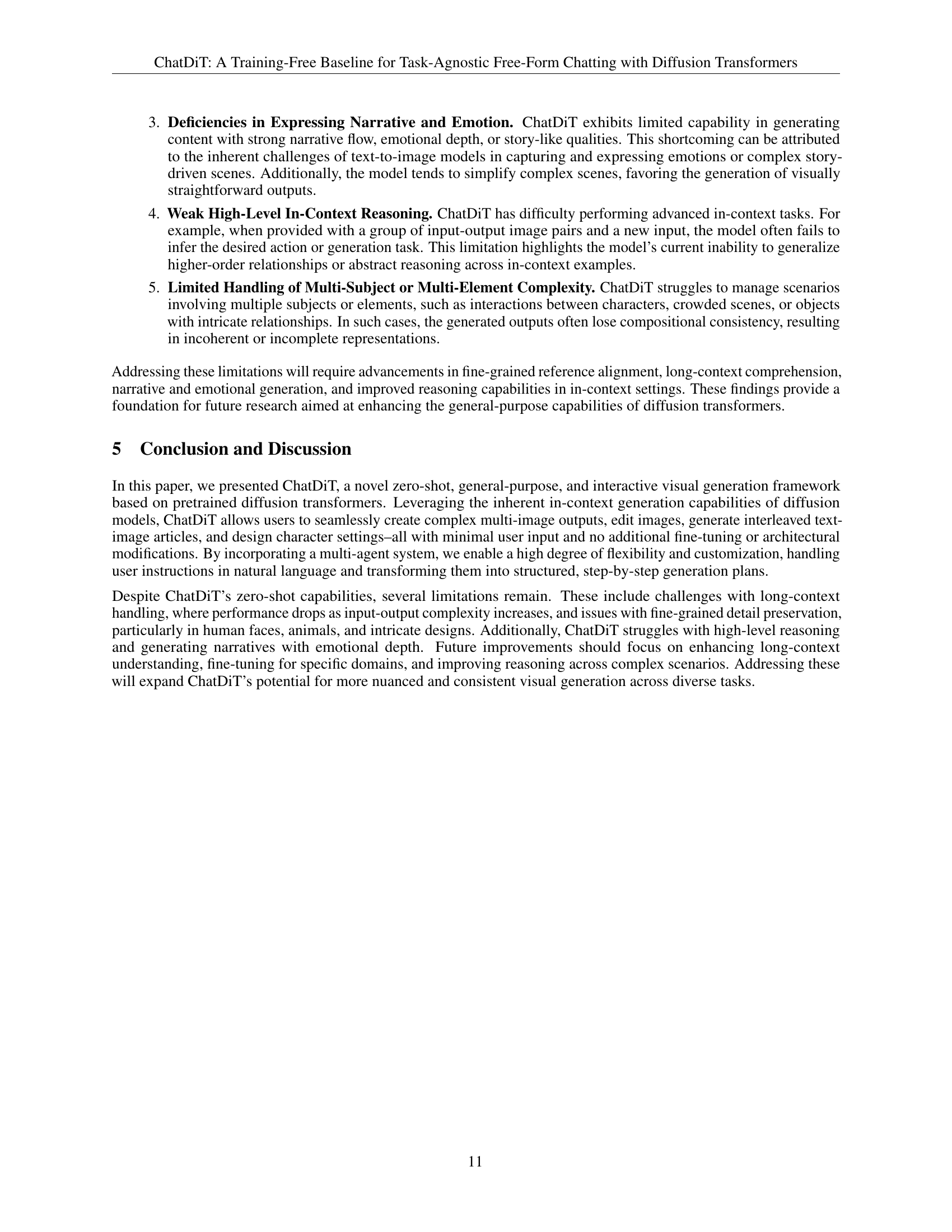

| Task Type | FLUX+GPT4o | DALL-E3+GPT4o | SD3+GPT4o | Pixart+GPT4o | InstructPix2Pix | MagicBrush | Anole | Emu2 | OmniGen | ChatDiT |

|---|---|---|---|---|---|---|---|---|---|---|

| T2I | 46.06 | 24.34 | 24.04 | 14.44 | 0 | 0 | 0 | 17.98 | 21.41 | 50.91 |

| I2I | 12.13 | 6.95 | 10.79 | 7.75 | 17.58 | 19.07 | 0.64 | 7.05 | 8.17 | 21.58 |

| Is2I | 4.89 | 5.27 | 4.69 | 3.48 | 0 | 0 | 0 | 8.98 | 2.77 | 2.36 |

| T2Is | 20.15 | 14.36 | 21.59 | 17.46 | 0 | 0 | 1.74 | 0 | 0 | 27.77 |

| Is2Is | 29.17 | 14.44 | 13.06 | 21.39 | 0 | 0 | 0 | 0 | 0 | 13.33 |

| Avg. | 22.48 | 13.07 | 14.83 | 12.90 | 3.52 | 3.81 | 0.48 | 6.80 | 6.47 | 23.19 |

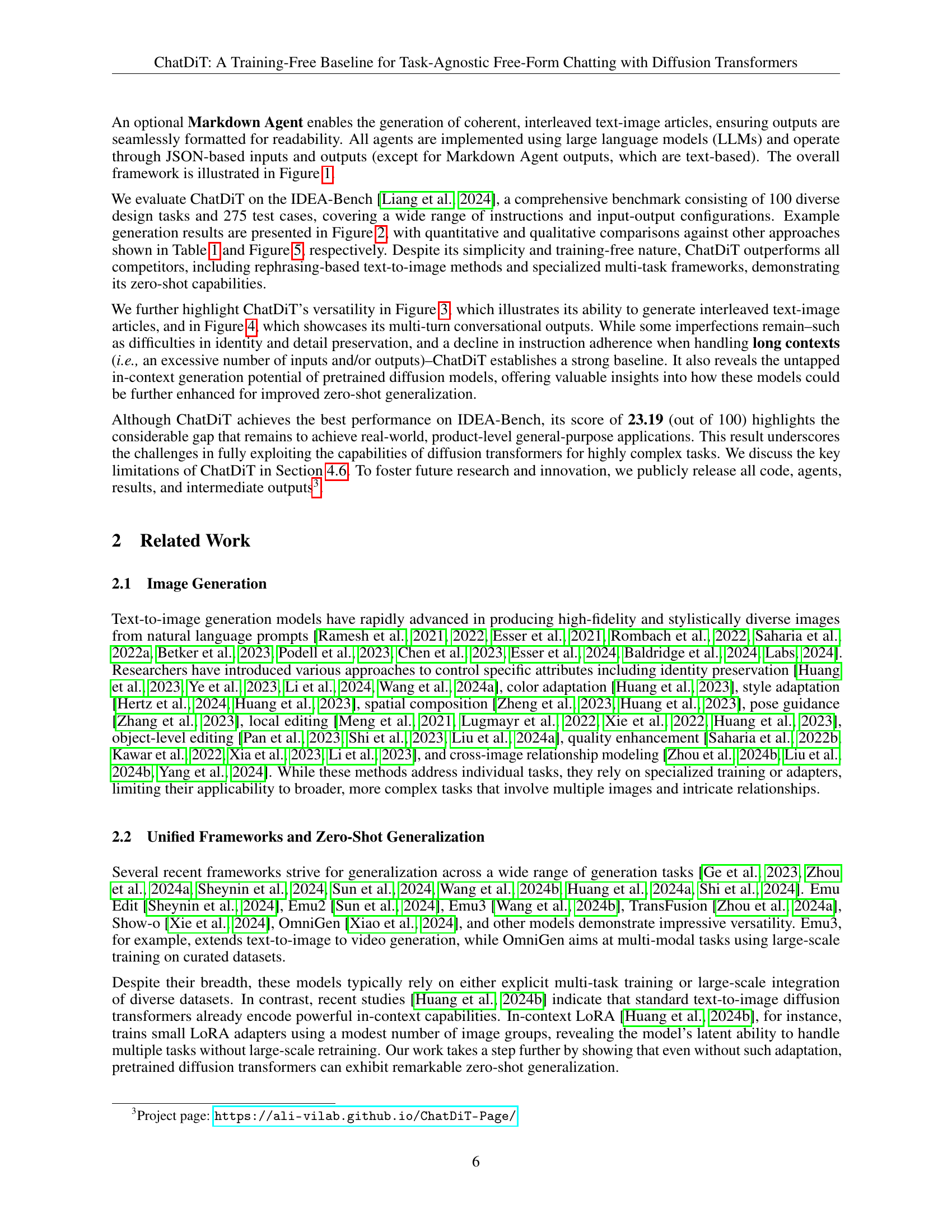

🔼 This table compares the performance of ChatDiT, a training-free image generation model, with other existing models on IDEA-Bench, a comprehensive benchmark for visual design tasks. The table includes metrics for Text-to-Image (T2I), Image-to-Image (I2I), Image set to Image (Is2I), Text-to-Image set (T2Is), and Image set to Image set (Is2Is) generation. Some competing models use a “+GPT4o” approach, meaning they leverage GPT-4 to rephrase instructions and images into prompts for individual image generation. The highest and second-highest scores for each task type are highlighted for easy comparison.

read the caption

Table 1: Comparison of ChatDiT with other models across various tasks on IDEA-Bench (Liang et al., 2024). Performance metrics are reported for different task types: T2I (Text-to-Image), I2I (Image-to-Image), Is2I (Image set to Image), T2Is (Text-to-Image set), and Is2Is (Image set to Image set). “+GPT4o” indicates that user instructions and uploaded images are reformulated into per-output-image prompts, enabling text-to-image models to generate results. The top two scores for each task are highlighted in red (best) and blue (second best).

In-depth insights#

In-Context DiT#

In-Context DiT refers to leveraging Diffusion Transformers (DiTs) for in-context learning in image generation. This approach suggests DiTs possess inherent capabilities to understand and generate images based on relationships between input and target images presented within a single context, eliminating the need for extensive training on individual tasks. This ‘context’ could be a collection of image-prompt pairs or a multi-panel image layout where relationships are encoded through prompts. Key aspects include using grouped and masked generation pipelines for multi-image generation and leveraging visible regions for in-context inpainting. This allows adaptation to various visual tasks, like style transfer and IP derivations, by manipulating the structure and content of the input context. This zero-shot generalization potential simplifies image manipulation workflows by translating complex instructions into contextual prompts for DiTs, promoting flexible and intuitive image generation.

Zero-Shot Visual Chat#

Zero-Shot Visual Chat presents a compelling vision for the future of image generation. Imagine conversing with an AI, providing natural language instructions alongside images, and receiving modified or newly created visuals in real-time. This paradigm shift moves beyond simple text prompts towards richer, multi-turn dialogues centered around visual content. The potential applications are vast, from interactive design and content creation to accessible image editing for non-professionals. Though challenges remain, such as maintaining visual consistency across turns and handling complex instructions, Zero-Shot Visual Chat represents a crucial step towards more intuitive and flexible human-computer interaction.

Multi-Agent ChatDiT#

ChatDiT’s multi-agent system is its core strength, enabling complex visual generation tasks. This system interprets instructions, plans generation strategies, and executes them using an in-context toolkit. The Instruction-Parsing Agent analyzes user input and images. The Strategy-Planning Agent formulates a step-by-step plan. The Execution Agent, powered by diffusion transformers, brings the plan to life. A crucial element is the in-context toolkit, which leverages the inherent capabilities of diffusion transformers to handle multi-image generation. This framework is training-free and adaptable, showcasing the potential of multi-agent systems in zero-shot visual generation.

IDEA-Bench Results#

ChatDiT, a training-free framework, demonstrates promising zero-shot image generation capabilities on IDEA-Bench. It leverages the in-context learning potential of diffusion transformers, outperforming existing methods, especially in image-to-image and text-to-image tasks. However, limitations arise in scenarios requiring multi-image handling or long-context understanding, such as storybook generation or editing multiple elements simultaneously. These limitations suggest future directions involving improved handling of extended contexts and refining the preservation of fine-grained visual details, especially for portraits and objects. Despite these challenges, ChatDiT’s strong performance establishes a valuable baseline for future training-free, general-purpose generation models.

DiT Limitations#

While Diffusion Transformers (DiTs) show promise, several key limitations hinder their real-world applicability. Reference fidelity remains a challenge, especially preserving identity and fine details when referencing multiple inputs. Long-context understanding is weak, with performance dropping as input/output complexity rises. The models struggle to convey narrative, emotion, and higher-order reasoning, often simplifying complex scenarios. Multi-subject compositions pose problems, and outputs lose coherence when depicting interactions. Addressing these limitations through improved reference alignment, long-context comprehension, and reasoning capabilities will be crucial for realizing DiTs’ full potential.

More visual insights#

More on figures

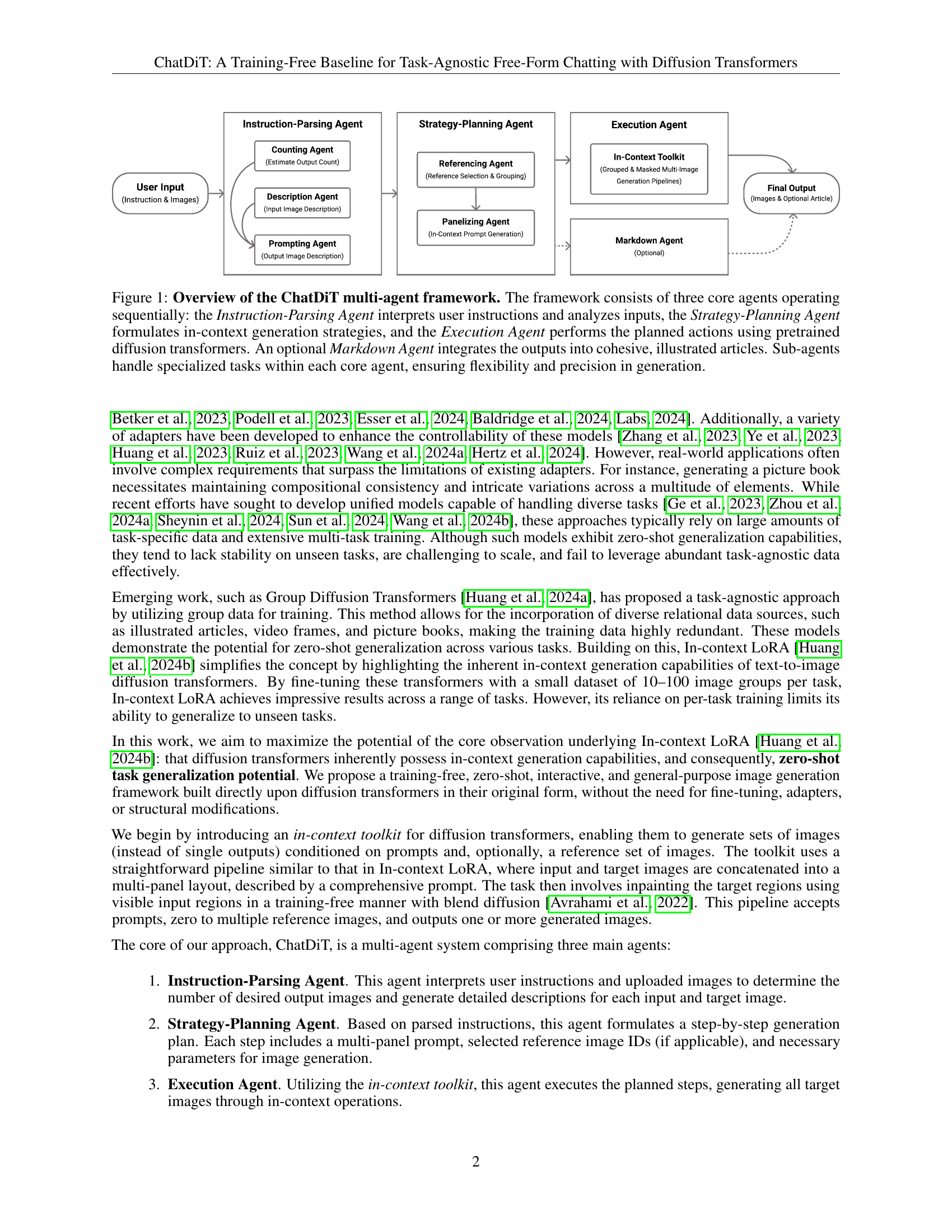

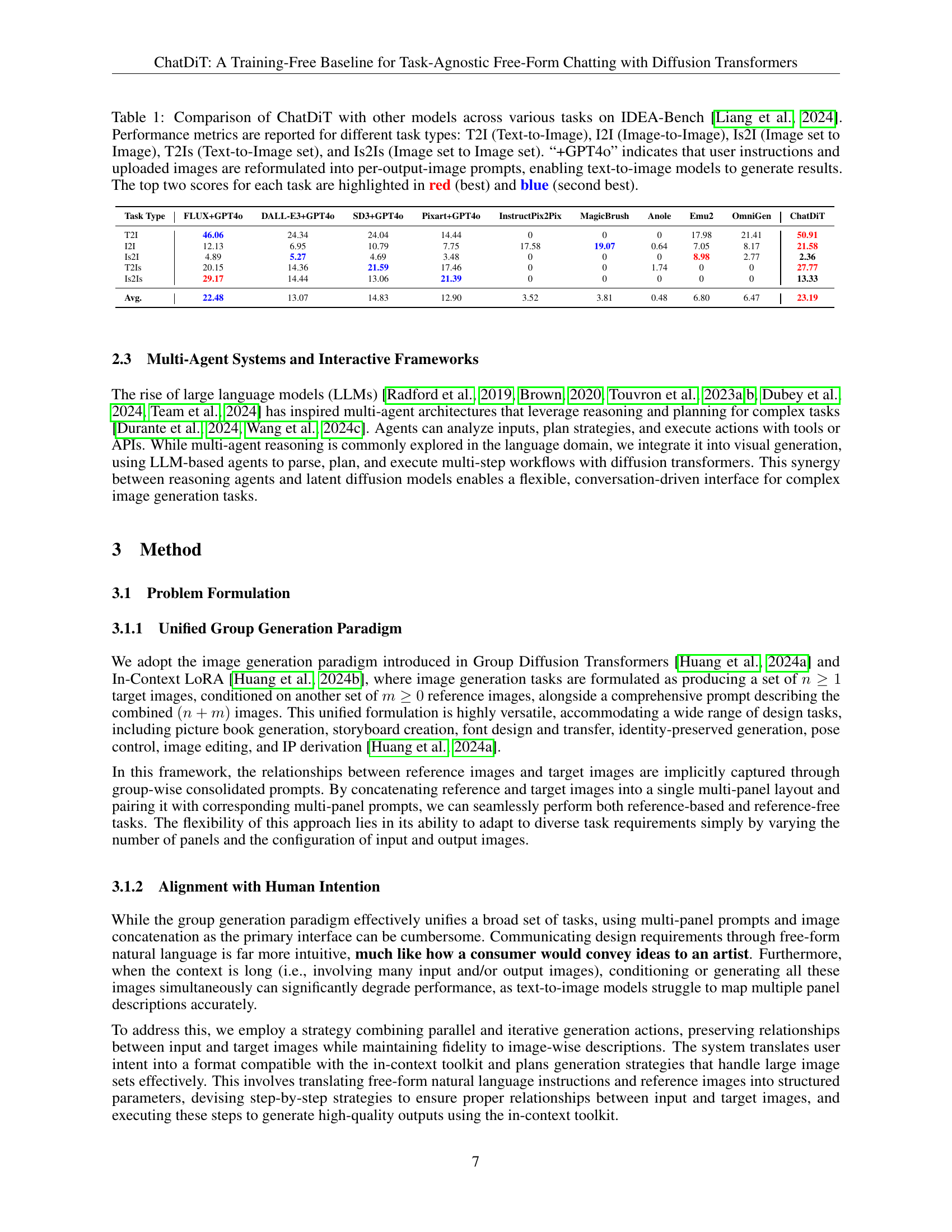

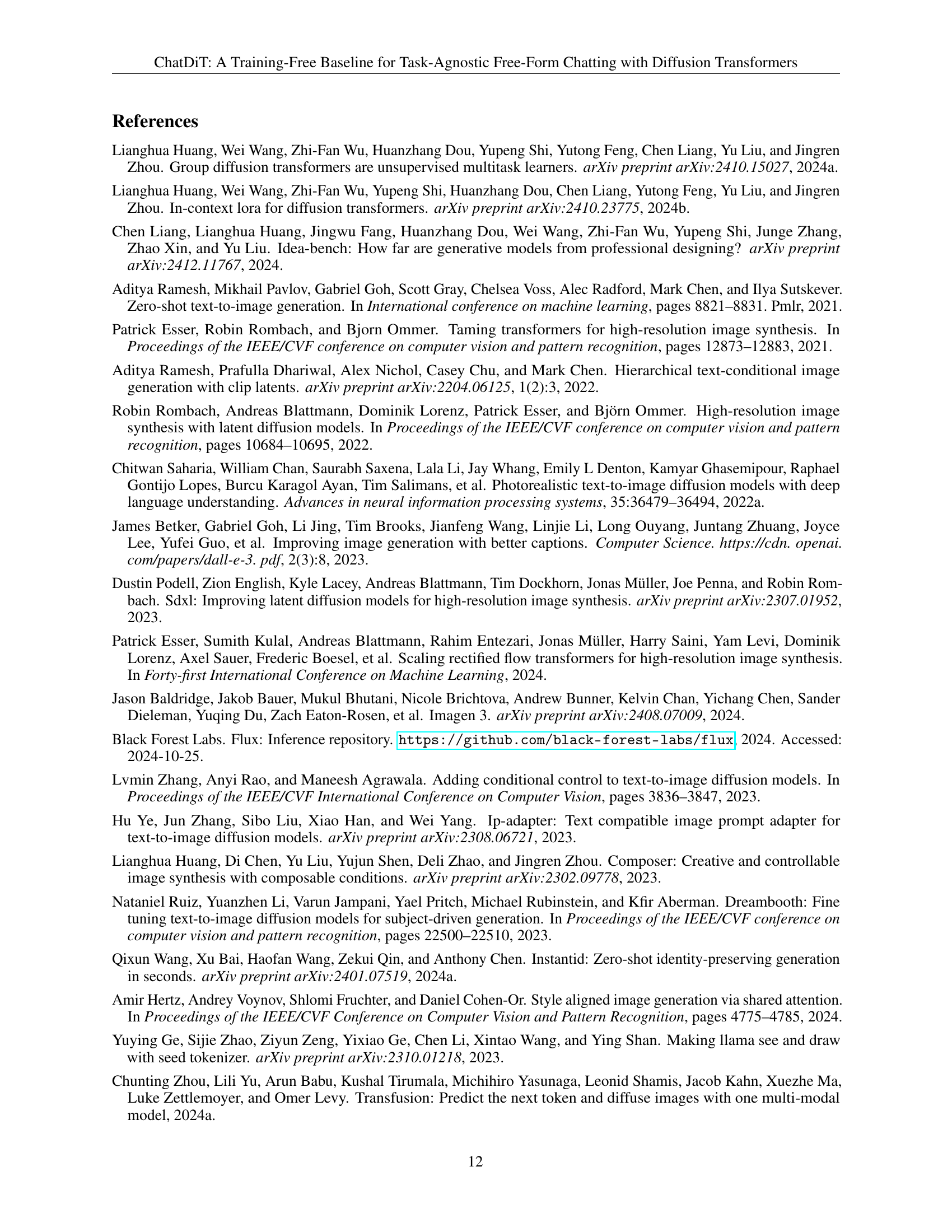

🔼 This figure showcases ChatDiT’s zero-shot image generation capabilities on a variety of tasks from the IDEA-Bench dataset. Each example includes the user’s condensed instruction and the generated image(s). The tasks demonstrated include color variations of a game controller, 3D rendering from a sketch, image zooming and cropping, pose modification, character design transfer to a new object (tote bag), image-based product design (water bottle), scene generation based on an object (speaker), image sequence generation (sunflower growth), and style transfer (witch portrait). These examples highlight ChatDiT’s ability to handle diverse instructions, input types, and desired output formats without any specific training or adaptation.

read the caption

Figure 2: Selected single-round generation examples of ChatDiT on IDEA-Bench (Liang et al., 2024). ChatDiT demonstrates versatility by handling diverse tasks, instructions, and input-output configurations in a zero-shot manner through free-form natural language interactions. The user messages shown here are condensed summaries of the original detailed instructions from IDEA-Bench to conserve space.

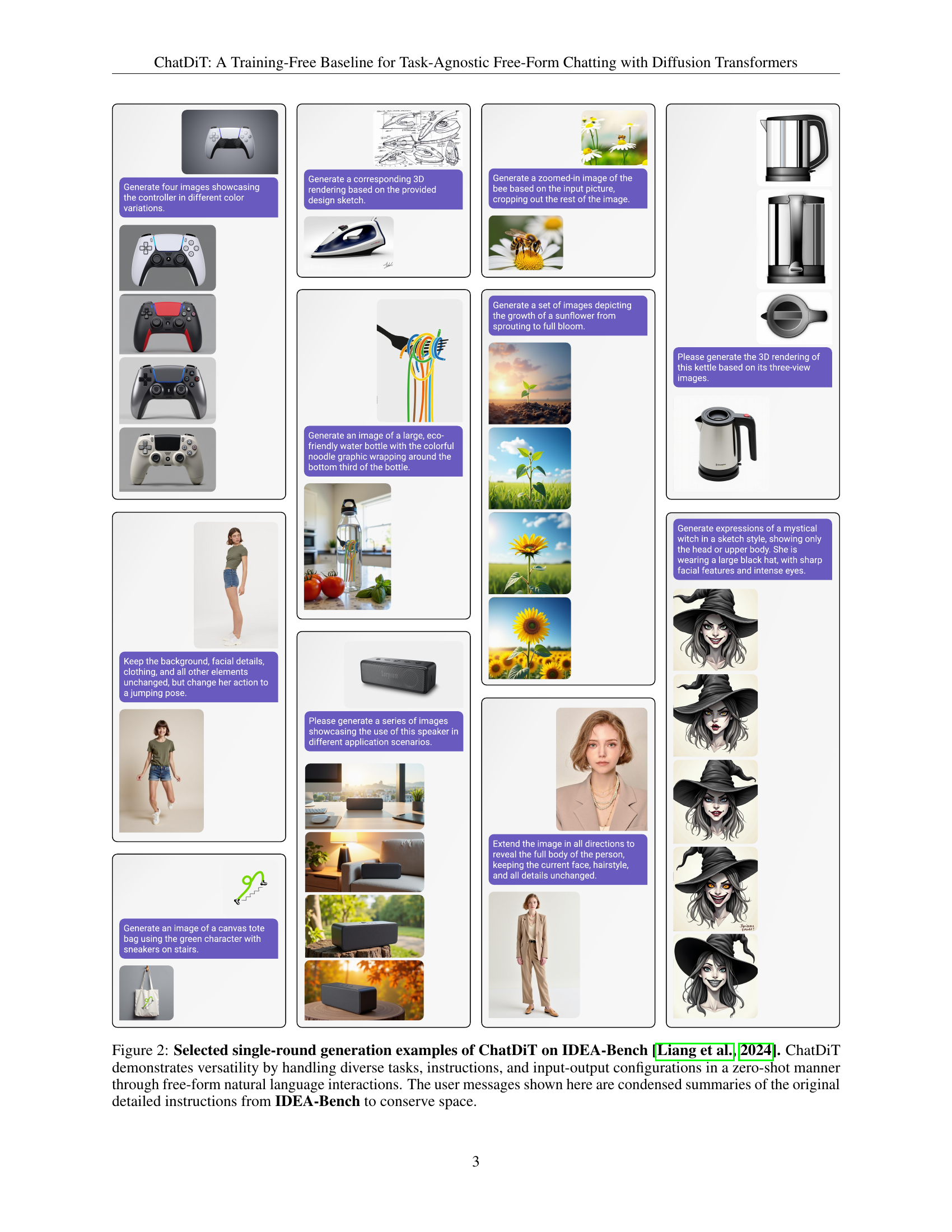

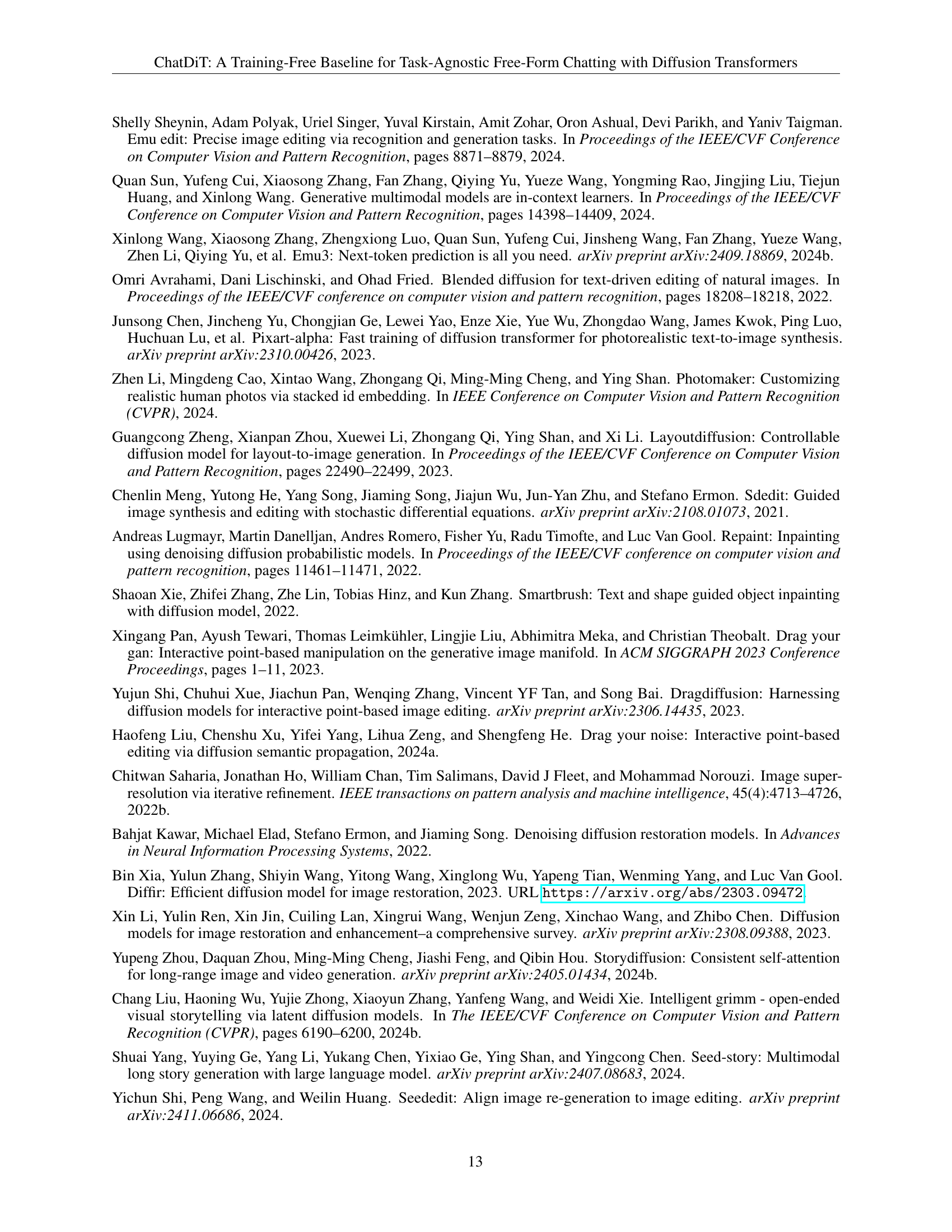

🔼 ChatDiT generates illustrated articles based on natural language instructions, estimating the number of images, planning, and executing generation using in-context capabilities to seamlessly integrate outputs into coherent articles. The examples showcase ChatDiT’s ability to generate diverse illustrated content, including a horror-thriller comic about a detective investigating murders at a haunted amusement park, an article on a futuristic sports event featuring high-tech athletes, and an adventurer’s quest for treasure in a jungle temple.

read the caption

Figure 3: Selected illustrated article generation examples of ChatDiT. ChatDiT is able to generate interleaved text-image articles based on users’ natural language instructions. It autonomously estimates the required number of images, plans and executes the generation process using in-context capabilities, and seamlessly integrates the outputs into coherent and visually engaging illustrated articles.

Full paper#