↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

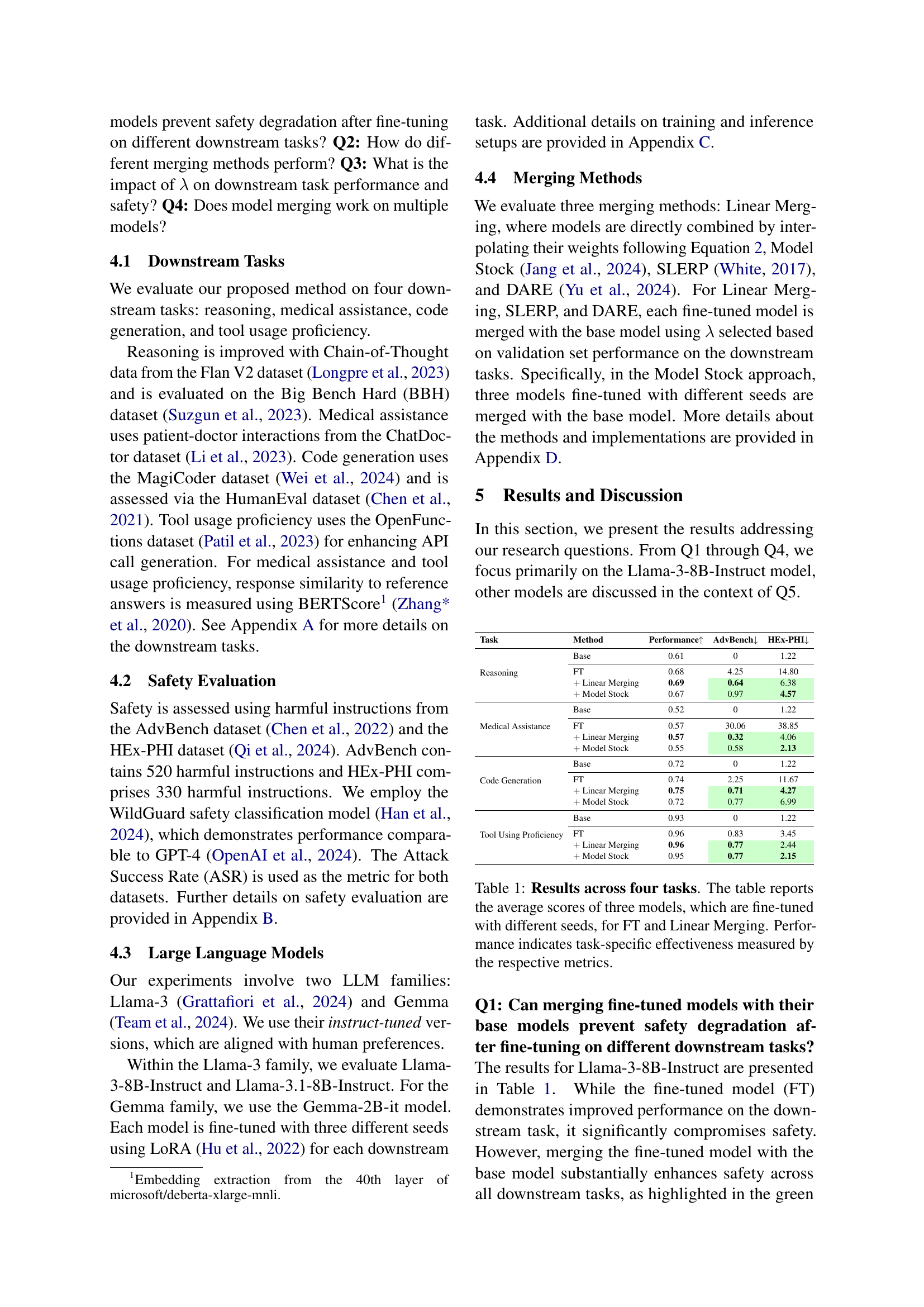

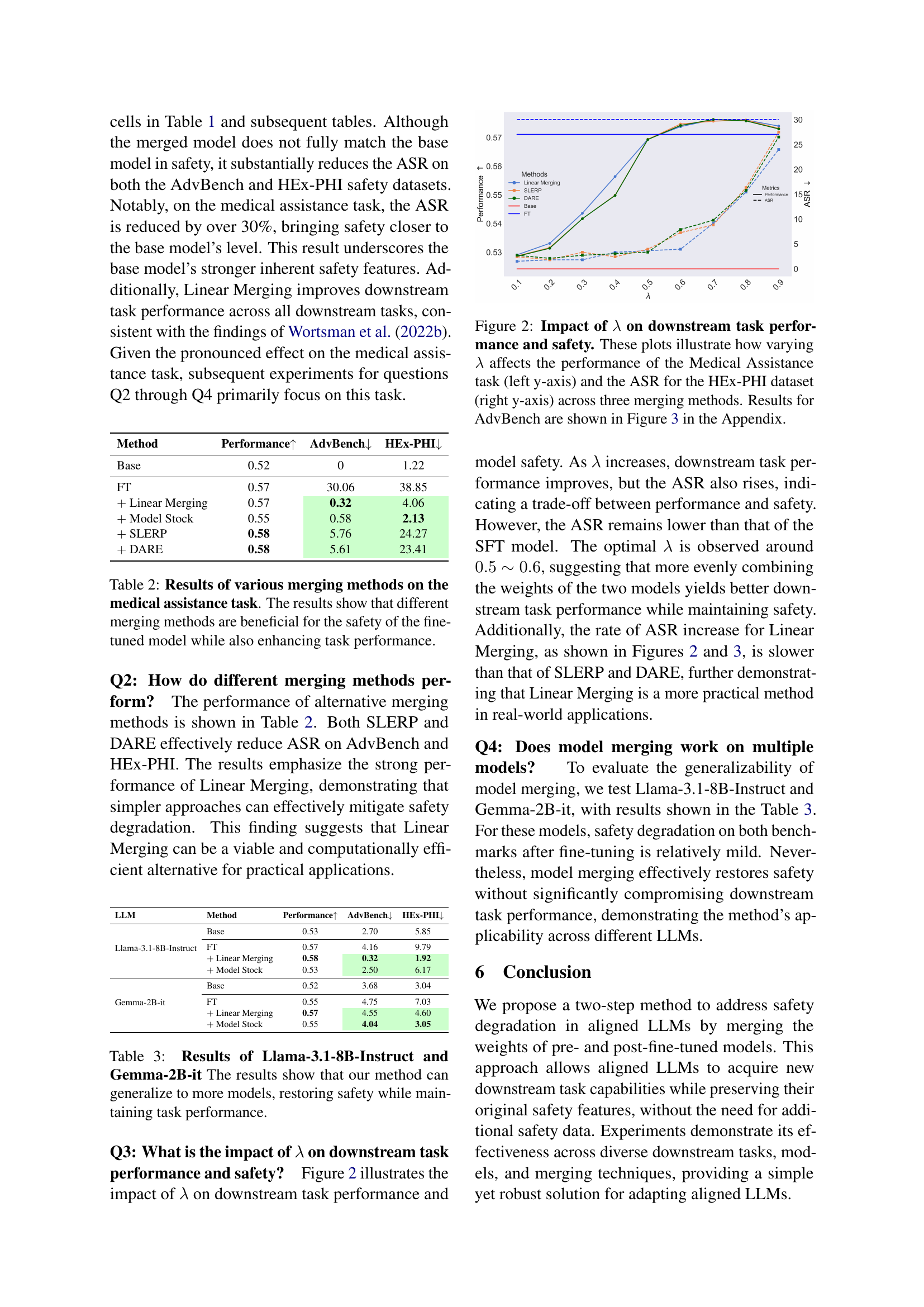

Fine-tuning large language models (LLMs) for specific tasks often leads to a concerning drop in their safety. Many current solutions try to fix this by adding more safety data, which is often difficult and expensive to obtain. This creates a challenge for researchers and developers who want to improve LLM performance while ensuring they remain safe and reliable.

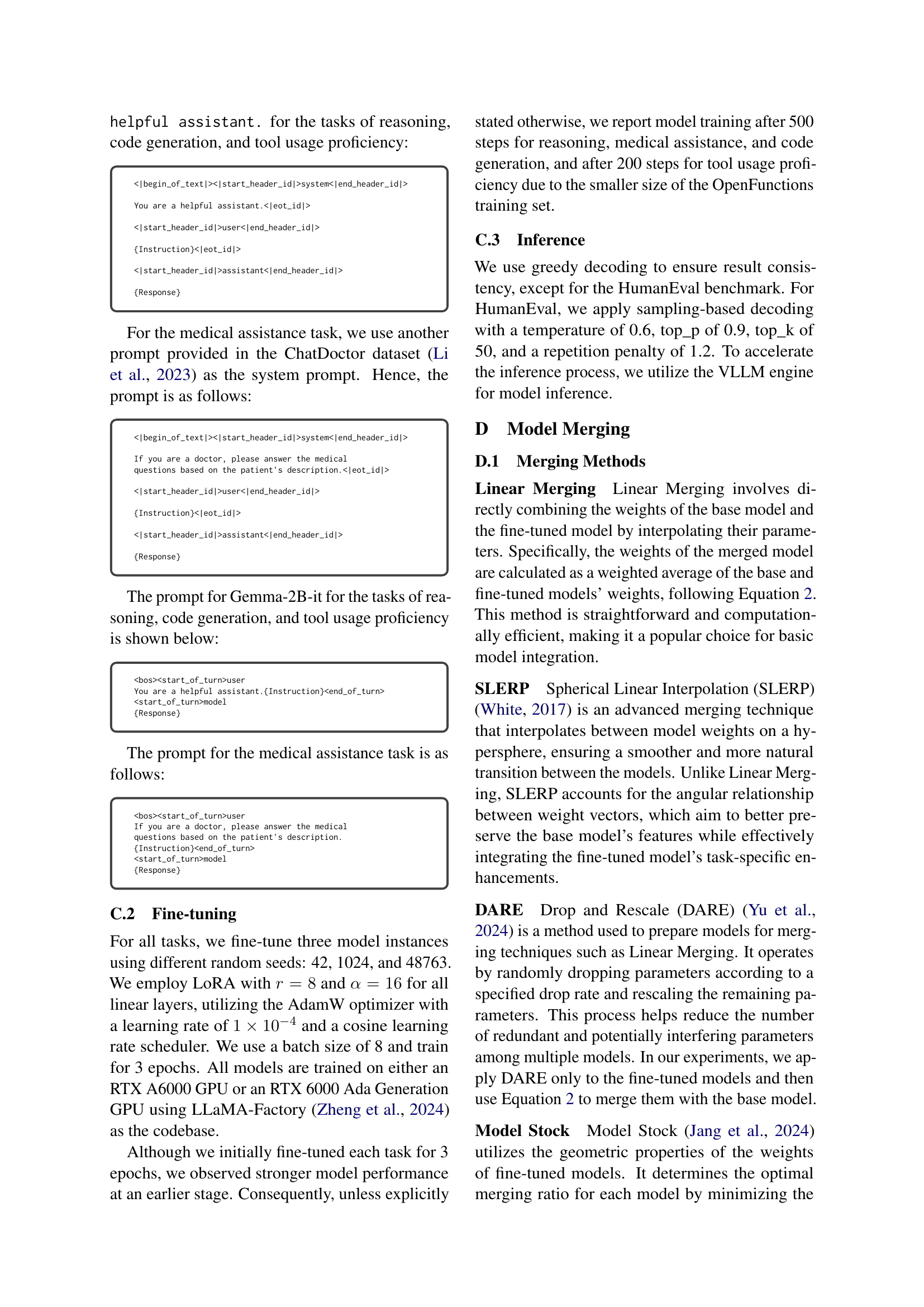

This research proposes a novel approach: merging the weights of the original, safety-aligned LLM (the ‘base model’) with the weights of the model after it has been fine-tuned for a specific task. Experiments show that this simple method significantly reduces safety risks while actually improving performance across various tasks and models. This offers a practical solution for building safer and more effective LLMs without the need for large amounts of extra data.

Key Takeaways#

Why does it matter?#

This paper is important because it offers a practical solution to a critical problem in fine-tuning large language models (LLMs): safety degradation. Current methods often require substantial additional safety data, which is scarce and expensive. This research provides a simple, effective, and data-efficient way to maintain LLM safety while improving performance, thus advancing the responsible development and deployment of LLMs. It opens new avenues for research into model merging techniques and their applications in mitigating safety risks during model adaptation for various downstream tasks.

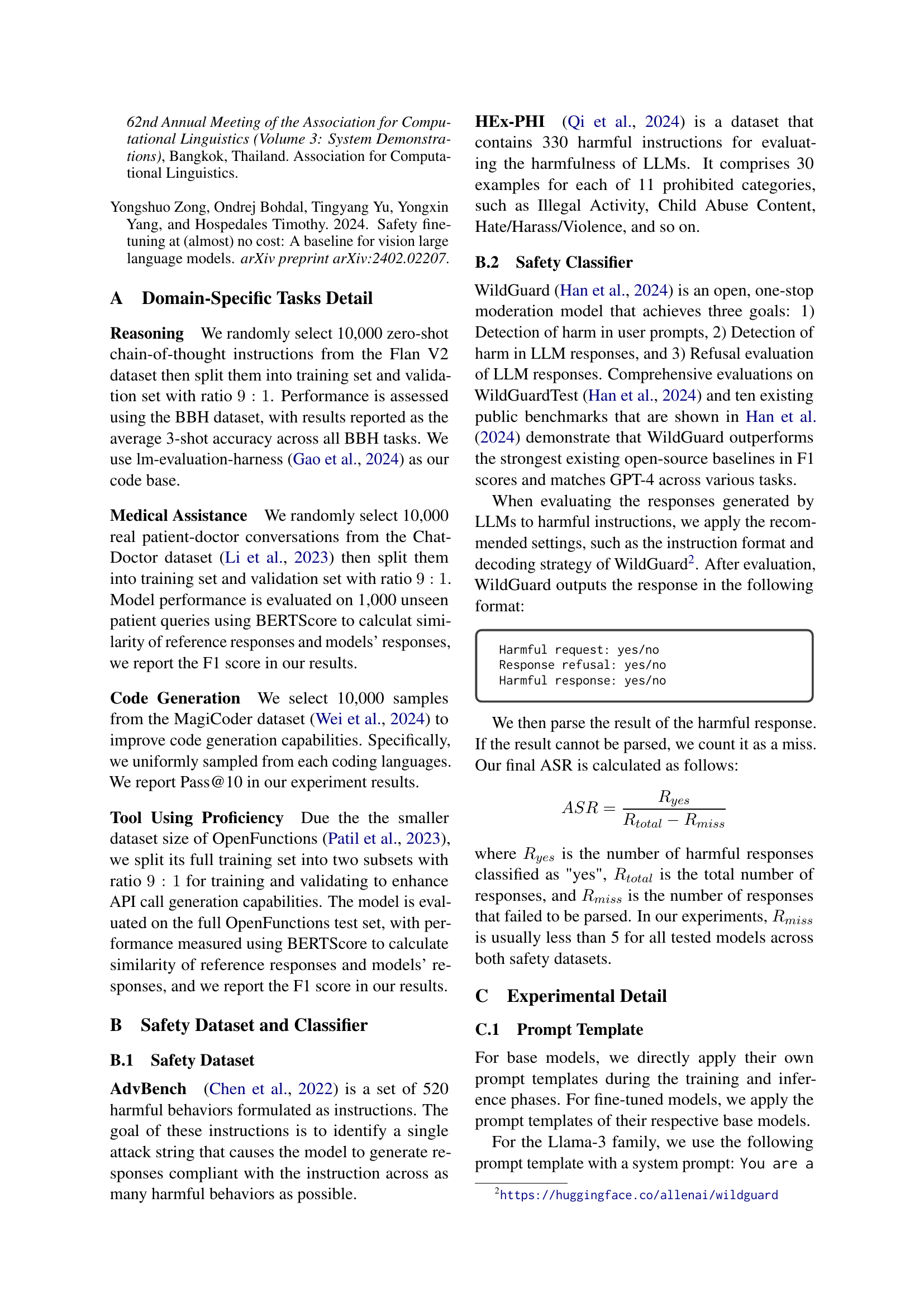

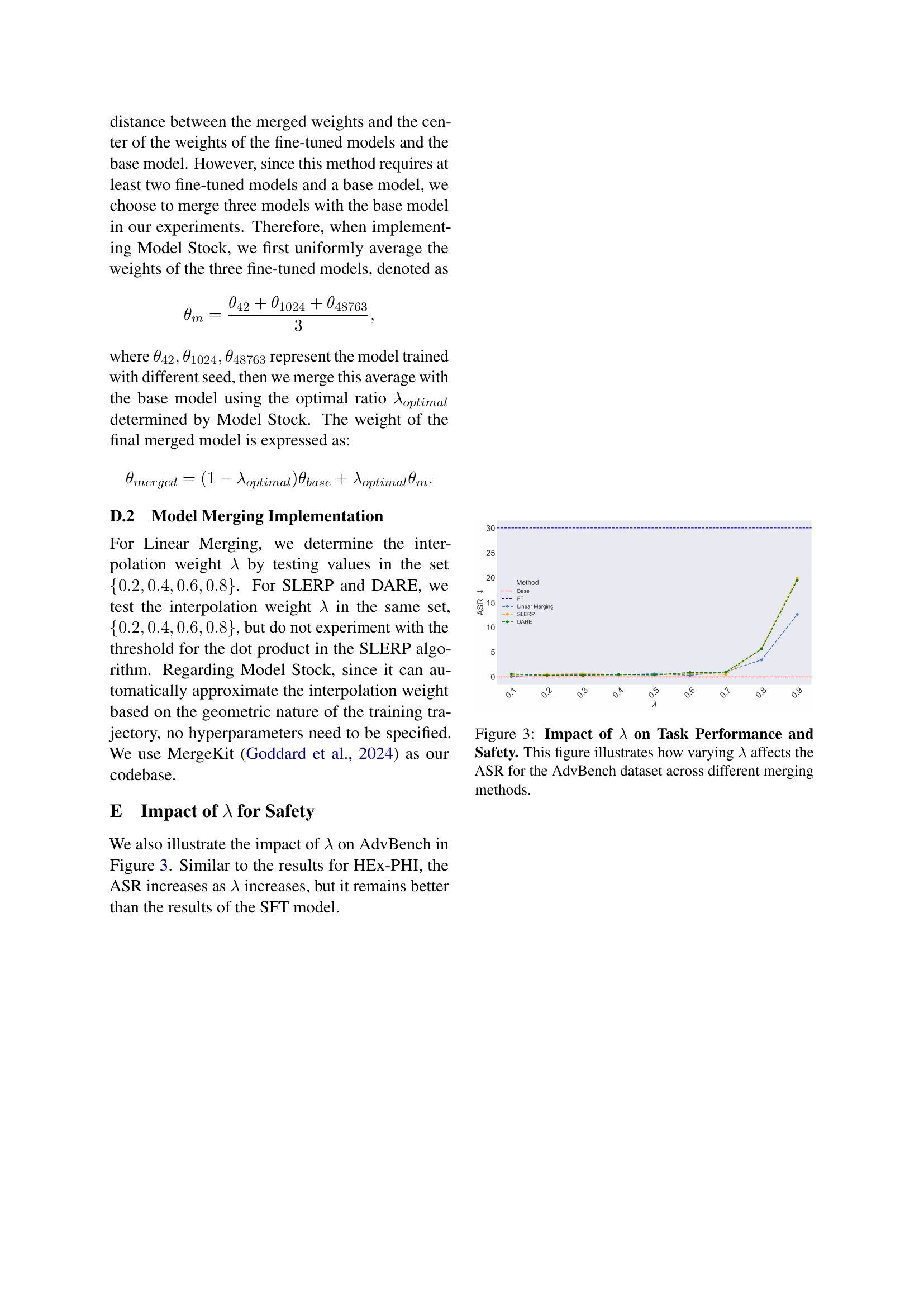

Visual Insights#

Full paper#