↗ arXiv ↗ Hugging Face ↗ Papers with Code

TL;DR#

Current methods for zero-shot customized video generation often struggle due to suboptimal feature extraction and injection techniques, relying on extra models and adding complexity. These methods often fail to maintain consistent subject appearance and diversity in generated videos. This leads to subpar results, hindering the potential of this exciting field.

VideoMaker directly uses a pre-trained video diffusion model (VDM) as both a fine-grained feature extractor and injector. It achieves this by inputting a reference image and employing the VDM’s spatial self-attention mechanism to align subject features with the generated content naturally. This innovative approach improves subject fidelity and video diversity while significantly reducing model complexity and training time. Experiments validate the superior performance of VideoMaker compared to existing methods.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in video generation because it introduces a novel approach to zero-shot customized video generation, a highly sought-after capability. Its innovative framework bypasses the limitations of existing methods by leveraging the inherent capabilities of video diffusion models. The findings challenge existing assumptions and open new avenues for research, impacting various applications from film production to virtual reality.

Visual Insights#

🔼 Figure 1 visualizes the capabilities of VideoMaker, a novel framework for zero-shot customized video generation. The figure showcases example video sequences generated by the method, demonstrating its ability to create high-fidelity videos of both humans and objects. The top row shows examples of customized human video generation where the model generates videos of a person performing various actions (drinking coffee, reading, working on a laptop, playing guitar), based only on a single reference image. The bottom row demonstrates customized object video generation with examples of panda and dog videos generated from a single reference image, displaying various actions. These results highlight the model’s capacity to synthesize realistic and diverse video content based on minimal input, showcasing a significant advancement in zero-shot video generation.

read the caption

Figure 1: Visualization for our VideoMaker. Our method achieves high-fidelity zero-shot customized human and object video generation based on AnimateDiff [26].

| Method | CLIP-T | Face Sim. | CLIP-I | DINO-I | T.Cons. | DD |

|---|---|---|---|---|---|---|

| IP-Adapter | 0.2064 | 0.1994 | 0.7772 | 0.6825 | 0.9980 | 0.1025 |

| IP-Adapter-Plus | 0.2109 | 0.2204 | 0.7784 | 0.6856 | 0.9981 | 0.1000 |

| IP-Adapter-Faceid | 0.2477 | 0.5610 | 0.5852 | 0.4410 | 0.9945 | 0.1200 |

| ID-Animator | 0.2236 | 0.3224 | 0.4719 | 0.3872 | 0.9891 | 0.2825 |

| Photomaker(SDXL) | 0.2627 | 0.3545 | 0.7323 | 0.4579 | 0.9777 | 0.3675 |

| Ours | 0.2586 | 0.8047 | 0.8285 | 0.7119 | 0.9818 | 0.3725 |

🔼 This table presents a comparison of different methods for customized human video generation. Several metrics are used to evaluate the performance of each method, including CLIP-T (text alignment), Face Similarity (subject fidelity), CLIP-I (image-text similarity assessing subject fidelity), DINO-I (visual similarity measuring subject fidelity), Temporal Consistency (how consistent the subject appearance is over time), and Dynamic Degree (a measure of motion variability). The best and second-best results for each metric are highlighted in bold and underlined, respectively. This allows for a quantitative comparison of the effectiveness of the different approaches in generating high-fidelity, consistent videos that accurately reflect the input subject and text prompt.

read the caption

Table 1: Comparison with the existing methods for customized human video generation. The best and the second-best results are denoted in bold and underlined, respectively.

In-depth insights#

VDM’s Inherent Force#

The core concept of “VDM’s Inherent Force” revolves around the unexpected capabilities of Video Diffusion Models (VDMs). The authors argue that existing methods for customized video generation rely on external components, like additional networks, to extract and inject subject features. This approach is deemed suboptimal due to the added complexity and limitations. Instead, they propose leveraging the inherent ability of pre-trained VDMs to perform these tasks. Directly inputting reference images acts as a potent way to extract detailed features, bypassing the need for separate feature extractors. Furthermore, the paper highlights the spatial self-attention mechanism within VDMs as a natural means for feature injection, enabling fine-grained control over the subject’s presence in the generated video. This reliance on the VDM’s own internal mechanisms represents a significant paradigm shift, suggesting a more efficient and effective approach to zero-shot customized video generation. This inherent force, therefore, is the unleashed potential of pre-trained VDMs, allowing for sophisticated video customization without the need for extensive auxiliary models or extensive finetuning.

Zero-shot Customization#

Zero-shot customization in video generation is a significant advancement, enabling the creation of specific videos without the need for subject-specific training data. This is achieved by leveraging the inherent capabilities of pre-trained Video Diffusion Models (VDMs). Instead of relying on extra modules for feature extraction and injection, which often leads to inconsistencies, this approach directly uses the VDM’s intrinsic processes. This involves using the VDM’s feature extraction capabilities on a reference image, effectively obtaining fine-grained subject features already aligned with the model’s knowledge. A novel bidirectional interaction between subject and generated content is then established within the VDM using spatial self-attention, ensuring the subject’s fidelity while maintaining the diversity of the video. This eliminates the need for additional training parameters and complicated architectures, making the process more efficient and effective. The inherent force of the VDM is thus activated, eliminating the limitations of previous heuristic approaches. This is a promising step towards more flexible and practical video generation systems, especially in applications requiring rapid customization and diverse video outputs.

Self-Attention Injection#

The concept of ‘Self-Attention Injection’ in the context of video generation using diffusion models presents a novel approach to incorporating subject features. Instead of relying on external modules or heuristic methods, this technique leverages the inherent spatial self-attention mechanism within the Video Diffusion Model (VDM) itself. This is crucial because it avoids the introduction of additional training parameters and potential misalignment issues often encountered when injecting features extraneously. By directly interacting subject features with the generated content through spatial self-attention, the VDM can maintain better subject fidelity while promoting the diversity of generated video sequences. The bidirectional interaction ensures that the model doesn’t overfit to the subject features while still preserving subject appearance consistency. This approach also aligns well with the VDM’s pre-trained knowledge, leading to more natural and high-fidelity results. The effectiveness of self-attention injection lies in its ability to perform fine-grained feature interaction, going beyond coarse-grained semantic-level feature integration used in prior methods. This methodology ultimately represents a significant shift from heuristic methods towards exploiting the model’s innate capabilities for customized video generation.

Ablation Study Analysis#

An ablation study systematically removes components of a model to assess their individual contributions. In the context of a video generation model, this might involve removing or disabling elements such as the spatial self-attention mechanism, the guidance information recognition loss, or the subject feature extraction process. By observing how performance metrics (like FID, CLIP-score, etc.) change after each ablation, researchers can quantitatively determine the importance of each module. A well-executed ablation study demonstrates not just the overall effectiveness but also the internal working and relative contributions of various architectural choices. For instance, removing the spatial self-attention module might show a significant drop in subject fidelity, indicating that this component is crucial for integrating subject features into the video generation process. The ablation study also helps highlight the design choices that positively contribute to model robustness and effectiveness. A comprehensive ablation study is therefore a crucial aspect of assessing the novelty and quality of the work by proving the necessity of each chosen component in the overall pipeline.

Future Research#

Future research directions for zero-shot customized video generation should prioritize enhancing model robustness and generalization capabilities. Addressing limitations in handling diverse subjects and complex scenes is crucial. Exploring alternative feature extraction methods beyond direct VDM input, such as incorporating pre-trained visual encoders, could improve performance. Investigating more sophisticated feature injection mechanisms, potentially using attention-based architectures or diffusion model modifications, warrants investigation to ensure fidelity and diversity. Furthermore, developing larger and more diverse datasets is essential for improving model generalization and reducing biases. Finally, exploring the potential for incorporating user feedback during generation and developing metrics for evaluating subjective qualities like artistic style and emotional impact would significantly advance the field.

More visual insights#

More on figures

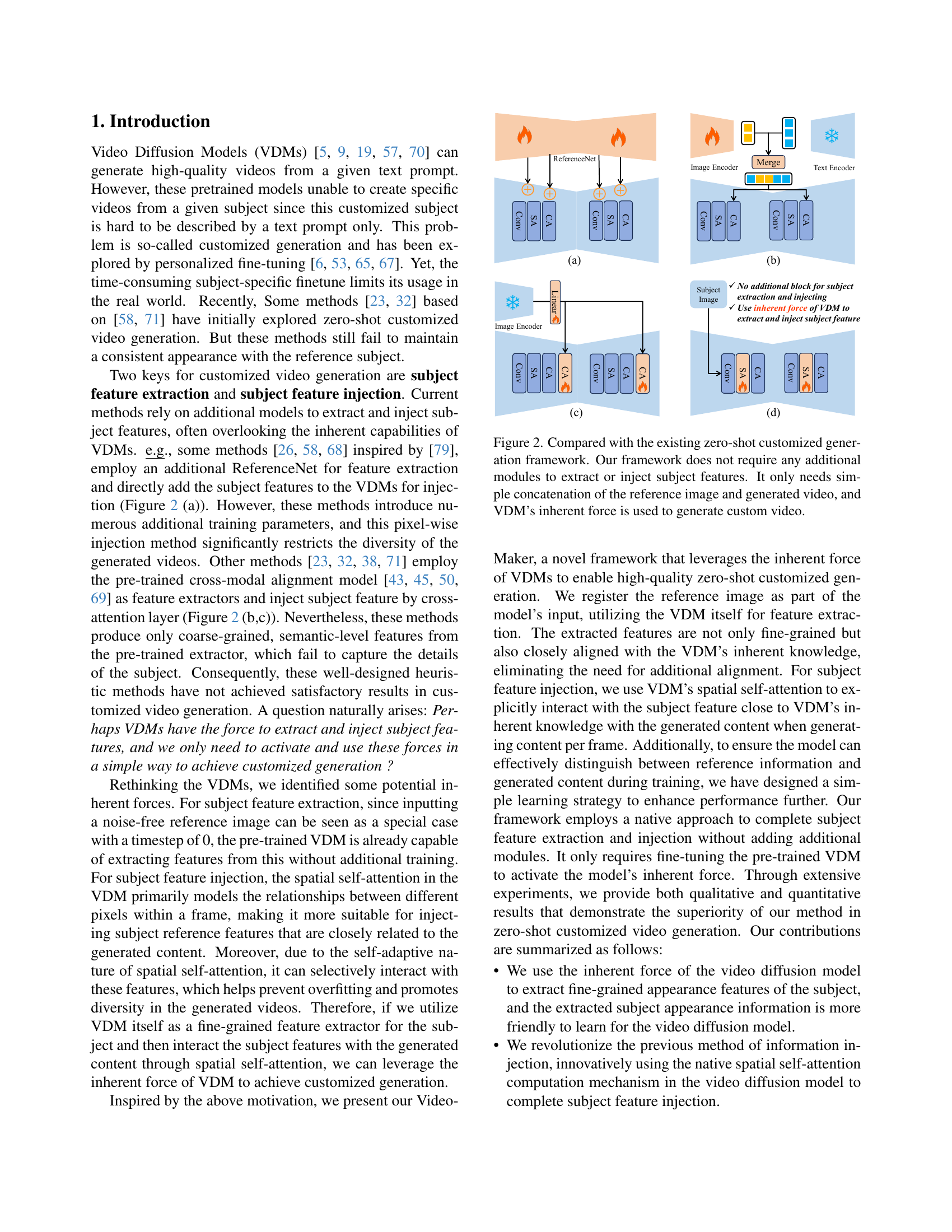

🔼 This figure compares the proposed VideoMaker framework with existing zero-shot customized video generation methods. Existing methods typically employ additional modules for subject feature extraction and injection, often involving complex processes like using a separate ReferenceNet or cross-modal alignment models. In contrast, VideoMaker leverages the inherent capabilities of Video Diffusion Models (VDMs). It directly inputs the reference image and the generated video frames into the VDM, relying on the VDM’s internal mechanisms (like spatial self-attention) to extract and inject the subject’s features naturally without extra modules. This streamlined approach is highlighted to demonstrate the simplicity and efficiency of the proposed method.

read the caption

Figure 2: Compared with the existing zero-shot customized generation framework. Our framework does not require any additional modules to extract or inject subject features. It only needs simple concatenation of the reference image and generated video, and VDM’s inherent force is used to generate custom video.

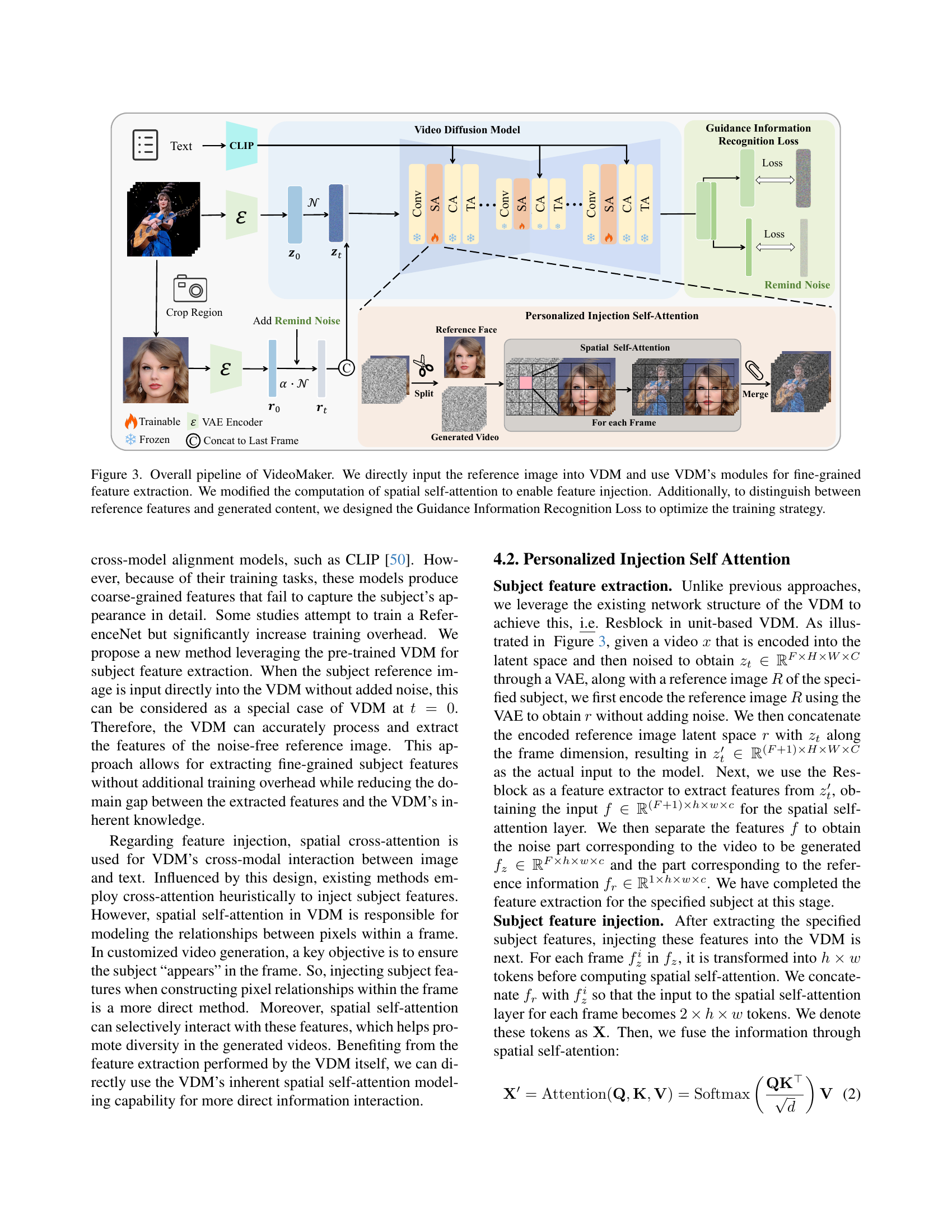

🔼 The figure illustrates the architecture of VideoMaker, a novel framework for zero-shot customized video generation. It leverages the inherent capabilities of Video Diffusion Models (VDMs) rather than relying on external modules. The process begins by directly inputting a reference image into the VDM. The VDM’s internal mechanisms are then used for fine-grained feature extraction from this image. A key modification involves altering the spatial self-attention mechanism within the VDM to effectively inject these extracted features into the video generation process. Finally, a novel ‘Guidance Information Recognition Loss’ is introduced to help the model distinguish between the reference image features and the newly generated video content, thus improving the overall quality and consistency of the generated video.

read the caption

Figure 3: Overall pipeline of VideoMaker. We directly input the reference image into VDM and use VDM’s modules for fine-grained feature extraction. We modified the computation of spatial self-attention to enable feature injection. Additionally, to distinguish between reference features and generated content, we designed the Guidance Information Recognition Loss to optimize the training strategy.

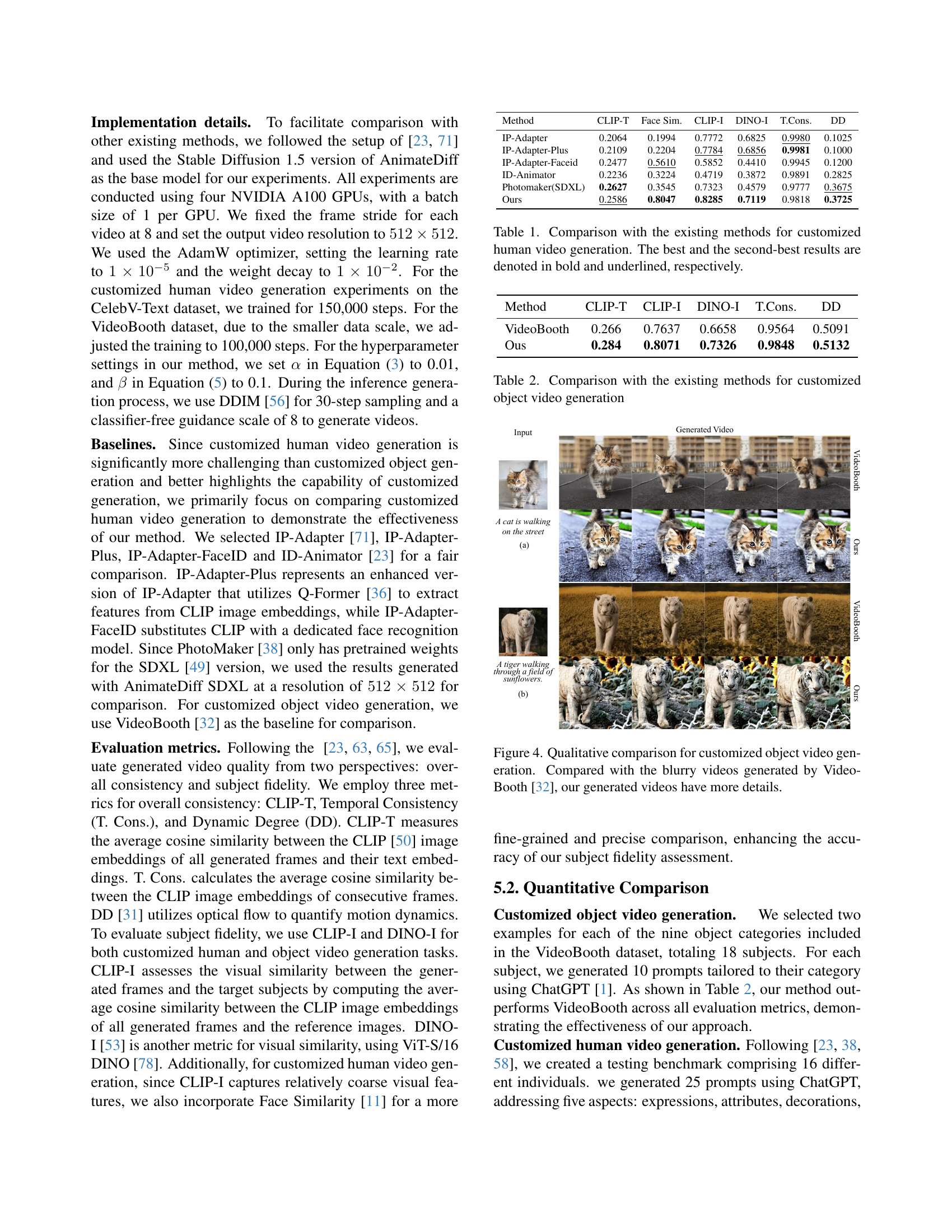

🔼 Figure 4 presents a qualitative comparison of customized object video generation results between the VideoBooth method and the proposed VideoMaker method. The figure shows that VideoMaker produces videos with significantly more detail and clarity than VideoBooth. VideoBooth’s output appears blurry and lacks fine details, while VideoMaker generates sharper, more defined videos, highlighting the superior performance of the proposed approach in capturing the nuances of the object appearance and movement.

read the caption

Figure 4: Qualitative comparison for customized object video generation. Compared with the blurry videos generated by VideoBooth [32], our generated videos have more details.

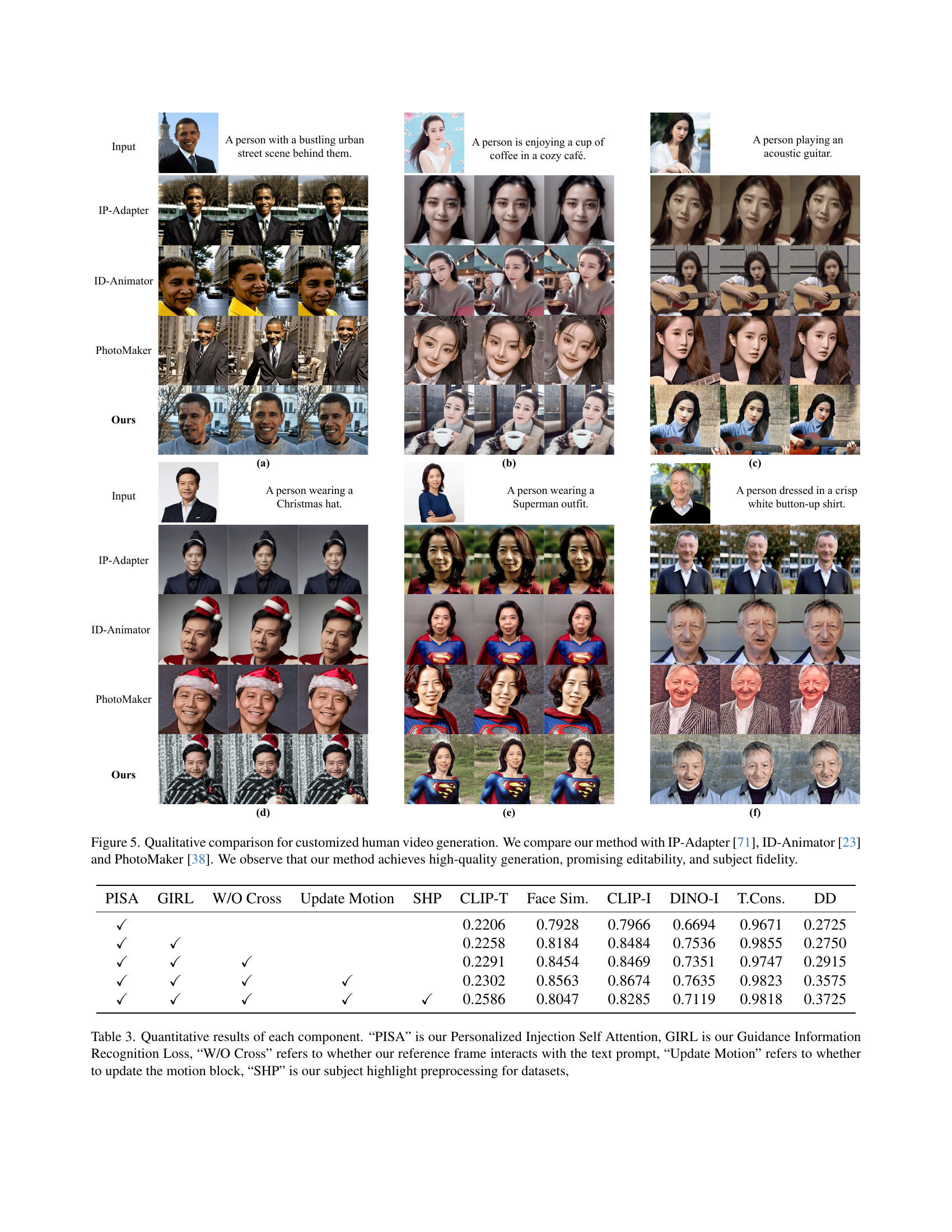

🔼 Figure 5 presents a qualitative comparison of customized human video generation results from four different methods: the proposed VideoMaker approach and three existing techniques—IP-Adapter, ID-Animator, and PhotoMaker. For three distinct video generation prompts, the figure showcases sample video frames produced by each method. This allows for a visual assessment of the quality of video generation, including editability and subject fidelity. The results suggest that VideoMaker outperforms the others in generating high-quality, editable videos that accurately preserve the appearance of the subject.

read the caption

Figure 5: Qualitative comparison for customized human video generation. We compare our method with IP-Adapter [71], ID-Animator [23] and PhotoMaker [38]. We observe that our method achieves high-quality generation, promising editability, and subject fidelity.

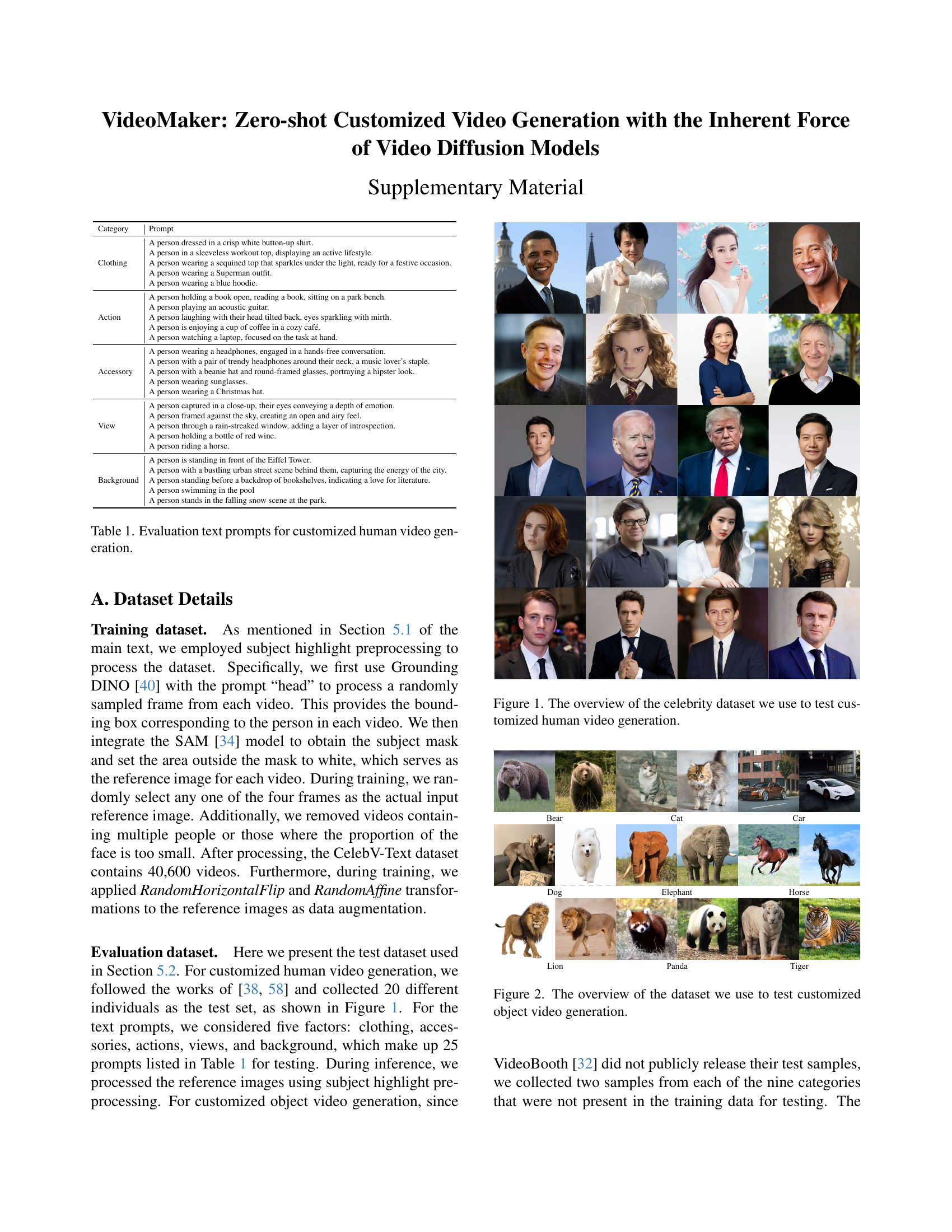

🔼 This figure displays a subset of the CelebV-Text dataset used in the paper to evaluate customized human video generation. It shows a selection of images from various individuals in different poses, demonstrating the diversity of the dataset’s human subjects used to test video generation. The images are intended to illustrate the quality and range of appearances found within the training dataset that the proposed model was trained and tested on.

read the caption

Figure 1: The overview of the celebrity dataset we use to test customized human video generation.

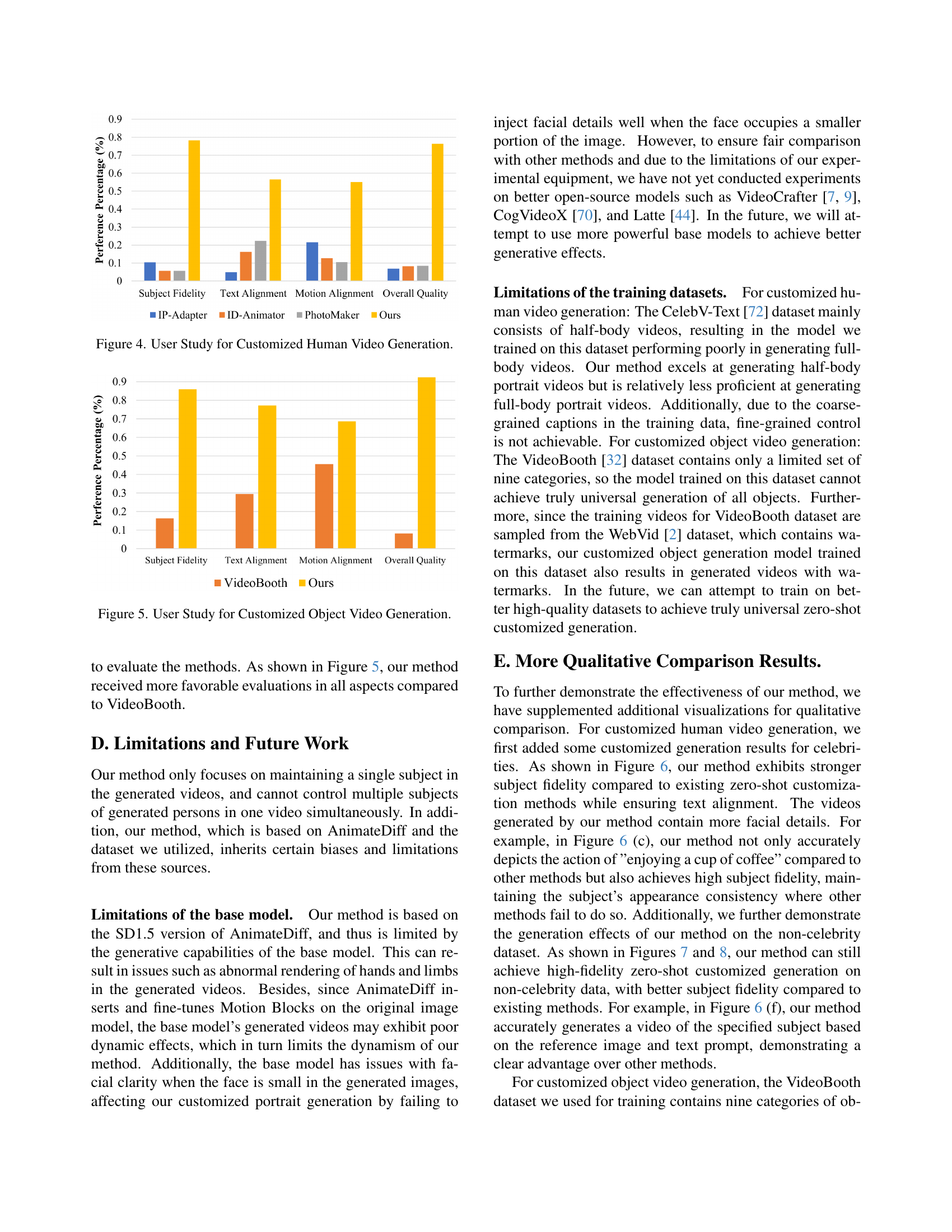

🔼 This figure shows the nine object categories used in the VideoBooth dataset for evaluating customized object video generation. Each category includes two example videos, demonstrating the diverse range of objects and actions covered in the dataset. The objects depicted include bears, cats, cars, dogs, elephants, horses, lions, pandas, and tigers. The images showcase variations in the environment (e.g., snowy landscape, jungle, beach) and object activities (e.g., walking, running, resting). These images represent the reference videos used as input for the model to generate customized videos.

read the caption

Figure 2: The overview of the dataset we use to test customized object video generation.

🔼 This figure displays the non-celebrity dataset used for evaluating the customized human video generation method. The dataset consists of 16 images of diverse individuals, each depicting a unique pose and style. The images were sourced from the Unsplash50 dataset and selected to ensure they had not appeared in the training data for the model. These images served as reference inputs for the model, which was tasked with generating videos based on the provided textual prompts. This subset was used to assess the model’s generalizability beyond the celebrity-centric data used in primary training and evaluation, providing a more robust measure of performance and capability.

read the caption

Figure 3: The overview of the non-celebrity dataset we used for testing customized human video generation.

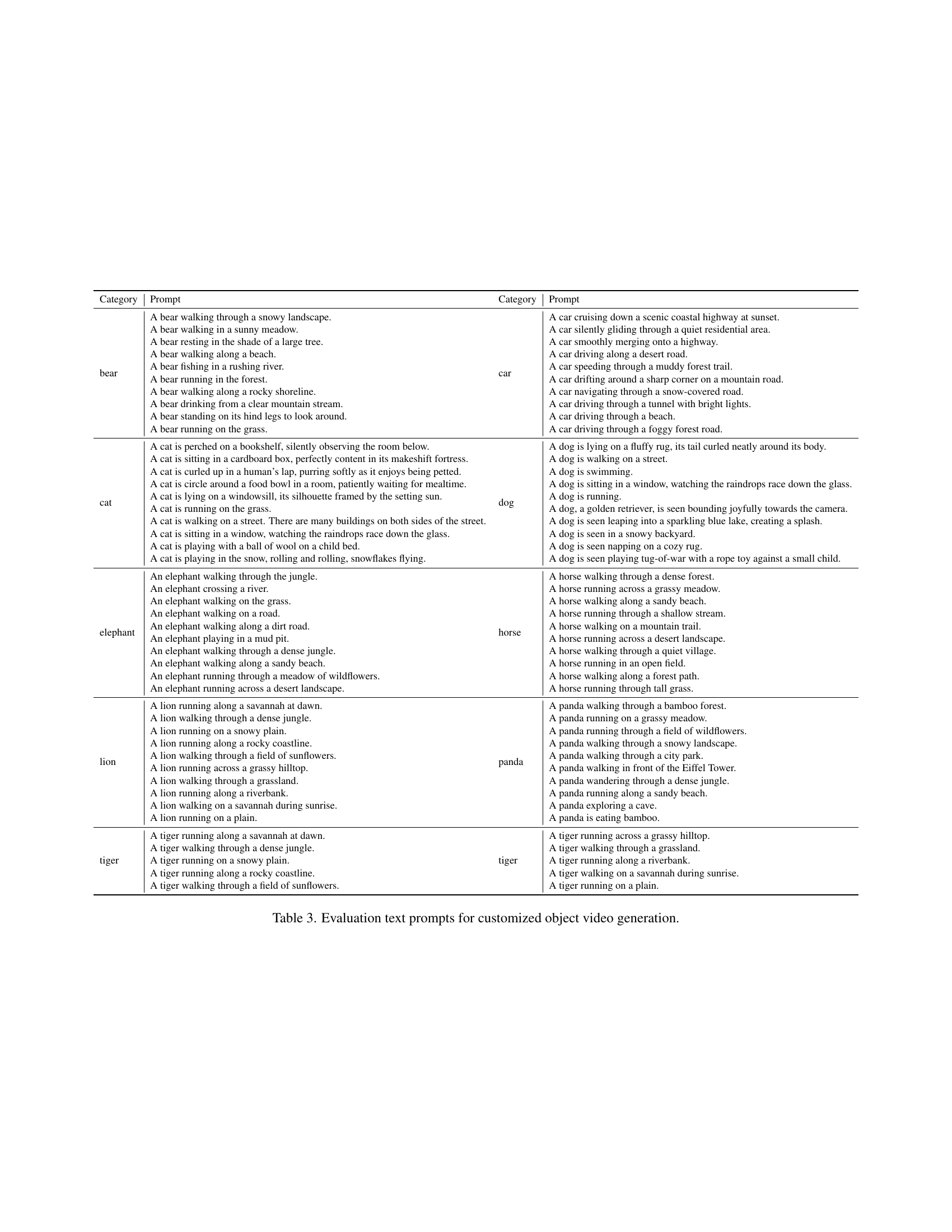

🔼 This figure displays the results of a user study comparing different methods for customized human video generation. The study evaluated the generated videos based on four criteria: Text Alignment (how well the generated video matches the text prompt), Subject Fidelity (how accurately the generated video reflects the subject’s appearance), Motion Alignment (the quality of movement in the generated video), and Overall Quality (a general assessment of the video’s quality). The bar chart shows the percentage of user preference for each method across these four evaluation criteria. This allows for a direct comparison of the effectiveness of different approaches to customized video generation, highlighting the strengths and weaknesses of each method in terms of satisfying user expectations for realism and accuracy.

read the caption

Figure 4: User Study for Customized Human Video Generation.

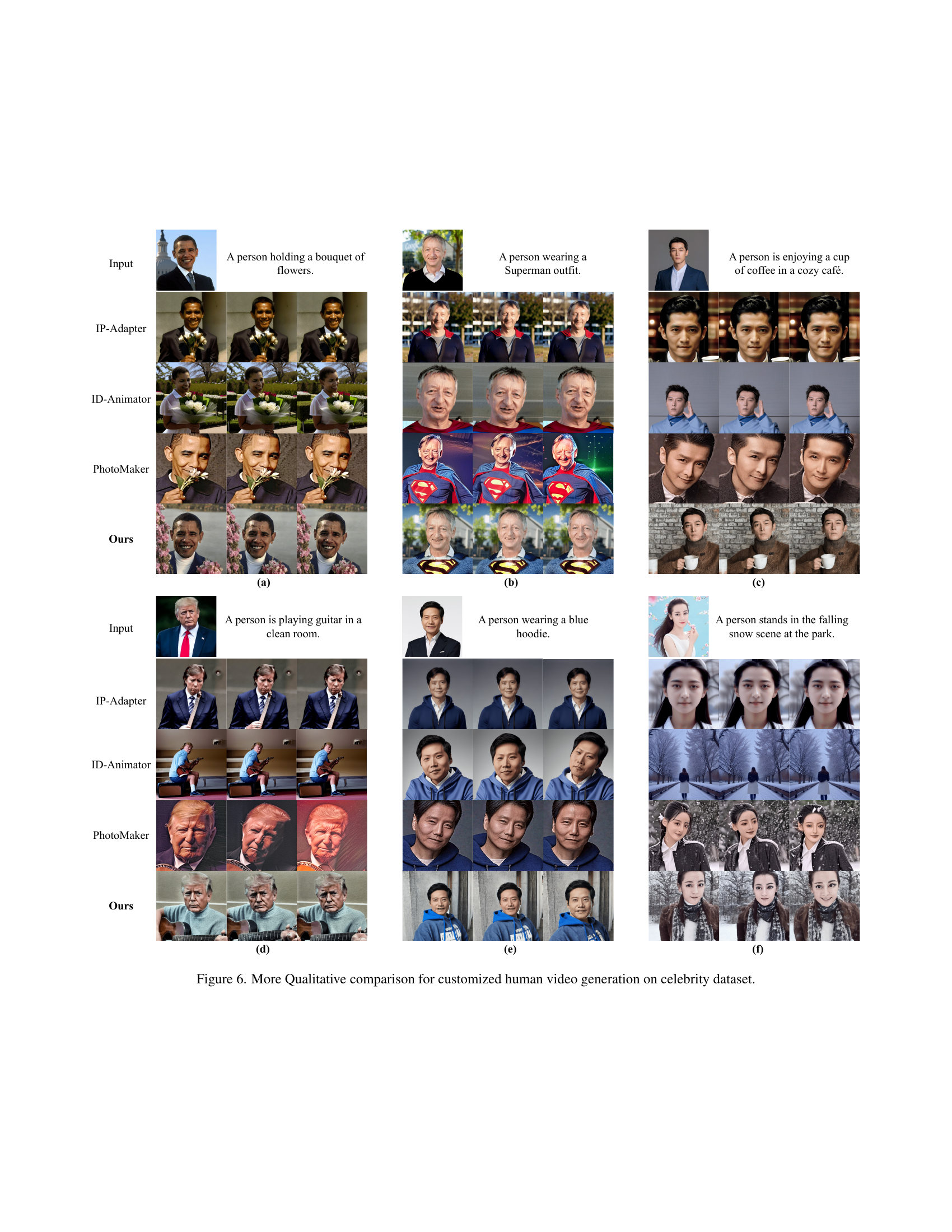

🔼 This figure displays the results of a user study comparing the quality of customized object video generation between the proposed VideoMaker method and the VideoBooth baseline method. The user study evaluated four aspects: Subject Fidelity, Text Alignment, Motion Alignment, and Overall Quality. Each bar represents the percentage of user preference for each method across these four aspects. The results visually demonstrate the relative performance of VideoMaker and VideoBooth in generating high-quality videos that accurately reflect the specified subject and text prompts, while also displaying smooth and realistic motion.

read the caption

Figure 5: User Study for Customized Object Video Generation.

🔼 This figure provides a qualitative comparison of customized human video generation results on a celebrity dataset. It shows the input image prompts (a person performing a specific action or wearing certain clothing), and the videos generated by four different methods: IP-Adapter, ID-Animator, PhotoMaker, and the authors’ proposed VideoMaker method. The comparison highlights the differences in video quality, subject fidelity, action consistency, and overall visual realism across the different approaches, showcasing VideoMaker’s improved performance in terms of maintaining consistent appearance and accurate action generation.

read the caption

Figure 6: More Qualitative comparison for customized human video generation on celebrity dataset.

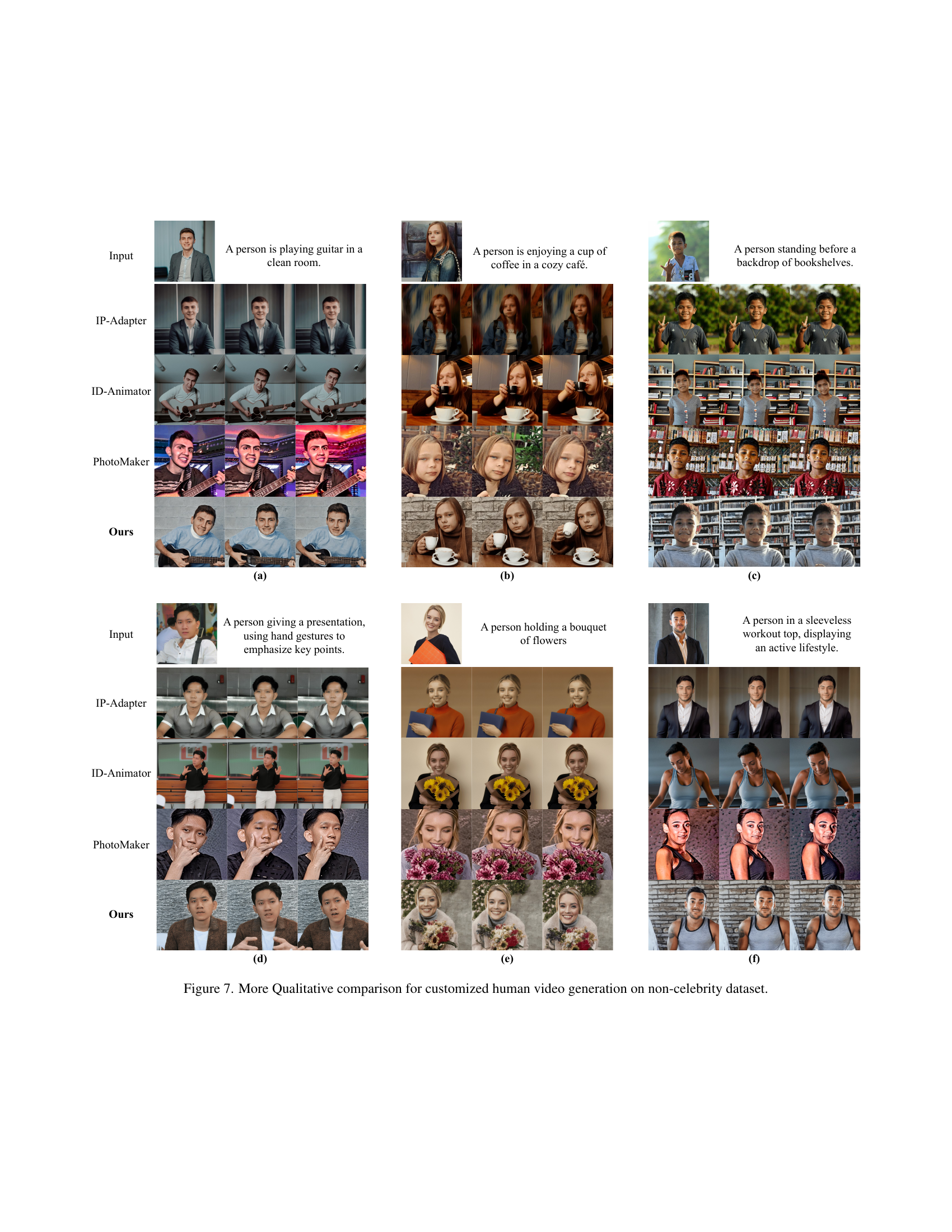

🔼 This figure provides a qualitative comparison of customized human video generation results on a non-celebrity dataset. It showcases the outputs generated by different methods (IP-Adapter, ID-Animator, PhotoMaker, and the proposed VideoMaker approach) for various prompts. The goal is to visually demonstrate the relative performance of each method in terms of subject fidelity, text alignment, and overall video quality when applied to individuals not included in the training data. The comparison highlights the strengths and weaknesses of each approach when dealing with unseen subjects.

read the caption

Figure 7: More Qualitative comparison for customized human video generation on non-celebrity dataset.

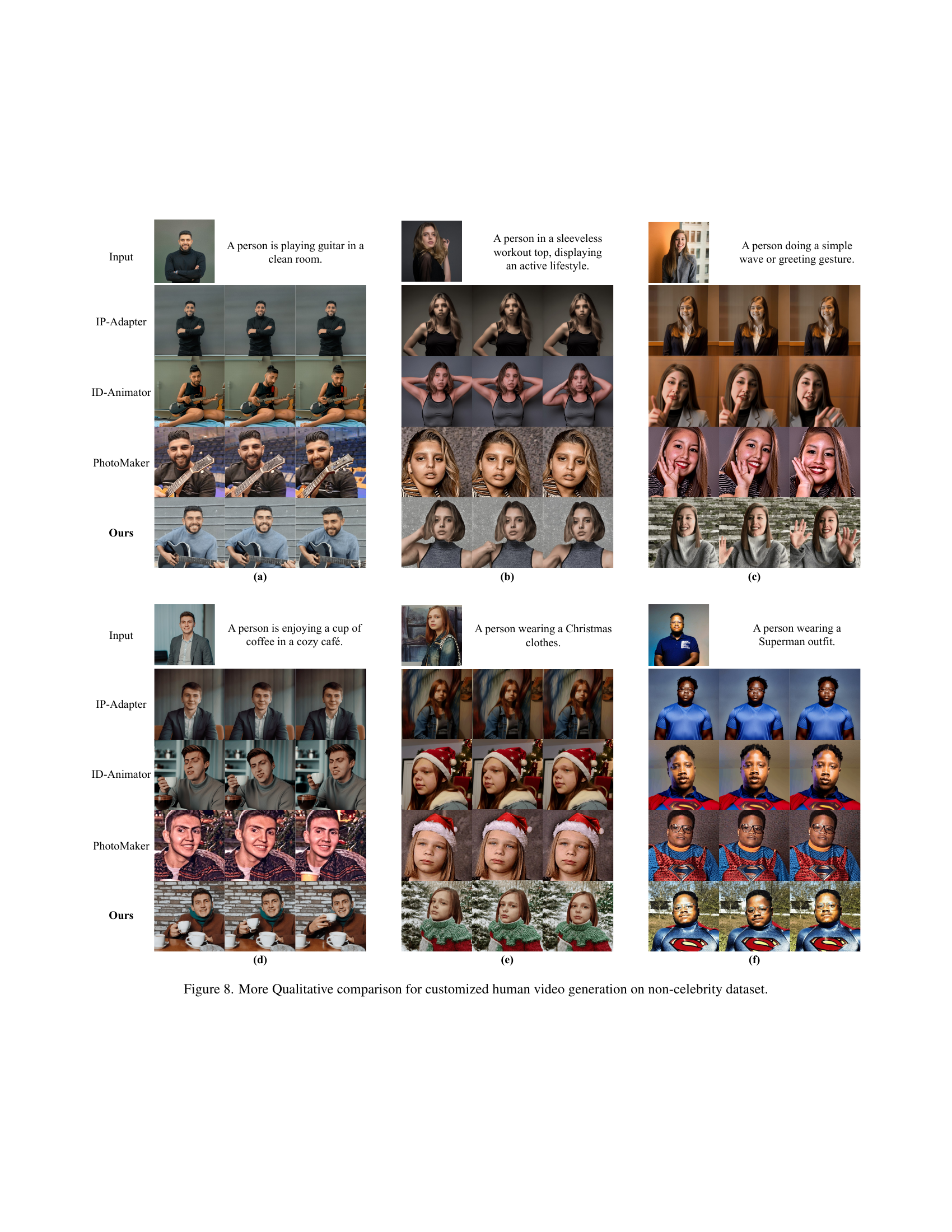

🔼 This figure presents a qualitative comparison of customized human video generation results on a non-celebrity dataset. It showcases the performance of several methods, including IP-Adapter, ID-Animator, PhotoMaker, and the authors’ proposed method (Ours), across various prompts and scenarios. Each row demonstrates results for a specific prompt, providing a visual comparison of video quality, subject fidelity, and overall realism. This comparison helps to highlight the strengths and weaknesses of each approach in generating videos of non-famous individuals, focusing on aspects like facial details, background consistency, and the accurate depiction of clothing and actions.

read the caption

Figure 8: More Qualitative comparison for customized human video generation on non-celebrity dataset.

More on tables

| Method | CLIP-T | CLIP-I | DINO-I | T.Cons. | DD |

|---|---|---|---|---|---|

| VideoBooth | 0.266 | 0.7637 | 0.6658 | 0.9564 | 0.5091 |

| Ous | 0.284 | 0.8071 | 0.7326 | 0.9848 | 0.5132 |

🔼 This table presents a quantitative comparison of VideoMaker against existing methods for customized object video generation. It uses several metrics to evaluate both the overall consistency of the generated videos (CLIP-T, Temporal Consistency, Dynamic Degree) and the fidelity of the subject’s appearance (CLIP-I, DINO-I). Higher scores generally indicate better performance. The results show VideoMaker’s superior performance across all metrics.

read the caption

Table 2: Comparison with the existing methods for customized object video generation

| PISA | GIRL | W/O Cross | Update Motion | SHP | CLIP-T | Face Sim. | CLIP-I | DINO-I | T.Cons. | DD |

|---|---|---|---|---|---|---|---|---|---|---|

| ✓ | 0.2206 | 0.7928 | 0.7966 | 0.6694 | 0.9671 | 0.2725 | ||||

| ✓ | ✓ | 0.2258 | 0.8184 | 0.8484 | 0.7536 | 0.9855 | 0.2750 | |||

| ✓ | ✓ | ✓ | 0.2291 | 0.8454 | 0.8469 | 0.7351 | 0.9747 | 0.2915 | ||

| ✓ | ✓ | ✓ | ✓ | 0.2302 | 0.8563 | 0.8674 | 0.7635 | 0.9823 | 0.3575 | |

| ✓ | ✓ | ✓ | ✓ | ✓ | 0.2586 | 0.8047 | 0.8285 | 0.7119 | 0.9818 | 0.3725 |

🔼 This table presents an ablation study evaluating the impact of different components in the VideoMaker model on customized human video generation. It shows quantitative results (CLIP-T, Face Similarity, CLIP-I, DINO-I, Temporal Consistency, and Dynamic Degree) for several model variations. Each row represents a different configuration, varying the inclusion or exclusion of: Personalized Injection Self-Attention (PISA), Guidance Information Recognition Loss (GIRL), cross-attention between the reference frame and text prompt, updating of motion blocks during training, and subject highlight preprocessing. The results allow for assessing the contribution of each component to overall performance.

read the caption

Table 3: Quantitative results of each component. “PISA” is our Personalized Injection Self Attention, GIRL is our Guidance Information Recognition Loss, “W/O Cross” refers to whether our reference frame interacts with the text prompt, “Update Motion” refers to whether to update the motion block, “SHP” is our subject highlight preprocessing for datasets,

| Category | Prompt |

|---|---|

| Clothing | A person dressed in a crisp white button-up shirt. |

| A person in a sleeveless workout top, displaying an active lifestyle. | |

| A person wearing a sequined top that sparkles under the light, ready for a festive occasion. | |

| A person wearing a Superman outfit. | |

| A person wearing a blue hoodie. | |

| Action | A person holding a book open, reading a book, sitting on a park bench. |

| A person playing an acoustic guitar. | |

| A person laughing with their head tilted back, eyes sparkling with mirth. | |

| A person is enjoying a cup of coffee in a cozy café. | |

| A person watching a laptop, focused on the task at hand. | |

| Accessory | A person wearing a headphones, engaged in a hands-free conversation. |

| A person with a pair of trendy headphones around their neck, a music lover’s staple. | |

| A person with a beanie hat and round-framed glasses, portraying a hipster look. | |

| A person wearing sunglasses. | |

| A person wearing a Christmas hat. | |

| View | A person captured in a close-up, their eyes conveying a depth of emotion. |

| A person framed against the sky, creating an open and airy feel. | |

| A person through a rain-streaked window, adding a layer of introspection. | |

| A person holding a bottle of red wine. | |

| A person riding a horse. | |

| Background | A person is standing in front of the Eiffel Tower. |

| A person with a bustling urban street scene behind them, capturing the energy of the city. | |

| A person standing before a backdrop of bookshelves, indicating a love for literature. | |

| A person swimming in the pool | |

| A person stands in the falling snow scene at the park. |

🔼 This table lists example text prompts used to generate customized human videos. The prompts are categorized by different aspects to control the final video’s content: Clothing (describing the subject’s attire), Action (specifying the subject’s activity), Accessory (detailing additional items the subject might have), View (describing the camera angle or setting), and Background (setting the environment where the subject is situated). Each category contains multiple example prompts, demonstrating the variety of instructions that can be given to the model.

read the caption

Table 1: Evaluation text prompts for customized human video generation.

| Method | CLIP-T | Face Sim. | CLIP-I | DINO-I | T.Cons. | DD |

|---|---|---|---|---|---|---|

| IP-Adapter | 0.2347 | 0.1298 | 0.6364 | 0.5178 | 0.9929 | 0.0825 |

| IP-Adapter-Plus | 0.2140 | 0.2017 | 0.6558 | 0.5488 | 0.9920 | 0.0815 |

| IP-Adapter-Faceid | 0.2457 | 0.4651 | 0.6401 | 0.4108 | 0.9930 | 0.0950 |

| ID-Animator | 0.2303 | 0.1294 | 0.4993 | 0.0947 | 0.9999 | 0.2645 |

| Photomaker* | 0.2803 | 0.2294 | 0.6558 | 0.3209 | 0.9768 | 0.3335 |

| Ours | 0.2773 | 0.6974 | 0.6882 | 0.5937 | 0.9797 | 0.3590 |

🔼 This table compares the performance of VideoMaker against several existing methods for generating customized human videos. The comparison uses a non-celebrity dataset and focuses on customized human video generation. Metrics include CLIP-T (text alignment), Face Similarity, CLIP-I and DINO-I (subject fidelity), Temporal Consistency, and Dynamic Degree (overall video quality). The best and second-best results for each metric are highlighted. Note that PhotoMaker uses a different base model (AnimateDiff SDXL) compared to the others (AnimateDiff SD1.5).

read the caption

Table 2: Comparison with the existing methods for customized human video generation on our non-celebrity dataset. The best and the second-best results are denoted in bold and underlined, respectively. Besides, PhotoMaker [38] is base on AnimateDiff [25] SDXL version.

| Category | Prompt | Category | Prompt |

|---|---|---|---|

| bear | A bear walking through a snowy landscape. | car | A car cruising down a scenic coastal highway at sunset. |

| A bear walking in a sunny meadow. | A car silently gliding through a quiet residential area. | ||

| A bear resting in the shade of a large tree. | A car smoothly merging onto a highway. | ||

| A bear walking along a beach. | A car driving along a desert road. | ||

| A bear fishing in a rushing river. | A car speeding through a muddy forest trail. | ||

| A bear running in the forest. | A car drifting around a sharp corner on a mountain road. | ||

| A bear walking along a rocky shoreline. | A car navigating through a snow-covered road. | ||

| A bear drinking from a clear mountain stream. | A car driving through a tunnel with bright lights. | ||

| A bear standing on its hind legs to look around. | A car driving through a beach. | ||

| A bear running on the grass. | A car driving through a foggy forest road. | ||

| cat | A cat is perched on a bookshelf, silently observing the room below. | dog | A dog is lying on a fluffy rug, its tail curled neatly around its body. |

| A cat is sitting in a cardboard box, perfectly content in its makeshift fortress. | A dog is walking on a street. | ||

| A cat is curled up in a human’s lap, purring softly as it enjoys being petted. | A dog is swimming. | ||

| A cat is circle around a food bowl in a room, patiently waiting for mealtime. | A dog is sitting in a window, watching the raindrops race down the glass. | ||

| A cat is lying on a windowsill, its silhouette framed by the setting sun. | A dog is running. | ||

| A cat is running on the grass. | A dog, a golden retriever, is seen bounding joyfully towards the camera. | ||

| A cat is walking on a street. There are many buildings on both sides of the street. | A dog is seen leaping into a sparkling blue lake, creating a splash. | ||

| A cat is sitting in a window, watching the raindrops race down the glass. | A dog is seen in a snowy backyard. | ||

| A cat is playing with a ball of wool on a child bed. | A dog is seen napping on a cozy rug. | ||

| A cat is playing in the snow, rolling and rolling, snowflakes flying. | A dog is seen playing tug-of-war with a rope toy against a small child. | ||

| elephant | An elephant walking through the jungle. | horse | A horse walking through a dense forest. |

| An elephant crossing a river. | A horse running across a grassy meadow. | ||

| An elephant walking on the grass. | A horse walking along a sandy beach. | ||

| An elephant walking on a road. | A horse running through a shallow stream. | ||

| An elephant walking along a dirt road. | A horse walking on a mountain trail. | ||

| An elephant playing in a mud pit. | A horse running across a desert landscape. | ||

| An elephant walking through a dense jungle. | A horse walking through a quiet village. | ||

| An elephant walking along a sandy beach. | A horse running in an open field. | ||

| An elephant running through a meadow of wildflowers. | A horse walking along a forest path. | ||

| An elephant running across a desert landscape. | A horse running through tall grass. | ||

| lion | A lion running along a savannah at dawn. | panda | A panda walking through a bamboo forest. |

| A lion walking through a dense jungle. | A panda running on a grassy meadow. | ||

| A lion running on a snowy plain. | A panda running through a field of wildflowers. | ||

| A lion running along a rocky coastline. | A panda walking through a snowy landscape. | ||

| A lion walking through a field of sunflowers. | A panda walking through a city park. | ||

| A lion running across a grassy hilltop. | A panda walking in front of the Eiffel Tower. | ||

| A lion walking through a grassland. | A panda wandering through a dense jungle. | ||

| A lion running along a riverbank. | A panda running along a sandy beach. | ||

| A lion walking on a savannah during sunrise. | A panda exploring a cave. | ||

| A lion running on a plain. | A panda is eating bamboo. | ||

| tiger | A tiger running along a savannah at dawn. | tiger | A tiger running across a grassy hilltop. |

| A tiger walking through a dense jungle. | A tiger walking through a grassland. | ||

| A tiger running on a snowy plain. | A tiger running along a riverbank. | ||

| A tiger running along a rocky coastline. | A tiger walking on a savannah during sunrise. | ||

| A tiger walking through a field of sunflowers. | A tiger running on a plain. |

🔼 This table lists example text prompts used to generate customized object videos. The prompts are categorized by object type (bear, car, cat, dog, elephant, horse, lion, panda, tiger) and each category includes multiple prompts describing various actions, settings, or moods to elicit diverse video outputs. The goal is to showcase the versatility of the VideoMaker model in generating videos that accurately reflect the specified text descriptions for a wide range of object and situation variations.

read the caption

Table 3: Evaluation text prompts for customized object video generation.

Full paper#