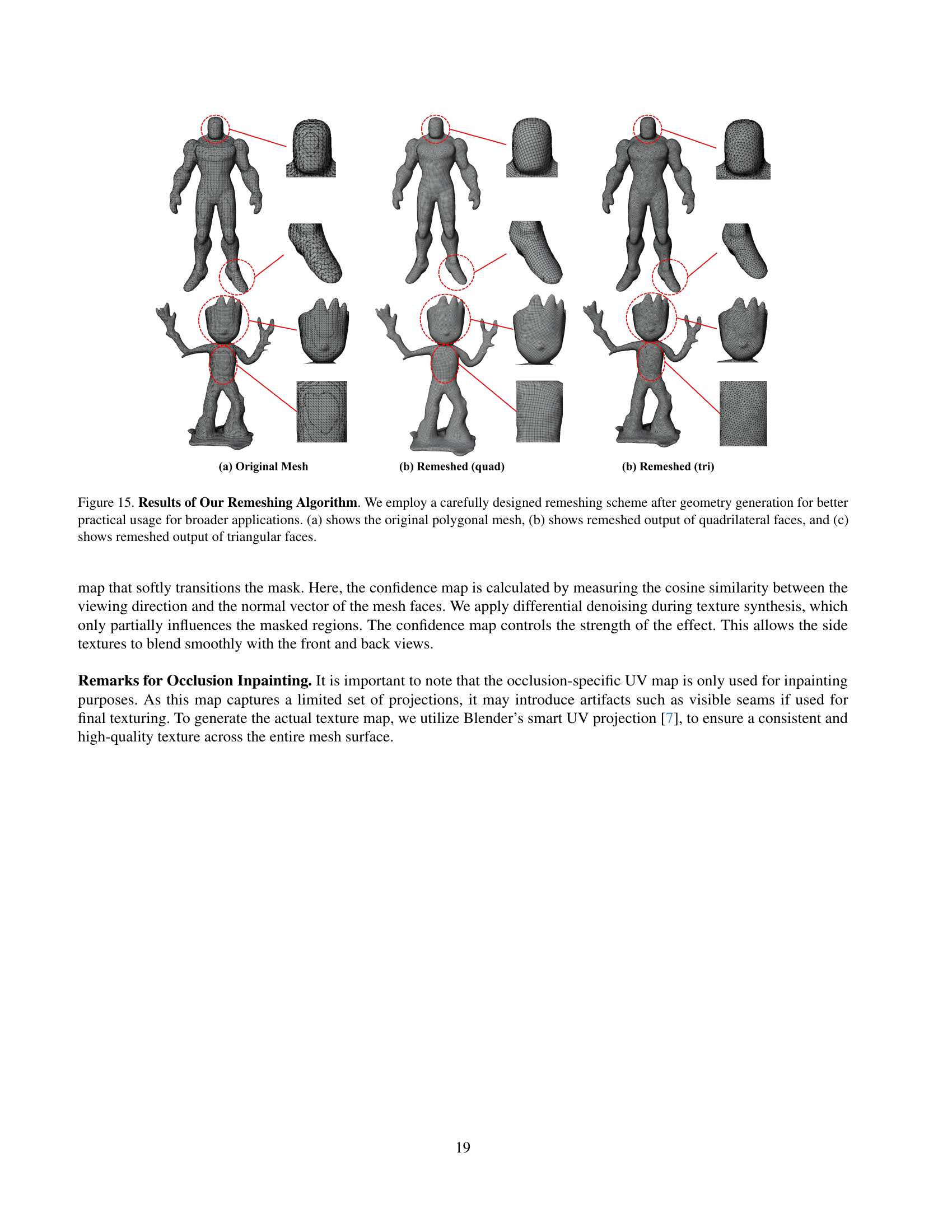

TL;DR#

Generating high-quality 3D assets is crucial for many applications but existing methods are often slow, inefficient, or produce low-fidelity results. Current approaches struggle with multi-view inconsistencies, slow generation times, and issues with surface reconstruction. These challenges hinder the progress of 3D generative models and limit their practical applications.

CaPa tackles these problems with a two-stage “carve-and-paint” approach. It uses a 3D latent diffusion model to generate geometry guided by multi-view inputs, ensuring structural consistency. A novel Spatially Decoupled Attention then synthesizes high-resolution textures. Finally, a 3D-aware occlusion inpainting algorithm fills untextured regions, resulting in high-quality outputs. CaPa excels in both texture fidelity and geometric stability, generating ready-to-use 3D assets in under 30 seconds.

Key Takeaways#

Why does it matter?#

This paper is important because it presents CaPa, a novel and efficient method for generating high-quality 3D assets. This addresses a critical need in various fields, such as gaming, film, and VR/AR, where high-quality 3D models are in high demand. The efficiency of CaPa makes it particularly relevant to researchers working on scalable and practical solutions for 3D content creation, opening up new avenues for research in areas such as geometry and texture generation and improving the overall quality and speed of 3D asset production.

Visual Insights#

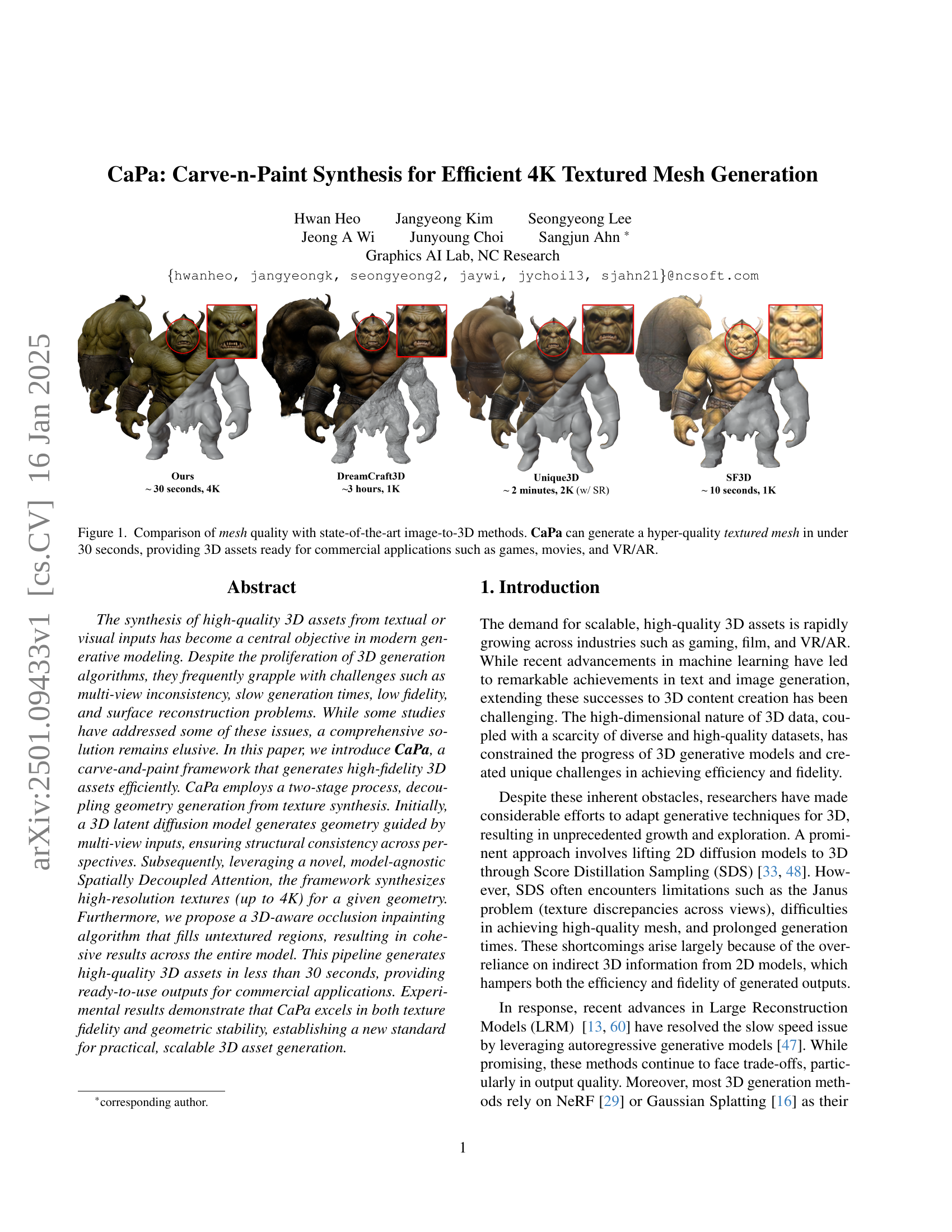

🔼 CaPa, a novel framework for efficient 4K textured mesh generation, is depicted. The pipeline begins with multi-view images as input, which guide a 3D latent diffusion model (trained using ShapeVAE) to generate a 3D geometry. Four orthogonal views of this geometry are then rendered and used as input for a texture synthesis stage employing spatially decoupled attention. This attention mechanism ensures high-quality textures while preventing inconsistencies across different views (the ‘Janus problem’). Finally, a 3D-aware occlusion inpainting algorithm is applied to fill in any missing or incomplete texture regions, resulting in a hyper-quality textured mesh.

read the caption

Figure 1: CaPa pipeline. We first generate 3D geometry using a 3D latent diffusion model. Using the learned 3D latent space with ShapeVAE, we train a 3D Latent Diffusion Model that generates 3D geometries, guided by multi-view images to ensure alignment between the generated shape and texture. After the 3D geometry is created, we render four orthogonal views of the mesh, which serve as inputs for texture generation. To produce a high-quality texture while preventing the Janus problem, we utilize a novel, model-agnostic spatially decoupled attention. Finally, we obtain a hyper-quality textured mesh through back projection and a 3D-aware occlusion inpainting algorithm.

| Method | CLIP (-) | FID | Time |

|---|---|---|---|

| Ours | 86.34 | 47.56 | 30 seconds |

| DreamCraft3D [43] | 77.61 | 75.66 | 3 hours |

| Unique3D [54] | 81.92 | 67.17 | 2 minutes |

| Era3D [18] | 66.81 | 89.18 | 10 minutes |

| SF3D [3] | 70.18 | 84.52 | 10 seconds |

🔼 This table presents a quantitative comparison of CaPa against several state-of-the-art image-to-3D generation methods. The evaluation metrics used are CLIP score (higher is better, indicating better alignment between generated images and input text descriptions) and FID score (lower is better, indicating higher image quality and less divergence from real images). Generation time is also included. The results show that CaPa significantly outperforms other methods in both CLIP and FID scores, while maintaining a reasonable generation time.

read the caption

Table 1: Quantitative results. CaPa outperforms all the competitors by a significant margin in both CLIP score and FID score, with a reasonable generation time.

In-depth insights#

CaPa: 3D Mesh Synthesis#

CaPa, a novel framework for 3D mesh synthesis, presents a compelling approach to generating high-fidelity textured meshes efficiently. Its two-stage process, decoupling geometry and texture synthesis, addresses limitations of previous methods. The geometry generation stage, leveraging a 3D latent diffusion model guided by multi-view inputs, ensures structural consistency. This is followed by a texture synthesis stage, employing spatially decoupled cross-attention for high-resolution (up to 4K) textures, effectively mitigating multi-view inconsistencies. Furthermore, CaPa incorporates a 3D-aware occlusion inpainting algorithm, enhancing texture completeness. The results demonstrate that CaPa surpasses state-of-the-art methods in both texture fidelity and geometric stability, generating high-quality 3D assets in under 30 seconds. This combination of speed, fidelity, and robustness positions CaPa as a significant advancement in practical and scalable 3D asset generation.

Multi-view 3D Diffusion#

Multi-view 3D diffusion models address a critical challenge in 3D asset generation: creating consistent representations from multiple viewpoints. Standard approaches often struggle with inconsistencies, resulting in artifacts like the “Janus problem.” Multi-view methods strive to overcome this by explicitly incorporating information from several camera angles during the diffusion process. This can involve concatenating features from multiple views, using attention mechanisms to relate information across views, or employing specialized architectures that explicitly model 3D structure and view relationships. The advantages are significant: improved consistency across views, more realistic and complete 3D models, and reduced artifacts. However, challenges remain, particularly regarding computational cost and the scalability to high-resolution outputs. Efficiently handling multiple views without a massive increase in parameters is a key research area. The choice of appropriate architecture and the method for integrating multi-view information significantly impact the model’s performance and the quality of generated assets. Furthermore, the availability of large-scale, high-quality multi-view datasets is crucial for training effective multi-view 3D diffusion models. Future research should focus on developing more efficient architectures and exploring innovative data augmentation techniques to address these limitations.

4K Texture Synthesis#

The concept of “4K Texture Synthesis” within the context of a research paper likely refers to the generation of high-resolution textures for 3D models. This is a significant challenge in computer graphics because high-resolution textures require substantial computational resources and memory. The paper likely explores methods to achieve this efficiently, possibly through techniques like generative adversarial networks (GANs) or diffusion models. A key aspect is likely the trade-off between quality, speed, and memory usage. The approach might involve techniques for downsampling textures during training to manage computational needs, followed by upsampling to 4K for final output. Efficiency is crucial; methods which enable rapid 4K texture generation without significant performance compromise would be a major contribution. The research may delve into novel architectures, loss functions, or training strategies to achieve this high-resolution texture synthesis with reduced resource demands and superior quality compared to existing methods. The paper will likely showcase results and compare them to state-of-the-art methods to highlight any significant improvements in quality or performance metrics.

Occlusion Inpainting#

The research paper section on “Occlusion Inpainting” addresses a critical challenge in multi-view 3D reconstruction: filling in missing texture information from occluded regions. The authors propose a novel 3D-aware occlusion inpainting algorithm that surpasses previous approaches by efficiently identifying and addressing untextured areas. Instead of directly using standard 2D inpainting methods, which often struggle with spatial coherence in 3D, the algorithm utilizes k-means clustering to group occluded regions based on their surface normals and spatial coordinates, generating specialized UV maps for each cluster. This approach facilitates a more accurate representation of the occluded regions, enabling the use of a 2D diffusion model for inpainting while preserving surface locality. The resulting cohesive textures significantly improve the overall quality of the generated 3D model. The model-agnostic nature of this approach is also a significant advantage, allowing easy integration with various pre-trained diffusion models. The effectiveness is further highlighted by its superior performance to previous methods. This intelligent approach demonstrates a significant advancement in efficient and high-quality 3D asset generation, especially in handling complex occlusion scenarios.

Future of CaPa#

The future of CaPa hinges on addressing its current limitations while expanding its capabilities. Improving PBR material understanding is crucial; integrating with advanced material-aware diffusion models could significantly enhance realism. Addressing the Janus problem more robustly, perhaps through innovative multi-view consistency techniques beyond spatially decoupled attention, is key. Scaling to even higher resolutions (beyond 4K) while maintaining speed and efficiency will be a significant challenge requiring optimization across all stages of the pipeline. Exploring different mesh representations could potentially improve efficiency and geometry fidelity. Finally, extending CaPa’s functionality to encompass novel applications such as interactive 3D modeling and animation, would demonstrate its versatility and further solidify its place as a leading 3D asset generation framework. This will likely involve investigating more sophisticated methods for handling complex geometries and textural details while keeping the process rapid and user-friendly.

More visual insights#

More on figures

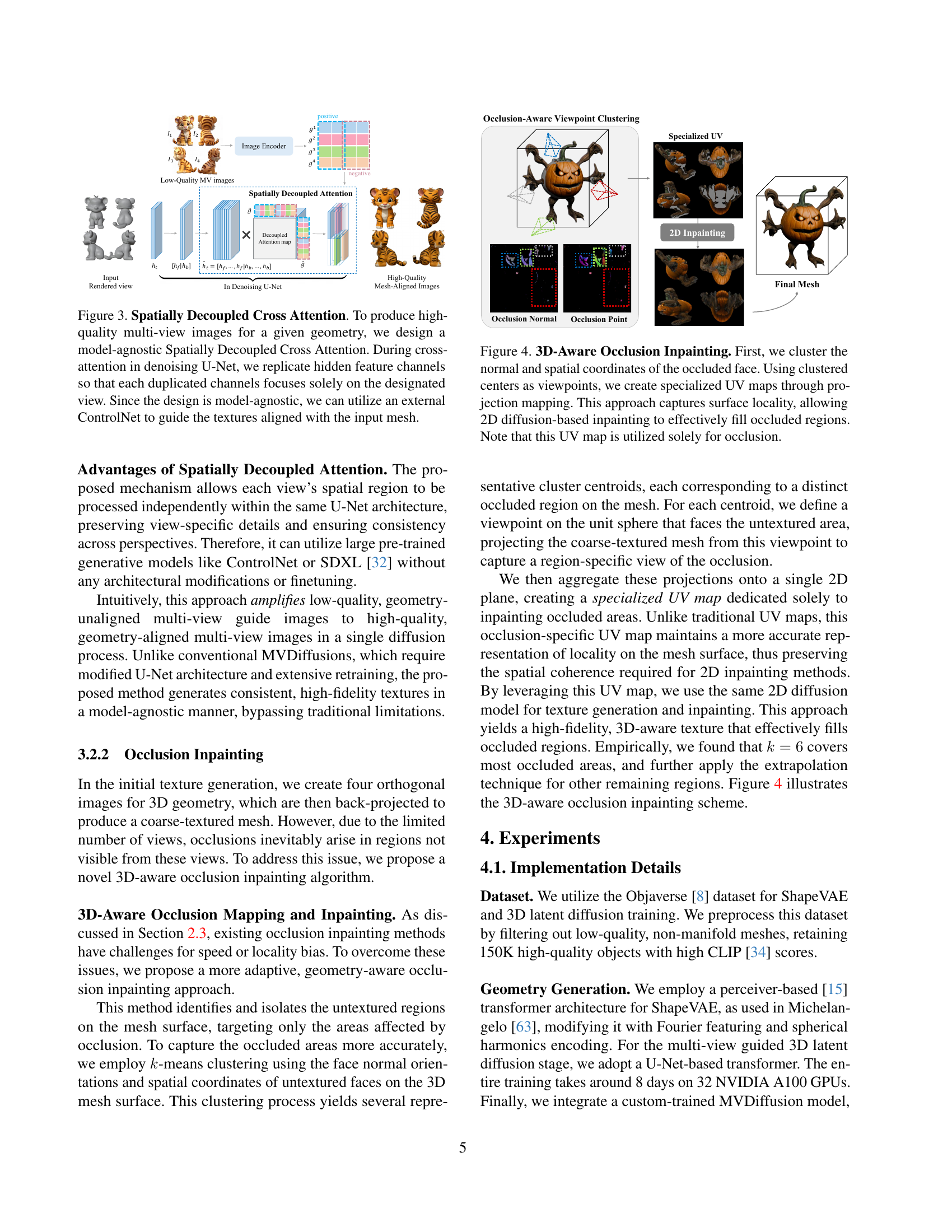

🔼 This figure illustrates the Spatially Decoupled Cross Attention mechanism. The core idea is to improve multi-view texture generation by decoupling the attention process for each view. Instead of a single attention mechanism processing all views simultaneously, this method replicates the hidden feature channels within the denoising U-Net. Each set of duplicated channels then focuses exclusively on a single view’s information. This allows the model to generate more consistent and higher-quality textures across different perspectives without sacrificing efficiency. The model-agnostic nature means it works with existing models like ControlNet, further enhancing texture fidelity by aligning the generated textures with the input geometry.

read the caption

Figure 2: Spatially Decoupled Cross Attention. To produce high-quality multi-view images for a given geometry, we design a model-agnostic Spatially Decoupled Cross Attention. During cross-attention in denoising U-Net, we replicate hidden feature channels so that each duplicated channels focuses solely on the designated view. Since the design is model-agnostic, we can utilize an external ControlNet to guide the textures aligned with the input mesh.

🔼 CaPa uses a novel 3D-aware occlusion inpainting algorithm to address the problem of incomplete textures caused by occlusions in multi-view 3D models. First, it clusters the surface normals and spatial coordinates of occluded faces to identify distinct occluded regions. Then, it uses the cluster centers as viewpoints to generate specialized UV maps that preserve surface locality. Finally, these UV maps are used to guide a 2D diffusion-based inpainting process, effectively filling the occluded regions in the texture. Importantly, these specialized UV maps are only used for inpainting and not the final texture.

read the caption

Figure 3: 3D-Aware Occlusion Inpainting. First, we cluster the normal and spatial coordinates of the occluded face. Using clustered centers as viewpoints, we create specialized UV maps through projection mapping. This approach captures surface locality, allowing 2D diffusion-based inpainting to effectively fill occluded regions. Note that this UV map is utilized solely for occlusion.

🔼 This figure compares CaPa’s texturing results with two other state-of-the-art methods (SyncMVD and FlashTex). It highlights CaPa’s ability to generate high-quality textures that are consistent across multiple views, unlike the other methods which suffer from the ‘Janus problem’ (inconsistent textures across different viewpoints). The Janus problem leads to visible artifacts and inconsistencies in the final 3D model. CaPa effectively addresses the Janus problem by generating textures with consistent identity (ID), resulting in a more visually appealing and coherent 3D model.

read the caption

Figure 4: Comparison of Texturing Method. Unlike prior works, CaPa effectively resolved the Janus problem with consistent ID.

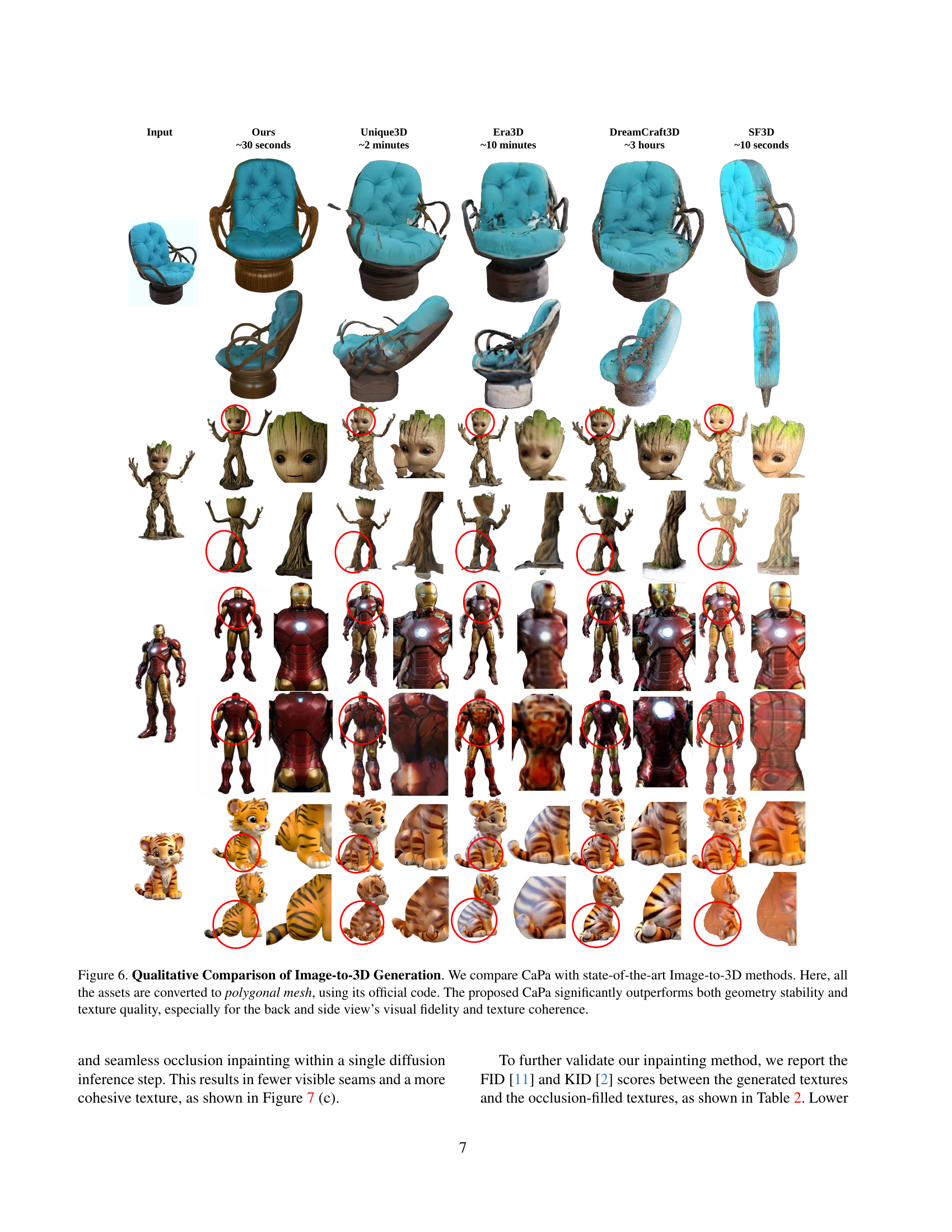

🔼 This figure compares CaPa’s image-to-3D generation results with several state-of-the-art methods. All models’ outputs were converted to polygonal meshes using the original code provided by the respective authors. The comparison highlights CaPa’s superior performance in terms of both geometry stability and texture quality, particularly noticeable in the less-commonly-seen back and side views. CaPa produces meshes with noticeably better visual fidelity and texture coherence.

read the caption

Figure 5: Qualitative Comparison of Image-to-3D Generation. We compare CaPa with state-of-the-art Image-to-3D methods. Here, all the assets are converted to polygonal mesh, using its official code. The proposed CaPa significantly outperforms both geometry stability and texture quality, especially for the back and side view’s visual fidelity and texture coherence.

🔼 This figure presents an ablation study evaluating the impact of three key components of the CaPa model on 3D asset generation: multi-view guidance for geometry generation, spatially decoupled attention for texture synthesis, and 3D-aware occlusion inpainting. Subfigure (a) compares geometry quality with and without multi-view guidance, showcasing improved results with the guidance. Subfigure (b) compares texture quality with and without spatially decoupled attention, highlighting its effectiveness in preventing the Janus problem (texture inconsistencies across views). Finally, subfigure (c) compares the performance of the proposed occlusion inpainting method with a state-of-the-art technique, demonstrating superior results in terms of texture fidelity.

read the caption

Figure 6: Ablation Study. (a) demonstrates that using multi-view guidance significantly increases the geometry quality. (b) shows our Spatially Decoupled Attention effectively resolves the Janus problem, achieving high-fidelity texture coherence, (c) reveals our occlusion inpainting outperforms previous inpainting methods like UV-ControlNet, presented in Paint3D [59].

🔼 Figure 7 demonstrates CaPa’s versatility and scalability by showcasing three examples. (a) shows a standard CaPa-generated output. (b) illustrates how CaPa seamlessly integrates with 3D inpainting by using a text prompt (‘orange sofa, orange pulp’) to modify the existing model, demonstrating its ability to adapt to new styles. (c) highlights CaPa’s model-agnostic nature by applying a pre-trained LoRA (Low-Rank Adaptation) for ‘balloon style’ without requiring additional 3D-specific training, thus showcasing its compatibility with external models and ease of customization.

read the caption

Figure 7: Scalability of CaPa. (a) Original result of CaPa. (b) 3D inpainting result using text-prompt (“orange sofa, orange pulp”). CaPa’s texture generation extends smoothly to 3D inpainting, stylizing the generated asset. (c) CaPa w/ LoRA [14] adaptation. The model-agnostic approach allows CaPa to leverage pre-trained LoRA (balloon style) without additional 3D-specific retraining.

🔼 Figure 8 showcases the results of CaPa when integrated with Physically Based Rendering (PBR) material capabilities. The images demonstrate CaPa’s ability to generate high-quality textures that adapt to different lighting conditions. Three lighting scenarios are shown: ‘city’, ‘studio’, and ’night’, all using Blender’s default settings. The results highlight CaPa’s potential for creating realistic 3D assets with accurate material properties and lighting interactions.

read the caption

Figure 8: Result of the CaPa with PBR Understanding. We demonstrate CaPa’s capability for disentangling physically based rendering (PBR) materials. The figure shows PBR-aware generation results under various lighting conditions: ‘city,’ ‘studio,’ and ‘night,’ using Blender’s default environment settings [7]. As shown, CaPa effectively adapts to different light environments, highlighting its potential for PBR-aware asset generation.

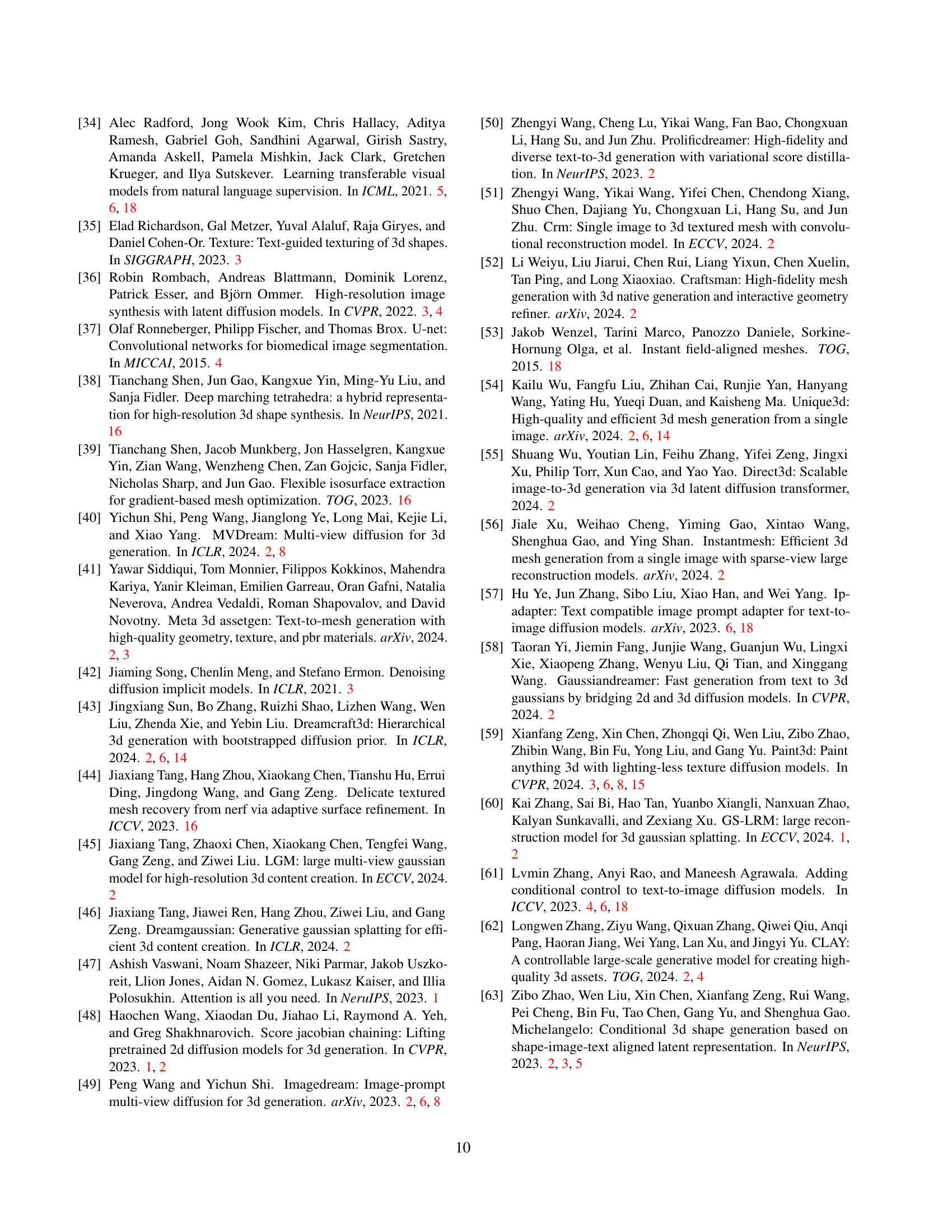

🔼 Figure 10 showcases the diverse range of 3D assets CaPa can generate from text and image inputs. The examples illustrate CaPa’s ability to produce high-quality, detailed models across a variety of object categories, including animals (tigers, dragons), accessories (handbags), characters (cartoon figures, superheroes), and household items. The figure highlights CaPa’s flexibility and its potential for practical applications in 3D asset creation for games, films, and VR/AR experiences.

read the caption

Figure 9: Additional Image-to-3D Results of CaPa. CaPa can generate diverse objects from textual, and visual input. The result demonstrates our diversity across the various categories, marking a significant advancement in practical 3D asset generation methodologies.

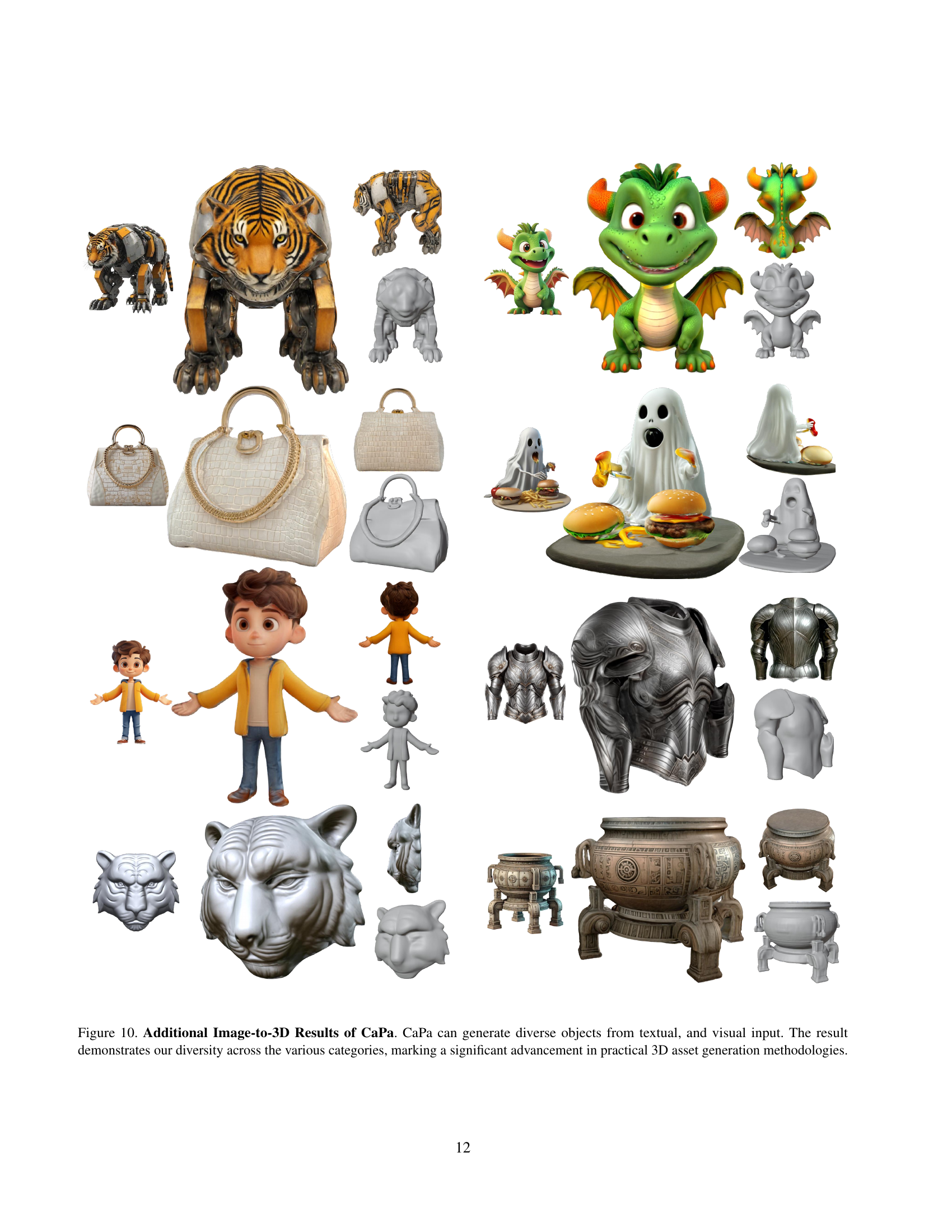

🔼 Figure 10 presents a detailed comparison of 3D model generation results from CaPa against several state-of-the-art methods. The comparison highlights CaPa’s superior performance in terms of both geometry stability and texture quality, particularly noticeable in the back and side views where other methods often exhibit significant degradation. The figure shows multiple views of several different 3D models, allowing for a visual assessment of the quality and consistency across various perspectives. This demonstrates CaPa’s ability to produce high-fidelity 3D assets which are structurally sound and maintain texture detail from different viewpoints.

read the caption

Figure 10: Additional Comparison of Image-to-3D Generation. CaPa significantly outperforms both geometry stability and texture quality, especially for the back and side view’s visual fidelity and texture coherence.

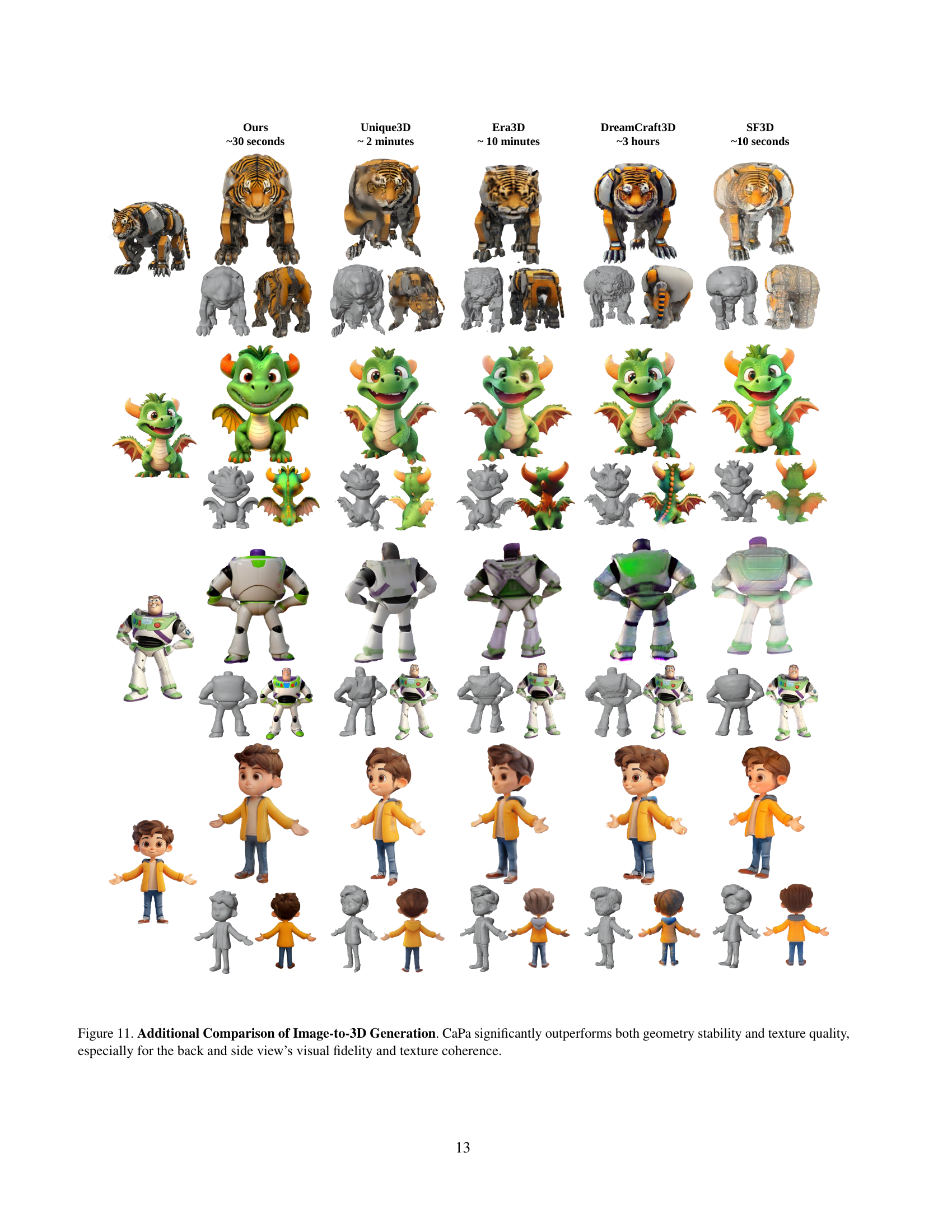

🔼 This figure demonstrates the effectiveness of the proposed spatially decoupled cross-attention mechanism in preventing the Janus artifact, a common issue in multi-view 3D texture generation. Each row shows a comparison of three different approaches for generating textures: (a) using the spatially decoupled cross-attention, (b) without using the spatially decoupled cross-attention (resulting in the Janus artifact), and (c) a mesh rendering of the current view for better visualization. The comparison highlights how the proposed method improves multi-view consistency and eliminates texture discrepancies.

read the caption

Figure 11: Impact of Spatially Decoupled Cross Attention on Janus Artifacts. In this additional figure, We demonstrate the capability of Janus prevention in the proposed spatially decoupled cross-attention mechanism. Each row depicts, (a) with spatially decoupled cross attention, (b) without spatially decoupled cross attention, and (c) a mesh rendering of the current view, respectively.

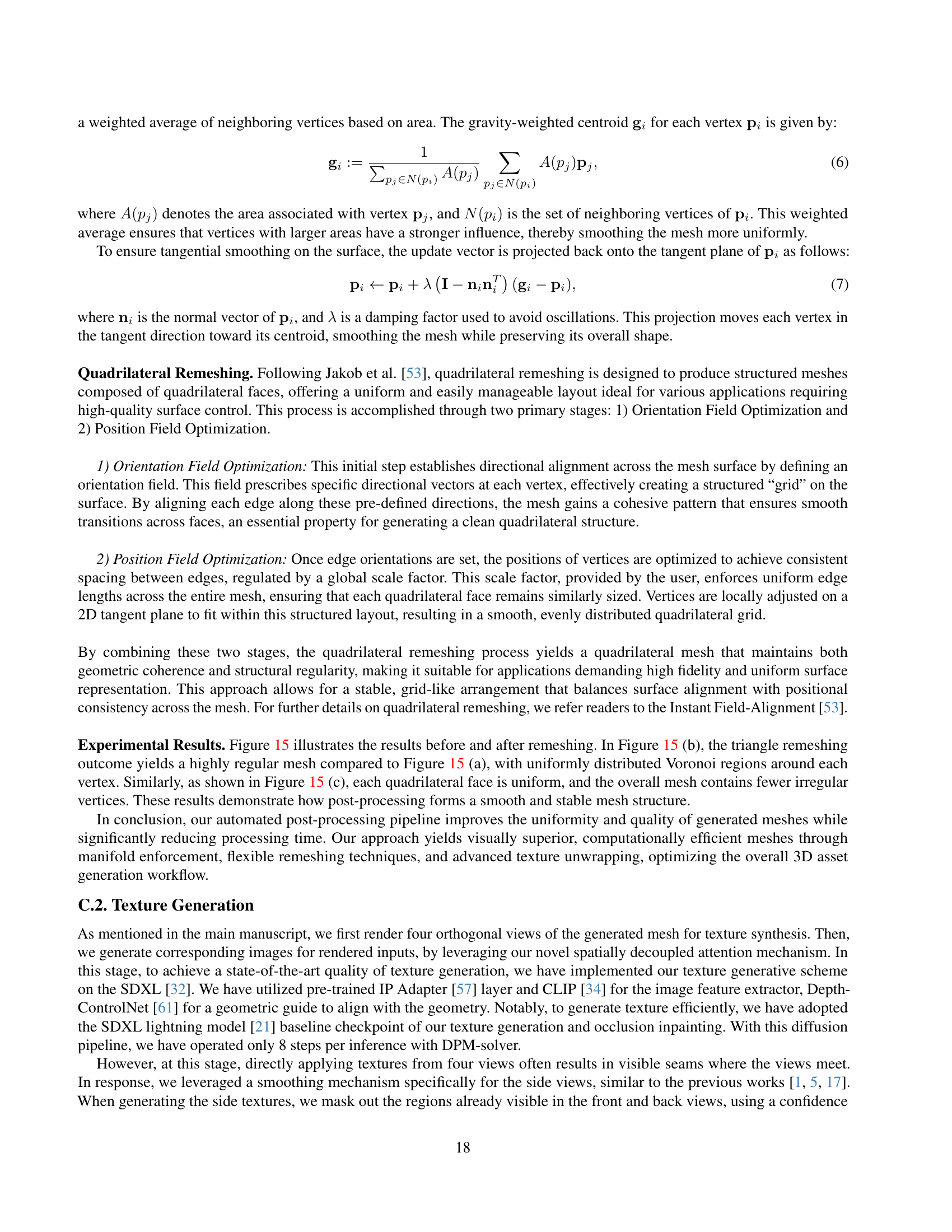

🔼 This figure compares three different occlusion inpainting methods: the authors’ proposed 3D-aware approach, automatic view selection, and UV ControlNet. Each method’s results are shown for various objects with challenging occlusions, demonstrating the strengths and limitations of each approach in terms of texture fidelity, seam visibility, and overall visual coherence.

read the caption

Figure 12: Qualitative results for different occlusion inpainting methods. (a) shows results from our 3D-aware occlusion inpainting method, (b) uses automatic view selection, and (c) employs UV ControlNet.

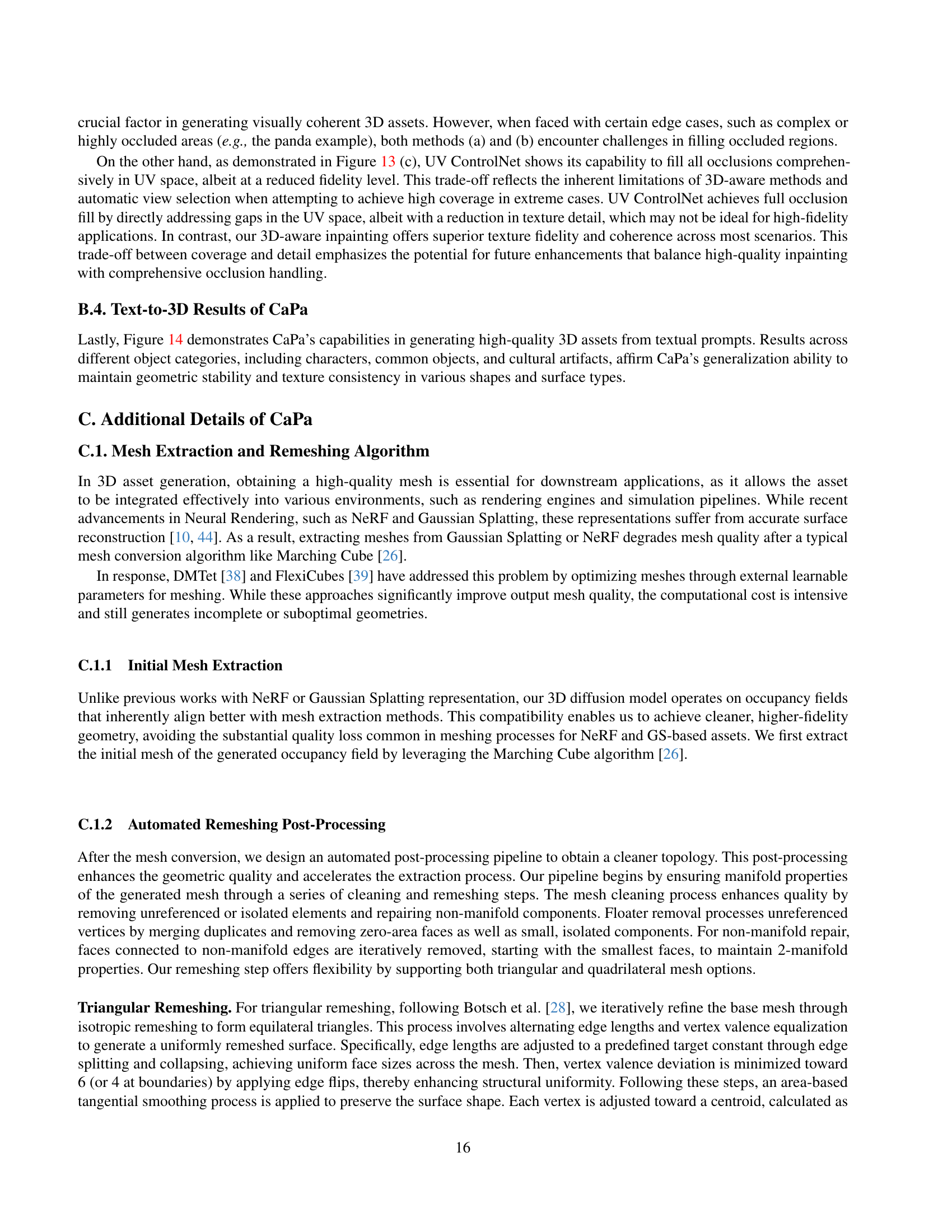

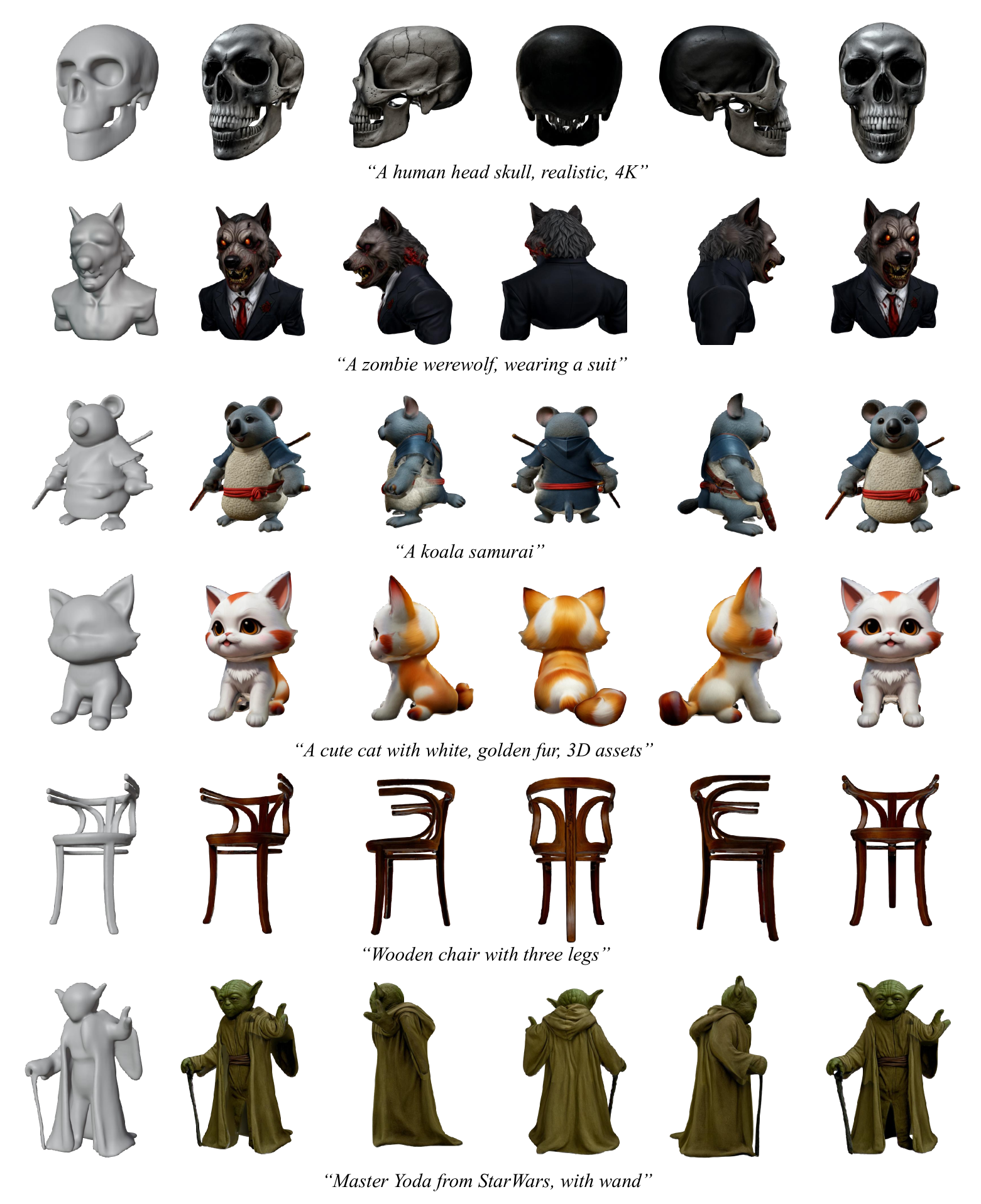

🔼 This figure showcases various 3D models generated by CaPa from text prompts. It highlights CaPa’s ability to create high-resolution textures that seamlessly integrate with well-defined 3D geometries. The examples demonstrate CaPa’s versatility in generating a wide range of objects, from skulls and anthropomorphic animals to furniture and fictional characters. The detailed textures and sharp geometries demonstrate the system’s effectiveness and its capability to produce high-quality 3D assets suitable for various applications.

read the caption

Figure 13: Text-to-3D Results of CaPa. CaPa can generate diverse objects from textual, and visual input. The result underscores CaPa’s strengths in generating high-resolution textures that align with well-defined geometries.

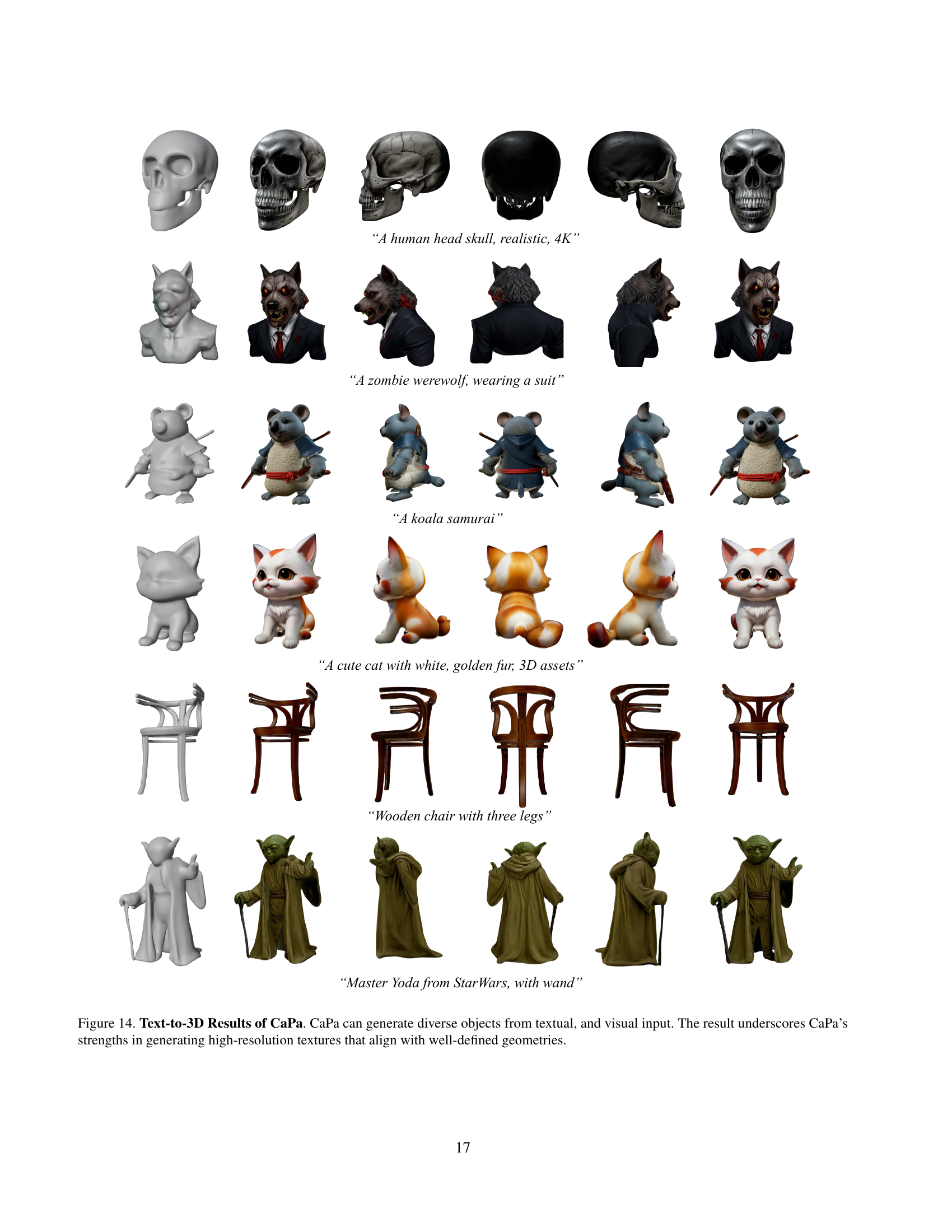

🔼 This figure demonstrates the remeshing process employed in the CaPa framework. The original polygonal mesh (a) is shown, alongside the results after remeshing with quadrilateral faces (b) and triangular faces (c). Remeshing is a crucial step in producing high-quality 3D models suitable for various applications, enhancing the mesh quality and resolving issues such as non-manifold geometry. Quadrilateral remeshing offers a more structured and uniform mesh ideal for texturing and subsequent processing, while triangular remeshing is more flexible. The figure showcases the improved mesh quality and regularity achieved through these remeshing techniques.

read the caption

Figure 14: Results of Our Remeshing Algorithm. We employ a carefully designed remeshing scheme after geometry generation for better practical usage for broader applications. (a) shows the original polygonal mesh, (b) shows remeshed output of quadrilateral faces, and (c) shows remeshed output of triangular faces.

More on tables

| Method | FID | KID | Time |

|---|---|---|---|

| Ours | 55.23 | 13.46 | 5 sec. |

| Automatic View-Selection [5] | 62.31 | 15.83 | 20 sec. |

| UV-ControlNet [59] | 128.71 | 37.38 | 5 sec. |

🔼 This table presents a quantitative comparison of different occlusion inpainting methods, focusing on their ability to restore occluded regions in 3D textures while maintaining semantic consistency and contextual alignment. The results are measured using FID and KID scores, which evaluate the visual fidelity and semantic similarity between the original and inpainted textures. Lower FID and KID scores indicate better performance. The table highlights the superior performance of the proposed 3D-aware occlusion inpainting method compared to alternative approaches, demonstrating its ability to reconstruct occluded areas effectively without introducing significant visual artifacts.

read the caption

Table 2: Quantitative Comparison of Occlusion Inpainting. Our 3D-aware inpainting restores occlusions with minimal semantic drift and improves contextual alignment efficiently.

| Method | CLIP (-) |

|---|---|

| w/ Spatially Decoupled Attention | 85.37 |

| w/o Spatially Decoupled Attention | 81.28 |

🔼 This table presents a quantitative analysis of the Janus effect, a common artifact in multi-view 3D generation where inconsistencies appear between different views of a generated object. The Janus effect is evaluated by calculating the CLIP (Contrastive Language–Image Pre-training) similarity score between the rendered normal maps (representing the surface geometry) and the generated textures. A higher CLIP score indicates better alignment and consistency between the geometry and texture across multiple views, suggesting a reduction in Janus artifacts. The table compares the average CLIP scores obtained with and without the proposed spatially decoupled cross-attention mechanism, demonstrating the effectiveness of this mechanism in mitigating the Janus effect.

read the caption

Table 3: Quantitative analysis of Janus Artifacts, measuring a CLIP score between rendered normals and textures across random views.

Full paper#