TL;DR#

Creating clear and informative visualizations from scientific data is challenging, especially for researchers without strong programming skills. Existing tools are often complex and demand significant expertise, and even experienced users struggle to produce accurate plots. Large language models (LLMs) show promise for automating this process, but their output is often inaccurate and needs iterative debugging.

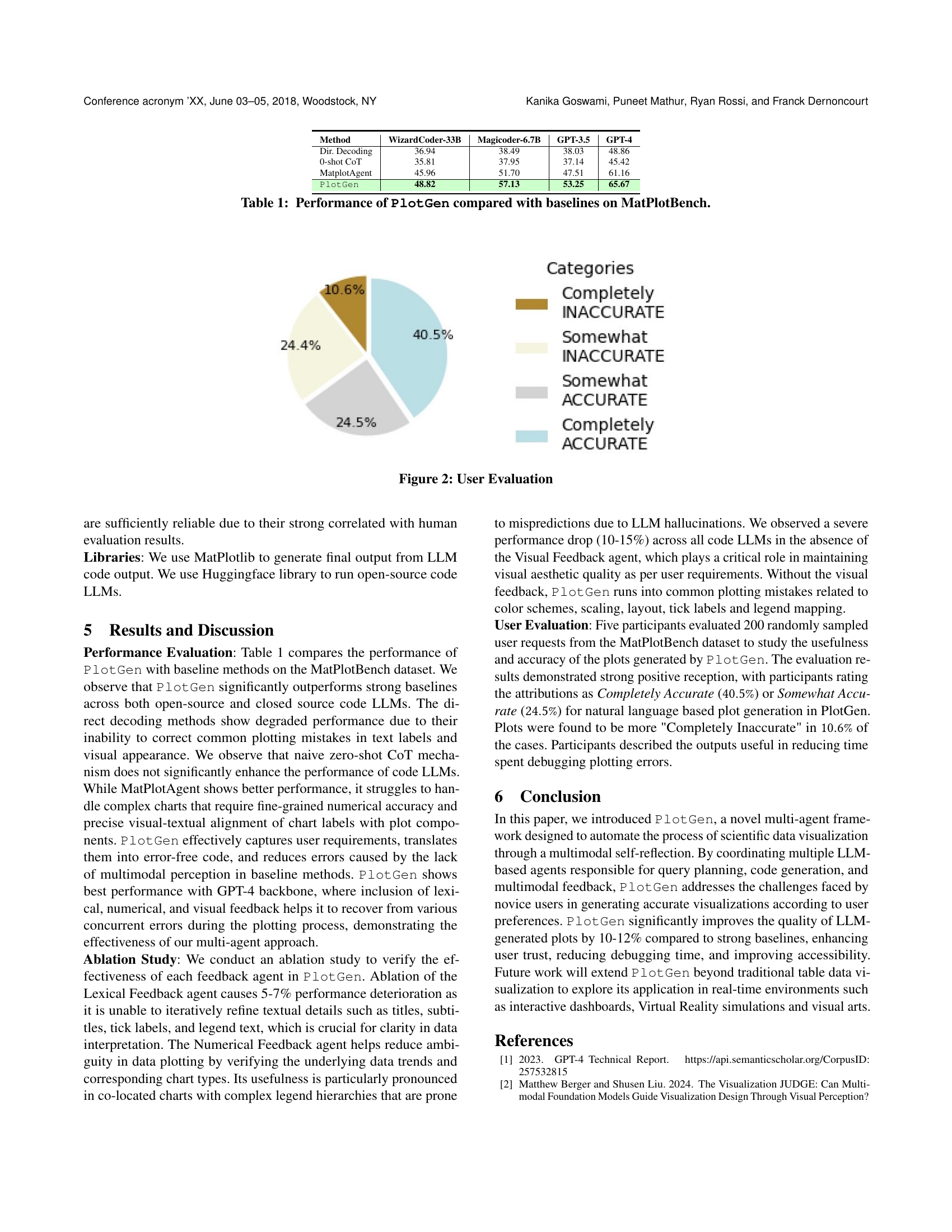

PlotGen tackles these issues with a multi-agent system powered by LLMs. It breaks a complex user request into smaller tasks, generates code, and then uses three feedback agents (numeric, lexical, and visual) to iteratively refine the visualization based on multimodal feedback. This iterative refinement improves accuracy and reduces debugging time, which makes the process easier for novice users. On MatPlotBench, PlotGen outperforms the baseline methods with all four LLM backbones tested (Table 1).

Key Takeaways#

Why does it matter?#

PlotGen is a framework that improves the accuracy and efficiency of automated scientific data visualization. This makes advanced visualization techniques more accessible to novice users and could accelerate research across scientific disciplines. Its combination of multimodal LLMs with a multi-agent design also opens new directions for research in AI-assisted data analysis and visualization.

Visual Insights#

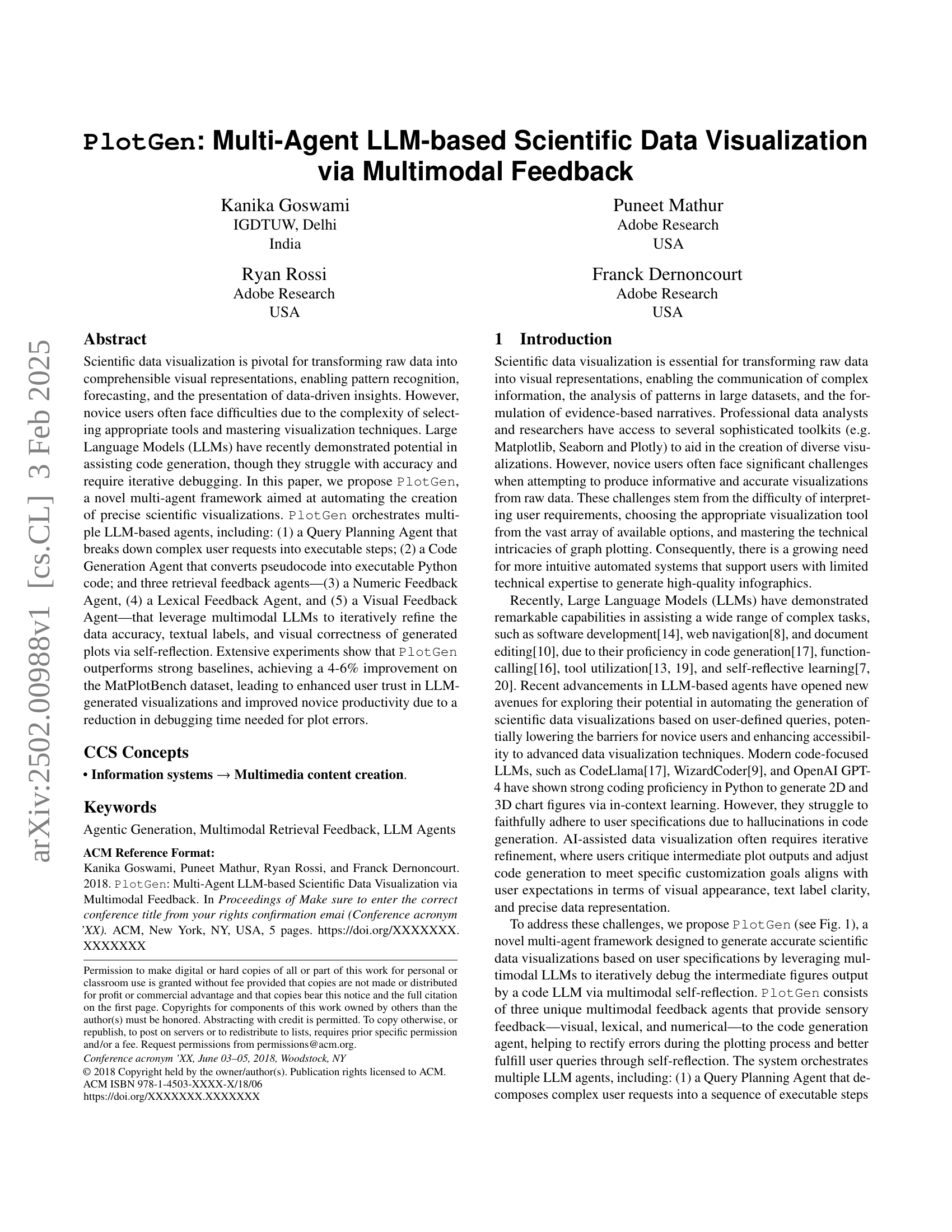

PlotGen uses multiple large language models (LLMs) working together to create visualizations from user requests. A Query Planning Agent breaks the request down into steps. A Code Generation Agent turns those steps into Python code. Three feedback agents (Numeric, Lexical, and Visual) use multimodal LLMs to check the data accuracy, text, and appearance of the generated plot, making iterative improvements via self-reflection.

Figure 1. PlotGen generates accurate scientific data visualizations based on user specifications by orchestrating multimodal LLMs: (1) Query Planning Agent that breaks down complex user requests into executable steps; (2) Code Generation Agent that converts pseudocode into executable Python code; and three code retrieval feedback agents—(3) Numeric Feedback Agent, (4) Lexical Feedback Agent, and (5) Visual Feedback Agent—that leverage multimodal LLMs to iteratively refine the data accuracy, textual labels, and visual aesthetics of generated plots via self-reflection.
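The control flow in Figure 1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not PlotGen's implementation. Every name here (`run_pipeline`, `toy_plan`, `toy_generate`, and the three `toy_*` feedback functions) is hypothetical. The agents are plain functions rather than LLM calls, and the toy feedback agents inspect the code string, whereas the agents described above check the generated plot using multimodal LLMs.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical agent signatures; in a real system each would wrap an LLM call.
Planner = Callable[[str], List[str]]                # request -> steps
Generator = Callable[[List[str], List[str]], str]   # (steps, feedback notes) -> code
FeedbackAgent = Callable[[str], Optional[str]]      # code -> note, or None if satisfied


def run_pipeline(
    request: str,
    plan: Planner,
    generate: Generator,
    feedback_agents: List[FeedbackAgent],
    max_rounds: int = 3,
) -> Tuple[str, List[str]]:
    """Plan, generate, then regenerate until no agent objects or rounds run out."""
    steps = plan(request)
    notes: List[str] = []
    code = generate(steps, notes)
    for _ in range(max_rounds):
        new_notes = []
        for agent in feedback_agents:
            note = agent(code)
            if note is not None:
                new_notes.append(note)
        if not new_notes:
            break  # every feedback agent is satisfied
        notes.extend(new_notes)
        code = generate(steps, notes)
    return code, notes


# Toy stand-ins so the loop can run without any model.
def toy_plan(request: str) -> List[str]:
    return [part.strip() for part in request.split(" and ")]


def toy_generate(steps: List[str], notes: List[str]) -> str:
    lines = [f"# step: {s}" for s in steps]
    lines.append("plt.plot(x, y)")
    if any("title" in n for n in notes):
        lines.append("plt.title('Sales')")
    return "\n".join(lines)


def toy_numeric(code: str) -> Optional[str]:
    return None  # assume the plotted values are correct


def toy_lexical(code: str) -> Optional[str]:
    return None if "plt.title" in code else "add a title"


def toy_visual(code: str) -> Optional[str]:
    return None  # assume the appearance is acceptable


final_code, feedback_log = run_pipeline(
    "load sales data and draw a line chart",
    toy_plan,
    toy_generate,
    [toy_numeric, toy_lexical, toy_visual],
)
```

In this toy run, the lexical stub objects once to the missing title, the generator adds one, and the second round ends the loop because no agent raises further feedback. The `max_rounds` cap is an assumption added here to guarantee termination.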

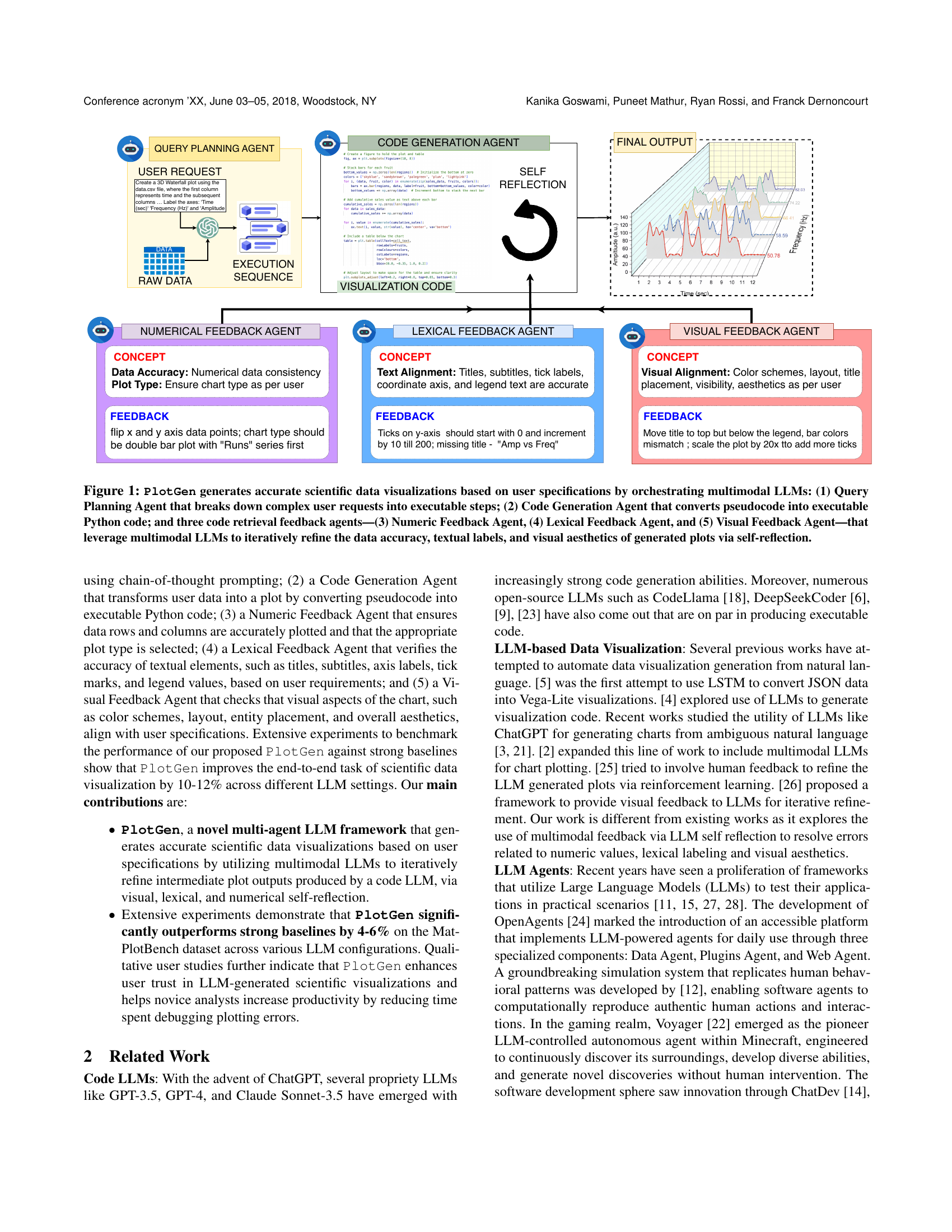

| Method | WizardCoder-33B | Magicoder-6.7B | GPT-3.5 | GPT-4 |
| --- | --- | --- | --- | --- |
| Direct Decoding | 36.94 | 38.49 | 38.03 | 48.86 |
| 0-shot CoT | 35.81 | 37.95 | 37.14 | 45.42 |
| MatPlotAgent | 45.96 | 51.70 | 47.51 | 61.16 |
| PlotGen | 48.82 | 57.13 | 53.25 | 65.67 |

This table compares PlotGen against several baselines on the MatPlotBench dataset. Performance is measured with an LLM-based automatic score on a 0-100 scale. The baselines are Direct Decoding (the LLM generates code directly), 0-shot chain-of-thought (CoT) prompting, and MatPlotAgent (a prior approach that uses GPT-4 and GPT-4V). Scores are reported for four LLMs: WizardCoder-33B, Magicoder-6.7B, GPT-3.5, and GPT-4. PlotGen achieves the highest score with each of them.

Table 1. Performance of PlotGen compared with baselines on MatPlotBench.
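To make the size of the improvement concrete, the gain of PlotGen over the strongest baseline, MatPlotAgent, can be computed directly from the Table 1 scores. The snippet below is only arithmetic on the reported numbers; the `gains` helper and its output layout are illustrative choices, not part of the paper.

```python
# Scores copied from Table 1 (LLM-based automatic score, 0-100).
scores = {
    "WizardCoder-33B": {"MatPlotAgent": 45.96, "PlotGen": 48.82},
    "Magicoder-6.7B": {"MatPlotAgent": 51.70, "PlotGen": 57.13},
    "GPT-3.5": {"MatPlotAgent": 47.51, "PlotGen": 53.25},
    "GPT-4": {"MatPlotAgent": 61.16, "PlotGen": 65.67},
}


def gains(table):
    """Return {llm: (absolute gain in points, relative gain in percent)}."""
    result = {}
    for llm, row in table.items():
        diff = row["PlotGen"] - row["MatPlotAgent"]
        absolute = round(diff, 2)
        relative = round(100 * diff / row["MatPlotAgent"], 1)
        result[llm] = (absolute, relative)
    return result


for llm, (absolute, relative) in gains(scores).items():
    print(f"{llm}: +{absolute:.2f} points ({relative:.1f}% relative)")
```

The absolute gains range from 2.86 points (WizardCoder-33B) to 5.74 points (GPT-3.5), so the improvement is present with every LLM but varies in size.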

Full paper#