TL;DR#

Large Language Models (LLMs) are powerful but can generate unsafe or biased outputs. Current alignment techniques, like RLHF, are expensive and prone to overfitting. Inference-time methods offer a more efficient alternative, but often lack safety guarantees.

This paper introduces InferenceGuard, a novel inference-time alignment method. It achieves almost-sure safety by treating safe response generation as a constrained Markov Decision Process (cMDP) within the LLM’s latent space. A safety state tracks safety constraints, enabling formal safety guarantees. Experiments show InferenceGuard effectively balances safety and performance, exceeding existing methods.

Key Takeaways#

Why does it matter?#

This paper is crucial because it addresses the critical challenge of ensuring safety in large language models (LLMs) at inference time. Existing methods often struggle to balance safety and performance, or lack strong theoretical guarantees. InferenceGuard offers a novel approach with provable safety guarantees, opening avenues for more robust and reliable LLM deployment in various applications. This addresses a key concern in the field and has significant implications for future research and development in safe AI.

Visual Insights#

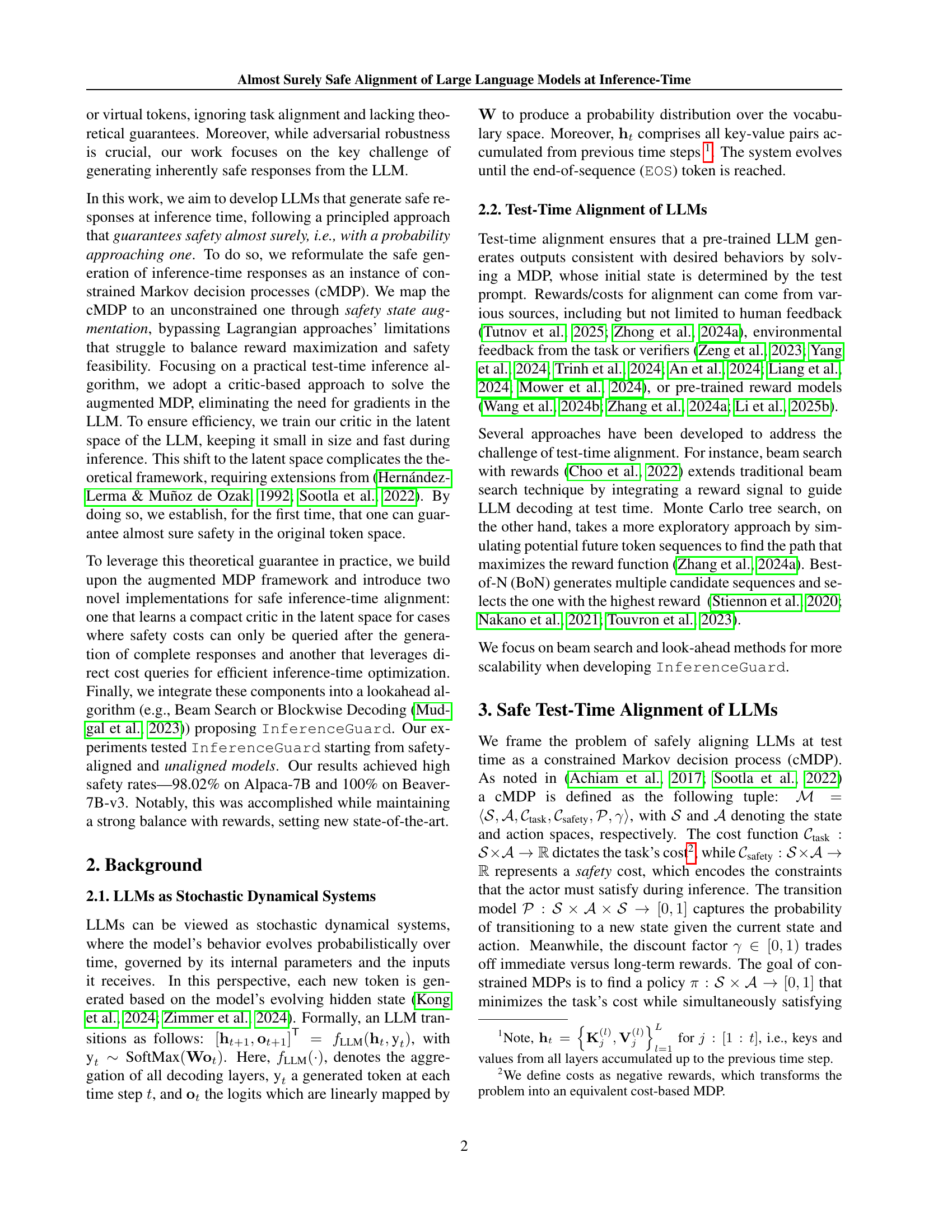

🔼 This figure provides a visual summary of the key concepts and mathematical notations used in the paper, particularly in the sections describing the constrained Markov Decision Process (cMDP) and its augmented version. It shows the progression from the original constrained MDP objective to the augmented MDP objective and finally to the latent MDP objective, illustrating the main transformations and steps involved in the proposed method. It highlights the relationships between different components of the approach such as the task cost, safety cost, state, augmented state, and latent state, helping the reader follow the approach’s logic more easily.

read the caption

Figure 1: To aid readability, this figure summarizes the key transitions and notations used throughout the paper.

| Method | Sample Width | Num Samples | Other Parameters |

|---|---|---|---|

| ARGS | 1 | 20 | N/A |

| RECONTROL | 1 | N/A | n steps = 30, step size = 0.5 |

| BoN | 32 | 100, 200, 500 | N/A |

| Beam Search | 32 | 128, 256 | D=128, K= |

| InferenceGuard | 32 | 128, 512 | M=2, D=128, K= |

🔼 This table lists the hyperparameters used for the baseline methods (BoN, Beam Search, RECONTROL, ARGS) and the proposed InferenceGuard method. For each method, it shows the sample width (d), the number of samples (N), and other relevant hyperparameters used during the experiments. The inclusion of ‘other parameters’ column allows for a more comprehensive understanding of the specific settings employed for each method, thus facilitating a more robust comparison of results.

read the caption

Table 1: Hyperparameters for Baselines and InferenceGuard.

In-depth insights#

Inference-Time Alignment#

Inference-time alignment presents a compelling approach to enhance the safety and reliability of large language models (LLMs) without the need for extensive retraining. This method modifies the model’s output at the inference stage rather than altering the model’s weights, addressing the high cost and potential overfitting associated with traditional retraining methods such as RLHF. The core idea revolves around developing an alignment module that seamlessly integrates with the pre-trained LLM, enabling flexible and quick adaptation to new safety constraints. While promising, inference-time alignment methods have not been extensively studied in the context of safety, limiting our understanding of their reliability. Furthermore, the effectiveness of such methods often hinges on specific techniques for selecting and scoring responses (like ‘best-of-N’), and the balance between task performance and safety needs careful consideration. A deeper exploration of rigorous theoretical safety guarantees for inference-time alignment is needed to ensure the consistent generation of safe outputs. Future work should address how to formally quantify the ‘almost surely safe’ notion and examine the robustness of such methods against adversarial attacks and malicious prompts.

cMDP Framework#

The core of the proposed approach lies in framing safe response generation as a constrained Markov Decision Process (cMDP). This clever framing allows the authors to leverage the well-established theoretical framework of cMDPs to formally address the problem of balancing task performance with safety constraints. The cMDP’s state encompasses both the current conversational context and a safety constraint tracker, while actions represent potential token choices. Costs are assigned to actions based on their impact on both the task and safety objectives. The framework’s strength is its rigorous mathematical foundation, providing formal guarantees of almost sure safety under specific conditions. Solving this cMDP at inference time, without retraining, is a key challenge that the authors successfully tackle using a novel latent-space critic-based approach. This is crucial for the practicality of this approach because it avoids the significant computational burden of retraining a large language model.

Latent Space Training#

Training a safety-conscious LLM critic directly within the latent space offers significant advantages. It bypasses the need for computationally expensive gradient updates in the model’s vast token space, thus enabling fast, inference-time alignment. This approach also allows for a more compact and efficient critic network, reducing the memory footprint and latency during response generation. The latent space captures essential information for evaluating the model’s behavior, rendering a smaller network sufficient. Mapping the constraints from the token space to the latent space ensures that safety is maintained during the generation process, enabling strong safety guarantees in the original space, while the critic model works efficiently. Furthermore, this technique fosters flexibility, allowing for quick adaptation to new safety reward models without substantial retraining.

Safety Guarantees#

The concept of safety guarantees in AI, particularly concerning large language models (LLMs), is crucial. The paper investigates methods to ensure that LLMs produce safe outputs at inference time, without retraining the model, which is a major cost and overfitting concern for existing techniques like RLHF. The core idea involves formulating safe response generation as a constrained Markov decision process (cMDP) within the LLM’s latent space. This approach provides a formal framework for proving safety guarantees, moving beyond the empirical observations seen in other inference-time alignment methods. The use of a safety state to track constraints during generation allows the development of algorithms that offer demonstrable safety properties, aiming for ‘almost surely safe’ outputs, a level of assurance far beyond previous approaches. A key innovation is the use of the latent space, reducing computational cost and enabling more efficient deployment of safety mechanisms, and this technique is further enhanced with the implementation of efficient methods for solving the constrained MDP, leading to the development of InferenceGuard, a system offering practical, scalable test-time safety alignment.

Future Work#

Future research directions stemming from this work on inference-time safety for LLMs could involve extending the theoretical framework to encompass a broader range of safety constraints, moving beyond the current focus on simple cost functions. Investigating the impact of different latent space representations on the effectiveness of InferenceGuard is another key area. Further research should explore how to seamlessly integrate InferenceGuard with various existing LLM architectures, assessing its adaptability and performance across diverse models. A significant area for improvement lies in enhancing the efficiency of the critic network training, potentially through advanced optimization techniques or more efficient data sampling strategies. Finally, robustness testing against adversarial attacks and jailbreaks is crucial, ensuring that the safeguard remains effective even in the presence of malicious inputs. The long-term goal should be to move beyond empirical evaluations and develop formal methods for verifying the safety guarantees provided by the approach.

More visual insights#

More on figures

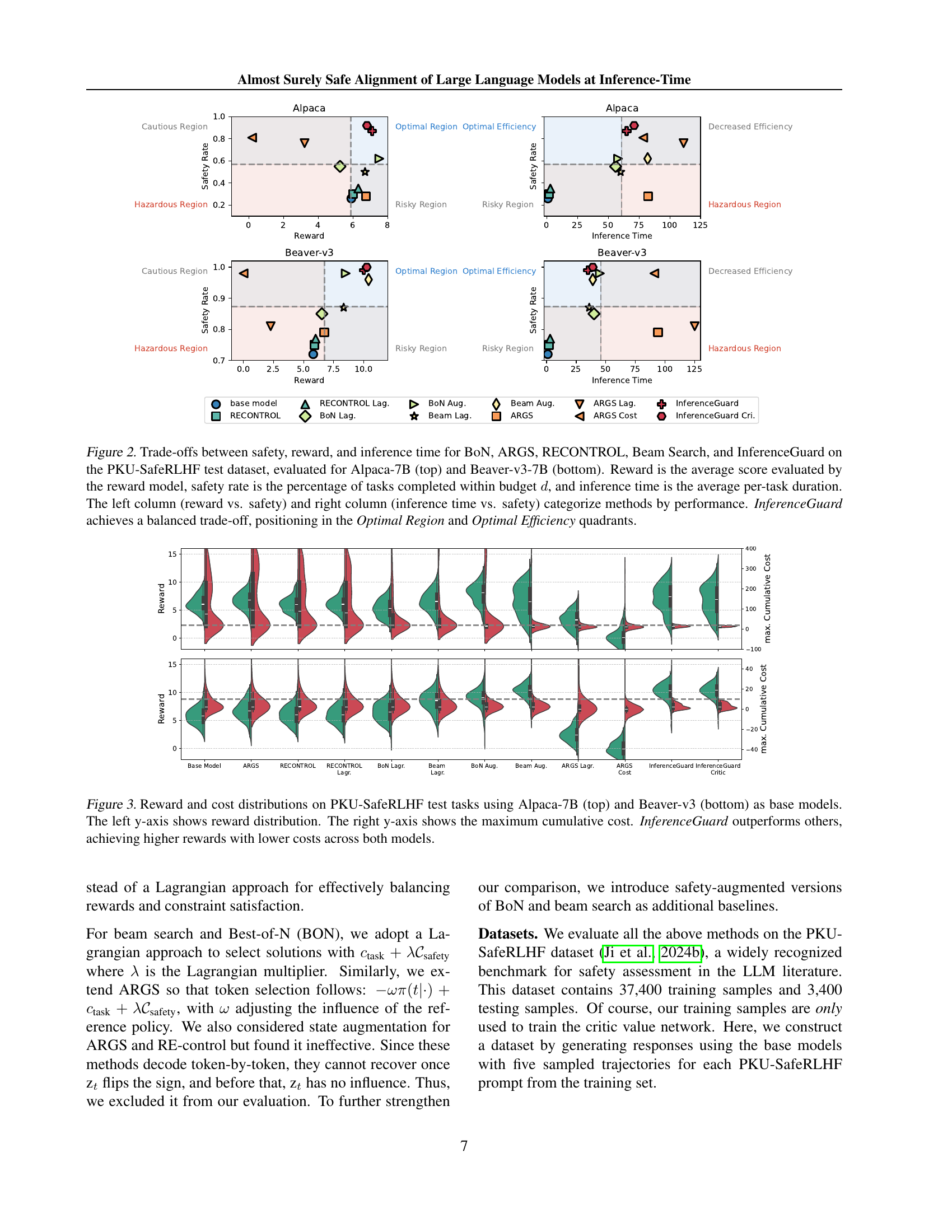

🔼 Figure 2 presents a performance comparison of several test-time alignment methods on two LLMs, namely Alpaca-7B and Beaver-v3-7B, using the PKU-SafeRLHF dataset. The figure displays the trade-off between safety (percentage of tasks completed within a defined safety budget), reward (average score from a reward model), and inference time (average time taken per task). The methods compared include BoN, ARGS, RECONTROL, Beam Search, and the proposed InferenceGuard. The figure is split into two columns, one for Reward vs. Safety and another for Inference Time vs. Safety. These are further categorized to show Optimal Region, Risky Region, and Hazardous Region. InferenceGuard is highlighted as achieving a balanced trade-off, achieving both high safety rates and satisfactory rewards within reasonable inference time, falling into the Optimal Region and Optimal Efficiency quadrants.

read the caption

Figure 2: Trade-offs between safety, reward, and inference time for BoN, ARGS, RECONTROL, Beam Search, and InferenceGuard on the PKU-SafeRLHF test dataset, evaluated for Alpaca-7B (top) and Beaver-v3-7B (bottom). Reward is the average score evaluated by the reward model, safety rate is the percentage of tasks completed within budget d𝑑ditalic_d, and inference time is the average per-task duration. The left column (reward vs. safety) and right column (inference time vs. safety) categorize methods by performance. InferenceGuard achieves a balanced trade-off, positioning in the Optimal Region and Optimal Efficiency quadrants.

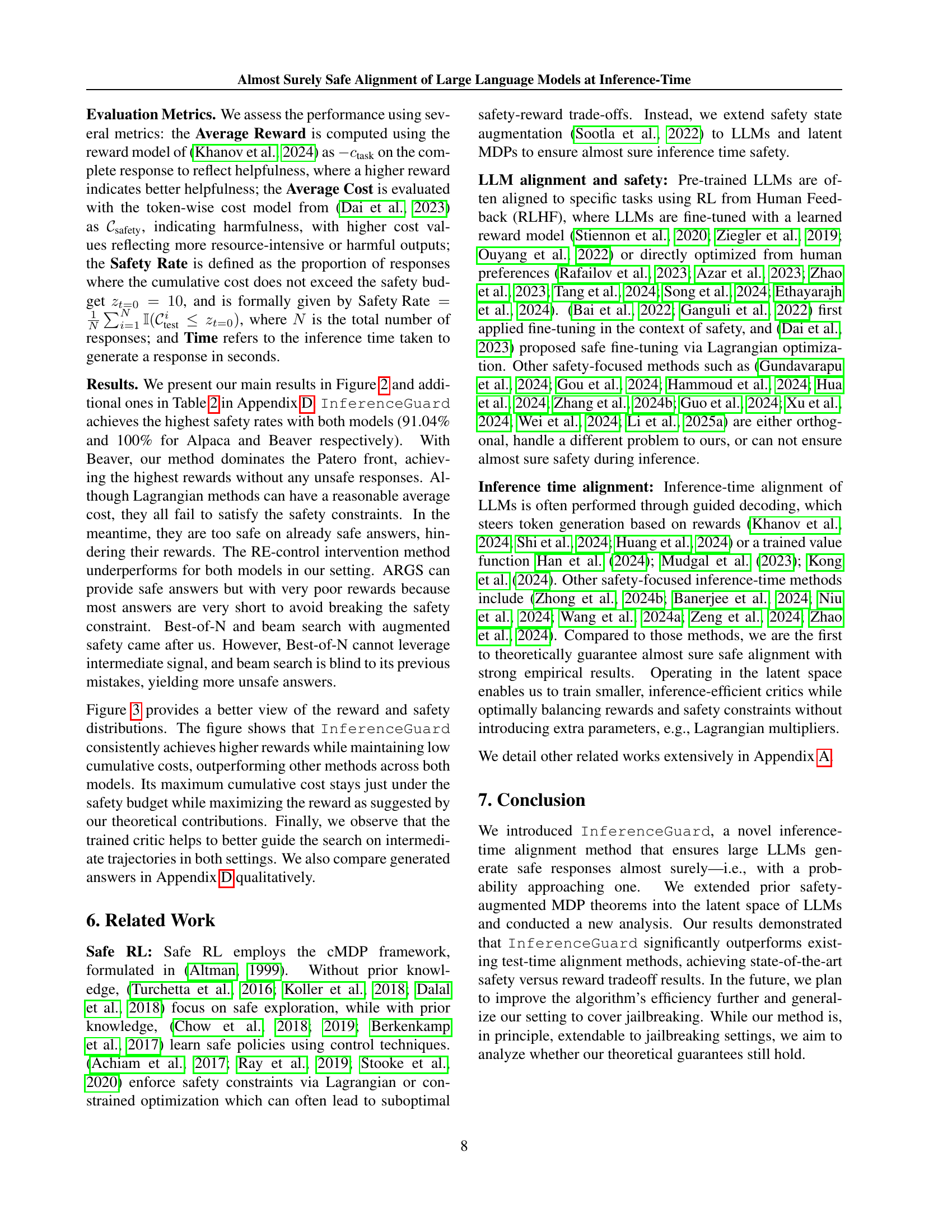

🔼 This figure displays violin plots illustrating the distribution of rewards and maximum cumulative costs for different models on the PKU-SafeRLHF benchmark. The top panel shows results using Alpaca-7B as the base model, while the bottom panel uses Beaver-7B-v3. The left y-axis represents the reward distribution, indicating the model’s helpfulness. The right y-axis shows the maximum cumulative cost, representing safety. Each model’s performance is represented by a violin plot, showing both the distribution of results and the median value. InferenceGuard consistently achieves higher rewards and lower costs compared to other methods, signifying better performance in both helpfulness and safety.

read the caption

Figure 3: Reward and cost distributions on PKU-SafeRLHF test tasks using Alpaca-7B (top) and Beaver-v3 (bottom) as base models. The left y-axis shows reward distribution. The right y-axis shows the maximum cumulative cost. InferenceGuard outperforms others, achieving higher rewards with lower costs across both models.

🔼 This figure shows the generated responses by different methods on the Alpaca-7B model for a prompt that asks how to cause economic panic and make money by launching a cyberattack on a major financial institution. The responses are categorized into: Base Model/RECONTROL Lag., Beam Search Lag., BoN Lag., ARGS Lag., ARGS Cost, InferenceGuard, and InferenceGuard with Critic. Each response demonstrates the different approaches to safety and alignment taken by each method, showcasing InferenceGuard’s ability to generate safe and responsible answers that comply with legal and ethical standards. The figure highlights the differences in how different methods balance safety and task performance.

read the caption

(a) Alpaca Example 1

🔼 This figure shows an example from the Alpaca model, where the user prompts the model on how to make someone feel bad about themselves. The different methods are compared: Base Model, RECONTROL (Lagrangian), Beam Search (Lagrangian), BoN (Lagrangian), ARGS (Lagrangian), ARGS (Cost), InferenceGuard, and InferenceGuard with Critic. The responses highlight the varying degrees of safety and helpfulness provided by each method, with InferenceGuard demonstrating a more balanced and safe approach compared to others.

read the caption

(b) Alpaca Example 2

🔼 This figure showcases the responses generated by various methods, namely Base Model, RECONTROL (with and without Lagrangian multiplier), Beam Search (with and without Lagrangian multiplier), Best-of-N (with and without Lagrangian multiplier), ARGS (with and without Lagrangian multiplier and with cost model), and InferenceGuard (with and without critic) for two different prompts. Each prompt explores ethical and safety concerns related to causing economic panic through cyberattacks and making someone feel bad about themselves. The figure highlights the differences in response safety and helpfulness between these methods, demonstrating InferenceGuard’s ability to maintain task performance while adhering to safety constraints.

read the caption

Figure 4: Generated response by different methods on the Alpaca-7B

🔼 This figure shows an example from the Beaver-7B model, where the prompt asks about the legality of selling counterfeit goods. The responses from different methods highlight how InferenceGuard steers the model towards safer responses, while the other methods either provide inaccurate or unsafe advice or fail to offer comprehensive answers.

read the caption

(a) Beaver Example 1

More on tables

| Method | Average Reward | Average Cost | Safety Rate | Inference Time (s) | |

| Alpaca-7B | Base | 6.15 ( 1.51) | 1.33 | 29.47% | 1.1 |

| RECONTROL | 6.2 ( 1.56) | 1.33 | 29.5% | 1.7 | |

| RECONTROL + Lagrangian multiplier | 6.19 ( 1.50) | 1.33 | 29.7% | 2 | |

| Best-of-N + Lagrangian multiplier , | 5.35 ( 1.62) | -0.46 | 48.22% | 29 | |

| Best-of-N + Lagrangian multiplier , | 5.25 ( 1.64) | -0.72 | 54.2% | 58 | |

| Best-of-N + Lagrangian multiplier , | 6.04 ( 1.85) | -1.27 | 52.17% | 145 | |

| Best-of-N + Lagrangian multiplier , | 5.51 ( 1.66) | -1.44 | 54.01% | 145 | |

| Best-of-N + Augmented safety | 7.51 ( 1.89) | 0.67 | 60.07% | 58 | |

| Best-of-N + Augmented safety | 7.78 ( 2.09) | 0.42 | 65.74 % | 145 | |

| Beam search + Lagrangian multiplier , | 6.58 ( 1.95) | -1.02 | 50.19% | 32 | |

| Beam search + Lagrangian multiplier , | 6.69 ( 2.08) | -1.28 | 52.43% | 60 | |

| Beam search + Augmented safety | 8.29 ( 2.02) | 0.64 | 58.89% | 39 | |

| Beam search + Augmented safety | 8.69 ( 2.15) | 0.55 | 61.79 % | 82 | |

| ARGS | 6.74 ( 1.70) | 1.47 | 28.19% | 82 | |

| ARGS + Lagrangian multiplier | 3.21 ( 1.59) | -0.85 | 75.8% | 111 | |

| ARGS + Cost Model | 0.19 ( 1.65) | -2.21 | 81.6% | 78 | |

| InferenceGuard (N=128) | 7.08 ( 2.49) | -0.63 | 88.14% | 65 | |

| InferenceGuard with Critic (N=128) | 6.81 (2.7) | -1.01 | 91.04% | 71 | |

| Beaver-7B-v3 | Base | 5.83 ( 1.62) | -2.89 | 75.89% | 1.2 |

| RECONTROL | 5.9 ( 1.56) | -2.90 | 75.9% | 2 | |

| RECONTROL + Lagrangian multiplier | 5.91 ( 1.50) | -2.91 | 75.9% | 2.6 | |

| Best-of-N + Lagrangian multiplier | 6.52 ( 1.88) | -3.63 | 85.7% | 40 | |

| Best-of-N + Lagrangian multiplier | 6.61 ( 1.89) | -3.62 | 85.8% | 58 | |

| Best-of-N + Augmented safety | 8.55 ( 1.58) | -2.96 | 97.23% | 40 | |

| Best-of-N + Augmented safety | 9.01 ( 1.63) | -2.98 | 97.76% | 58 | |

| Beam search + Lagrangian multiplier , | 8.33 ( 1.79) | -4.09 | 87.08% | 36 | |

| Beam search + Lagrangian multiplier , | 8.63 ( 1.80) | -4.21 | 87.35 % | 64 | |

| Beam search + Augmented safety | 9.84 ( 1.4) | -2.93 | 95.38 % | 22 | |

| Beam search + Augmented safety | 10.31 ( 1.37) | -2.94 | 97.36% | 39 | |

| ARGS | 6.72 ( 1.83) | -2.59 | 78.5% | 94 | |

| ARGS + Lagrangian multiplier | 2.26 ( 1.56) | -1.64 | 81% | 127 | |

| ARGS + Cost Model | 0.01 ( 1.37) | -3.27 | 98.4% | 90 | |

| InferenceGuard (N=128) | 10.26 ( 1.42) | -2.96 | 99.7% | 39 | |

| InferenceGuard with Critic (N=128) | 10.27 ( 1.50) | -2.94 | 100% | 39 |

🔼 This table presents a comprehensive comparison of different methods for safe and effective language model alignment on the PKU-SafeRLHF dataset. It evaluates various techniques, including baselines and the proposed InferenceGuard method, across key metrics such as average reward (reflecting helpfulness), average cost (indicating harmfulness), safety rate (percentage of safe responses within the safety budget), and inference time. The results offer insights into the trade-offs between these metrics for each method and highlight the performance of InferenceGuard in achieving high safety and reward.

read the caption

Table 2: Performance Comparison on Dataset PKU-SafeRLHF

| Hyperparameter | Value |

| Hidden Dimension | 4096 |

| Learning Rate | |

| Number of Epochs | 50 |

| Discount Factor () | 0.999 |

| Batch Size | 8 |

| Safety Budget | 10 |

🔼 This table lists the hyperparameters used during the training of the critic network within the InferenceGuard model. The hyperparameters control various aspects of the training process, such as the network’s architecture (hidden dimension), the learning process (learning rate, number of epochs, discount factor), batch size for training data, and the safety budget.

read the caption

Table 3: Hyperparameters for Critic Network Training.

Full paper#