TL;DR#

Current language agents often struggle with complex interactive tasks due to the reliance on outcome-based reward models which fail to provide sufficient guidance during the reasoning process. This results in suboptimal policies and hinders overall performance. The lack of intermediate annotations also makes training these models data-intensive and challenging.

QLASS offers a solution by introducing a Q-guided stepwise search to automatically generate annotations and improve training. By estimating Q-values in a stepwise manner and using an exploration tree, QLASS provides effective intermediate guidance and achieves significant performance improvements. Even with less training data, QLASS maintains strong results, highlighting its efficiency. The method enables more effective decision-making and qualitative analysis.

Key Takeaways#

Why does it matter?#

This paper is important because it introduces a novel method for improving the inference-time performance of language agents, a crucial area of research in AI. QLASS addresses the limitations of existing methods that rely heavily on outcome rewards by incorporating a Q-guided stepwise search strategy. This allows for more efficient decision-making and handling of complex tasks, even with limited training data. The findings are highly relevant to ongoing efforts in self-improving language models and open up avenues for improving the efficiency of interactive reasoning systems.

Visual Insights#

🔼 This figure illustrates the QLASS pipeline, a four-stage process for boosting language agent inference. Stage 1 involves supervised fine-tuning (SFT) of a language model using expert data. Stage 2 uses the SFT agent to explore the environment and create an exploration tree for each task, estimating Q-values for each node in the tree using Equation 7. Stage 3 trains a Q-network (QNet) on the estimated Q-values. Finally, Stage 4 utilizes the trained QNet to guide the agent’s inference at each step, improving decision-making.

read the caption

Figure 1: QLASS pipeline overview. QLASS involves mainly four stages: 1) Supervised fine-tuning (SFT) on expert data. 2) Leverage SFT agent to explore the environment and construct an exploration tree for each task. After construction, estimate the Q-value of each tree node based on Equation 7. 3) Train QNet on the estimated Q-values. 4) Use the trained QNet to provide inference guidance at each step.

| Dataset | #Train | #Test-Seen | #Test-Unseen | #Turns |

|---|---|---|---|---|

| WebShop | 1,938 | 200 | - | 4.9 |

| SciWorld | 1,483 | 194 | 241 | 14.4 |

| ALFWorld | 3,321 | 140 | 134 | 10.1 |

🔼 This table presents a statistical overview of the datasets used in the experiments, following the same setup as the ETO model (Song et al., 2024). It details the number of training samples (’#Train’), test samples with seen scenarios (‘Test-Seen’), test samples with unseen scenarios (‘Test-Unseen’), and the average number of interactions (’#Turns’) within each successful trajectory from the expert dataset for three different tasks: WebShop, SciWorld, and ALFWorld. These statistics provide insights into the size and complexity of the datasets, which are crucial for understanding the performance of different language agents tested on these datasets.

read the caption

Table 1: The statistics of datasets (We follow the same setup as ETO (Song et al., 2024)). “Test-Seen” and “Test-Unseen” are test sets with seen and unseen cases respectively. “#Turns” means the average number of interaction turns for the SFT trajectories.

In-depth insights#

Stepwise Reward Shaping#

Stepwise reward shaping is a crucial technique in reinforcement learning, especially when dealing with complex, long-horizon tasks. It addresses the limitations of traditional methods that rely solely on sparse, final rewards by providing intermediate feedback at each step of a task. This allows the agent to better understand the progress towards the final goal and learn more efficient policies. The key benefit is improved learning efficiency, as the agent receives continuous guidance rather than only knowing the outcome at the very end. This is especially helpful in scenarios where the optimal trajectory is not immediately apparent or involves multiple sub-goals. By providing intermediate rewards, stepwise shaping guides the agent towards more effective decision-making. However, designing effective stepwise rewards can be challenging. Carefully chosen intermediate rewards should align with the overall goal, avoid misleading the agent, and ideally, be automatically generated, eliminating the need for significant manual effort. A poorly designed stepwise reward system can negatively impact the learning process by reinforcing suboptimal behaviors or by being overly complex and computationally expensive. Successful implementation of stepwise reward shaping often requires careful consideration of the task’s structure, the agent’s capabilities, and the characteristics of the environment. Future research in this area could focus on developing methods for automatically generating effective stepwise rewards and adapting shaping strategies for specific problem domains.

QLASS Pipeline#

The QLASS pipeline is a novel, four-stage process designed to enhance language agent inference. It starts with behavior cloning, fine-tuning a language model on expert-demonstrated trajectories. Next, reasoning trees are constructed via self-generation, where the agent explores the environment, creating a tree representing various action sequences and their outcomes. Crucially, Q-values are estimated for each node in the tree, providing intermediate rewards that guide learning, overcoming the limitations of sparse final-reward settings. Finally, Q-guided generation uses these Q-values to direct the agent’s actions during inference, leading to more efficient and effective decision-making. This stepwise approach avoids the pitfalls of solely relying on final outcomes, enabling the agent to learn from intermediate feedback and significantly improving performance, especially in complex scenarios with limited training data. The use of Q-values as a process reward is key to the pipeline’s success, providing a mechanism to learn from the internal steps of a complex decision process, unlike traditional methods relying on only final outcome-based rewards.

Q-Value Estimation#

The core of QLASS lies in its novel approach to process reward modeling using Q-value estimation. Instead of relying on sparse, outcome-based rewards which can’t effectively guide step-wise decision-making, QLASS estimates Q-values for each step in an agent’s trajectory. This is achieved by constructing an exploration tree, where each node represents a state-action pair. The Q-values are then calculated recursively using a modified Bellman equation, incorporating future rewards from the leaf nodes (final outcomes) to provide effective intermediate guidance. This stepwise evaluation is crucial for long-horizon, complex tasks where a single final reward is insufficient. The estimation process leverages the Bellman equation, propagating future rewards back through the tree to more accurately assess the long-term value of each action at each step. The Q-values thus serve as a strong inductive bias, enabling the language agent to make more effective decisions during inference, focusing on maximizing long-term reward rather than short-sighted gains. The system’s ability to learn from limited supervision is enhanced by this method, as it significantly reduces the need for extensive human annotation of intermediate steps.

Limited Data Efficiency#

Limited data efficiency is a crucial challenge in many machine learning applications, especially those involving complex tasks such as language agent training. The paper addresses this by proposing a novel method to enhance performance with less data. QLASS leverages a Q-guided stepwise search approach, generating intermediate annotations (Q-values) to guide the language agent’s inference process. This reduces reliance on outcome-based rewards which can be sparse and misleading in complex scenarios, making it particularly effective in low-data regimes. By implicitly generating informative feedback at each step, QLASS allows for more effective learning and better generalization, even when the amount of annotated data is significantly less than traditional methods. The experimental results demonstrate the effectiveness of this strategy in achieving strong performance with almost half the annotated data, confirming its efficiency and robustness in handling limited supervision.

Future Extensions#

Future research directions for QLASS could explore several promising avenues. Improving the Q-value estimation is crucial; more sophisticated methods beyond Bellman updates, perhaps incorporating uncertainty estimation or model-based RL techniques, could enhance accuracy and robustness. Expanding the exploration tree construction strategies is another key area; exploring more efficient tree pruning techniques, adaptive tree depths, and incorporating prior knowledge or heuristics would improve efficiency and reduce computational costs. Furthermore, investigating different Q-network architectures beyond MLPs, such as transformers or graph neural networks, could potentially capture richer feature interactions and lead to better Q-value prediction. Finally, extending QLASS to handle more complex environments with richer action spaces, partial observability, or non-Markovian dynamics, would demonstrate its generalizability and applicability to real-world scenarios. The ultimate goal is to develop a more effective and efficient self-improvement method for language agents applicable across a wider range of challenging tasks.

More visual insights#

More on figures

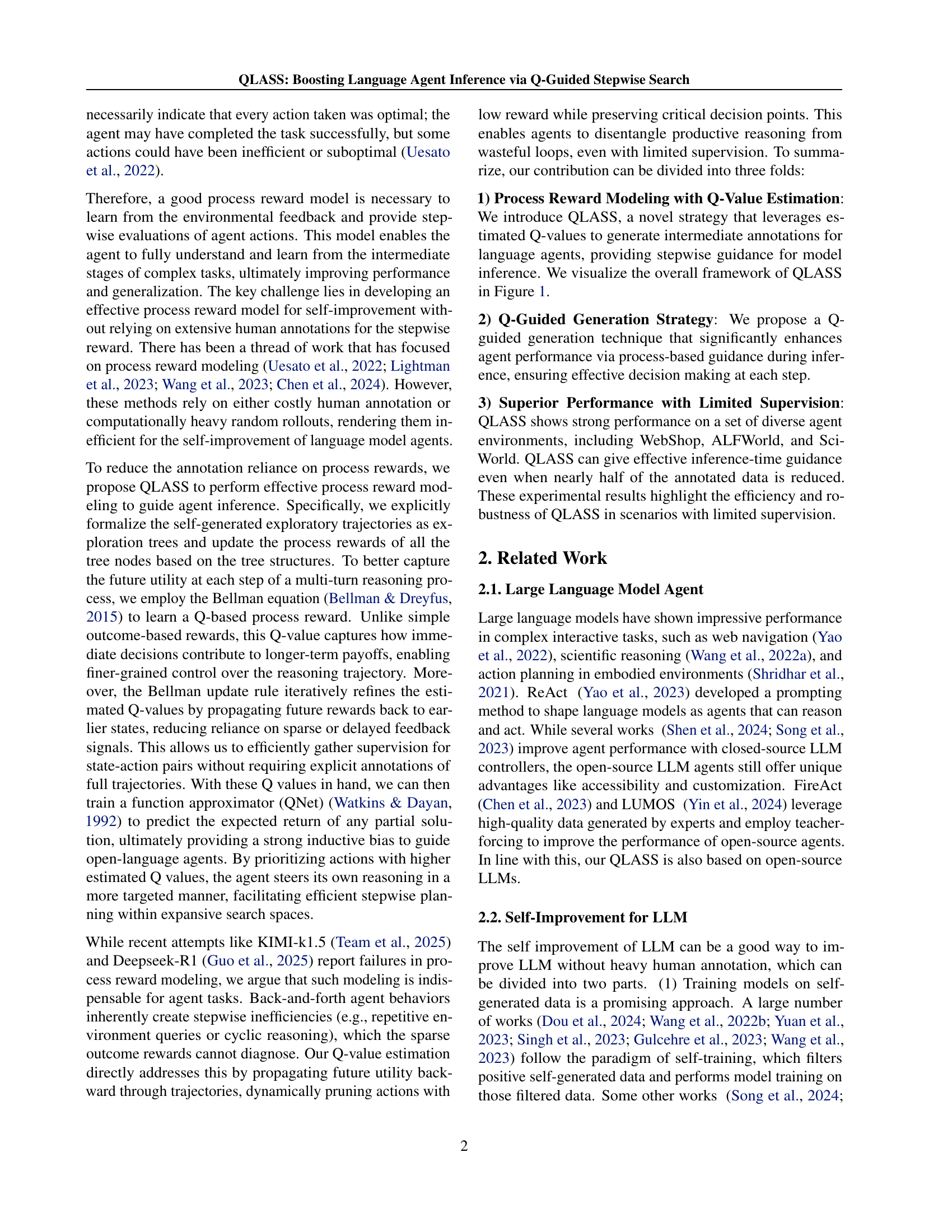

🔼 This figure illustrates the process of building an exploration tree. Each node in the tree represents a step in an agent’s interaction with an environment. Grey nodes indicate branches that resulted in a zero reward; once a zero-reward leaf node is encountered, the algorithm stops expanding that branch. Green nodes, on the other hand, signify branches with non-zero rewards, and these branches continue to be explored. This selective expansion strategy helps to focus computational resources on more promising trajectories.

read the caption

Figure 2: Illustrative example of constructing a exploration tree. Grey nodes represent the branches with a zero outcome reward. Once the leaf node with a zero outcome reward is detected, a Stop expansion signal will be sent back to the first unexpanded node on the branch. Green nodes are on branches where zero outcome reward is not detected and can keep expanding.

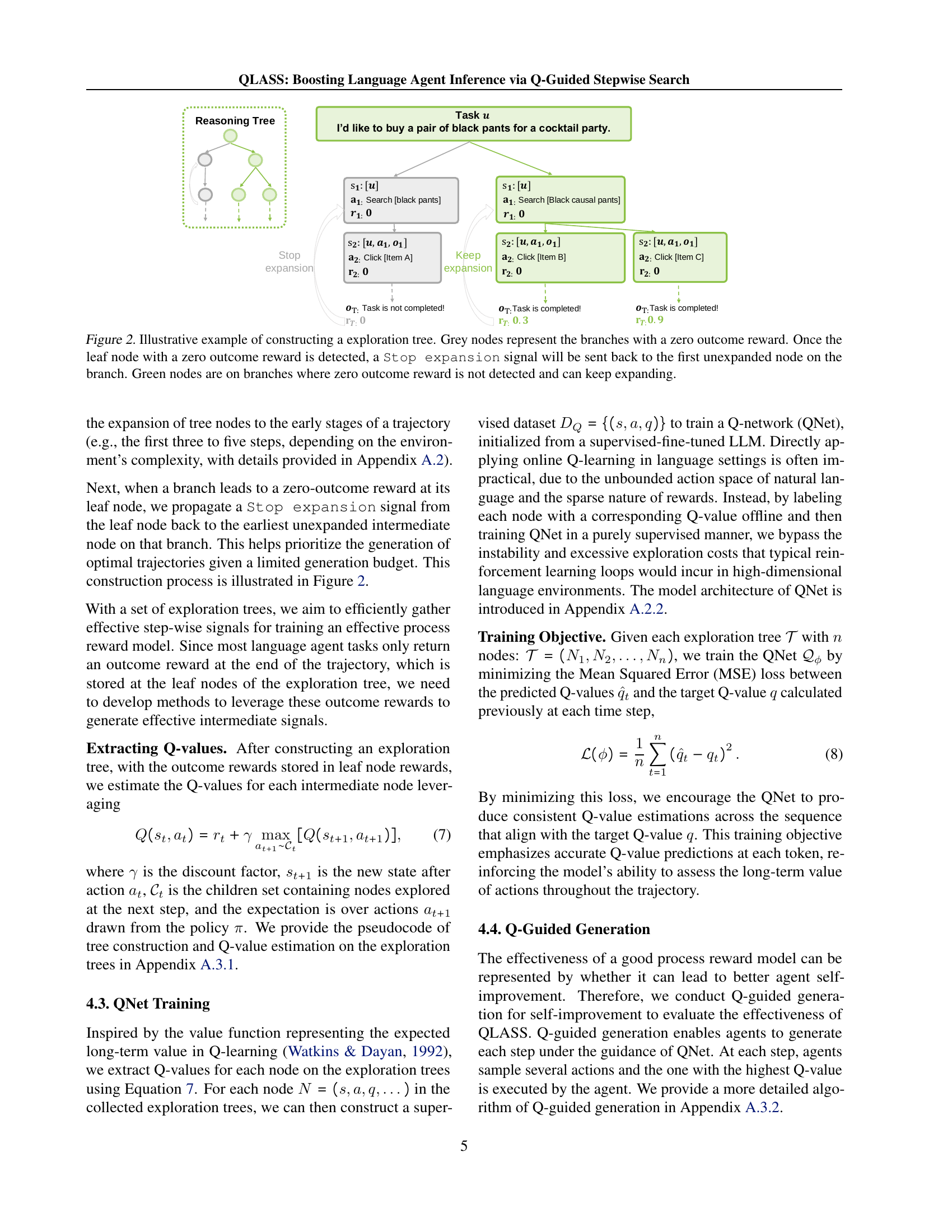

🔼 This figure compares the performance of QLASS and the Best-of-N method across various search budget levels. The x-axis displays the average number of tokens used in the trajectories generated during inference across all three tasks (WebShop, SciWorld, and ALFWorld). The y-axis represents the average reward obtained for each method under different search budgets. The plot visualizes how both methods’ performance changes as the number of tokens increases, demonstrating the impact of varying search space on the final result.

read the caption

Figure 3: QLASS and Best-of-N under different search budgets. The x-axis represents the number of tokens consumed by the trajectories generated during inference averaged on all the tasks in each test set.

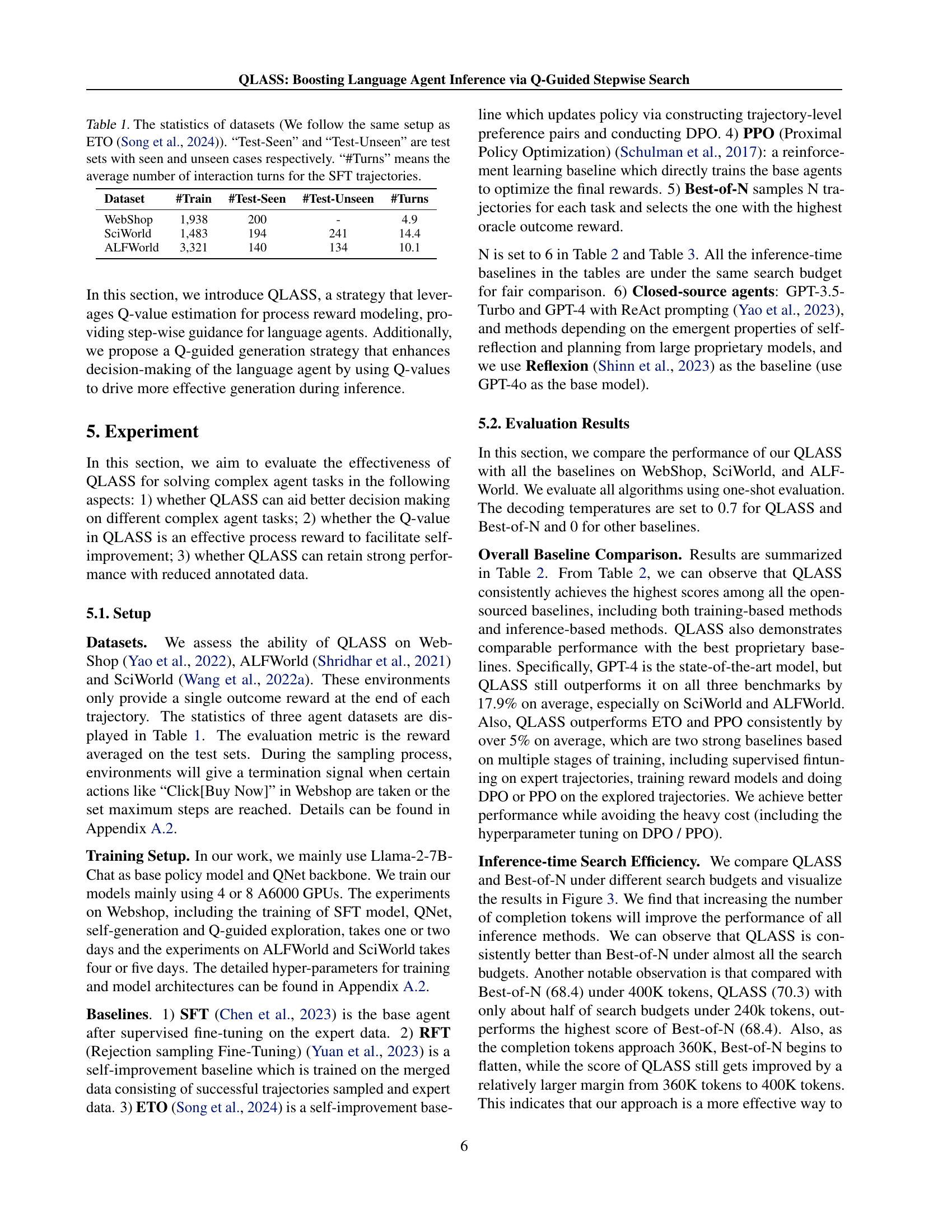

🔼 This figure compares the performance of three different self-training methods that use varying process reward models. All three methods leverage exploration trees created in Stage 2 of the QLASS pipeline to guide the self-training data generation process. The three process reward models are: Q-value (the proposed method from QLASS), Average Reward (which averages final rewards), and Reward (which uses back-propagated final rewards). The plot shows that using Q-values for process reward modeling leads to the best self-training performance, demonstrating the effectiveness of the proposed method.

read the caption

Figure 4: Self-training baselines. The three methods marked with diagonal stripes leverage different process reward modeling based on the same exploration trees constructed in Stage 2 to guide self-training data generation.

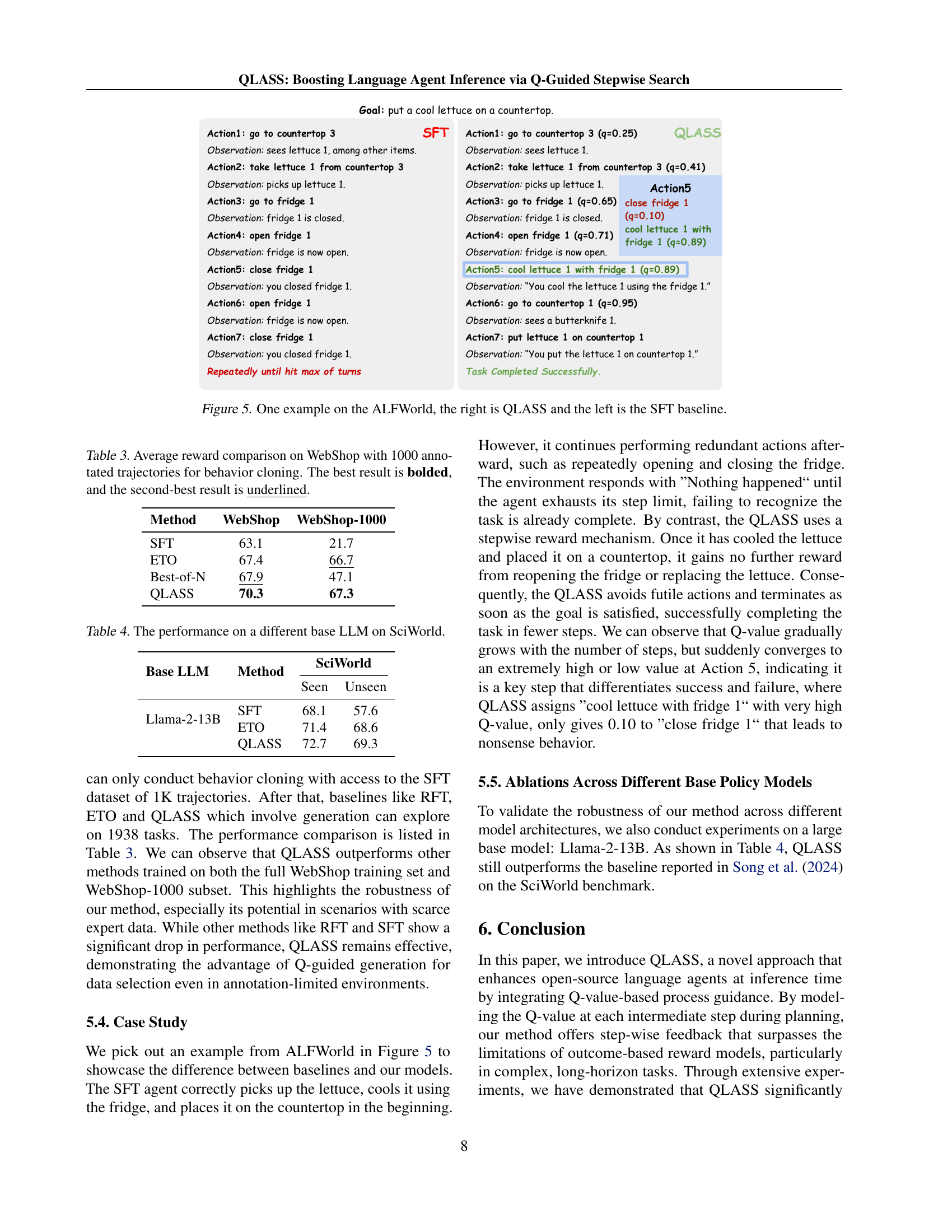

🔼 This figure presents a comparison of the performance of QLASS and SFT (Supervised Fine-Tuning) on a specific task within the ALFWorld environment. The left side shows the steps taken by the SFT agent to complete the task of placing a cool lettuce on a countertop. The SFT agent demonstrates redundancy in its actions, repeatedly opening and closing the fridge. In contrast, the right side illustrates QLASS’s efficient approach to the same task. QLASS achieves the goal more efficiently by prioritizing actions with higher Q-values, effectively avoiding unnecessary steps and completing the task successfully.

read the caption

Figure 5: One example on the ALFWorld, the right is QLASS and the left is the SFT baseline.

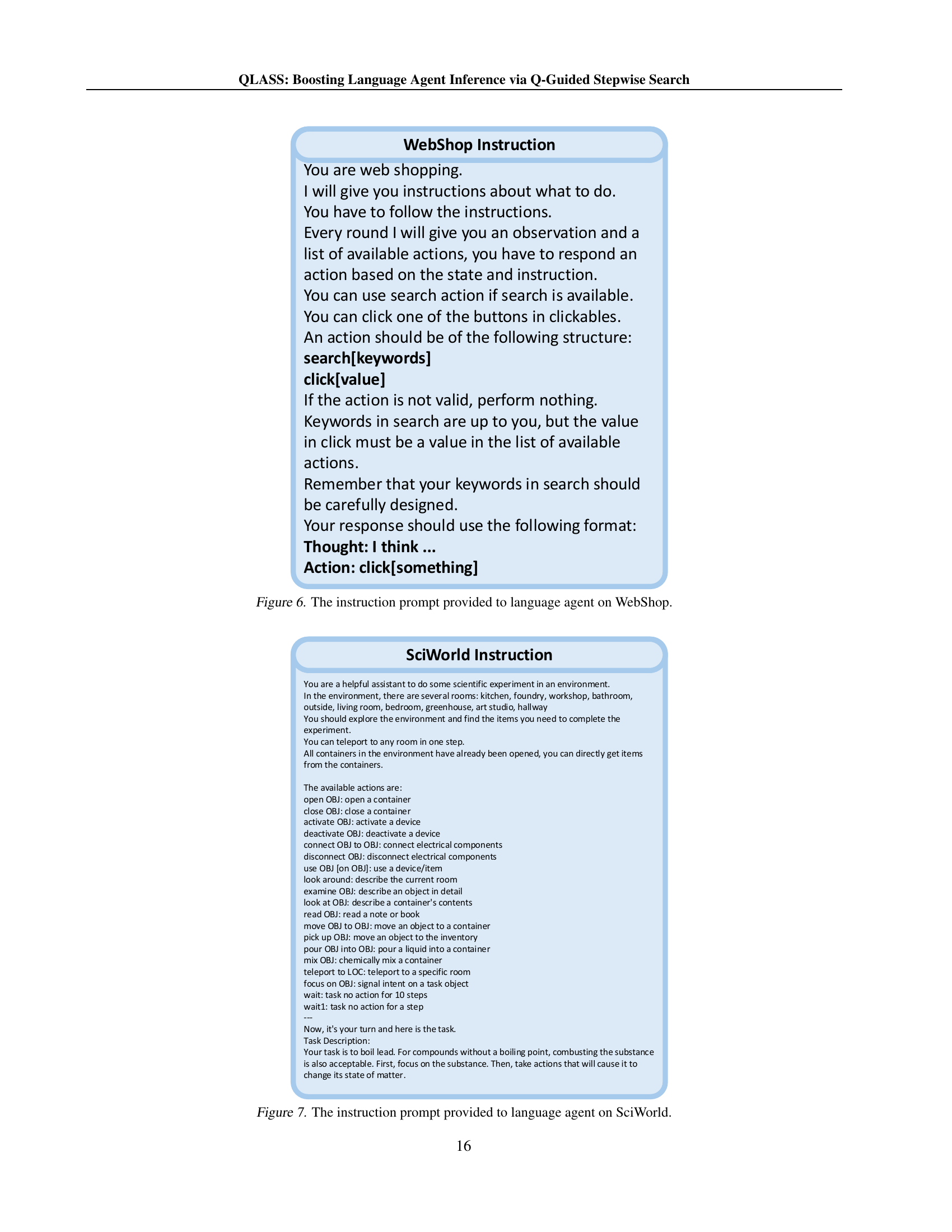

🔼 This figure displays the instruction prompt given to the language agent in the WebShop environment. The prompt instructs the agent that it is engaging in a web-shopping task and should follow instructions provided. The instructions specify that each turn, the agent will receive an observation and a list of actions, from which it must select an appropriate action. It details that an action can be a

searchfollowed by keywords or aclickon a selectable item. The prompt emphasizes that search keywords must be carefully selected and gives a template for the agent’s response, which should include a thought and action.read the caption

Figure 6: The instruction prompt provided to language agent on WebShop.

🔼 This figure displays the detailed instructions given to the language agent within the SciWorld environment. These instructions provide a comprehensive overview of the agent’s capabilities, available actions, and the expected response format. The instructions detail the various actions the agent can perform, such as opening, closing, activating, deactivating devices, connecting, and disconnecting electrical components, using items, and moving objects. It also specifies the command format the agent should use when interacting with the environment and provides a sample task for the agent to complete. This ensures the agent understands the environment’s constraints and its role in completing the tasks.

read the caption

Figure 7: The instruction prompt provided to language agent on SciWorld.

🔼 This figure displays the instructions given to the language agent in the ALFWorld environment. The instructions detail the agent’s role as an intelligent agent within a household setting, tasked with performing actions to accomplish a given goal. The prompt specifies the agent’s need to follow the provided format when responding. The format includes a ‘Thought’ section for planning, followed by an ‘Action’ section detailing the specific action to be performed. Available actions are clearly listed and explained. An example task is presented at the end of the instruction, requiring the agent to put a clean knife on the countertop. The prompt aims to guide the agent toward effective and coherent behavior within the environment.

read the caption

Figure 8: The instruction prompt provided to language agent on ALFWorld.

🔼 This figure shows an example of how task descriptions are paraphrased using GPT-3.5-Turbo to increase action diversity during inference in the WebShop environment. The original task, ‘I need a long-lasting 6.76 fl oz bottle of l’eau d’issey, and price lower than 100.00 dollars,’ is rephrased in four different ways, each with slightly altered wording but conveying the same essential information. This perturbation technique helps the model generate a wider variety of actions, leading to more effective exploration and improved performance.

read the caption

Figure 9: An illustrative example on task perturbation.

More on tables

| Method | WebShop | SciWorld | ALFWorld | ||

|---|---|---|---|---|---|

| Seen | Unseen | Seen | Unseen | ||

| GPT-4 | 63.2 | 64.8 | 64.4 | 42.9 | 38.1 |

| GPT-3.5-Turbo | 62.4 | 16.5 | 13.0 | 7.9 | 10.5 |

| Reflexion (Shinn et al., 2023)♠ | 64.2 | 60.3 | 64.4 | 45.7 | 55.2 |

| Base Agent (Llama-2-7B-Chat) | 17.9 | 3.8 | 3.1 | 0.0 | 0.0 |

| SFT | 63.1 | 67.4 | 53.0 | 60.0 | 67.2 |

| RFT (Yuan et al., 2023) | 63.6 | 71.6 | 54.3 | 62.9 | 66.4 |

| PPO (Schulman et al., 2017) | 64.2 | 59.4 | 51.7 | 22.1 | 29.1 |

| Best-of-N | 67.9 | 70.2 | 57.6 | 62.1 | 69.4 |

| ETO (Song et al., 2024) | 67.4 | 73.8 | 65.0 | 68.6 | 72.4 |

| QLASS | 70.3 | 75.3 | 66.4 | 77.9 | 82.8 |

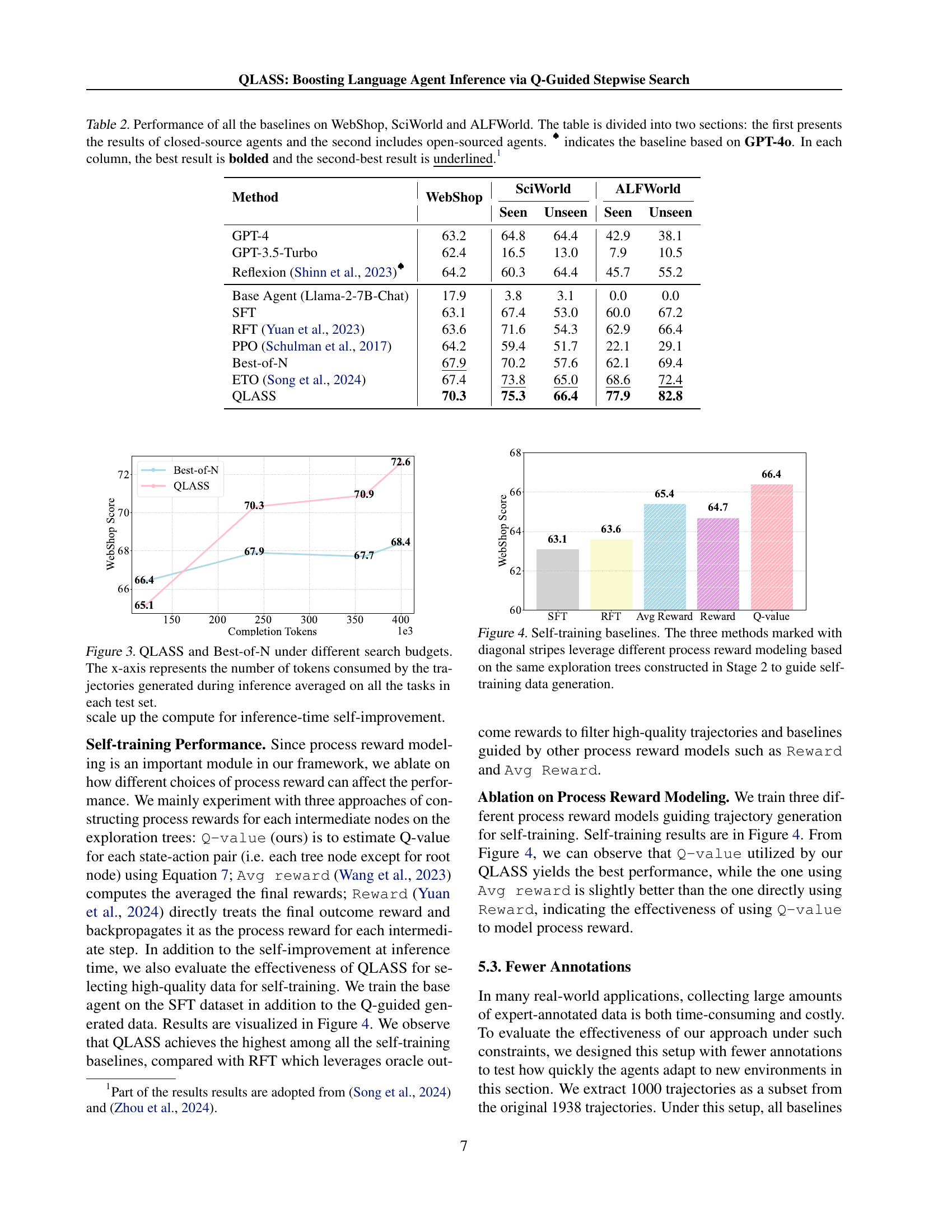

🔼 Table 2 presents a comprehensive comparison of various language agent models’ performance across three distinct interactive tasks: WebShop, SciWorld, and ALFWorld. The table is structured in two parts. The first section showcases the results obtained from closed-source, commercially available models, specifically highlighting the performance of GPT-4 (indicated by the symbol ‘♠’). The second part displays the performance of open-source language agent models. For each task and model, the table presents the results for both ‘seen’ and ‘unseen’ test sets, allowing for a nuanced evaluation of generalization capabilities. Within each column (representing a specific test set), the best-performing model’s result is highlighted in bold, and the second-best result is underlined, providing a clear visual representation of the comparative performance.

read the caption

Table 2: Performance of all the baselines on WebShop, SciWorld and ALFWorld. The table is divided into two sections: the first presents the results of closed-source agents and the second includes open-sourced agents. ♠ indicates the baseline based on GPT-4o. In each column, the best result is bolded and the second-best result is underlined.111

| Method | WebShop | WebShop-1000 |

|---|---|---|

| SFT | 63.1 | 21.7 |

| ETO | 67.4 | 66.7 |

| Best-of-N | 67.9 | 47.1 |

| QLASS | 70.3 | 67.3 |

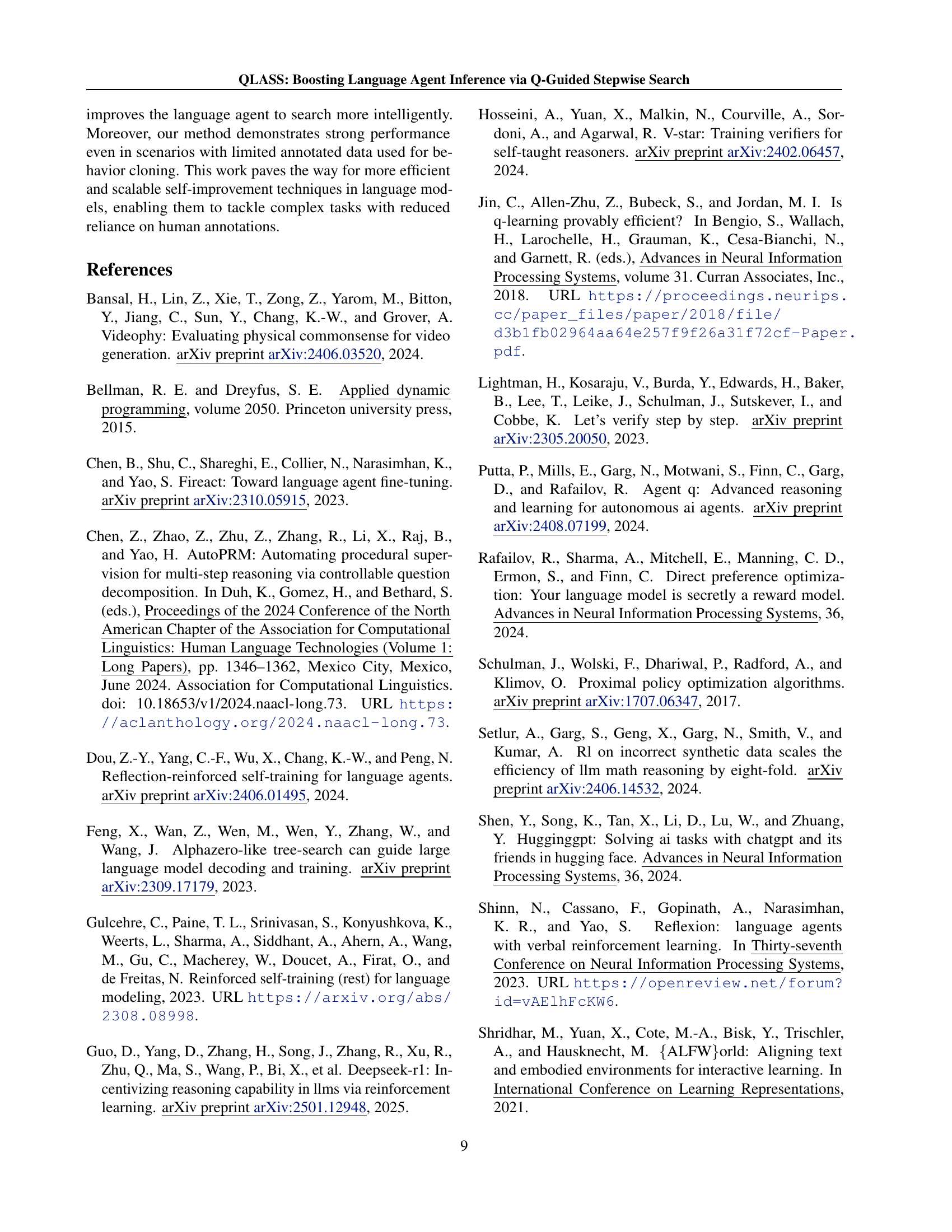

🔼 This table presents a comparison of average reward scores achieved by different methods on the WebShop dataset. The experiment used only 1000 annotated trajectories for behavior cloning, representing a reduced data scenario. The best performing method’s score is highlighted in bold, and the second-best is underlined. This allows for an evaluation of the methods’ robustness and effectiveness under limited data conditions.

read the caption

Table 3: Average reward comparison on WebShop with 1000 annotated trajectories for behavior cloning. The best result is bolded, and the second-best result is underlined.

| Base LLM | Method | SciWorld | |

|---|---|---|---|

| Seen | Unseen | ||

| Llama-2-13B | SFT | 68.1 | 57.6 |

| ETO | 71.4 | 68.6 | |

| QLASS | 72.7 | 69.3 | |

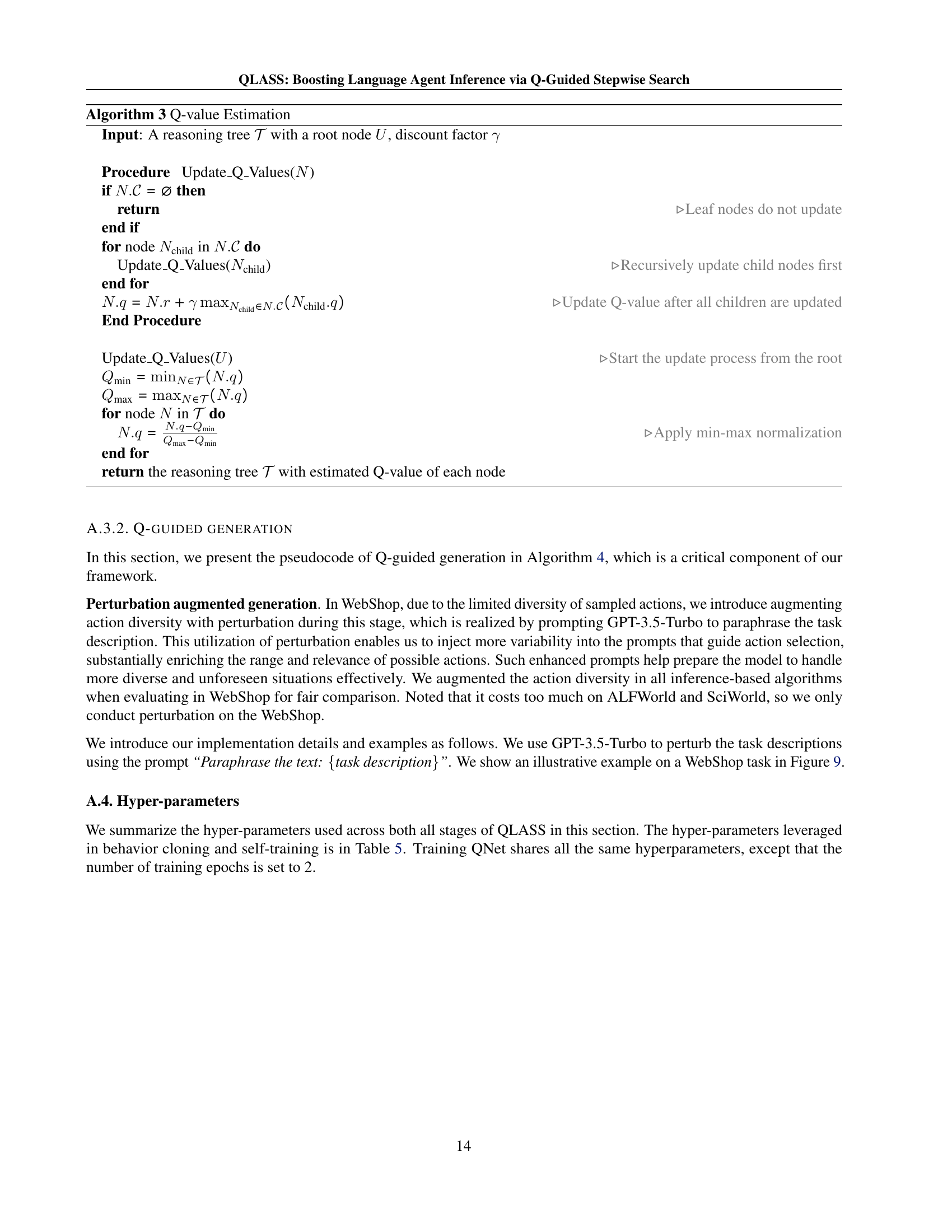

🔼 This table presents a comparison of the performance of different models on the SciWorld benchmark, using a larger language model (Llama-2-13B) as the base model. It showcases the results of three different approaches: the standard supervised fine-tuning (SFT), the self-improvement method ETO, and the proposed QLASS method. The table highlights the performance of each approach in terms of the average reward obtained on the seen and unseen test sets of SciWorld.

read the caption

Table 4: The performance on a different base LLM on SciWorld.

| Hyperparameter | Value |

| Batch size | 64 |

| Number of training epochs | 3 |

| Weight decay | 0.0 |

| Warmup ratio | 0.03 |

| Learning rate | 1e-5 |

| LR scheduler type | Cosine |

| Logging steps | 5 |

| Model max length | 4096 |

| Discount factor | 0.9 |

| Maximum expansion depth on WebShop | 3 |

| Maximum expansion depth on SciWorld | 6 |

| Maximum expansion depth on ALFWorld | 8 |

| Action candidate set size for inference | 2 |

| Sampled trajectory number for self-training | 1 |

| Exploration temperature | 0.7 |

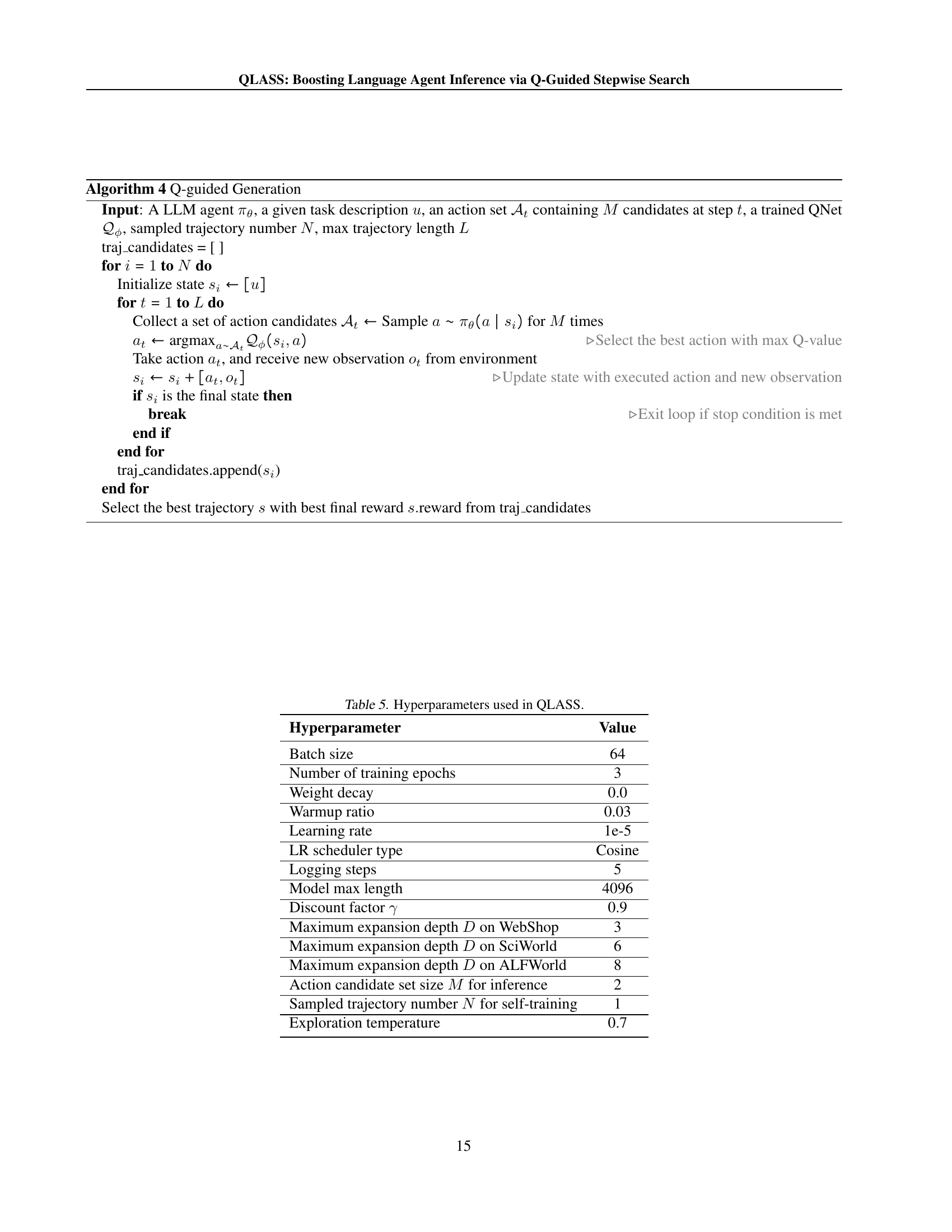

🔼 This table lists the hyperparameters used in the QLASS model, including batch size, number of training epochs, weight decay, learning rate, learning rate scheduler type, logging steps, model maximum length, discount factor, maximum expansion depth for each environment, action candidate set size for inference, sampled trajectory number for self-training, and exploration temperature. These parameters control various aspects of the model’s training and inference process.

read the caption

Table 5: Hyperparameters used in QLASS.

Full paper#