TL;DR#

Current video generation models often struggle with generating realistic videos due to their focus on 2D pixel information, leading to artifacts like object morphing and unnatural movements. These models lack an understanding of 3D geometry and physics, which is crucial for generating scenes with realistic object interactions.

To address this, the authors introduce PointVid, a novel framework that incorporates 3D point trajectories into the video generation process. By augmenting 2D videos with 3D information and applying a 3D point regularization technique, PointVid significantly improves the quality and realism of generated videos. The results demonstrate that PointVid effectively reduces artifacts, preserves object shapes during interactions, and generates videos with smooth, physically plausible motions. This approach provides a significant step towards generating more realistic and believable videos.

Key Takeaways#

Why does it matter?#

This paper is important because it addresses the limitations of existing video generation models by incorporating 3D information. This improves the realism and physical plausibility of generated videos, opening new avenues for research in computer vision and graphics. The proposed methods and dataset are valuable resources for researchers working on video generation, especially those interested in physically realistic simulations.

Visual Insights#

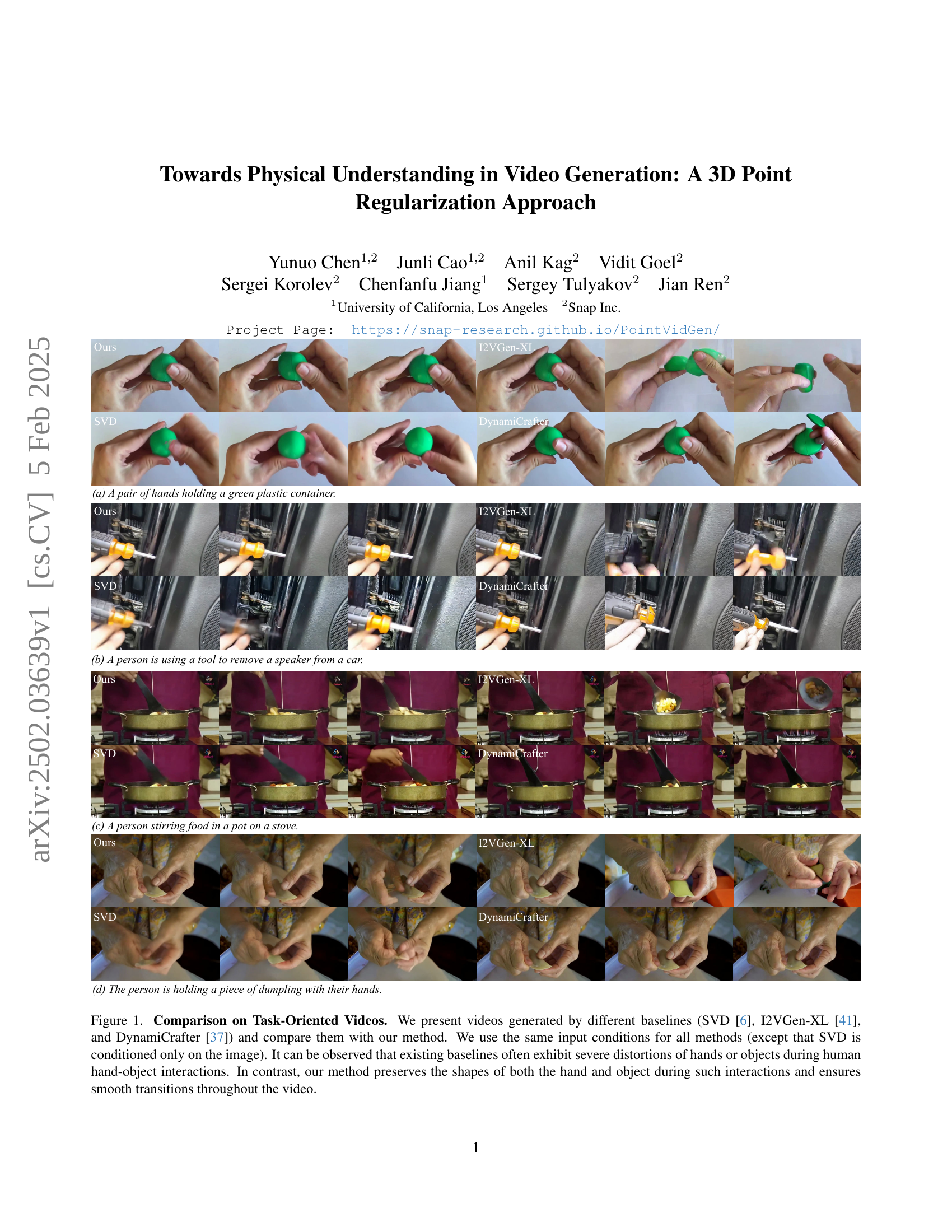

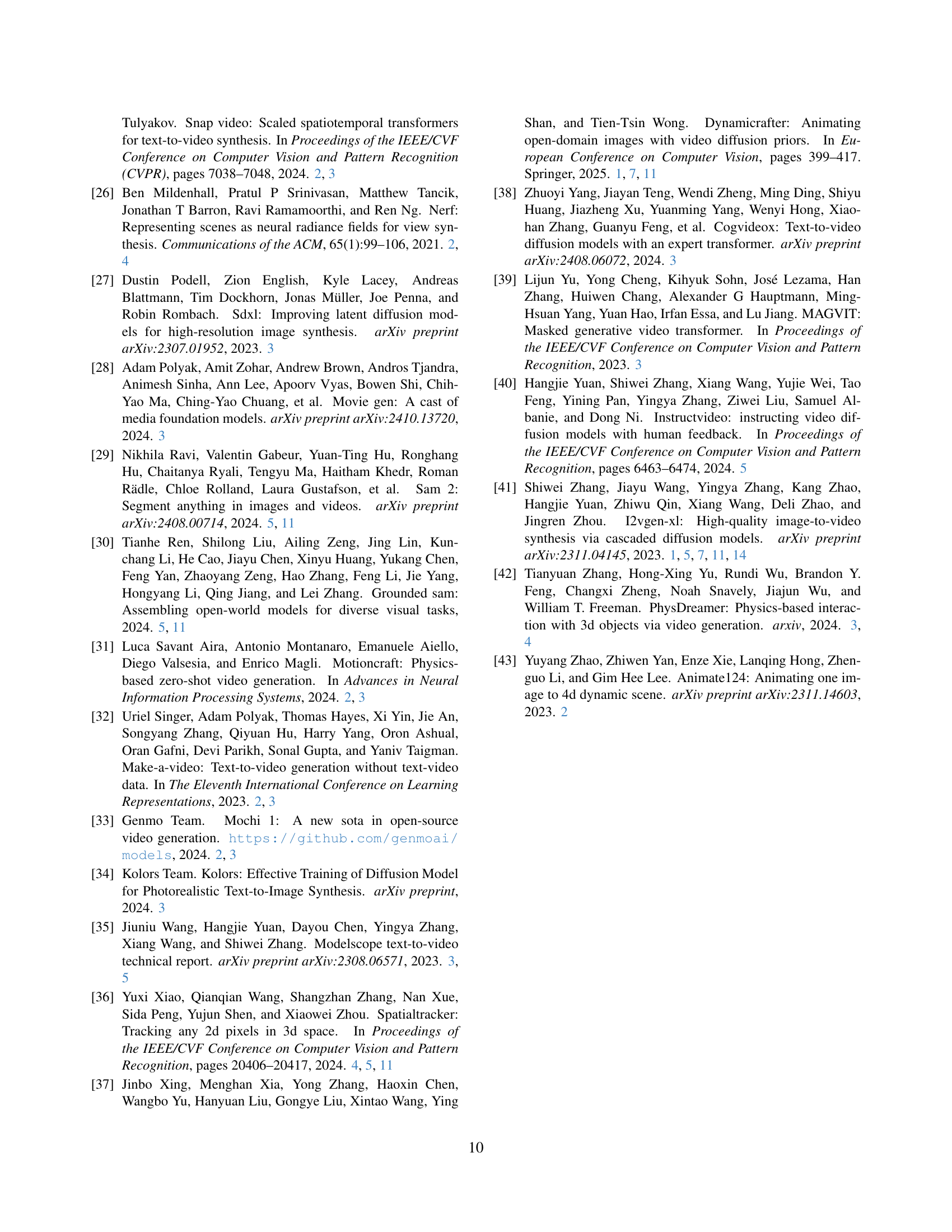

🔼 This figure compares videos generated by four different methods: the authors’ new method and three existing baselines (SVD, I2VGen-XL, and DynamiCrafter). The comparison focuses on task-oriented videos, which involve complex human-object interactions. The results show that the existing methods often produce unrealistic distortions of hands or objects, while the new method preserves their shapes and ensures smooth transitions, demonstrating better physical understanding in video generation.

read the caption

Figure 1: Comparison on Task-Oriented Videos. We present videos generated by different baselines (SVD [6], I2VGen-XL [41], and DynamiCrafter [37]) and compare them with our method. We use the same input conditions for all methods (except that SVD is conditioned only on the image). It can be observed that existing baselines often exhibit severe distortions of hands or objects during human hand-object interactions. In contrast, our method preserves the shapes of both the hand and object during such interactions and ensures smooth transitions throughout the video.

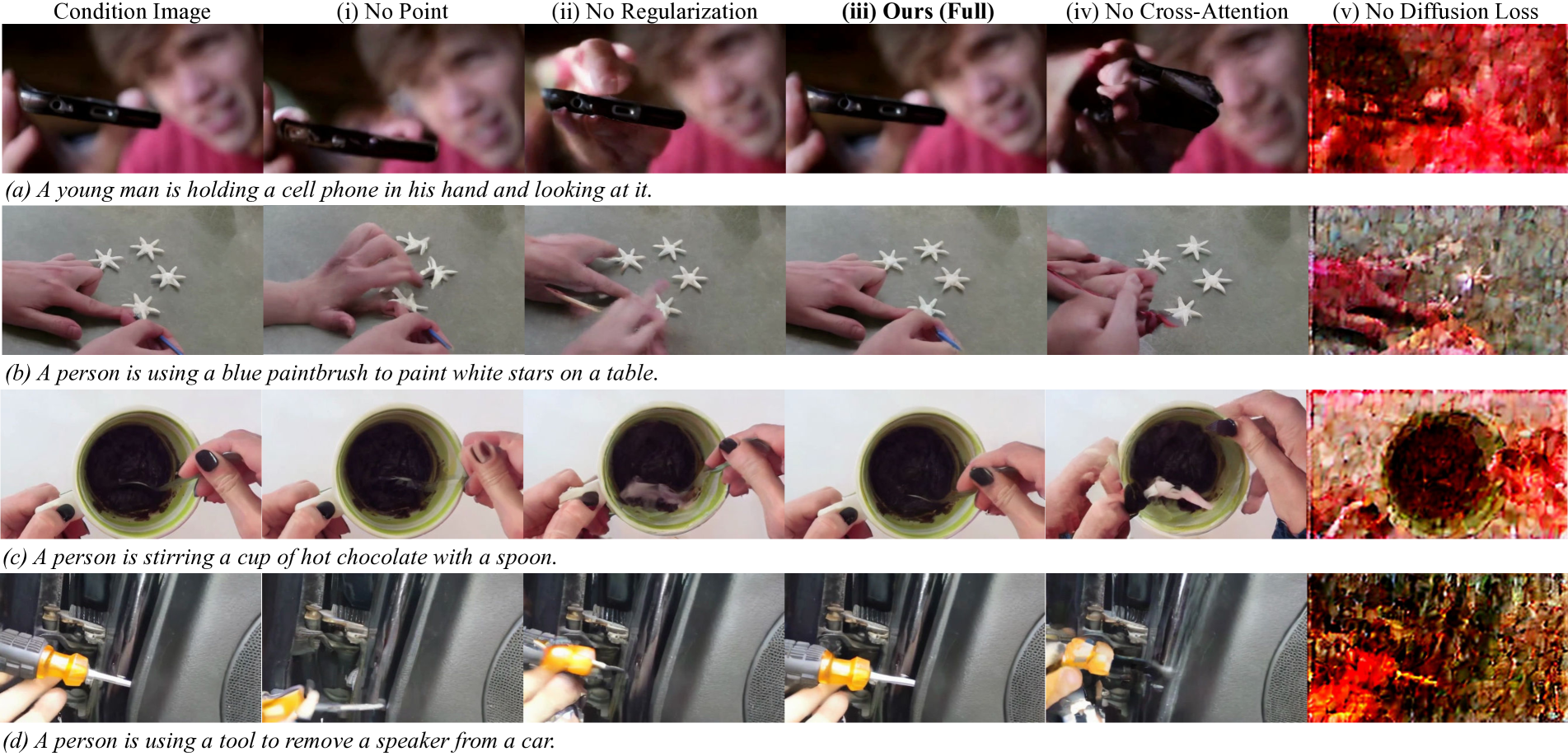

| Method | SC | BC | MS | AQ | IQ | PC |

|---|---|---|---|---|---|---|

| I2VGen-XL | 0.83247 | 0.89147 | 0.95706 | 0.44055 | 0.58532 | 0.32665 |

| Ours | 0.95892 | 0.95202 | 0.98456 | 0.43369 | 0.60423 | 0.37434 |

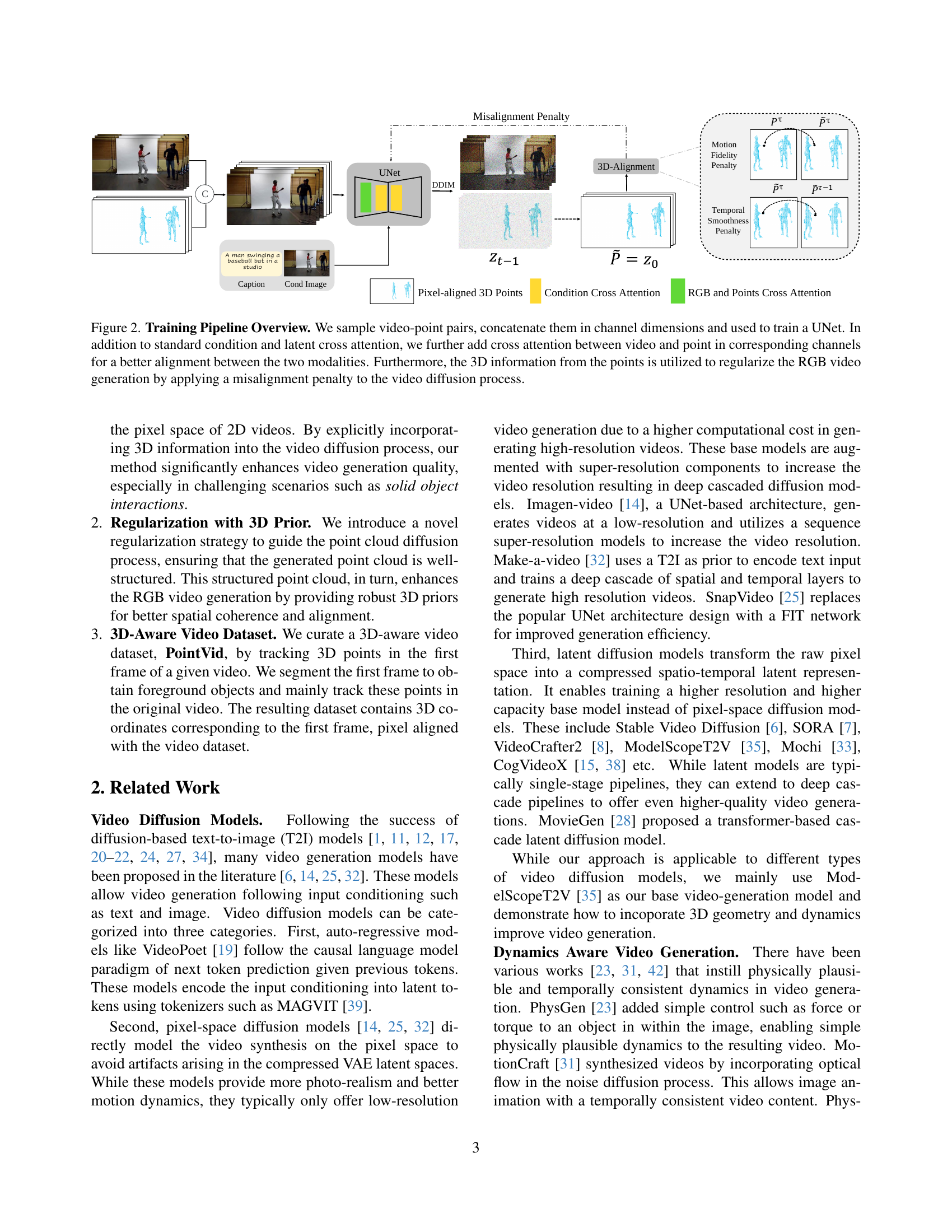

🔼 This table presents a quantitative comparison of the proposed video generation method against other existing methods using two benchmark datasets, VBench and VideoPhy. VBench assesses various aspects of video quality, including subject and background consistency, motion smoothness, overall aesthetic and image quality. VideoPhy focuses specifically on the physical plausibility and common sense of the generated videos. The table shows that the proposed method, by incorporating 3D knowledge, achieves significant improvements in metrics related to physical correctness, temporal consistency, and overall quality.

read the caption

Table 1: Quantitative Evaluation. We evaluate various aspects of our method using the VBench [16] and VideoPhy [4] benchmarks. The evaluated metrics are as follows: (VBench) SC: subject consistency, BC: background consistency, MS: motion smoothness, AQ: aesthetic quality, IQ: imaging quality; (VideoPhy) PC: physical commonsense. By incorporating 3D knowledge, our video model shows substantial improvement in metrics such as physical commonsense, motion smoothness, and subject/background consistency. This demonstrates that our method generates significantly more temporally consistent and physically plausible videos.

In-depth insights#

3D PointVidGen#

The hypothetical project title “3D PointVidGen” suggests a system for video generation leveraging three-dimensional point cloud data. This approach likely aims to overcome limitations of existing 2D-centric video generation models by incorporating explicit 3D spatial understanding. The “Point” aspect indicates the use of point clouds as a 3D representation, likely chosen for its efficiency and flexibility compared to mesh-based or volumetric models. “VidGen” signifies video generation as the core functionality. The prefix “3D” emphasizes the fundamental shift towards a richer, more physically accurate representation of the visual world. This 3D awareness is crucial for generating videos featuring realistic object interactions, complex motions, and accurate shape preservation, addressing common issues such as object morphing or unnatural deformations found in current methods. The system likely involves a training phase using a dataset of videos augmented with corresponding 3D point cloud trajectories, enabling the model to learn the relationship between 2D pixel movements and their underlying 3D geometry. The resulting videos would exhibit improved realism and consistency by adhering more closely to the laws of physics and the inherent constraints of the three-dimensional space.

3D Point Augmentation#

The concept of “3D Point Augmentation” in video generation involves enriching standard 2D video data with supplementary 3D spatial information. This augmentation is crucial because traditional video models primarily learn from 2D pixel data, limiting their understanding of physical interactions and object dynamics in three dimensions. By incorporating 3D point trajectories aligned with 2D pixels, the model gains a better grasp of object shapes, motion, and spatial relationships. This enhanced understanding facilitates the generation of more realistic and physically plausible videos, especially in complex scenes with interacting objects. The success of this approach hinges on effectively aligning the 3D point data with the 2D video frames. The process needs to accurately capture and represent 3D motion while maintaining alignment with the corresponding pixel movements within the video. This augmentation strategy empowers the video generation model to overcome limitations inherent in relying solely on 2D information, resulting in outputs with improved realism and coherence.

Physical Regularization#

The concept of “Physical Regularization” in video generation aims to enforce realistic physical properties within the generated videos. It addresses the common issue of unrealistic or inconsistent object behavior, such as morphing, unnatural movements, or violations of physical laws like gravity and inertia. This is achieved by incorporating 3D information into the video generation process, often through techniques like 3D point tracking and regularization. The goal is not merely to generate visually appealing videos, but to create ones that adhere to the physical laws of the real world. This is achieved by incorporating 3D information and regularization techniques to constrain the generated video and ensure consistency and plausibility. Effective physical regularization results in more realistic simulations of physical phenomena like object interaction and deformation.

Ablation Experiments#

Ablation experiments systematically remove components of a model to understand their individual contributions. In the context of a video generation model using 3D point regularization, such experiments would likely involve removing or deactivating key elements like 3D point augmentation, 3D regularization, and cross-attention mechanisms. By analyzing the changes in video quality, physical accuracy, and other metrics after each ablation, researchers can assess the relative importance of each component. Significant drops in performance after removing 3D augmentation would indicate the crucial role of 3D information for realistic video generation. Similarly, reduced physical plausibility after removing regularization suggests its effectiveness in enforcing realistic motion and shape consistency. Analyzing the effects of removing cross-attention would reveal whether the interaction between 2D and 3D data is critical for successful fusion and output generation. The results of these experiments provide critical insights into the design, functionality, and limitations of the video generation model. They allow for a better understanding of which features are essential and which may be redundant, thus guiding future improvements and optimizations.

Future of 3D Video#

The future of 3D video is bright, driven by technological advancements in areas like display technology, capture methods, and compression techniques. Higher resolutions and frame rates will become commonplace, creating more immersive and realistic experiences. Improved rendering techniques will address limitations in current 3D video, such as motion sickness and artifacts. The integration of artificial intelligence (AI) will enhance the creation and editing processes, enabling more efficient and creative storytelling. The increasing affordability and accessibility of 3D technologies will fuel wider adoption across various industries and applications. VR/AR integration will further push the boundaries of 3D video, creating new interactive and engaging content formats. However, challenges such as standardization, bandwidth limitations, and the high production cost of 3D content still need to be overcome. Despite these, the ongoing development and innovation guarantee that the future of 3D video will be exciting, with possibilities spanning from entertainment and education to healthcare and engineering.

More visual insights#

More on figures

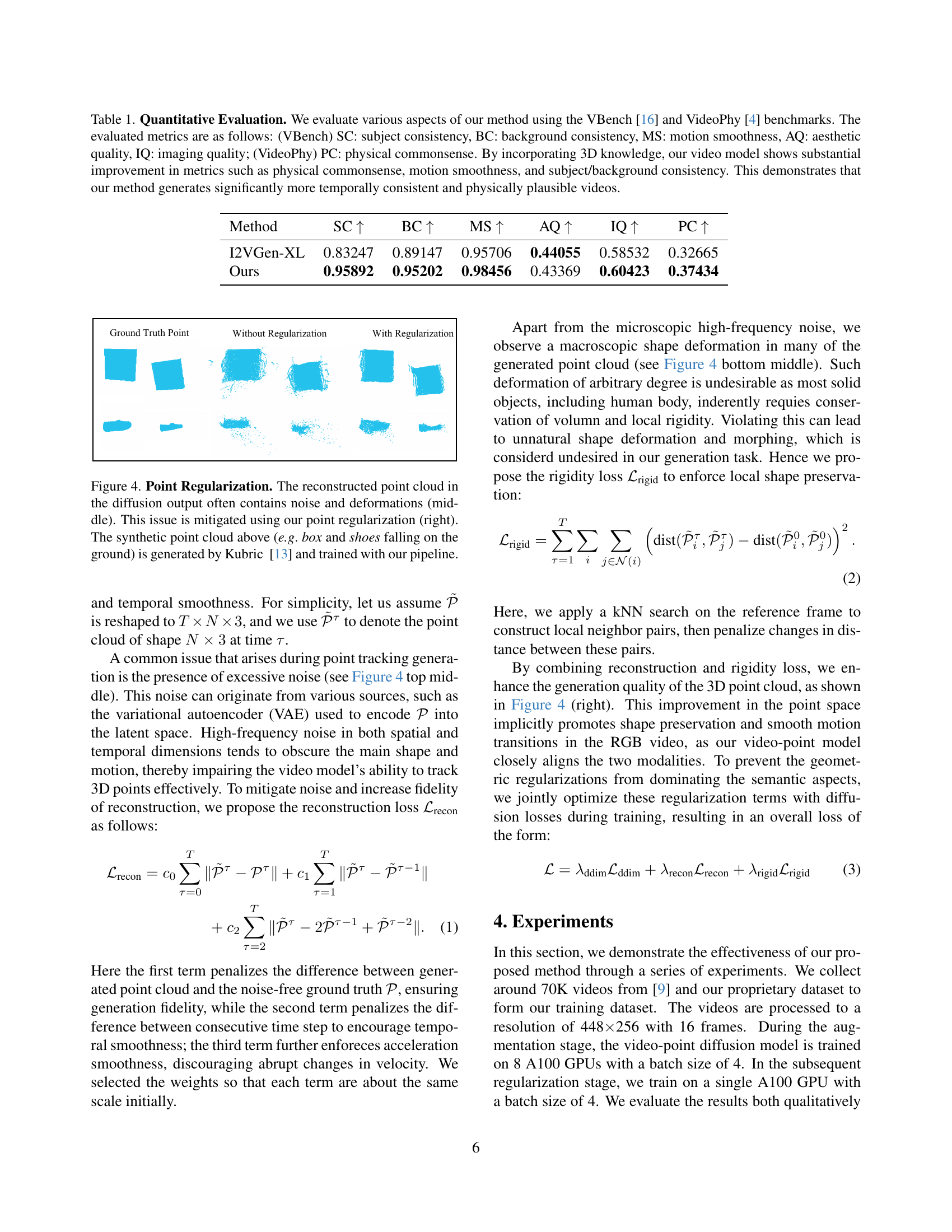

🔼 This figure illustrates the training pipeline for a video generation model enhanced with 3D point cloud information. The pipeline begins by sampling paired video and 3D point data. These data are then concatenated along the channel dimension and fed into a UNet architecture. The UNet utilizes standard conditional and latent cross-attention mechanisms; however, it notably incorporates an additional cross-attention layer between video and point data channels to ensure improved alignment. Finally, 3D point information is leveraged to regularize the generation of RGB video frames by applying a misalignment penalty within the diffusion model. This penalty refines the generated video, ensuring consistency between the 3D motion information and the 2D video frames.

read the caption

Figure 2: Training Pipeline Overview. We sample video-point pairs, concatenate them in channel dimensions and used to train a UNet. In addition to standard condition and latent cross attention, we further add cross attention between video and point in corresponding channels for a better alignment between the two modalities. Furthermore, the 3D information from the points is utilized to regularize the RGB video generation by applying a misalignment penalty to the video diffusion process.

🔼 This figure illustrates the PointVid dataset generation process. Starting with a video, the first frame is used as a reference. Semantic segmentation isolates foreground objects, creating masks. Pixels are then randomly selected, weighted to favor foreground areas. 3D point tracking follows these selected pixels across the video’s frames. The output is a dataset where each frame’s pixels contain 3D coordinates for tracked foreground points; background pixel data is set to zero.

read the caption

Figure 3: PointVid Dataset Generation Workflow. Given an input video, we use the first frame as a reference frame and perform semantic segmentation to obtain masks for foreground objects. Next, we randomly sample pixels with a distribution favoring pixels inside foreground objects. We perform 3D point tracking on these queried pixels, and map these points to the input video frames. The resulting data point contains 3D coordinates of tracked foreground pixels while remaining pixels are zeroed out.

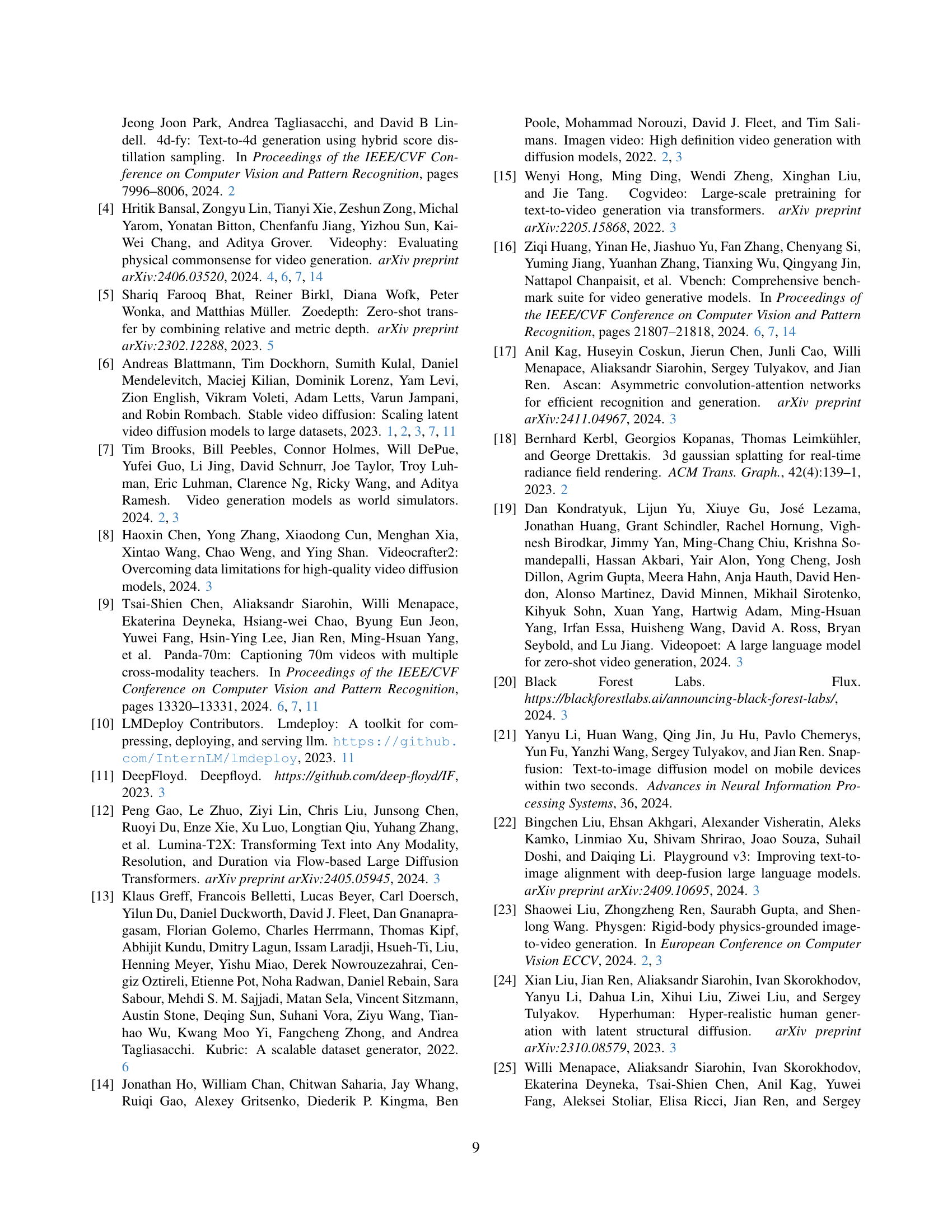

🔼 This figure demonstrates the effectiveness of the proposed point regularization method. The middle column shows a point cloud with noise and deformations, a common issue in diffusion-based video generation. The right column displays the result after applying the point regularization, showcasing a significantly improved, cleaner point cloud. The examples used are synthetic point clouds generated by Kubric [13] and subsequently processed using the authors’ pipeline, illustrating how the method addresses common issues like noise reduction and deformation correction in point cloud reconstructions.

read the caption

Figure 4: Point Regularization. The reconstructed point cloud in the diffusion output often contains noise and deformations (middle). This issue is mitigated using our point regularization (right). The synthetic point cloud above (e.g. box and shoes falling on the ground) is generated by Kubric [13] and trained with our pipeline.

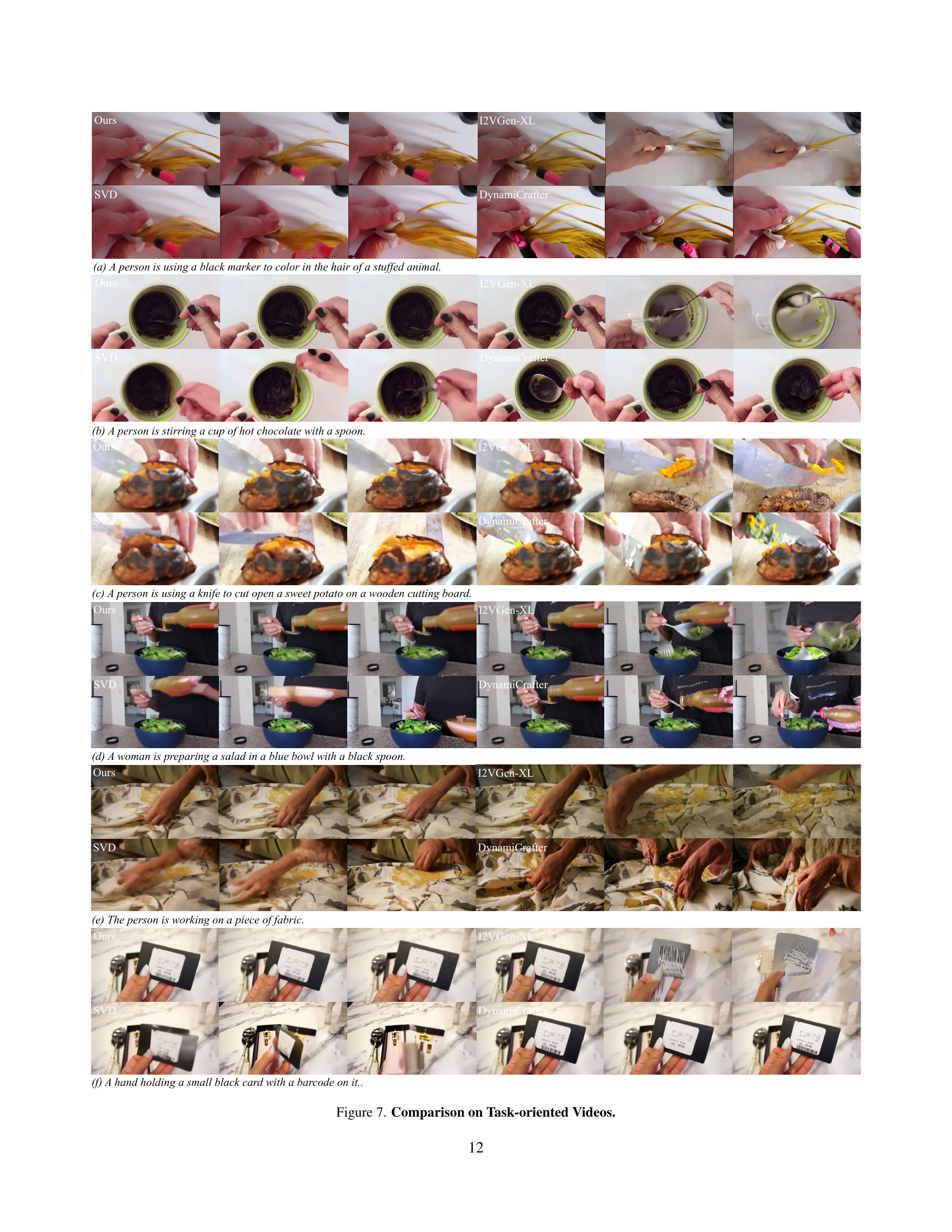

🔼 This figure shows an ablation study comparing three models: a baseline model trained only on 2D video data, a model with point augmentation (adding 3D point cloud information), and a model with both point augmentation and regularization. The results demonstrate that while point augmentation alone introduces a degree of 3D awareness, leading to improved generation quality compared to the baseline, it still produces some artifacts. Applying regularization to the model further improves the quality by mitigating these artifacts and resulting in more physically plausible video generation.

read the caption

Figure 5: Ablation on Point Augmentation and Regularization. Our point-augmented model demonstrates a degree of 3D-awareness compared to the model fine-tuned on video data alone, but it still exhibits some artifacts, which are mitigated through regularization.

🔼 Figure 6 presents a comparison of videos generated by different methods across various categories, including static objects, dynamic objects, humans and animals. The figure demonstrates the superiority of the proposed method (Ours) in producing videos with smooth transitions between object shapes and motions, eliminating artifacts such as object morphing that are present in the baseline methods (I2VGen-XL, SVD, DynamiCrafter). Each row shows examples of the same scene generated by the different approaches, highlighting the visual differences and the improved results obtained using the method described in the paper.

read the caption

Figure 6: Comparison on General Videos. We showcase the generated videos across various categories, including static and dynamic objects, humans, and animals. Our method ensures smooth transitions in object shape and motion and eliminates morphing artifacts.

🔼 Figure 7 presents a comparison of task-oriented video generation results across different models: the proposed method, SVD [6], I2VGen-XL [41], and DynamiCrafter [37]. Each row shows a different task, such as coloring a stuffed animal, stirring hot chocolate, cutting a sweet potato, preparing a salad, working on fabric, and holding a card. The goal is to highlight the ability of each method to realistically portray hand-object interaction and maintain consistent object shapes and motions throughout the video. The proposed method aims to demonstrate improved shape preservation, motion smoothness, and overall realism compared to existing baselines.

read the caption

Figure 7: Comparison on Task-oriented Videos.

🔼 Figure 8 presents a qualitative comparison of video generation results across various general video categories, showcasing the performance of the proposed method alongside several baselines (Ours, SVD, I2VGen-XL, and DynamiCrafter). The figure displays example video frames generated by each method for a selection of diverse scenes, including a gymnast on a pommel horse, a person on a rooftop, someone petting a fox, and metal melting in a furnace. These examples highlight the capabilities of the proposed approach to generate videos with improved realism and physical consistency compared to the baselines, particularly in terms of object motion, shape preservation, and overall coherence.

read the caption

Figure 8: Comparison on General Categories.

Full paper#