TL;DR#

Current image-to-video generation models often lack intuitive controls for shot design, especially concerning camera and object motions. This makes it difficult for users to realize their creative vision for video content. Existing methods frequently rely on textual descriptions or cumbersome bounding box annotations, limiting expressiveness and precision. Furthermore, the intertwining nature of camera and object motion makes it hard for systems to correctly interpret user intent.

MotionCanvas overcomes these limitations by introducing a user-friendly interface that allows for intuitive control of both camera and object motions in a scene-aware manner. It achieves this by translating user-specified scene-space motion intents into spatiotemporal motion-conditioning signals for a video diffusion model. The key innovation is a Motion Signal Translation module that effectively bridges the gap between 3D scene-space understanding and 2D screen-space requirements of video generation models. The method is demonstrated to be effective in various scenarios, showcasing enhanced creative workflows for video editing and content creation.

Key Takeaways#

Why does it matter?#

This paper is important as it presents MotionCanvas, a novel framework for controllable image-to-video generation, addressing the limitations of existing methods. It offers intuitive controls for both camera and object motion, improving creative workflows and opening avenues for advancements in video editing and animation.

Visual Insights#

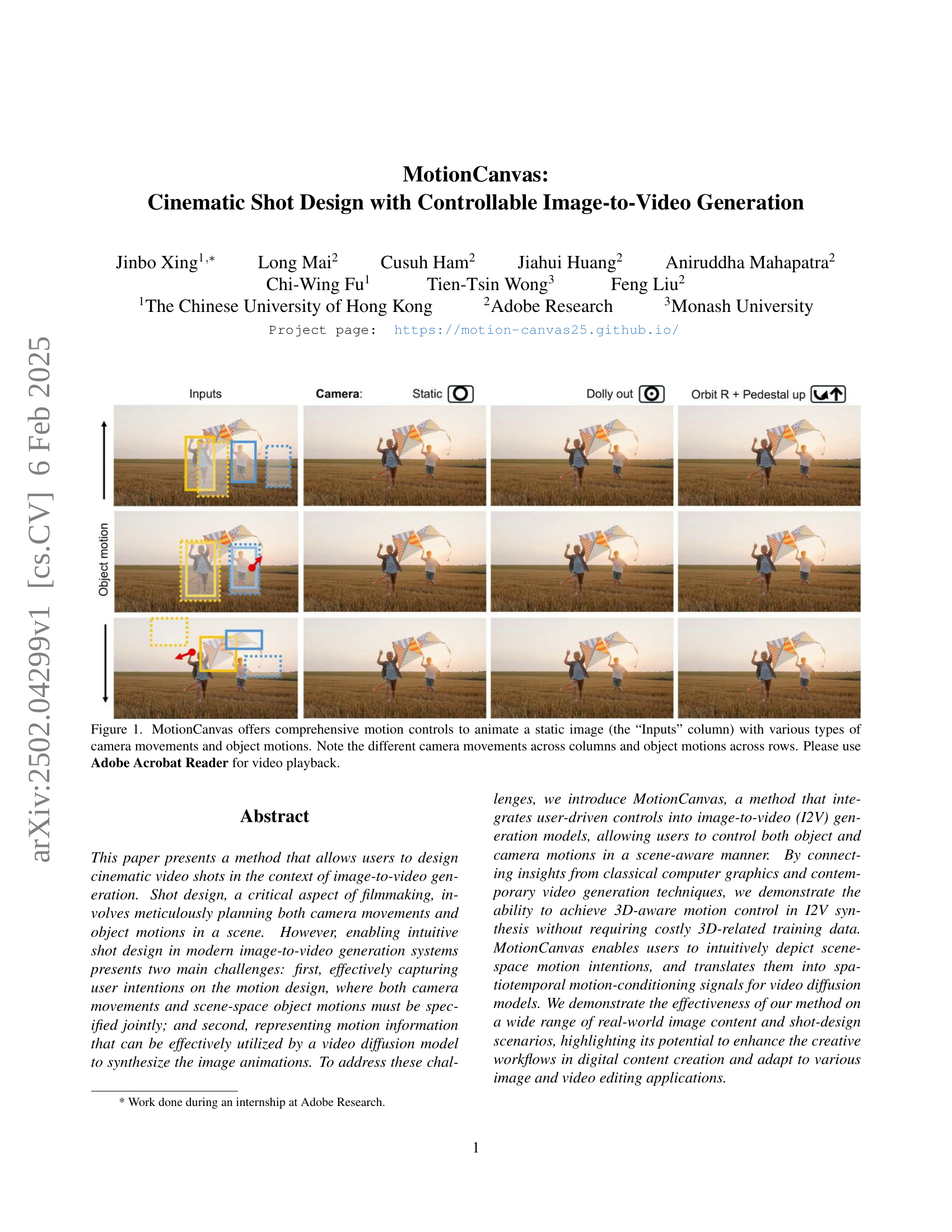

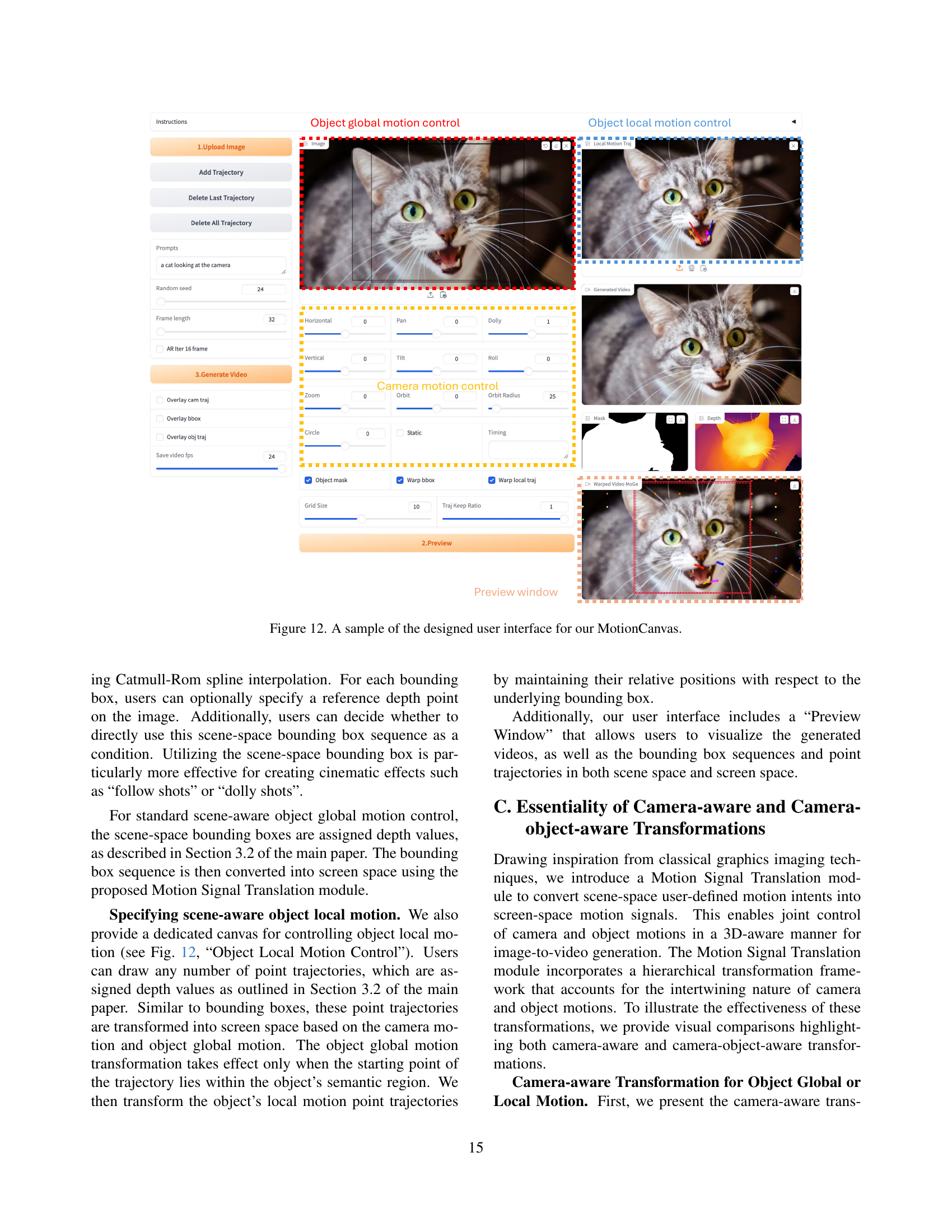

🔼 This figure demonstrates the core functionality of MotionCanvas, showcasing its ability to generate diverse video animations from a single static image. The ‘Inputs’ column displays the initial static image, which serves as the foundation for all generated videos. Each row represents a unique type of object motion, while each column shows a different camera movement. By systematically varying both object and camera motions, the figure visually highlights the comprehensive control MotionCanvas offers over the image-to-video generation process. To view the animations, please use Adobe Acrobat Reader.

read the caption

Figure 1: MotionCanvas offers comprehensive motion controls to animate a static image (the “Inputs” column) with various types of camera movements and object motions. Note the different camera movements across columns and object motions across rows. Please use Adobe Acrobat Reader for video playback.

| Method | RotErr | TransErr | CamMC | FVD | FID |

|---|---|---|---|---|---|

| MotionCtrl | 0.8460 | 0.2567 | 1.2455 | 48.03 | 11.34 |

| CameraCtrl | 0.6355 | 0.2332 | 0.9532 | 39.46 | 13.14 |

| Ours∗ | 0.6334 | 0.2188 | 0.9453 | 34.09 | 7.60 |

🔼 This table presents a quantitative comparison of the MotionCanvas model with state-of-the-art methods for image-to-video generation on the RealEstate 10K dataset. The comparison uses a subset of 1,000 test images. Metrics include Rotation Error (RotErr), Translation Error (TransErr), Camera Motion Control (CamMC), Fréchet Video Distance (FVD), and Fréchet Inception Distance (FID). The asterisk (*) indicates that the results for MotionCanvas were obtained without fine-tuning on the dataset (zero-shot performance).

read the caption

Table 1: Quantitative comparison with state-of-the-art methods on the RealEstate10K test set (1K). ∗ denotes zero-shot performance.

In-depth insights#

I2V Motion Control#

Image-to-video (I2V) motion control is a crucial aspect of realistic video generation, bridging the gap between static images and dynamic scenes. Effective I2V motion control enables users to precisely define and manipulate both camera movements and object motions within a scene, resulting in greater creative flexibility and higher-quality videos. Existing methods often struggle with the intricacy of jointly specifying these motions, leading to ambiguity and limitations in expressiveness. A key challenge lies in effectively translating high-level user intentions into low-level control signals that video generation models can readily interpret. Successful I2V motion control systems require intuitive user interfaces, robust motion representation schemes (such as bounding boxes or point trajectories), and sophisticated algorithms to map user input to model-compatible signals. Furthermore, the ability to handle complex and coordinated motion sequences, and to generate long videos with natural transitions between shots, is a significant challenge that demands further research. Ultimately, the goal is to create I2V systems that allow users to seamlessly and intuitively create dynamic videos with the same level of fine-grained control available in traditional video editing software.

3D-Aware Design#

A 3D-aware design approach in image-to-video generation is crucial for creating realistic and engaging animations. It allows for a more intuitive and natural design process by enabling users to plan camera and object movements within a three-dimensional space, unlike 2D screen-space approaches. This 3D understanding allows for a more accurate representation of user intent, reducing ambiguity and improving the overall quality and coherence of the generated videos. For example, 3D awareness is essential for coordinating camera movements with object interactions. A camera dolly-zoom effect, where the camera moves closer to a subject while simultaneously zooming out, would be challenging to achieve without 3D spatial understanding. A 3D-aware system can capture these spatial relationships accurately. Furthermore, 3D awareness facilitates handling of object occlusion and depth effects naturally, leading to more visually convincing results. The resulting videos are more aligned with intuitive design principles and produce a more immersive and realistic viewing experience. Challenges may include the computational cost of 3D processing and the need for robust 3D scene reconstruction from 2D inputs, but the benefits significantly outweigh the difficulties for achieving high-quality, controllable video generation.

Motion Signal Trans.#

The core of MotionCanvas lies in its ability to translate high-level user intentions into low-level signals a video diffusion model can understand. The “Motion Signal Translation” module is crucial to this process; it acts as a bridge between intuitive 3D scene-space motion design (as performed by the user) and the 2D screen-space signals required by the video generation model. This translation is non-trivial because users naturally think in 3D scene coordinates, while the model operates on 2D screen projections. The module’s sophistication comes from its depth awareness and handling of the interplay between camera and object motions. It accounts for perspective distortions and camera movement, translating 3D bounding boxes and point trajectories to accurately reflect intended screen-space movement. The depth estimation step is essential; without it, the 2D projection would be inaccurate and lead to unrealistic animations. Essentially, this module elegantly solves the problem of mapping user-specified motion onto the constraints of the underlying model, enabling a significant improvement in realism and user control over the generated videos. The use of point tracking for camera movement and bounding box sequences for object motion allows for robust and flexible representation of motion intent.

DiT-Based I2V#

DiT-Based I2V, or Diffusion Transformer-based Image-to-Video, represents a significant advancement in AI-driven video generation. It leverages the power of diffusion models, known for their ability to create high-quality images, and extends this capability to the temporal domain of video. The DiT architecture likely incorporates transformer networks, allowing it to effectively process and model long-range dependencies within video sequences. This is crucial for generating coherent and realistic video animations, as it captures the complex interplay between consecutive frames. The use of a pre-trained DiT model likely provides a strong foundation for the I2V task, potentially reducing training time and improving the overall quality of the generated videos. However, a key challenge with diffusion-based models, including DiT-based I2V, is computational cost; generating high-resolution videos can be very demanding. Therefore, optimization techniques, such as efficient inference strategies or model compression, are critical for practical applications. Further research might explore incorporating additional conditioning mechanisms, beyond simple image inputs, such as text descriptions or motion control signals, to enhance the level of user control and creative expression within DiT-based I2V systems. The success of such methods hinges on effectively handling these conditioning signals within the DiT framework.

Future I2V Research#

Future research in image-to-video (I2V) generation should prioritize enhanced controllability and user experience. Current methods often lack fine-grained control over both camera and object motion, hindering creative expression. More intuitive interfaces are needed, enabling users to easily specify complex spatiotemporal dynamics. Addressing limitations in handling long videos and complex scenes is also crucial; current models often struggle with temporal consistency and detailed object interactions. Improving the efficiency of the models is another important aspect; current methods are computationally expensive. Exploring hybrid approaches combining generative models with classical computer graphics techniques could offer greater control and realism. Furthermore, research into 3D-aware I2V generation is critical; this could provide greater scene understanding and more realistic motion synthesis without the need for explicit 3D data. Finally, future work should focus on developing robust methods for handling various video styles and object categories, making I2V tools more accessible and versatile for diverse creative applications.

More visual insights#

More on figures

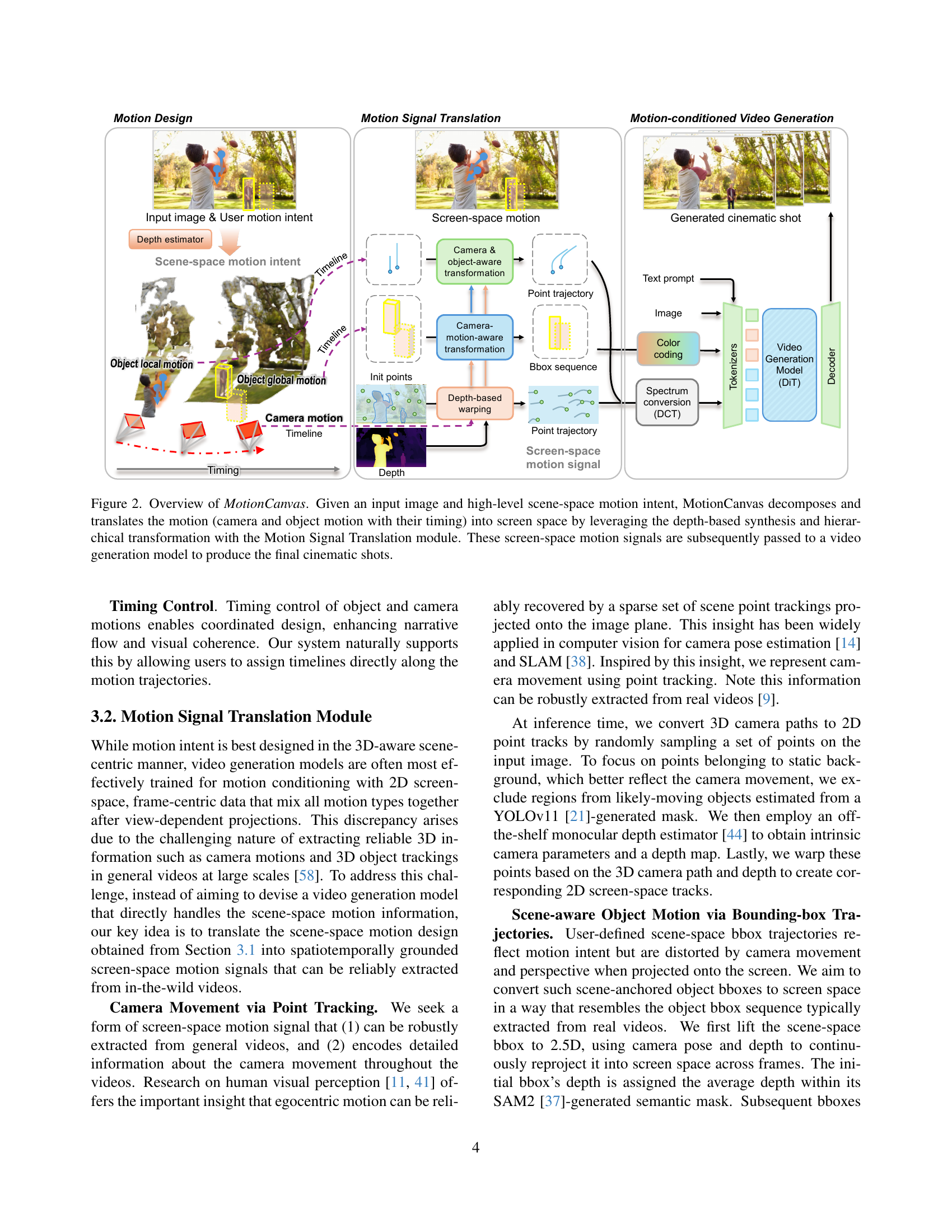

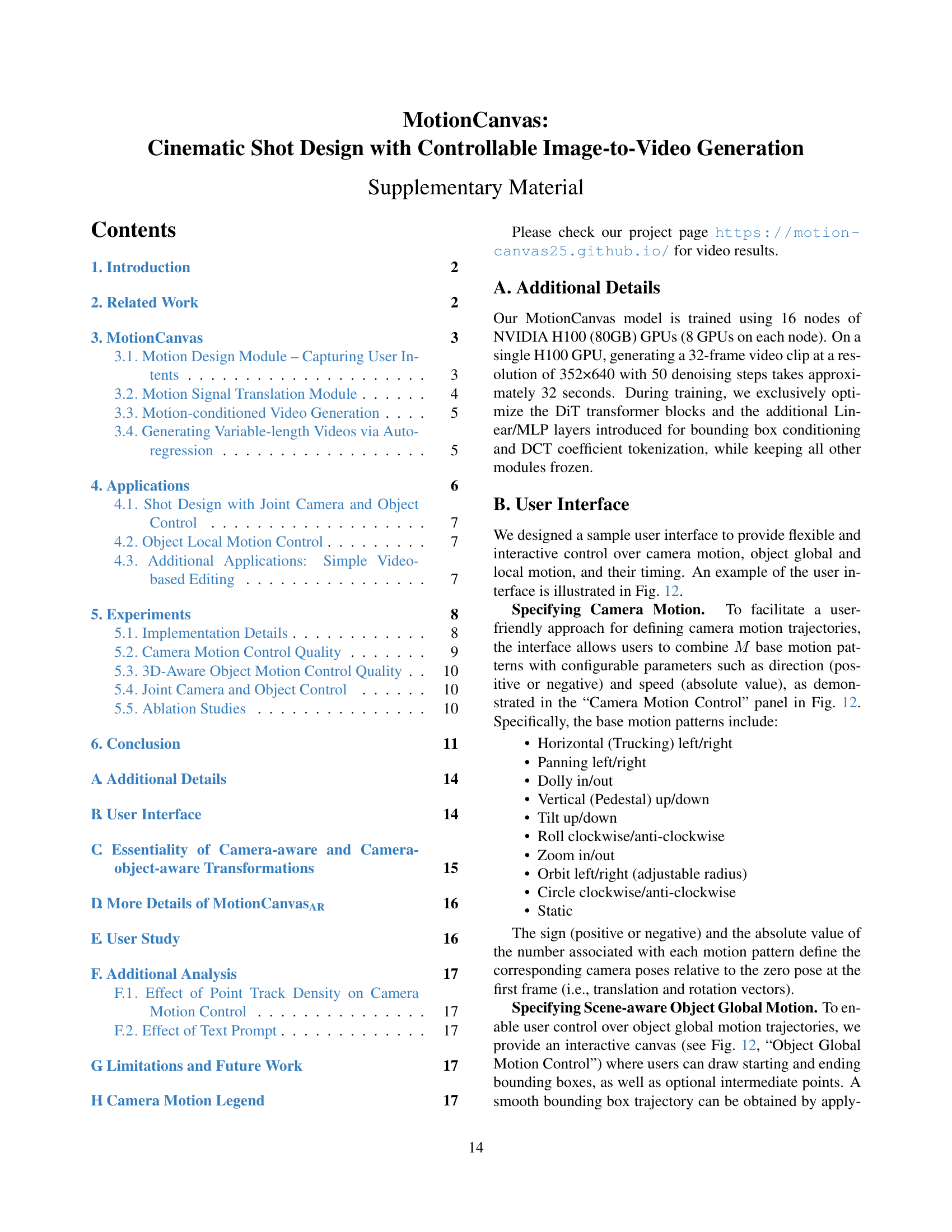

🔼 MotionCanvas processes user-specified scene-space motion into screen-space representations for video generation. It takes an input image and high-level motion intentions (camera movements and object manipulations with timing). The Motion Signal Translation module converts these scene-space motions to screen-space signals using depth-based synthesis and hierarchical transformations. These screen-space signals then guide a video generation model, creating the final cinematic video.

read the caption

Figure 2: Overview of MotionCanvas. Given an input image and high-level scene-space motion intent, MotionCanvas decomposes and translates the motion (camera and object motion with their timing) into screen space by leveraging the depth-based synthesis and hierarchical transformation with the Motion Signal Translation module. These screen-space motion signals are subsequently passed to a video generation model to produce the final cinematic shots.

🔼 This figure illustrates the architecture of the motion-conditioned video generation model used in MotionCanvas. The process begins with an input image and bounding boxes (bboxes) representing objects. These are encoded using a 3D Variational Autoencoder (VAE). The encoded bboxes are combined with other conditional information (e.g., point trajectories, text prompts) and then fed into a Diffusion Transformer (DiT)-based video generation model. The DiT model processes this combined information to generate the final video output.

read the caption

Figure 3: Illustration of our motion-conditioned video generation model. The input image and bbox color frames are tokenized via a 3D-VAE encoder and then summed. The resultant tokens are concatenated with other conditional tokens, and fed into the DiT-based video generation model.

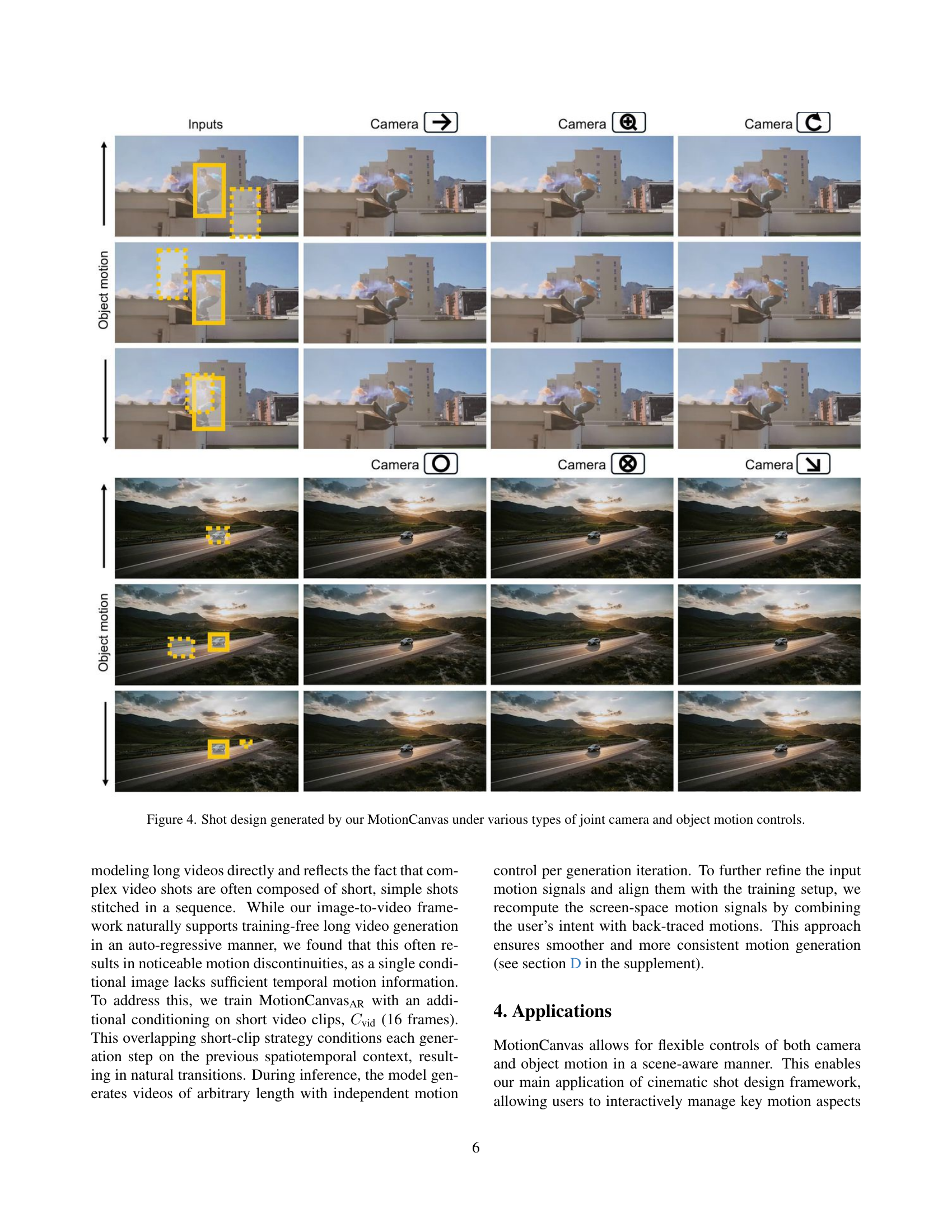

🔼 This figure shows examples of video shots generated by MotionCanvas, demonstrating its ability to control both camera and object movements simultaneously. Each row represents a different type of object motion (e.g., static objects, objects moving along a track, etc.) applied to the same input image. Each column illustrates a different camera motion technique (e.g., static camera, dolly zoom, orbit, etc.). This figure highlights the versatility and controllability of the MotionCanvas system in creating diverse cinematic shots from a single still image.

read the caption

Figure 4: Shot design generated by our MotionCanvas under various types of joint camera and object motion controls.

🔼 This figure showcases the ability of MotionCanvas to generate long videos with complex and consistent camera movements, while simultaneously demonstrating control over distinct object motions within each video. Multiple videos are shown, all sharing identical camera trajectories but exhibiting varied object movements, highlighting the model’s capacity for independent control of camera and object animation.

read the caption

Figure 5: Long videos with the same complex sequences of camera motion while different object motion controls in each case generated by our MotionCanvas.

🔼 This figure showcases the capabilities of MotionCanvas in generating videos with intricate and nuanced control over object motion. The top row displays examples of videos featuring diverse and fine-grained local object motions, demonstrating the system’s ability to precisely manipulate individual object parts. The bottom row presents videos where these local object movements are seamlessly integrated with camera motion controls, highlighting the system’s capacity to coordinate complex scene-space actions for cinematic shot design.

read the caption

Figure 6: Generated videos with diverse and fine-grained local motion controls (upper), and in coordination with camera motion control (bottom).

🔼 This figure demonstrates the versatility of MotionCanvas in video editing applications. The top row showcases motion transfer, where the motion from a source video is seamlessly applied to a different image. The bottom row exemplifies the ability to modify video content by adding, removing, or changing objects within the scene.

read the caption

Figure 7: Results when our method is applied for: (upper) motion transfer, and (bottom) video editing for changing objects, adding and removing objects.

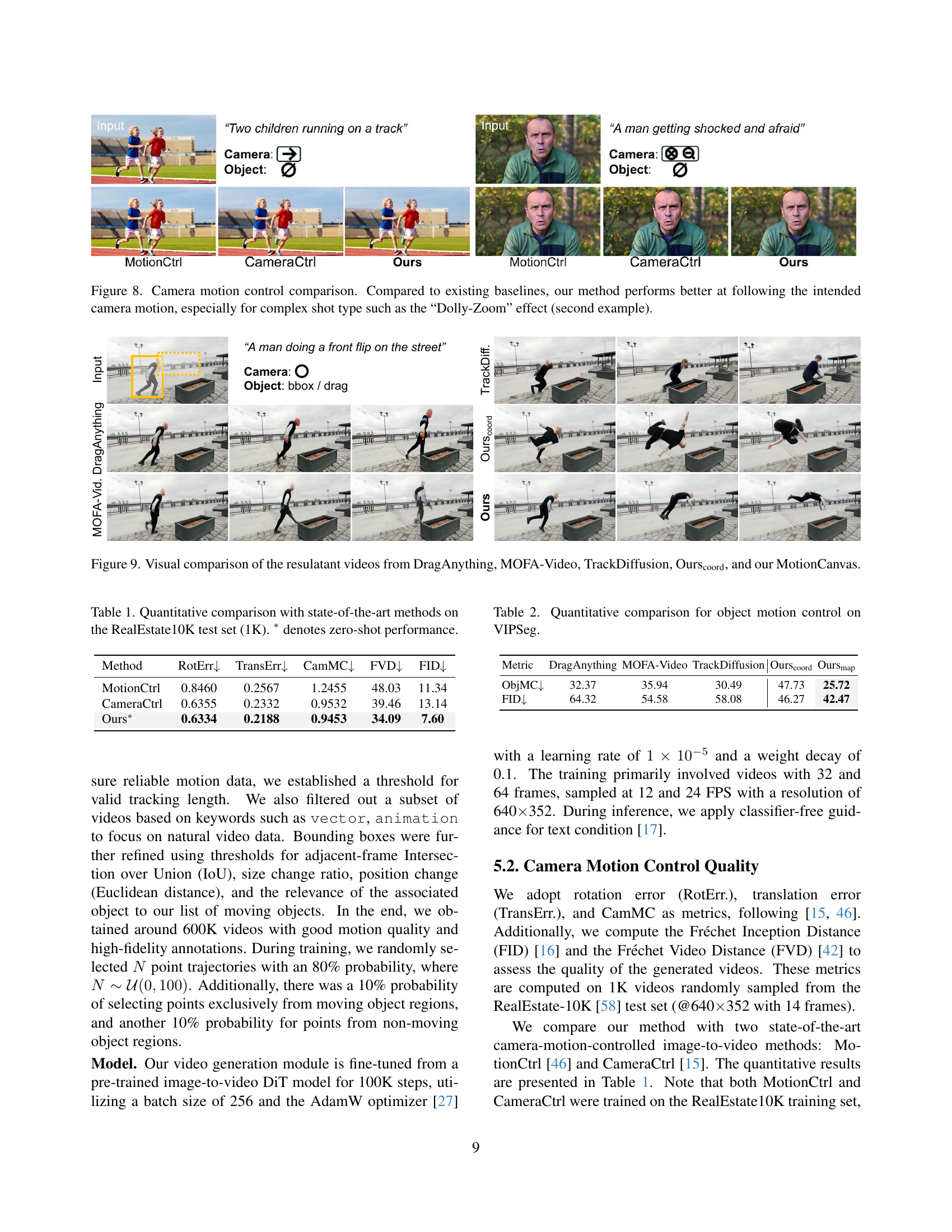

🔼 This figure demonstrates a comparison of camera motion control between MotionCanvas and two existing baselines (MotionCtrl and CameraCtrl) across different shot designs. The results show that MotionCanvas is more effective at accurately reproducing intended camera movements. The comparison is particularly striking in the second example, a complex ‘Dolly-Zoom’ shot, where MotionCanvas achieves significantly better accuracy.

read the caption

Figure 8: Camera motion control comparison. Compared to existing baselines, our method performs better at following the intended camera motion, especially for complex shot type such as the “Dolly-Zoom” effect (second example).

🔼 Figure 9 presents a visual comparison of video generation results from five different methods: DragAnything, MOFA-Video, TrackDiffusion, Ourscoord, and MotionCanvas. Each method was given the same input image and task of animating a person performing a front flip. The comparison highlights the differences in motion quality, smoothness, and adherence to the intended motion parameters between various approaches. It showcases how MotionCanvas improves upon existing methods by generating more natural and realistic animations with better control and accuracy in object and camera movement.

read the caption

Figure 9: Visual comparison of the resulatant videos from DragAnything, MOFA-Video, TrackDiffusion, Ourscoordcoord{}_{\text{coord}}start_FLOATSUBSCRIPT coord end_FLOATSUBSCRIPT, and our MotionCanvas.

More on tables

| Metric | DragAnything | MOFA-Video | TrackDiffusion | Ours | Ours |

|---|---|---|---|---|---|

| ObjMC | 32.37 | 35.94 | 30.49 | 47.73 | 25.72 |

| FID | 64.32 | 54.58 | 58.08 | 46.27 | 42.47 |

🔼 This table presents a quantitative comparison of different methods for object motion control using the VIPSeg dataset. Metrics used likely include measures of accuracy (how well the generated object motion matches the intended motion), quality (how realistic or natural the motion appears), and possibly others such as the Fréchet Inception Distance (FID), which evaluates the visual similarity of generated videos to real ones. The table compares the performance of the proposed ‘MotionCanvas’ method against several state-of-the-art baselines, allowing for a quantitative evaluation of its effectiveness in controlling object motion.

read the caption

Table 2: Quantitative comparison for object motion control on VIPSeg.

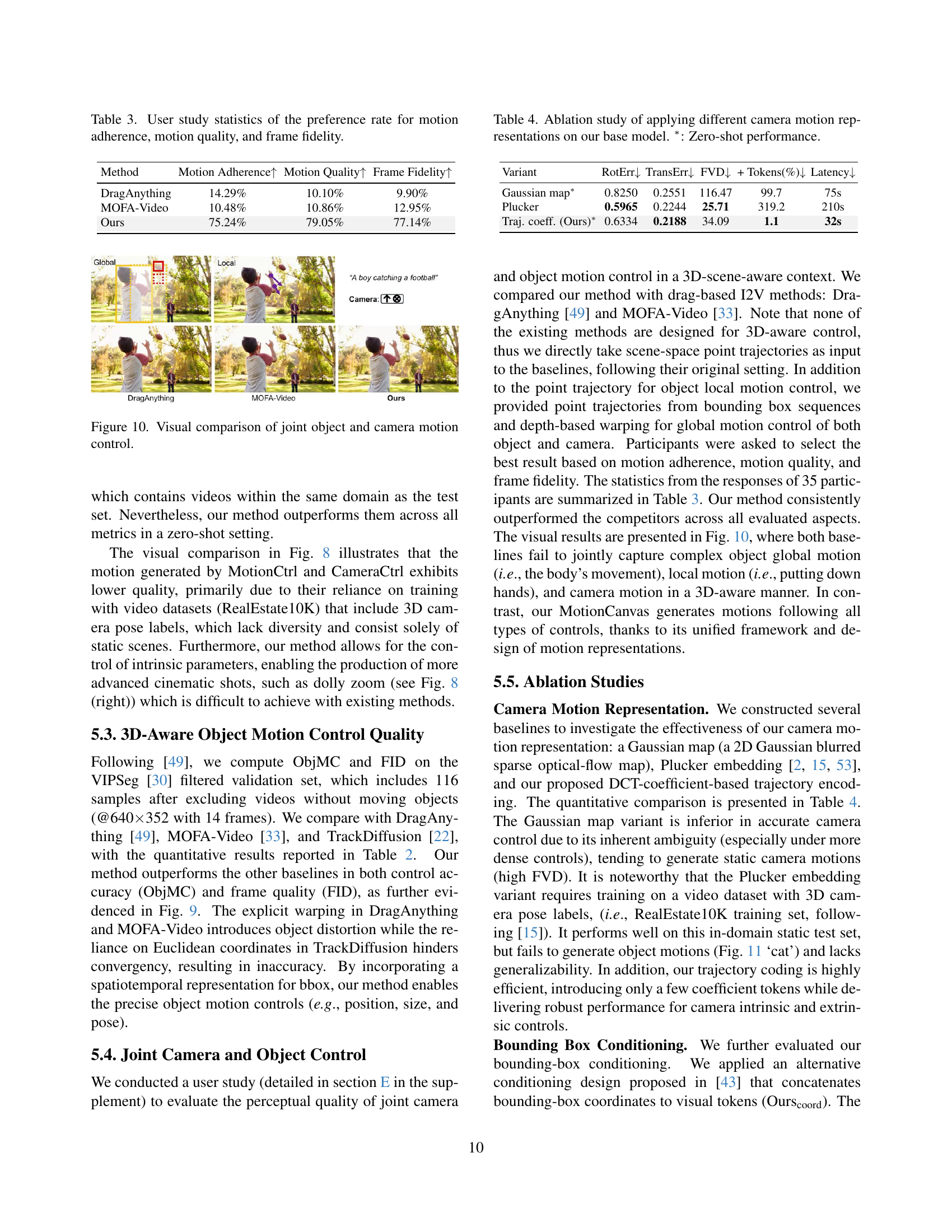

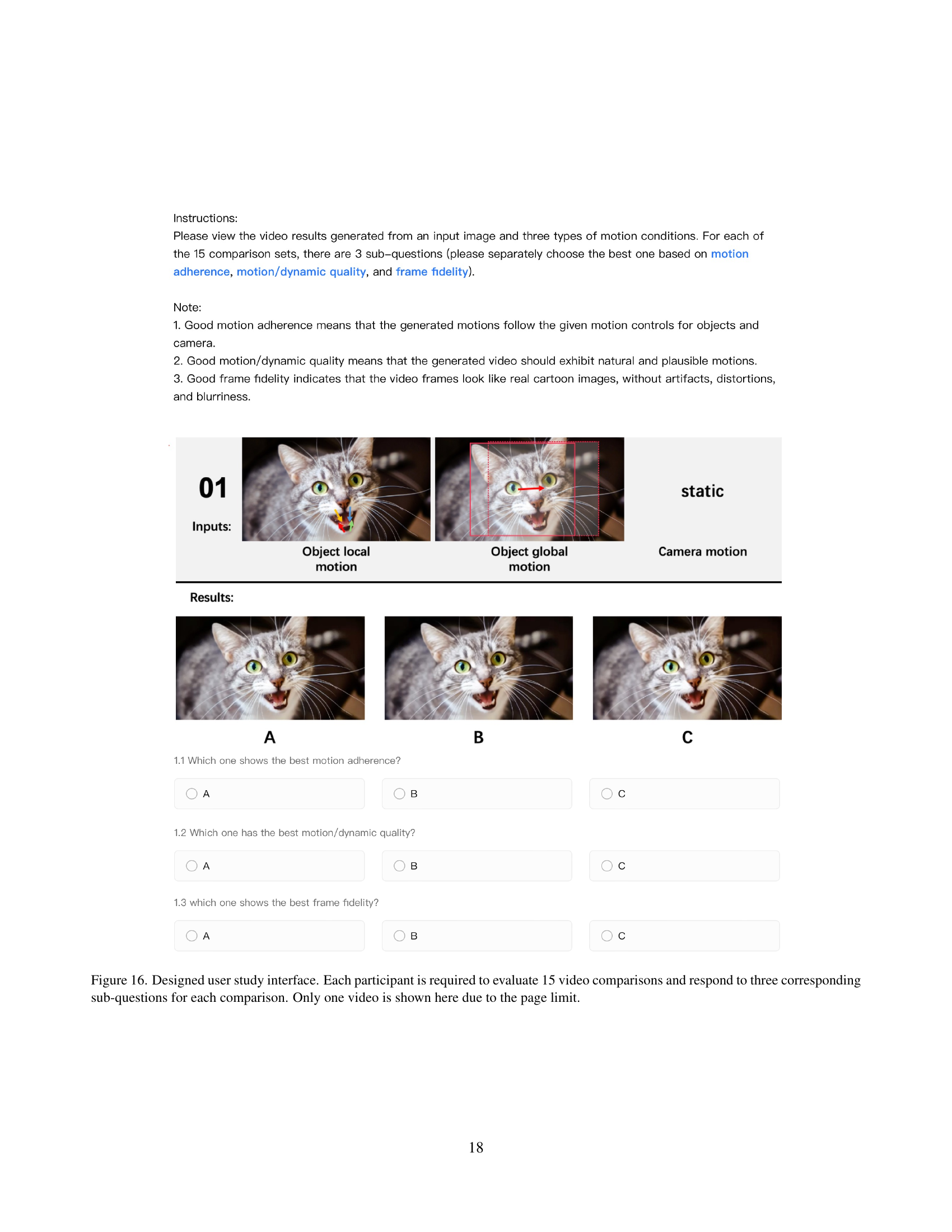

| Method | Motion Adherence | Motion Quality | Frame Fidelity |

|---|---|---|---|

| DragAnything | 14.29% | 10.10% | 9.90% |

| MOFA-Video | 10.48% | 10.86% | 12.95% |

| Ours | 75.24% | 79.05% | 77.14% |

🔼 This table presents the results of a user study comparing three different methods for generating videos with motion control: DragAnything, MOFA-Video, and MotionCanvas. Participants rated each method based on three criteria: motion adherence (how well the generated motion matched the intended motion), motion quality (how natural and realistic the motion appeared), and frame fidelity (the visual quality of the generated video frames). The table shows the percentage of times each method was preferred for each criterion.

read the caption

Table 3: User study statistics of the preference rate for motion adherence, motion quality, and frame fidelity.

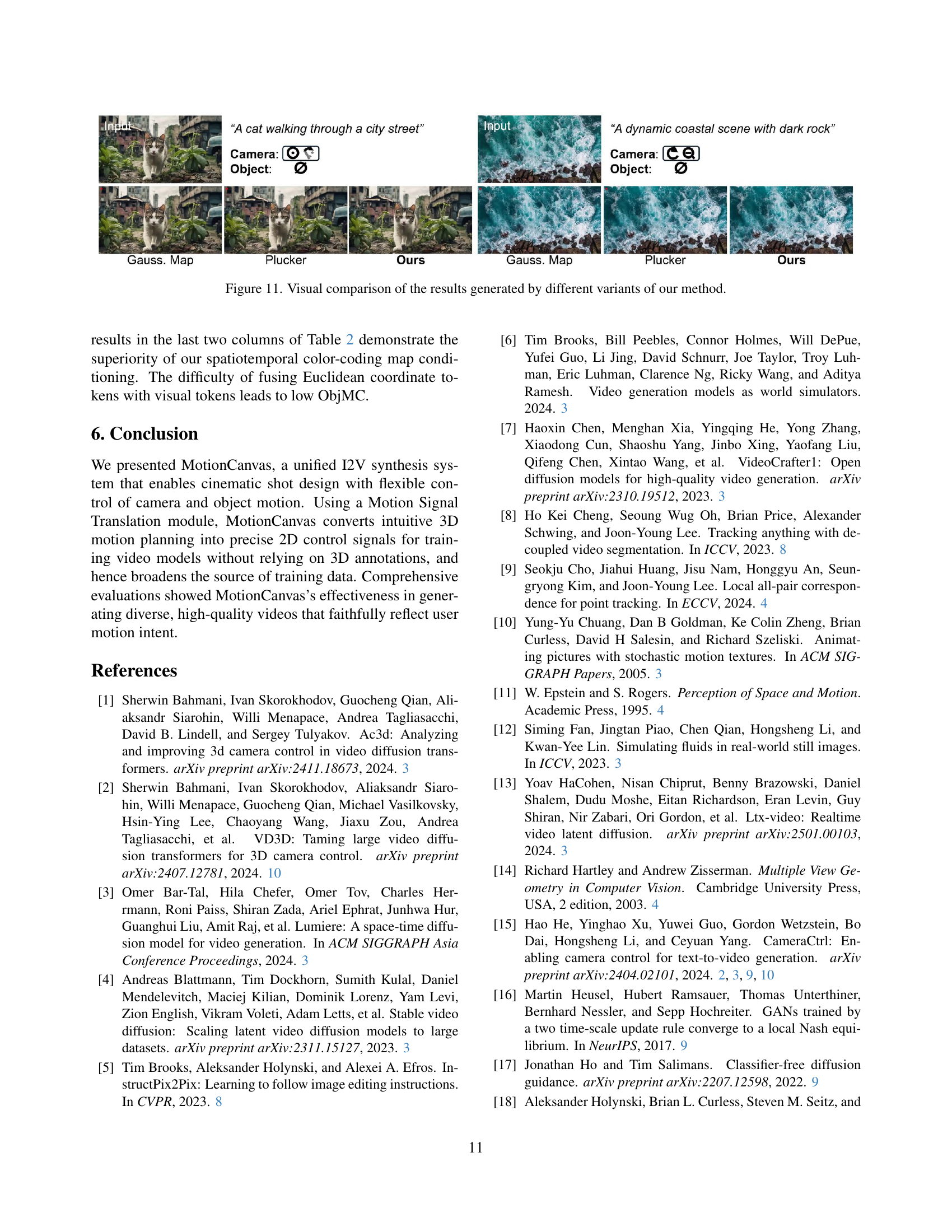

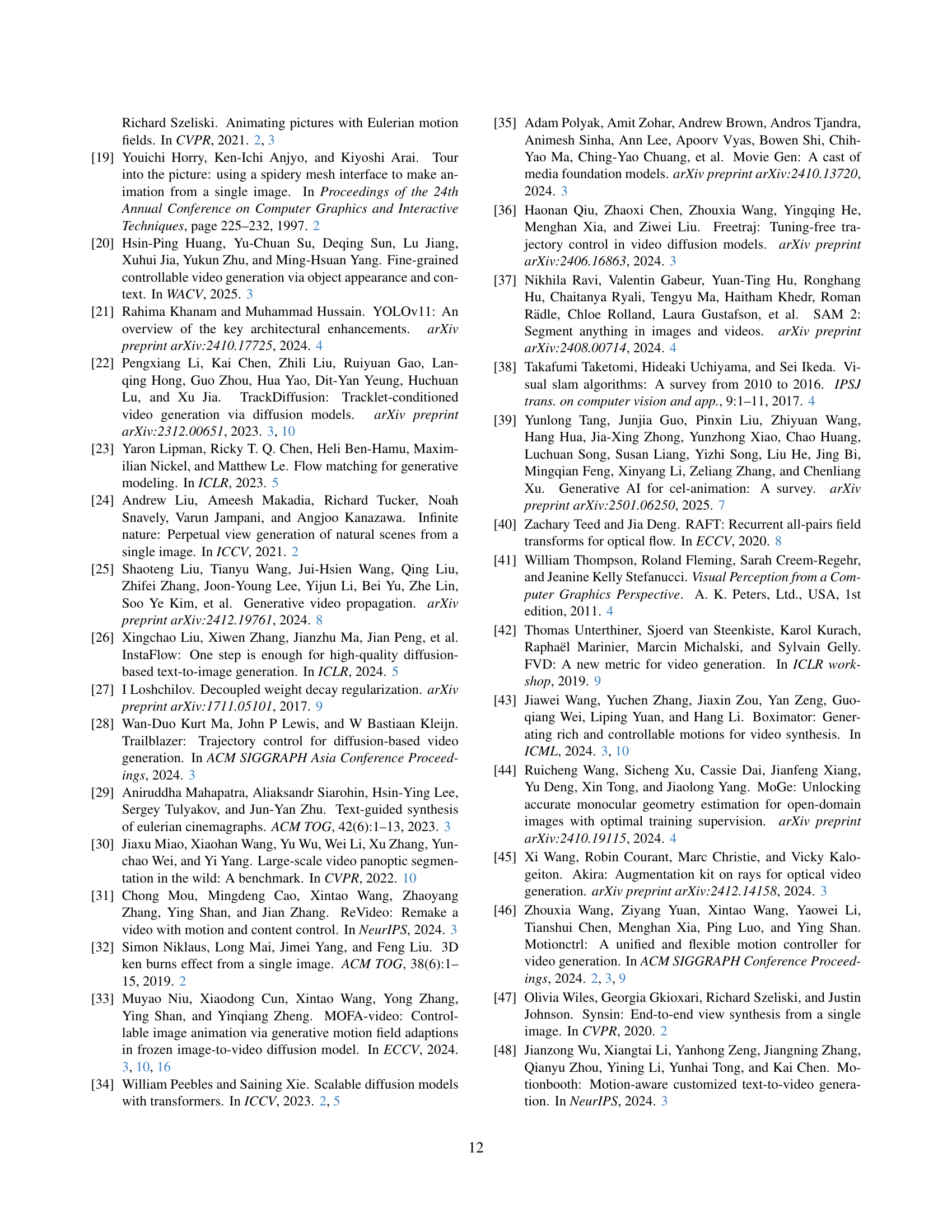

| Variant | RotErr | TransErr | FVD | + Tokens(%) | Latency |

|---|---|---|---|---|---|

| Gaussian map∗ | 0.8250 | 0.2551 | 116.47 | 99.7 | 75s |

| Plucker | 0.5965 | 0.2244 | 25.71 | 319.2 | 210s |

| Traj. coeff. (Ours)∗ | 0.6334 | 0.2188 | 34.09 | 1.1 | 32s |

🔼 This ablation study investigates the impact of different camera motion representations on the performance of the MotionCanvas model. The base model is tested with three different camera motion representations: Gaussian map, Plucker coordinates, and the proposed trajectory coefficients. The table shows the results for each representation, including rotation error (RotErr), translation error (TransErr), Fréchet Video Distance (FVD), the number of additional tokens required, and the inference time (Latency). The study demonstrates that the proposed trajectory coefficient representation outperforms other methods in both quantitative metrics and efficiency. The * indicates that the results are obtained without any fine-tuning on the target dataset (Zero-shot performance).

read the caption

Table 4: Ablation study of applying different camera motion representations on our base model. ∗: Zero-shot performance.

Full paper#