TL;DR#

Current text-to-video generation models are computationally expensive, requiring powerful GPUs and substantial memory. This limits accessibility and increases costs. The paper addresses this limitation by focusing on mobile devices, which poses unique challenges in terms of processing power and memory capacity. Existing methods for video generation are not optimized for resource-constrained environments, leading to significant performance bottlenecks.

The researchers introduce On-device Sora, a novel framework that tackles these challenges using three key techniques: Linear Proportional Leap (reduces denoising steps), Temporal Dimension Token Merging (minimizes token processing), and Concurrent Inference with Dynamic Loading (efficiently manages memory). Their experiments show that On-device Sora produces high-quality videos comparable to those generated by state-of-the-art models running on powerful GPUs, but directly on an iPhone 15 Pro. This demonstrates a significant leap towards democratizing video generation technology, making it accessible and affordable for a wider range of users and applications.

Key Takeaways#

Why does it matter?#

This paper is important because it demonstrates the feasibility of high-quality video generation directly on mobile devices—a significant advancement with implications for accessibility, privacy, and cost-effectiveness. It opens new avenues for research in efficient diffusion models, addressing the challenges of resource-constrained environments. The open-sourcing of the code further accelerates progress in this rapidly evolving field.

Visual Insights#

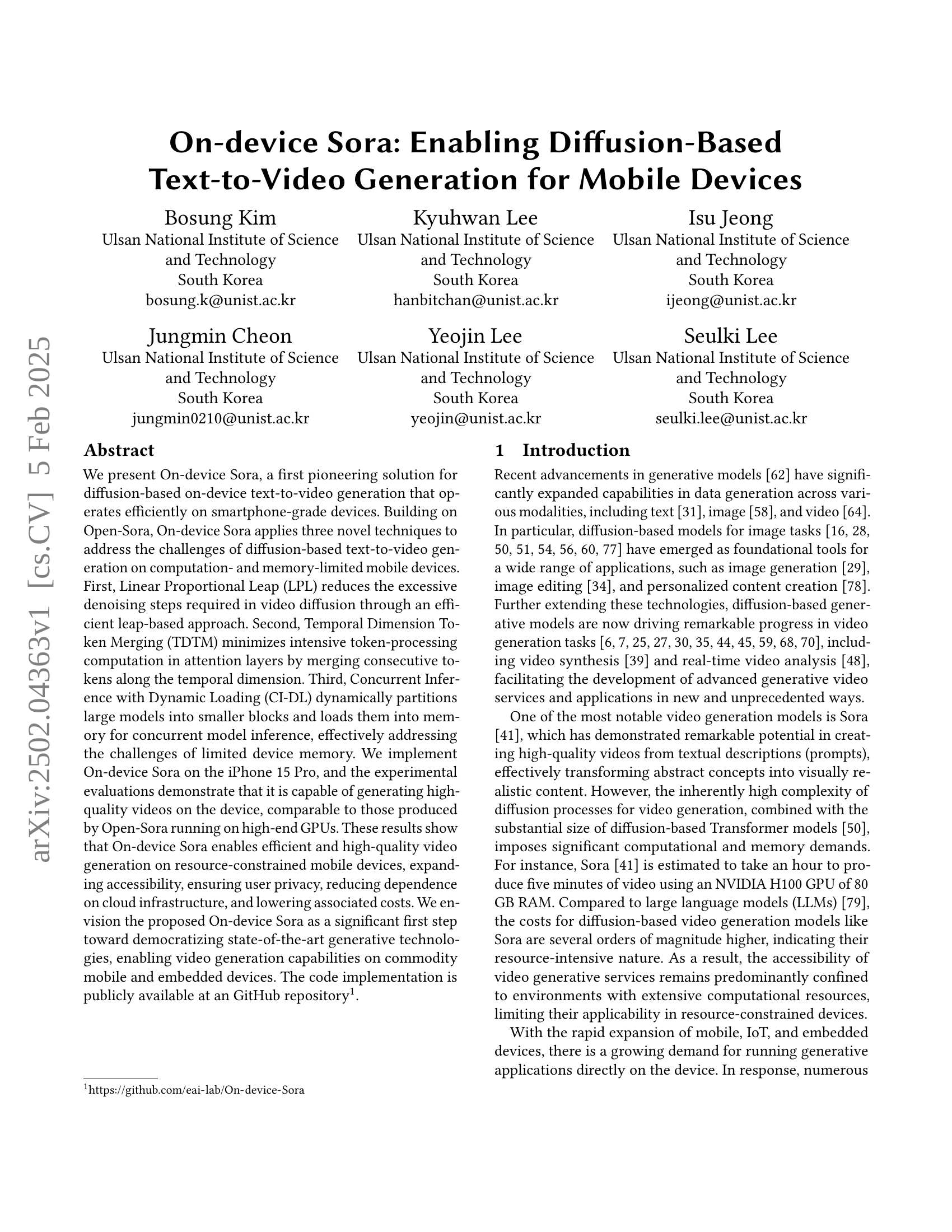

🔼 Open-Sora is a text-to-video generation model. The figure illustrates the three main stages of its video generation process. First, text input (a prompt describing the desired video) is converted into a numerical representation (embedding). Second, this embedding is used as input for a latent video generation step which produces a compressed, encoded representation of the video. Finally, this latent video representation is decoded and upscaled to create a full-resolution, viewable video output.

read the caption

Figure 1. Open-Sora (Zheng et al., 2024) generates realistic videos from the user prompt (text) through three stages: 1) prompt embedding, 2) latent video generation, and 3) video decoding.

| Component |

|

|

| |||

|---|---|---|---|---|---|---|

| T5 (Raffel et al., 2020) | 1 | 110.505 | 110.505 | |||

| STDiT (Zheng et al., 2024) | 50 | 35.366 | 1768.320 | |||

| VAE (Doersch, 2016) | 1 | 135.047 | 135.047 |

🔼 This table details the computational cost of running the Open-Sora model on an iPhone 15 Pro. It breaks down the number of times each component of the model (T5 for text embedding, STDiT for latent video generation, and VAE for video decoding) is executed, along with the time each execution takes and the total time spent on each component. This provides a clear illustration of the model’s performance bottlenecks and the computational burden placed on the mobile device. The information presented is crucial for understanding the challenges involved in adapting a high-performance model for mobile deployment.

read the caption

Table 1. The number of executions (iterations) of each model component (i.e., T5 (Raffel et al., 2020), STDiT (Zheng et al., 2024), and VAE (Doersch, 2016)) in Open-Sora (Zheng et al., 2024) and their total latencies on iPhone 15 Pro (Apple, 2023).

In-depth insights#

On-device Diffusion#

On-device diffusion models represent a significant advancement in AI, enabling the deployment of powerful generative models on resource-constrained devices like smartphones. The core challenge lies in optimizing these models for reduced computational cost and memory footprint, which is crucial for real-time or near real-time performance on mobile hardware. This optimization typically involves techniques like model quantization, pruning, and architectural modifications to reduce model size and improve efficiency. Techniques such as Linear Proportional Leap and Temporal Dimension Token Merging aim to further optimize the diffusion process, reducing the number of iterations required and lessening the computational burden of processing high-dimensional data. Another key aspect is concurrent inference, which seeks to maximize hardware utilization and reduce overall processing time. The ability to perform diffusion-based generation directly on the device offers several key advantages, including improved user privacy (data does not leave the device), reduced latency, and decreased reliance on cloud infrastructure and associated costs. However, the trade-offs between model size, accuracy, and speed must be carefully considered, as aggressive optimization can impact the quality of the generated output. Future research is expected to explore these trade-offs and focus on further improving on-device model performance, possibly through advancements in hardware and software.

Sora’s Optimization#

Sora’s optimization for mobile devices presents significant challenges due to the model’s inherent complexity and size. The paper addresses this by introducing three novel techniques: Linear Proportional Leap (LPL) reduces denoising steps, Temporal Dimension Token Merging (TDTM) optimizes token processing in attention layers, and Concurrent Inference with Dynamic Loading (CI-DL) efficiently manages memory constraints. LPL drastically cuts down on the computationally expensive denoising process, while TDTM reduces the computational burden of handling numerous tokens. CI-DL cleverly addresses memory limitations by dynamically loading model blocks, maximizing resource utilization. The combination of these techniques enables efficient and high-quality video generation on resource-constrained devices, achieving a significant speedup compared to running Sora on high-end GPUs. This represents a substantial advancement in making high-quality generative video technology accessible on commodity mobile devices, overcoming previous limitations in computational power and memory.

Mobile Video Gen#

Mobile video generation is a rapidly evolving field with significant challenges and opportunities. The core difficulty lies in balancing the high computational demands of video generation models with the limited resources of mobile devices. This necessitates innovative approaches such as model compression, efficient algorithms, and hardware acceleration techniques. The potential benefits are vast, including enhanced accessibility, improved user privacy through on-device processing, and reduced reliance on cloud infrastructure. Addressing computational limitations is crucial, possibly through techniques like model quantization, pruning, and knowledge distillation. Efficient algorithms are also key, for example, methods that reduce the number of denoising steps in diffusion-based models. Finally, hardware acceleration could play a role, leveraging the capabilities of mobile GPUs and potentially future NPUs for enhanced performance. The future of mobile video generation will likely depend on the synergistic advancement of these three areas.

LPL & TDTM Impact#

The combined impact of Linear Proportional Leap (LPL) and Temporal Dimension Token Merging (TDTM) on on-device video generation is significant. LPL drastically reduces the computational burden of the iterative denoising process inherent in diffusion models, achieving significant speedups without compromising video quality. This is crucial for resource-constrained mobile devices. TDTM further enhances efficiency by cleverly merging temporal tokens, thereby reducing the computational cost of attention mechanisms in the model. The synergy between these two techniques allows the generation of high-quality videos on devices with limited computing resources. While each method individually offers improvements, their combined effect is greater than the sum of its parts, making on-device video generation a realistic and efficient possibility. The results show that the combination of LPL and TDTM drastically improves speed and efficiency without significant quality degradation, making diffusion-based text-to-video generation more accessible on mobile devices.

Future of Sora#

The future of Sora hinges on addressing its current limitations while capitalizing on its strengths. Improving efficiency on mobile devices remains crucial; exploring hardware acceleration through NPUs and further model optimizations like quantization and pruning will be key. Expanding Sora’s capabilities beyond text-to-video generation is another promising avenue; integrating multimodal inputs (images, audio) and exploring diverse video editing applications are exciting possibilities. Addressing ethical considerations related to generative AI is paramount; mechanisms to mitigate bias, ensure responsible use, and prevent misuse are vital for its long-term success. Open-sourcing efforts should continue, fostering collaboration and driving innovation within the research community. Finally, research into more robust and efficient training methods is needed to reduce reliance on extensive computational resources for model development. Ultimately, Sora’s future is bright, but realizing its full potential requires a concerted effort across engineering, ethical, and societal domains.

More visual insights#

More on figures

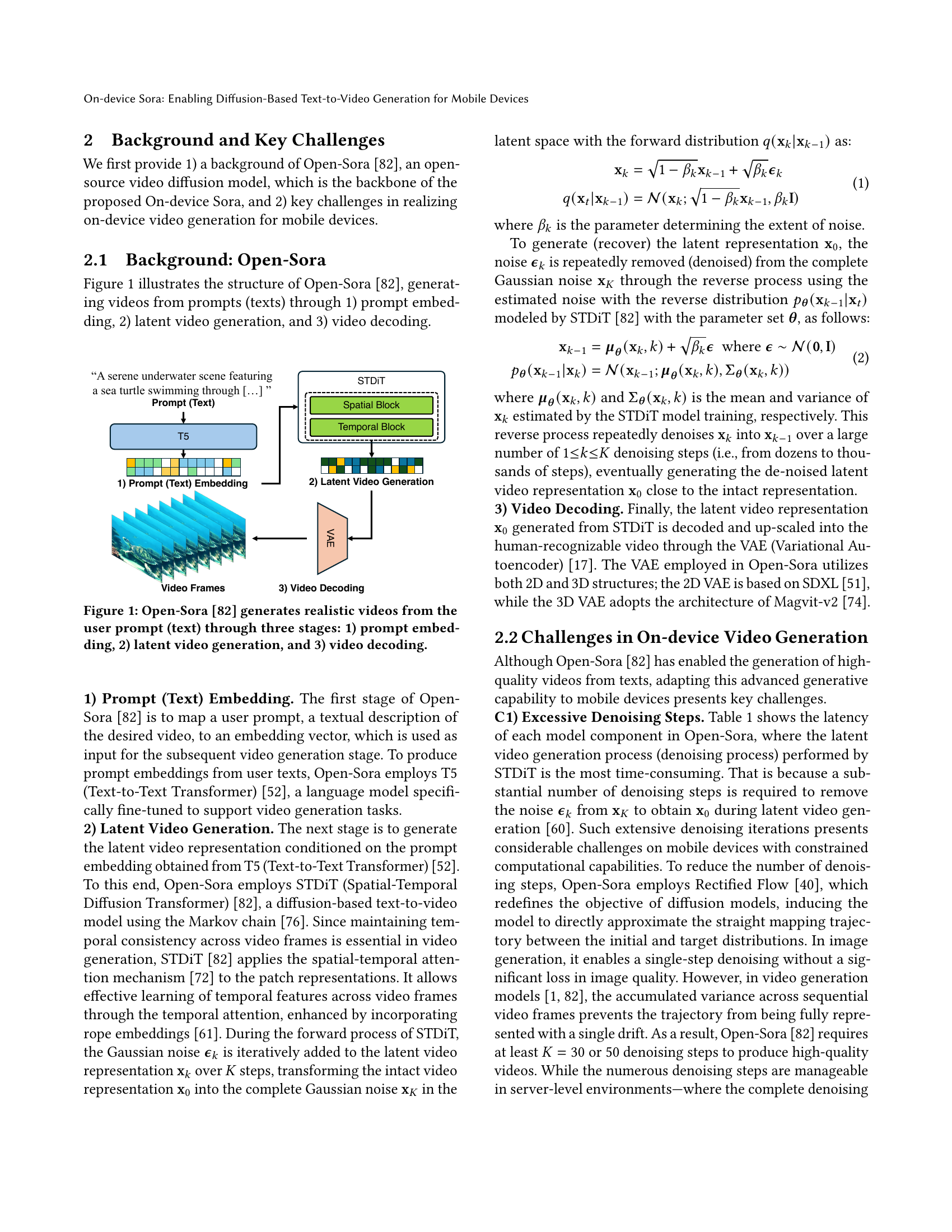

🔼 On-device Sora is a system designed for efficient text-to-video generation on mobile devices. This figure illustrates the three main techniques used to achieve this efficiency: 1) Linear Proportional Leap reduces the number of steps in the video diffusion process. 2) Temporal Dimension Token Merging decreases the computational cost of processing tokens within the model’s attention layers. 3) Concurrent Inference with Dynamic Loading handles the large model size by partitioning it into smaller blocks and loading those blocks into memory concurrently for processing, addressing memory limitations of mobile devices.

read the caption

Figure 2. On-device Sora enables efficient text-to-video generation directly on the device by employing three key approaches: 1) Linear Proportional Leap, 2) Temporal Dimension Token Merging, and 3) Concurrent Inference with Dynamic Loading.

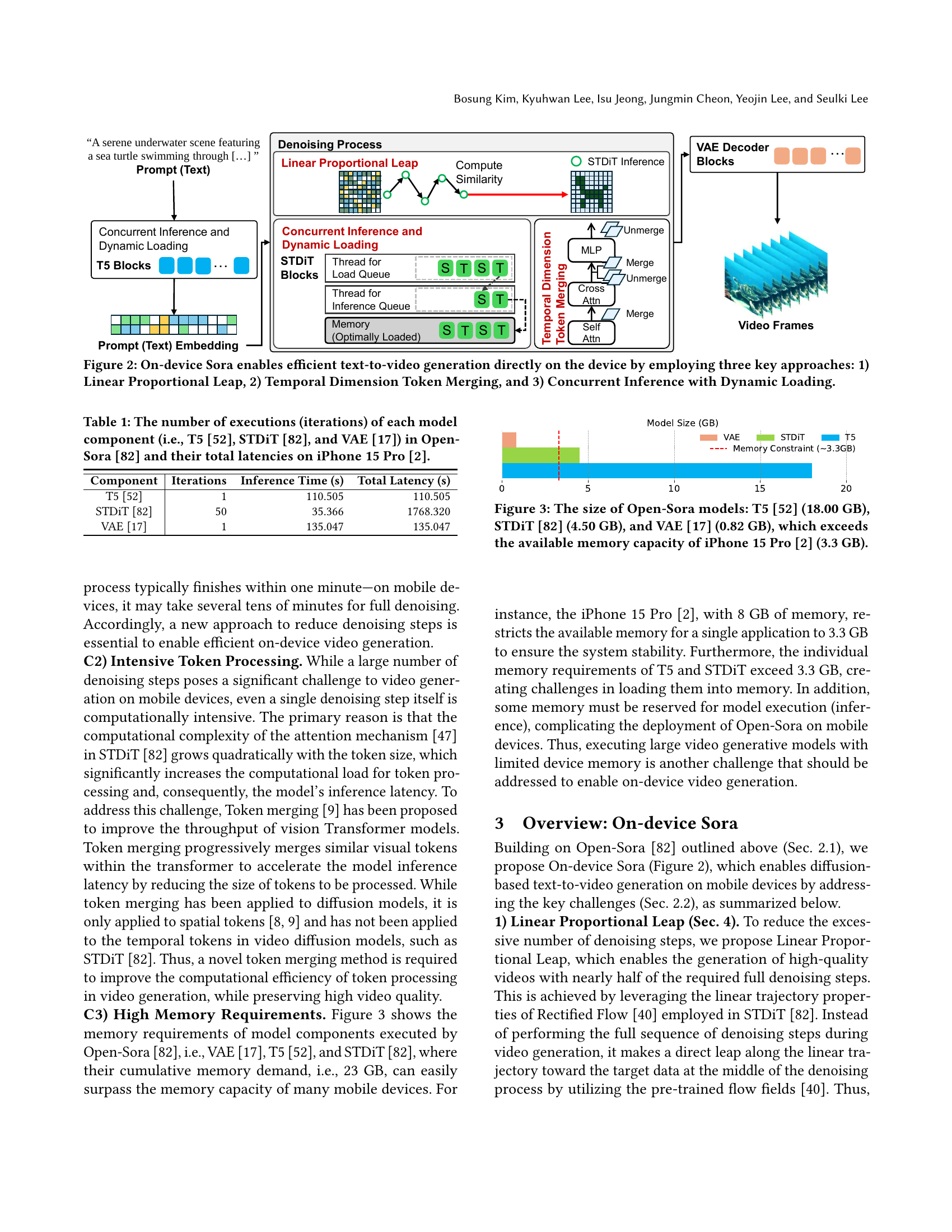

🔼 This figure illustrates the substantial memory requirements of the Open-Sora model components (T5, STDiT, and VAE). The T5 model alone is 18GB, STDiT is 4.5GB, and VAE is 0.82GB, resulting in a cumulative memory demand of 23.32GB. This significantly exceeds the 3.3GB memory limit of an iPhone 15 Pro, highlighting a key challenge in deploying Open-Sora on mobile devices.

read the caption

Figure 3. The size of Open-Sora models: T5 (Raffel et al., 2020) (18.00 GB), STDiT (Zheng et al., 2024) (4.50 GB), and VAE (Doersch, 2016) (0.82 GB), which exceeds the available memory capacity of iPhone 15 Pro (Apple, 2023) (3.3 GB).

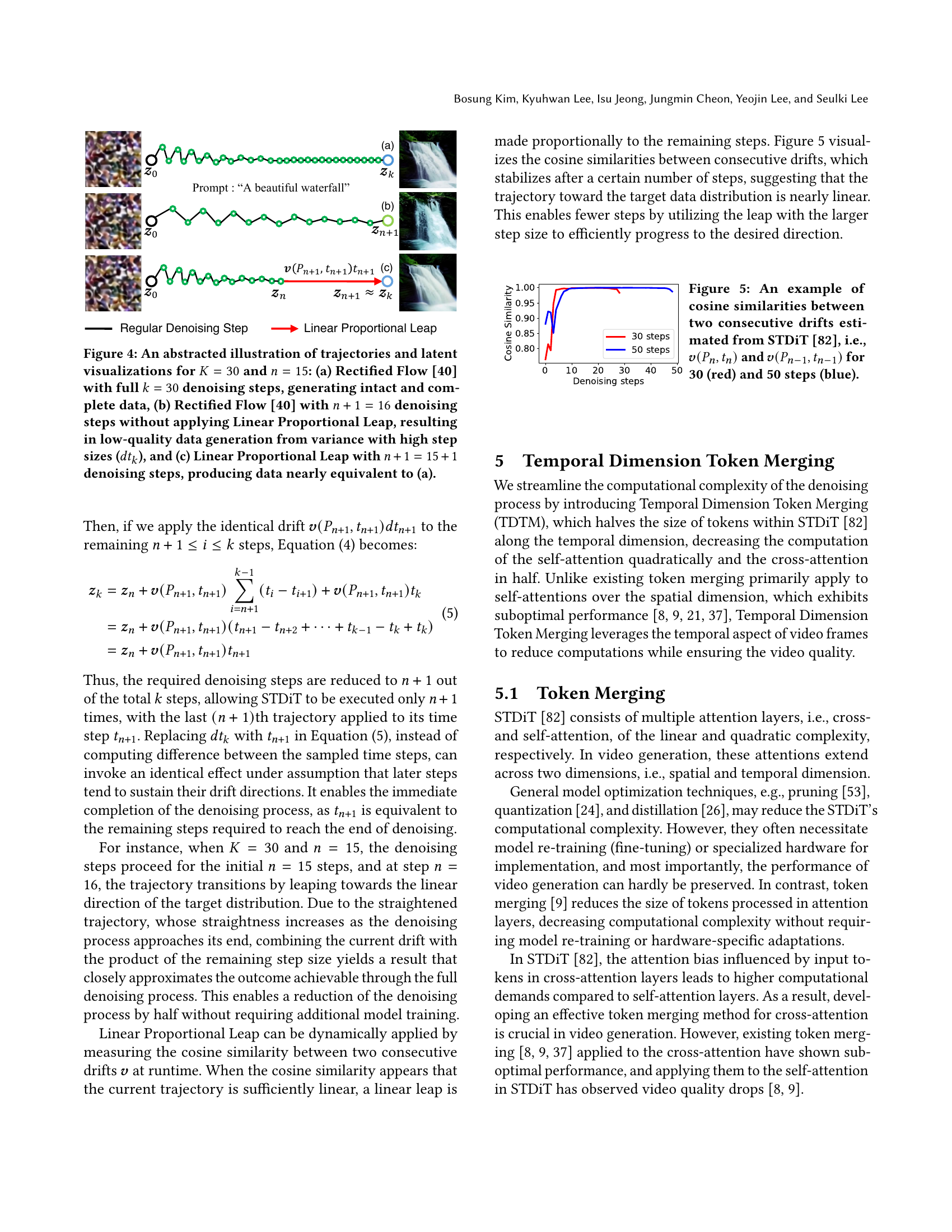

🔼 Figure 4 illustrates the concept of Linear Proportional Leap (LPL) by comparing three scenarios of the denoising process in diffusion models using Rectified Flow. Panel (a) shows the standard Rectified Flow process with 30 denoising steps, resulting in high-quality data. Panel (b) demonstrates what happens with only 16 steps using Rectified Flow without LPL; the resulting data is of low quality due to the large steps taken. Finally, panel (c) shows how LPL achieves similar quality to panel (a) using only 16 steps by strategically leaping towards the target distribution in the later stages of the process, instead of taking numerous small steps.

read the caption

Figure 4. An abstracted illustration of trajectories and latent visualizations for K=30𝐾30K=30italic_K = 30 and n=15𝑛15n=15italic_n = 15: (a) Rectified Flow (Liu et al., 2022) with full k=30𝑘30k=30italic_k = 30 denoising steps, generating intact and complete data, (b) Rectified Flow (Liu et al., 2022) with n+1=16𝑛116n+1=16italic_n + 1 = 16 denoising steps without applying Linear Proportional Leap, resulting in low-quality data generation from variance with high step sizes (dtk𝑑subscript𝑡𝑘dt_{k}italic_d italic_t start_POSTSUBSCRIPT italic_k end_POSTSUBSCRIPT), and (c) Linear Proportional Leap with n+1=15+1𝑛1151n+1=15+1italic_n + 1 = 15 + 1 denoising steps, producing data nearly equivalent to (a).

🔼 This figure shows a graph plotting cosine similarity values against the number of denoising steps in a video generation model. Cosine similarity measures the similarity between consecutive drift vectors, which represent the change in the model’s latent representation during the denoising process. The graph shows two lines representing results for 30 and 50 denoising steps, respectively. The higher cosine similarity values indicate that the trajectory of changes in the model’s latent representation becomes more linear as the denoising process progresses. This supports the Linear Proportional Leap (LPL) method, as a more linear trajectory allows for a shorter denoising process.

read the caption

Figure 5. An example of cosine similarities between two consecutive drifts estimated from STDiT (Zheng et al., 2024), i.e., 𝒗(Pn,tn)𝒗subscript𝑃𝑛subscript𝑡𝑛\boldsymbol{v}(P_{n},t_{n})bold_italic_v ( italic_P start_POSTSUBSCRIPT italic_n end_POSTSUBSCRIPT , italic_t start_POSTSUBSCRIPT italic_n end_POSTSUBSCRIPT ) and 𝒗(Pn−1,tn−1)𝒗subscript𝑃𝑛1subscript𝑡𝑛1\boldsymbol{v}(P_{n-1},t_{n-1})bold_italic_v ( italic_P start_POSTSUBSCRIPT italic_n - 1 end_POSTSUBSCRIPT , italic_t start_POSTSUBSCRIPT italic_n - 1 end_POSTSUBSCRIPT ) for 30 (red) and 50 steps (blue).

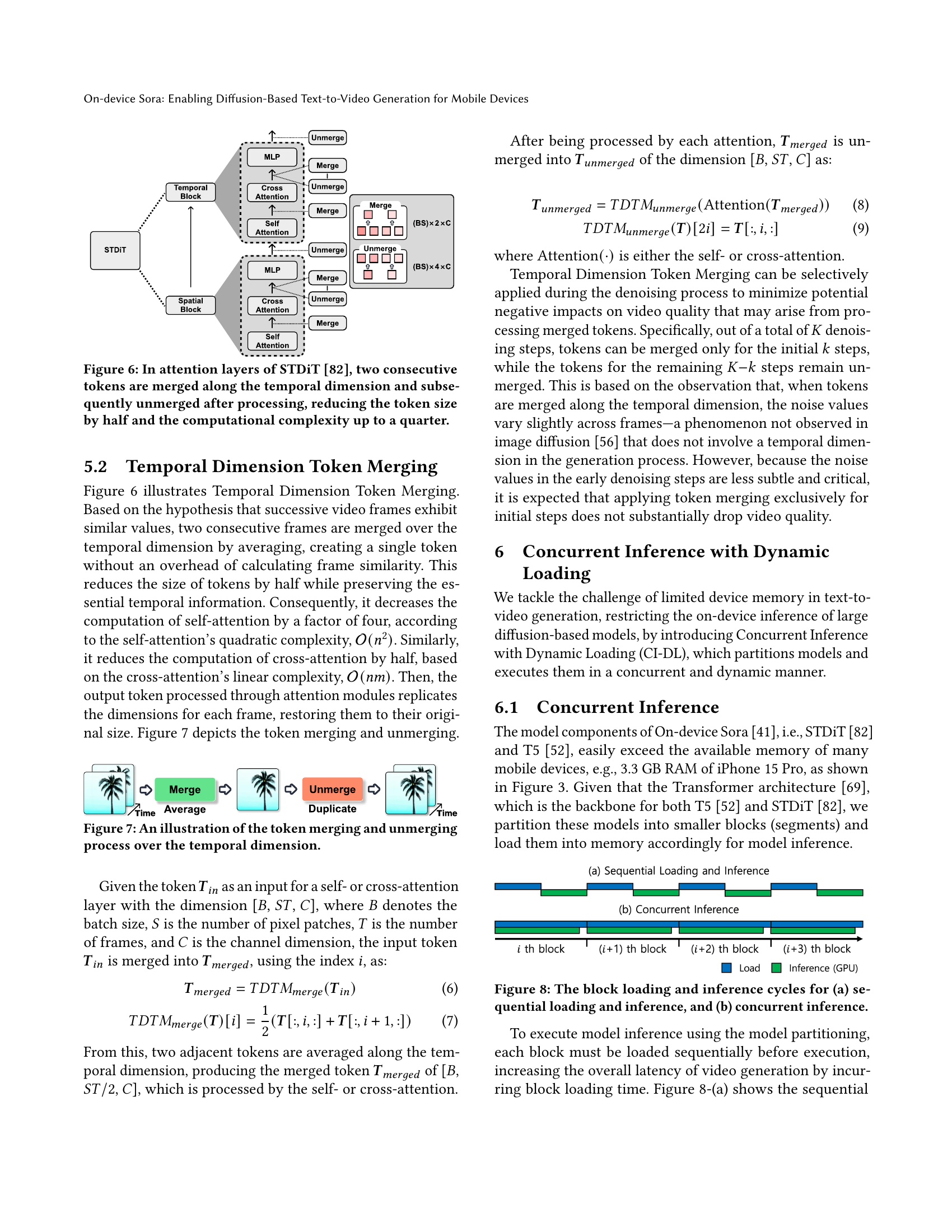

🔼 This figure illustrates the Temporal Dimension Token Merging (TDTM) technique used in the STDiT model. Consecutive tokens representing adjacent frames in a video sequence are merged along the temporal dimension. This merging operation reduces the number of tokens processed in the attention layers by half. Consequently, because the computational complexity of the attention mechanism is quadratic with respect to the number of tokens, TDTM reduces the overall computational complexity of those layers by up to a quarter. After passing through the attention mechanism, the merged tokens are unmerged to restore the original size and structure.

read the caption

Figure 6. In attention layers of STDiT (Zheng et al., 2024), two consecutive tokens are merged along the temporal dimension and subsequently unmerged after processing, reducing the token size by half and the computational complexity up to a quarter.

🔼 This figure illustrates the process of Temporal Dimension Token Merging (TDTM) in the paper. It shows how two consecutive tokens representing video frames are merged along the temporal dimension, effectively reducing computational complexity. The merging is done by averaging the features of the two frames, creating a single token. After processing, the single token is then unmerged back into two tokens to preserve the information needed for the video generation. This process reduces the number of tokens processed and hence lowers the computational cost of the attention layers in the network.

read the caption

Figure 7. An illustration of the token merging and unmerging process over the temporal dimension.

🔼 Figure 8 illustrates the performance difference between sequential and concurrent model block loading and inference. In sequential loading (a), each block is loaded into memory before processing, causing idle time on the GPU. The concurrent inference method (b), however, loads and processes blocks concurrently, keeping the GPU busy and improving efficiency. This is visually represented by the overlap of red (loading) and black (inference) bars in (b), highlighting the simultaneous execution, which is absent in (a).

read the caption

Figure 8. The block loading and inference cycles for (a) sequential loading and inference, and (b) concurrent inference.

🔼 This figure showcases example video outputs generated by both On-device Sora and Open-Sora, highlighting the visual quality and similarity between the two models. The videos are 68 frames long and have a resolution of 256x256 pixels. Two examples are shown: a burning stack of leaves and a close-up of a lemur. This visual comparison demonstrates the comparable quality of video generated by On-device Sora, which is optimized for mobile devices, when compared to the original Open-Sora model running on more powerful hardware.

read the caption

Figure 9. Example videos generated by On-device Sora and Open-Sora (Zheng et al., 2024) (68 frames, 256×256 resolution).

🔼 This figure illustrates how Dynamic Loading enhances Concurrent Inference in the On-device Sora model. Concurrent Inference allows parallel loading and execution of model blocks, improving efficiency. Dynamic Loading further optimizes this by keeping frequently used blocks in memory, reducing loading times. The diagram shows a comparison of sequential loading and concurrent loading with dynamic loading, demonstrating how the system loads and processes blocks to improve the speed of video generation.

read the caption

Figure 10. The block loading and inference cycle for Dynamic Loading applied with Concurrent Inference.

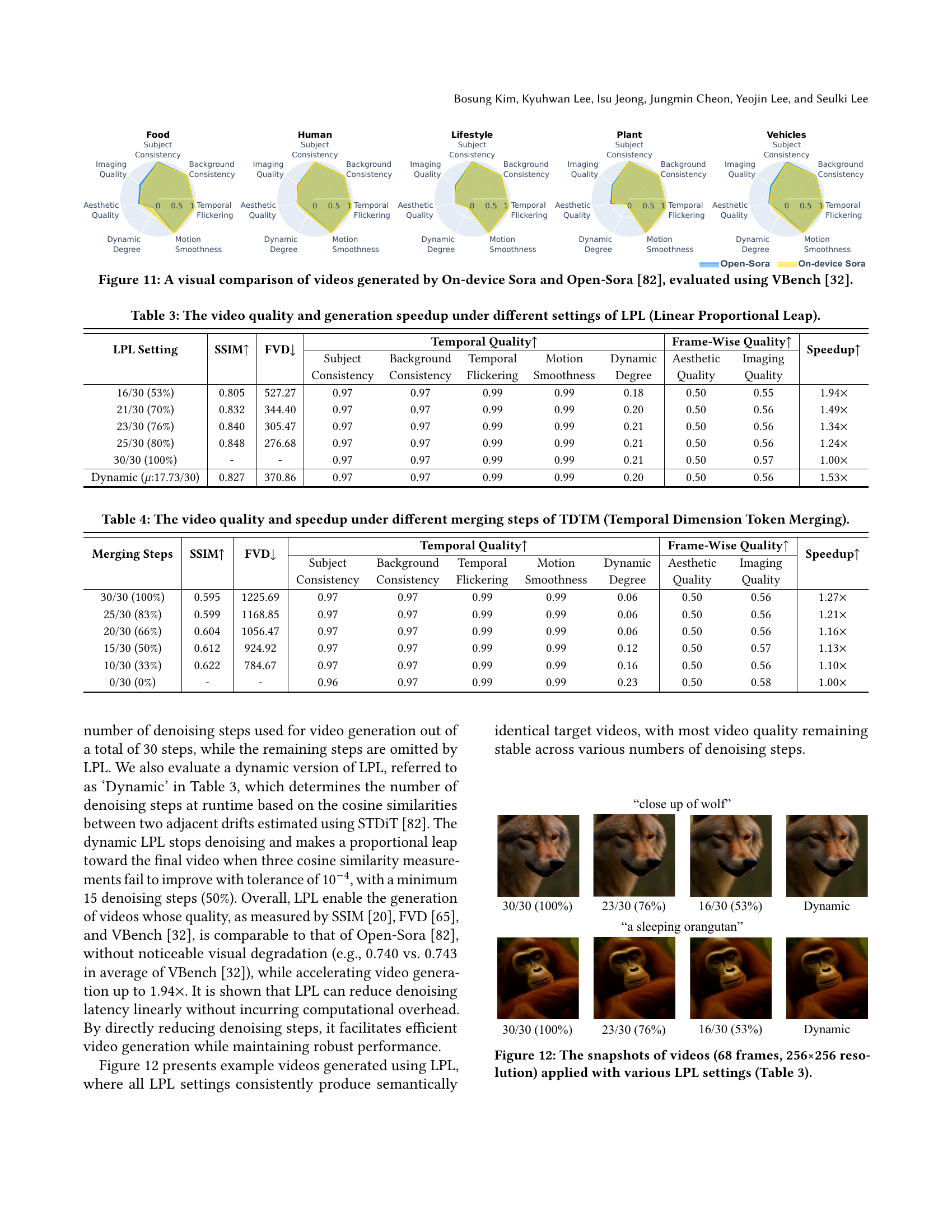

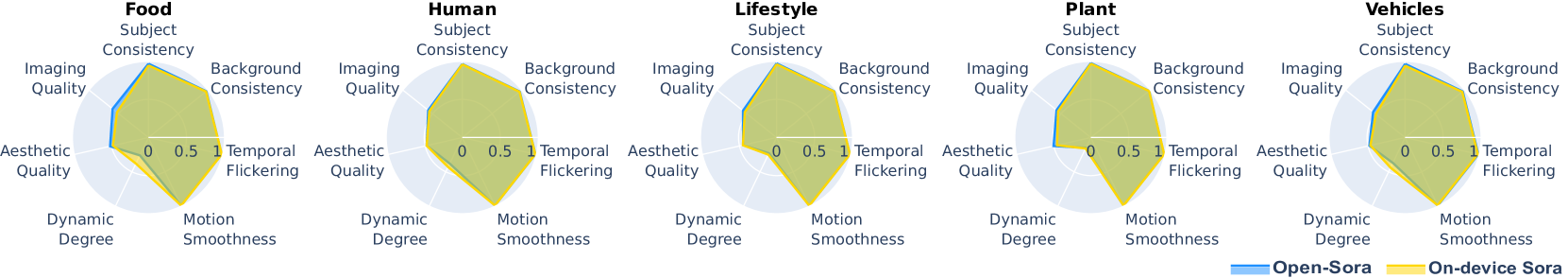

🔼 Figure 11 presents a visual comparison of video samples generated using On-device Sora and Open-Sora, focusing on the quality differences between the two approaches. The videos were created using the same prompts, allowing for a direct comparison based on visual quality metrics. The quality assessment was performed using VBench, a benchmark tool providing an overall evaluation score along with specific metrics such as temporal consistency, background consistency, flickering, motion smoothness, dynamic degree, aesthetic quality and imaging quality. This comparison visualizes the performance of On-device Sora in producing high-quality videos on a mobile device, despite the computational limitations compared to the Open-Sora model running on a high-end GPU.

read the caption

Figure 11. A visual comparison of videos generated by On-device Sora and Open-Sora (Zheng et al., 2024), evaluated using VBench (Huang et al., 2024).

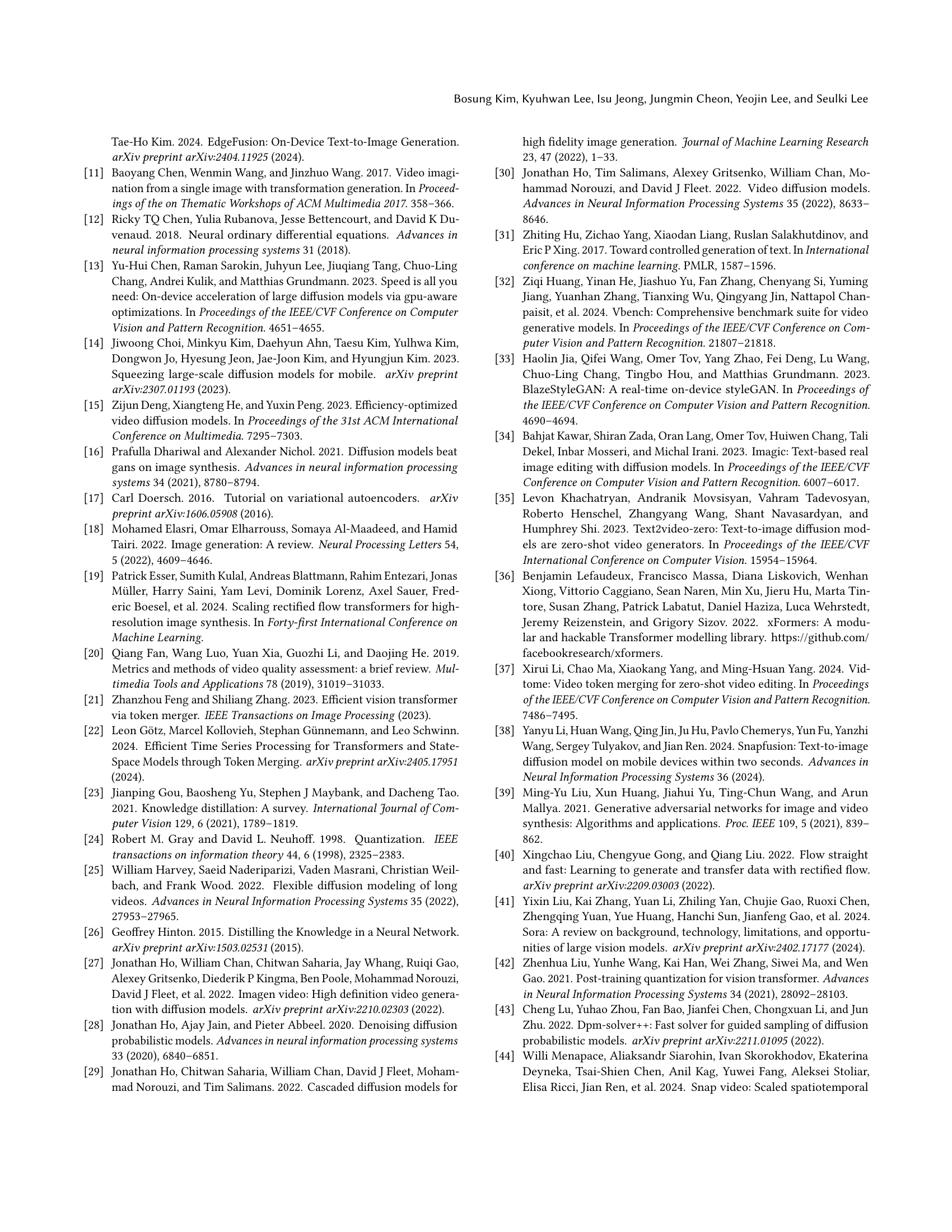

🔼 This figure shows example video frames generated by On-device Sora using different Linear Proportional Leap (LPL) settings. Each row represents a different LPL setting from Table 3 in the paper, indicating the number of denoising steps used before the linear leap is applied. The videos are 68 frames long and have a resolution of 256x256 pixels. The purpose of the figure is to visually demonstrate that despite using fewer denoising steps with different LPL settings, the generated videos maintain similar semantic content and quality.

read the caption

Figure 12. The snapshots of videos (68 frames, 256×256 resolution) applied with various LPL settings (Table 3).

More on tables

| Iterations |

🔼 This table presents a quantitative comparison of video quality generated by On-device Sora and Open-Sora using the VBench benchmark. The comparison is broken down by eight video categories (Animal, Architecture, Food, Human, Lifestyle, Plant, Scenery, Vehicles), each assessed across multiple metrics reflecting temporal consistency, background quality, flickering, motion smoothness, dynamic range, and overall aesthetic quality. Each metric is scored between 0 and 1, providing a detailed and comparable evaluation of the video quality generated by each method at a resolution of 256x256 pixels and a length of 68 frames.

read the caption

Table 2. The VBench (Huang et al., 2024) evaluation by category: On-device Sora vs. Open-Sora (Zheng et al., 2024) (68 frames, 256×256 resolution).

| Inference Time (s) |

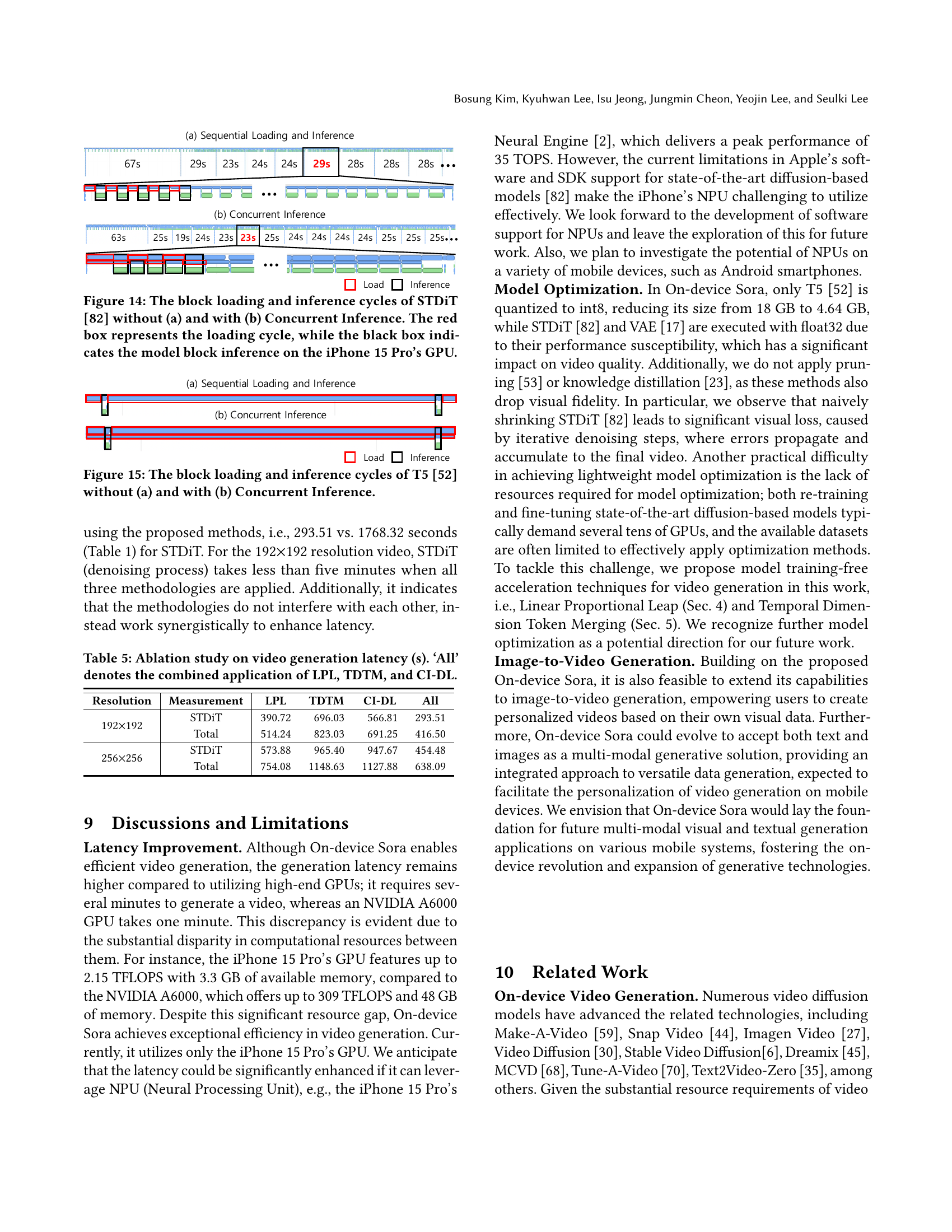

🔼 This table presents a quantitative analysis of the impact of the Linear Proportional Leap (LPL) technique on video generation quality and speed. Different LPL settings are tested, each representing a varying percentage of denoising steps completed using the standard method before transitioning to the LPL method for faster generation. The table shows the resulting video quality metrics (SSIM, FVD, subject consistency, background consistency, etc.) and the speedup achieved compared to the baseline of completing all denoising steps without LPL. This allows for an assessment of the trade-off between speed and quality resulting from different degrees of LPL application. The dynamic LPL setting is also included as an alternative approach.

read the caption

Table 3. The video quality and generation speedup under different settings of LPL (Linear Proportional Leap).

| Total Latency (s) |

🔼 This table presents a quantitative analysis of the impact of Temporal Dimension Token Merging (TDTM) on video generation quality and speed. Different numbers of denoising steps are subjected to TDTM, ranging from fully applying TDTM to all 30 steps to not applying it at all. The evaluation metrics include SSIM, FVD, and VBench scores. This allows for assessment of the trade-offs between computational efficiency and visual quality when employing TDTM at varying degrees.

read the caption

Table 4. The video quality and speedup under different merging steps of TDTM (Temporal Dimension Token Merging).

| Category | Method | Temporal Quality | Frame-Wise Quality | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

|

|

|

| ||||||||||||||

| Animal | Open-Sora | 0.97 | 0.98 | 0.99 | 0.99 | 0.15 | 0.51 | 0.56 | ||||||||||||

| On-device Sora | 0.95 | 0.97 | 0.99 | 0.99 | 0.28 | 0.48 | 0.55 | |||||||||||||

| Architecture | Open-Sora | 0.99 | 0.98 | 0.99 | 0.99 | 0.05 | 0.53 | 0.60 | ||||||||||||

| On-device Sora | 0.98 | 0.98 | 0.99 | 0.99 | 0.12 | 0.49 | 0.56 | |||||||||||||

| Food | Open-Sora | 0.97 | 0.97 | 0.99 | 0.99 | 0.26 | 0.52 | 0.60 | ||||||||||||

| On-device Sora | 0.95 | 0.97 | 0.99 | 0.99 | 0.38 | 0.48 | 0.53 | |||||||||||||

| Human | Open-Sora | 0.96 | 0.97 | 0.99 | 0.99 | 0.38 | 0.48 | 0.57 | ||||||||||||

| On-device Sora | 0.96 | 0.96 | 0.99 | 0.99 | 0.43 | 0.48 | 0.55 | |||||||||||||

| Lifestyle | Open-Sora | 0.97 | 0.97 | 0.99 | 0.99 | 0.23 | 0.45 | 0.56 | ||||||||||||

| On-device Sora | 0.96 | 0.97 | 0.99 | 0.99 | 0.25 | 0.45 | 0.53 | |||||||||||||

| Plant | Open-Sora | 0.98 | 0.98 | 0.99 | 0.99 | 0.15 | 0.50 | 0.58 | ||||||||||||

| On-device Sora | 0.97 | 0.98 | 0.99 | 0.99 | 0.16 | 0.46 | 0.55 | |||||||||||||

| Scenery | Open-Sora | 0.98 | 0.98 | 0.99 | 0.99 | 0.10 | 0.50 | 0.50 | ||||||||||||

| On-device Sora | 0.97 | 0.98 | 0.99 | 0.99 | 0.17 | 0.48 | 0.47 | |||||||||||||

| Vehicles | Open-Sora | 0.97 | 0.97 | 0.99 | 0.99 | 0.37 | 0.48 | 0.54 | ||||||||||||

| On-device Sora | 0.94 | 0.96 | 0.98 | 0.99 | 0.44 | 0.47 | 0.49 | |||||||||||||

🔼 This table presents an ablation study to show the effects of applying different optimization techniques individually and in combination on video generation latency. It compares the latency of generating videos at two different resolutions (192x192 and 256x256 pixels). The techniques evaluated are: Linear Proportional Leap (LPL), Temporal Dimension Token Merging (TDTM), and Concurrent Inference with Dynamic Loading (CI-DL). The ‘All’ column shows the combined effect of applying all three methods. The latency is measured for both the STDiT (Spatial-Temporal Diffusion Transformer) model component specifically and the overall video generation process (including T5 for text embedding and VAE for video decoding). The results are the average of three independent experimental runs.

read the caption

Table 5. Ablation study on video generation latency (s). ‘All’ denotes the combined application of LPL, TDTM, and CI-DL.

Full paper#