TL;DR#

Current character animation methods often fail to create realistic interactions between animated characters and their surroundings. This is mainly due to the fact that existing methods primarily focus on motion signals extracted from separate videos, ignoring the spatial and interactive relationships between characters and their environments. This limitation results in animations that lack authenticity and fail to represent the complexities of real-world human-object interactions.

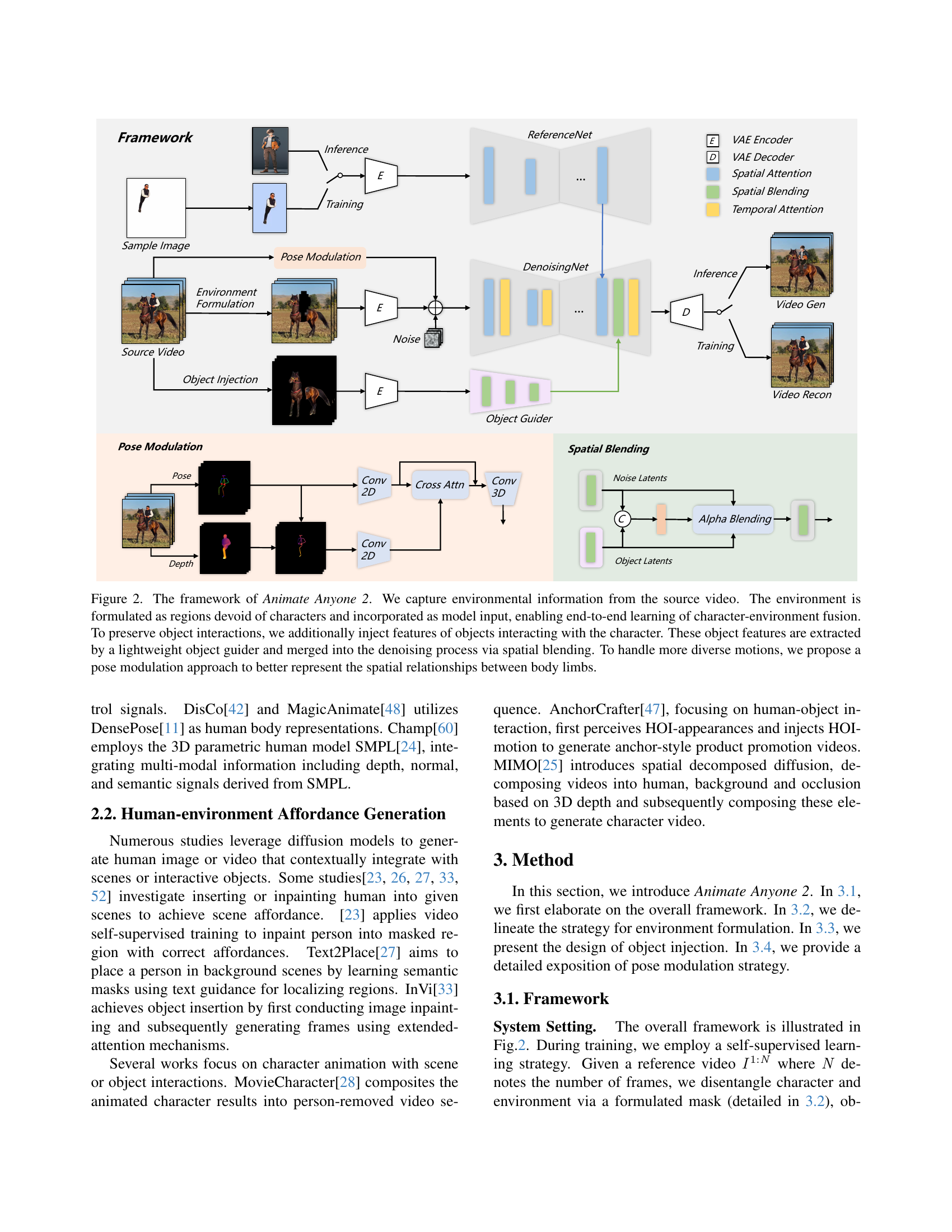

To overcome these limitations, Animate Anyone 2 is proposed. It captures environmental representations from the source video, along with motion and object interaction signals. A shape-agnostic mask strategy improves the boundary representation between characters and the environment. Object interaction fidelity is increased by injecting object features, and a pose modulation strategy enhances the ability to handle diverse motion patterns. The results significantly outperform state-of-the-art methods, demonstrating improvements in animation quality, realism and handling complex interactions, thereby addressing the issues of previous methods.

Key Takeaways#

Why does it matter?#

This paper is important because it significantly advances the field of character image animation by addressing the limitation of existing methods that struggle to realistically integrate characters within their environments. The introduction of environment affordance allows for more natural and believable animations, opening new avenues for research in areas like virtual reality, filmmaking, and advertising. The novel techniques presented, such as shape-agnostic masking and object injection, offer valuable tools for researchers working on similar problems, and the superior performance demonstrated on various benchmarks makes this work a significant contribution. The depth-wise pose modulation improves handling diverse motions.

Visual Insights#

| Method | SSIM | PSNR | LPIPS | FVD |

|---|---|---|---|---|

| MRAA [36] | 0.672 | 29.39 | 0.672 | 284.82 |

| DisCo [42] | 0.668 | 29.03 | 0.292 | 292.80 |

| MagicAnimate [48] | 0.714 | 29.16 | 0.239 | 179.07 |

| Animate Anyone [15] | 0.718 | 29.56 | 0.285 | 171.90 |

| Champ* [60] | 0.802 | 29.91 | 0.234 | 160.82 |

| UniAnimate* [44] | 0.811 | 30.77 | 0.231 | 148.06 |

| Ours | 0.778 | 29.82 | 0.248 | 158.97 |

| Ours* | 0.812 | 30.82 | 0.223 | 144.65 |

🔼 This table presents a quantitative comparison of different methods for character image animation on the TikTok benchmark dataset. The metrics used are SSIM (structural similarity index), PSNR (peak signal-to-noise ratio), LPIPS (learned perceptual image patch similarity), and FVD (Fréchet Video Distance). Higher SSIM and PSNR values indicate better image quality, while lower LPIPS and FVD values represent better perceptual similarity and temporal consistency, respectively. The asterisk (*) next to some method names indicates that those methods utilized additional video data during pre-training.

read the caption

Table 1: Quantitative comparison on Tiktok benchmark. * means utilizing other video data for pretraining.

In-depth insights#

Env Affordance Anim#

The concept of “Env Affordance Anim” suggests a novel approach to character animation that emphasizes the interaction between animated characters and their environment. Instead of treating the environment as a static backdrop, this method would aim to model how the environment’s properties (affordances) influence character movement, behavior, and interactions. This involves dynamically generating animations where the character’s actions are responsive to the possibilities and limitations presented by the surrounding scene. Key advantages would likely be more realistic and engaging animations, with characters exhibiting natural behaviors like navigating obstacles, using tools, or interacting with other objects appropriately. This also implies a more complex model that accounts for physics and interactions, going beyond simply overlaying animation onto a background. Challenges may include the computational cost of handling dynamic interactions, accurate modeling of diverse environments, and creating robust algorithms that can generalize to unseen scenarios. Ultimately, this approach could lead to significantly more natural and believable animated characters within rich virtual worlds.

Shape-Agnostic Masks#

The concept of “Shape-Agnostic Masks” in character animation is a significant advancement. It directly addresses the limitations of previous methods that relied on precise character masks. Traditional methods suffered from shape leakage and artifacts, especially when dealing with diverse character shapes and clothing styles in unseen data. By intentionally disrupting the direct correspondence between the mask boundary and character outlines during training, a shape-agnostic approach allows the model to learn more robustly and generalize better to novel characters. This strategy focuses on learning the contextual relationship between the character and its environment, rather than memorizing specific shapes. The use of a shape-agnostic mask promotes character-environment coherence without enforcing strict boundary constraints. This approach is particularly valuable in real-world scenarios involving diverse and complex interactions between characters and their surroundings, significantly improving the fidelity and naturalism of the resulting animations. The technique’s success hinges on the ability to learn the contextual information effectively, which is achieved by forcing the model to focus on environmental context rather than relying on precise shape matching during both training and inference.**

Object Injection#

The concept of ‘Object Injection’ in the context of image animation is crucial for achieving high-fidelity results. It addresses the shortcoming of existing methods, which often fail to accurately represent the interaction between animated characters and environmental objects. Object Injection focuses on precisely integrating object features into the animation process, ensuring that these features seamlessly blend with the character’s movements and the surrounding environment. This is achieved by first using an object guider to detect and extract relevant object features from the input video, and then merging these features into the animation generation process using a spatial blending mechanism. This spatial blending is key, allowing for the subtle and realistic interplay of character and object. The method effectively preserves intricate interaction dynamics, resulting in a far more natural and believable animation. The use of a lightweight object guider is especially insightful as it avoids adding significant computational overhead to the already complex animation process. It allows for flexible handling of both simple and complex object interactions, making the animation process more scalable and versatile. Furthermore, the focus on injection of multi-scale features enhances the fine details of the interactions, preventing visual artifacts and enhancing the overall fidelity of the animation. This is a significant advancement, moving beyond simply placing objects in the scene to dynamically and realistically modeling their interaction with the animated character.

Pose Modulation#

The concept of ‘Pose Modulation’ in the context of character animation is crucial for achieving natural and believable movement. The authors recognize the limitations of existing methods, which often rely on simplistic skeleton representations, lacking detailed inter-limb relationships and hierarchical dependencies. Their proposed ‘Depth-wise Pose Modulation’ elegantly addresses this by incorporating structured depth information alongside skeleton signals. This addition provides richer contextual understanding, allowing the model to better capture the intricate spatial dynamics and interactions between body parts. This approach also enhances robustness by mitigating the impact of errors commonly found in pose estimation from real-world videos. The integration of depth data improves the model’s ability to handle diverse and complex poses, resulting in smoother, more realistic character animations. The use of Conv3D for temporal motion modeling further refines the animation, ensuring consistency and preventing errors from propagating across frames. This strategy is vital for generating high-fidelity results, demonstrating a significant improvement over previous approaches. Overall, the innovation lies in moving beyond simple skeleton-based representations to incorporate richer depth information, yielding more natural and sophisticated character movements.

Anim 2 Limitations#

Animate Anyone 2 (Anim2), while a significant advancement in character image animation, exhibits limitations. The model struggles with complex hand-object interactions, particularly when objects occupy small pixel regions, potentially introducing visual artifacts. Shape discrepancies between the source and target characters can also lead to deformation artifacts in the generated animation, highlighting a need for improved robustness in handling diverse character morphologies. The performance is also impacted by the accuracy of object segmentation, relying heavily on the quality of the input masks. In scenarios with intricate human-object interactions, the model may fail to completely capture the detailed interactions, producing unnatural or incomplete representations. Finally, the method’s reliance on self-supervised training and the generation of composite background/character images could potentially lead to biases and limitations in its generalization capabilities when encountering novel scenarios or variations outside its training dataset. Addressing these limitations through improved robustness to shape variations, enhanced processing of fine-grained object detail, and exploration of alternative training strategies would further enhance Anim2’s overall performance and applicability.

More visual insights#

More on tables

| Method | SSIM | PSNR | LPIPS | FVD |

|---|---|---|---|---|

| Animate Anyone[15] | 0.761 | 28.41 | 0.324 | 228.53 |

| Champ[60] | 0.771 | 28.69 | 0.294 | 205.79 |

| MimicMotion[56] | 0.767 | 28.52 | 0.307 | 212.48 |

| Ours | 0.809 | 29.24 | 0.259 | 172.54 |

🔼 Table 2 presents a quantitative comparison of different character animation methods on a new dataset created by the authors. The dataset is designed to evaluate performance across a wider range of scenarios and motion complexity than existing benchmarks. The metrics used include SSIM, PSNR, LPIPS, and FVD, measuring image quality and video consistency. The results show that the proposed ‘Animate Anyone 2’ method significantly outperforms other leading approaches in all metrics, highlighting its robustness and superior performance in more generalized animation tasks.

read the caption

Table 2: Quantitative comparison on our dataset. Our approach demonstrates superior performance across generalized scenarios.

| Method | SSIM | PSNR | LPIPS | FVD |

|---|---|---|---|---|

| Baseline | 0.785 | 28.71 | 0.291 | 195.45 |

| Ours | 0.794 | 28.83 | 0.276 | 186.17 |

🔼 This table presents a quantitative comparison of the proposed Animate Anyone 2 model against a baseline method for character-environment integration. The baseline method creates a pseudo integration by directly compositing character animation results onto the original video background using image inpainting. The comparison uses the metrics SSIM (Structural Similarity Index), PSNR (Peak Signal-to-Noise Ratio), LPIPS (Learned Perceptual Image Patch Similarity), and FVD (Fréchet Video Distance) to evaluate the quality of the character animation and its integration with the environment. Higher values for SSIM and PSNR and lower values for LPIPS and FVD indicate better quality and more seamless integration.

read the caption

Table 3: Quantitative comparison with baseline on our dataset. Baseline refers to the pseudo character-environment integration.

| Method | SSIM | PSNR | LPIPS | FVD |

|---|---|---|---|---|

| w/o Spatial Blending | 0.789 | 28.74 | 0.283 | 191.23 |

| w/o Pose Modulation | 0.769 | 28.56 | 0.301 | 211.15 |

| Ours | 0.794 | 28.83 | 0.276 | 186.17 |

🔼 This table presents the results of an ablation study evaluating the impact of different components of the proposed Animate Anyone 2 model. It shows how the model’s performance (measured by SSIM, PSNR, LPIPS, and FVD) changes when key components such as spatial blending, pose modulation, and environment formulation are removed. This helps to understand the contribution of each component to the overall performance of the model.

read the caption

Table 4: Quantitative ablation study.

Full paper#