TL;DR#

Current methods for personalized video generation struggle with temporal inconsistencies and quality. Existing approaches often fail to maintain subject identity across frames and lack detailed information preservation. They also suffer from the inefficient use of reference images during feature encoding and uniform propagation of reference features, leading to both identity degradation and temporal coherence issues.

CustomVideoX tackles these challenges with a novel framework. It uses a 3D Reference Attention mechanism that directly interacts reference features with every video frame, improving efficiency and effectiveness. A Time-Aware Attention Bias dynamically adjusts reference bias over time, optimizing the influence of reference features at different stages. Further, an Entity Region-Aware Enhancement module focuses on key entities, enhancing consistency. CustomVideoX outperforms existing methods on video quality and consistency benchmarks.

Key Takeaways#

Why does it matter?#

This paper is important because it addresses the challenge of personalized video generation, a significant area of current research. CustomVideoX offers a novel approach to zero-shot video customization, surpassing existing methods in video quality and temporal consistency. This opens avenues for research in efficient model adaptation, large-scale video data synthesis, and improved benchmarks for evaluating video generation. The work also has broad implications for various applications, including digital art, advertising, and video editing.

Visual Insights#

🔼 Figure 1 showcases CustomVideoX’s ability to generate videos with natural-looking movements and high-fidelity details. It displays four examples of video generation. Each example shows how CustomVideoX accurately replicates the fine details of the specified objects (like a cat, dog, cartoon character, or plush toy) while realistically animating them in dynamic settings. The resulting videos combine natural-looking movement with the preservation of object details, highlighting the effectiveness of the method.

read the caption

Figure 1: CustomVideoX synthesizes natural motions while preserving the fine-grained object details.

| Methods | DreamBench | VideoBench | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| CLIP-T | CLIP-I | DINO-I | T.Cons | D.D | CLIP-T | CLIP-I | DINO-I | T.Cons | D.D | |

| BLIP-Diffusion (Li et al., 2023) | 29.97 | 84.39 | 84.14 | 95.87 | 54.87 | 29.85 | 88.83 | 87.99 | 96.88 | 36.00 |

| IP-Adapter (Ye et al., 2023) | 28.87 | 82.00 | 82.18 | 95.16 | 56.64 | 29.38 | 86.92 | 87.87 | 96.23 | 42.00 |

| -Eclipse (Patel et al., 2024) | 34.01 | 82.92 | 84.10 | 95.98 | 59.29 | 32.25 | 89.45 | 89.94 | 97.54 | 38.00 |

| SSR-Encoder (Zhang et al., 2024) | 29.32 | 83.24 | 83.92 | 95.42 | 59.29 | 29.97 | 87.01 | 87.74 | 96.63 | 40.00 |

| MS-Diffusion (Wang et al., 2024) | 33.74 | 85.47 | 87.54 | 96.65 | 53.98 | 32.64 | 90.55 | 91.29 | 97.35 | 54.00 |

| VideoBooth (Jiang et al., 2024) | 28.99 | 76.42 | 77.90 | 96.17 | 45.13 | 28.92 | 82.60 | 84.06 | 96.75 | 46.00 |

| CustomVideoX (Ours) | 34.28 | 85.47 | 88.17 | 96.77 | 50.44 | 33.38 | 90.26 | 91.49 | 97.26 | 46.00 |

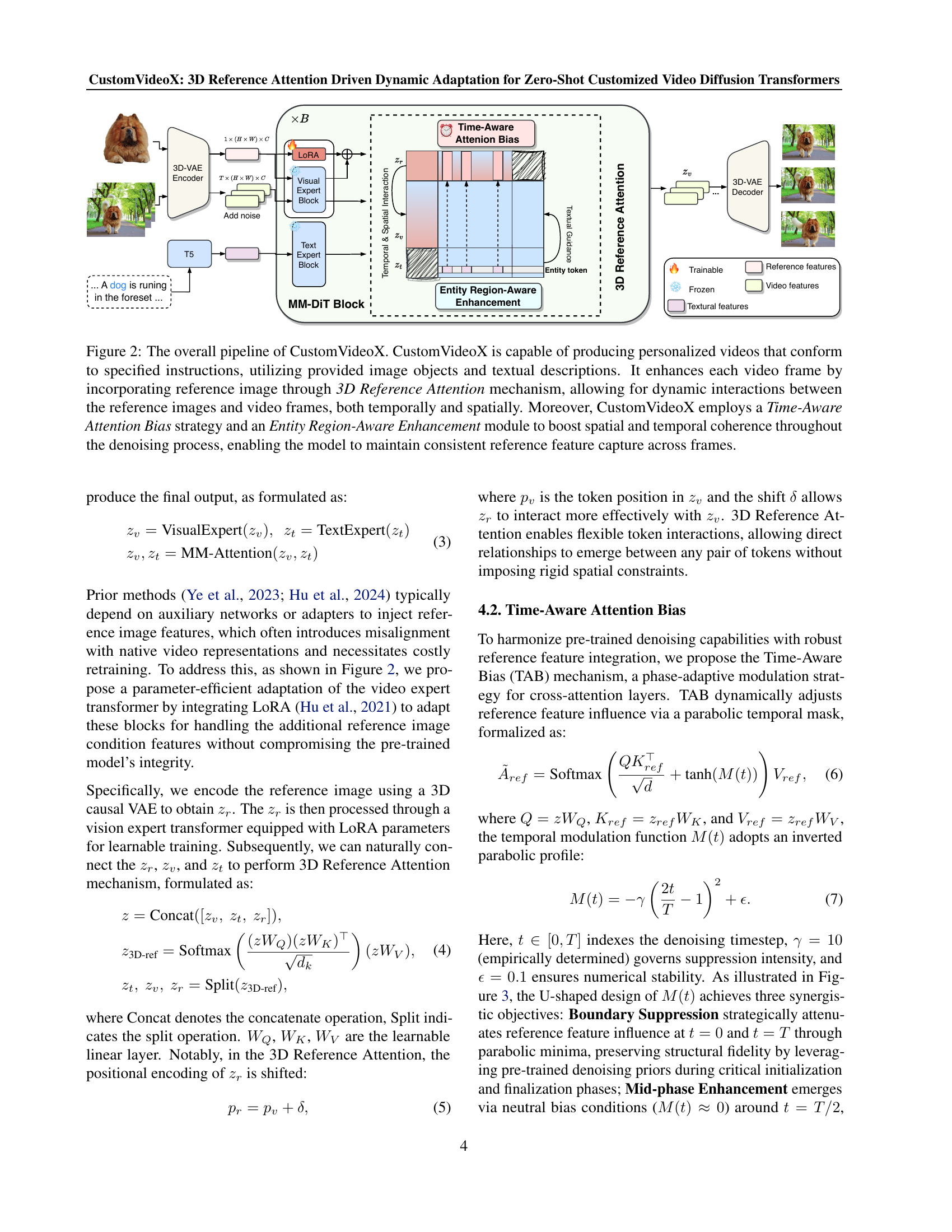

🔼 Table 1 presents a quantitative comparison of various video generation methods using two benchmark datasets: DreamBench and VideoBench. For each method, performance is evaluated across multiple metrics, including CLIP-Text (CLIP-T), CLIP-Image (CLIP-I), DINO-Image (DINO-I), Temporal Consistency (T.Cons), and Dynamic Degree (D.D). CLIP-T and CLIP-I assess the alignment between generated videos and textual/visual descriptions. DINO-I measures similarity using a different image embedding method. T.Cons evaluates the coherence between consecutive video frames, while D.D quantifies motion dynamics. The table highlights the best-performing method for each metric in bold and the second-best method with an underline. This allows for a detailed comparison of different approaches to video generation in terms of both quality and temporal consistency.

read the caption

Table 1: Quantitative comparison of different methods across DreamBench and VideoBench benchmarks. Bold represents the best result and data underline represents the second-best result.

In-depth insights#

3D Ref-Attn Fusion#

The conceptual heading ‘3D Ref-Attn Fusion’ suggests a method in video generation that leverages three-dimensional attention mechanisms to integrate reference images effectively. This approach likely goes beyond simple 2D attention by considering temporal relationships between video frames and spatial relationships within the reference image. The ‘3D’ aspect suggests the model processes both spatial and temporal information simultaneously, enabling more natural and consistent video generation. The ‘Ref-Attn’ component signifies the core role of attention mechanisms in selectively focusing on relevant features from the reference image, guiding the generation process. The fusion part implies a seamless integration of the reference image features into the video generation process, likely within the diffusion model framework. A successful implementation would likely produce videos where the subject’s appearance and actions closely match the reference image while adhering to textual descriptions, exhibiting improved temporal coherence and visual quality compared to prior methods. The inherent challenge lies in managing the impact of reference features, avoiding over-reliance and preserving the desired creative variability. Careful consideration of attention weight modulation across time steps would be crucial to avoid artifacts, ensuring smooth transitions and natural motion. In essence, 3D Ref-Attn Fusion represents an advanced strategy aiming for high-fidelity and coherent personalized video generation.

Zero-Shot VidGen#

Zero-shot video generation (VidGen) represents a significant advancement in AI-driven content creation. The ability to generate videos from text prompts without requiring any prior training data specific to the video’s content is a remarkable feat. This approach bypasses the need for extensive, often laborious, data collection and annotation, making it significantly more efficient and scalable. However, challenges remain; maintaining temporal coherence and visual fidelity across frames presents a substantial hurdle. Successfully generating realistic and coherent videos, especially those involving complex actions or interactions, requires overcoming these challenges. The effectiveness of the zero-shot model depends heavily on the quality and robustness of its pre-trained model and the inherent limitations of the diffusion model architecture itself. Furthermore, evaluating the quality of zero-shot generated videos is also complex; quantitative metrics need to be carefully chosen to accurately reflect the subjective nature of video quality, encompassing both visual realism and semantic consistency with text prompts. Despite its challenges, the potential impact of zero-shot VidGen is immense, with future applications ranging from personalized animation to interactive storytelling and advertising, and research in this area continues to push the boundaries of AI content creation.

Time-Aware Bias#

The concept of “Time-Aware Bias” in the context of video generation using diffusion models is a clever approach to address the challenge of effectively integrating reference image information throughout the video generation process. The core idea is to dynamically modulate the influence of the reference features across different stages of the diffusion process. Instead of uniformly applying the reference information, the system gradually increases its weight during intermediate denoising steps, allowing the model to effectively utilize the reference image for structural information and object identity establishment early on, but then decreasing its influence later to permit more detailed temporal refinement and reduce over-reliance on the reference at the cost of video fluidity. This parabolic weighting scheme helps the model balance consistent identity preservation with temporal coherence, thereby preventing overfitting to the reference image and producing more natural and realistic-looking videos. The adaptive modulation is key to ensuring both fine-grained detail and overall coherence within the generated video, making it a notable advancement in customized video generation techniques. The implementation highlights a nuanced understanding of the diffusion process itself.

VideoBench#

The proposed benchmark, VideoBench, addresses a critical gap in evaluating customized video generation. Existing benchmarks often lack the scale and diversity needed to thoroughly assess a model’s ability to generate high-quality, personalized videos across a wide range of objects and scenarios. VideoBench significantly enhances evaluation by introducing over 50 objects and 100 prompts, ensuring comprehensive coverage and avoiding overlap with training data. This meticulous design allows for a robust assessment of the model’s generalization capabilities and its ability to maintain consistency and quality across diverse video generation tasks. The inclusion of VideoBench provides a much-needed standard for comparing the performance of various customized video generation approaches, facilitating future research and development in this rapidly evolving field. Its comprehensive nature makes it a valuable tool for researchers and developers alike. The use of VideoBench is crucial for advancing the state-of-the-art in customized video generation.

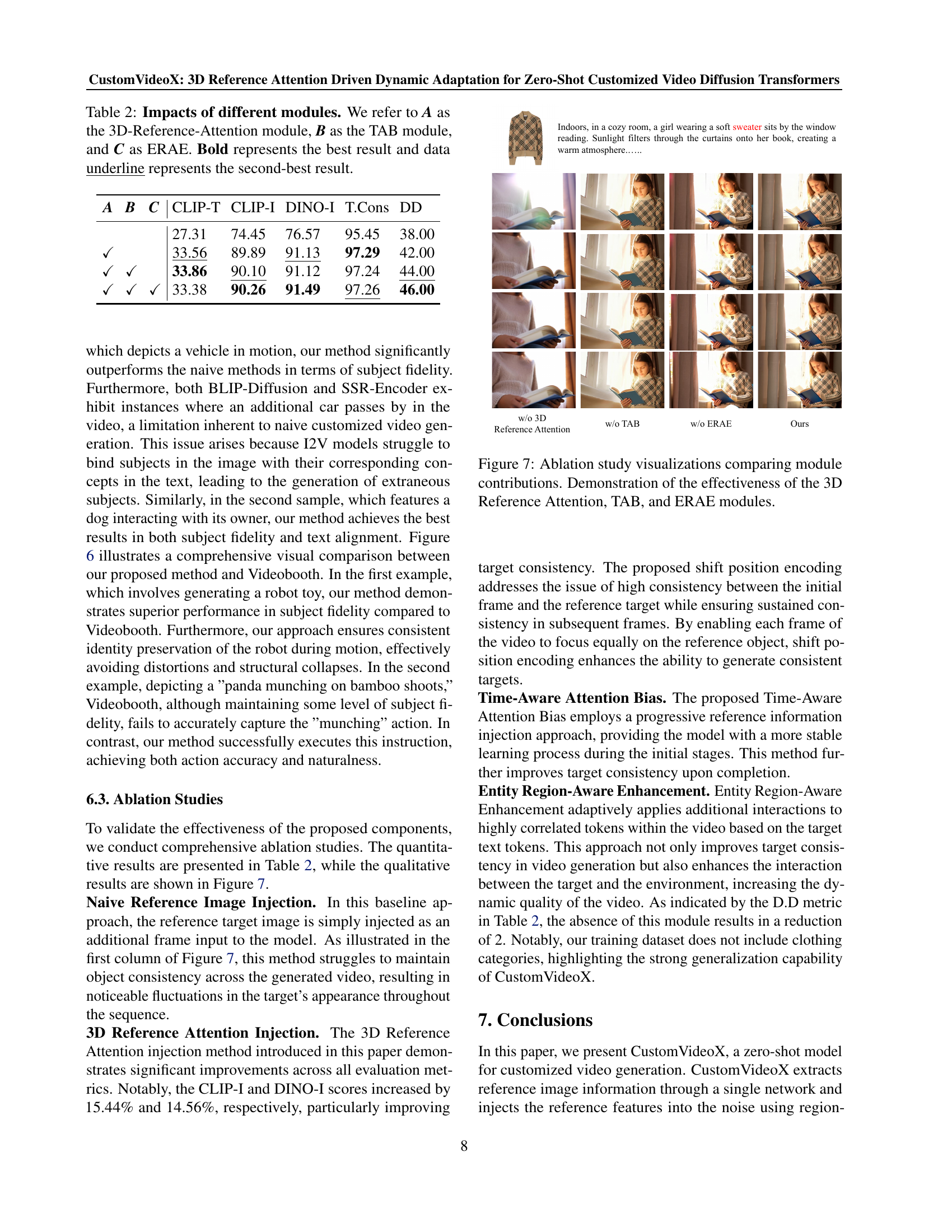

Ablation Studies#

The ablation study section of a research paper is crucial for understanding the contribution of individual components within a proposed model. In the context of a video generation model, an ablation study would systematically remove or disable specific modules (e.g., 3D Reference Attention, Time-Aware Attention Bias, Entity Region-Aware Enhancement) to assess their impact on overall performance. The results would quantify the contribution of each module to key metrics such as video quality, temporal consistency, and subject fidelity. A well-designed ablation study provides strong evidence for the effectiveness of the proposed model architecture, clarifying which parts are essential and which may be less critical. Careful attention is paid to the experimental design, including the order in which components are removed and the choice of evaluation metrics, to ensure robust and reliable findings. The results of the ablation study often guide future improvements to the model by highlighting areas of strength and weakness. This section shows the impact of removing each component, justifying the design choices and providing insights into how future model versions might be optimized.

More visual insights#

More on figures

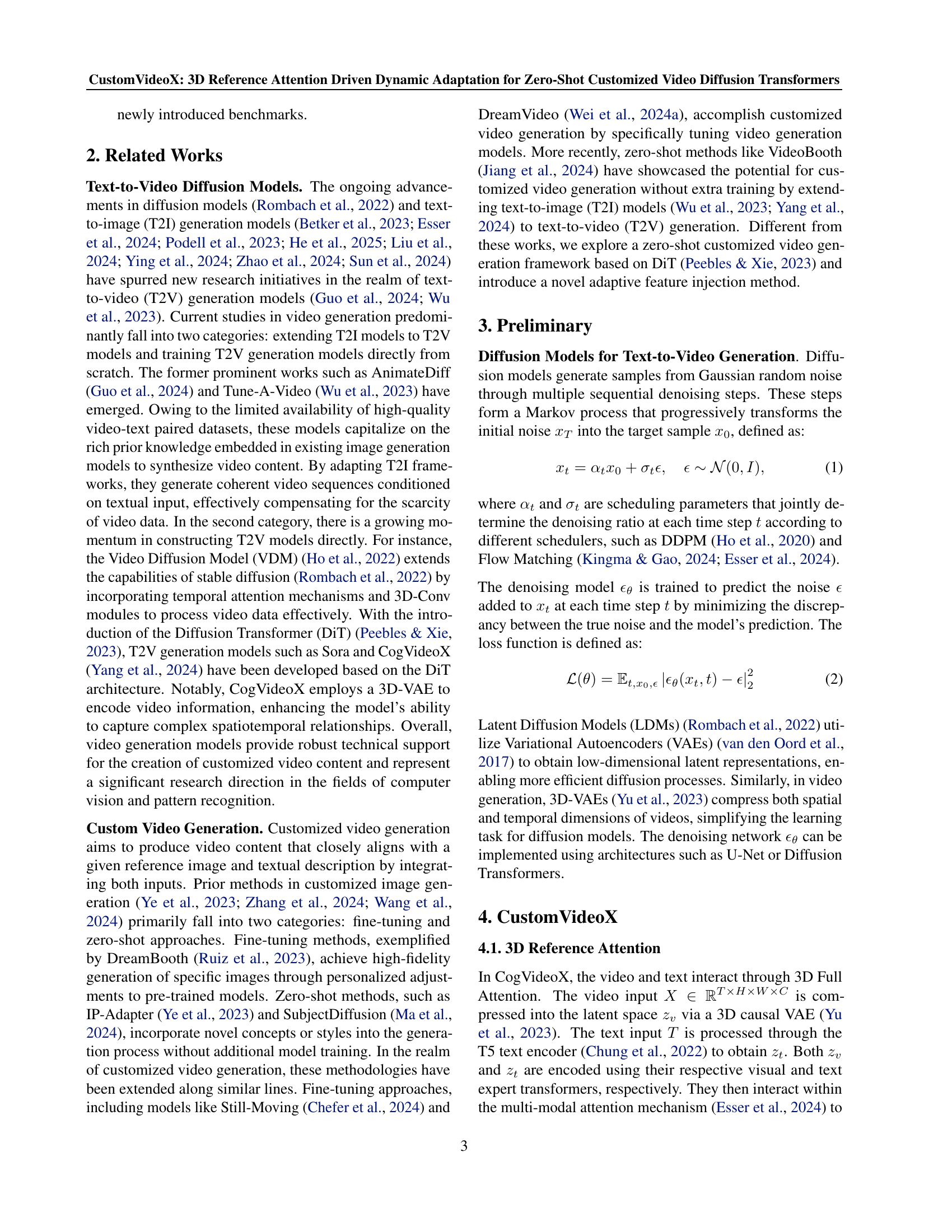

🔼 CustomVideoX uses a three-stage process to generate personalized videos. First, it takes a reference image and textual description as input. Second, it employs 3D Reference Attention to dynamically integrate reference image features with each video frame, ensuring both spatial and temporal consistency. Third, Time-Aware Attention Bias and Entity Region-Aware Enhancement modules further refine the process, optimizing the balance between reference image influence and textual guidance throughout the video generation process. This approach leads to videos that adhere to instructions while maintaining high quality and visual consistency.

read the caption

Figure 2: The overall pipeline of CustomVideoX. CustomVideoX is capable of producing personalized videos that conform to specified instructions, utilizing provided image objects and textual descriptions. It enhances each video frame by incorporating reference image through 3D Reference Attention mechanism, allowing for dynamic interactions between the reference images and video frames, both temporally and spatially. Moreover, CustomVideoX employs a Time-Aware Attention Bias strategy and an Entity Region-Aware Enhancement module to boost spatial and temporal coherence throughout the denoising process, enabling the model to maintain consistent reference feature capture across frames.

🔼 Figure 3 illustrates the comparison between time-aware attention bias and fixed attention bias during the video generation process. The y-axis represents the attention bias, and the x-axis represents the timestep in the sampling process. The blue line shows the fixed attention bias, which remains constant throughout the process. The orange line represents the time-aware attention bias, which dynamically adjusts the influence of reference features using a parabolic temporal mask. This parabolic function starts with minimal influence at the beginning and end of the generation sequence, gradually increasing in the middle before decreasing again. This dynamic modulation ensures a smoother and more consistent integration of reference features throughout the video generation process, improving the overall temporal coherence and visual quality.

read the caption

Figure 3: The time-aware attention bias v.s. fixed attention bias in the sampling process. TAB dynamically regulates the influence of reference features using a parabolic temporal mask, enhancing the consistency of reference images throughout the generation sequence.

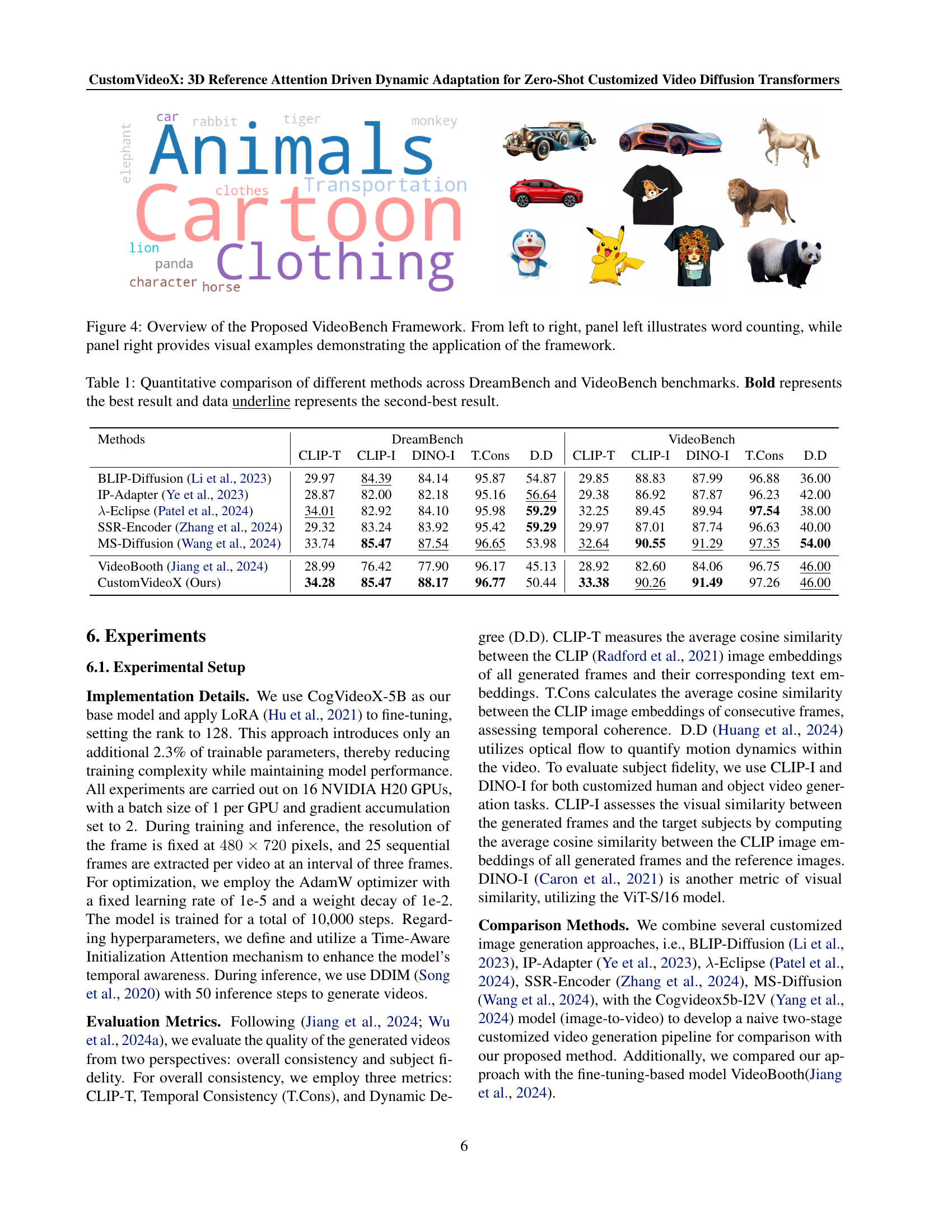

🔼 Figure 4 presents a comprehensive overview of the VideoBench framework, a novel benchmark designed for evaluating the performance of customized video generation models. The figure is divided into two main sections: a word count visualization (left panel) and a visual demonstration (right panel). The word count panel provides a quantitative analysis of the dataset, indicating the number of distinct objects and associated prompts used to create the benchmark, offering insights into its diversity and coverage. The visual demonstration panel displays example videos generated by various methods, showcasing the application and effectiveness of the VideoBench framework, thus highlighting its role in evaluating the quality, consistency, and overall performance of different customized video generation models.

read the caption

Figure 4: Overview of the Proposed VideoBench Framework. From left to right, panel left illustrates word counting, while panel right provides visual examples demonstrating the application of the framework.

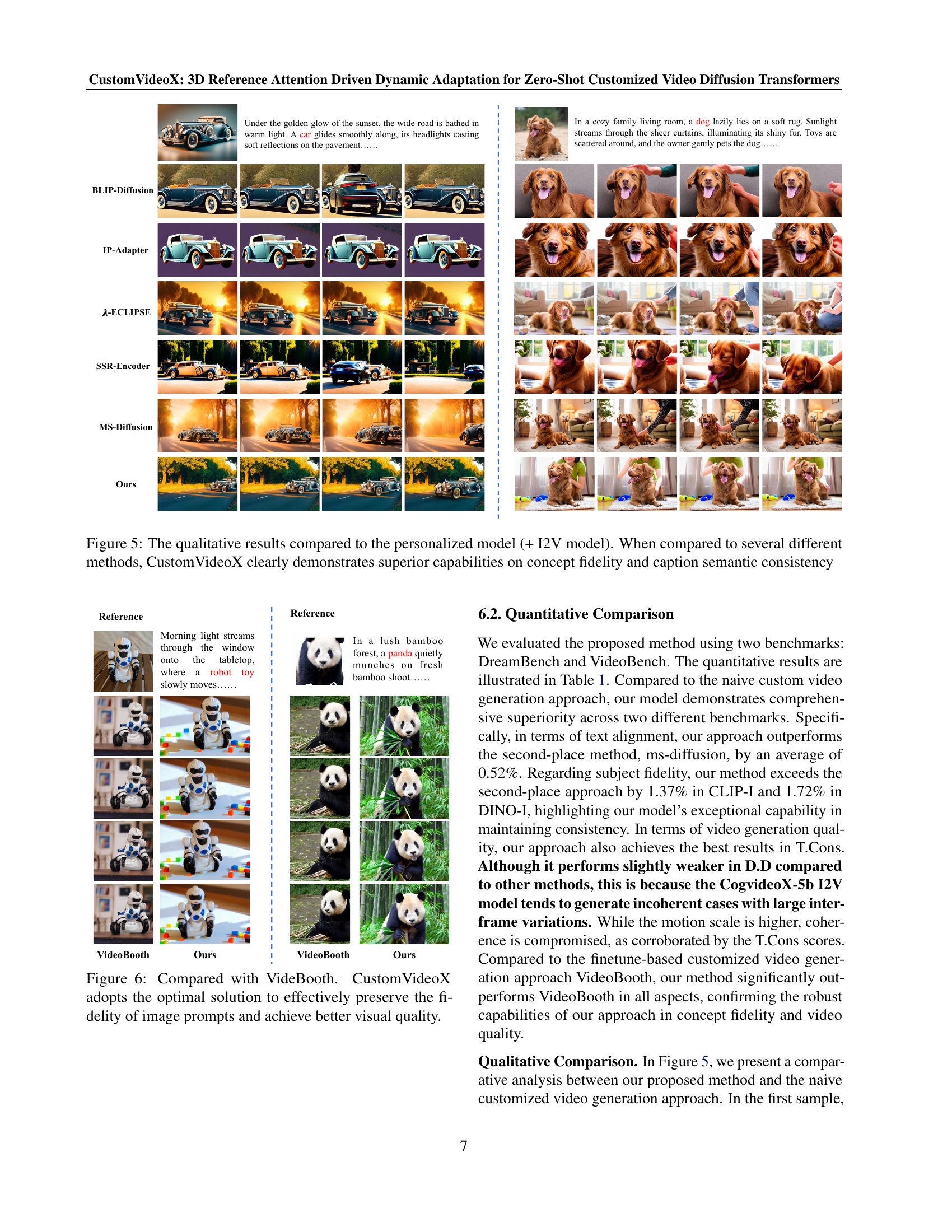

🔼 Figure 5 presents a qualitative comparison of video generation results between CustomVideoX and other methods. It showcases CustomVideoX’s superior ability to maintain concept fidelity and semantic consistency with the given captions, even when compared to models that use an image-to-video approach (+ I2V). The figure visually demonstrates the improved quality and alignment of CustomVideoX’s generated videos with textual descriptions, highlighting its advantages in personalized video generation.

read the caption

Figure 5: The qualitative results compared to the personalized model (+ I2V model). When compared to several different methods, CustomVideoX clearly demonstrates superior capabilities on concept fidelity and caption semantic consistency

🔼 Figure 6 presents a comparison of video generation results between CustomVideoX and VideoBooth, highlighting CustomVideoX’s superior ability to maintain the visual fidelity of the input image prompt while producing videos of higher overall quality. The figure visually demonstrates that CustomVideoX more accurately reflects the details and features of the reference image in the generated video sequence, showcasing an improvement in visual quality over VideoBooth.

read the caption

Figure 6: Compared with VideBooth. CustomVideoX adopts the optimal solution to effectively preserve the fidelity of image prompts and achieve better visual quality.

🔼 Figure 7 shows an ablation study comparing the effects of three key modules in the CustomVideoX model: 3D Reference Attention, Time-Aware Attention Bias (TAB), and Entity Region-Aware Enhancement (ERAE). Each row presents a video generated with different combinations of these modules enabled or disabled. By comparing the visual results across the rows, one can assess the individual contribution of each module to the overall quality and consistency of video generation. The results demonstrate the improvements to generated video clarity, object consistency, and temporal coherence from each component.

read the caption

Figure 7: Ablation study visualizations comparing module contributions. Demonstration of the effectiveness of the 3D Reference Attention, TAB, and ERAE modules.

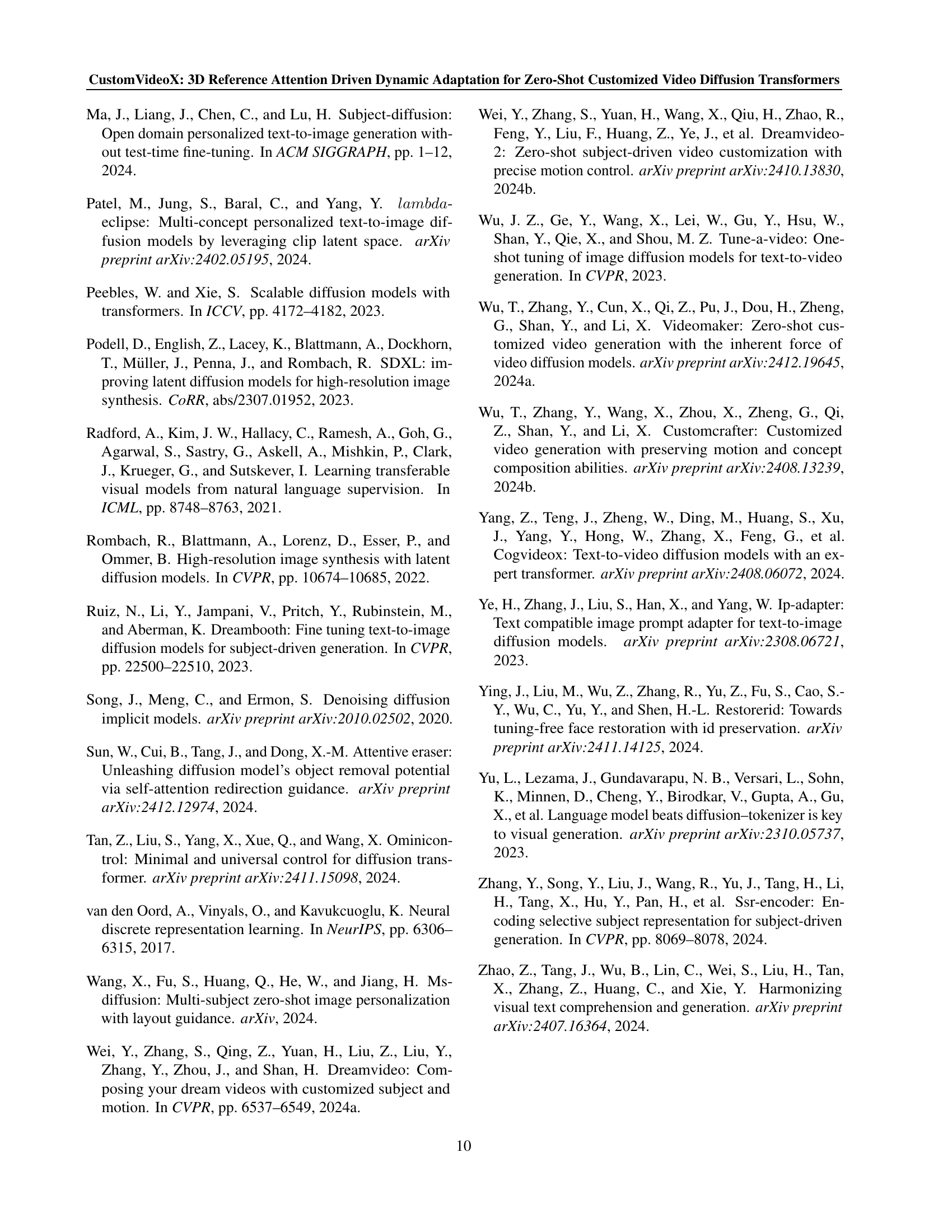

🔼 Figure 8 details the process of curating a high-quality video dataset for training a video generation model. The process starts by filtering videos based on resolution (requiring 1080p or higher), aesthetic score (above 5.3), and motion score to ensure high quality and dynamic content. Temporal consistency is also checked, discarding videos with poor coherence. Next, videos undergo further filtering using Qwen-2.5 (a language model) to identify the main entity and assess background complexity. Complex backgrounds are excluded, ensuring focus on clear subjects. Finally, Grounding SAM (a segmentation model) is used to extract the main object from the first frame of each selected video to create training data pairs, consisting of the extracted object and the corresponding video segment. This multi-stage filtering process ensures that the final dataset contains only high-quality videos with clear subjects and simple backgrounds suitable for training.

read the caption

Figure 8: The overview of video customization data collection pipeline. When dealing with complex scenarios that contain concepts with Qwen2.5 and Grounding SAM models. Our data pipeline could still extract precise video quality via video resolution, aesthetic score, motion score, and temporal consistency.

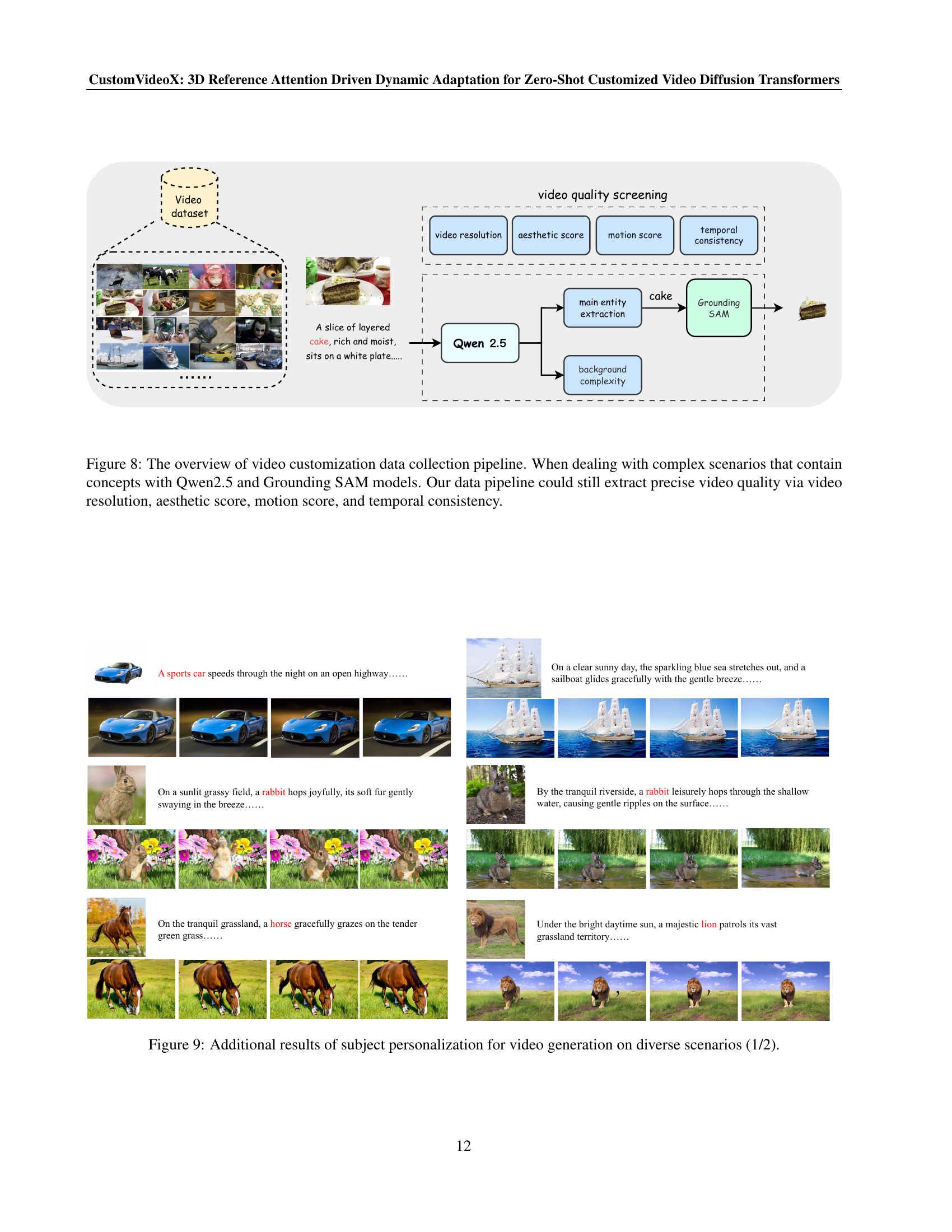

🔼 This figure displays example videos generated by the CustomVideoX model, showcasing its ability to personalize video generation across a variety of scenes and objects. The examples demonstrate the model’s capability to maintain subject consistency and produce high-quality videos, even with complex scenes and diverse object types, including animals, vehicles, and cartoon characters. Each row shows a reference image and the generated video sequence. The results highlight CustomVideoX’s performance in handling challenging video generation tasks.

read the caption

Figure 9: Additional results of subject personalization for video generation on diverse scenarios (1/2).

Full paper#