TL;DR#

Many researchers question whether Large Language Models (LLMs) truly understand concepts or simply mimic patterns like “stochastic parrots.” Existing studies mostly lack quantitative evidence. This research addresses the issue by proposing a novel benchmark, PHYSICO, focusing on physical concept understanding, to assess various levels of understanding in LLMs.

The PHYSICO benchmark uses a summative assessment approach, incorporating both low-level (natural language) and high-level (abstract grid representation and visual input) tasks. Experiments show that while LLMs excel at low-level tasks, they significantly underperform on high-level tasks compared to humans, revealing that LLMs primarily struggle due to difficulties in deep understanding, not the test format. This confirms the “stochastic parrot” phenomenon and provides a valuable tool for evaluating LLMs.

Key Takeaways#

Why does it matter?#

This paper is crucial because it provides quantitative evidence to support the widely debated claim that large language models (LLMs) may not truly understand what they generate, but rather act as “stochastic parrots.” This challenges the assumptions underlying many current applications of LLMs and opens avenues for research into improved LLM understanding and evaluation.

Visual Insights#

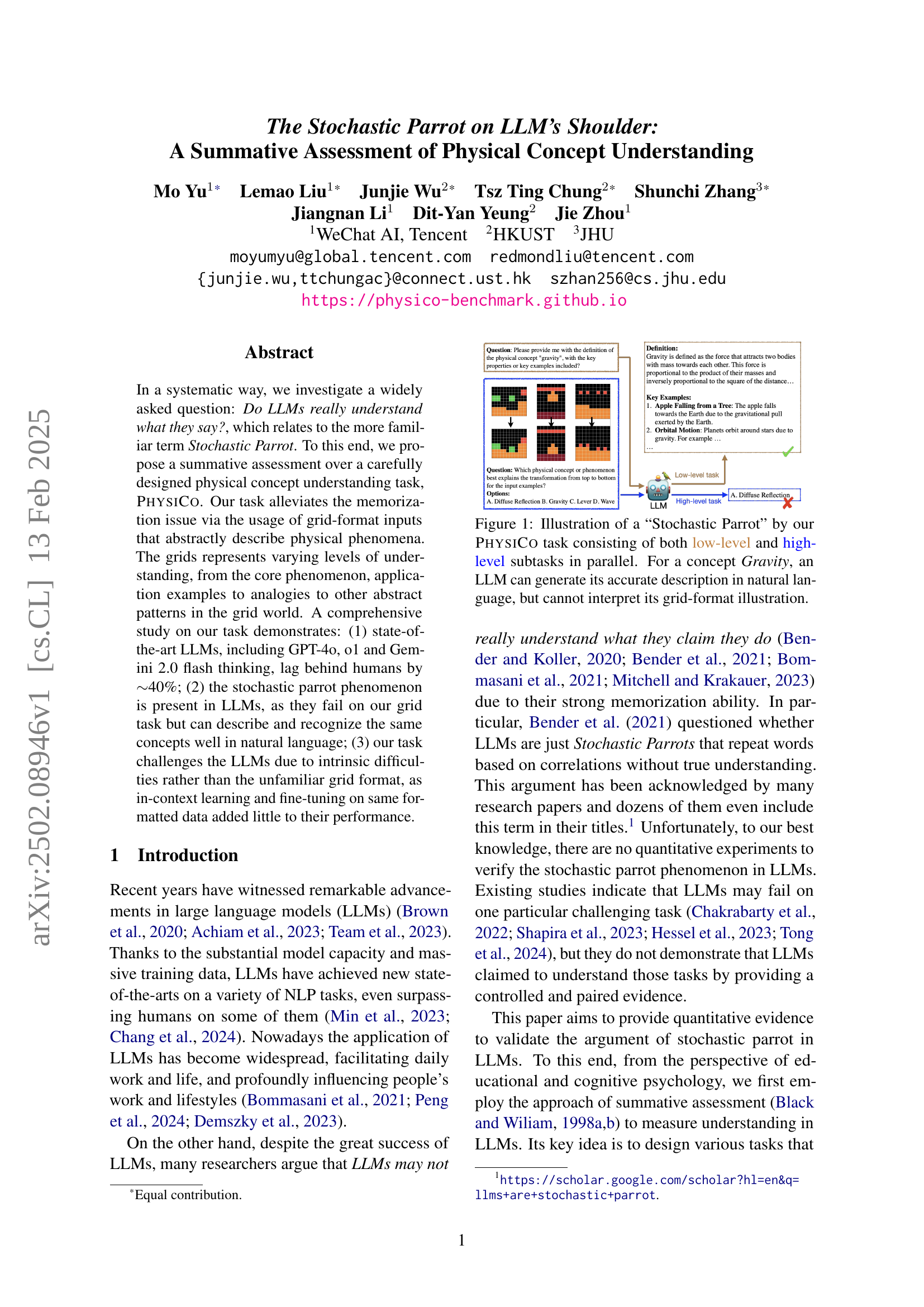

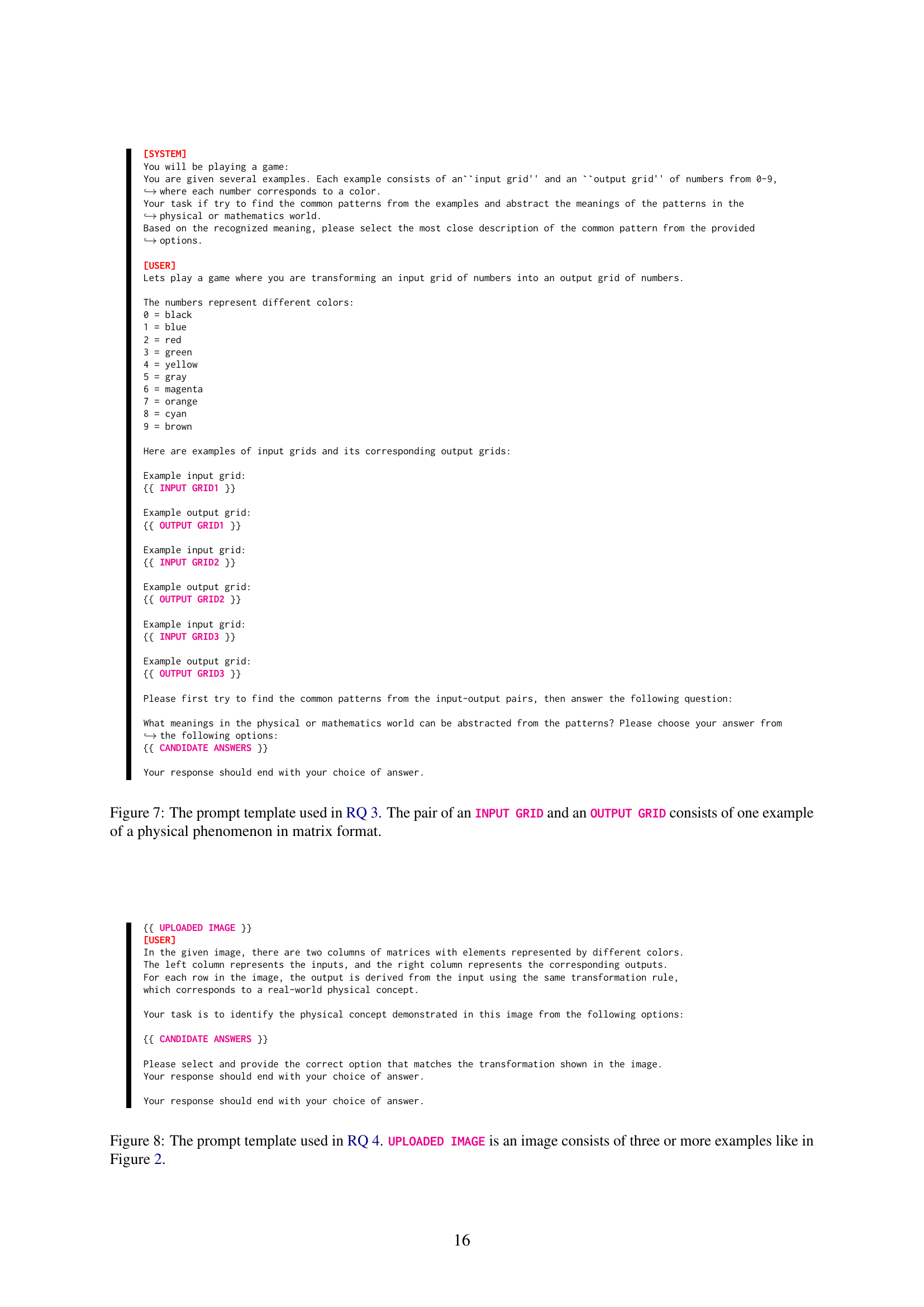

🔼 This figure illustrates the concept of a ‘Stochastic Parrot’ using the PhysiCo task. The task presents a concept (in this example, Gravity) in two ways: a natural language description (low-level task) and an abstract grid-based illustration (high-level task). The figure shows that LLMs can accurately generate a natural language description of Gravity, demonstrating a seemingly high level of understanding. However, they struggle to correctly interpret the grid-based representation, failing the high-level task, indicating that their understanding might be superficial, merely mimicking patterns (like a parrot) without true comprehension.

read the caption

Figure 1: Illustration of a “Stochastic Parrot” by our PhysiCo task consisting of both low-level and high-level subtasks in parallel. For a concept Gravity, an LLM can generate its accurate description in natural language, but cannot interpret its grid-format illustration.

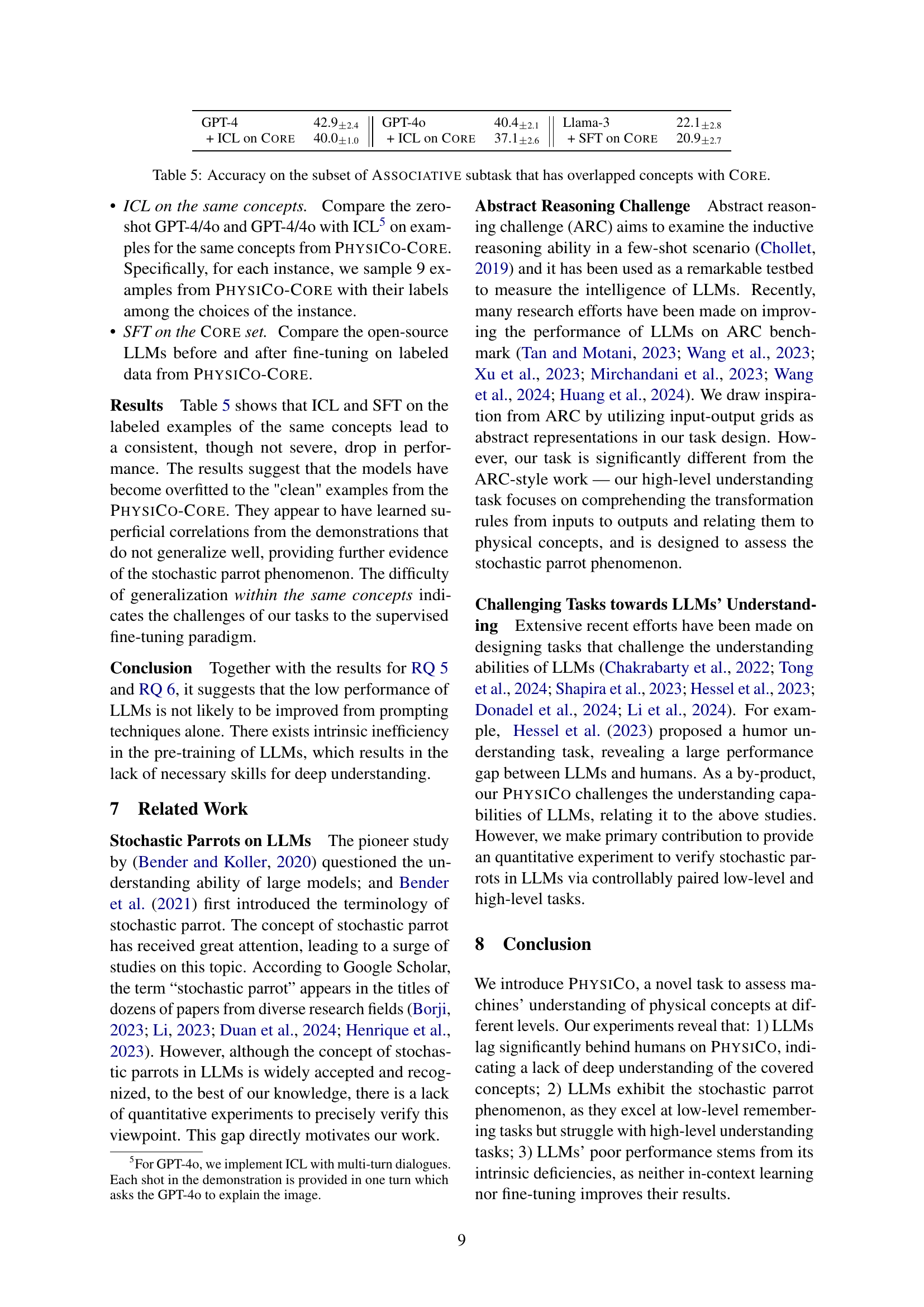

| (a) | Mistral | Llama-3 | GPT-3.5 | GPT-4 |

| 81.0 | 88.5 | 97.3 | 95.0 | |

| (b) | InternVL | LLaVA | GPT-4v | GPT-4o |

| 66.3 | 66.7 | 93.7 | 93.7 |

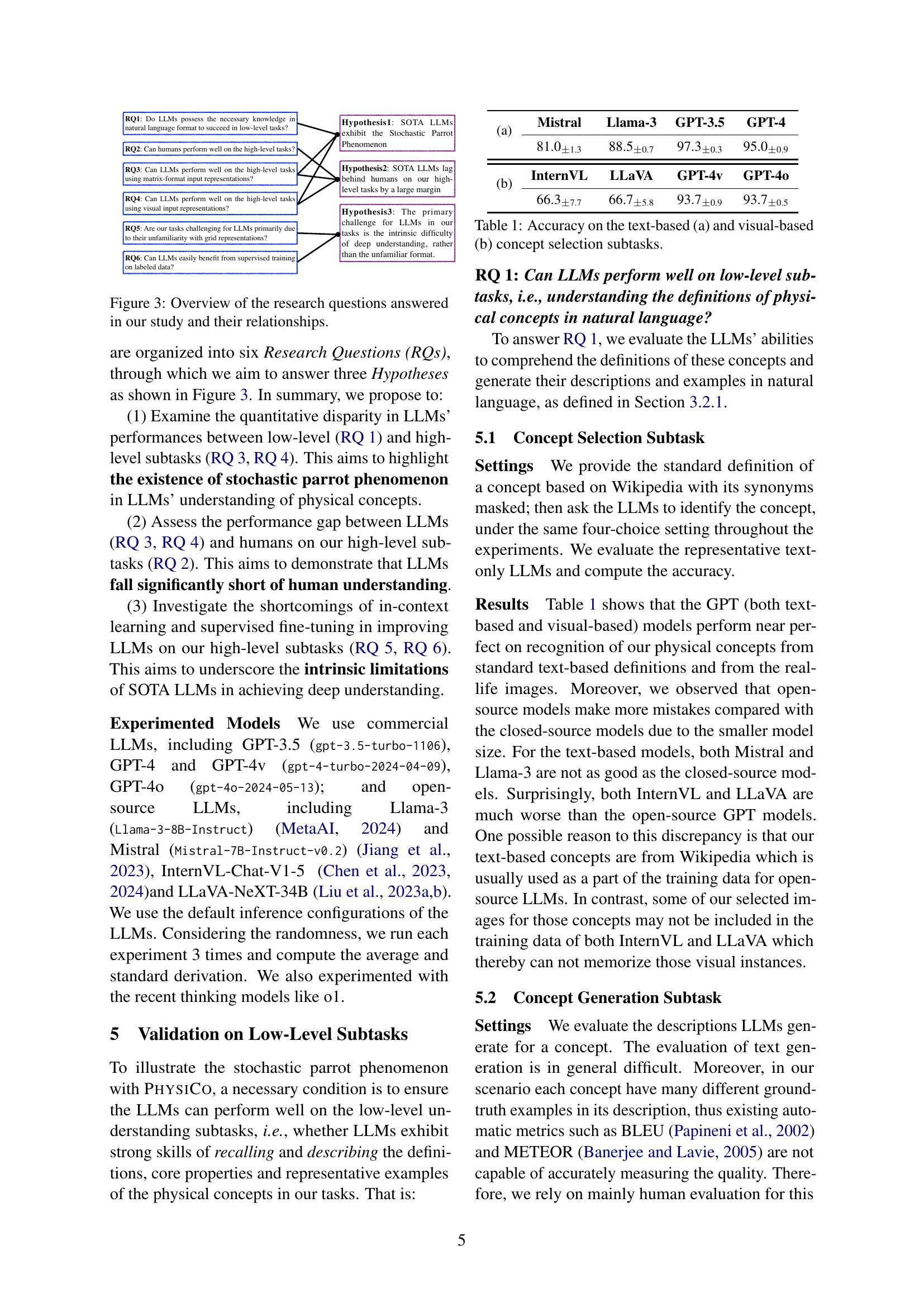

🔼 This table presents the accuracy of different LLMs on two concept selection subtasks. Subtask (a) uses text-based definitions of physical concepts, while subtask (b) uses visual representations (images) of the same concepts. The table shows the accuracy of each LLM on both subtasks, allowing for a comparison of their performance across different modalities and an assessment of their knowledge recall ability.

read the caption

Table 1: Accuracy on the text-based (a) and visual-based (b) concept selection subtasks.

In-depth insights#

LLM Concept Limits#

Large language models (LLMs) demonstrate impressive capabilities but are limited in their conceptual understanding. While LLMs excel at tasks involving pattern recognition and surface-level linguistic manipulation, they often struggle with tasks requiring deep conceptual understanding. This limitation manifests as a failure to generalize learned patterns to novel situations and an inability to robustly apply knowledge to unseen contexts. The research highlights that LLMs, despite exhibiting fluent language generation, may not possess genuine understanding and can be described as “stochastic parrots.” This is crucial because many applications rely on true comprehension, not just the superficial imitation of patterns. Addressing this conceptual limitation requires further research into improving LLM architectures and training methodologies to focus on building genuine knowledge representation, not just statistical correlations between words and phrases. Focusing on more complex and nuanced tasks, particularly those involving deeper semantic processing, will likely push the boundaries of LLMs’ conceptual capacity. Future advancements should concentrate on creating systems that can reason, infer, and genuinely understand, rather than simply predict the most probable next word or phrase.

Stochastic Parrot Effect#

The “Stochastic Parrot Effect” describes large language models (LLMs) that mimic human language impressively, but without genuine understanding. They excel at surface-level tasks, like paraphrasing or generating text based on learned statistical correlations in massive datasets. This is highlighted by the fact that they often fail on tasks requiring actual comprehension or reasoning about the underlying concepts, especially those demanding deep understanding of the physical world. LLMs can produce fluent text even when presented with abstract or unusual representations of information, showcasing their ability to manipulate language without genuine semantic grasp. This is significant because it exposes a critical limitation: LLMs may not truly understand the meaning they generate, leading to potentially misleading or inaccurate outputs. The assessment is, therefore, crucial to verify and quantify this effect in LLMs, and to develop methods for improving their ability to move beyond mere pattern recognition to demonstrate genuine comprehension.

PHYSICO Benchmark#

The hypothetical “PHYSICO Benchmark” presented in the research paper appears to be a novel and rigorous assessment designed to evaluate the true understanding of Large Language Models (LLMs) regarding physical concepts. Unlike simpler tests relying on textual input and output, PHYSICO likely leverages a multi-faceted approach. Grid-based representations of physical phenomena are likely used, forcing LLMs to move beyond simple memorization and demonstrate deeper comprehension. The benchmark’s strength lies in its ability to distinguish between superficial pattern recognition (the “stochastic parrot” phenomenon) and genuine conceptual understanding. By including both low-level and high-level tasks, PHYSICO can expose the limitations of LLMs, highlighting their ability to excel at rote memorization while struggling with complex, abstract reasoning. This systematic evaluation approach allows for quantitative analysis, providing valuable data to assess and potentially improve the reasoning capabilities of LLMs. The results from PHYSICO could lead to advancements in LLM architecture and training methods, pushing the field toward the development of genuinely intelligent AI systems.

Multimodal LLM Gap#

The concept of a “Multimodal LLM Gap” highlights the significant performance disparity between multimodal large language models (LLMs) and humans in tasks requiring deep understanding, especially when dealing with abstract representations of physical concepts or phenomena. While multimodal LLMs excel at low-level tasks such as image recognition and captioning, they struggle with high-level tasks involving reasoning, abstraction, and the integration of visual and textual information. This gap underscores the limitations of current multimodal LLMs in truly understanding concepts, often exhibiting a “stochastic parrot” behavior where they can manipulate words without genuine comprehension. Bridging this gap requires advancements in model architecture, training methodologies (e.g., incorporation of more diverse and nuanced datasets), and evaluation metrics that accurately assess deep understanding beyond surface-level performance. Further research should focus on developing tasks that specifically probe high-level cognitive abilities and investigate how to improve LLMs’ capacity for genuine knowledge representation and reasoning, rather than mere pattern recognition.

Future Research#

Future research should address several key limitations of the current study. Expanding the scope of PHYSICO to encompass a broader range of physical concepts and difficulty levels is crucial to ensure greater generalizability and robustness. This includes exploring more complex phenomena beyond high school physics. Additionally, the investigation of alternative assessment methods, such as those drawing on cognitive psychology, could provide richer insights into LLM understanding. The development of more nuanced metrics for evaluating high-level understanding is vital to move beyond simple accuracy scores and capture the subtleties of reasoning and knowledge application. Finally, exploring different LLM architectures and training methodologies could help to determine the extent to which the stochastic parrot phenomenon is inherent to current LLMs or an artifact of specific design choices. Addressing these points will significantly advance the field’s comprehension of LLM capabilities and limitations.

More visual insights#

More on tables

| Mistral | Llama-3 | GPT-3.5 | GPT-4 |

| 92.6 | 100 | 100 | 100 |

🔼 This table presents the results of human evaluations assessing the quality of concept descriptions generated by different large language models (LLMs). Human annotators evaluated each generated description, assigning a score of 0 if it contained factual errors or inaccurate examples, and a score of 1 otherwise. The table shows the accuracy scores achieved by each LLM, reflecting their ability to generate accurate and complete descriptions of the target concepts.

read the caption

Table 2: Human evaluations on concept generation.

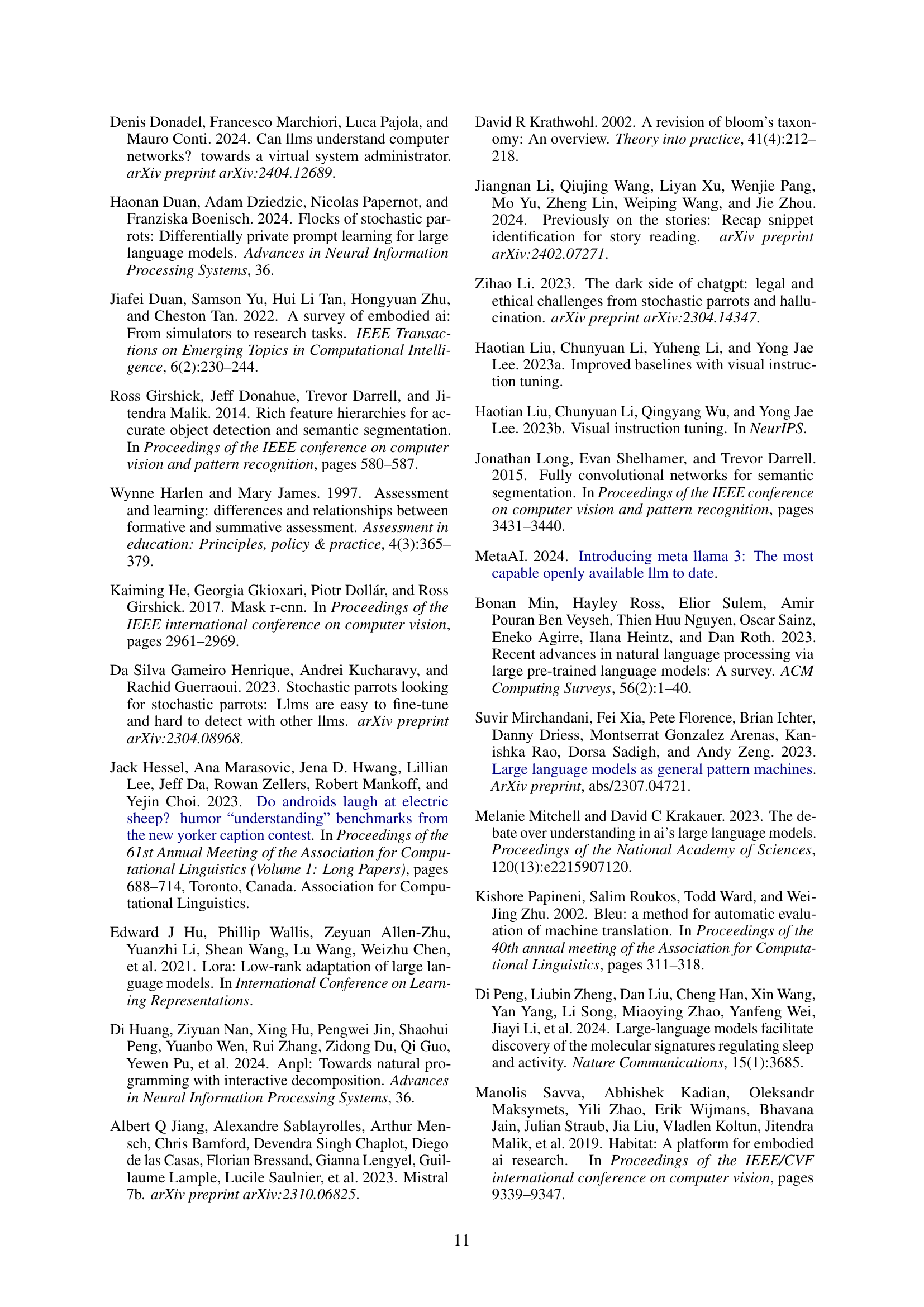

| Models | Dev | Test | ||

| Core-Dev | Core-Test | Assoc. | ||

| Random | 25.0 | 25.0 | 25.0 | |

| text-only | GPT-3.5 | 26.5 | 24.4 | 30.0 |

| GPT-4 | 41.3 | 28.2 | 38.3 | |

| GPT-4o | 34.0 | 31.3 | 35.5 | |

| o3-mini-high | 46.0∗ | 46.5 | 42.5 | |

| Mistral | 21.5 | 26.0 | 23.2 | |

| Llama-3 | 23.5 | 27.3 | 21.7 | |

| DeepSeek-R1 | 41.5 | 29.5 | 55.0 | |

| multi-modal | GPT-4v | 34.2 | 28.7 | 32.0 |

| GPT-4o | 52.3 | 45.2 | 36.5 | |

| +CoT | 46.0 | 43.5 | 39.5 | |

| o1 | 53.0 | 42.5 | 34.5 | |

| Gemini2 FTE | 49.8 | 43.2 | 36.8 | |

| InternVL | 26.3 | 26.9 | 24.8 | |

| LLaVA | 26.2 | 28.5 | 24.7 | |

| Humans | 92.0 | 89.5 | 77.8 | |

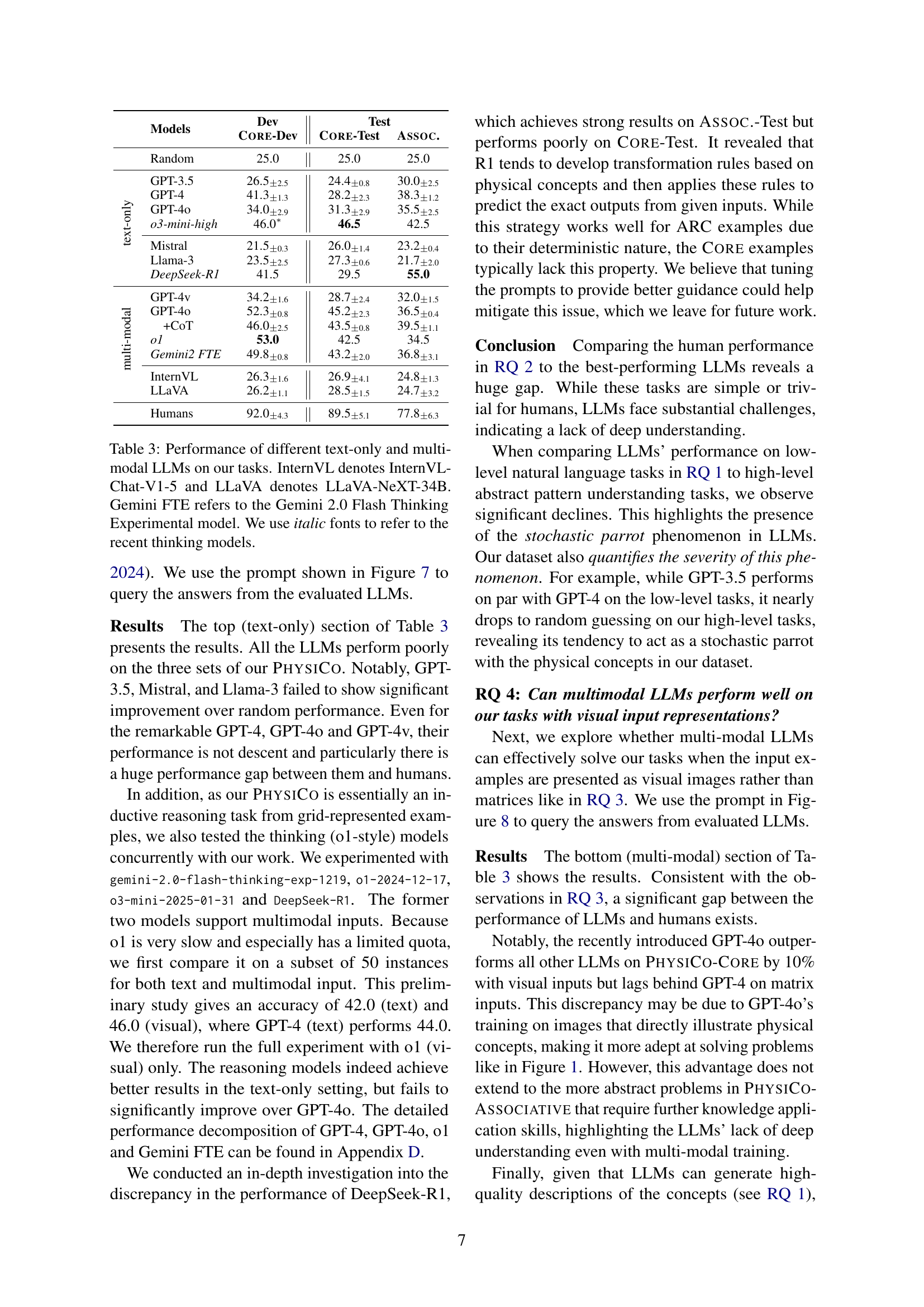

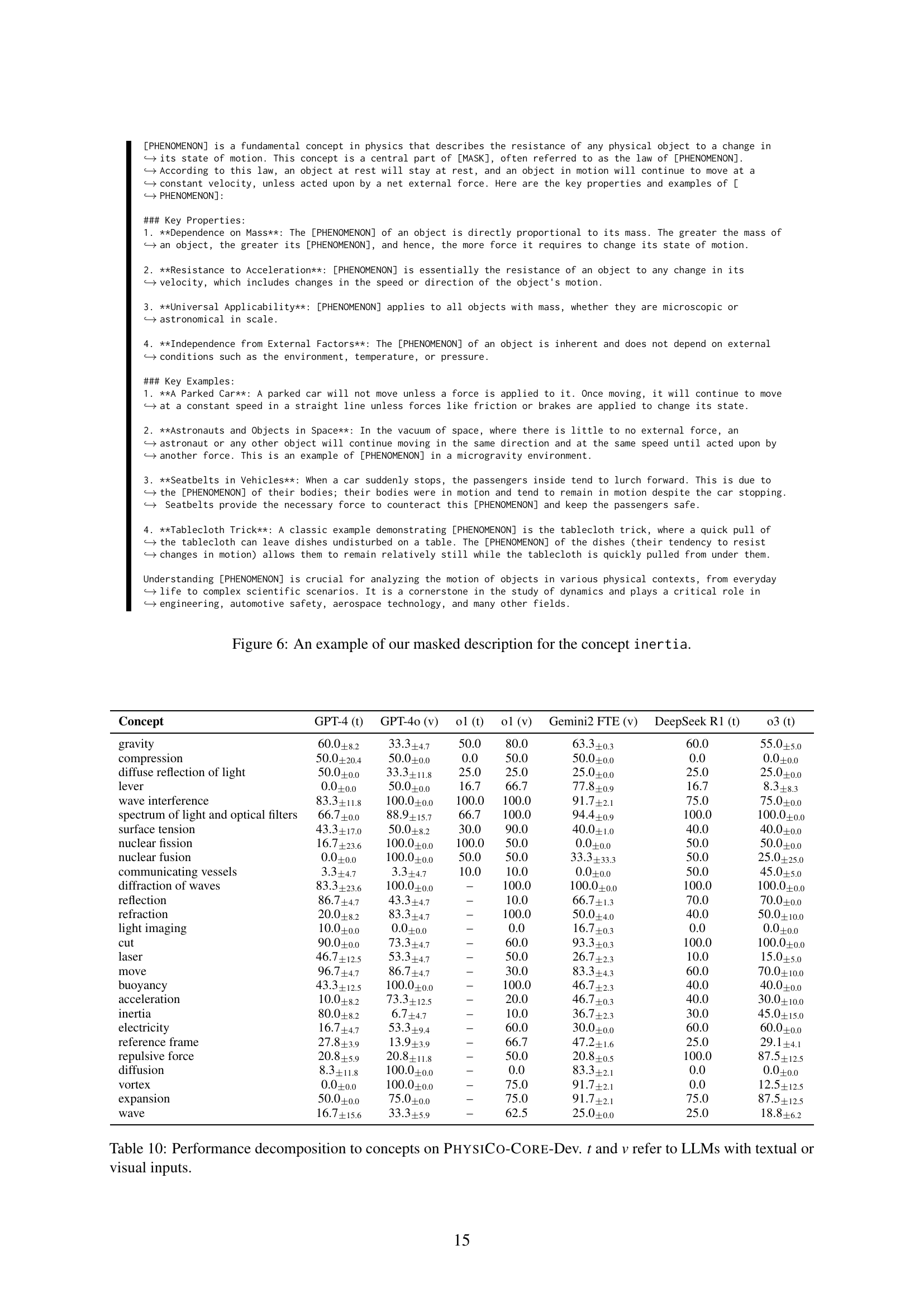

🔼 This table presents the performance of various large language models (LLMs) on the PHYSICO tasks. It compares the accuracy of text-only models (like GPT-3.5, GPT-4, Llama-3, Mistral) and multi-modal models (InternVL, LLaVA, Gemini 2.0 Flash Thinking). The results are broken down by task type (CORE-Dev, CORE-Test, and ASSOCIATIVE) to show how well the models perform on different levels of understanding. Recent models are indicated by italicized font. The table shows the accuracy of each model on different sub-tasks, revealing the relative strengths and weaknesses of various LLMs in handling complex physical concepts.

read the caption

Table 3: Performance of different text-only and multi-modal LLMs on our tasks. InternVL denotes InternVL-Chat-V1-5 and LLaVA denotes LLaVA-NeXT-34B. Gemini FTE refers to the Gemini 2.0 Flash Thinking Experimental model. We use italic fonts to refer to the recent thinking models.

| CoT - definitions | 46.0 | CoT - low-level | 50.7 |

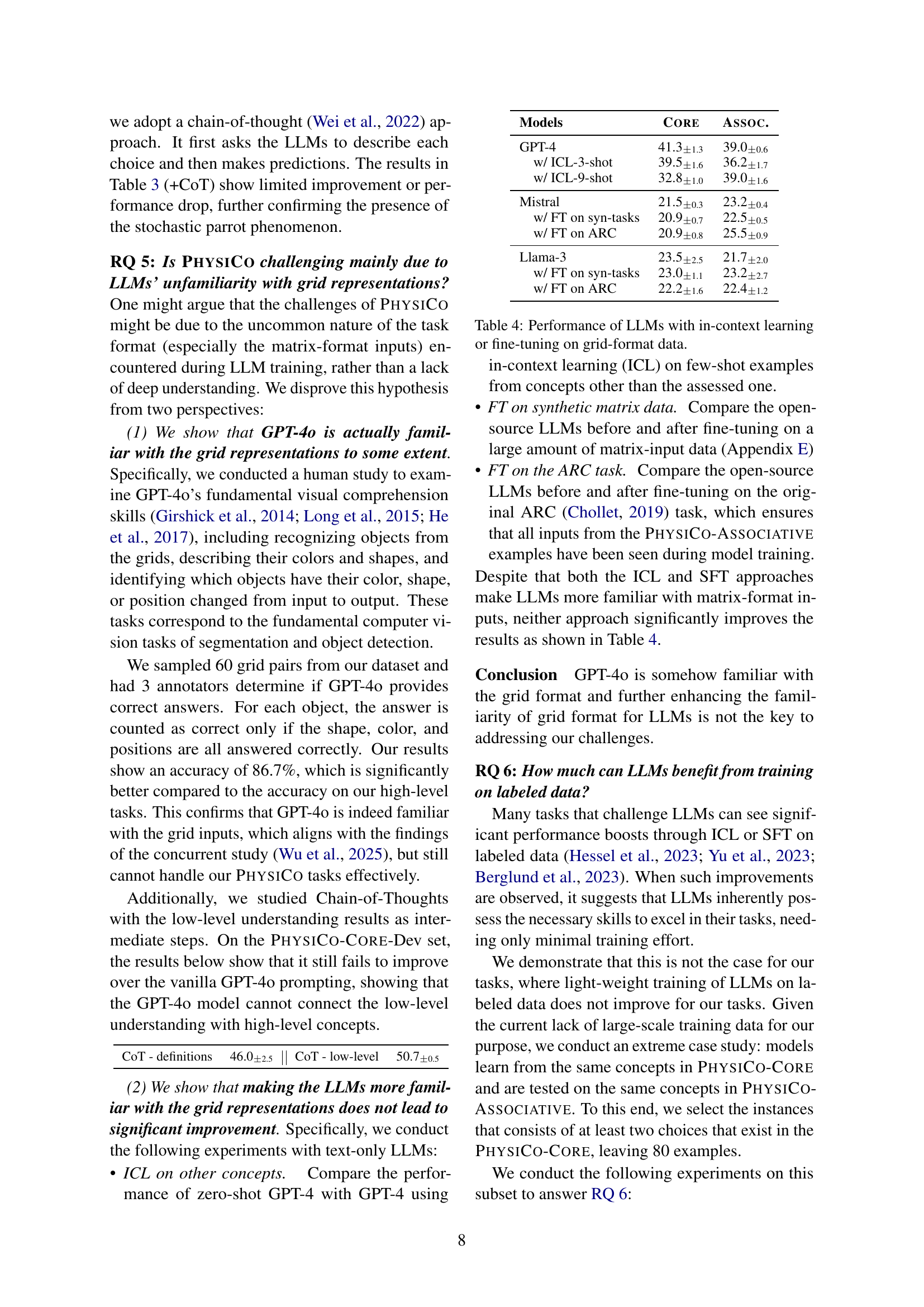

🔼 This table presents the performance of various Large Language Models (LLMs) on tasks involving grid-format data. It compares the performance of the LLMs under three different conditions: zero-shot (no additional training), in-context learning (ICL) with a few-shot examples, and fine-tuning (FT) on synthetic and ARC (Abstract Reasoning Corpus) datasets. The goal is to investigate how familiarity with the grid format affects LLM performance and whether it is possible to improve performance using additional training data. The results show whether fine-tuning improves LLM’s performance, and if the use of in-context learning with few-shot examples improves performance, compared to a zero-shot baseline.

read the caption

Table 4: Performance of LLMs with in-context learning or fine-tuning on grid-format data.

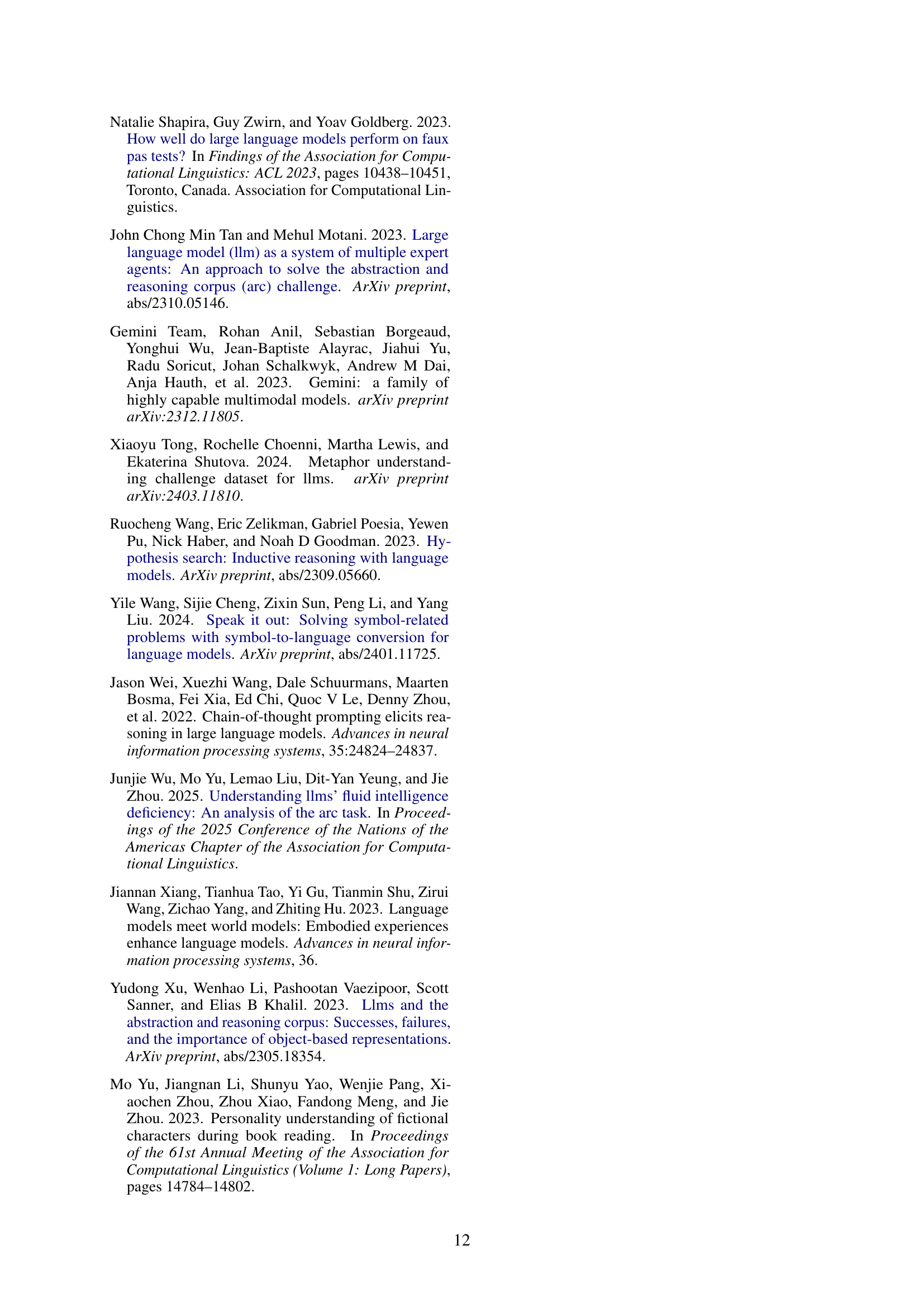

| Models | Core | Assoc. |

| GPT-4 | 41.3 | 39.0 |

| w/ ICL-3-shot | 39.5 | 36.2 |

| w/ ICL-9-shot | 32.8 | 39.0 |

| Mistral | 21.5 | 23.2 |

| w/ FT on syn-tasks | 20.9 | 22.5 |

| w/ FT on ARC | 20.9 | 25.5 |

| Llama-3 | 23.5 | 21.7 |

| w/ FT on syn-tasks | 23.0 | 23.2 |

| w/ FT on ARC | 22.2 | 22.4 |

🔼 This table presents the accuracy results of different language models on a subset of the PHYSICO-ASSOCIATIVE task. The subset includes only those instances whose concepts overlap with those in the PHYSICO-CORE task. This allows for a focused evaluation of the models’ ability to generalize knowledge learned from the core concepts to related, but not identical, scenarios.

read the caption

Table 5: Accuracy on the subset of Associative subtask that has overlapped concepts with Core.

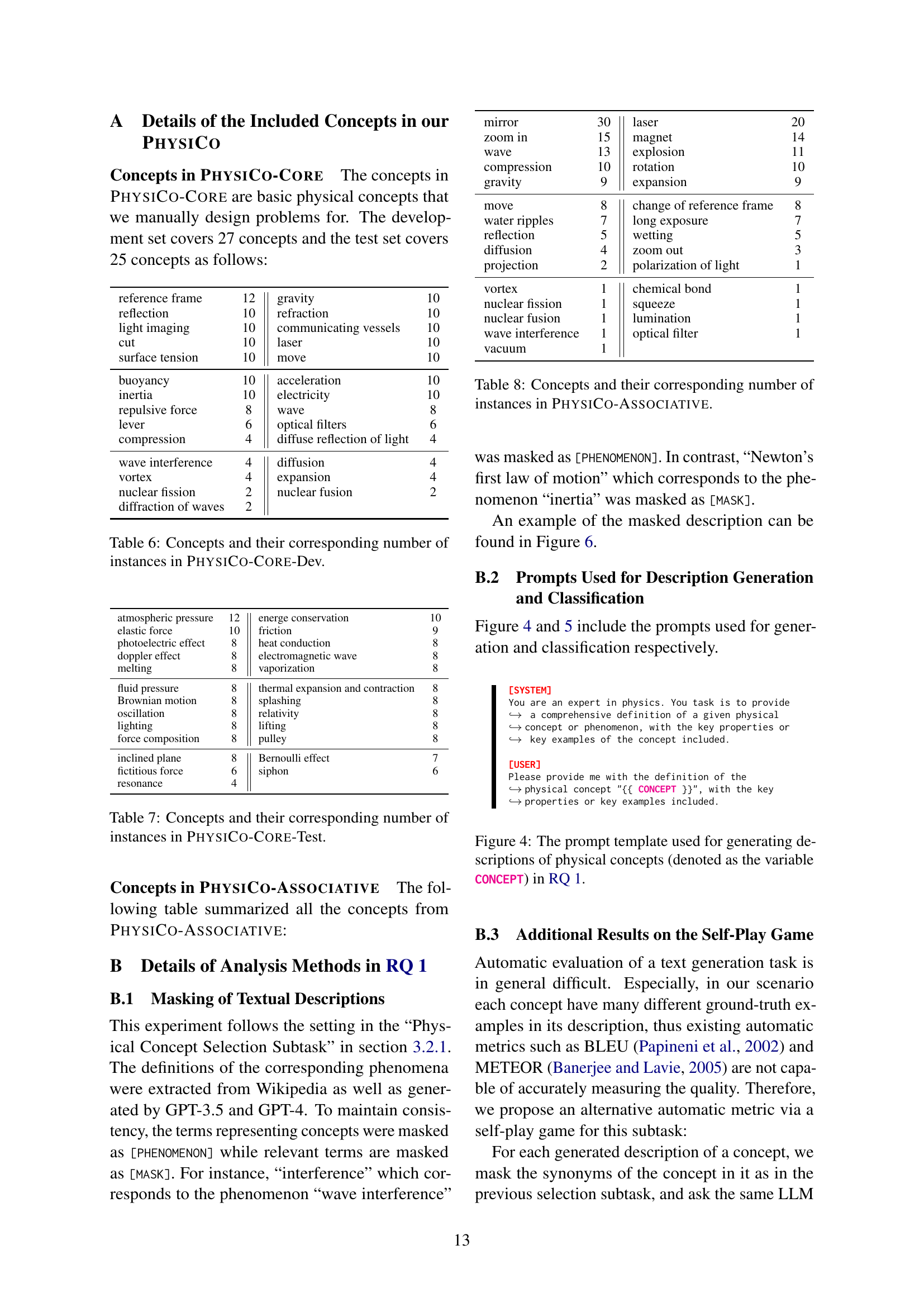

| GPT-4 | 42.9 | GPT-4o | 40.4 | Llama-3 | 22.1 |

| + ICL on Core | 40.0 | + ICL on Core | 37.1 | + SFT on Core | 20.9 |

🔼 This table lists the concepts used in the PHYSICO-CORE development dataset and the number of instances for each concept. The PHYSICO-CORE dataset focuses on basic physical concepts relevant to high school level physics. This table provides a summary of the data distribution within the development set, which is used for training and model development in the experiments.

read the caption

Table 6: Concepts and their corresponding number of instances in PhysiCo-Core-Dev.

| Mistral | Llama-3 | GPT-3.5 | GPT-4 | |

| Human | 92.6 | 100 | 100 | 100 |

| SP | 89.2 | 91.9 | 96.0 | 99.8 |

🔼 This table lists the physical concepts used in the PHYSICO-CORE-Test subset of the PHYSICO benchmark. For each concept, it shows the number of instances (examples) of that concept included in the test set. The PHYSICO benchmark is used to assess the ability of large language models (LLMs) to understand physical concepts. The CORE-Test set focuses on high-level understanding of the concepts, as opposed to simple memorization.

read the caption

Table 7: Concepts and their corresponding number of instances in PhysiCo-Core-Test.

Full paper#