TL;DR#

Automated fact-checking tools struggle to meet fact-checkers’ needs because they are built with little understanding of fact-checkers’ workflows and explanation requirements. Existing AI tools often fail to provide the specific, context-rich explanations that fact-checkers need to assess evidence, scrutinize outputs, and integrate automated tools into their work. The result is a disconnect between current AI technology and the practical demands of professional fact-checking.

This research addresses this gap by conducting semi-structured interviews with fact-checking professionals to understand their workflow and explanation needs. The findings reveal significant unmet explanation needs and highlight crucial criteria for effective explanations that include tracing the model’s reasoning, referencing specific evidence, and highlighting uncertainty. The study provides actionable recommendations for developing more user-friendly automated fact-checking tools that meet the practical demands of fact-checkers, bridging the gap between AI capabilities and real-world fact-checking practices. The study emphasizes the importance of human-centered design in the development of automated fact-checking systems.

Key Takeaways#

Why does it matter?#

This paper is relevant to AI researchers and HCI professionals working on explainable AI and fact-checking. It connects theoretical explainability to the practical needs of fact-checkers and offers recommendations for developing more effective automated tools. The findings highlight the importance of integrating user needs into the design process and of identifying the specific types of explanations required at different stages of the fact-checking workflow. The research addresses current limitations in automated fact-checking and points to ways of improving the design and usability of explainable AI systems.

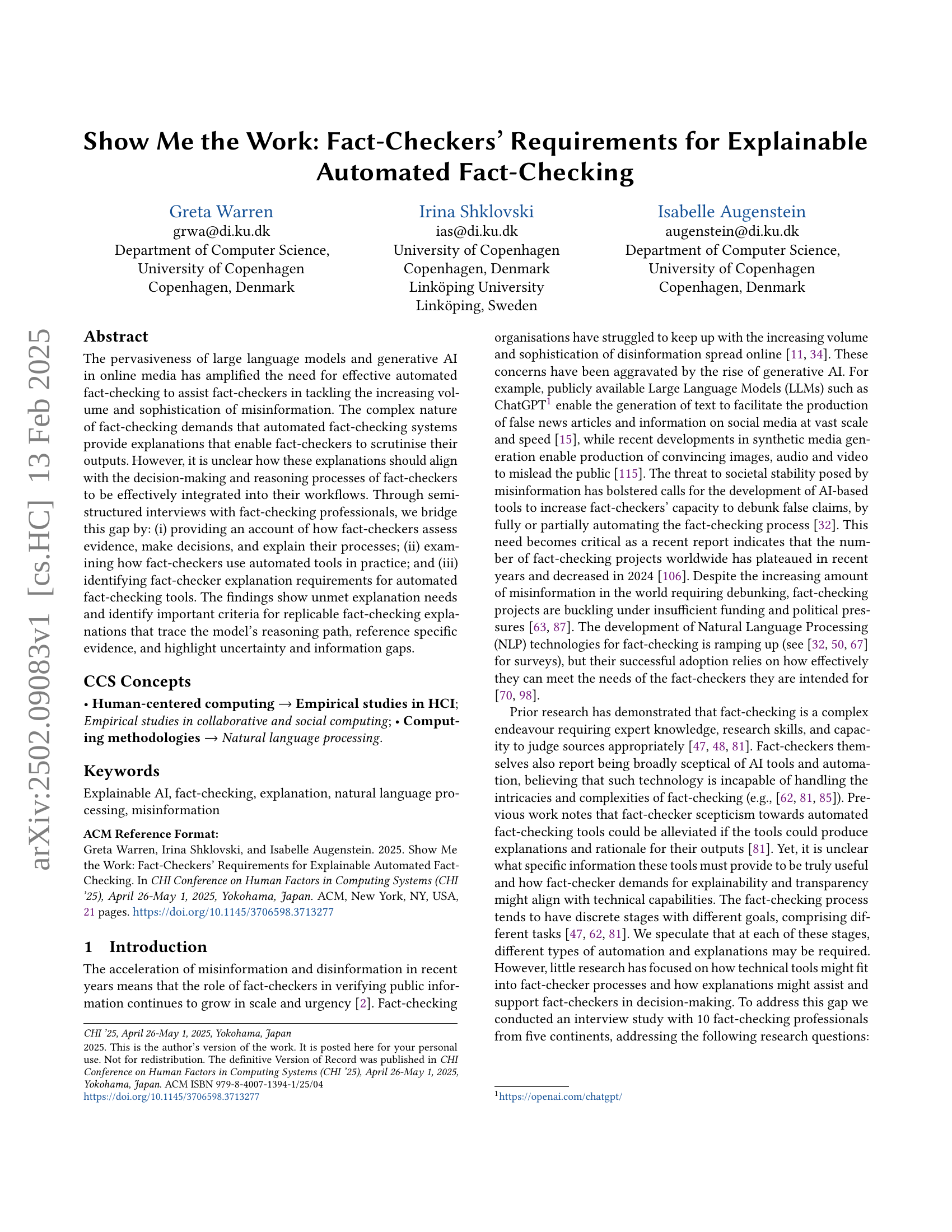

Visual Insights#

| Participant ID | Country | Occupation | Organisational Context | Fact-Checking Experience | Gender |
|---|---|---|---|---|---|
| P1 | Ukraine | Investigative Journalist | Freelance | 8 years | Female |
| P2 | Argentina | Fact-Checker | Independent | 4 years | Female |
| P3 | Poland | Fact-Checker | Independent | 4 years | Male |
| P4 | USA | Investigative Journalist & Trainer | Freelance | 12 years | Female |
| P5 | Poland | Fact-Checker | Independent | 5 years | Female |
| P6 | Ireland & USA | Fact-Checker & Project Manager | Independent | 4 years | Male |
| P7 | Poland | Director & Journalist | Independent | 4 years | Male |
| P8 | Zimbabwe | Fact-Checker | Independent | 2 years | Female |
| P9 | Nigeria | Investigative Journalist | Independent | 4 years | Male |
| P10 | India | Fact-Checker | Independent | 6 years | Male |

🔼 This table presents the demographic information of the ten fact-checking professionals who participated in the study. For each participant, identified by a code (P1-P10), the table lists their country, occupation (e.g., Fact-Checker, Investigative Journalist), organisational context (freelance or independent), fact-checking experience in years, and gender.

Table 1. Demographics of the interview participants

In-depth insights#

Fact-Checker Needs#

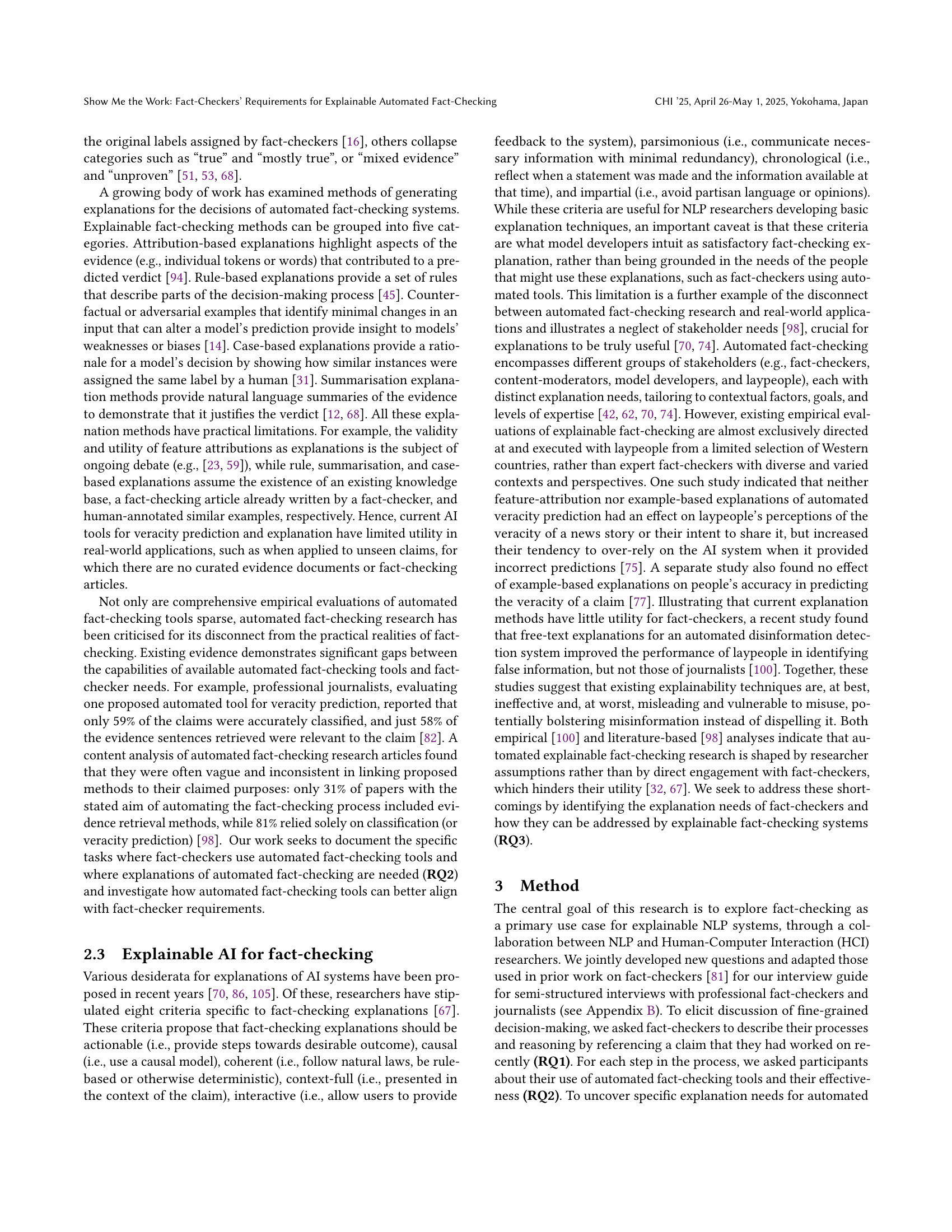

Fact-checkers require tools offering explainability and transparency, not merely binary true/false verdicts. They need systems that trace the model’s reasoning, referencing specific evidence and highlighting uncertainties or information gaps. This aligns with their need to build replicable, verifiable fact-checks, communicating complexity clearly to diverse audiences. Automated tools should support, not replace, the human element, integrating seamlessly into existing workflows. Explainability must be tailored to specific fact-checking tasks, providing different information at each stage (claim detection, evidence retrieval, verdict decision, communication). The systems should address biases inherent in both data and algorithms, ensuring fair and impartial outputs that build, not erode, public trust. Furthermore, ethical considerations are paramount, particularly concerning data sources and potential biases embedded within automated systems.
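The explanation criteria above (a traceable reasoning path, specific evidence, and flagged uncertainty, tailored to a workflow stage) can be pictured as a simple record. The following is a minimal sketch and not something the paper specifies: the class names `Stage`, `Evidence`, and `Explanation`, their fields, and the `unmet_criteria` helper are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    """The four workflow stages named in the summary above."""
    CLAIM_DETECTION = "claim detection"
    EVIDENCE_RETRIEVAL = "evidence retrieval"
    VERDICT_DECISION = "verdict decision"
    COMMUNICATION = "communication"


@dataclass
class Evidence:
    """Hypothetical evidence item; field names are assumptions."""
    source: str       # where the evidence came from
    excerpt: str      # the specific passage the system relied on
    is_primary: bool  # primary source (True) or secondary source (False)


@dataclass
class Explanation:
    """Hypothetical explanation record attached to one system output."""
    stage: Stage
    reasoning_steps: list[str] = field(default_factory=list)
    evidence: list[Evidence] = field(default_factory=list)
    uncertainty_notes: list[str] = field(default_factory=list)

    def unmet_criteria(self) -> list[str]:
        """Return which of the three explanation criteria are missing."""
        missing = []
        if not self.reasoning_steps:
            missing.append("reasoning trace")
        if not self.evidence:
            missing.append("specific evidence")
        if not self.uncertainty_notes:
            missing.append("uncertainty")
        return missing


# Usage: an explanation with reasoning and evidence but no uncertainty notes.
example = Explanation(
    stage=Stage.VERDICT_DECISION,
    reasoning_steps=["Compared the claimed figure with the cited report."],
    evidence=[Evidence("illustrative report", "illustrative excerpt", True)],
)
print(example.unmet_criteria())  # ['uncertainty']
```

A record like this makes it easy to check, per output, whether the system has given a fact-checker enough to scrutinize it; the actual content requirements would need to come from fact-checkers themselves.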

AI Tool Evaluation#

In evaluating AI tools for fact-checking, a multifaceted approach is crucial. Accuracy is paramount, but equally important is evaluating the explainability of the tool’s decisions. Fact-checkers need transparency to understand how the AI arrived at its conclusions, allowing for verification and validation. Reliability should also be assessed, considering the tool’s consistency across different claims and datasets. The efficiency of the tool, in terms of speed and resource usage, is a practical concern for fact-checkers. Finally, the user-friendliness of the tool’s interface is vital for successful integration into their workflows. A comprehensive evaluation, therefore, necessitates testing across these key dimensions with a focus on how the tool supports, rather than replaces, human judgment in the fact-checking process.
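Two of the dimensions above, accuracy and consistency across datasets, are straightforward to quantify. The sketch below is one possible way to do so under stated assumptions, not an evaluation protocol from the paper: the `(dataset, predicted, gold)` record layout and the function names `accuracy_by_dataset` and `accuracy_spread` are hypothetical, and explainability and usability are deliberately left out because they call for human assessment.

```python
from collections import defaultdict


def accuracy_by_dataset(records):
    """Compute verdict accuracy separately for each dataset.

    `records` is an iterable of (dataset, predicted, gold) tuples,
    an assumed layout used only for this illustration.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for dataset, predicted, gold in records:
        totals[dataset] += 1
        correct[dataset] += int(predicted == gold)
    return {name: correct[name] / totals[name] for name in totals}


def accuracy_spread(per_dataset):
    """Gap between the best and worst dataset accuracy.

    A small spread suggests consistent behaviour across datasets;
    it is a rough proxy for reliability, not a full measure of it.
    """
    if not per_dataset:
        raise ValueError("no datasets to compare")
    return max(per_dataset.values()) - min(per_dataset.values())


# Usage with made-up records, purely for illustration.
records = [
    ("politics", "true", "true"),
    ("politics", "false", "true"),
    ("health", "true", "true"),
]
per_dataset = accuracy_by_dataset(records)
print(per_dataset)                   # {'politics': 0.5, 'health': 1.0}
print(accuracy_spread(per_dataset))  # 0.5
```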

Explainable AI Gaps#

“Explainable AI gaps” in automated fact-checking refers to the disconnect between what current explainable AI (XAI) systems can do and what human fact-checkers actually need. Existing XAI methods often fail to provide explanations that are sufficiently detailed, actionable, and trustworthy for professional fact-checkers. The gaps appear in several areas: a lack of focus on primary sources, reliance on secondary sources that can be biased, insufficient explanation of the reasoning pathways AI models follow, and a failure to convey uncertainty and information gaps in a human-understandable way. Bridging these gaps is necessary for fostering trust and for integrating XAI tools effectively into fact-checking workflows. Future research should prioritize human-centered approaches to XAI development, collaborating closely with fact-checkers to understand their precise information needs and to develop more intuitive and robust explanation methods. Addressing these gaps is essential for building AI systems that augment, rather than replace, the role of human fact-checkers in maintaining the integrity of public information.

Methodology: Interviews#

A robust methodology section detailing the interview process would be crucial for evaluating the research’s validity. It should specify the participant recruitment strategy, clearly outlining how researchers identified and selected fact-checkers from diverse backgrounds and geographical locations to ensure a representative sample. The interview protocol should be meticulously described, including the type of questions asked (open-ended, structured, or a mix), the duration of each interview, and any methods used to ensure inter-rater reliability if multiple interviewers were involved. The analysis process is also key; a detailed description of the data analysis techniques employed (e.g., thematic analysis, grounded theory) and the steps taken to ensure rigor and transparency in identifying and interpreting themes and patterns from the interview data is essential. Finally, the section must address potential limitations of the interview approach, acknowledging factors that might influence the validity of the findings (e.g., sampling bias, interviewer bias, social desirability bias), and proposing strategies to mitigate these limitations. A strong methodology statement will strengthen the overall credibility of the research.

Future Research#

Future research should prioritize a human-centered approach, focusing on integrating automated fact-checking tools seamlessly into fact-checkers’ workflows. This involves developing explainable AI methods that align with fact-checkers’ reasoning processes, providing detailed explanations that trace the model’s steps, justify its decisions, and highlight potential biases. Further investigation is needed into handling multilingual and multimodal data, given that misinformation often targets diverse audiences and formats. Research should explore ways to address ethical concerns, including bias mitigation and transparency about data sources and model training, which are crucial for building trust and ensuring responsible AI development. Finally, evaluating automated systems’ impact on fact-checkers’ efficiency and user experience through rigorous empirical studies is essential for determining the actual value these tools bring to the fight against misinformation.

More visual insights#

More on tables

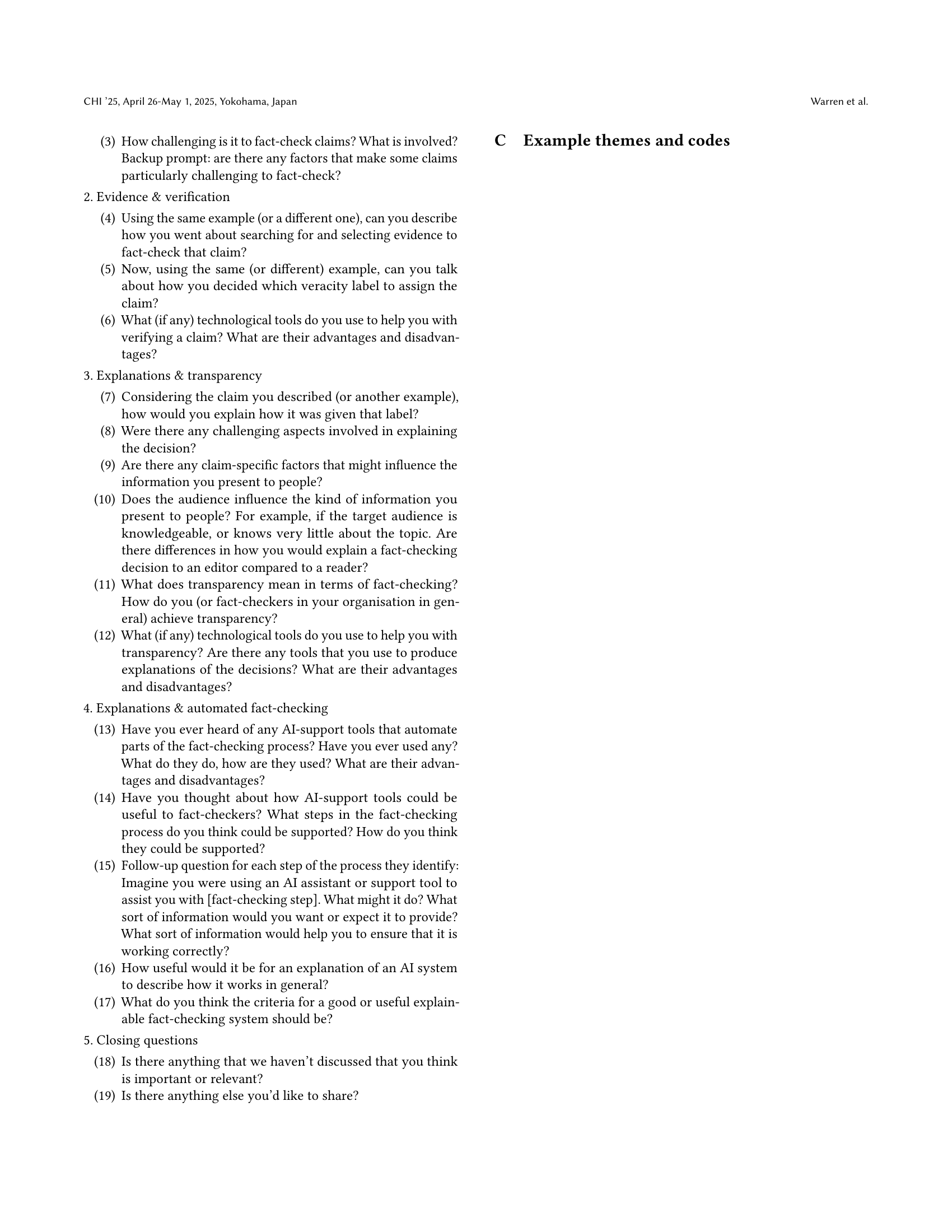

🔼 This table presents the key themes that emerged from the interviews regarding the three research questions. Each theme is categorized by its relevance to a specific research question (RQ1, RQ2, or RQ3). The number in parentheses after each theme indicates how many of the ten participants mentioned that specific theme during the interviews.

Table 2. Key themes relevant to each research question, with the number of participants who mentioned each theme in parentheses.

🔼 This table presents key themes, subthemes, and example codes identified through the analysis of interview transcripts. It organizes findings related to three research questions (RQ1-RQ3) focusing on fact-checkers’ decision-making processes, tool usage, and explanation requirements for automated fact-checking systems. Each theme represents a major finding, broken down into subthemes that provide more detail. The example codes showcase specific instances from the interview data that support these themes and subthemes, offering concrete examples of fact-checkers’ perspectives.

Table 3. Example themes, subthemes and codes developed from analysis of interview transcripts
