TL;DR#

Large Language Models (LLMs) often struggle with complex reasoning tasks. Traditional methods like chain-of-thought prompting show some promise but are limited. This necessitates improved techniques to fully exploit LLM reasoning potential.

The paper introduces SQUARE (Sequential Question Answering Reasoning Engine), a novel prompting technique. SQUARE guides LLMs to generate and answer multiple sub-questions before tackling the main query, improving reasoning by systematically decomposing complex queries into smaller, manageable steps. Evaluations on several datasets show SQUARE’s superior performance over existing methods, demonstrating the effectiveness of its self-interrogation paradigm.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working with LLMs because it introduces a novel prompting technique to significantly improve reasoning capabilities. SQUARE’s effectiveness across multiple datasets and models, along with its publicly available code, makes it readily applicable and promotes further research into advanced prompting strategies and self-interrogation paradigms.

Visual Insights#

🔼 The figure illustrates the SQUARE method’s operation. It shows how SQUARE prompts a language model to generate multiple question-answer pairs (N) related to the main query before answering it. This approach builds context and enables the model to explore different facets of the topic more thoroughly, enhancing its ability to answer complex questions accurately.

Figure 1: The SQuARE method prompts the model to generate N question-answer pairs about the topic and then respond to the original query, having established additional context.

| You are a helpful question answerer who can provide an answer given a question and relevant context. |

| Generate {N} questions based on the given question and context, and shortly answer them. |

| Finally, provide an answer to the original question using what you learned from answering the questions you created. The answer should be a short span, just a few words. |

🔼 This table presents the main prompt used for the SQUARE technique. The prompt instructs the language model to act as a question answerer, generating a specified number of sub-questions based on the given question and context. It then requires the model to answer these sub-questions concisely before ultimately providing a brief answer to the original question, leveraging insights gained from the intermediate steps.

Table 1: Main prompt for the SQuARE technique.
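
As a rough illustration of how the template in Table 1 might be filled in, the sketch below substitutes a chosen N into the prompt text and pairs it with a question and its context. The function name `build_square_messages`, the chat-message layout, and the way the context is prepended are assumptions made for this example, not the authors' released code.

```python
# Prompt text copied from Table 1; {N} is the number of sub-questions.
SQUARE_TEMPLATE = (
    "You are a helpful question answerer who can provide an answer "
    "given a question and relevant context.\n"
    "Generate {N} questions based on the given question and context, "
    "and shortly answer them.\n"
    "Finally, provide an answer to the original question using what you "
    "learned from answering the questions you created. "
    "The answer should be a short span, just a few words."
)


def build_square_messages(question: str, context: str, n: int = 3) -> list:
    """Return chat-style messages for one SQuARE query (hypothetical layout)."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    system_prompt = SQUARE_TEMPLATE.format(N=n)
    # Assumption: retrieved context is simply placed before the question.
    user_prompt = f"Context: {context}\nQuestion: {question}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]
```

The resulting list can be passed to any chat-style LLM client that accepts role/content messages; changing `n` corresponds to the SQuARE-3, SQuARE-5, and SQuARE-10 settings reported later.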

In-depth insights#

SQUARE: Reasoning Engine#

The SQuARE reasoning engine, as described in the paper, is a prompting technique designed to improve the reasoning of Large Language Models (LLMs). It implements a self-interrogation paradigm: the model is prompted to generate and answer several auxiliary questions before tackling the main query. Decomposing a complex question in this way lets the model explore more aspects of the topic before committing to an answer. Unlike traditional chain-of-thought prompting, which asks for step-by-step reasoning, SQuARE asks explicitly for question-answer pairs about the query and its context. In evaluations on TriviaQA, HotpotQA, and ASQA with Llama-3.2 3B, Llama-3.1 8B, and GPT-4o, SQuARE outperforms the baseline, RAG, CoT, and RaR settings in most configurations. The exceptions are GPT-4o on HotpotQA and ASQA, where RaR and CoT respectively score slightly higher (Table 2).

Self-Interrogation Paradigm#

The “Self-Interrogation Paradigm” presents a novel approach to enhancing Large Language Model (LLM) reasoning. Instead of relying solely on external prompts, this paradigm encourages the LLM to engage in a process of self-questioning. This involves prompting the model to generate and subsequently answer intermediate questions related to the main query. This iterative process allows for a more thorough exploration of the problem space, potentially uncovering hidden aspects or nuances that would be missed in a simpler chain-of-thought approach. The key benefit lies in the LLM’s active participation in shaping its own reasoning path, leading to more robust and comprehensive solutions, particularly in complex tasks. The efficacy of this approach relies on the model’s ability to generate relevant and insightful auxiliary questions, which may require careful prompt engineering and potentially further fine-tuning to optimize the model’s capacity for self-directed inquiry. Further research could explore the application of this paradigm to other LLM tasks and the development of techniques to guide and evaluate the self-interrogation process more effectively. Scalability and computational cost will also need to be addressed given the inherent iterative nature of the process.

LLM Reasoning Enhancements#

LLM reasoning enhancements are a critical area of research, focusing on improving the capacity of large language models to perform complex reasoning tasks. Current methods often fall short, particularly when dealing with multi-step reasoning or problems requiring external knowledge. Strategies like chain-of-thought prompting have shown promise, guiding the LLM through intermediate steps, but further advancements are needed. Self-interrogation techniques, where the LLM generates and answers its own auxiliary questions, offer a way to explore the problem space more thoroughly. Systematic decomposition of queries is key to unlocking improved reasoning capabilities, allowing the model to tackle complex problems more effectively. However, challenges remain in balancing thoroughness with computational efficiency, particularly for resource-intensive models. Furthermore, robust evaluation methodologies are crucial to objectively assess the effectiveness of different enhancement strategies, considering factors like dataset characteristics and model architecture. Future research should focus on developing more adaptive and efficient prompting techniques and better methods to analyze and validate LLM reasoning outputs. Combining techniques, such as self-interrogation with external knowledge retrieval, and exploring different methods for aggregating sub-question answers are promising avenues for future investigation.

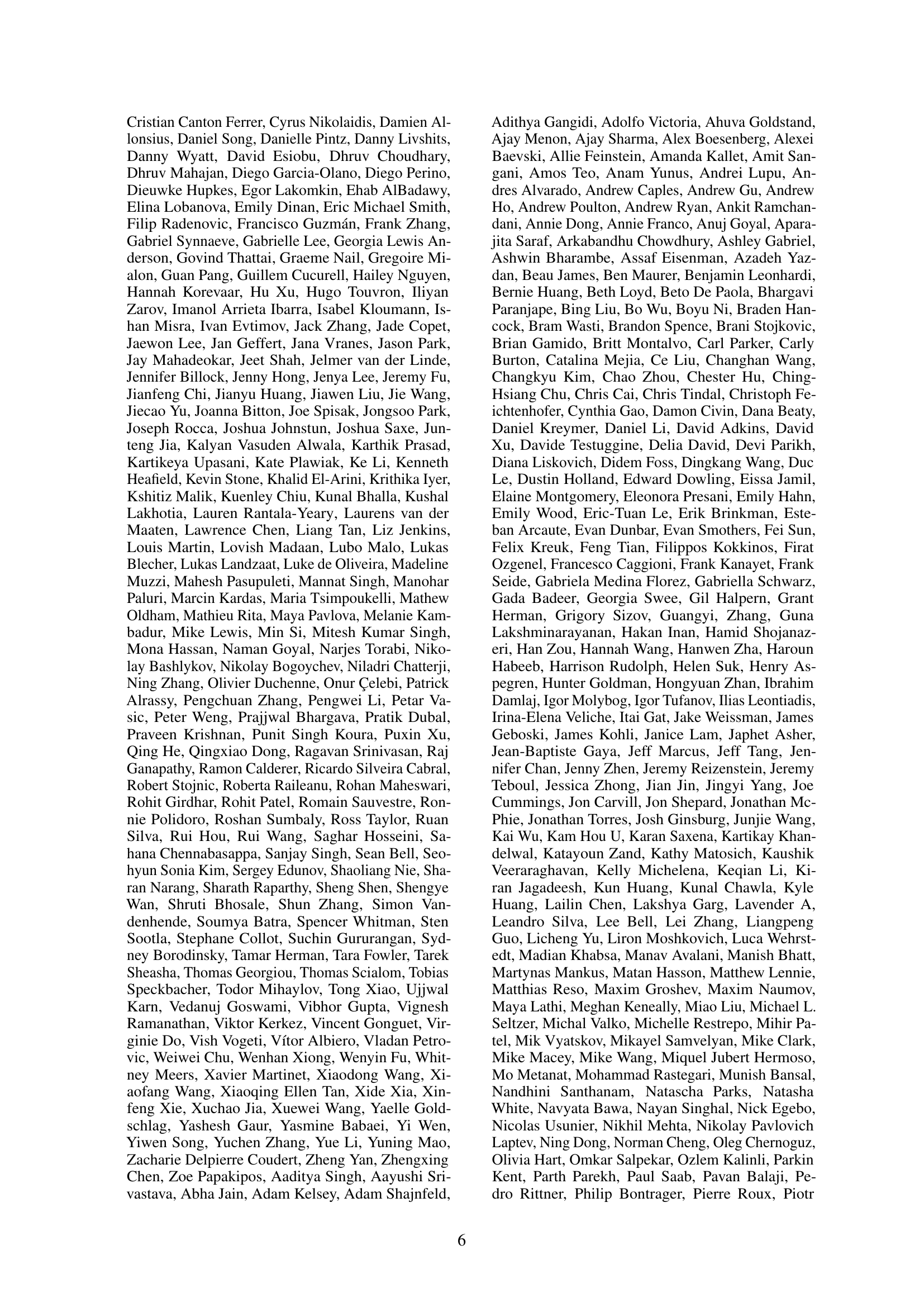

Ablation Study: Insights#

An ablation study systematically removes or varies components of a model or system to assess their individual contributions. For a prompting technique such as SQuARE, this might involve removing the self-interrogation step, varying the number of sub-questions generated, or removing the few-shot examples provided in the prompt. Insights from such a study highlight the relative importance of each component. For example, a significant performance drop after removing the self-interrogation step would indicate its critical role in enhancing reasoning. Similarly, varying the number of sub-questions reveals the level of decomposition that best balances accuracy and computational cost. Removing the few-shot examples shows how well the model follows the prompt without explicit demonstrations. Overall, the ablation study gives a granular view of which components drive the method’s results, paving the way for further improvements and optimization.

Future Research Needs#

Future research should prioritize enhancing the adaptability of SQUARE to diverse query complexities and domains. Determining the optimal number of sub-questions dynamically is crucial, avoiding redundancy or insufficient exploration. The generalizability of SQUARE across various question types and datasets beyond those initially tested needs further investigation. Addressing the computational cost associated with generating and answering multiple sub-questions is vital for real-world applications, potentially exploring efficient question generation mechanisms or parallel processing techniques. Finally, mitigating potential ethical concerns such as the generation of misleading or harmful information is paramount, requiring safeguards to ensure accuracy and fairness in responses. Rigorous evaluation across a broader range of LLM architectures is essential to ascertain the technique’s effectiveness and scalability.

More visual insights#

More on tables

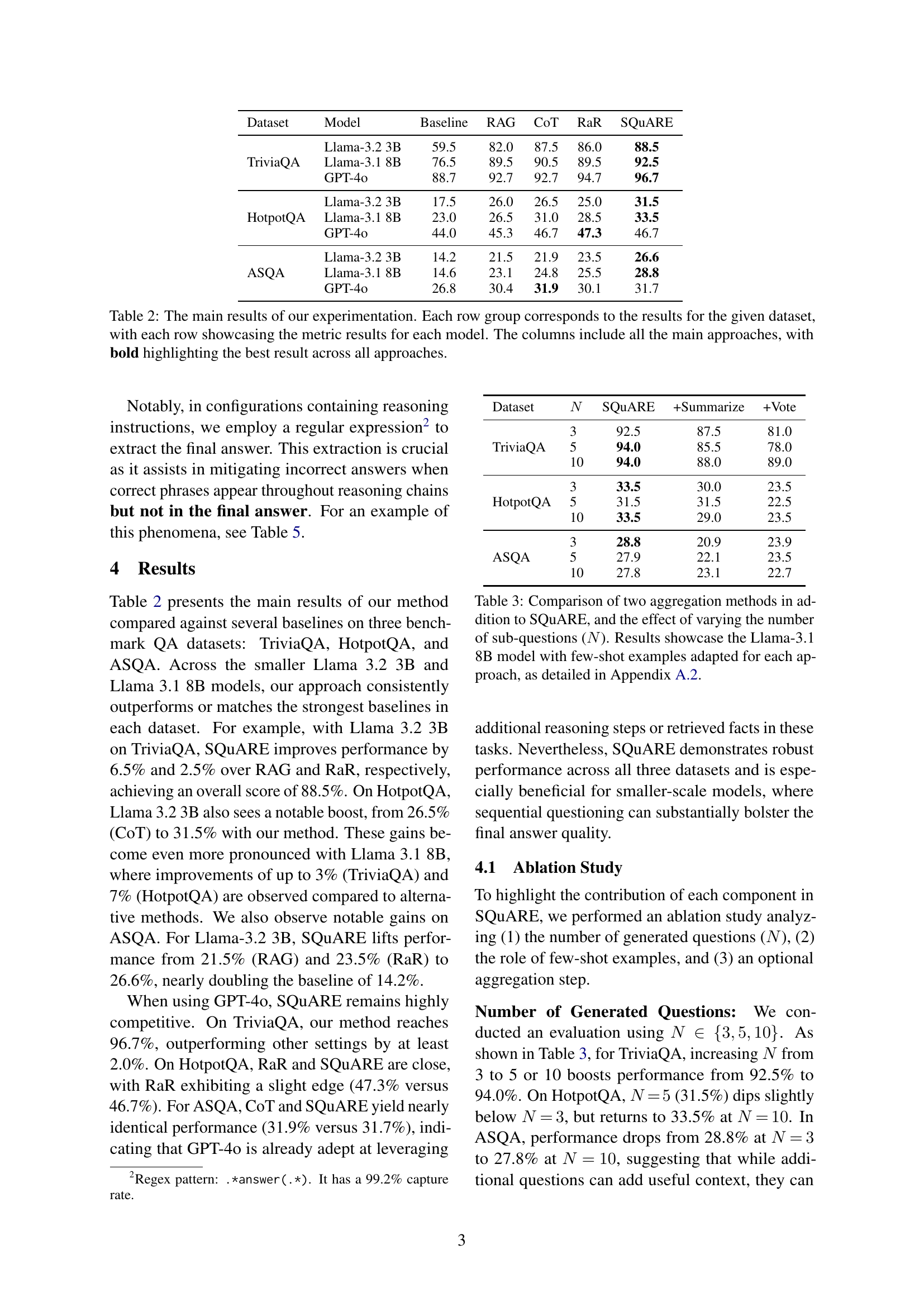

| Dataset | Model | Baseline | RAG | CoT | RaR | SQuARE |
|---|---|---|---|---|---|---|
| TriviaQA | Llama-3.2 3B | 59.5 | 82.0 | 87.5 | 86.0 | 88.5 |
| | Llama-3.1 8B | 76.5 | 89.5 | 90.5 | 89.5 | 92.5 |
| | GPT-4o | 88.7 | 92.7 | 92.7 | 94.7 | 96.7 |
| HotpotQA | Llama-3.2 3B | 17.5 | 26.0 | 26.5 | 25.0 | 31.5 |
| | Llama-3.1 8B | 23.0 | 26.5 | 31.0 | 28.5 | 33.5 |
| | GPT-4o | 44.0 | 45.3 | 46.7 | 47.3 | 46.7 |
| ASQA | Llama-3.2 3B | 14.2 | 21.5 | 21.9 | 23.5 | 26.6 |
| | Llama-3.1 8B | 14.6 | 23.1 | 24.8 | 25.5 | 28.8 |
| | GPT-4o | 26.8 | 30.4 | 31.9 | 30.1 | 31.7 |

🔼 This table compares question answering methods on three benchmark datasets: TriviaQA, HotpotQA, and ASQA. Results are shown for three large language models: Llama-3.2 3B, Llama-3.1 8B, and GPT-4o. Each row reports one model’s scores on one dataset across all techniques: a baseline approach, Retrieval-Augmented Generation (RAG), Chain-of-Thought (CoT) prompting, Rephrase and Respond (RaR), and the proposed SQuARE method. In the paper, the best-performing method for each model and dataset is highlighted in bold.

Table 2: The main results of our experimentation. Each row group corresponds to the results for the given dataset, with each row showcasing the metric results for each model. The columns include all the main approaches, with bold highlighting the best result across all approaches.
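
To make the comparison in Table 2 concrete, the following sketch counts in how many of the nine dataset/model rows SQuARE is the single best method. The scores are copied from the table; the helper names `best_methods` and `square_win_count` are hypothetical and exist only for this illustration.

```python
# Column order of the scores below, as in Table 2.
METHODS = ("Baseline", "RAG", "CoT", "RaR", "SQuARE")

# Scores copied from Table 2, keyed by (dataset, model).
TABLE_2 = {
    ("TriviaQA", "Llama-3.2 3B"): (59.5, 82.0, 87.5, 86.0, 88.5),
    ("TriviaQA", "Llama-3.1 8B"): (76.5, 89.5, 90.5, 89.5, 92.5),
    ("TriviaQA", "GPT-4o"): (88.7, 92.7, 92.7, 94.7, 96.7),
    ("HotpotQA", "Llama-3.2 3B"): (17.5, 26.0, 26.5, 25.0, 31.5),
    ("HotpotQA", "Llama-3.1 8B"): (23.0, 26.5, 31.0, 28.5, 33.5),
    ("HotpotQA", "GPT-4o"): (44.0, 45.3, 46.7, 47.3, 46.7),
    ("ASQA", "Llama-3.2 3B"): (14.2, 21.5, 21.9, 23.5, 26.6),
    ("ASQA", "Llama-3.1 8B"): (14.6, 23.1, 24.8, 25.5, 28.8),
    ("ASQA", "GPT-4o"): (26.8, 30.4, 31.9, 30.1, 31.7),
}


def best_methods(scores):
    """Return the names of all methods that share the top score in a row."""
    top = max(scores)
    return [name for name, score in zip(METHODS, scores) if score == top]


def square_win_count(table):
    """Count rows where SQuARE alone has the highest score."""
    return sum(best_methods(scores) == ["SQuARE"] for scores in table.values())


if __name__ == "__main__":
    print(square_win_count(TABLE_2), "of", len(TABLE_2))  # prints "7 of 9"
```

The two rows where SQuARE is not on top are both GPT-4o rows: RaR leads on HotpotQA (47.3 vs. 46.7) and CoT leads on ASQA (31.9 vs. 31.7).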

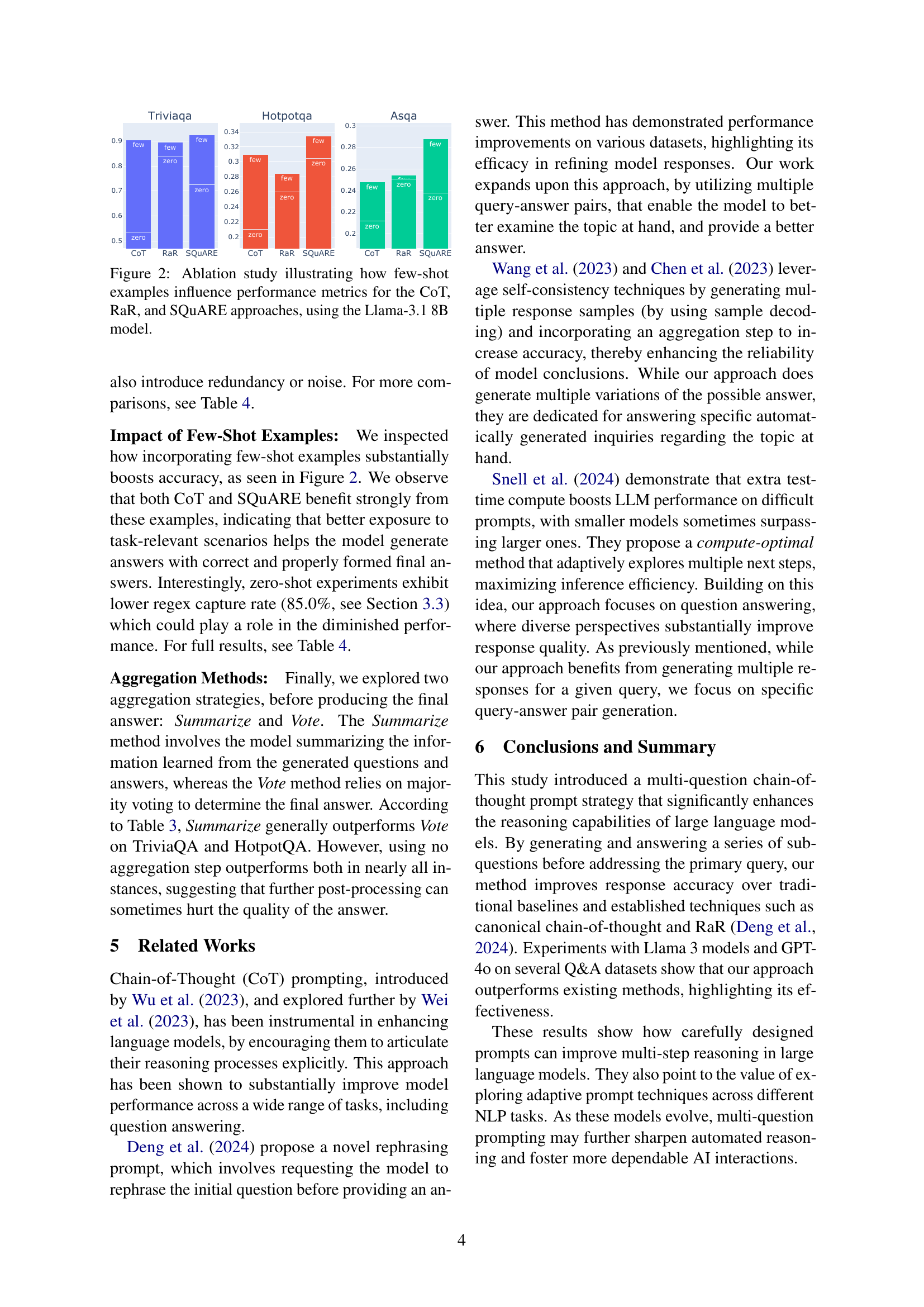

| Dataset | N | SQuARE | +Summarize | +Vote |
|---|---|---|---|---|
| TriviaQA | 3 | 92.5 | 87.5 | 81.0 |
| | 5 | 94.0 | 85.5 | 78.0 |
| | 10 | 94.0 | 88.0 | 89.0 |
| HotpotQA | 3 | 33.5 | 30.0 | 23.5 |
| | 5 | 31.5 | 31.5 | 22.5 |
| | 10 | 33.5 | 29.0 | 23.5 |
| ASQA | 3 | 28.8 | 20.9 | 23.9 |
| | 5 | 27.9 | 22.1 | 23.5 |
| | 10 | 27.8 | 23.1 | 22.7 |

🔼 This table compares three methods for aggregating the results of SQUARE: using no aggregation, summarizing the answers, and voting on the answers. It also shows how the performance of the Llama-3.1 8B model changes when varying the number of sub-questions (N) generated by the SQUARE method. The results are based on experiments using a few-shot learning approach with adapted examples, as detailed in Appendix A.2 of the paper.

Table 3: Comparison of two aggregation methods in addition to SQuARE, and the effect of varying the number of sub-questions (N). Results showcase the Llama-3.1 8B model with few-shot examples adapted for each approach, as detailed in Section A.2.

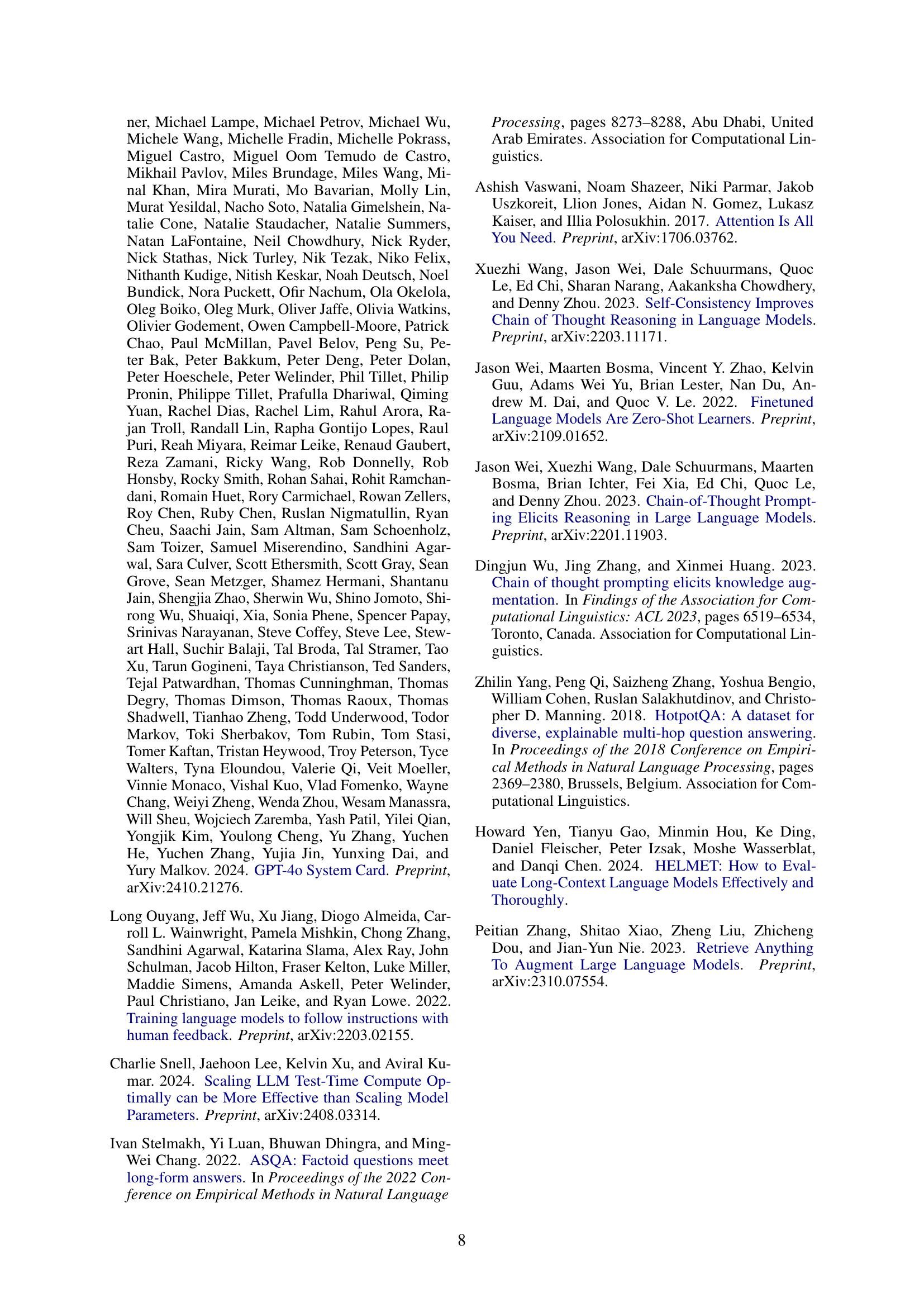

| Dataset | Model | Baseline | RAG | CoT | RaR | SQuARE-3 | SQuARE-5 | SQuARE-10 |
|---|---|---|---|---|---|---|---|---|
| TriviaQA | Llama-3.2 3B | 59.5 | 82.0 | 57.0 / 87.5 | 67.5 / 86.0 | 89.5 / 88.5 | 89.5 / 89.0 | 86.0 / 86.5 |
| | Llama-3.1 8B | 76.5 | 89.5 | 53.5 / 90.5 | 84.0 / 89.5 | 72.5 / 92.5 | 89.0 / 94.0 | 76.5 / 94.0 |
| | GPT-4o | 88.7 | 92.7 | 01.3 / 92.7 | 87.3 / 94.7 | 84.7 / 96.7 | 84.0 / 96.7 | 86.0 / 95.3 |
| HotpotQA | Llama-3.2 3B | 17.5 | 26.0 | 23.0 / 26.5 | 25.0 / 25.0 | 31.0 / 31.5 | 28.0 / 29.5 | 30.5 / 28.0 |
| | Llama-3.1 8B | 23.0 | 26.5 | 21.0 / 31.0 | 26.0 / 28.5 | 30.5 / 33.5 | 33.5 / 31.5 | 29.5 / 33.5 |
| | GPT-4o | 44.0 | 45.3 | 06.7 / 46.7 | 40.0 / 47.3 | 45.3 / 46.7 | 44.7 / 47.3 | 40.0 / 46.0 |
| ASQA | Llama-3.2 3B | 14.2 | 21.5 | 24.4 / 21.9 | 18.3 / 23.5 | 24.2 / 26.6 | 24.8 / 27.9 | 25.6 / 27.4 |
| | Llama-3.1 8B | 14.6 | 23.1 | 21.2 / 24.8 | 25.1 / 25.5 | 23.8 / 28.8 | 29.1 / 27.9 | 30.0 / 27.8 |
| | GPT-4o | 26.8 | 30.4 | 02.1 / 31.9 | 20.7 / 30.1 | 24.8 / 31.7 | 30.2 / 29.4 | 29.6 / 31.1 |

🔼 This table summarizes the experimental results across three question-answering datasets (TriviaQA, HotpotQA, and ASQA) using three language models (Llama-3.2 3B, Llama-3.1 8B, and GPT-4o). For each dataset-model combination, the table compares seven configurations: a baseline, RAG (Retrieval-Augmented Generation), CoT (Chain-of-Thought), RaR (Rephrase and Respond), and three variations of the SQuARE method with different numbers of generated questions (N=3, N=5, and N=10). Where applicable, cells report both zero-shot (no prior examples) and few-shot (with example queries) results in the form zero-shot / few-shot, allowing a detailed comparison of prompting techniques across model sizes and datasets.

Table 4: Summary of results; comparing seven configurations on three datasets, using three models. When applicable, zero-shot/few-shot results are shown.

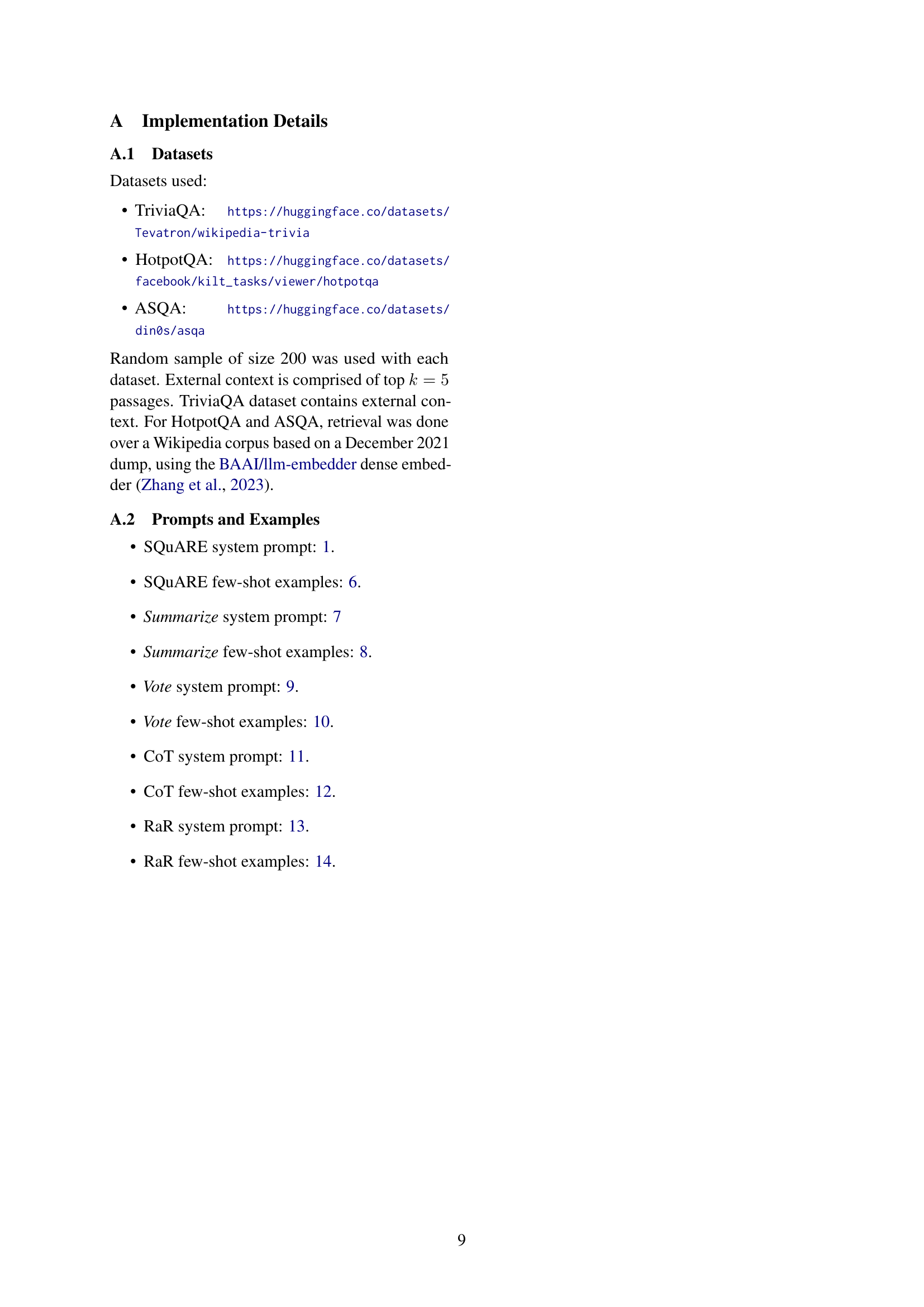

| Question: In what month is the annual documentary film festival, that is presented by the fortnightly published British journal of literary essays, held? |

|---|

| The annual documentary film festival, presented by the fortnightly published British journal, is the Open City Documentary Festival. |

| Let’s break down the context: |

| The journal is described as a fortnightly published British journal of literary essays. |

| The London Review of Books is mentioned as a publication associated with the London International Documentary Festival, but it is a monthly publication, not fortnightly. |

| The Open City Documentary Festival is not mentioned in the context as being associated with a specific journal, but it is a documentary film festival that takes place in London. |

| However, the Open City Documentary Festival is not the only festival that matches the description. The London Review of Bookseller is a monthly publication, but the journal described in the question is fortnightly. The London Review of Books is not the journal described in the question. |

| The Open City Documentary Festival is not the only festival that matches the description, but it is the only festival that is associated with a journal that is published twice a year, which could be interpreted as fortnightly. |

| However, the London Independent Film Festival is not the correct answer because it is held in April, and the journal is not mentioned in the context. |

| The London International Documentary Festival is held in March and April, but the journal is not mentioned in the context. |

| The Open City Documentary Festival is the only festival that matches the description of being associated with a journal that is published twice a year, which could be interpreted as fortnightly. |

| Answer: Open City. |

🔼 This table presents a verbatim example of an incorrect answer generated by a Llama-3.1 8B model using the chain-of-thought (CoT) prompting technique. The question is from the HotpotQA dataset. The correct answer, ‘March and April’, is present within the model’s reasoning steps. However, the model’s final answer is incorrect (‘Open City’). The discrepancy highlights the challenge of reliably extracting correct answers from the model’s intermediate reasoning steps and underscores the need for improved answer extraction methods.

Table 5: Generated answer (verbatim) for a question from the HotpotQA dataset, produced by the Llama-3.1 8B model using the CoT technique with two few-shot examples. The correct answer is March and April, which appears in the reasoning chain, probably because this fact is present in the relevant external context provided to the model (not shown here). The model’s final answer is wrong, which shows the need to extract answers correctly for better analysis and research.
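
One simple way to pull a final answer out of generations like the one in Table 5 is to take the last line that starts with "Answer:". The sketch below does that with a regular expression. It is an assumption for illustration, not the paper's evaluation code, and the function name `extract_final_answer` is hypothetical. Taking the last match matters for SQuARE outputs, where each sub-question also has its own "Answer:" line.

```python
import re
from typing import Optional

# Matches a line of the form "Answer: <text>", case-insensitively.
ANSWER_LINE = re.compile(r"^\s*answer\s*:\s*(.+?)\s*$", re.IGNORECASE | re.MULTILINE)


def extract_final_answer(generation: str) -> Optional[str]:
    """Return the text of the last 'Answer:' line, or None if there is none."""
    matches = ANSWER_LINE.findall(generation)
    if not matches:
        return None
    # Drop a trailing period so "Open City." and "Open City" compare equal.
    return matches[-1].strip().rstrip(".").strip()
```

Such a rule only recovers what the model stated as its final answer. In the Table 5 example it would return "Open City", so it does not repair cases where the correct span appears only inside the reasoning chain.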

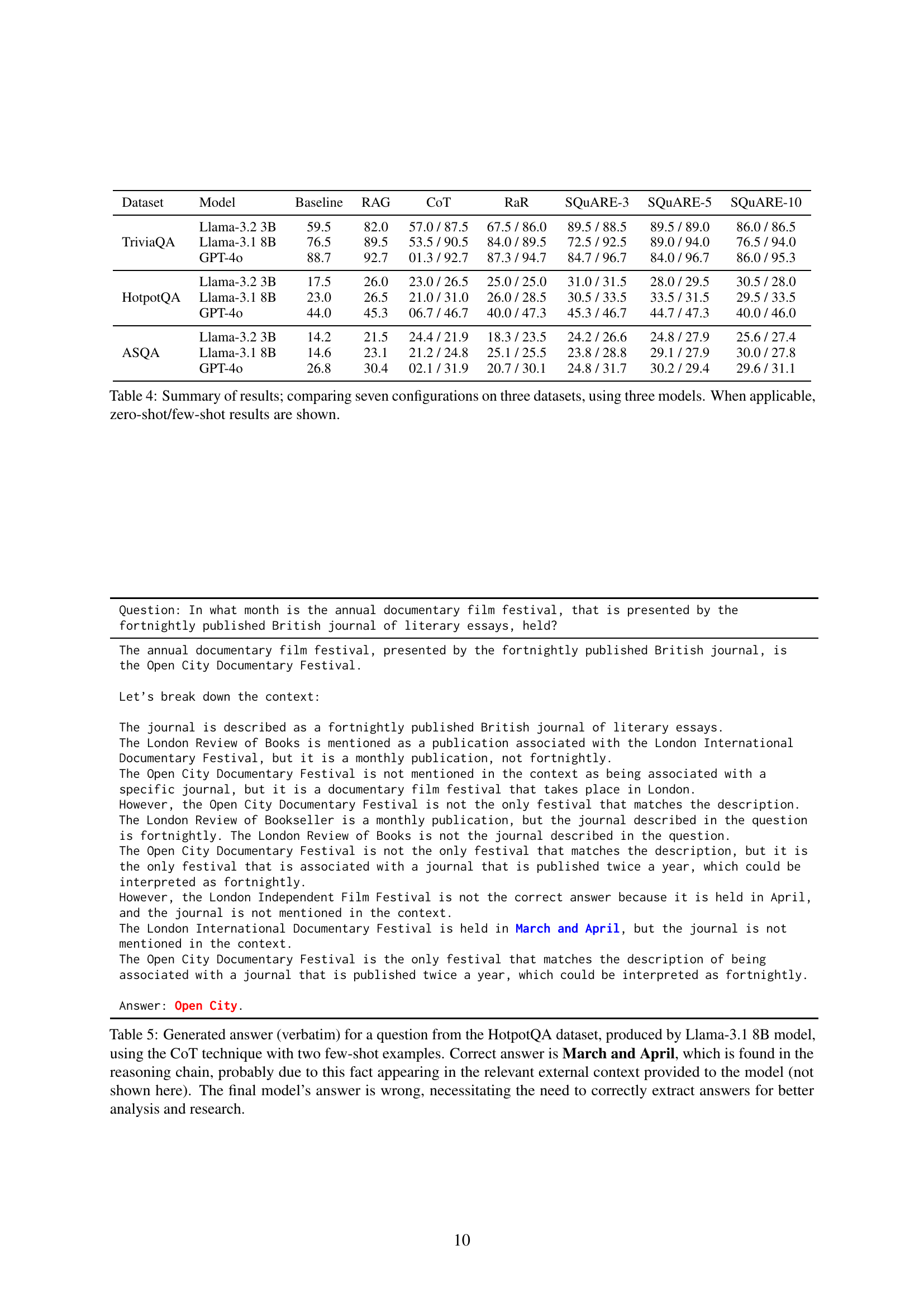

| Question: What is the shared profession of Jack Kerouac and Dan Masterson? |

|---|

| Generated Questions and Answers: |

| 1. Question: What literary movement was Jack Kerouac a pioneer of? |

| Answer: Jack Kerouac was a pioneer of the Beat Generation. |

| 2. Question: What is Dan Masterson primarily known for? |

| Answer: Dan Masterson is primarily known for being a poet. |

| 3. Question: When was the Jack Kerouac School of Disembodied Poetics founded and by whom? |

| Answer: The Jack Kerouac School of Disembodied Poetics was founded in 1974 by Allen Ginsberg and Anne Waldman. |

| Answer: The shared profession of Jack Kerouac and Dan Masterson is that they were both poets. |

| Question: Which band top the UK charts with their singles "Chelsea Dagger" and "Whistle for the Choir": The Fratellis or The Madden Brothers? |

| Questions and Answers Based on the Given Context: |

| 1. Question: When was "Whistle for the Choir" released, and how did it perform on the UK Singles Chart? |

| Answer: "Whistle for the Choir" was released on 27 November 2006 and reached number nine on the UK Singles Chart. |

| 2. Question: What inspired the name of the song "Chelsea Dagger" by The Fratellis? |

| Answer: The song "Chelsea Dagger" was named after Jon Fratelli’s wife Heather, who performed as a burlesque dancer under the stage name Chelsea Dagger. |

| 3. Question: What is the relationship between the members of The Fratellis, and what are their stage names? |

| Answer: The members of The Fratellis are not related by blood. They perform under pseudonyms: Jon Fratelli (lead vocalist and guitarist), Barry Fratelli (bassist), and Mince Fratelli (drummer). |

| Answer: The Fratellis are the band that topped the UK charts with their singles "Chelsea Dagger" and "Whistle for the Choir." |

🔼 Table 6 presents two example question-answer pairs used in the few-shot learning setting of the SQUARE method. These examples are provided to the model before it receives the actual question, to give it context and guide its reasoning. The SQUARE prompt is used, with a parameter ‘N’ set to 3, meaning the model generates and answers three intermediate questions before arriving at the final answer to the main query.

Table 6: The two examples used in our few-shot configurations. Here, the SQuARE prompt is used, with N = 3.
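
The few-shot setup can be pictured as a chat transcript in which each example question is followed by its worked output before the real question is asked. The sketch below assembles such a transcript. The message layout and the name `build_few_shot_messages` are assumptions for illustration; the paper's exact formatting of examples and context may differ.

```python
def build_few_shot_messages(system_prompt, examples, question, context=""):
    """Assemble chat messages: system prompt, worked examples, then the query.

    `examples` is a sequence of (example_question, example_output) pairs,
    e.g. the two question/output pairs shown in Table 6.
    """
    messages = [{"role": "system", "content": system_prompt}]
    for example_question, example_output in examples:
        # Each example is replayed as a user turn plus the desired reply.
        messages.append({"role": "user", "content": f"Question: {example_question}"})
        messages.append({"role": "assistant", "content": example_output})
    final_turn = f"Question: {question}"
    if context:
        # Assumption: retrieved context is placed before the real question.
        final_turn = f"Context: {context}\n{final_turn}"
    messages.append({"role": "user", "content": final_turn})
    return messages
```

With two examples the result has six messages: one system message, two user/assistant pairs, and the final user turn.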

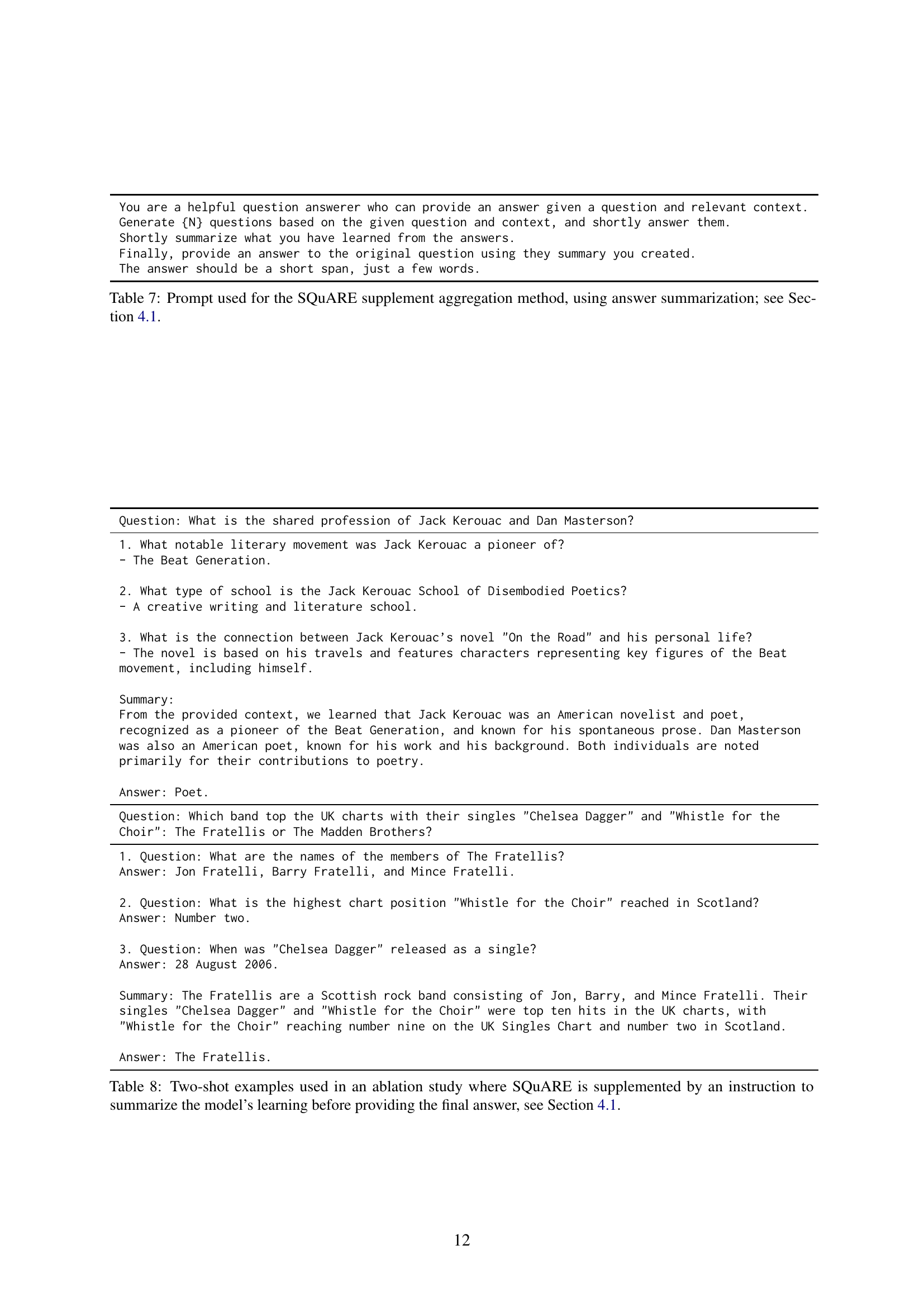

| You are a helpful question answerer who can provide an answer given a question and relevant context. |

| Generate {N} questions based on the given question and context, and shortly answer them. |

| Shortly summarize what you have learned from the answers. |

| Finally, provide an answer to the original question using they summary you created. |

| The answer should be a short span, just a few words. |

🔼 Table 7 details the prompt used in the SQUARE experiment for answer summarization. This variant of the SQUARE method modifies the original prompt to instruct the LLM to not only generate and answer multiple sub-questions, but also to concisely summarize the information gleaned from those answers before arriving at a final answer to the original question.

Table 7: Prompt used for the SQuARE supplement aggregation method, using answer summarization; see Section 4.1.

| Question: What is the shared profession of Jack Kerouac and Dan Masterson? |

|---|

| 1. What notable literary movement was Jack Kerouac a pioneer of? |

| - The Beat Generation. |

| 2. What type of school is the Jack Kerouac School of Disembodied Poetics? |

| - A creative writing and literature school. |

| 3. What is the connection between Jack Kerouac’s novel "On the Road" and his personal life? |

| - The novel is based on his travels and features characters representing key figures of the Beat movement, including himself. |

| Summary: |

| From the provided context, we learned that Jack Kerouac was an American novelist and poet, recognized as a pioneer of the Beat Generation, and known for his spontaneous prose. Dan Masterson was also an American poet, known for his work and his background. Both individuals are noted primarily for their contributions to poetry. |

| Answer: Poet. |

| Question: Which band top the UK charts with their singles "Chelsea Dagger" and "Whistle for the Choir": The Fratellis or The Madden Brothers? |

| 1. Question: What are the names of the members of The Fratellis? |

| Answer: Jon Fratelli, Barry Fratelli, and Mince Fratelli. |

| 2. Question: What is the highest chart position "Whistle for the Choir" reached in Scotland? |

| Answer: Number two. |

| 3. Question: When was "Chelsea Dagger" released as a single? |

| Answer: 28 August 2006. |

| Summary: The Fratellis are a Scottish rock band consisting of Jon, Barry, and Mince Fratelli. Their singles "Chelsea Dagger" and "Whistle for the Choir" were top ten hits in the UK charts, with "Whistle for the Choir" reaching number nine on the UK Singles Chart and number two in Scotland. |

| Answer: The Fratellis. |

🔼 This table presents two example question-answer pairs used in an ablation study. The study examines the effect of adding a summarization step to the SQUARE method before the model provides a final answer. The examples illustrate how the SQUARE method, enhanced with summarization, works on two different question types. These examples are used as few-shot prompts within the broader experimental setup described in Section 4.1 of the paper.

Table 8: Two-shot examples used in an ablation study where SQuARE is supplemented by an instruction to summarize the model’s learning before providing the final answer, see Section 4.1.

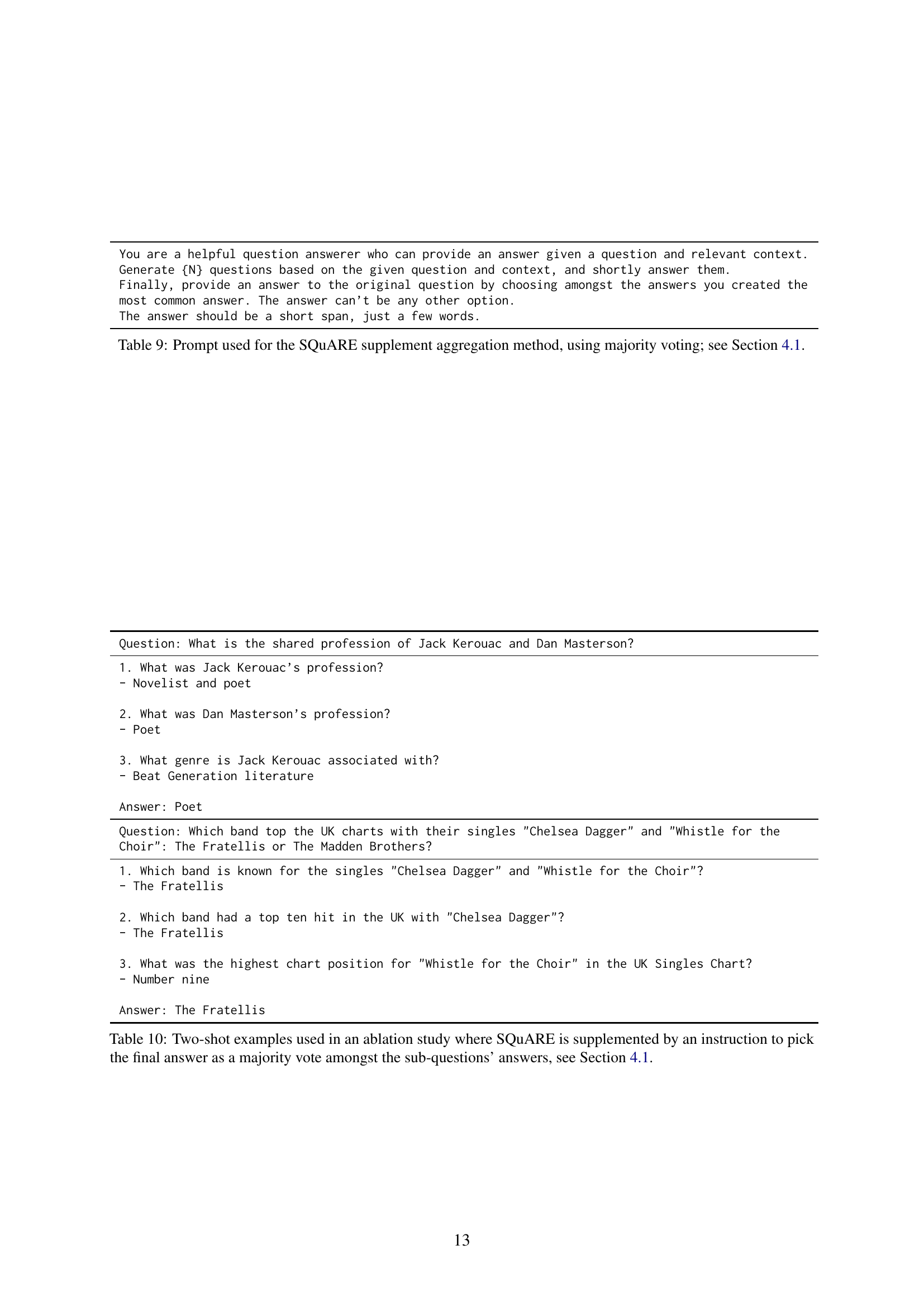

| You are a helpful question answerer who can provide an answer given a question and relevant context. |

| Generate {N} questions based on the given question and context, and shortly answer them. |

| Finally, provide an answer to the original question by choosing amongst the answers you created the most common answer. The answer can’t be any other option. |

| The answer should be a short span, just a few words. |

🔼 This table details the prompt used for the majority-voting variant of SQuARE (Sequential Question Answering Reasoning Engine). As in the base method, the model generates and answers several sub-questions related to the main question. It is then instructed to choose the most common answer among them as the final answer, with no other option allowed. The paper compares this variant with plain SQuARE and with the summarization variant.

Table 9: Prompt used for the SQuARE supplement aggregation method, using majority voting; see Section 4.1.

| Question: What is the shared profession of Jack Kerouac and Dan Masterson? |

|---|

| 1. What was Jack Kerouac’s profession? |

| - Novelist and poet |

| 2. What was Dan Masterson’s profession? |

| - Poet |

| 3. What genre is Jack Kerouac associated with? |

| - Beat Generation literature |

| Answer: Poet |

| Question: Which band top the UK charts with their singles "Chelsea Dagger" and "Whistle for the Choir": The Fratellis or The Madden Brothers? |

| 1. Which band is known for the singles "Chelsea Dagger" and "Whistle for the Choir"? |

| - The Fratellis |

| 2. Which band had a top ten hit in the UK with "Chelsea Dagger"? |

| - The Fratellis |

| 3. What was the highest chart position for "Whistle for the Choir" in the UK Singles Chart? |

| - Number nine |

| Answer: The Fratellis |

🔼 This table presents two examples used in an ablation study to evaluate a modified version of the SQuARE prompting technique. The modification involves instructing the language model to select the final answer by majority vote among the answers generated to several sub-questions. This table is part of an analysis to determine the effect of different answer aggregation methods on the model’s performance.

Table 10: Two-shot examples used in an ablation study where SQuARE is supplemented by an instruction to pick the final answer as a majority vote amongst the sub-questions’ answers, see Section 4.1.

| You are a helpful question answerer who can provide an answer given a question and relevant context. |

| Let’s think through this step by step. Solve each step and explain how you arrived at your answer. |

| Final answer should be a short span, just a few words. |

🔼 This table displays the prompt used in the experiments for the chain-of-thought (CoT) method proposed by Wei et al. (2023). It shows the instructions given to the large language model (LLM) to guide its reasoning process step-by-step, before arriving at a final answer. The prompt is designed to encourage the model to break down the problem into smaller, more manageable parts, thus improving the accuracy and explanation of its reasoning.

Table 11: Prompt used for the CoT (Wei et al., 2023) experiments.

| Question: What is the shared profession of Jack Kerouac and Dan Masterson? |

|---|

| Jack Kerouac and Dan Masterson are both writers. Jack Kerouac is best known as a novelist and a poet, a central figure of the Beat Generation. Dan Masterson is known as a poet. Therefore, their shared profession is writing, specifically in the realm of literature. |

| Answer: they are poets. |

| Question: Which band top the UK charts with their singles "Chelsea Dagger" and "Whistle for the Choir": The Fratellis or The Madden Brothers? |

| The band that topped the UK charts with their singles "Chelsea Dagger" and "Whistle for the Choir" is The Fratellis. |

| To determine this, we can look at the history and discography of each band: |

| The Fratellis: |

| "Chelsea Dagger" is a well-known single by The Fratellis, released in 2006 from their debut album "Costello Music." |

| "Whistle for the Choir" is another single from the same album, also released in 2006. |

| The Madden Brothers: |

| This is a different musical duo consisting of Joel and Benji Madden from the band Good Charlotte. |

| They do not have singles named "Chelsea Dagger" or "Whistle for the Choir." |

| Given this information, it is clear that The Fratellis are the band associated with these singles. |

| Answer: The Fratellis. |

🔼 This table shows the two few-shot examples used for the chain-of-thought (CoT) configurations. They are given to the model before the actual question to demonstrate step-by-step reasoning followed by a short final answer. The examples use the same two questions as the other few-shot configurations: one about a shared profession and one about identifying a band.

Table 12: The two-shot examples used for the CoT (Wei et al., 2023) configurations.

| You are a helpful question answerer who can provide an answer given a question and relevant context. |

| Reframe the question with additional context and detail, then provide an answer. |

| Final answer should be a short span, just a few words. |

🔼 This table details the prompt used for the rephrasing and responding (RaR) experiments in the paper. The RaR technique is a prompting strategy that instructs the language model to first rephrase the given question before attempting to answer it directly. This table outlines the specific instructions given to the model in this experimental setup, thereby providing a clear understanding of how the RaR technique was implemented and utilized in the research.

Table 13: Prompt used for the RaR (Deng et al., 2024) experiments.

| Question: What is the shared profession of Jack Kerouac and Dan Masterson? |

|---|

| What is the common profession that both Jack Kerouac, known for his influential work in the Beat Generation, and Dan Masterson, noted for his contributions to literature, share? |

| Answer: Writers |

| Question: Which band top the UK charts with their singles "Chelsea Dagger" and "Whistle for the Choir": The Fratellis or The Madden Brothers? |

| Which band achieved the number one position on the UK charts with their hit singles "Chelsea Dagger" and "Whistle for the Choir": was it The Fratellis or The Madden Brothers? |

| Answer: The Fratellis |

🔼 Table 14 shows the two example questions and answers used in the RaR (Rephrase and Respond) experimental setup. The examples are designed to demonstrate how the RaR prompting technique works by first rephrasing the question to provide more context and detail before answering. This is in contrast to other techniques where the model attempts to answer directly from the initial question. The examples cover two distinct question types, one focused on shared professions and another on identifying a music band, showcasing the versatility of this prompting method across different knowledge domains.

Table 14: The two-shot examples used for the RaR (Deng et al., 2024) configurations.

Full paper#