TL;DR#

Generative AI release decisions often overlook crucial elements beyond mere availability. These elements influence how stakeholders interact with the system and components. The access considerations should be inclusive of resources and qualities that are needed by stakeholders to actively engage with the system components. System and component release and availability can be distinguished from accessibility because accessibility is more than just what is available. The considerations also influence deployment.

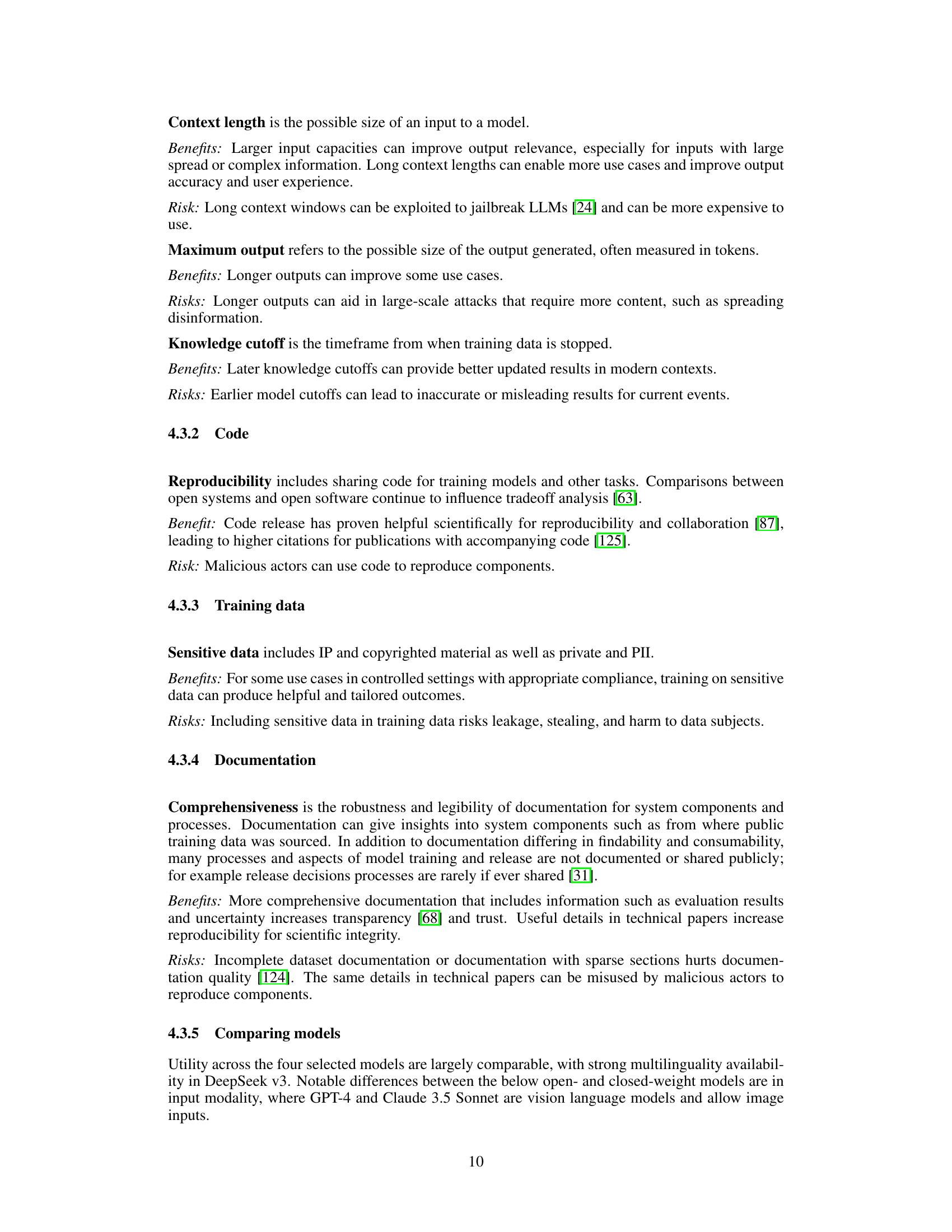

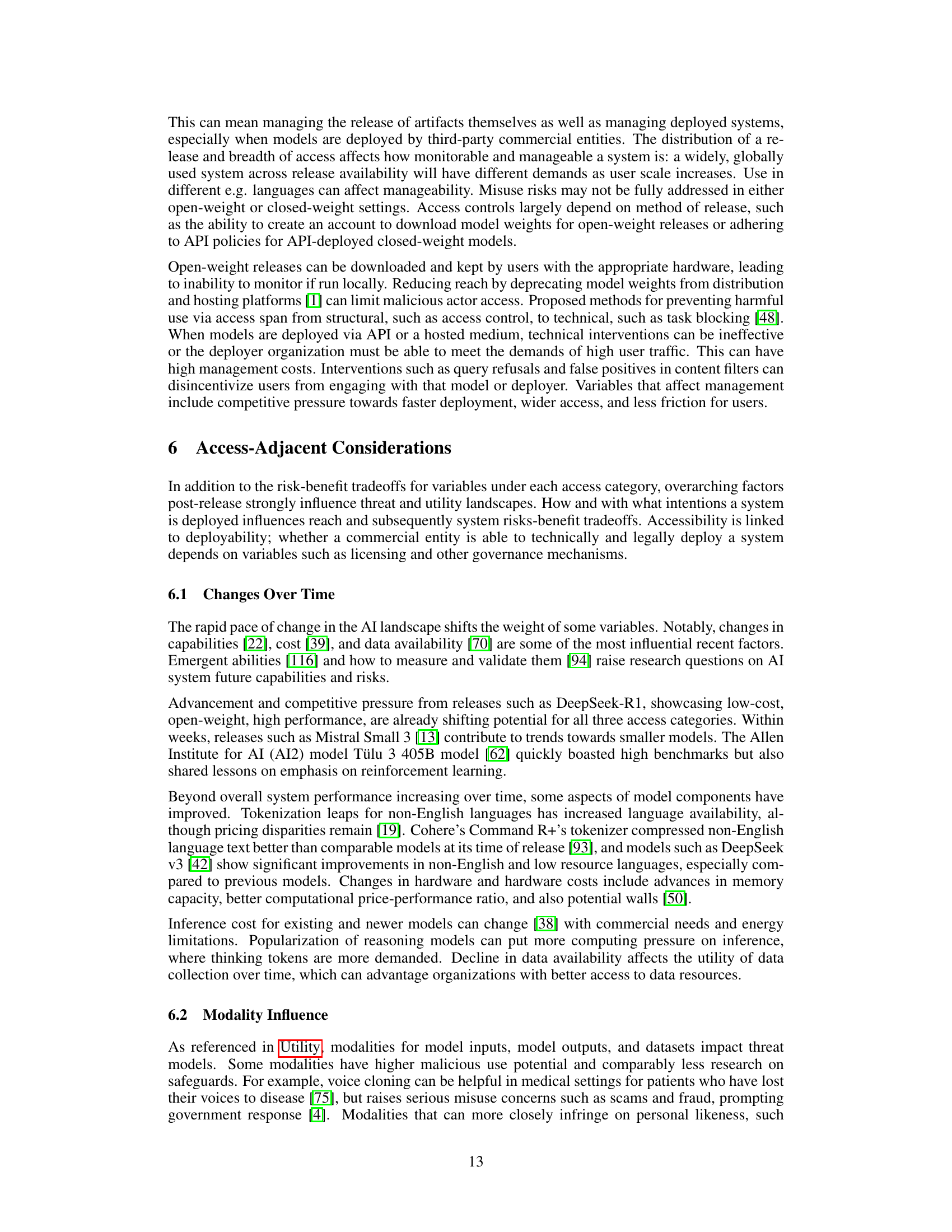

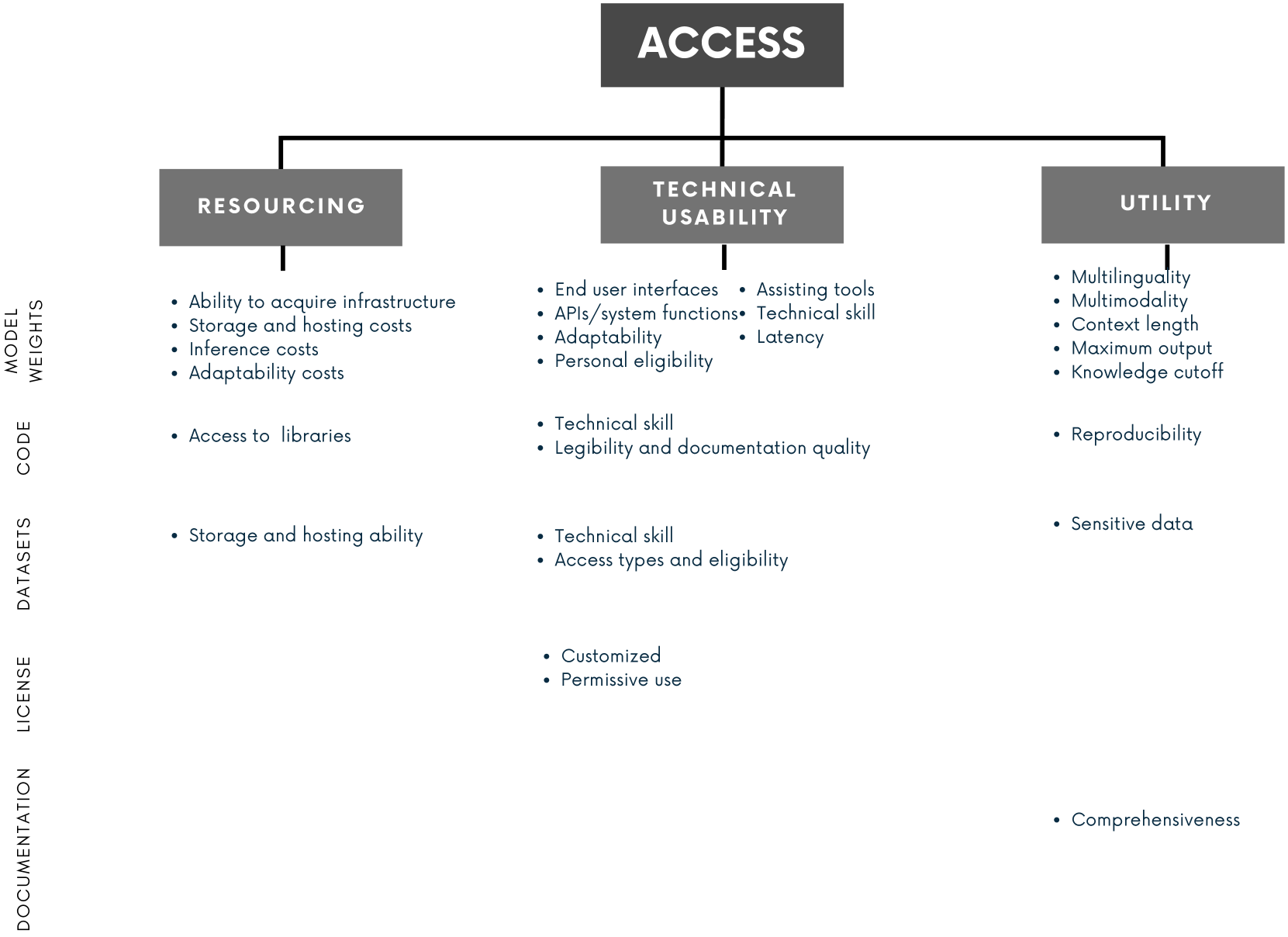

This paper deconstructs access along three axes: resourcing, technical usability, and utility. It provides an evaluation of variables per system component and clarifies related tradeoffs within each category. Four high-performance language models (two open-weight, two closed-weight) are compared for accessibility. This shows similar considerations for all based on access variables. Finally, they examine how scale affects the ability to manage and intervene on risks.

Key Takeaways#

Why does it matter?#

This paper is crucial for AI researchers as it refines the understanding of access beyond simple release decisions. It provides a detailed framework for evaluating the practical accessibility of AI systems. The access variables allow AI researchers to think more broadly about the risk/benefit analysis and it allows for more informed development and policy recommendations.

Visual Insights#

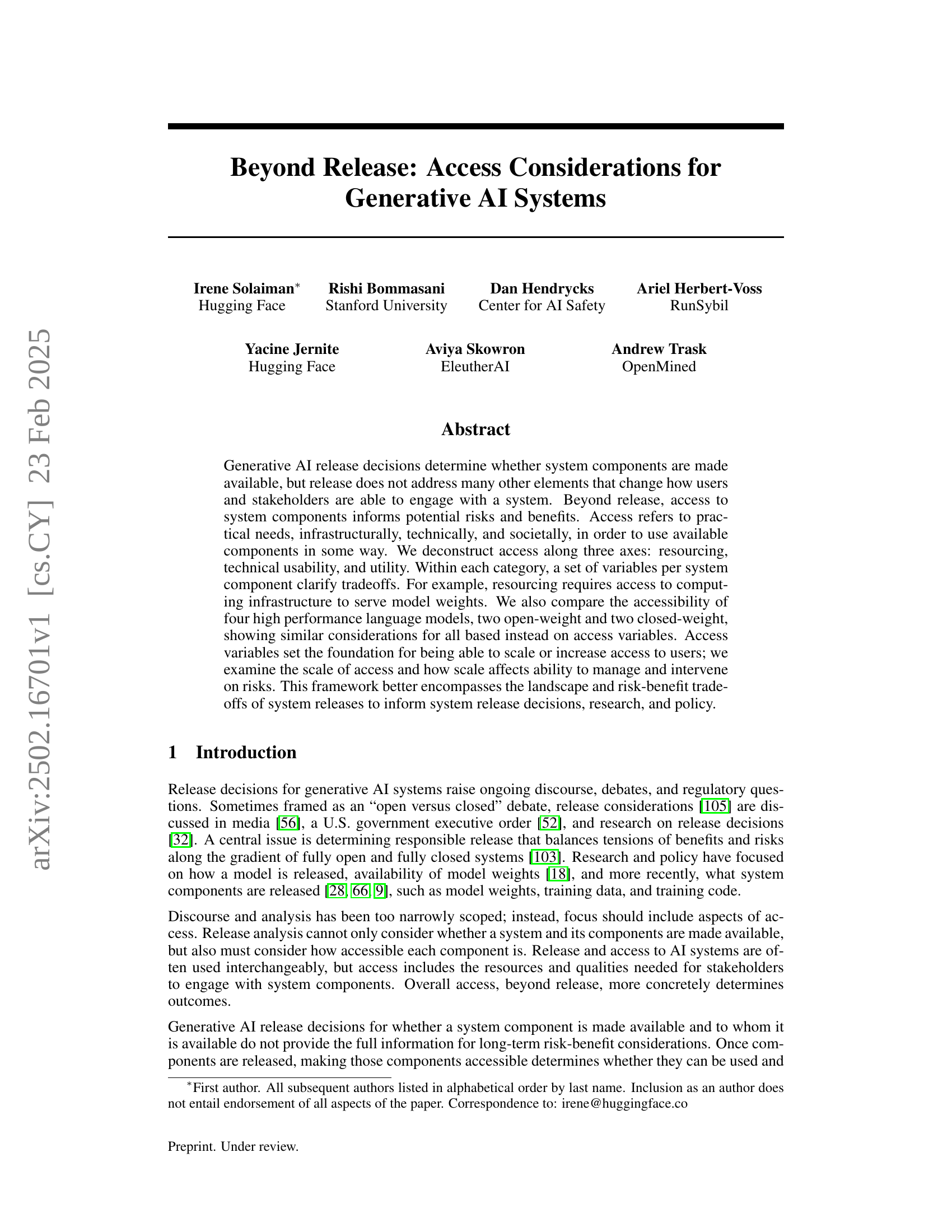

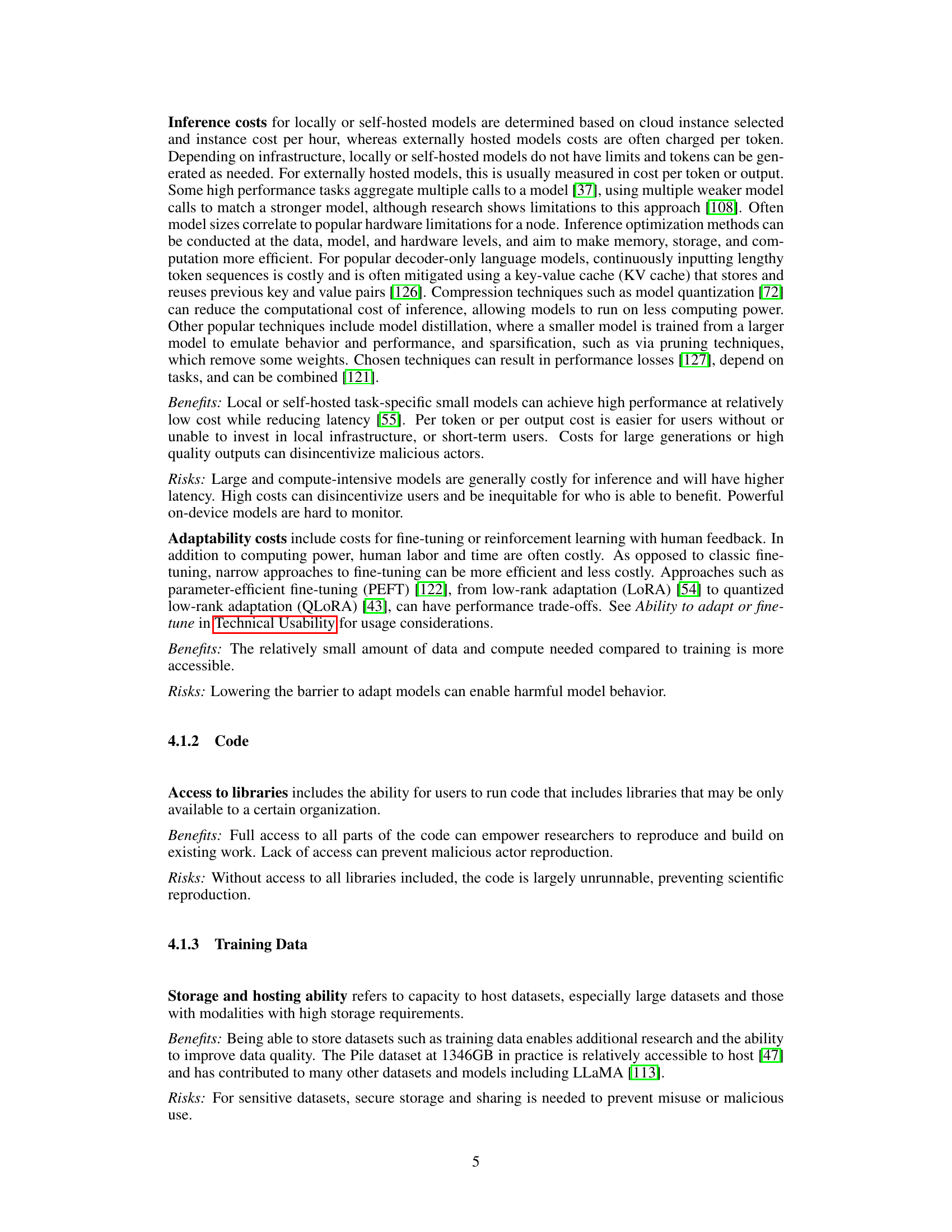

🔼 This figure illustrates the transition from a system’s components being merely available to their becoming truly accessible. It highlights the three key access dimensions—resourcing (infrastructure needs), technical usability (ease of use for various skill levels), and utility (practical benefits and applications)—that influence accessibility. Within each dimension, the figure indicates potential benefits (e.g., reduced access for harmful purposes, broader user base) and risks (e.g., limited researcher access, more layperson attackers) associated with the specific access level.

read the caption

Figure 1: From Available to Accessible with High Level Tradeoffs

In-depth insights#

Access, not open#

Access, not open suggests a shift in perspective from simply making AI systems and their components available (open) to ensuring they are usable and beneficial for a diverse range of stakeholders. It highlights that merely releasing model weights or code doesn’t guarantee equitable participation or responsible innovation. True accessibility encompasses resourcing (compute, storage), technical usability (interfaces, documentation), and utility (relevance, multilinguality). It calls for addressing practical barriers that prevent individuals and organizations, especially those with limited resources or technical expertise, from engaging with and contributing to the AI ecosystem. This perspective acknowledges that openness without access can exacerbate existing inequalities and potentially increase risks, as malicious actors may have an easier time exploiting available resources while marginalized groups are excluded. Ultimately, it underscores the importance of designing AI systems and release strategies that prioritize access alongside openness to maximize benefits and minimize harms.

Beyond release#

Beyond release considerations in generative AI systems are critical, extending beyond the simple availability of model weights or code. It encompasses the practical aspects of access, including resourcing, technical usability, and utility. Resourcing involves infrastructural needs, while usability concerns technical skills required. Utility covers aspects like multilinguality. Accessibility affects the potential for both beneficial and malicious use, influencing who can deploy and manage the models. Scaling considerations affect the intervention in violations.

Scalable Access#

Scalable access in AI systems is crucial, yet presents multifaceted challenges. Expanding access requires careful consideration of resourcing, technical usability, and utility. Resourcing includes infrastructural needs and costs, potentially limiting access for some. Technical usability determines how diverse users can engage, balancing broader access with potential misuse. Utility relates to the capabilities and their impact. Scaling accessibility positively affects usage but also exposes systems to malicious actors, influencing the capacity to manage risk and intervene effectively, highlighting a tension between broader access and manageability.

Usability levers#

Usability levers are crucial for AI systems, impacting user engagement. User-friendly interfaces democratize access, but also increase the risk of malicious use by less skilled actors. API design affects accessibility, balancing ease of integration with security. Controls like rate limiting are important, but must balance with user experience. Personal eligibility criteria may restrict access based on factors like age or location, affecting both safety and fairness. Technical skills for interaction and quality documentation impact system adoption. Latency affects usability, and locally hosted models offer speed improvements. A holistic approach considering both benefits and risks is essential.

Utility & harm#

The paper should deeply investigate the dual-edged nature of utility. While increased functionality (multilingual support, multimodal input) broadens accessibility and applicability, it also exposes the system to a wider range of potential misuse scenarios. The analysis must consider the disproportionate impact of harmful outputs on marginalized groups. For example, biased outputs in low-resource languages could reinforce existing societal inequalities. Additionally, they must investigate how differing modalities (text, image, audio) present unique challenges for content moderation and safety mechanisms. Finally, future research needs to explore adaptive mitigation strategies that account for the evolving landscape of utility and associated harms, like model output bias.

More visual insights#

More on figures

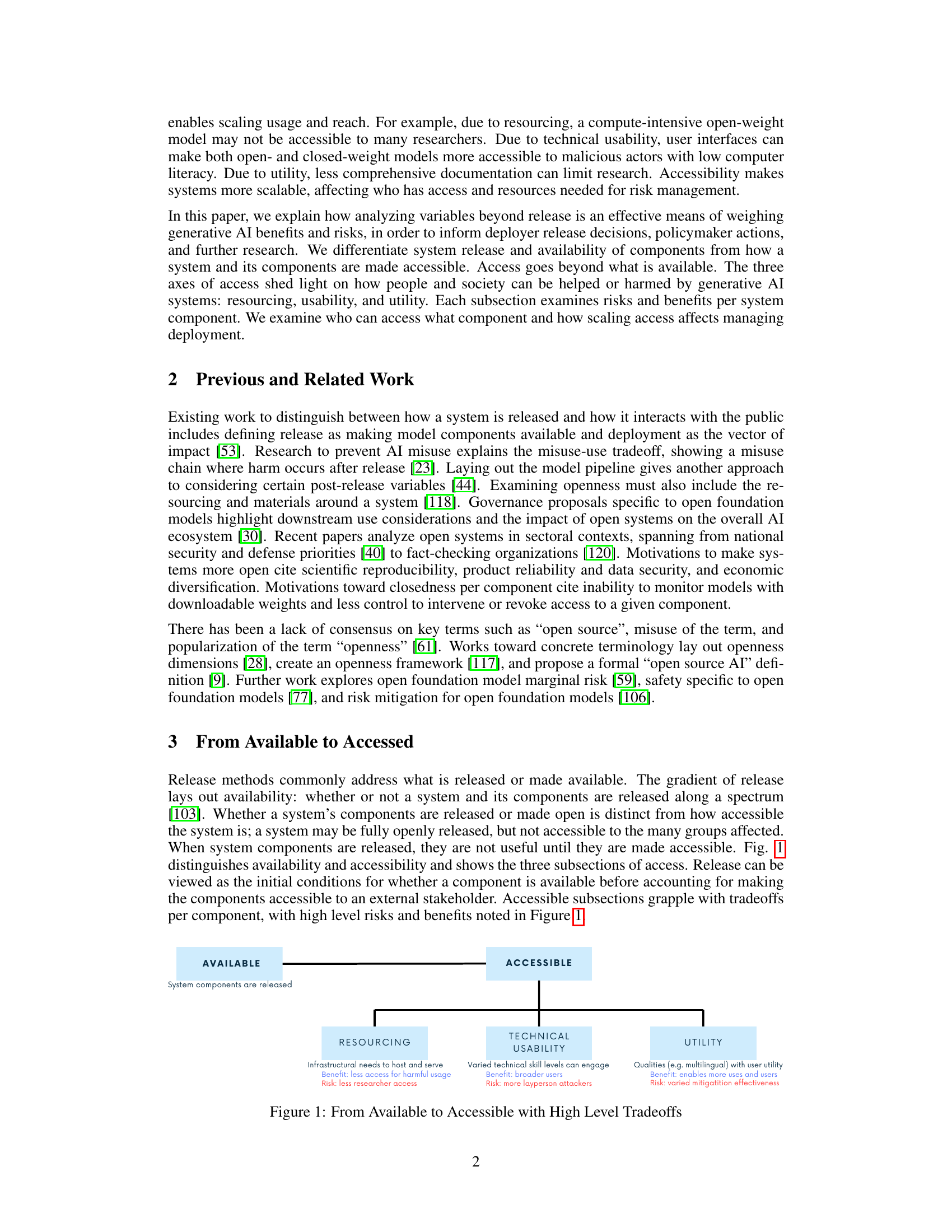

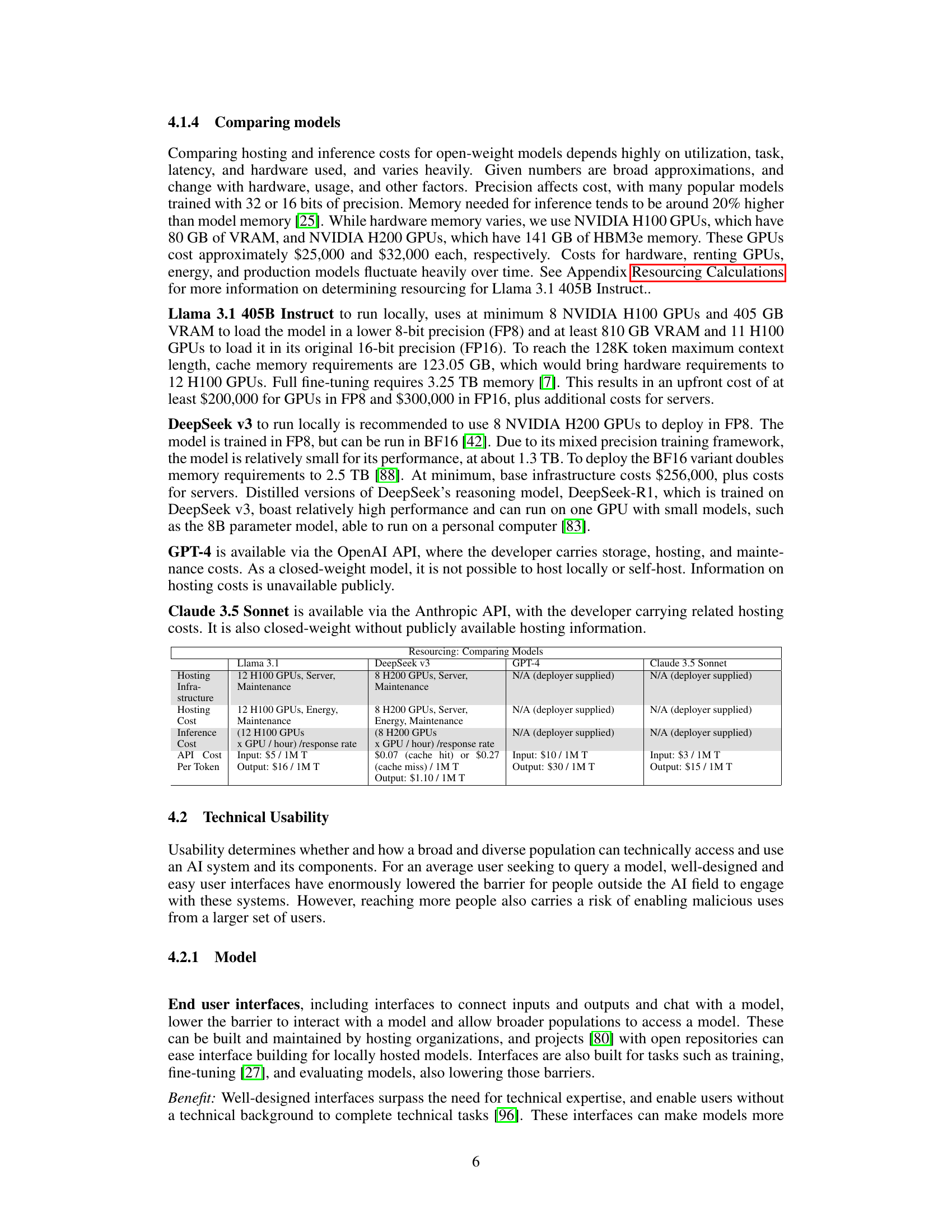

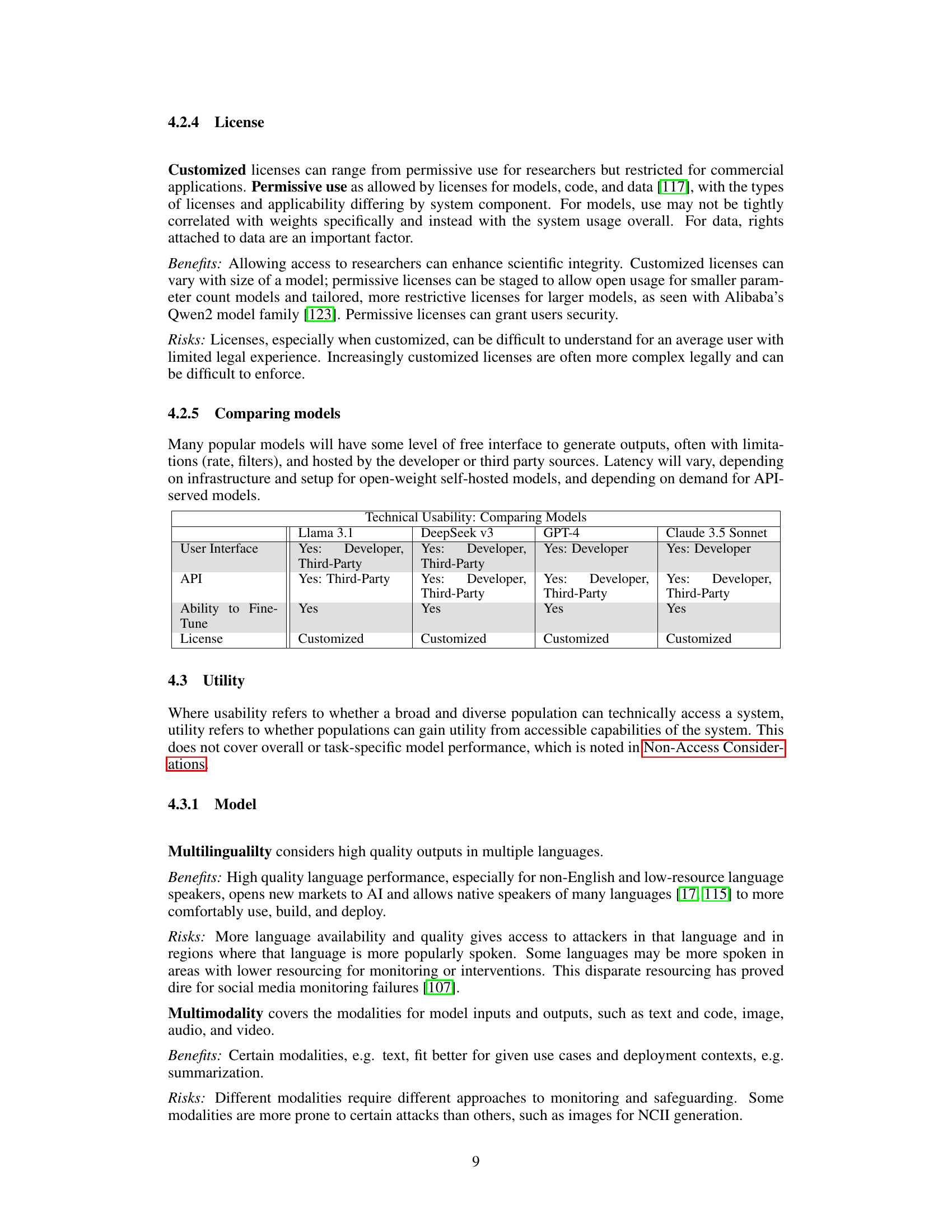

🔼 This figure breaks down the concept of ‘Access’ into three main categories: Resourcing, Technical Usability, and Utility. Each category is further divided into several variables that determine the degree of access. For example, Resourcing considers factors like the cost and availability of computing infrastructure needed to use the system. Technical Usability examines the ease of use for different user groups, including the required technical skills and interface quality. Finally, Utility assesses the usefulness and value of the system for various purposes, such as multilingual support and output quality. The figure helps to clarify the different aspects of accessibility beyond simply whether a system is released, highlighting the tradeoffs involved in different choices.

read the caption

Figure 2: Categories of Access and Respective Variables

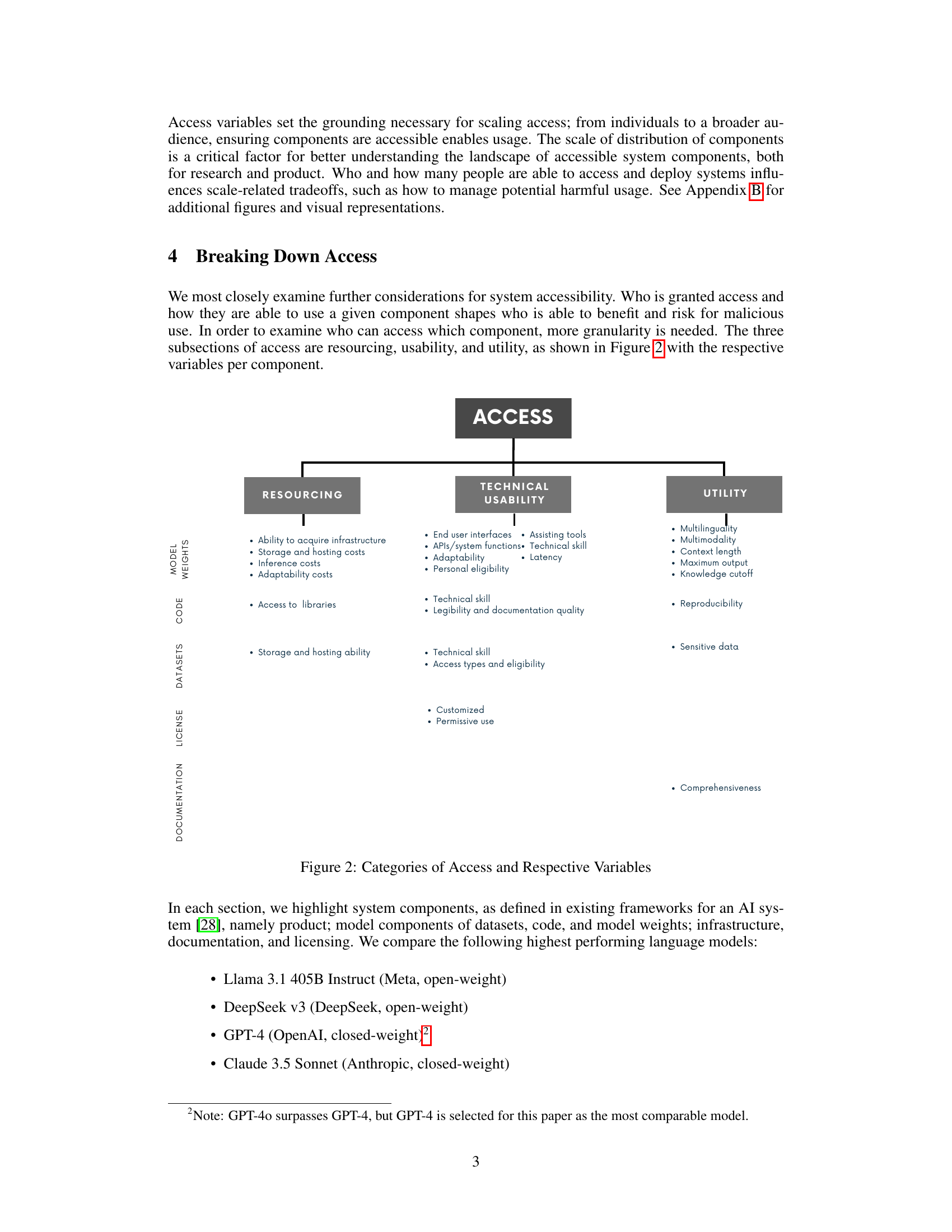

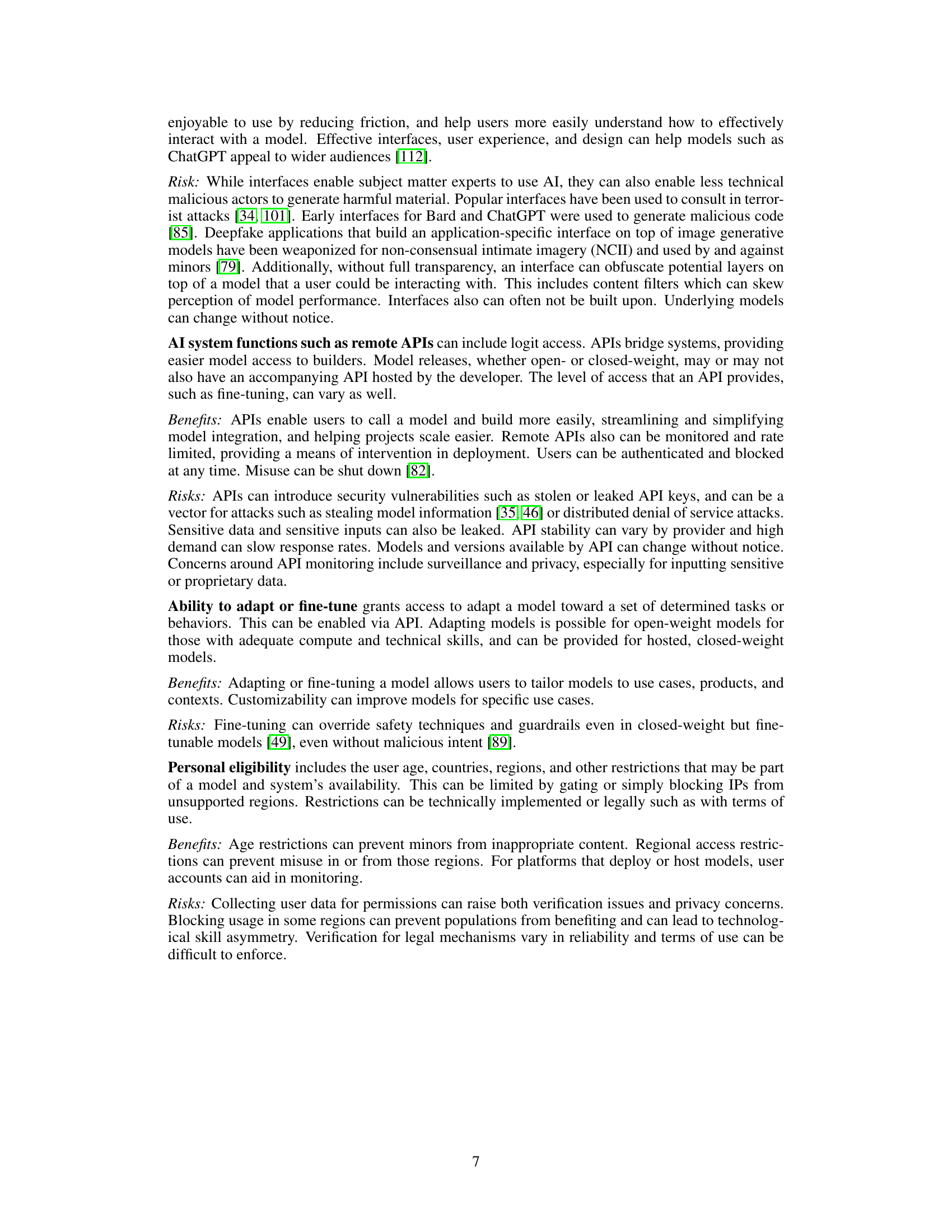

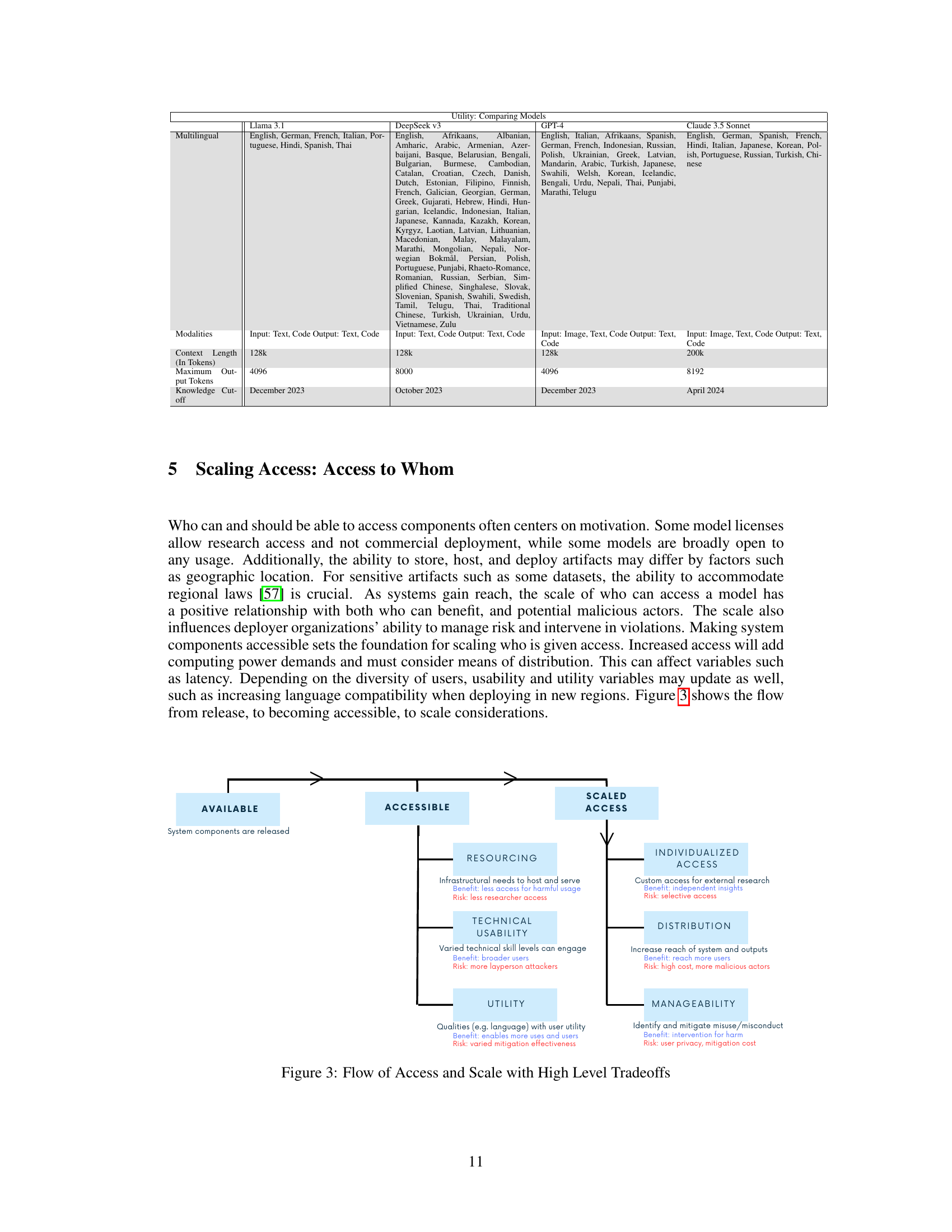

🔼 This figure illustrates the progression from an AI system’s initial availability to its eventual widespread accessibility, highlighting key trade-offs at each stage. It starts with the ‘Available’ stage, where system components are simply released. This then transitions to the ‘Accessible’ stage, emphasizing the three primary dimensions influencing accessibility: resourcing (infrastructure needs), technical usability (required technical skills), and utility (practical benefits for users). Finally, the figure depicts the ‘Scaled Access’ stage, which considers the impact of increasing access and reach on the system, including individualized access (for specific researchers), distribution (methods of making the system available to a broader audience), and manageability (ability to identify and mitigate harm). The figure emphasizes how the expansion of access generates both positive outcomes (wider reach and benefits) and negative ones (increased risk and costs).

read the caption

Figure 3: Flow of Access and Scale with High Level Tradeoffs

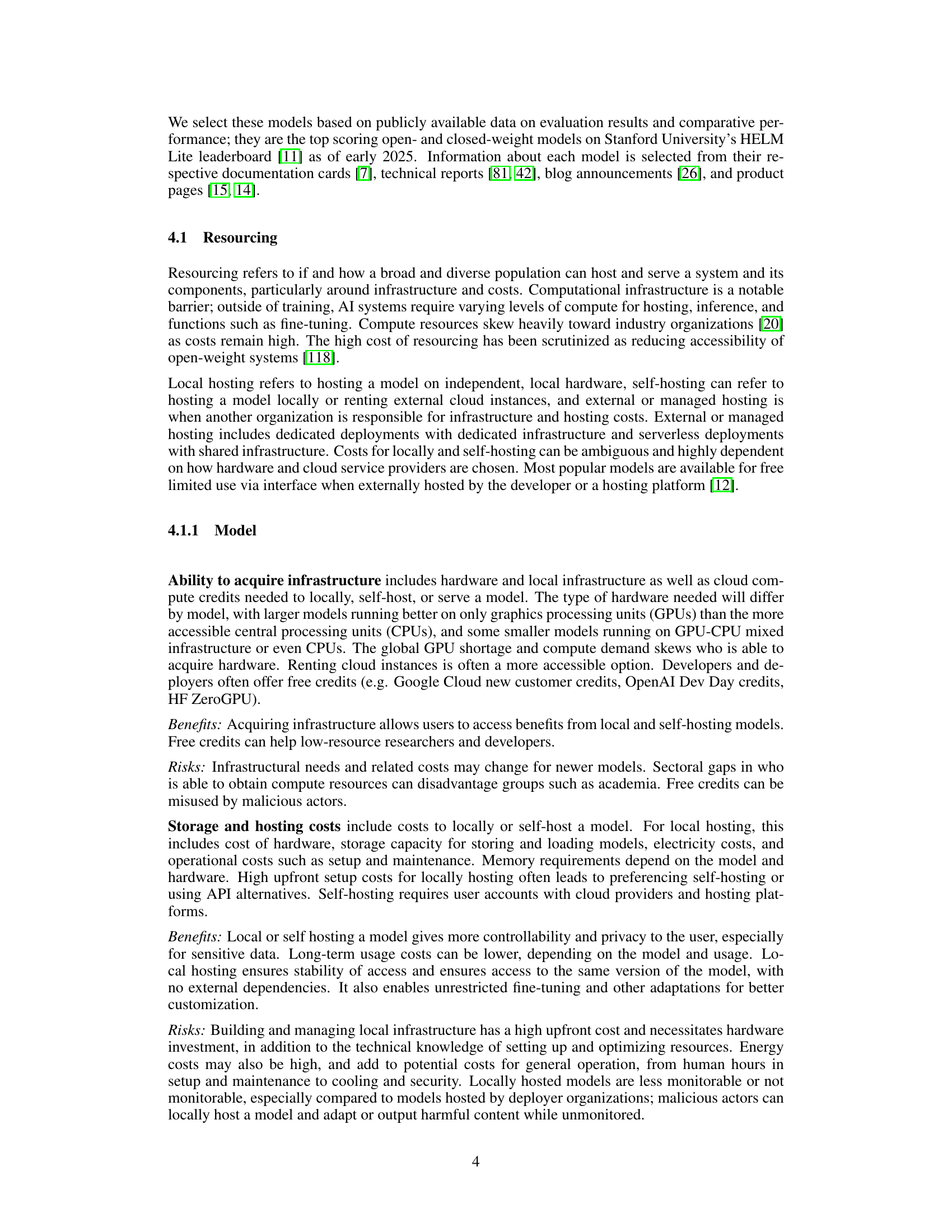

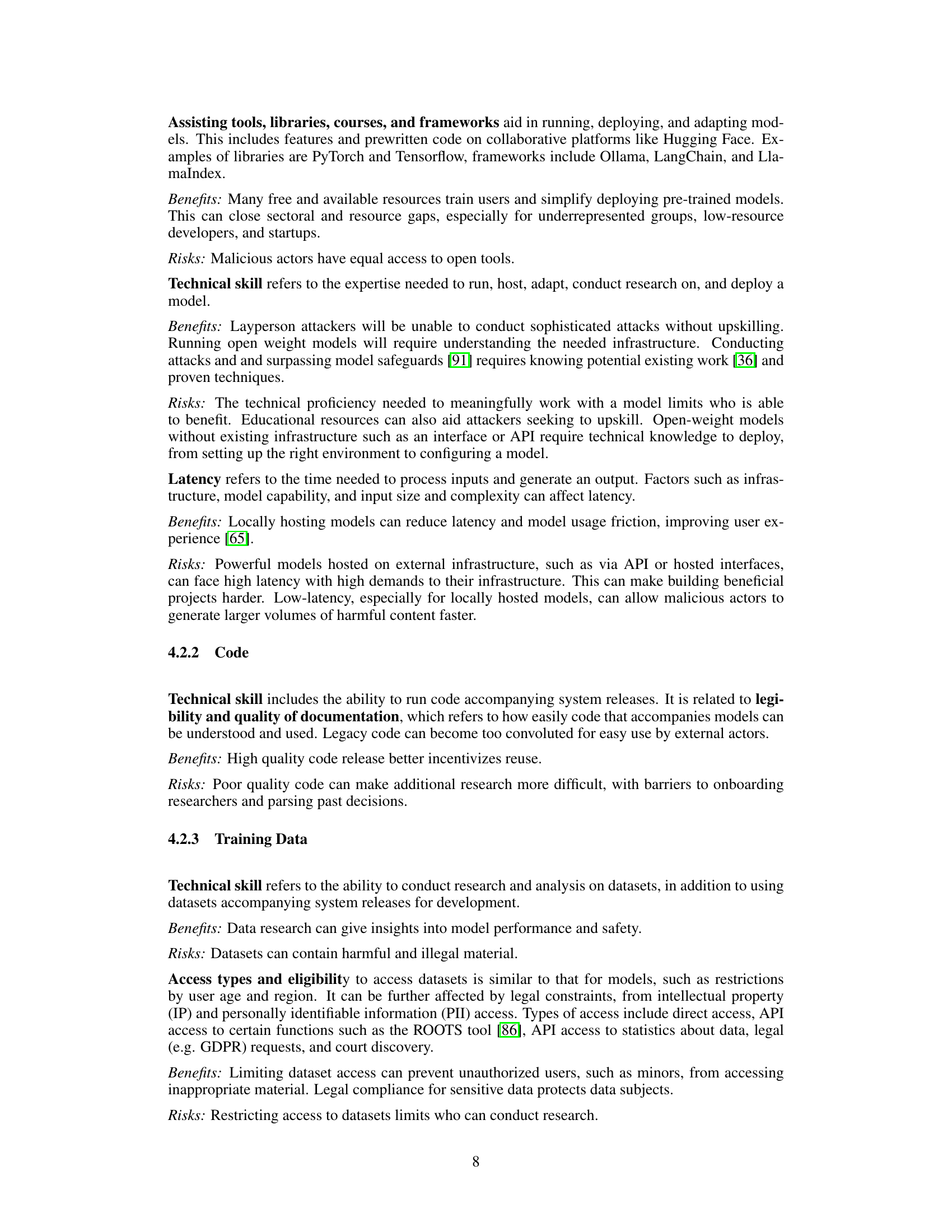

🔼 This figure illustrates the transition of an AI system from a state of mere availability to one of genuine accessibility, highlighting the key factors involved. The left side shows the ‘Available System,’ signifying that the system’s components (model weights, code, data, etc.) exist and are released. The central section focuses on the three axes of ‘Accessibility’: Resourcing (the infrastructural requirements for hosting and using the system), Technical Usability (the level of technical expertise needed to use the system), and Utility (the practical applications and usefulness of the system). The right side shows the ‘Accessible System,’ representing the stage where the system components are not only released but also practically usable by a wider range of users. The arrows and annotations illustrate the high-level tradeoffs between the benefits (e.g., broader access, more applications) and the risks (e.g., increased potential for misuse, higher costs) at each stage of the process.

read the caption

Figure 4: Shifting from Available to Accessible with High Level Tradeoffs

Full paper#