TL;DR#

Deep Reinforcement Learning (RL) has shown promising results in various fields, but dexterous robot manipulation remains limited due to challenges such as environment modeling, reward design, and object perception. Specifically, matching a simulated environment with the real world is difficult and time consuming. Also, the variety of object properties poses challenges in designing generalizable rewards and transferring policies from simulation to reality. Thus, it is hard to scale and generalize via autonomous exploration.

This work addresses these issues by introducing an automated real-to-sim tuning module to align simulated and real environments, along with a generalized reward design scheme that simplifies reward engineering for long-horizon tasks. To improve sample efficiency, a divide-and-conquer distillation process is employed and a mixture of sparse and dense object representations is adopted to bridge the sim-to-real perception gap. The proposed techniques enable a humanoid robot to perform dexterous manipulation tasks with robust generalization and high performance without human demonstrations.

Key Takeaways#

Why does it matter?#

This paper is important as it presents a comprehensive sim-to-real RL recipe for vision-based dexterous manipulation on humanoids. By tackling environment modeling, reward design, policy learning, and sim-to-real transfer, this work provides valuable insights and techniques that could significantly advance research in robotics and automation and opens new avenues for creating more capable and adaptable humanoid robots.

Visual Insights#

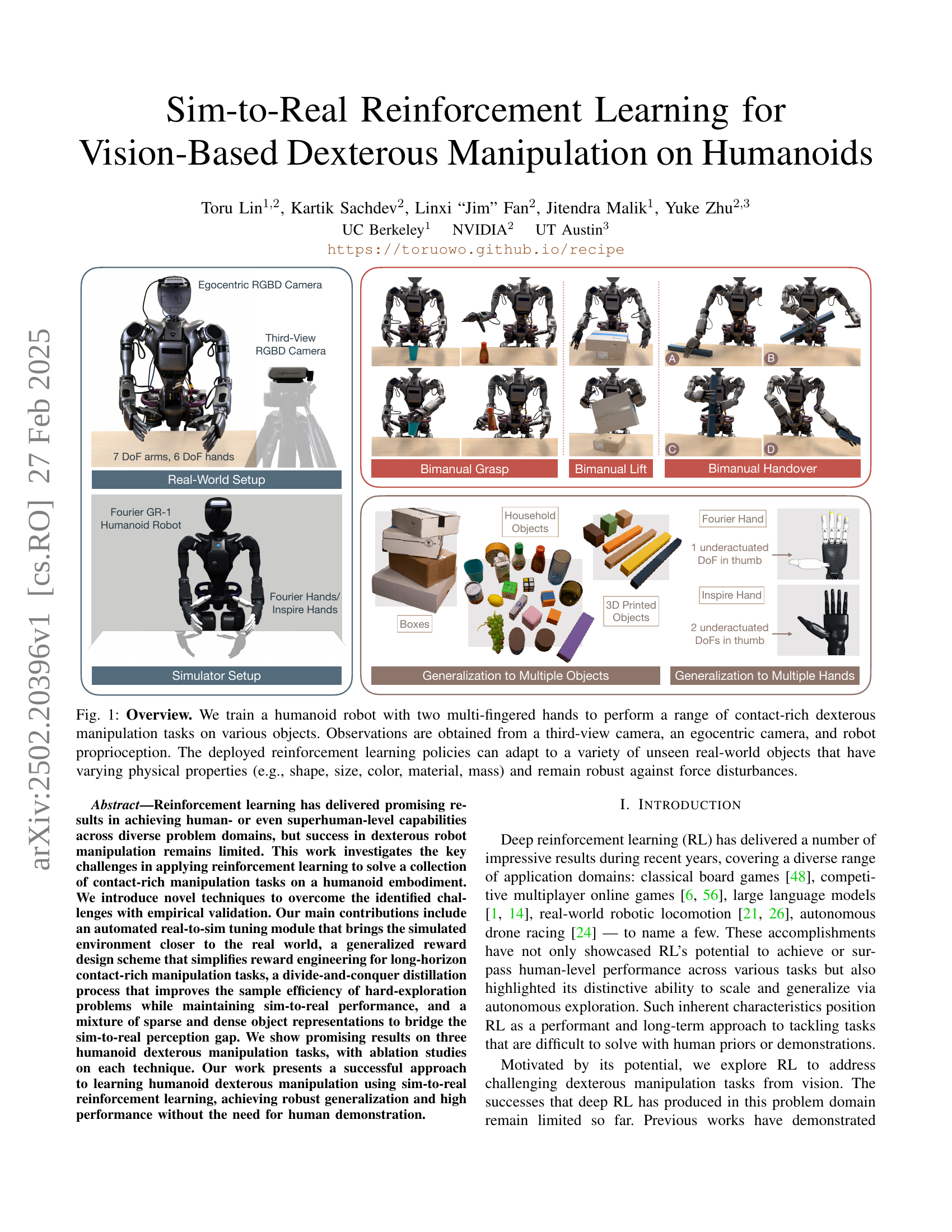

🔼 This figure illustrates a sim-to-real reinforcement learning (RL) framework for vision-based dexterous manipulation using humanoid robots. It highlights four key techniques that bridge the sim-to-real gap: automated real-to-sim environment tuning to reduce differences between simulated and real environments; a generalized reward design that breaks down complex manipulation tasks into simpler contact and object-state goals, thus simplifying reward engineering; sample-efficient policy learning through task-aware hand pose initialization and a divide-and-conquer distillation method; and vision-based sim-to-real transfer facilitated by using a combination of sparse and dense object representations for improved robustness and generalization.

read the caption

Figure 1: A sim-to-real RL recipe for vision-based dexterous manipulation. We close the environment modeling gap between simulation and the real world through an automated real-to-sim tuning module, design generalizable task rewards by disentangling each manipulation task into contact states and object states, improve sample efficiency of dexterous manipulation policy training by using task-aware hand poses and divide-and-conquer distillation, and transfer vision-based policies to the real world with a mixture of sparse and dense object representations.

| Autotune MSE | Lowest | Median | Highest |

|---|---|---|---|

| Grasp Success | 8 / 10 | 3 / 10 | 0 / 10 |

| Reach Success | 7 / 10 | 3 / 10 | 0 / 10 |

🔼 This table presents the results of an experiment evaluating the correlation between the mean squared error (MSE) of the autotuned robot model and the success rate of sim-to-real transfer for a robotic grasping task. Ten policy checkpoints, trained identically except for their random seed, were tested for each set of modeling parameters generated by the autotune module. The success rate was assessed by evaluating two stages of the grasp-and-reach task: grasp success and reach success. Lower MSE values, indicating better alignment between simulation and reality via autotuning, are associated with higher sim-to-real success rates, demonstrating the effectiveness of the autotune module in bridging the sim-to-real gap.

read the caption

TABLE I: Lower MSE from autotune correlates with higher sim-to-real success rate. For each set of modeling parameters, we test the sim-to-real transfer performance of 10 policy checkpoints (trained identically except for random seed). We evaluate success rate by stages on the grasp-and-reach task, and observe a correlation between lower MSE measured by autotune module and higher sim-to-real transfer success rate.

In-depth insights#

Auto Real2Sim#

The “Auto Real2Sim” heading suggests an automated approach to bridging the gap between real-world scenarios and their simulated counterparts, a crucial aspect of sim-to-real transfer learning, especially in robotics. Automated methods are essential because manual tuning is time-consuming and might not capture complex dynamics accurately. Such automation would involve algorithms that calibrate simulator parameters (physics, robot models, environments) to match real-world behavior based on sensor data. This likely involves optimization techniques that minimize the discrepancy between simulated and real-world data, possibly through parameter space search and error minimization. The benefits include reduced engineering effort, faster iteration cycles, and potentially more robust policies due to better alignment between simulation and reality.

Contact Goals#

When dealing with intricate manipulation tasks, especially those involving humanoid robots, effectively defining contact goals becomes paramount. A poorly defined contact goal can lead to unstable grasps or failed handovers, resulting in task failure. The paper may leverage techniques such as contact stickers, strategically placed on objects, to guide the robot’s hand and finger placements. These stickers can be procedurally generated, offering flexibility and adaptability to various object shapes and sizes. The contact goal is then framed as minimizing the distance between the robot’s fingertips and these designated contact points, simplifying reward engineering. Also, contact goals are specified via keypoint-based state representation that are procedurally generated on the surface of the simulated asset. By meticulously crafting these contact goals, the reward function can effectively guide the robot towards achieving secure and stable object manipulation, mitigating the risk of exploration in vast and complex action spaces.

Divide & Conquer#

Divide and conquer is a powerful strategy for tackling complex problems, particularly in reinforcement learning (RL) where exploration can be challenging. The core idea is to decompose a difficult task into smaller, more manageable subtasks. This approach can significantly improve sample efficiency by reducing the dimensionality of the search space and providing more frequent learning signals. Each subtask can be solved by a specialized policy, and then the knowledge gained from these policies can be integrated into a generalist policy through techniques like distillation. This allows the generalist policy to benefit from the expertise acquired by the specialists, leading to improved performance and generalization. Further the sub-task training will act like Teleoperators for task data collection in the simulation environment, and the generalist policy can act as a centralized model trained from curated data.

Sim2Real Vision#

Sim-to-Real vision is a crucial area in robotics, particularly for vision-based manipulation. The challenge lies in bridging the gap between simulated environments, where training data is abundant, and real-world scenarios, where unforeseen factors such as lighting variations, sensor noise, and unmodeled dynamics exist. Successful sim-to-real transfer necessitates robust perception algorithms that are invariant to these discrepancies. Techniques like domain randomization, where simulation parameters are deliberately varied, can improve a model’s ability to generalize. Another key aspect is the choice of object representation. While high-dimensional data like RGB images capture rich details, they are often more susceptible to the sim-to-real gap. Conversely, lower-dimensional representations such as object pose might be more transferable but lack sufficient information for complex tasks. Combining both sparse and dense information has proven effective. Furthermore, using domain adaptation strategies can help the model adapt to real world quirks.

Robust Humanoids#

Robust Humanoids represent a significant area in robotics, emphasizing their ability to maintain stable performance in various unpredictable and dynamic environments. Achieving robustness involves addressing challenges like balance control, perception under noisy conditions, and adapting to external disturbances. Designing robust humanoids often entails incorporating advanced control algorithms that account for uncertainties, implementing fault-tolerance mechanisms to handle hardware failures, and using robust perception techniques to accurately interpret sensor data despite noise and occlusions. Sim-to-real transfer is crucial, requiring careful modeling of dynamics and randomization to bridge the gap between simulation and the real world. Furthermore, energy efficiency and material strength are vital aspects, ensuring humanoids can operate reliably for extended periods and withstand physical stresses, thus enabling real-world applications in areas such as search and rescue, healthcare, and manufacturing. Research also focuses on robust decision-making, allowing humanoids to adapt their behavior based on changing task requirements and environmental conditions. A key element is the capability to recover from falls and unexpected impacts.

More visual insights#

More on figures

🔼 This figure showcases the successful learning of three dexterous manipulation tasks in a simulated environment using reinforcement learning. The left panel depicts a single-handed grasping task, where the robot hand successfully grasps an object. The middle panel illustrates a box-lifting task requiring the coordination of both hands to lift a box too large for a single hand. The right panel demonstrates a bimanual handover task, where an object is transferred between the robot’s two hands from right to left and then left to right. Each panel shows multiple snapshots of the robot during the task execution, highlighting the robot’s ability to successfully complete these complex manipulation tasks within the simulation.

read the caption

Figure 2: Policies learned in simulation. Left: grasp; middle: box lift; right: bimanual handover (right-to-left, left-to-right).

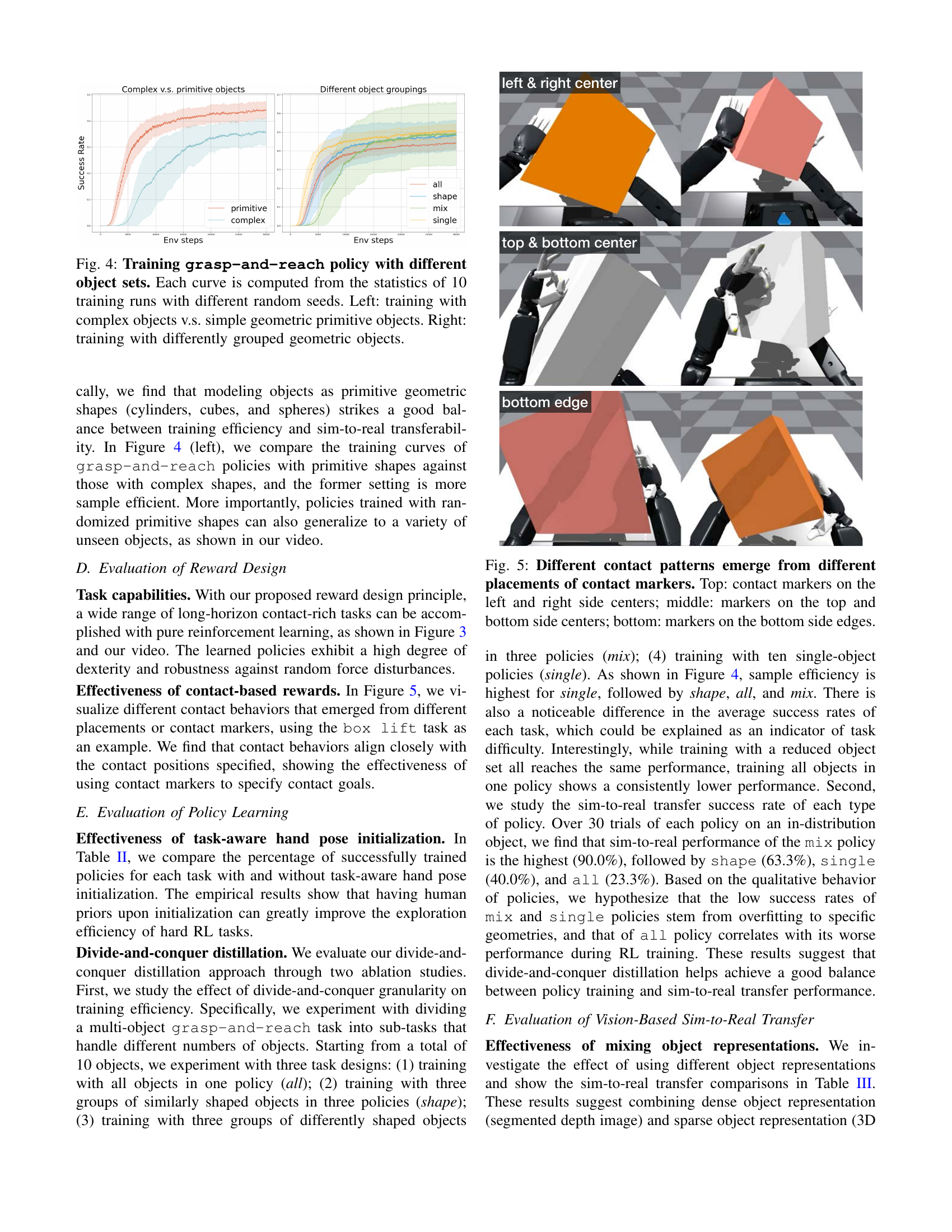

🔼 This figure shows the training curves for a grasp-and-reach policy trained on different sets of objects. The left panel compares training with complex real-world objects versus simpler geometric primitives. The right panel shows how grouping objects into different categories impacts training. Each curve represents the average success rate across 10 independent training runs, each with a different random seed, demonstrating the impact of object complexity and training data organization on learning performance.

read the caption

Figure 3: Training grasp-and-reach policy with different object sets. Each curve is computed from the statistics of 10 training runs with different random seeds. Left: training with complex objects v.s. simple geometric primitive objects. Right: training with differently grouped geometric objects.

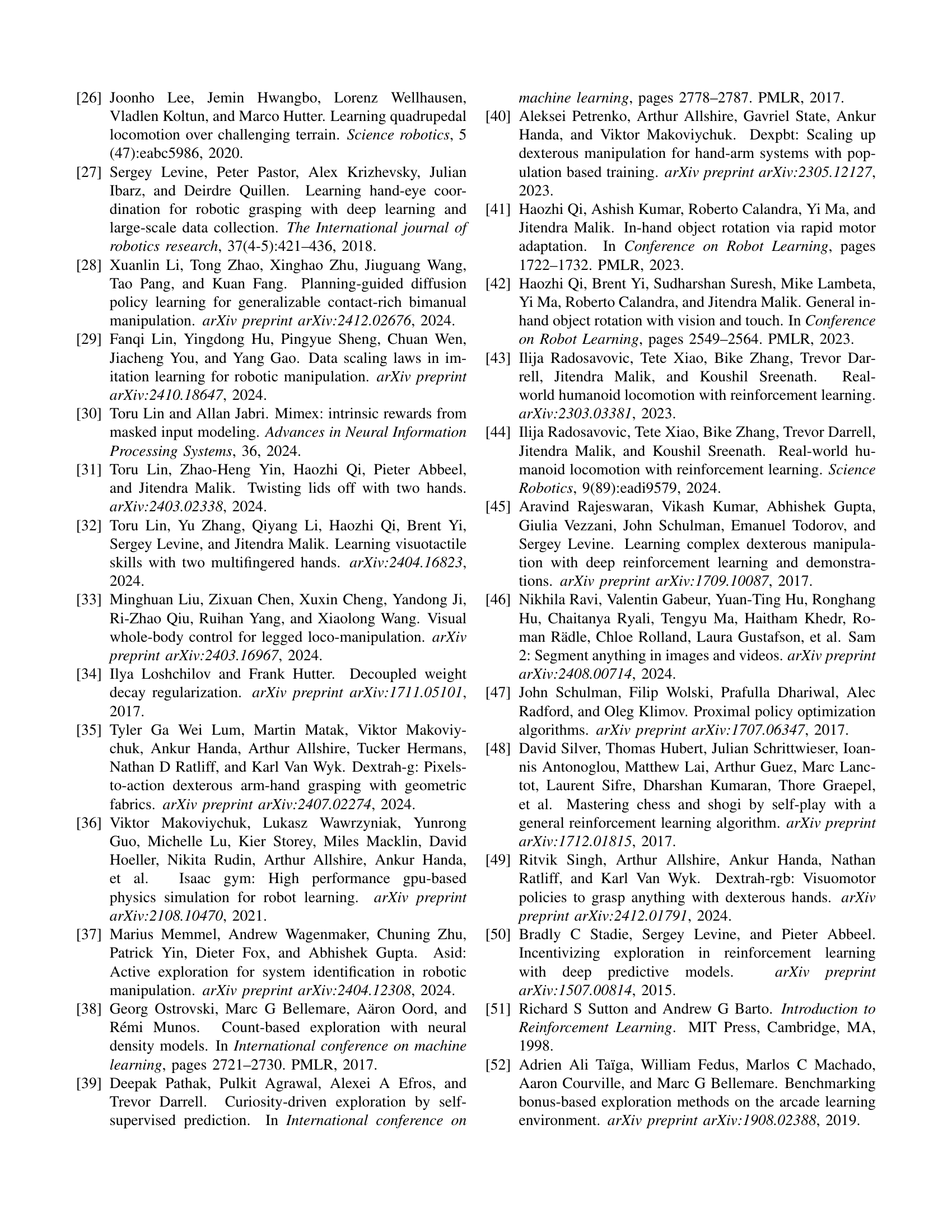

🔼 This figure visualizes how different placements of contact markers on a simulated object influence the resulting contact patterns learned by the robot during training. The top row shows markers placed on the left and right side centers of the object. The middle row shows markers placed on the top and bottom side centers. The bottom row shows markers placed only on the bottom edge. The variations in marker placement demonstrate how the reward function, which is based on these markers, shapes the robot’s learned behavior and resulting contact patterns during dexterous manipulation.

read the caption

Figure 4: Different contact patterns emerge from different placements of contact markers. Top: contact markers on the left and right side centers; middle: markers on the top and bottom side centers; bottom: markers on the bottom side edges.

🔼 This figure demonstrates the robustness of the learned policies against various external disturbances. Four scenarios are shown: a knock, a pull, a push, and a drag. Each of these actions is applied to the object being manipulated by the robot, and the figure shows that in each case, the robot’s learned policy successfully completes the task despite the unexpected force. This highlights the policy’s ability to generalize and adapt to real-world scenarios where perfect control is not always possible.

read the caption

Figure 5: Policy robustness. Our learned policies remain robust under different force perturbations, including knock (top left), pull (top right), push (bottom left), and drag (bottom right).

More on tables

| % Success | Grasping | Lifting | Handover |

|---|---|---|---|

| with Human Init | 80% | 90% | 30% |

| w/o Human Init | 60% | 90% | 0% |

🔼 Table II presents the impact of using human-generated data for initialization on the success rate of training reinforcement learning policies for three dexterous manipulation tasks: grasping, lifting, and handover. The table shows the percentage of successful policies (defined as achieving over 60% success rate across 10 evaluation episodes) when using human-provided initial hand poses versus starting from scratch. Each condition was tested using 10 different random seeds to assess the impact of initialization on policy learning reliability.

read the caption

TABLE II: Initializing with human data. Correlation between the percentage of successfully learned task policies and whether human play data is used for initialization. We define successfully learned policies as those that achieve over 60% episodic success during evaluation. For each task and each initialization setting, we test with 10 random seeds.

| Task | Grasping | Lifting | HandoverA | HandoverB |

| Depth + Pos | ||||

| Pickup | 10 / 10 | 10 / 10 | 10 / 10 | 10 / 10 |

| Task Success | 10 / 10 | 10 / 10 | 9 / 10 | 5 / 10 |

| Depth Only | ||||

| Pickup | 2 / 10 | 0 / 10 | 0 / 10 | 0 / 10 |

| Task Success | 2 / 10 | 0 / 10 | 0 / 10 | 0 / 10 |

🔼 This table compares the success rates of sim-to-real transfer for two different robot control policies: one using both depth images and 3D object position (Depth + Pos), and another using only depth images (Depth Only). The experiment focuses on three tasks: Grasping, Lifting, and Bimanual Handover. The Bimanual Handover task is further divided into two subtasks (HandoverA and HandoverB) because of its longer time horizon. An intermediate success metric, ‘Pickup Success’, is also included to show how often the robot successfully grasps the object. The results indicate that incorporating low-dimensional 3D position information alongside depth data significantly improves the sim-to-real transfer performance.

read the caption

TABLE III: Comparison of sim-to-real transfer performance between depth-and-position policy and depth-only policy. We separate the bimanual handover task into two columns due to its longer horizon. The pickup success is an intermediate success metric that measures how often the hands successfully pick up the object of interest. We find that combining low-dimensional representation (3D object position) with depth enables easier sim-to-real transfer.

| Object: Mass (kg) | [0.03, 0.1] |

|---|---|

| Object: Friction | [0.5, 1.5] |

| Object: Shape | |

| Object: Initial Position (cm) | |

| Object: Initial -orientation | |

| Hand: Friction | [0.5, 1.5] |

| PD Controller: P Gain | |

| PD Controller: D Gain | |

| Random Force: Scale | 2.0 |

| Random Force: Probability | 0.2 |

| Random Force: Decay Coeff. and Interval | 0.99 every 0.1s |

| Object Pos Observation: Noise | 0.02 |

| Joint Observation Noise. | |

| Action Noise. | |

| Frame Lag Probability | 0.1 |

| Action Lag Probability | 0.1 |

| Depth: Camera Pos Noise (cm) | 0.005 |

| Depth: Camera Rot Noise (deg) | 5.0 |

| Depth: Camera Field-of-View (deg) | 5.0 |

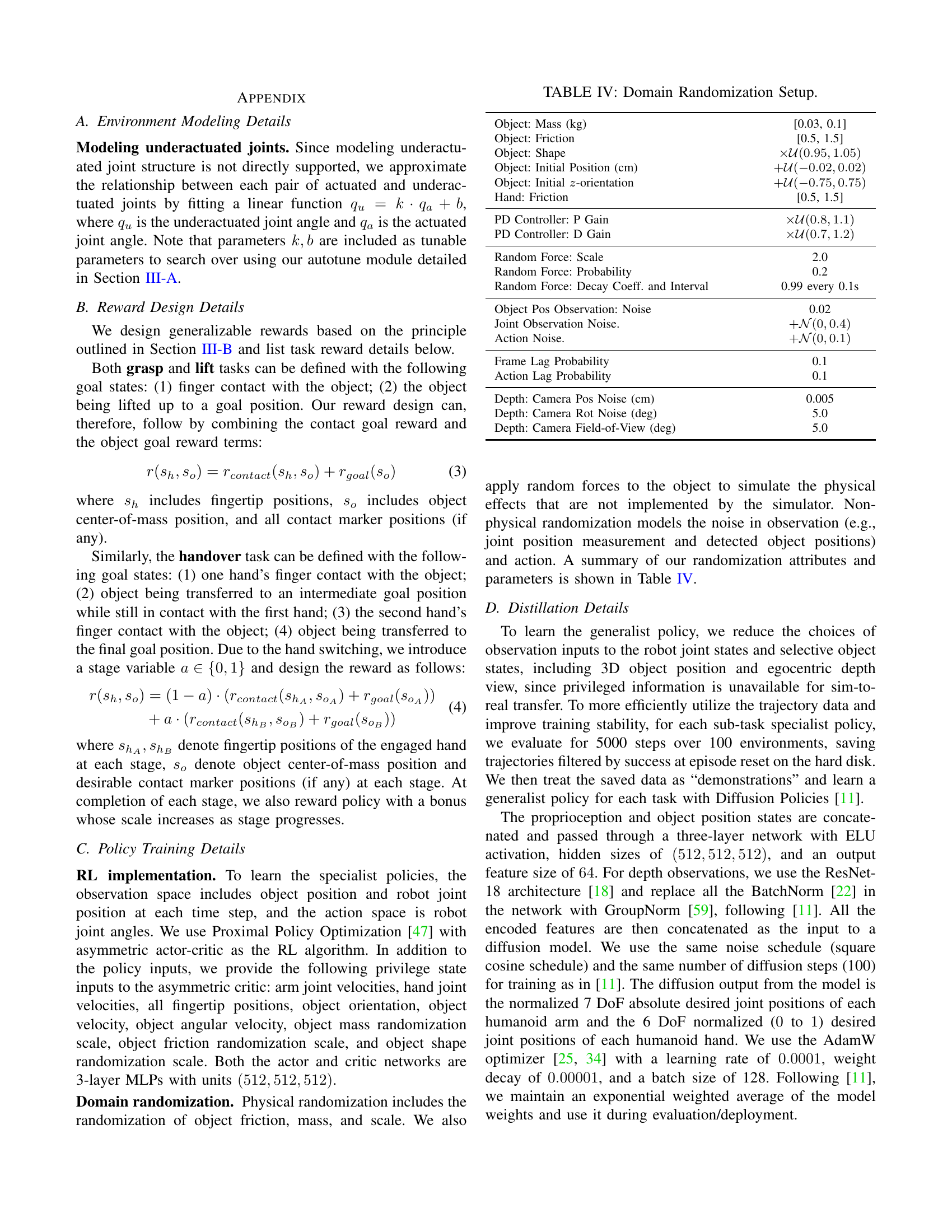

🔼 Table IV details the various parameters modified during domain randomization in the simulation environment. These parameters encompass object properties (friction, mass, shape, initial position and orientation), hand properties (friction), control parameters (PD controller gains), and random force parameters (scale, probability, and decay coefficient). Additionally, it includes noise added to object position observations, joint position observations, actions, and frame/action lag probabilities. Finally, it lists parameters related to the depth camera, including position, rotation and field-of-view noise.

read the caption

TABLE IV: Domain Randomization Setup.

Full paper#