TL;DR#

Large Language Models (LLMs) have shown promise in task planning, but struggle with long-horizon tasks and dynamic environments. Existing systems lack adaptability and real-time environmental awareness, hindering their robustness in real-world scenarios. To tackle this, current integrations still face limits in feedback quality and struggle with continuous adaptation and deployment complexities.

This paper introduces CLEA, a novel architecture using four specialized LLMs for closed-loop task management. It features an interactive task planner for dynamic subtask generation and a multimodal execution critic for assessing action feasibility. CLEA enhances robustness in object search and manipulation tasks, outperforming baselines with significant improvements in success and completion rates.

Key Takeaways#

Why does it matter?#

This paper introduces CLEA, advancing embodied AI by enhancing task planning & execution in dynamic environments. Its closed-loop architecture & open-source LLMs offer researchers a robust, reproducible platform for complex robotic tasks, pushing forward real-world AI applications.

Visual Insights#

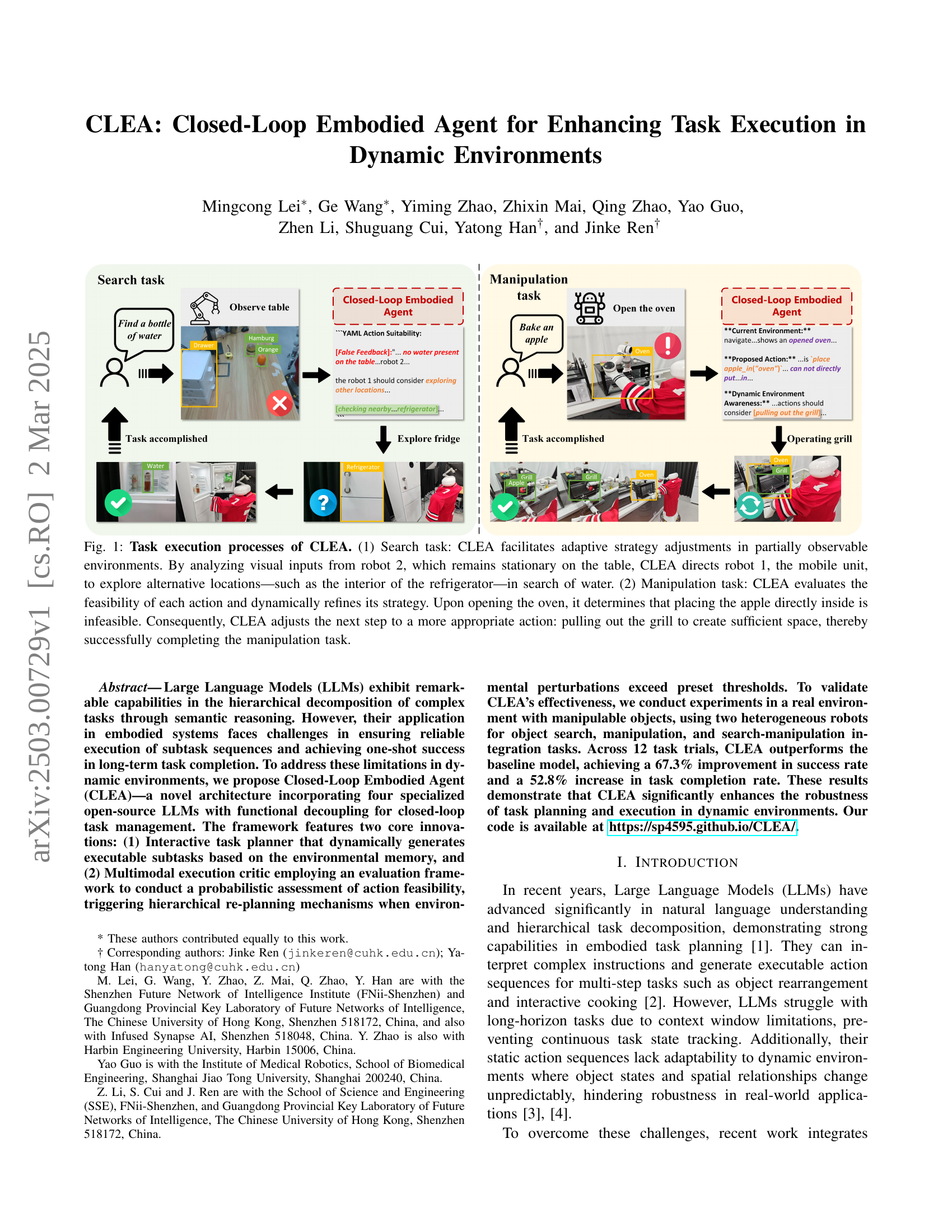

🔼 CLEA’s architecture is depicted in this figure. Environmental data is received by the Observer (VLM), which then sends it to the Summarizer (LLM). This module creates a memory representation that is used by the Planner (LLM) to create an initial sequence of actions based on the robot’s skill set and memory. The Critic (VLM) examines the actions’ feasibility and proposes alternative plans as necessary in response to changing conditions in the environment. This closed-loop system enables continuous adaptation to real-time situations.

read the caption

Figure 2: Overview of CLEA. The observer (VLM) provides environmental data, which the summarizer (LLM) processes into memory. The planner (LLM) generates an initial action sequence based on the robot’s skill pool and memory, while the critic (VLM) evaluates action feasibility and offers re-plan recommendations in response to environmental dynamics.

| Skill pool | Description |

|---|---|

| open(robot, openable_object) | robot open object |

| close(robot, openable_object) | robot close object |

| pick_from(robot, object, space) | robot pick object from space |

| release_to(robot, space) | robot release the object on its hand |

| to space | |

| go_to(robot, navi_point) | robot navigate to navigation point |

🔼 This table lists the predefined skills available to the robots within the CLEA system’s environment. Each skill is a function call that the planner can use to direct the robots’ actions. These skills encompass basic functionalities such as opening and closing containers, picking up and releasing objects, and navigating to specific points in the environment. The descriptions provide concise explanations of each skill’s function.

read the caption

TABLE I: Predefined skill pool in the environment

In-depth insights#

CLEA: Overview#

Based on the text, the CLEA framework is illustrated as having three main components: an observer, a memory module, and a planner-critic agent. The observer is key for converting visual data into a format usable by the language models, bridging the gap between what the robot sees and what the language model can understand. The memory module maintains a structured belief about the environment, using a history buffer of interactions and a summarizer to create beliefs based on this history. The planner-critic agent then tackles dynamic planning, divided into two sub-parts: the planner, which sets sub-goals and action sequences, and the critic, which evaluates the plan at each step, re-adjusting as needed. CLEA helps the robot understand its behavior, recognize when its current strategy is suboptimal, and correct in real-time.

Planner-Critic#

The Planner-Critic module is a crucial component for closed-loop decision-making in embodied agents. It likely involves two sub-modules: the Planner, responsible for generating action sequences based on current beliefs and environmental information, and the Critic, which evaluates the feasibility and effectiveness of those actions in real-time. The Planner likely employs hierarchical planning, generating sub-goals and action sequences to achieve them, while the Critic leverages sensory input and contextual understanding to assess action validity. The Critic’s feedback is essential for re-planning and adapting to dynamic environments, ensuring robustness and flexibility. The interaction between these modules enables the agent to dynamically adjust its plan in response to unexpected events or environmental changes, thereby facilitating successful task completion and error recovery.

Env. Dynamics#

In embodied AI, environmental dynamics pose significant challenges. Traditional task planning often struggles with unpredictable changes in object states and spatial relationships, leading to failures in long-horizon tasks. Robustness necessitates continuous adaptation through closed-loop feedback mechanisms, where agents perceive the environment, reason about actions, and execute accordingly. Key considerations include handling partial observability, as robots only have limited sensory input, and maintaining consistent task state tracking despite environmental perturbations. Incorporating memory and predictive models can aid in anticipating changes and refining plans in real-time. Addressing these complexities is crucial for developing truly adaptable and reliable robotic systems operating in dynamic real-world scenarios where disturbances frequently occur.

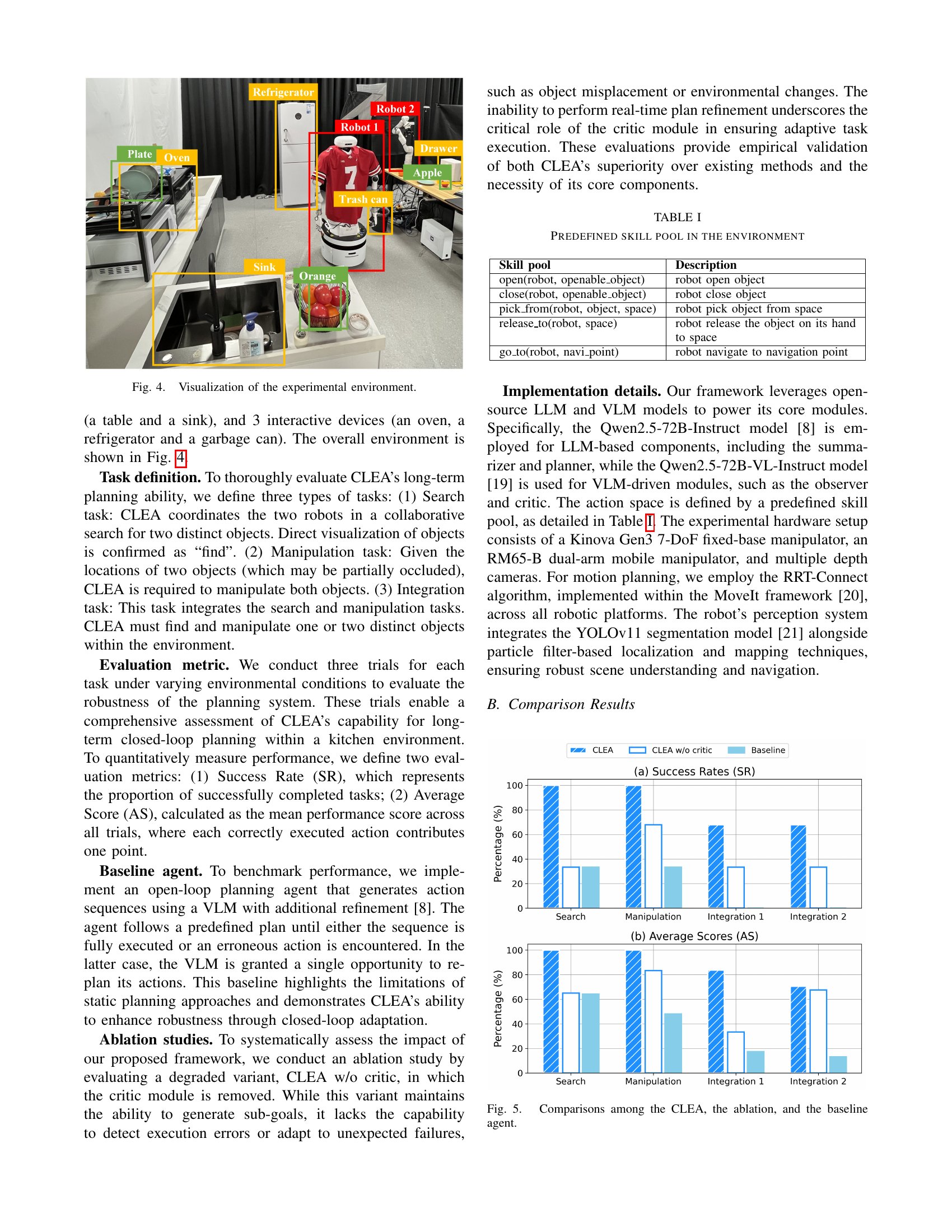

Robustness study#

Robustness in embodied AI systems, like CLEA, is paramount for real-world deployment. It entails the ability to maintain performance despite environmental changes, object misplacements, and unexpected robot behaviors. A rigorous robustness study involves testing the agent across diverse scenarios and tasks. Key metrics include success rate and average score, reflecting both task completion and action efficiency. Ablation studies are crucial for identifying the contribution of specific modules, such as the critic, in ensuring robustness. Analyzing failure modes, like outdated actions or critic errors, pinpoints areas for improvement. Benchmarking against simpler open-loop agents highlights the advantages of closed-loop planning in handling dynamic environments. Understanding robustness in embodied AI systems is essential for trustworthy application.

Failure analysis#

The failure analysis section of the paper offers valuable insights into the limitations and potential areas for improvement in the CLEA framework. The identification of “Invalid actions” as the most frequent failure mode highlights a crucial area where the LLM struggles with adhering to the predefined action format. This suggests a need for refining the interface between the LLM planner and the robotic platform, potentially through improved prompt engineering or a more flexible action representation. The “Critic failures”, where the critic fails to identify improper actions, underscores the limitations in the VLM’s perceptual capabilities. This calls for exploring more advanced visual reasoning techniques or incorporating additional sensory input to enhance the critic’s ability to accurately assess the environment and action feasibility. The “Multi-robot collaboration issues” point to a challenge in coordinating multiple agents, indicating that the LLMs are not particularly adept at understanding and managing complex inter-robot relationships. This suggests a direction for future work involving incorporating more sophisticated multi-agent reasoning capabilities into the CLEA framework.

More visual insights#

More on figures

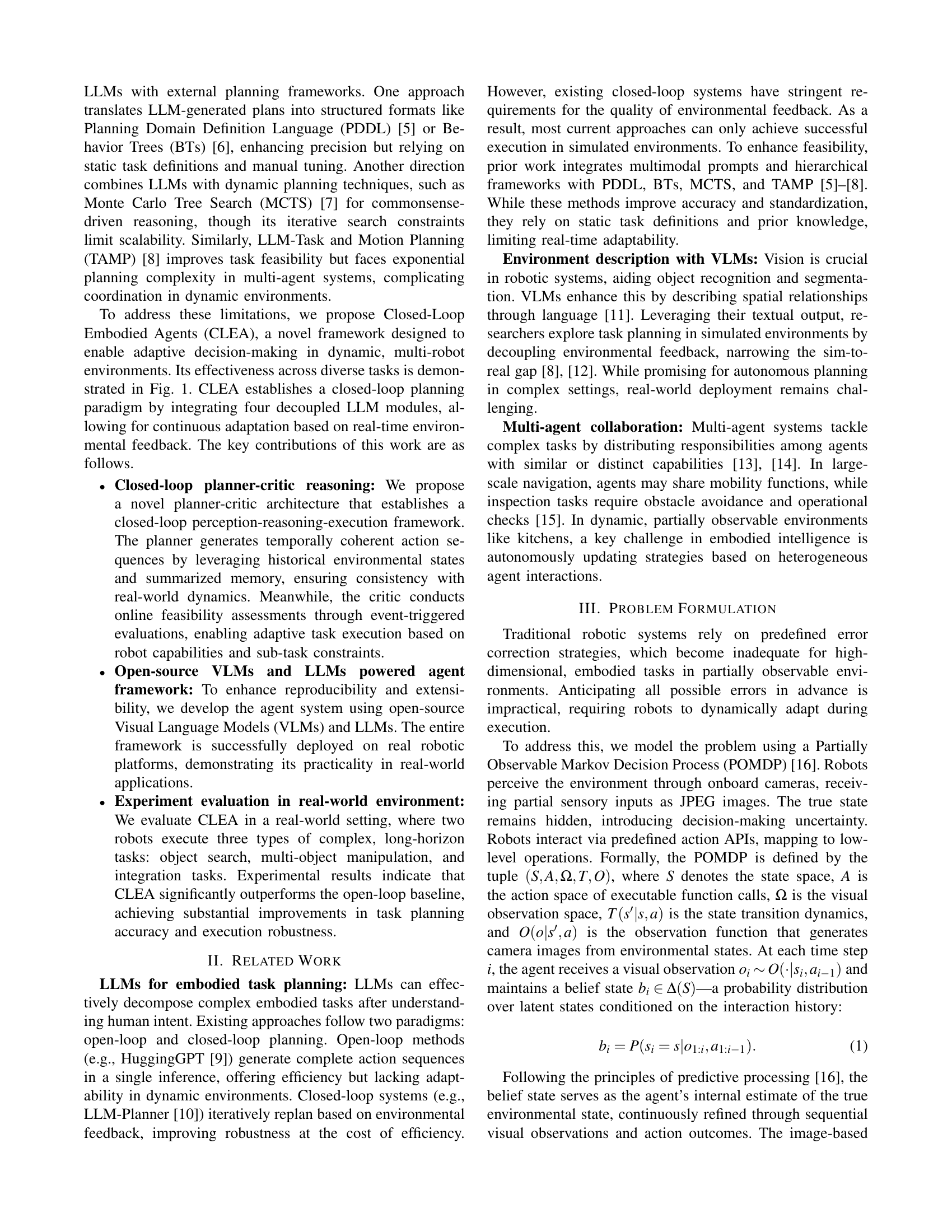

🔼 Figure 3 illustrates CLEA’s closed-loop reasoning process. Unlike traditional systems that simply halt upon detecting a failure (like not finding medication), CLEA performs internal reasoning. When the robot doesn’t find medication in the drawer, the critic module analyzes the situation. Instead of stopping, the critic suggests alternative actions, such as checking other compartments, enabling the robot to adapt and continue toward task completion.

read the caption

Figure 3: The reasoning and output of CLEA. Unlike traditional failure-detection classification systems, CLEA performs internal reasoning upon receiving visual input and provides structured outputs. In the case where no medication is found in an empty drawer, the planner does not halt its intent. Instead, the critic suggests exploring alternative locations and provides the correct advice to check other compartments of the drawer, thereby guiding the successful completion of the task.

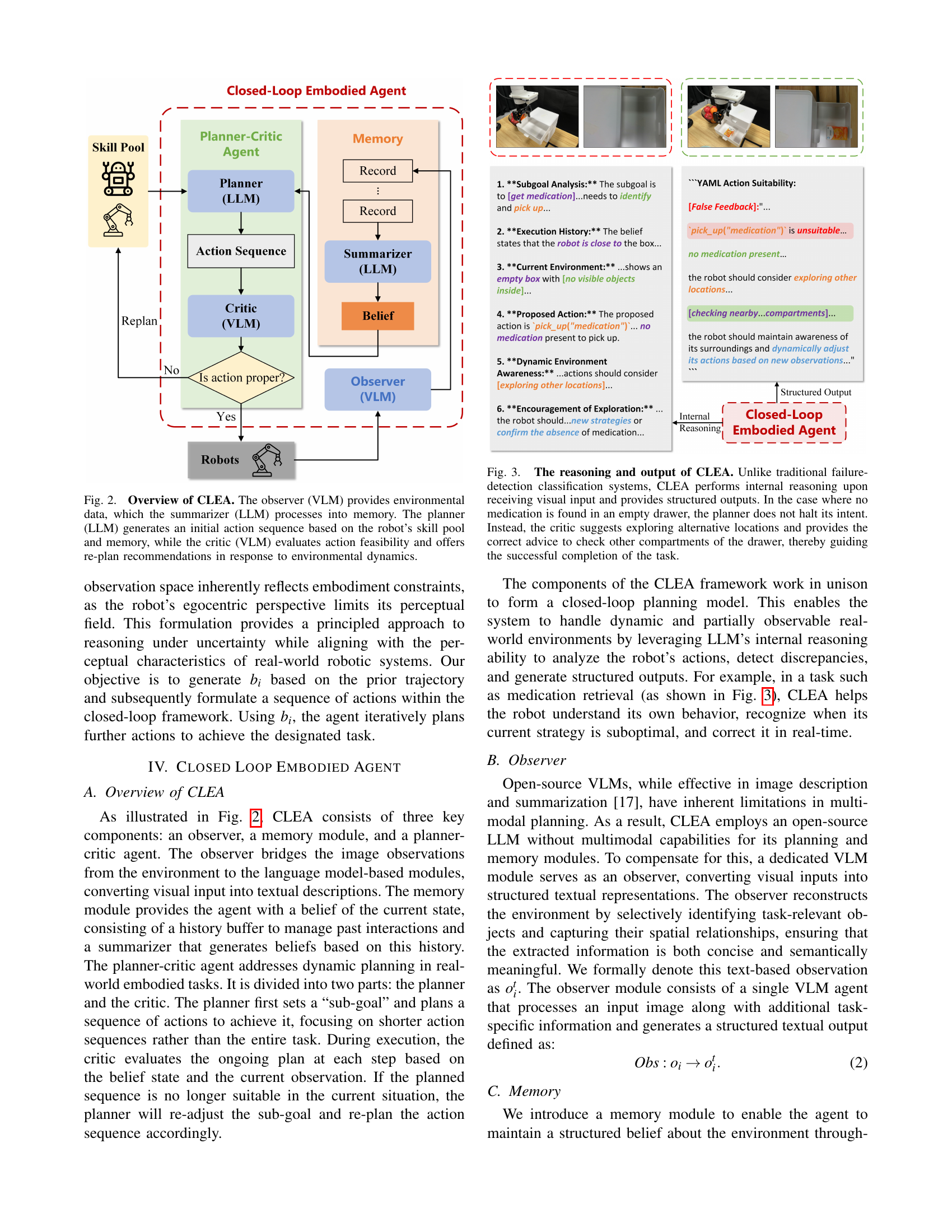

🔼 The figure visualizes the experimental setup used in the paper. It shows a kitchen-like environment with various objects, such as a refrigerator, oven, drawer, trash can, sink, plate, apple, and orange. Two robots, a dual-arm robot and a single-arm robot, are included, highlighting the multi-robot aspect of the experiments. The environment is designed to be partially observable and dynamic to test the robustness of the proposed system.

read the caption

Figure 4: Visualization of the experimental environment.

🔼 Figure 5 presents a comparative analysis of the performance of three different agents across three types of tasks: search, manipulation, and integration tasks. The three agents are: CLEA (the proposed closed-loop embodied agent), CLEA w/o critic (an ablation study removing the critic module from CLEA), and a baseline open-loop agent. The bar charts visually compare the Success Rates (SR) and Average Scores (AS) achieved by each agent on each task type. This allows for a direct comparison of the effectiveness of the closed-loop approach (CLEA) compared to the open-loop baseline and the importance of the critic module within the CLEA architecture.

read the caption

Figure 5: Comparisons among the CLEA, the ablation, and the baseline agent.

More on tables

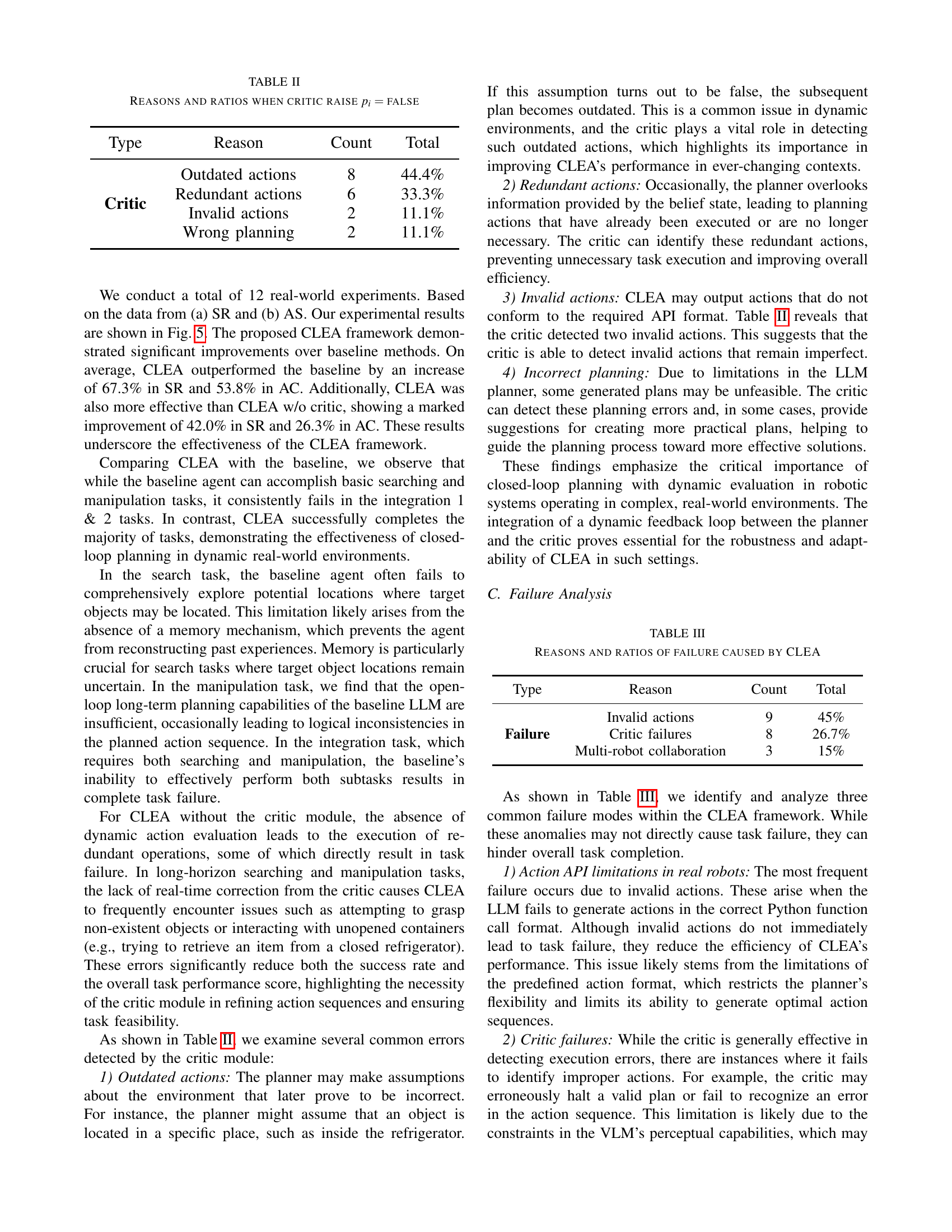

| Type | Reason | Count | Total |

|---|---|---|---|

| Critic | Outdated actions | 8 | 44.4% |

| Redundant actions | 6 | 33.3% | |

| Invalid actions | 2 | 11.1% | |

| Wrong planning | 2 | 11.1% |

🔼 This table presents a breakdown of the reasons why the critic module in CLEA (Closed-Loop Embodied Agent) flags an action as unsuitable (pi = false). It categorizes the instances of unsuitable actions into four main reasons: outdated actions, redundant actions, invalid actions, and wrong planning. For each reason, the table shows the count of occurrences and the percentage of the total number of instances where the critic raised a false flag.

read the caption

TABLE II: Reasons and ratios when critic raise pi=falsesubscript𝑝𝑖falsep_{i}=\text{false}italic_p start_POSTSUBSCRIPT italic_i end_POSTSUBSCRIPT = false

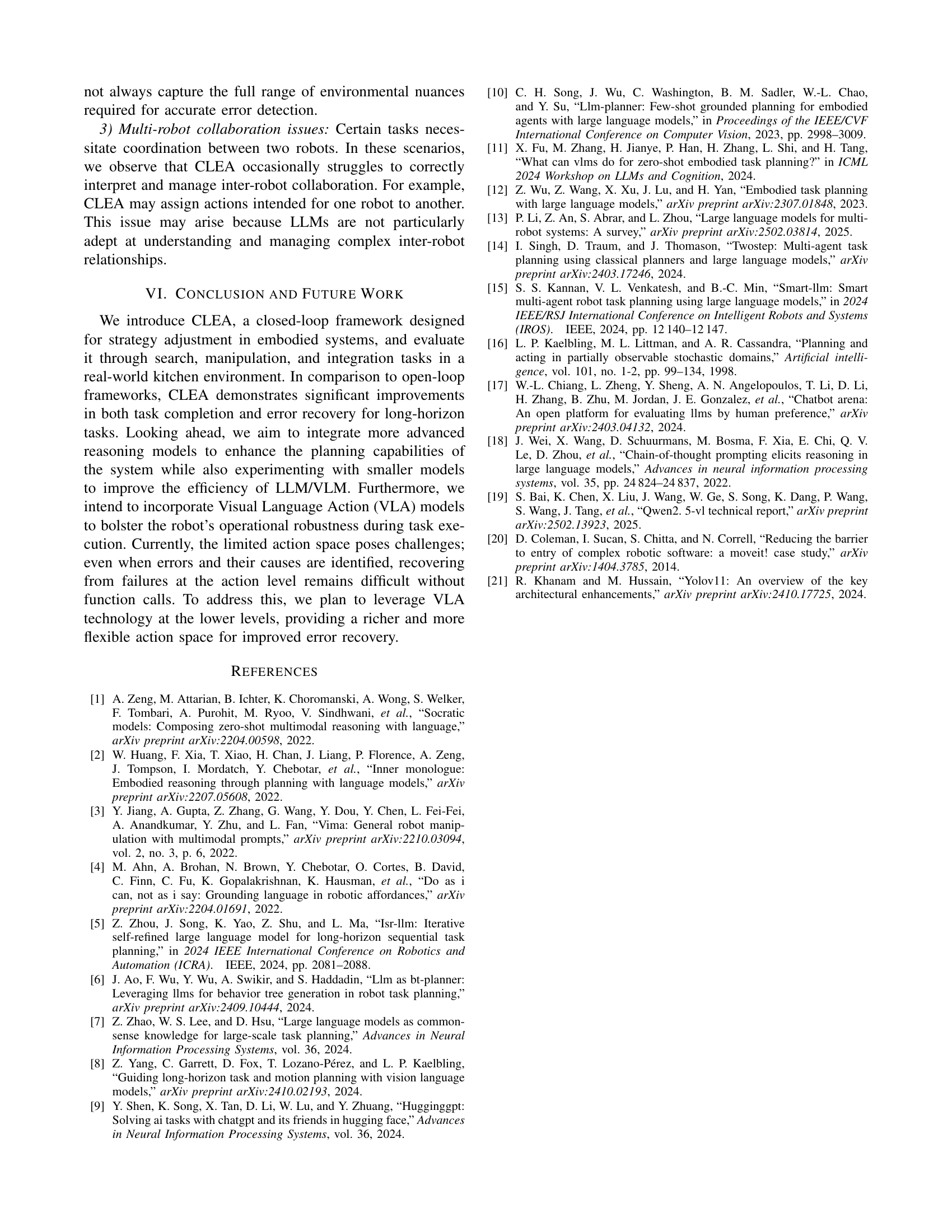

| Type | Reason | Count | Total |

|---|---|---|---|

| Failure | Invalid actions | 9 | 45% |

| Critic failures | 8 | 26.7% | |

| Multi-robot collaboration | 3 | 15% |

🔼 This table presents a failure analysis of the CLEA system, categorizing the reasons behind its failures and providing the count and percentage for each category. The categories include invalid actions generated by the system, failures originating from the critic module (which assesses action feasibility), and issues related to multi-robot collaboration.

read the caption

TABLE III: Reasons and ratios of failure caused by CLEA

Full paper#