TL;DR

Instruction tuning refines language models on high-quality data, yet automated data selection methods have mostly been tested on small datasets and may not scale to the millions of samples used in practice. This paper systematically studies how well existing data selection methods perform as both the selection size and the data pool grow. It selects up to 2.5M samples from pools of up to 5.8M samples and evaluates the resulting models on 7 diverse tasks.

The study reveals that many recent methods fall short of random selection and even decline in performance with larger pools, despite using more compute. In contrast, a variant of representation-based data selection (RDS+), which uses weighted mean pooling of pretrained LM hidden states, consistently outperforms more complex methods across all settings tested. RDS+ is also more compute-efficient, which highlights the importance of examining the scaling properties of data selection methods.
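As a rough illustration of the weighted mean pooling mentioned above, the sketch below collapses per-token hidden states into a single embedding. The linear position weights, the function name `weighted_mean_pool`, and the toy arrays are assumptions made for illustration; the summary does not specify the exact weighting scheme.

```python
import numpy as np


def weighted_mean_pool(hidden_states: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Pool (seq_len, dim) hidden states into one (dim,) vector.

    Assumption: weights grow linearly with token position (1, 2, ..., n),
    so later tokens count more. Padding positions receive zero weight.
    """
    positions = np.arange(1, hidden_states.shape[0] + 1, dtype=np.float64)
    weights = positions * attention_mask      # zero out padding positions
    weights = weights / weights.sum()         # normalize so weights sum to 1
    return weights @ hidden_states            # weighted average over tokens


# Toy input standing in for the hidden states of one example (hypothetical values).
hidden = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [9.0, 9.0]])
mask = np.array([1, 1, 1, 0])  # the last position is padding
embedding = weighted_mean_pool(hidden, mask)
print(embedding)
```

In a real pipeline the hidden states would come from a pretrained LM rather than a hand-written array; the pooling step itself stays the same.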

Key Takeaways

Why does it matter?

This work matters to researchers because it evaluates data selection methods at scales that mirror real-world instruction tuning. It exposes scaling problems in existing techniques and introduces a robust, efficient alternative for building more effective and scalable LM training pipelines.

Visual Insights

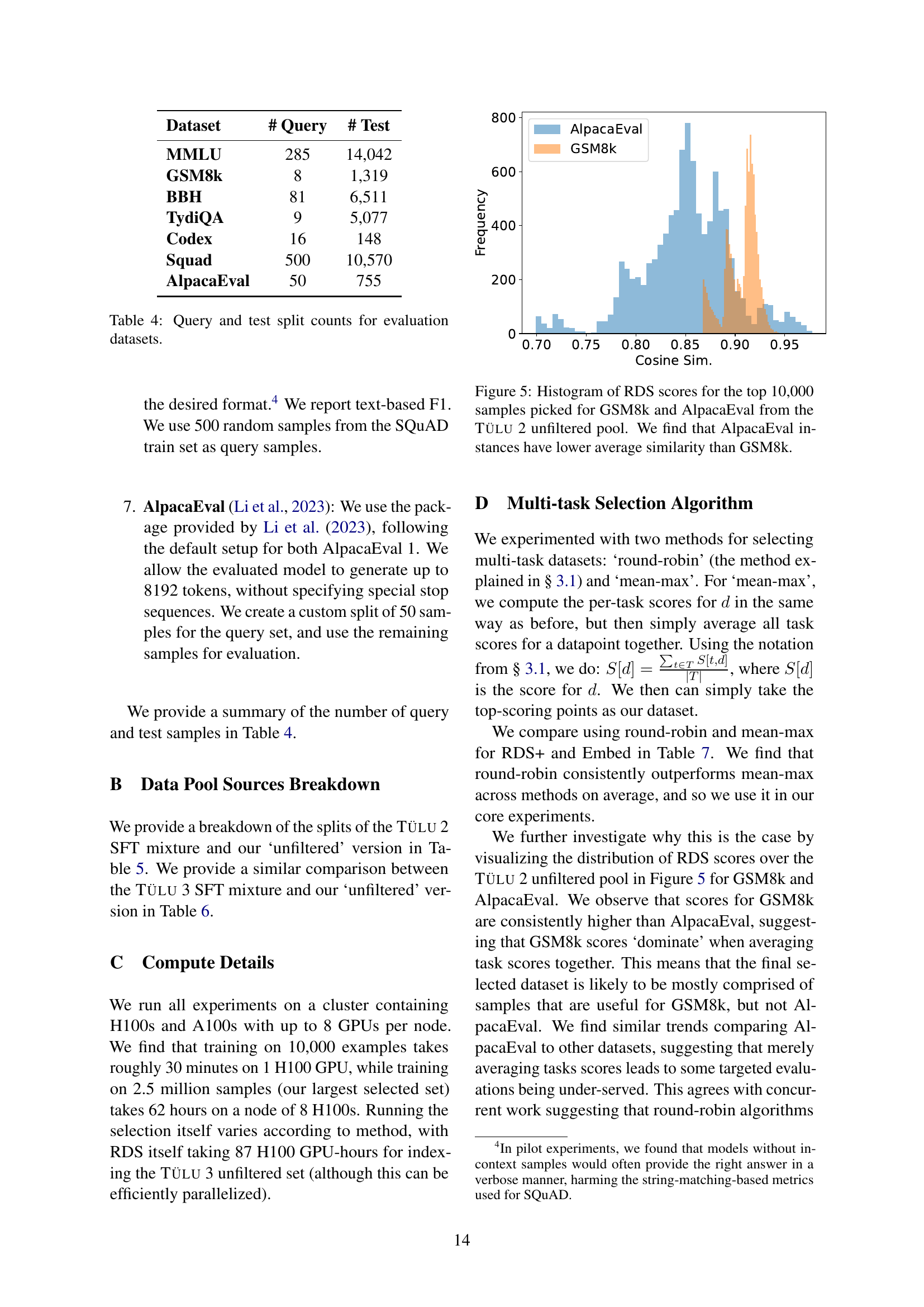

🔼 This figure compares the performance and computational cost of various data selection methods for instruction tuning. It shows the average performance achieved when selecting 10,000 data points from two data pools of different sizes: one with 200,000 samples and a much larger one with 5.8 million samples. The x-axis represents the estimated computational cost (FLOPs), while the y-axis shows the average performance across seven different tasks. The plot reveals that most methods either fail to improve or even decrease in performance when given access to a larger data pool, despite the increased computation. Notably, RDS+ and Embed (GTR) improve with larger pools. The Pareto frontier (the best trade-off between performance and efficiency) is shaded in red.

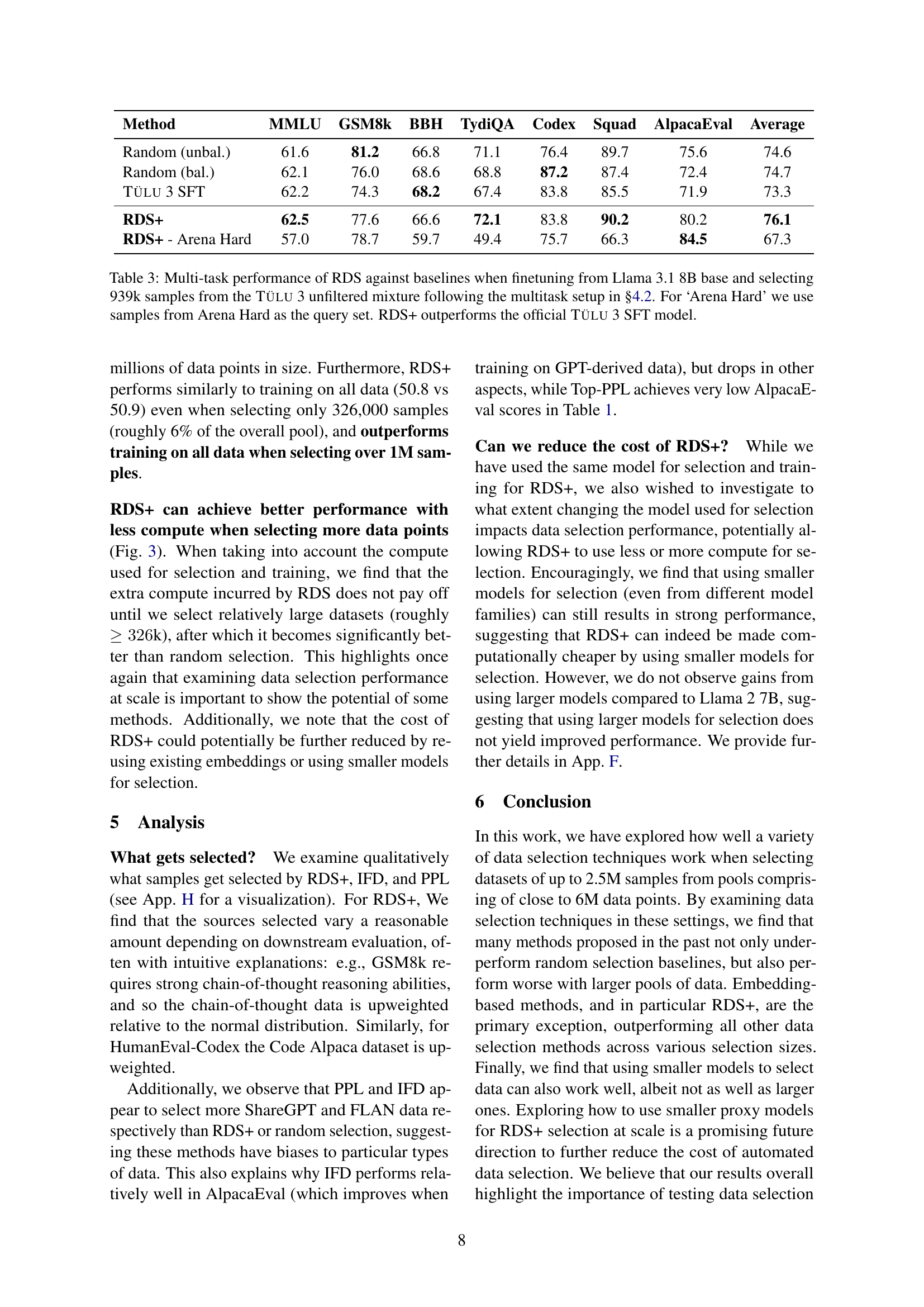

Figure 1: Performance against estimated compute cost of varied data selection methods when selecting 10k points from data pools consisting of 200k (left points) and 5.8M (right points) data points in the single-task setup described in §4.1. We do not run LESS with 5.8M samples due to its high compute cost. Most data selection methods do not improve in performance with a larger pool, with the exception of RDS+ and Embed (GTR). We shade the Pareto frontier of efficiency and performance in red.
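To make the notion of a Pareto frontier in Figure 1 concrete, the following sketch finds the methods that no other method beats on both cost and performance. The method names and numbers are hypothetical placeholders, not values read from the figure.

```python
from typing import List, Tuple


def pareto_frontier(points: List[Tuple[str, float, float]]) -> List[str]:
    """Return the names of methods that are not dominated by any other method.

    Each point is (name, cost, performance). A point is dominated when another
    point has cost <= its cost and performance >= its performance, with at
    least one of the two inequalities strict.
    """
    frontier = []
    for name, cost, perf in points:
        dominated = any(
            (c <= cost and p >= perf) and (c < cost or p > perf)
            for _, c, p in points
        )
        if not dominated:
            frontier.append(name)
    return frontier


# Hypothetical (cost in FLOPs, average score) pairs, NOT values from the paper.
methods = [
    ("random", 1e15, 40.0),
    ("method_a", 5e16, 39.0),
    ("method_b", 2e16, 44.0),
    ("method_c", 8e17, 43.0),
]
print(pareto_frontier(methods))
```

Here `method_a` and `method_c` drop out because a cheaper method scores at least as well, which mirrors how a method that uses more compute without improving performance falls off the frontier.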

| Dataset | # Query | # Test |
| --- | ---: | ---: |
| MMLU | 285 | 14,042 |
| GSM8k | 8 | 1,319 |
| BBH | 81 | 6,511 |
| TydiQA | 9 | 5,077 |
| Codex | 16 | 148 |
| Squad | 500 | 10,570 |
| AlpacaEval | 50 | 755 |

🔼 This table presents a comparison of various data selection methods for instruction tuning, focusing on single-task performance. It evaluates the performance of language models trained on 10k data points selected from the Tülu 2 unfiltered dataset (both a downsampled version and the full set). The table shows the performance achieved by each data selection method across seven different tasks, highlighting the effectiveness of the methods in producing high-performing models. The results reveal that Representation-based Data Selection (RDS+) consistently outperforms other methods, including computationally more expensive ones such as LESS.

Table 1: Single-task performance of different data selection techniques over the Tülu 2 unfiltered set. Each cell reports the performance of a model trained with 10k samples chosen for that particular target task. We show results selecting from a downsampled form or full set of the Tülu 2 unfiltered set. We find RDS performs best overall, even beating more computationally expensive methods like LESS.

In-depth insights

RDS+ Scales Best

RDS+ likely scales best because it makes effective use of pretrained knowledge. As the data pool grows, methods that rely on loss or gradient signals may overfit to noise, whereas RDS+ benefits from a richer representation space that captures broader patterns, and its weighted pooling likely aids generalization. Spending extra compute on selection is only justified when the method delivers better results, so examining performance across both data and compute scales is what reveals practical benefit. Choosing a selection method that scales well is therefore essential.

Data Pool Impacts

Data pool composition strongly affects how well data selection methods work. Larger, more diverse pools, such as the unfiltered sets, offer both opportunities and challenges. They make more high-quality data available for selection, which can improve model performance, but some selection methods struggle to scale to them or even decline in performance. Evaluating methods across different pools reveals their true scaling properties and generalizability, which should inform the choice of selection method when building large-scale instruction-tuned models.

Scale Needs Exam

The paper highlights the need to examine the scaling properties of automated data selection techniques for instruction tuning. Many existing methods that are effective at small scales falter or even degrade when applied to larger datasets and data pools, and some underperform random selection at these scales. Strong performance at small scale therefore does not guarantee success when scaling up. RDS+ is a notable exception, improving consistently as the data pool grows. Because scaling behavior and computational cost only become visible at larger scales, small-scale evaluation alone is insufficient: techniques should be validated at the scale at which they will be used.

Comp. Cost Matters

Computational cost is a crucial factor in data selection for instruction tuning, especially at scale. Many data selection methods underperform random selection even though they spend additional compute on the selection step, so a method must deliver gains large enough to justify that overhead. RDS+ balances performance and cost: it outperforms random selection and is more FLOPs-efficient at larger selection sizes. Smaller proxy models can further reduce selection cost. Performance should therefore be evaluated with the selection compute included.
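One way to reason about the cost trade-off above is to add selection compute to training compute. The sketch below uses the common rule of thumb of roughly 2 FLOPs per parameter per token for a forward pass and 6 for a training step; this is an assumption for illustration and may differ from the paper's own estimates. The model size, token count, and function names are hypothetical.

```python
def forward_flops(n_params: float, n_tokens: float) -> float:
    """Approximate FLOPs for a forward pass (rule of thumb: 2 * params * tokens)."""
    return 2.0 * n_params * n_tokens


def training_flops(n_params: float, n_tokens: float, epochs: int = 1) -> float:
    """Approximate FLOPs for training (rule of thumb: 6 * params * tokens per epoch)."""
    return 6.0 * n_params * n_tokens * epochs


def total_cost(n_params: float, pool_size: float, selected_size: float,
               tokens_per_sample: float, epochs: int = 1) -> float:
    """Selection cost plus training cost.

    Assumption: selection needs one forward pass over every sample in the pool,
    as an embedding-based method would.
    """
    selection = forward_flops(n_params, pool_size * tokens_per_sample)
    training = training_flops(n_params, selected_size * tokens_per_sample, epochs)
    return selection + training


# Hypothetical setup: 8e9 parameters, 512 tokens per sample, 10k samples chosen from 5.8M.
cost_with_selection = total_cost(8e9, 5.8e6, 1e4, 512)
cost_random = training_flops(8e9, 1e4 * 512)  # random selection has no scoring pass
print(f"{cost_with_selection:.3e} vs {cost_random:.3e}")
```

Under these assumptions the scoring pass over a large pool dominates the total when only a small subset is selected, which is why a selection method has to pay for itself in downstream performance.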

Mix Tuning Robust

‘Mix Tuning Robust’ likely refers to building models that perform consistently well across diverse datasets and tasks. Robustness here means resilience to variation in data quality, distribution, and task complexity. A successful mix tuning strategy would carefully select and weight different datasets during training so that the model does not overfit to specific domains or memorize narrow patterns, yet still generalizes effectively. Techniques such as curriculum learning or dynamic data weighting could help. Evaluation matters as well: testing the model on datasets it was not explicitly trained on gives a more accurate picture of its generalization.

More visual insights

More on figures

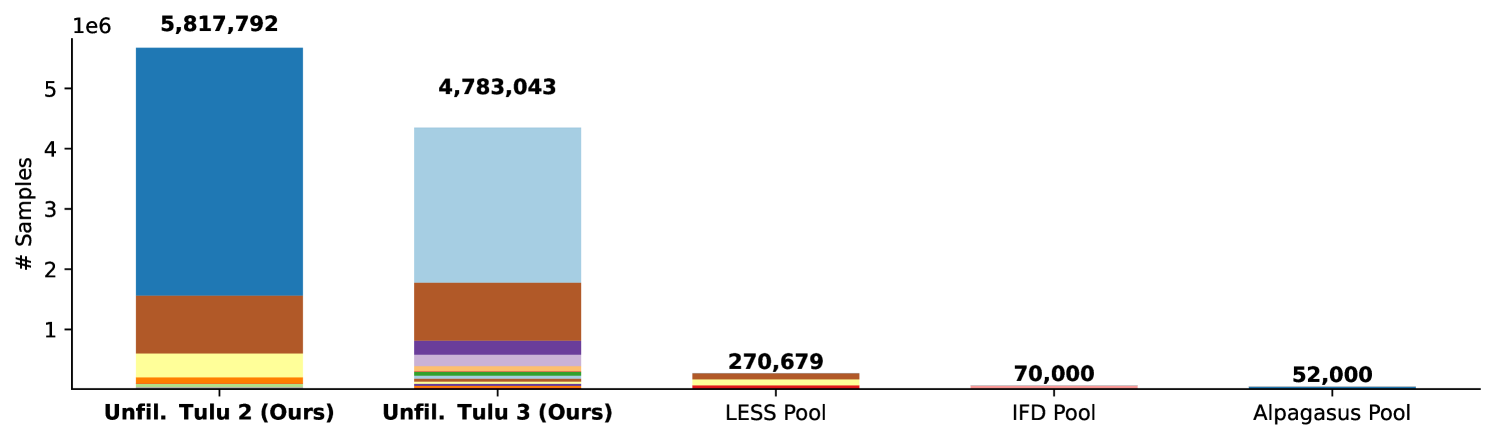

🔼 This figure compares the size and composition of the datasets used in this research with those from prior studies. The datasets in this paper, ‘Unfiltered TÜLU 2’ and ‘Unfiltered TÜLU 3’, are significantly larger and more diverse than those in previous works (Xia et al., 2024; Chen et al., 2024; Li et al., 2024b). The figure uses a stacked bar chart to visualize the relative proportions of different data sources within each dataset pool. The total number of samples in each pool is shown above each bar. More details about the composition of these datasets are available in Appendix B.

Figure 2: Size and makeup of data pools considered in this work (unfiltered Tulu 2, 3) and in past work (Xia et al., 2024; Chen et al., 2024; Li et al., 2024b). We provide the size of each pool on top of each bar. Each color represents a different dataset. See App. B for more details on data pool composition.

🔼 This figure compares the average performance across multiple tasks of two data selection methods: balanced random sampling and RDS+. The x-axis shows the estimated FLOPs (floating point operations) cost, encompassing both the data selection process and model training. The y-axis displays the resulting average performance. Different points on the graph represent using varying percentages of the total data pool for selection. The key finding illustrated is that RDS+ consistently outperforms balanced random sampling, particularly when fewer data points are selected, and becomes significantly more FLOP-efficient as the amount of selected data increases.

Figure 3: Average multi-task performance against FLOPs cost (including selection) for balanced random and RDS+. We label points with the % of the total data pool used. RDS+ outperforms random selection significantly when selecting less data, and is more FLOPs efficient at larger selection sizes. See App. E for details on FLOPs estimates.

🔼 This figure displays the average performance across multiple tasks for different numbers of samples selected from the data pool. The performance of the RDS+ method (a type of data selection method) is compared against balanced random sampling. The x-axis represents the number of samples used, ranging from a small subset to the entire data pool. The y-axis shows the average performance across the multiple tasks. The key takeaway is that RDS+ consistently outperforms random selection across all sample sizes.

Figure 4: Average multi-task performance against number of samples selected. RDS+ consistently beats balanced random at all data sizes tested, up to using the entire data pool.

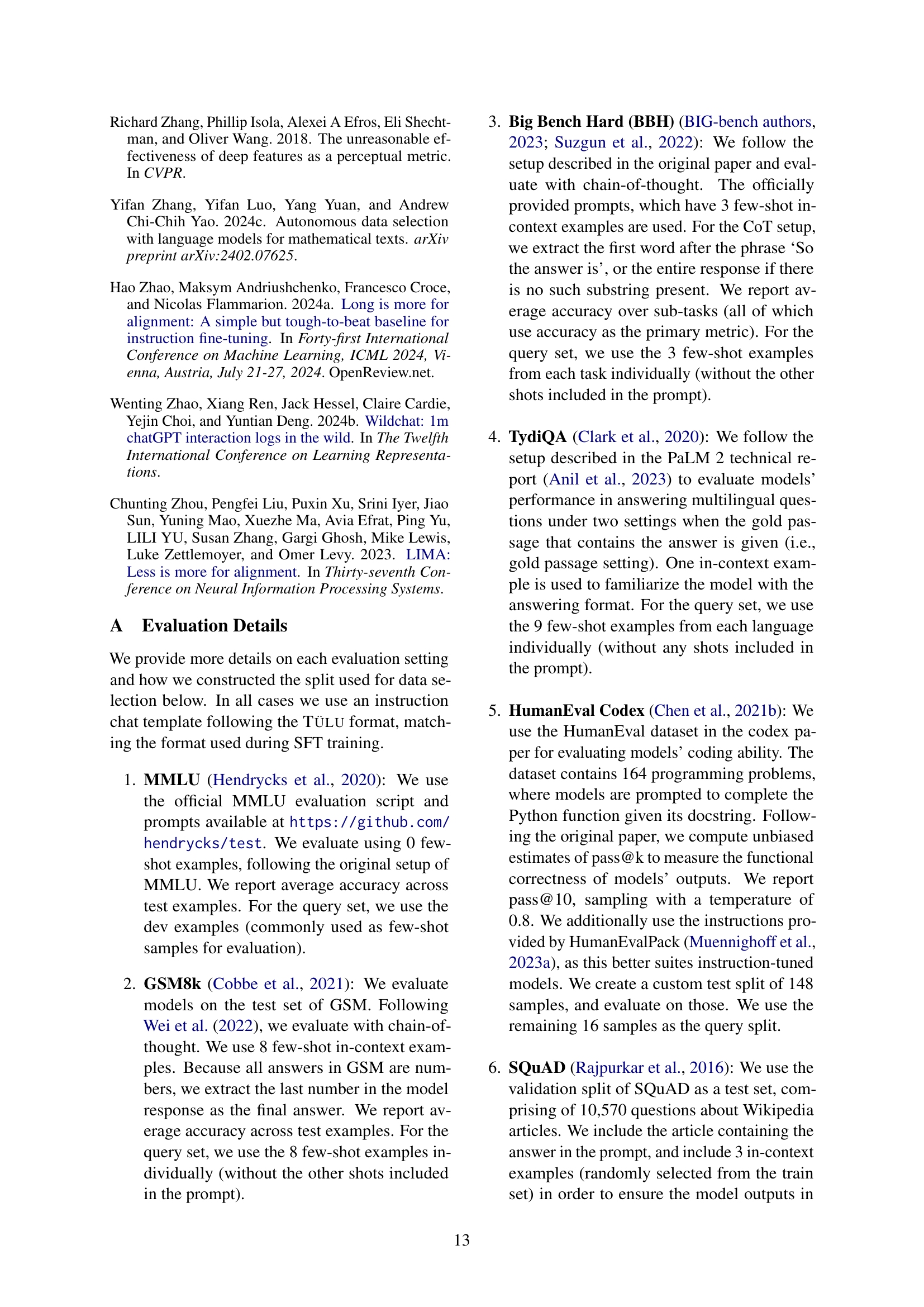

🔼 This histogram visualizes the distribution of similarity scores obtained using the Representation-based Data Selection (RDS) method. The top 10,000 data points selected for the GSM8K and AlpacaEval tasks from the Tülu 2 unfiltered dataset are analyzed. The x-axis represents the cosine similarity scores, and the y-axis shows the frequency of those scores. The comparison reveals a key observation: AlpacaEval instances exhibit lower average similarity scores compared to GSM8K instances, suggesting a difference in data characteristics relevant to the two tasks.

Figure 5: Histogram of RDS scores for the top 10,000 samples picked for GSM8k and AlpacaEval from the Tülu 2 unfiltered pool. We find that AlpacaEval instances have lower average similarity than GSM8k.
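The similarity scores in Figure 5 can be pictured with a small sketch that ranks pool samples by cosine similarity to a task's query samples and keeps the top k. Scoring each pool sample by its maximum similarity over the queries is an assumption made here, as are the function name `select_top_k` and the toy embeddings.

```python
import numpy as np


def select_top_k(pool_emb: np.ndarray, query_emb: np.ndarray, k: int) -> np.ndarray:
    """Return indices of the k pool samples most similar to any query sample."""
    # L2-normalize rows so that a dot product equals cosine similarity.
    pool = pool_emb / np.linalg.norm(pool_emb, axis=1, keepdims=True)
    query = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    sims = pool @ query.T          # shape: (n_pool, n_query)
    scores = sims.max(axis=1)      # assumption: score = best match over queries
    return np.argsort(-scores, kind="stable")[:k]


# Toy 2-D embeddings (hypothetical); real ones would come from the LM.
pool_emb = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]])
query_emb = np.array([[1.0, 0.1]])
selected = select_top_k(pool_emb, query_emb, k=2)
print(selected)
```

A histogram like the one in Figure 5 would then be drawn from the `scores` of the selected samples; lower average scores for a task indicate that the pool contains fewer close matches to its queries.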

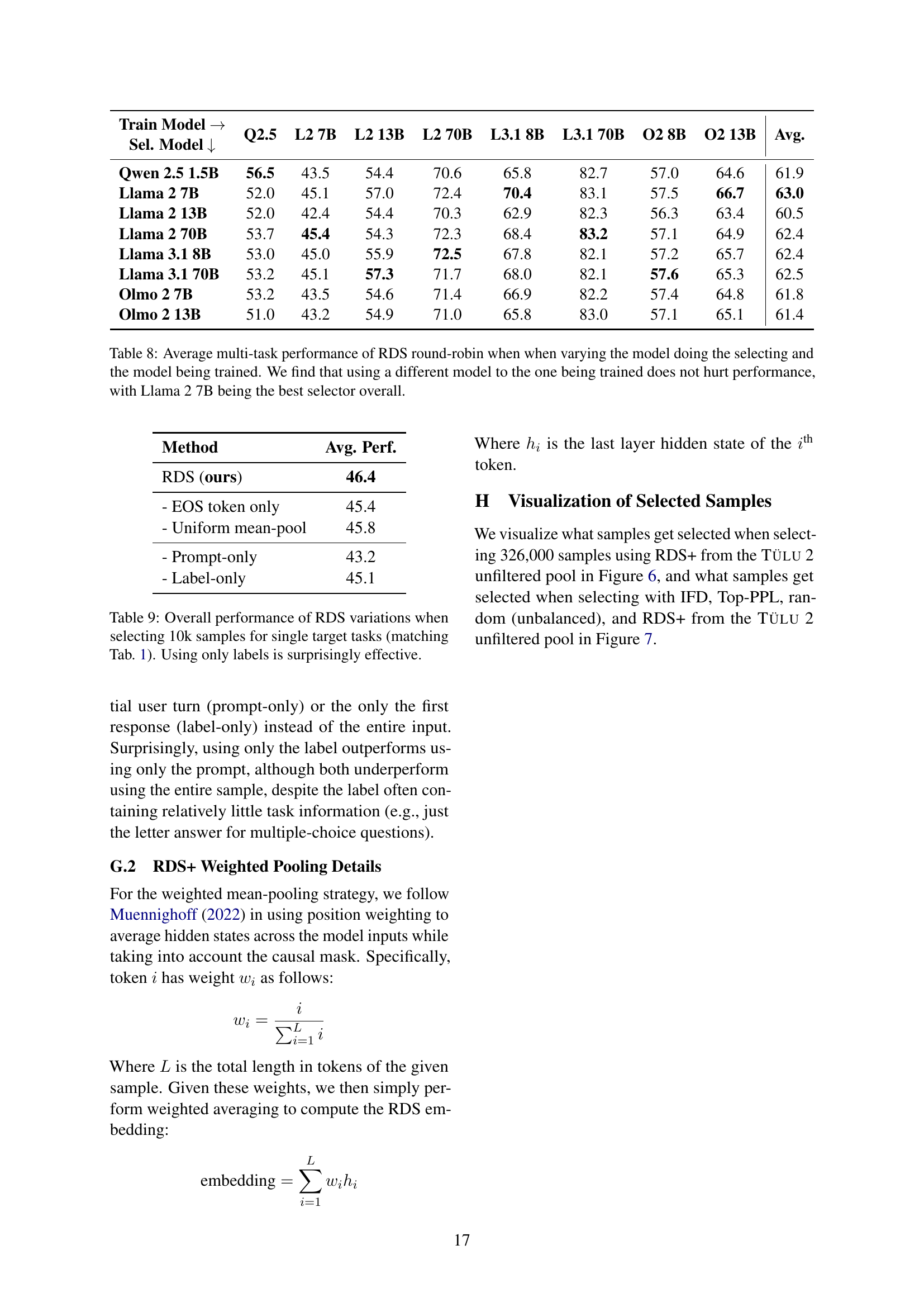

🔼 Figure 6 shows the source distribution of the 326,000 data points selected using the RDS+ method from the unfiltered TÜLU 2 dataset. It compares this distribution to a random sample of the same size (326,000) from the same pool. The figure visually represents the proportion of data points selected from different sources (e.g., FLAN V2, ShareGPT, etc.) for both the RDS+ selection and the random selection. The breakdown of the selected data points across various sources highlights the different data selection preferences between RDS+ and the random sampling method. The ‘round-robin’ designation in the caption indicates that this is the data distribution obtained when selecting data for a multi-task scenario using a round-robin strategy.

Figure 6: Breakdown of what data gets selected when selecting 326,000 samples using RDS from the Tülu 2 unfiltered pool. ‘Random’ represents the samples chosen when randomly downsampling to 326,000 samples, and ‘round-robin’ refers to the samples selected by the multi-task round-robin selection.
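The caption refers to a multi-task round-robin selection without spelling out the procedure. One plausible reading, sketched below under that assumption, is to let each task take turns adding its highest-ranked sample that has not already been chosen. The function name, task names, and rankings are hypothetical.

```python
from typing import Dict, List


def round_robin_select(rankings: Dict[str, List[int]], budget: int) -> List[int]:
    """Cycle through tasks, each time adding that task's best unused sample.

    `rankings` maps a task name to pool indices sorted from best to worst.
    """
    cursors = {task: 0 for task in rankings}
    chosen: List[int] = []
    seen = set()
    while len(chosen) < budget:
        progressed = False
        for task, ranked in rankings.items():
            i = cursors[task]
            # Skip samples another task has already claimed.
            while i < len(ranked) and ranked[i] in seen:
                i += 1
            if i < len(ranked):
                chosen.append(ranked[i])
                seen.add(ranked[i])
                i += 1
                progressed = True
            cursors[task] = i
            if len(chosen) == budget:
                break
        if not progressed:  # every ranking is exhausted
            break
    return chosen


# Hypothetical per-task rankings over a pool of six samples.
rankings = {"gsm8k": [3, 1, 4], "mmlu": [3, 2, 0], "squad": [1, 3, 5]}
picked = round_robin_select(rankings, budget=5)
print(picked)
```

Taking turns keeps any single task from filling the whole budget, and skipping duplicates means a sample ranked highly by several tasks is only counted once.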

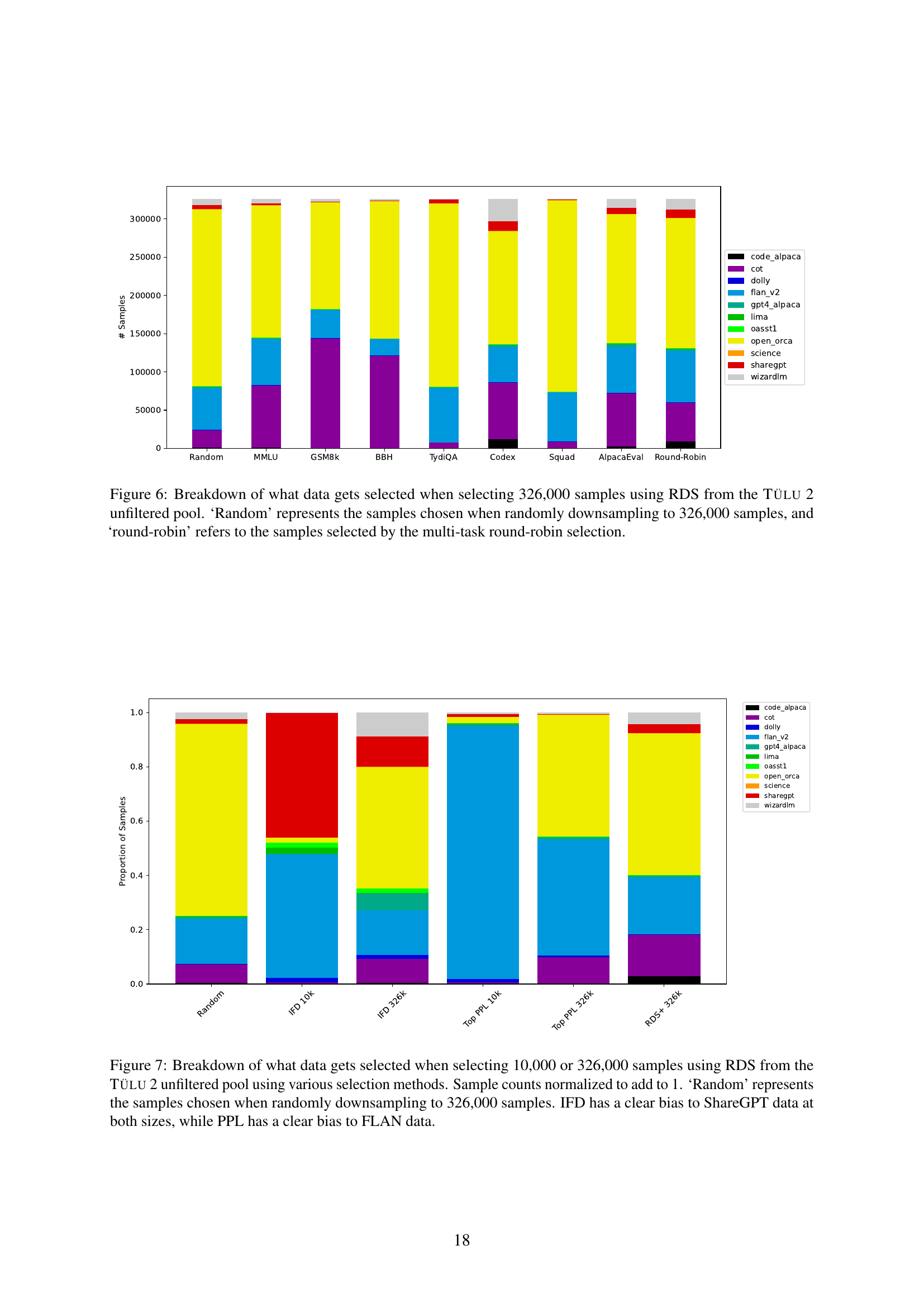

🔼 Figure 7 presents a comparative analysis of data selection methods applied to the Tülu 2 unfiltered dataset. It shows the proportions of data samples selected from different sources (FLAN, ShareGPT, etc.) by various methods including IFD, Top-PPL, RDS+, and random selection. Two sample sizes are compared: 10,000 and 326,000. The figure visually demonstrates that certain methods exhibit biases towards specific data sources, for example, IFD showing a preference for ShareGPT data and Top-PPL favoring FLAN data, regardless of the sample size. The random selection serves as a baseline for comparison, illustrating the non-uniformity of the other methods’ selections.

Figure 7: Breakdown of what data gets selected when selecting 10,000 or 326,000 samples using RDS from the Tülu 2 unfiltered pool using various selection methods. Sample counts normalized to add to 1. ‘Random’ represents the samples chosen when randomly downsampling to 326,000 samples. IFD has a clear bias to ShareGPT data at both sizes, while PPL has a clear bias to FLAN data.
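The normalization mentioned in the caption (sample counts scaled to add to 1) amounts to dividing each source's count by the total. A minimal sketch, with a hypothetical list of source labels standing in for a real selection:

```python
from collections import Counter
from typing import Dict, List


def source_proportions(sources: List[str]) -> Dict[str, float]:
    """Map each source to its share of the selected samples (shares sum to 1)."""
    counts = Counter(sources)
    total = sum(counts.values())
    return {source: count / total for source, count in counts.items()}


# Hypothetical source label for each selected sample.
selected_sources = ["FLAN V2", "ShareGPT", "ShareGPT", "Open Orca"]
props = source_proportions(selected_sources)
print(props)
```

Comparing these shares against those of a random sample of the same size is what makes a bias toward one source, such as the ShareGPT or FLAN preferences noted in the caption, visible.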

More on tables

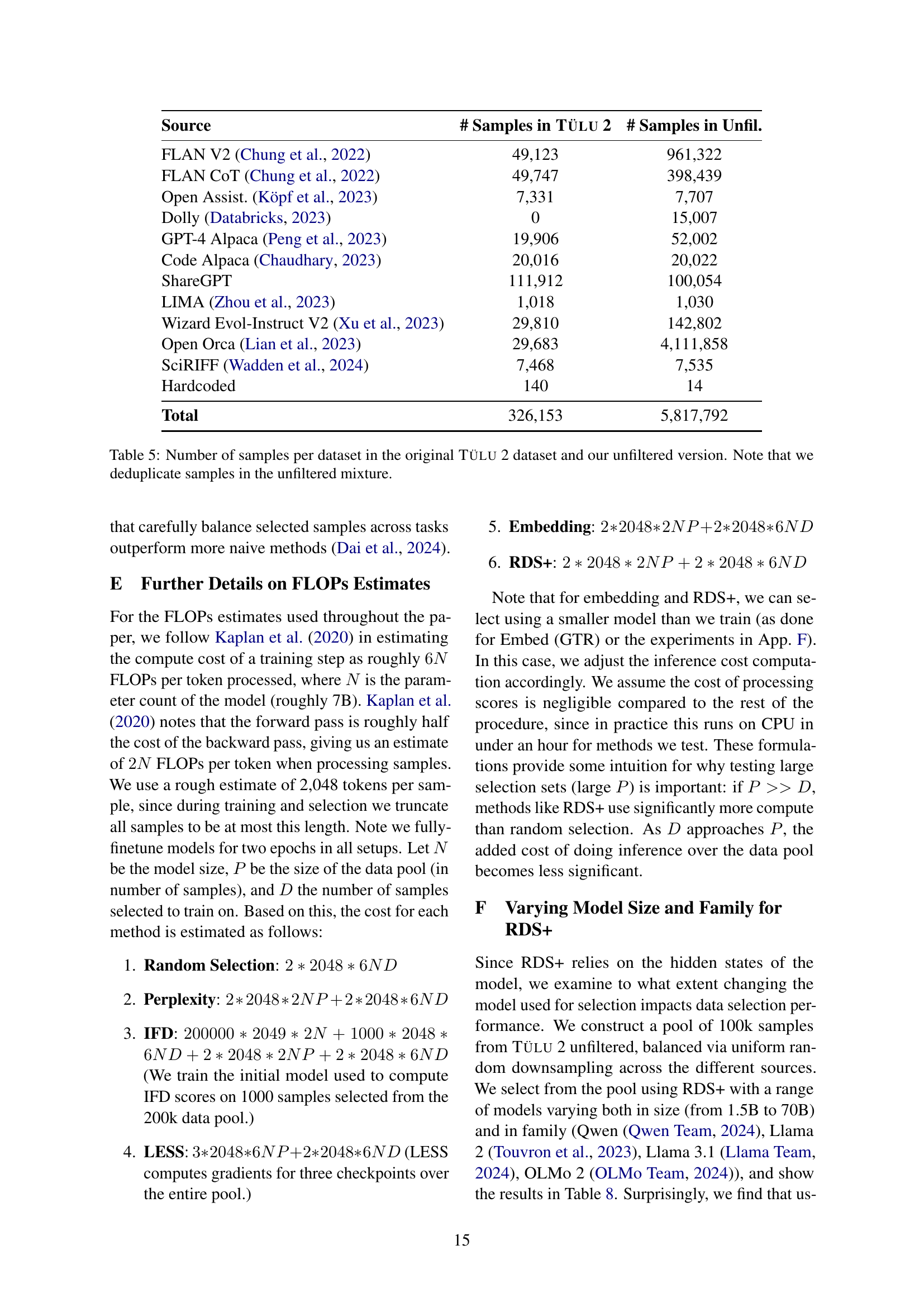

| Source | # Samples in Tülu 2 | # Samples in Unfil. |
| --- | ---: | ---: |
| FLAN V2 (Chung et al., 2022) | 49,123 | 961,322 |
| FLAN CoT (Chung et al., 2022) | 49,747 | 398,439 |
| Open Assist. (Köpf et al., 2023) | 7,331 | 7,707 |
| Dolly (Databricks, 2023) | 0 | 15,007 |
| GPT-4 Alpaca (Peng et al., 2023) | 19,906 | 52,002 |
| Code Alpaca (Chaudhary, 2023) | 20,016 | 20,022 |
| ShareGPT | 111,912 | 100,054 |
| LIMA (Zhou et al., 2023) | 1,018 | 1,030 |
| Wizard Evol-Instruct V2 (Xu et al., 2023) | 29,810 | 142,802 |
| Open Orca (Lian et al., 2023) | 29,683 | 4,111,858 |
| SciRIFF (Wadden et al., 2024) | 7,468 | 7,535 |
| Hardcoded | 140 | 14 |
| Total | 326,153 | 5,817,792 |

🔼 This table presents the results of a multi-task data selection experiment. Seven different data selection methods were used to choose 326,000 samples from the complete, unfiltered TÜLU 2 dataset. The goal was to find a dataset that performed well across seven diverse evaluation tasks. For each method, a single model was trained on the selected dataset and its performance was measured on each of the seven tasks. The table shows the average performance across these tasks for each method. Additionally, it shows results when using samples from the WildChat and Arena Hard datasets for selection, highlighting the impact of dataset choice on performance.

Table 2: Multi-task performance of dataset selection methods when selecting 326k samples from the full Tülu 2 unfiltered pool. Each row reflects the performance of a single model trained on a single dataset chosen to perform well across tasks. For ‘WildChat’ and ‘Arena Hard’ we use samples from WildChat and Arena Hard for selection.

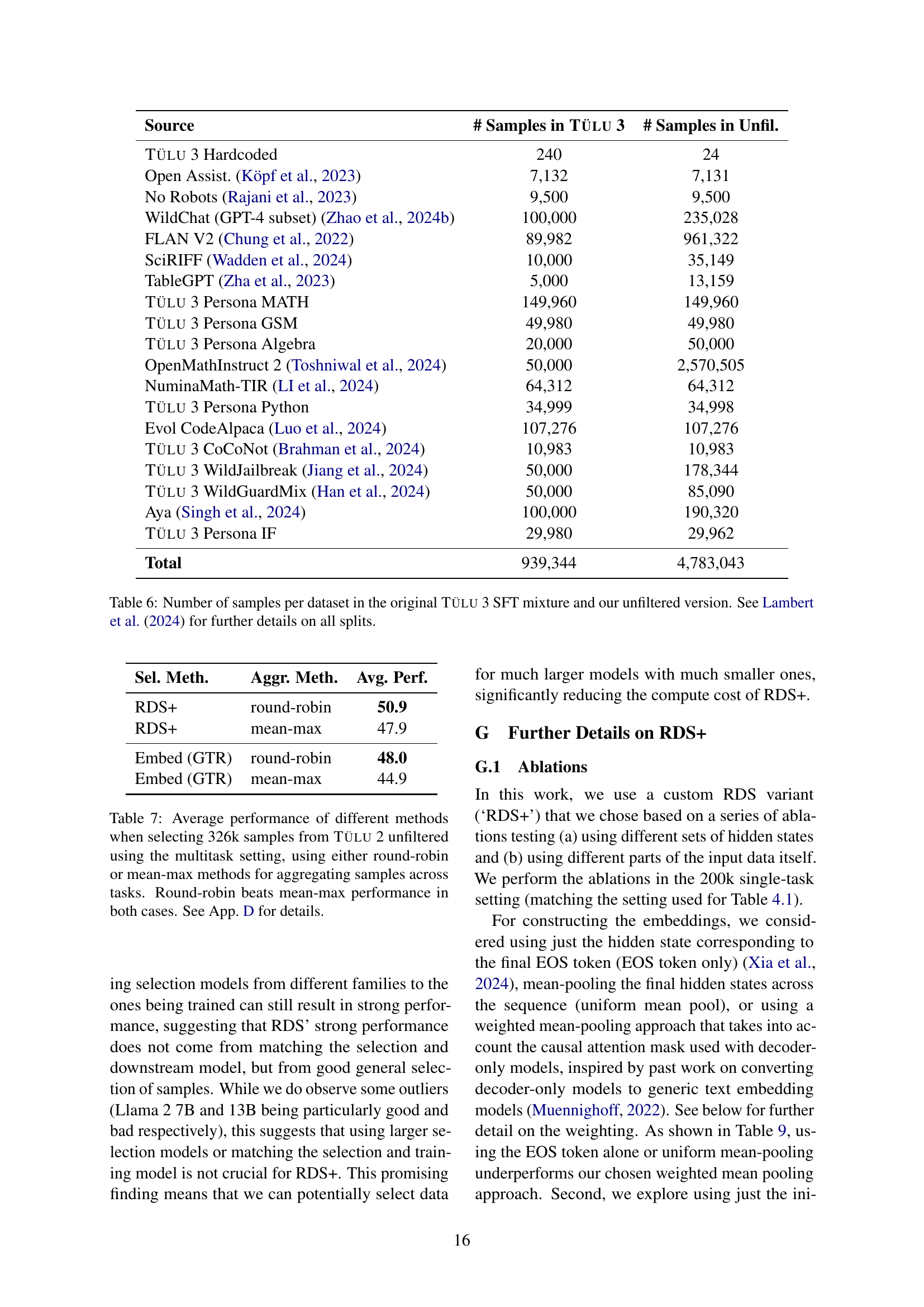

| Source | # Samples in Tülu 3 | # Samples in Unfil. |
| --- | ---: | ---: |
| Tülu 3 Hardcoded | 240 | 24 |
| Open Assist. (Köpf et al., 2023) | 7,132 | 7,131 |
| No Robots (Rajani et al., 2023) | 9,500 | 9,500 |
| WildChat (GPT-4 subset) (Zhao et al., 2024b) | 100,000 | 235,028 |
| FLAN V2 (Chung et al., 2022) | 89,982 | 961,322 |
| SciRIFF (Wadden et al., 2024) | 10,000 | 35,149 |
| TableGPT (Zha et al., 2023) | 5,000 | 13,159 |
| Tülu 3 Persona MATH | 149,960 | 149,960 |
| Tülu 3 Persona GSM | 49,980 | 49,980 |
| Tülu 3 Persona Algebra | 20,000 | 50,000 |
| OpenMathInstruct 2 (Toshniwal et al., 2024) | 50,000 | 2,570,505 |
| NuminaMath-TIR (LI et al., 2024) | 64,312 | 64,312 |
| Tülu 3 Persona Python | 34,999 | 34,998 |
| Evol CodeAlpaca (Luo et al., 2024) | 107,276 | 107,276 |
| Tülu 3 CoCoNot (Brahman et al., 2024) | 10,983 | 10,983 |
| Tülu 3 WildJailbreak (Jiang et al., 2024) | 50,000 | 178,344 |
| Tülu 3 WildGuardMix (Han et al., 2024) | 50,000 | 85,090 |
| Aya (Singh et al., 2024) | 100,000 | 190,320 |
| Tülu 3 Persona IF | 29,980 | 29,962 |
| Total | 939,344 | 4,783,043 |

🔼 This table presents the results of a multi-task instruction tuning experiment. Using the Llama 3.1 8B model as a base, the researchers selected 939,000 samples from the unfiltered Tülu 3 dataset using various data selection methods. The performance of models fine-tuned on these selected datasets was evaluated across multiple tasks. The results show a comparison of the performance of RDS+ against various baselines, including a balanced random selection, and the official Tülu 3 SFT model. A separate row shows the results of using the ‘Arena Hard’ query set for selection with RDS+. The key takeaway is that RDS+ outperforms the official Tülu 3 SFT model.

Table 3: Multi-task performance of RDS against baselines when finetuning from Llama 3.1 8B base and selecting 939k samples from the Tülu 3 unfiltered mixture following the multitask setup in §4.2. For ‘Arena Hard’ we use samples from Arena Hard as the query set. RDS+ outperforms the official Tülu 3 SFT model.

| Method | Avg. Perf. |
| --- | ---: |
| RDS (ours) | 46.4 |
| - EOS token only | 45.4 |
| - Uniform mean-pool | 45.8 |
| - Prompt-only | 43.2 |
| - Label-only | 45.1 |

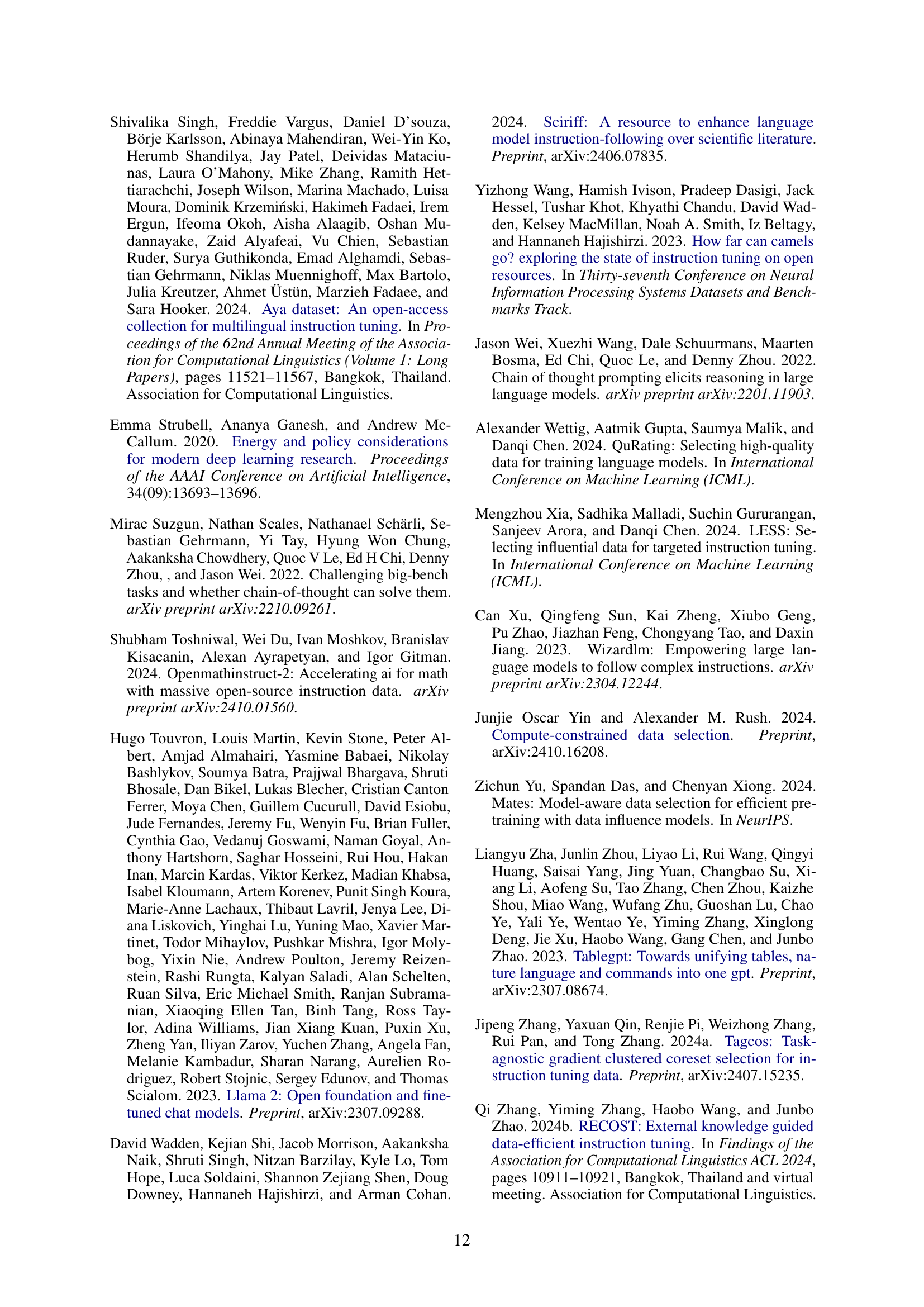

🔼 This table lists the number of query and test samples for each evaluation dataset in the paper. The query samples serve as the query set that guides data selection for each target task, while the test samples are used to measure final model performance. The number of query samples varies by dataset, ranging from 8 to 500, and every dataset has substantially more test samples than query samples.

Table 4: Query and test split counts for evaluation datasets.

Full paper