TL;DR#

Diffusion models excel in image and video generation but are computationally intensive. Flow matching, which streamlines the diffusion process for faster generation, offers a solution. However, the original training pipeline of flow matching isn’t optimal. This paper introduces two techniques to enhance flow matching.

The paper presents ProReflow, which combines progressive reflow and aligned v-prediction. Progressive reflow applies reflow within local timestep windows first and then extends it stage by stage until it covers the whole diffusion process. Aligned v-prediction prioritizes matching the direction of the velocity over matching its magnitude. In the experiments, ProReflow-II reaches an FID of 10.70 on the COCO-2014 validation set with 4 sampling steps, the best among the flow-based methods compared.

Key Takeaways#

Why does it matter?#

This paper is important for researchers because it improves the efficiency of diffusion models, making them more practical for real-time applications. The proposed techniques are relatively simple to implement and can be integrated into existing architectures. The improvement helps in creating high-quality images in fewer steps, reducing computational costs, and opening new possibilities for edge devices.

Visual Insights#

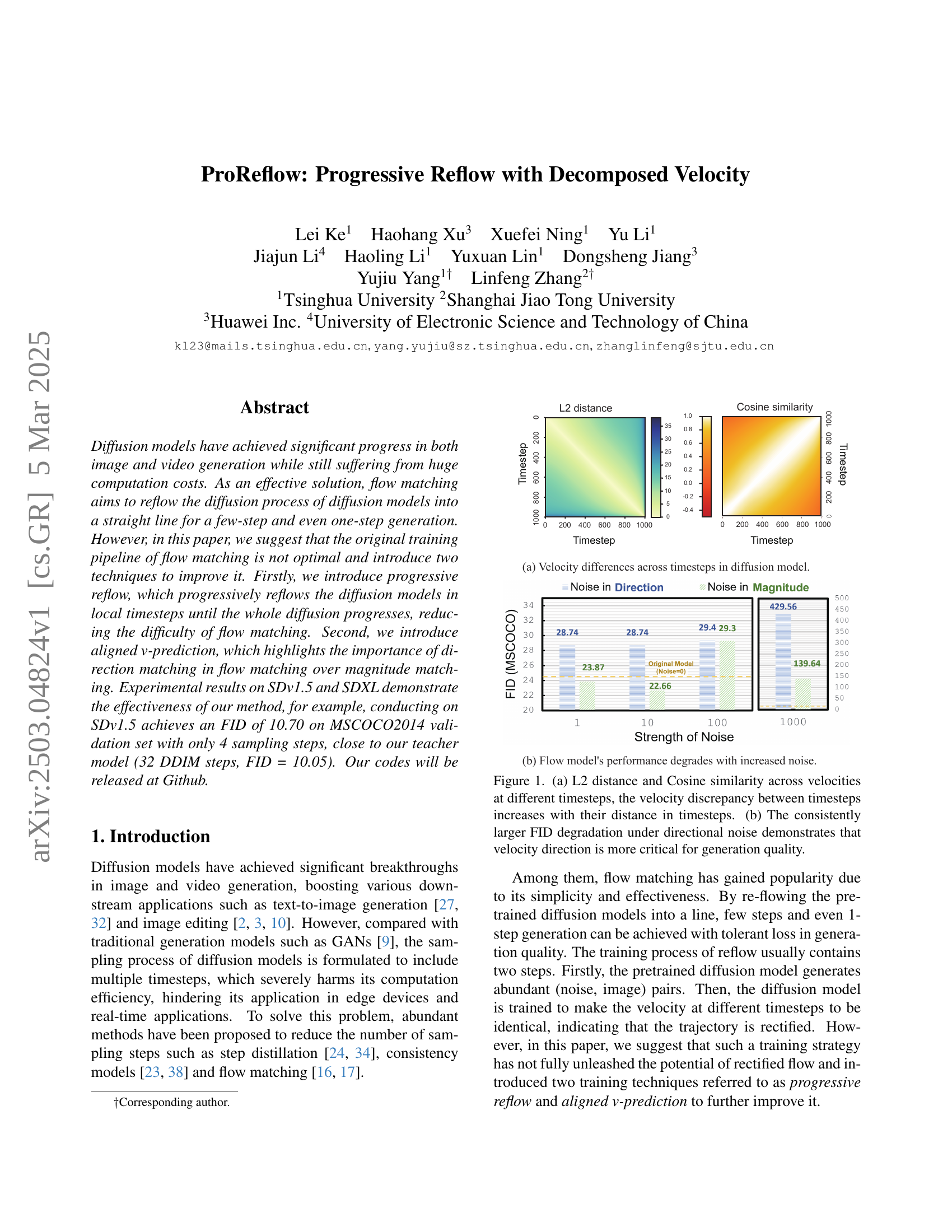

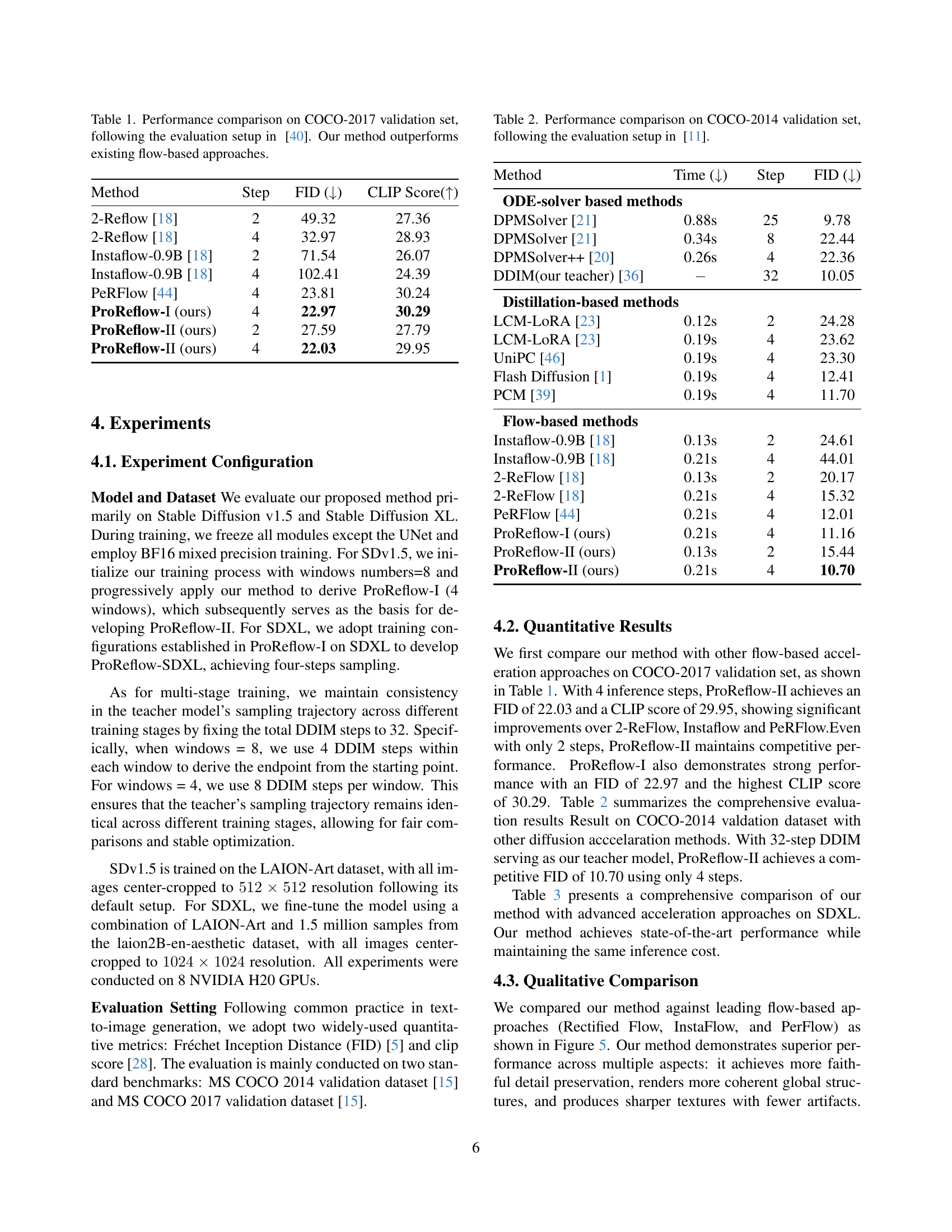

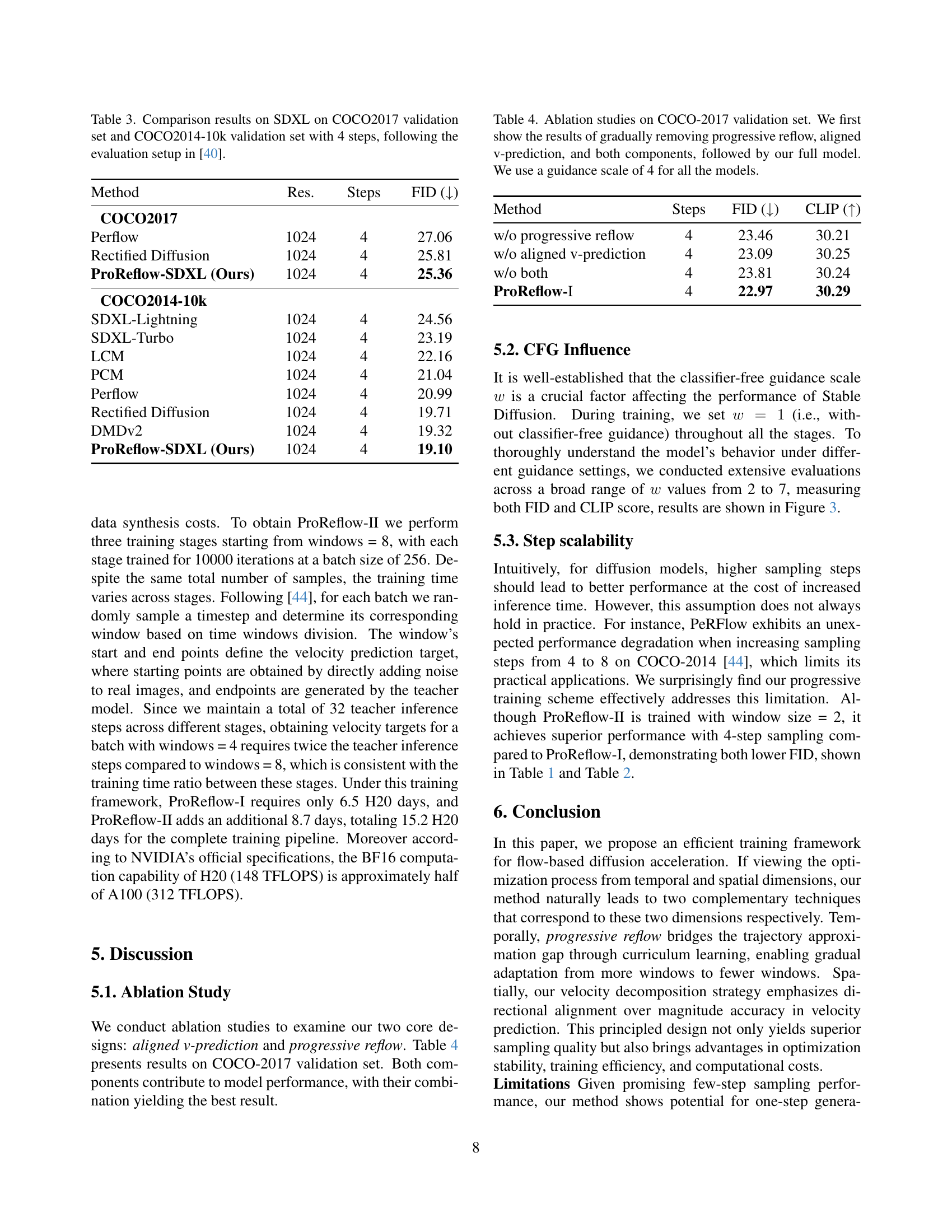

🔼 Figure 1 consists of two subfigures. Subfigure (a) visualizes the velocity differences across timesteps within a diffusion model, using two metrics, L2 distance and cosine similarity, to quantify the discrepancy. The results show that the velocity discrepancy increases as the distance between timesteps grows. Subfigure (b) investigates the impact of noise on the quality of generated images. By injecting noise that perturbs either only the direction or only the magnitude of the velocity, it shows that directional noise causes a consistently larger FID degradation. This highlights the importance of accurately matching the direction of velocity during flow matching.

Figure 1: (a) L2 distance and Cosine similarity across velocities at different timesteps, the velocity discrepancy between timesteps increases with their distance in timesteps. (b) The consistently larger FID degradation under directional noise demonstrates that velocity direction is more critical for generation quality.
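The two metrics in Figure 1(a), and the idea of perturbing direction and magnitude separately in Figure 1(b), can be illustrated on a toy 2-D vector. This is a minimal sketch, not the paper's experimental code: the helper names (`rotate_2d`, `rescale`) and the example values are assumptions made for illustration.

```python
import math

def l2_distance(u, v):
    """Euclidean distance between two velocity vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def cosine_similarity(u, v):
    """Cosine of the angle between two velocity vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def rotate_2d(v, angle):
    """Perturb direction only: rotate a 2-D vector, keeping its norm."""
    c, s = math.cos(angle), math.sin(angle)
    return [c * v[0] - s * v[1], s * v[0] + c * v[1]]

def rescale(v, factor):
    """Perturb magnitude only: scale a vector, keeping its direction."""
    return [factor * a for a in v]

v = [3.0, 4.0]                      # toy "velocity" (hypothetical values)
v_dir = rotate_2d(v, math.pi / 6)   # direction changed, norm unchanged
v_mag = rescale(v, 1.2)             # norm changed, direction unchanged

print("direction noise:", l2_distance(v, v_dir), cosine_similarity(v, v_dir))
print("magnitude noise:", l2_distance(v, v_mag), cosine_similarity(v, v_mag))
```

Cosine similarity is blind to the magnitude perturbation and L2 distance reacts to both, which is why the two metrics are reported together.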

| Method | Steps | FID (↓) | CLIP Score (↑) |
|---|---|---|---|
| 2-Reflow [18] | 2 | 49.32 | 27.36 |
| 2-Reflow [18] | 4 | 32.97 | 28.93 |
| Instaflow-0.9B [18] | 2 | 71.54 | 26.07 |
| Instaflow-0.9B [18] | 4 | 102.41 | 24.39 |
| PeRFlow [44] | 4 | 23.81 | 30.24 |
| ProReflow-I (ours) | 4 | 22.97 | 30.29 |
| ProReflow-II (ours) | 2 | 27.59 | 27.79 |
| ProReflow-II (ours) | 4 | 22.03 | 29.95 |

🔼 This table compares methods for accelerating Stable Diffusion on the COCO-2017 validation set, reporting FID (Fréchet Inception Distance), CLIP (Contrastive Language–Image Pre-training) score, and the number of sampling steps for each. At 4 steps, ProReflow-II obtains the lowest FID (22.03) and ProReflow-I the highest CLIP score (30.29), compared with 23.81 and 30.24 for PeRFlow, the strongest flow-based baseline listed. At 2 steps, ProReflow-II (FID 27.59, CLIP 27.79) improves on both 2-Reflow and Instaflow-0.9B.

Table 1: Performance comparison on COCO-2017 validation set, following the evaluation setup in [40]. Our method outperforms existing flow-based approaches.
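For readers unfamiliar with the metric, FID as it is commonly defined fits a Gaussian to Inception features of the real images and another to those of the generated images, then measures the distance between the two. The symbols below ($\mu_r, \Sigma_r$ for real images, $\mu_g, \Sigma_g$ for generated images) are notation introduced here, not taken from the paper:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```

Lower values mean the two feature distributions are closer, which is why the tables mark FID with a down arrow.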

In-depth insights#

Reflow Revisited#

“Reflow Revisited” refers to the paper’s re-examination of reflow, the iterative procedure that rectifies the velocity field of a diffusion model so that sampling trajectories become straighter. The paper targets limitations of the original training pipeline, such as trajectory inaccuracies and inefficient optimization, with two changes to training: progressive reflow and aligned v-prediction. The aim is a better balance between computational efficiency and generation quality, improving existing frameworks without fundamental architectural changes.
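To make the reflow objective concrete, here is a minimal sketch of how a training target is typically built in rectified-flow-style methods: a noise sample and the teacher's output for that noise are joined by a straight line, and the model is trained to predict the constant velocity along it. The time convention (noise at t=0, image at t=1), the function names, and the toy values are assumptions for illustration; the paper's own pipeline may differ.

```python
def interpolate(x0, x1, t):
    """Point on the straight line from x0 (t=0) to x1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]

def reflow_target(x0, x1):
    """Constant velocity that carries x0 to x1 in unit time."""
    return [b - a for a, b in zip(x0, x1)]

def reflow_loss(predicted_v, x0, x1):
    """Mean squared error between a predicted velocity and the target."""
    target = reflow_target(x0, x1)
    return sum((p - q) ** 2 for p, q in zip(predicted_v, target)) / len(target)

x0 = [0.0, 1.0]    # hypothetical noise sample
x1 = [2.0, -1.0]   # hypothetical teacher output for that noise
x_half = interpolate(x0, x1, 0.5)   # training input at t = 0.5
v = reflow_target(x0, x1)           # training target at every t

print(x_half, v, reflow_loss(v, x0, x1))
```

If the model matched this target exactly, a single Euler step from `x0` with velocity `v` would land on `x1`, which is what makes few-step sampling possible.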

Local Reflows#

“Local Reflows” is not a term the paper uses, but it describes the strategy behind progressive reflow: refining the sampling trajectory in localized segments. Instead of optimizing the entire diffusion process at once, the trajectory is broken into smaller, manageable windows of timesteps. The model first learns consistent velocity predictions within adjacent timesteps, where velocity discrepancies are least pronounced (Figure 1a). The windows are then progressively widened until they cover the whole process, so the model gradually learns to straighten the entire trajectory. The expected benefits are more stable training, finer control over trajectory shaping, and fewer difficulties from long-range dependencies, leading to better generation quality and efficient few-step sampling.
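The windowing idea can be sketched as a schedule that starts with many short windows and moves to fewer, longer ones. The number of timesteps (32) and the window counts per stage (8, then 4, then 2) are hypothetical values chosen for illustration, as are the function names; the summary does not state the paper's actual schedule.

```python
def make_windows(num_steps, num_windows):
    """Split timesteps 0..num_steps into equal, contiguous windows."""
    if num_steps % num_windows != 0:
        raise ValueError("num_steps must be divisible by num_windows")
    size = num_steps // num_windows
    return [(i * size, (i + 1) * size) for i in range(num_windows)]

def progressive_schedule(num_steps, window_counts):
    """One list of windows per training stage, from local to global."""
    return [make_windows(num_steps, k) for k in window_counts]

# Hypothetical schedule: 8 local windows, then 4, then 2.
stages = progressive_schedule(32, [8, 4, 2])
for stage_index, windows in enumerate(stages):
    print(f"stage {stage_index}: {len(windows)} windows, first = {windows[0]}")
```

Within each stage, reflow would be applied inside every window separately, so early stages only have to reconcile velocities at nearby timesteps.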

Align Direction#

Aligning directions means harmonizing the orientation of vectors rather than only their size, an idea that recurs across computer vision and machine learning. In optimization, aligning the search direction with the (negative) gradient can speed up convergence. In generative models, aligning latent-space directions with meaningful attributes can enable more controlled generation. Typical tools are regularization terms that penalize misalignment and loss functions that explicitly reward directional consistency, designed around the geometry of the data or the problem. In this paper the idea appears as aligned v-prediction, which emphasizes direction matching over magnitude matching for the predicted velocity. Figure 1(b) supports the choice: noise on the velocity direction degrades FID more than noise on its magnitude.
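One generic way to reward directional consistency is to add a cosine-based penalty to a mean squared error. The sketch below shows that general pattern only; it is not the paper's aligned v-prediction loss, whose exact form is not given in this summary, and `direction_weight` is a hypothetical parameter.

```python
import math

def mse(u, v):
    """Mean squared error between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v)) / len(u)

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (small epsilon avoids /0)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)

def direction_aware_loss(pred, target, direction_weight=1.0):
    """MSE plus a penalty of (1 - cosine similarity) on direction mismatch."""
    penalty = 1.0 - cosine_similarity(pred, target)
    return mse(pred, target) + direction_weight * penalty

target = [1.0, 0.0]
wrong_magnitude = [0.5, 0.0]   # right direction, too short
wrong_direction = [1.0, 0.5]   # same MSE as above, but tilted

print(direction_aware_loss(wrong_magnitude, target))
print(direction_aware_loss(wrong_direction, target))
```

Both predictions have the same MSE, yet the tilted one receives the larger loss, so the optimizer is pushed to fix direction errors first.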

SDXL Result#

The SDXL results test whether the method carries over to the SDXL model. Table 3 compares ProReflow-SDXL against existing acceleration methods at 1024 resolution with 4 sampling steps, using FID as the quantitative metric. ProReflow-SDXL reaches an FID of 25.36 on COCO2017 and 19.10 on COCO2014-10k, the lowest in each group.

CFG’s Influence#

The section on CFG examines how classifier-free guidance (CFG) affects the accelerated models. During training, the CFG scale is fixed at 1 in all stages; under the common formulation of CFG, a scale of 1 reduces to the plain conditional prediction with no extra guidance. At evaluation time, CFG scales from 2 to 7 are swept for ProReflow-I (4 steps) and ProReflow-II (2 steps) on COCO-2017 (Figure 3), showing how FID and CLIP score respond to guidance strength. CFG trades fidelity against diversity in the generated images, so the scale needs tuning for the best results.
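For reference, the guidance scale enters through a simple extrapolation between the unconditional and conditional predictions. The sketch below uses the common formulation in which a scale of 1 returns the conditional prediction unchanged; the function name and toy values are assumptions, and some codebases parameterize the scale differently.

```python
def apply_cfg(pred_uncond, pred_cond, scale):
    """Classifier-free guidance: move from the unconditional prediction
    toward (and past) the conditional one by a factor of `scale`."""
    return [u + scale * (c - u) for u, c in zip(pred_uncond, pred_cond)]

pred_uncond = [0.0, 1.0]   # hypothetical unconditional prediction
pred_cond = [1.0, 3.0]     # hypothetical text-conditioned prediction

print(apply_cfg(pred_uncond, pred_cond, 1.0))   # equals pred_cond
print(apply_cfg(pred_uncond, pred_cond, 4.0))   # extrapolates past pred_cond
```

Scales above 1, such as the 2 to 7 range evaluated in the paper, push the prediction further along the conditional direction.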

More visual insights#

More on figures

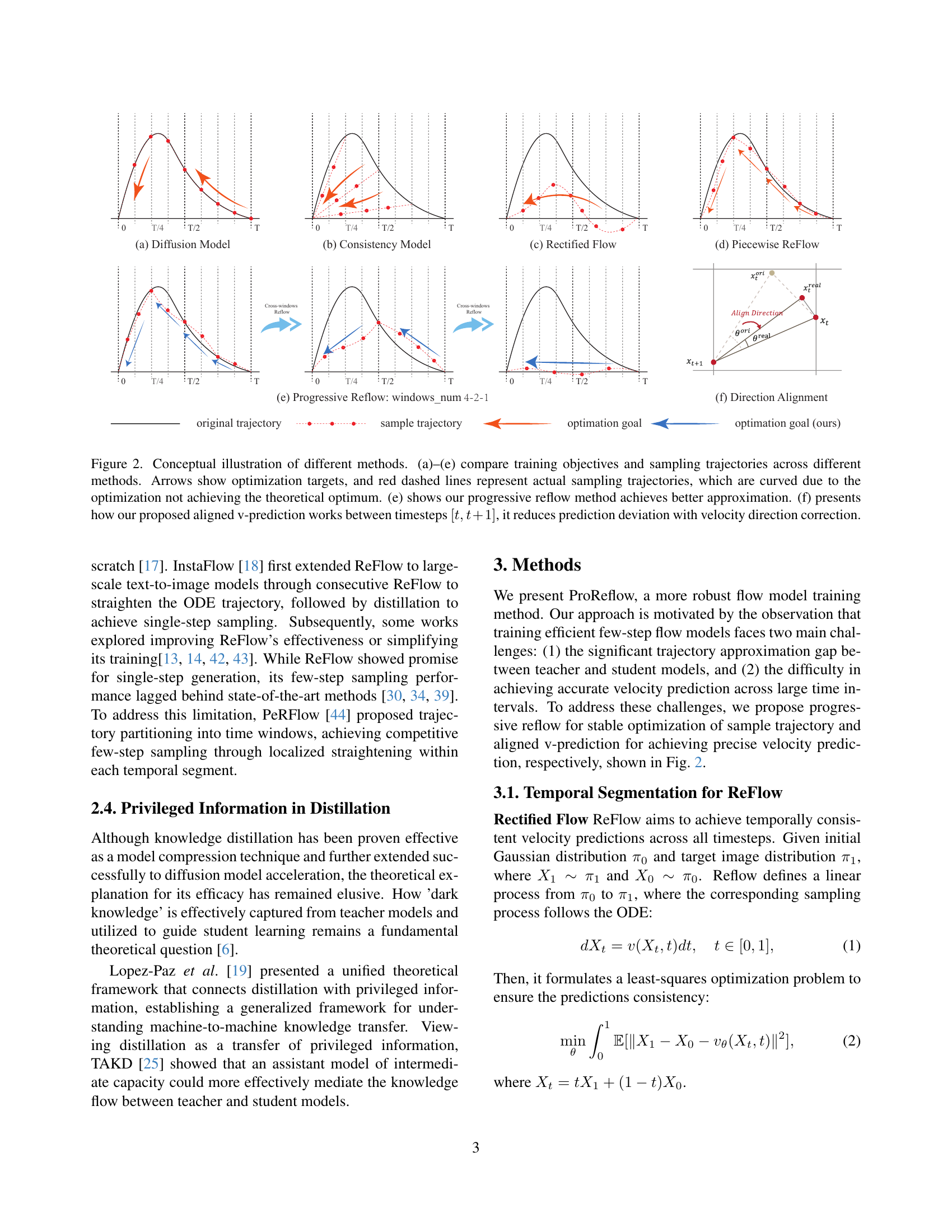

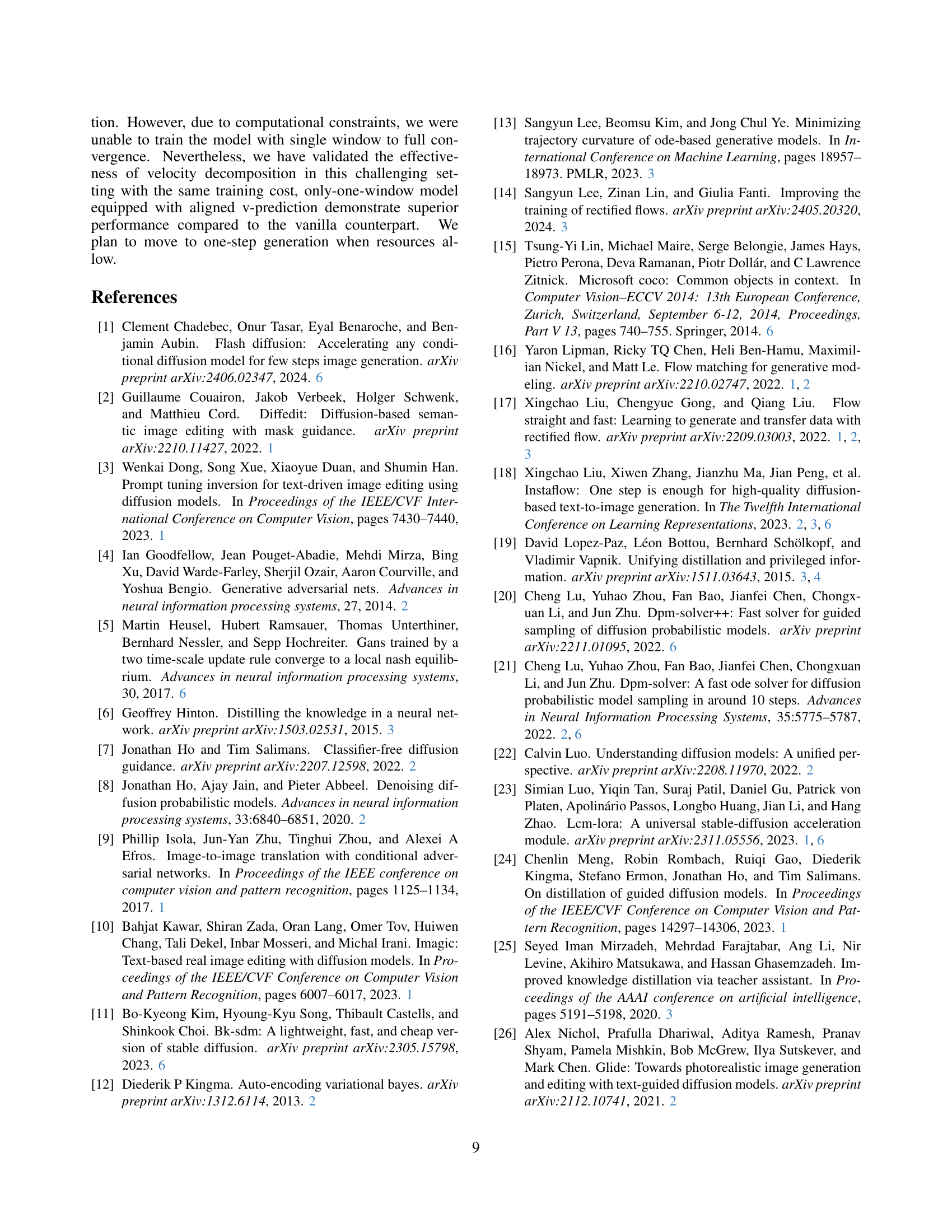

🔼 This figure compares different methods for training diffusion models, focusing on the optimization process and the resulting sampling trajectories. Subfigures (a) through (e) show how various approaches attempt to straighten the trajectory of a diffusion process (ideal trajectory is a straight line). Traditional methods (a)-(d) struggle to fully achieve this, resulting in curved trajectories, showing that optimization doesn’t reach the theoretical ideal. Subfigure (e) demonstrates the proposed Progressive Reflow method which provides a better approximation by breaking the process into smaller, easier-to-optimize steps. Subfigure (f) illustrates how the proposed Aligned v-Prediction technique refines velocity prediction by correcting the direction of the velocity vector between consecutive timesteps, thus improving accuracy.

Figure 2: Conceptual illustration of different methods. (a)–(e) compare training objectives and sampling trajectories across different methods. Arrows show optimization targets, and red dashed lines represent actual sampling trajectories, which are curved due to the optimization not achieving the theoretical optimum. (e) shows our progressive reflow method achieves better approximation. (f) presents how our proposed aligned v-prediction works between timesteps $[t, t+1]$, it reduces prediction deviation with velocity direction correction.
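Why straight trajectories matter for few-step sampling can be seen with a plain Euler integrator on two toy velocity fields. This is an illustrative sketch: `straight_field` and `curved_field` are made-up fields, not models from the paper, and the time convention (integrating from t=0 to t=1) is an assumption.

```python
def euler_sample(x0, velocity_fn, num_steps):
    """Integrate dx/dt = velocity_fn(x, t) from t=0 to t=1 with Euler steps."""
    x = list(x0)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt
        v = velocity_fn(x, t)
        x = [a + dt * b for a, b in zip(x, v)]
    return x

def straight_field(x, t):
    """Constant velocity: the trajectory is a straight line."""
    return [2.0, -2.0]

def curved_field(x, t):
    """Velocity that changes with t: the trajectory bends."""
    return [1.0, 2.0 * t]

start = [0.0, 0.0]
for steps in (1, 2, 4):
    print(steps,
          euler_sample(start, straight_field, steps),
          euler_sample(start, curved_field, steps))
```

The straight field reaches its exact endpoint `[2, -2]` with a single step, while the curved field (exact endpoint `[1, 1]`) only approaches it as the step count grows. Reflow-style training aims to move a model toward the first case.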

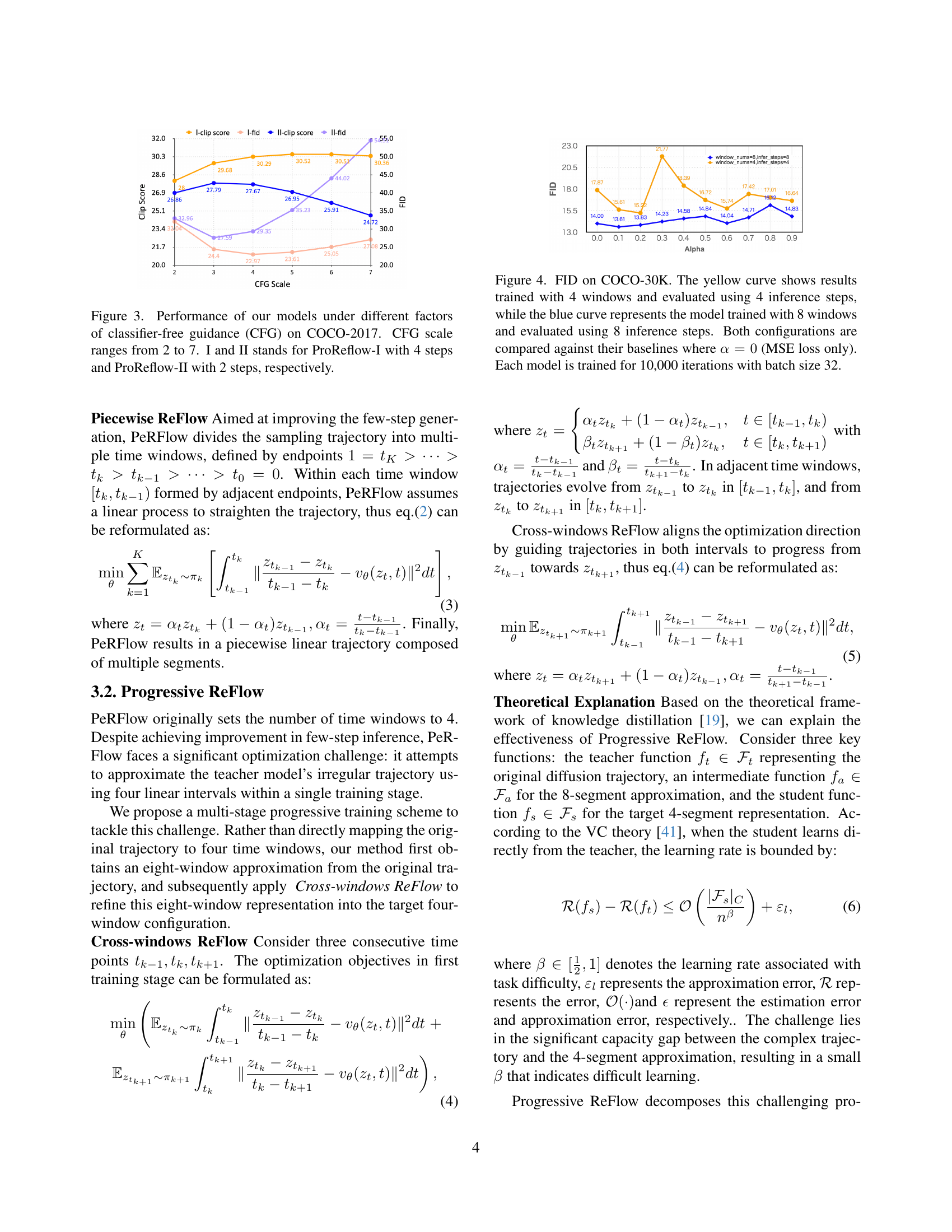

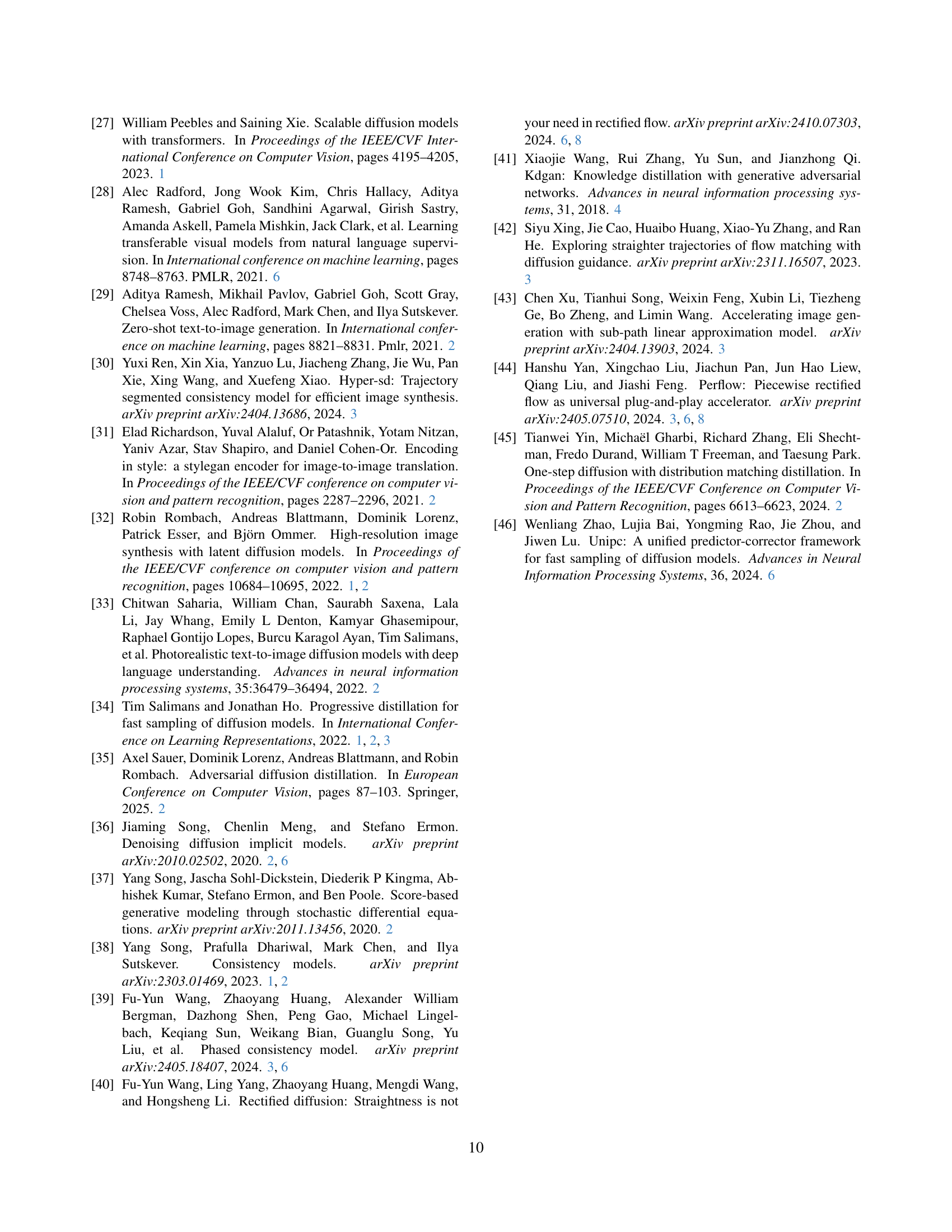

🔼 This figure analyzes the performance of two proposed models, ProReflow-I and ProReflow-II, under varying classifier-free guidance (CFG) scales. ProReflow-I uses 4 inference steps, while ProReflow-II employs only 2. The x-axis represents the CFG scale (ranging from 2 to 7), while the y-axis shows the resulting FID and CLIP scores. The results are evaluated on the COCO-2017 dataset, providing insights into model robustness and efficiency across different CFG settings. The curves reveal how FID and CLIP scores vary as the CFG scale changes for both models, helping to compare their performance.

Figure 3: Performance of our models under different factors of classifier-free guidance (CFG) on COCO-2017. CFG scale ranges from 2 to 7. I and II stand for ProReflow-I with 4 steps and ProReflow-II with 2 steps, respectively.

More on tables

| Method | Time (↓) | Steps | FID (↓) |
|---|---|---|---|
| **ODE-solver based methods** | | | |
| DPMSolver [21] | 0.88s | 25 | 9.78 |
| DPMSolver [21] | 0.34s | 8 | 22.44 |
| DPMSolver++ [20] | 0.26s | 4 | 22.36 |
| DDIM (our teacher) [36] | - | 32 | 10.05 |
| **Distillation-based methods** | | | |
| LCM-LoRA [23] | 0.12s | 2 | 24.28 |
| LCM-LoRA [23] | 0.19s | 4 | 23.62 |
| UniPC [46] | 0.19s | 4 | 23.30 |
| Flash Diffusion [1] | 0.19s | 4 | 12.41 |
| PCM [39] | 0.19s | 4 | 11.70 |
| **Flow-based methods** | | | |
| Instaflow-0.9B [18] | 0.13s | 2 | 24.61 |
| Instaflow-0.9B [18] | 0.21s | 4 | 44.01 |
| 2-ReFlow [18] | 0.13s | 2 | 20.17 |
| 2-ReFlow [18] | 0.21s | 4 | 15.32 |
| PeRFlow [44] | 0.21s | 4 | 12.01 |
| ProReflow-I (ours) | 0.21s | 4 | 11.16 |
| ProReflow-II (ours) | 0.13s | 2 | 15.44 |
| ProReflow-II (ours) | 0.21s | 4 | 10.70 |

🔼 This table presents a quantitative comparison of different methods for accelerating the diffusion process in image generation, specifically focusing on the COCO-2014 validation set. It compares the FID (Fréchet Inception Distance) scores achieved by various methods, including both ODE-solver based approaches (like DPM-Solver and its variants), distillation-based methods, and flow-based methods (including the proposed ProReflow). The number of steps required for sampling and the inference time are also included to demonstrate the efficiency of each approach. The evaluation setup follows the guidelines described in reference [11]. Lower FID values indicate better image quality.

Table 2: Performance comparison on COCO-2014 validation set, following the evaluation setup in [11].

| Method | Res. | Steps | FID (↓) |
|---|---|---|---|
| **COCO2017** | | | |
| PeRFlow | 1024 | 4 | 27.06 |
| Rectified Diffusion | 1024 | 4 | 25.81 |
| ProReflow-SDXL (Ours) | 1024 | 4 | 25.36 |
| **COCO2014-10k** | | | |
| SDXL-Lightning | 1024 | 4 | 24.56 |
| SDXL-Turbo | 1024 | 4 | 23.19 |
| LCM | 1024 | 4 | 22.16 |
| PCM | 1024 | 4 | 21.04 |
| PeRFlow | 1024 | 4 | 20.99 |
| Rectified Diffusion | 1024 | 4 | 19.71 |
| DMDv2 | 1024 | 4 | 19.32 |
| ProReflow-SDXL (Ours) | 1024 | 4 | 19.10 |

🔼 This table compares methods on Stable Diffusion XL (SDXL), reporting FID (Fréchet Inception Distance) on two benchmarks: COCO2017 and COCO2014-10k. All methods are evaluated at 1024 resolution with 4 sampling steps, following the setup in reference [40]. ProReflow-SDXL obtains the lowest FID in both groups: 25.36 on COCO2017 (versus 25.81 for Rectified Diffusion) and 19.10 on COCO2014-10k (versus 19.32 for DMDv2).

Table 3: Comparison results on SDXL on COCO2017 validation set and COCO2014-10k validation set with 4 steps, following the evaluation setup in [40].

| Method | Steps | FID (↓) | CLIP (↑) |
|---|---|---|---|
| w/o progressive reflow | 4 | 23.46 | 30.21 |
| w/o aligned v-prediction | 4 | 23.09 | 30.25 |
| w/o both | 4 | 23.81 | 30.24 |
| ProReflow-I | 4 | 22.97 | 30.29 |

🔼 This table presents the ablation study on the COCO-2017 validation set, measuring how each component of ProReflow (progressive reflow and aligned v-prediction) contributes to performance. All variants use 4 steps and a guidance scale of 4. Removing aligned v-prediction raises FID from 22.97 to 23.09, removing progressive reflow raises it to 23.46, and removing both raises it to 23.81; CLIP scores stay between 30.21 and 30.29. The full model is best on both metrics, which indicates that the two components are complementary.

Table 4: Ablation studies on COCO-2017 validation set. We first show the results of gradually removing progressive reflow, aligned v-prediction, and both components, followed by our full model. We use a guidance scale of 4 for all the models.

Full paper#