TL;DR#

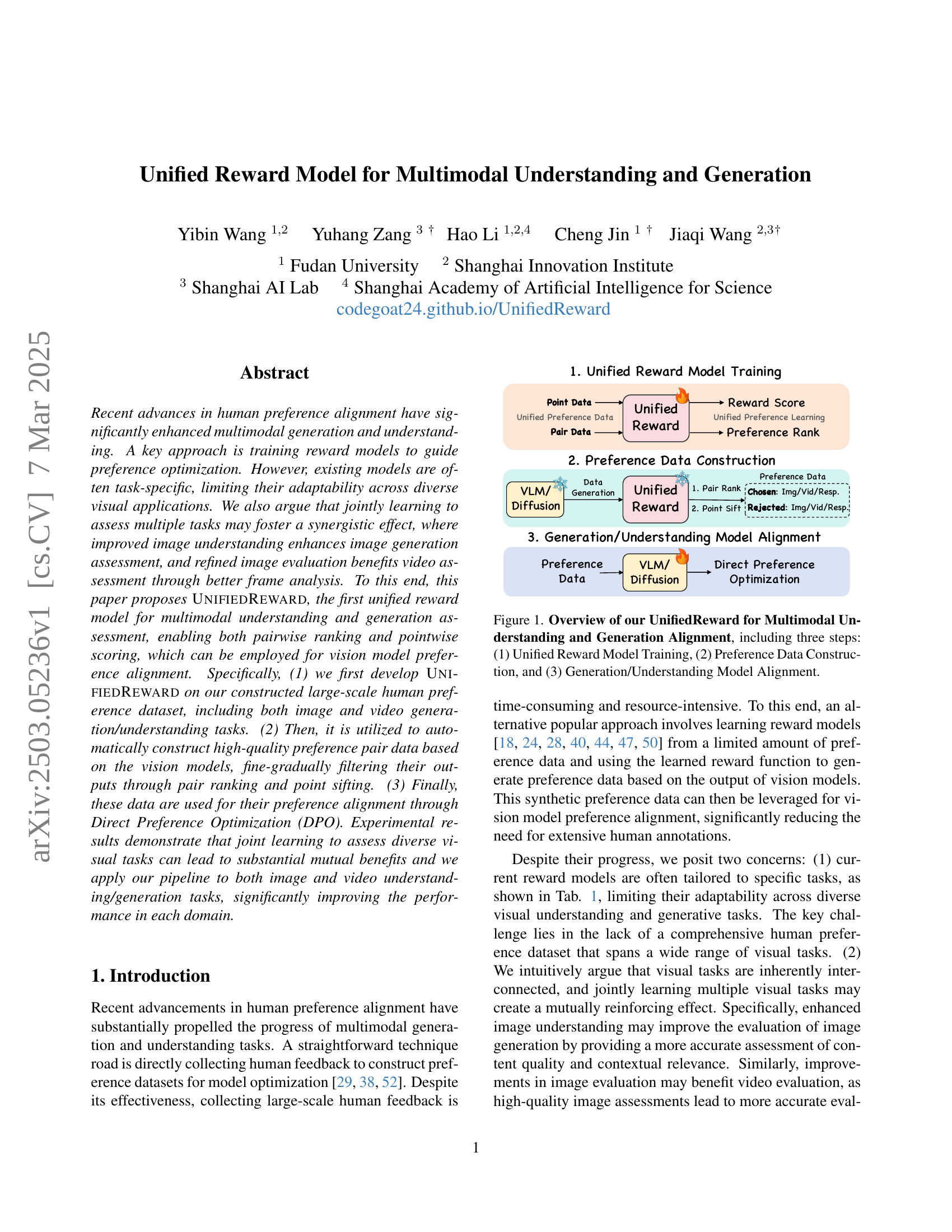

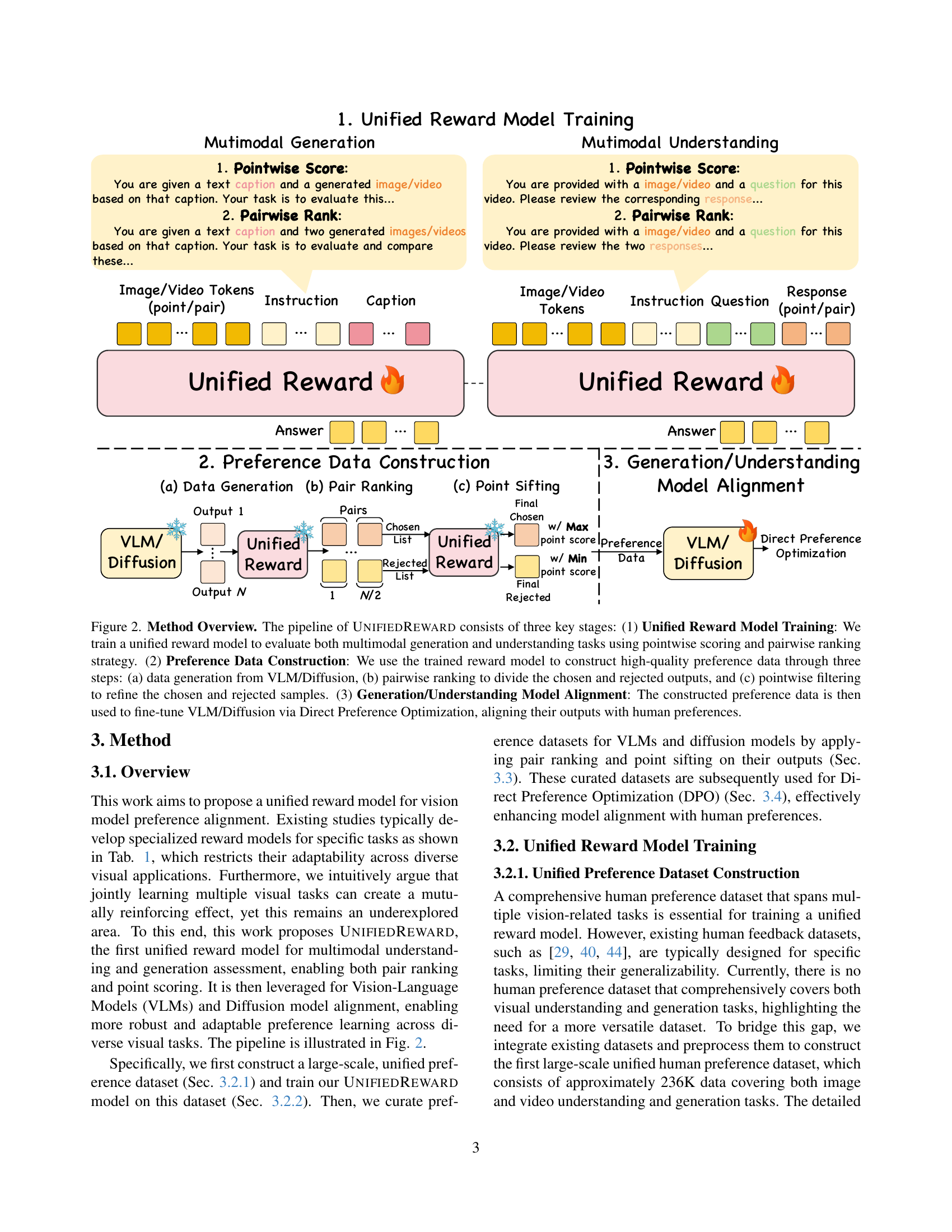

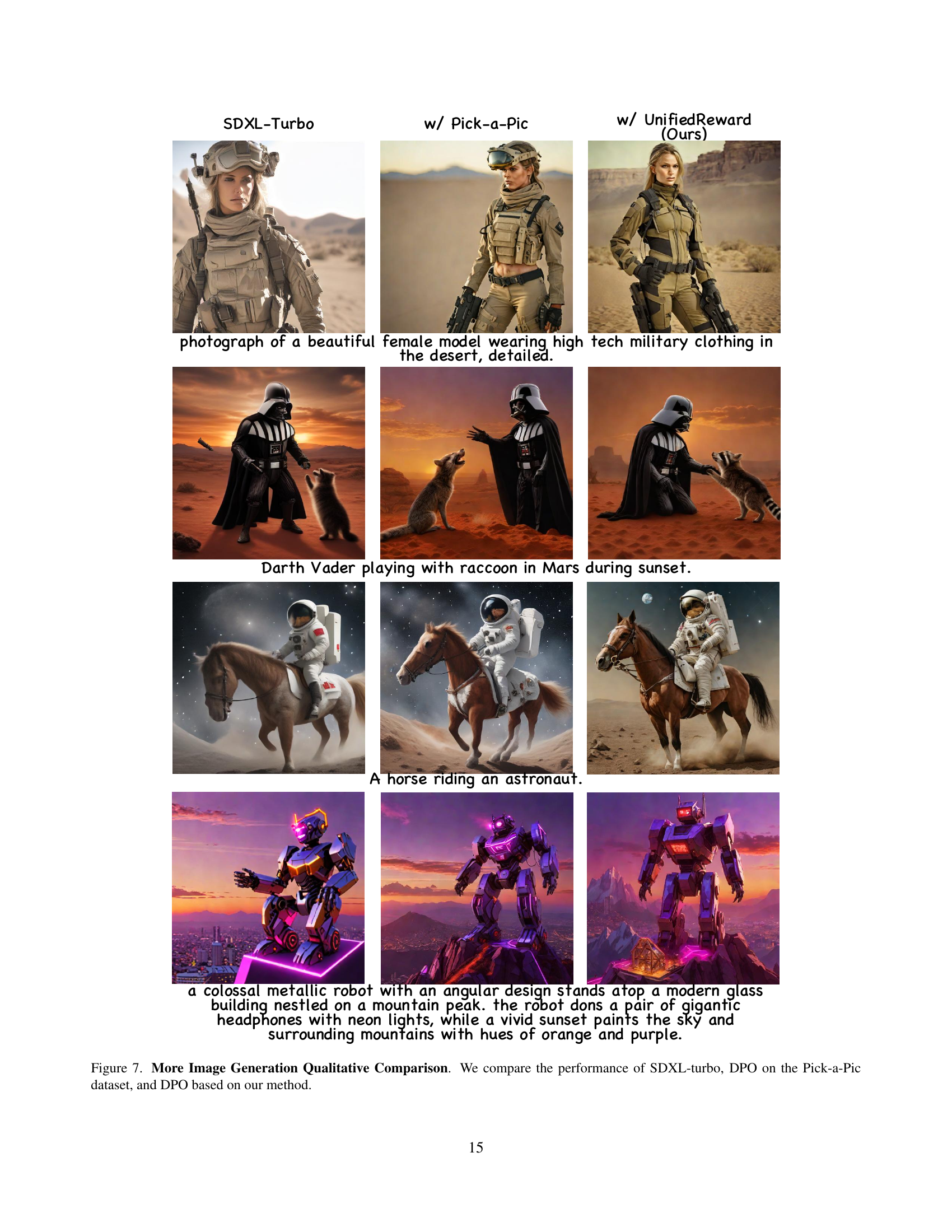

Human preference alignment has boosted multimodal models, mainly via reward models. Yet, most models are task-specific, which limits their use in visual tasks. They should leverage a shared understanding in different tasks to improve performance. To solve this issue, this paper creates a reward model called UNIFIEDREWARD for assessing multimodal understanding and generation. It supports both pairwise ranking and pointwise scoring.

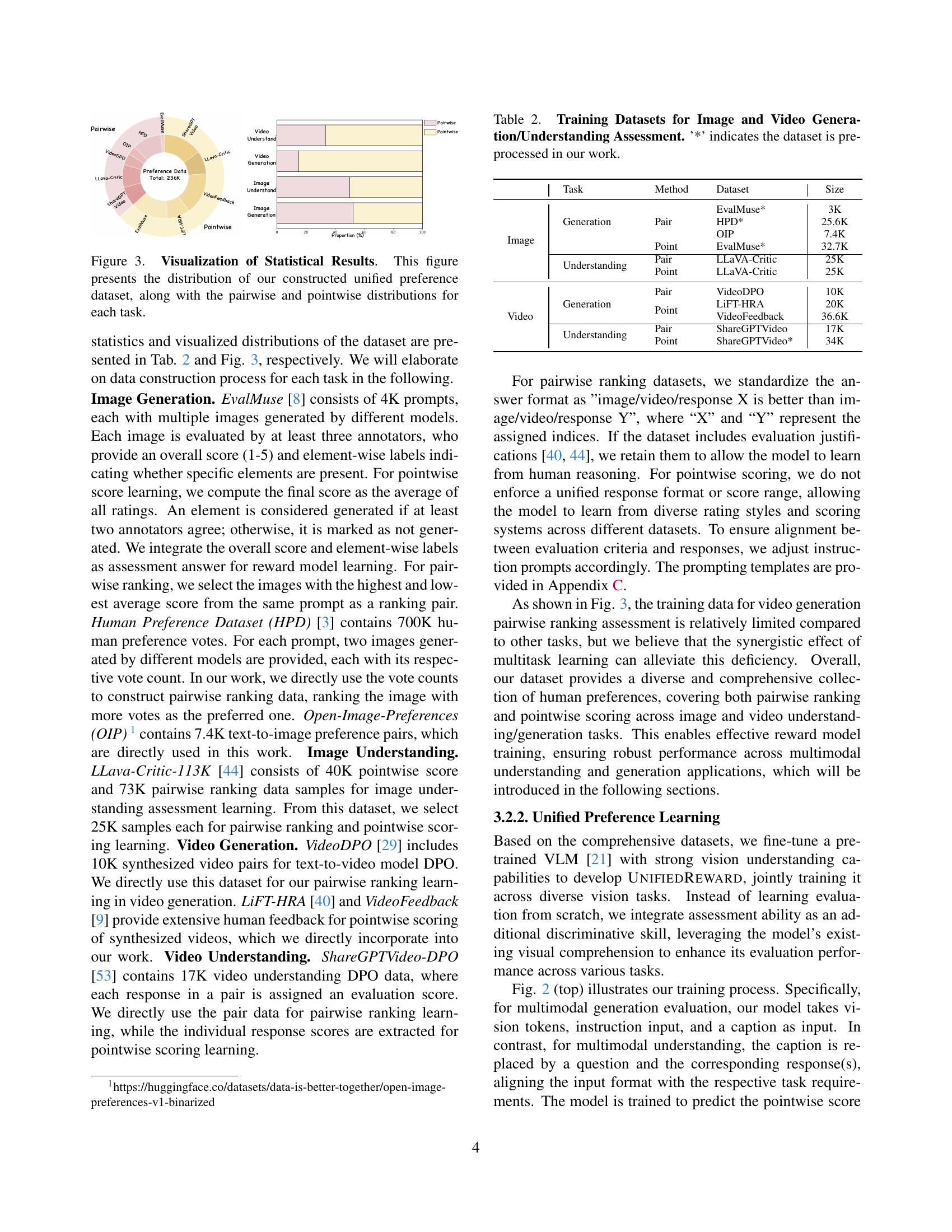

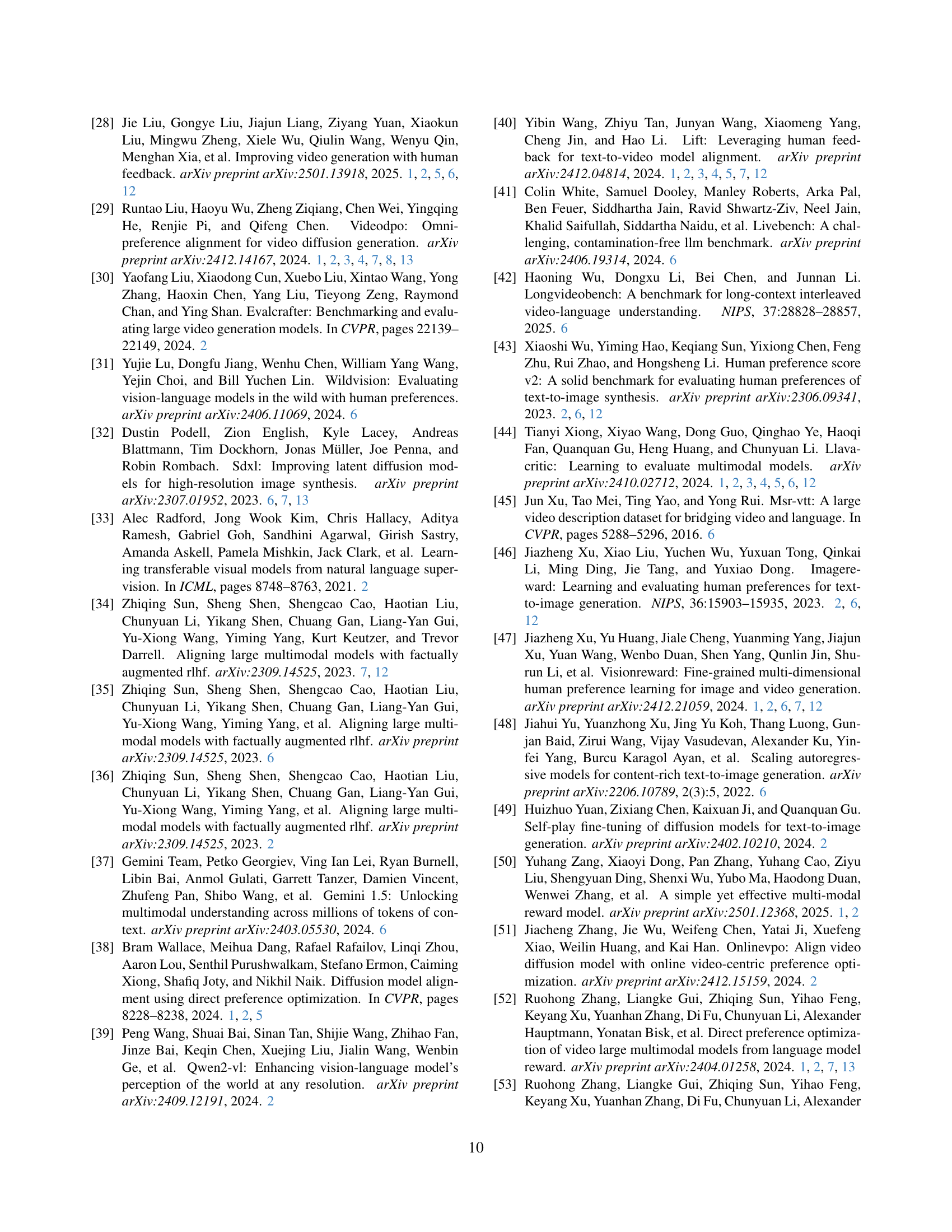

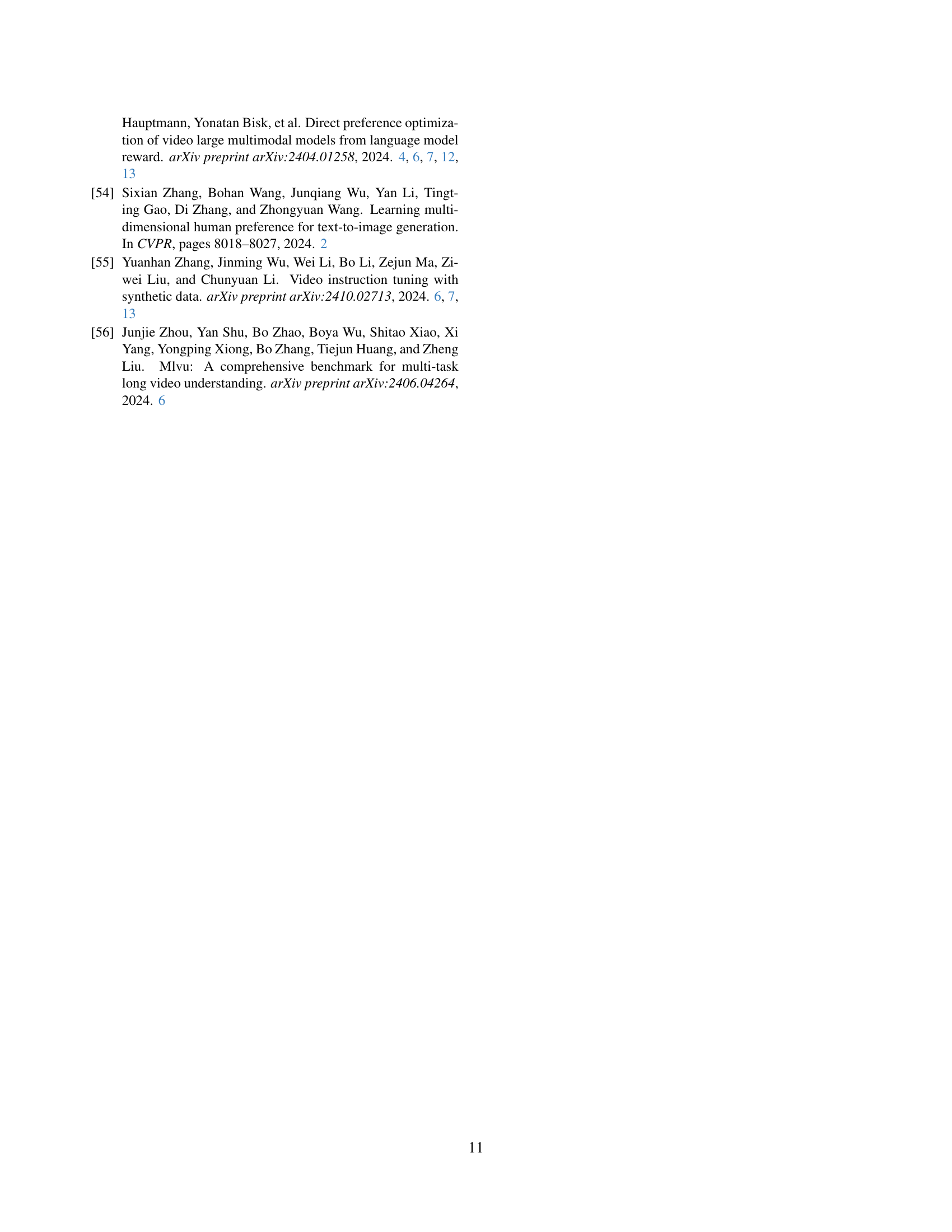

UNIFIEDREWARD is the first model of its kind, allowing use in various vision tasks. The pipeline first builds a human preference dataset with image/video tasks. Then, it picks quality data using models with ranking and point sifting. Finally, it adjusts models via Direct Preference Optimization (DPO). Experiments show joint learning boosts visual tasks, improving performance in both image and video fields.

Key Takeaways#

Why does it matter?#

This paper introduces a unified reward model for both image and video tasks, enhancing performance across visual domains. It’s significant for its potential to improve diverse AI applications and guide future research in reward-based AI evaluation.

Visual Insights#

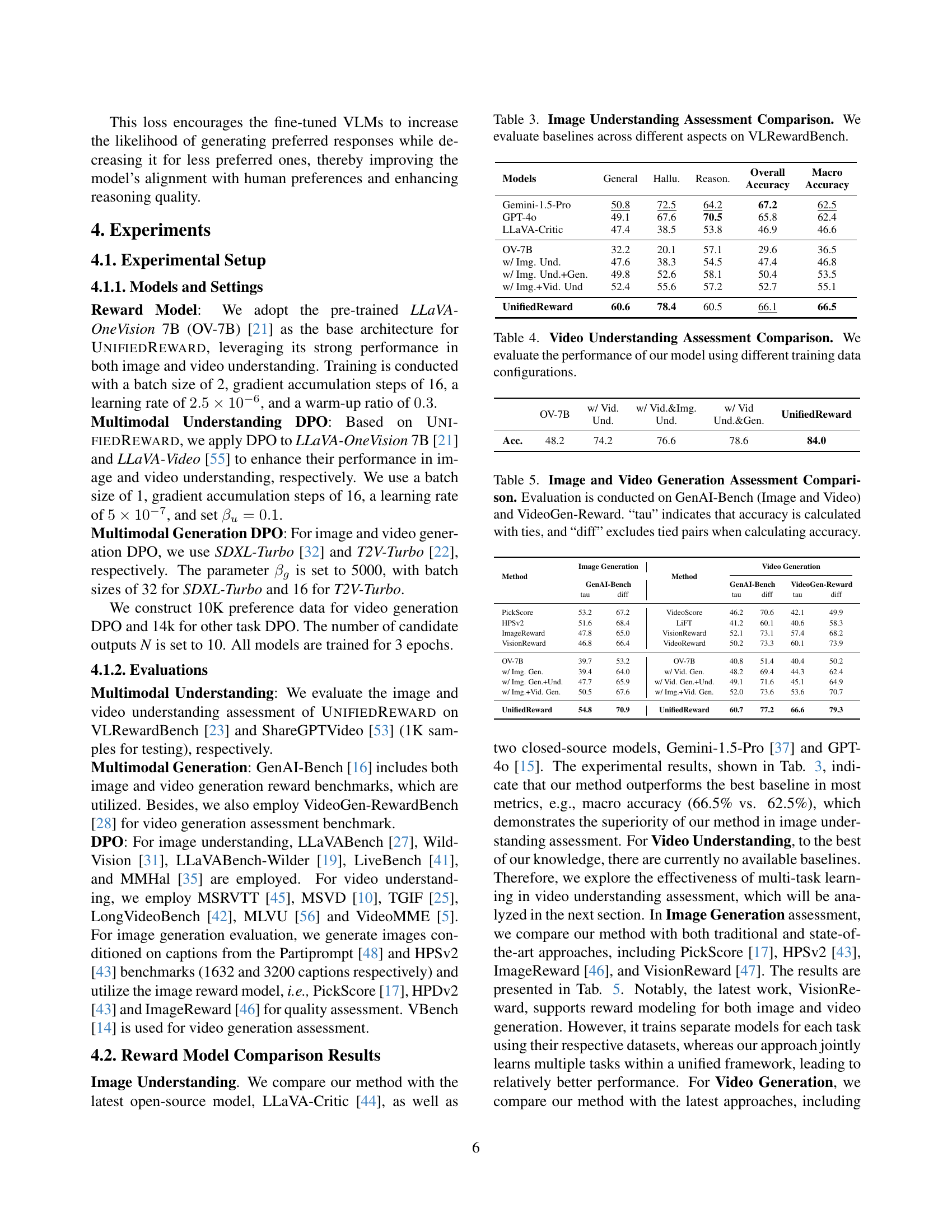

| \topruleReward Model | Method | \makecell[c]Image | |||

|---|---|---|---|---|---|

| Generation | \makecell[c]Image | ||||

| Understand | \makecell[c]Video | ||||

| Generation | \makecell[c]Video | ||||

| Understand | |||||

| \midrulePickScore’23 [pickscore] | Point | \checkmark | |||

| HPS’23 [hps] | Point | \checkmark | |||

| ImageReward’23 [hps] | Point | \checkmark | |||

| LLaVA-Critic’24 [llava-critic] | Pair/Point | \checkmark | |||

| IXC-2.5-Reward’25 [zang2025internlm] | Pair/Point | \checkmark | \checkmark | ||

| VideoScore’24 [LiFT] | Point | \checkmark | |||

| LiFT’24 [LiFT] | Point | \checkmark | |||

| VisionReward’24 [visionreward] | Point | \checkmark | \checkmark | ||

| VideoReward’25 [videoreward] | Point | \checkmark | |||

| \midruleUnifiedReward | Pair/Point | \checkmark | \checkmark | \checkmark | \checkmark |

| \bottomrule |

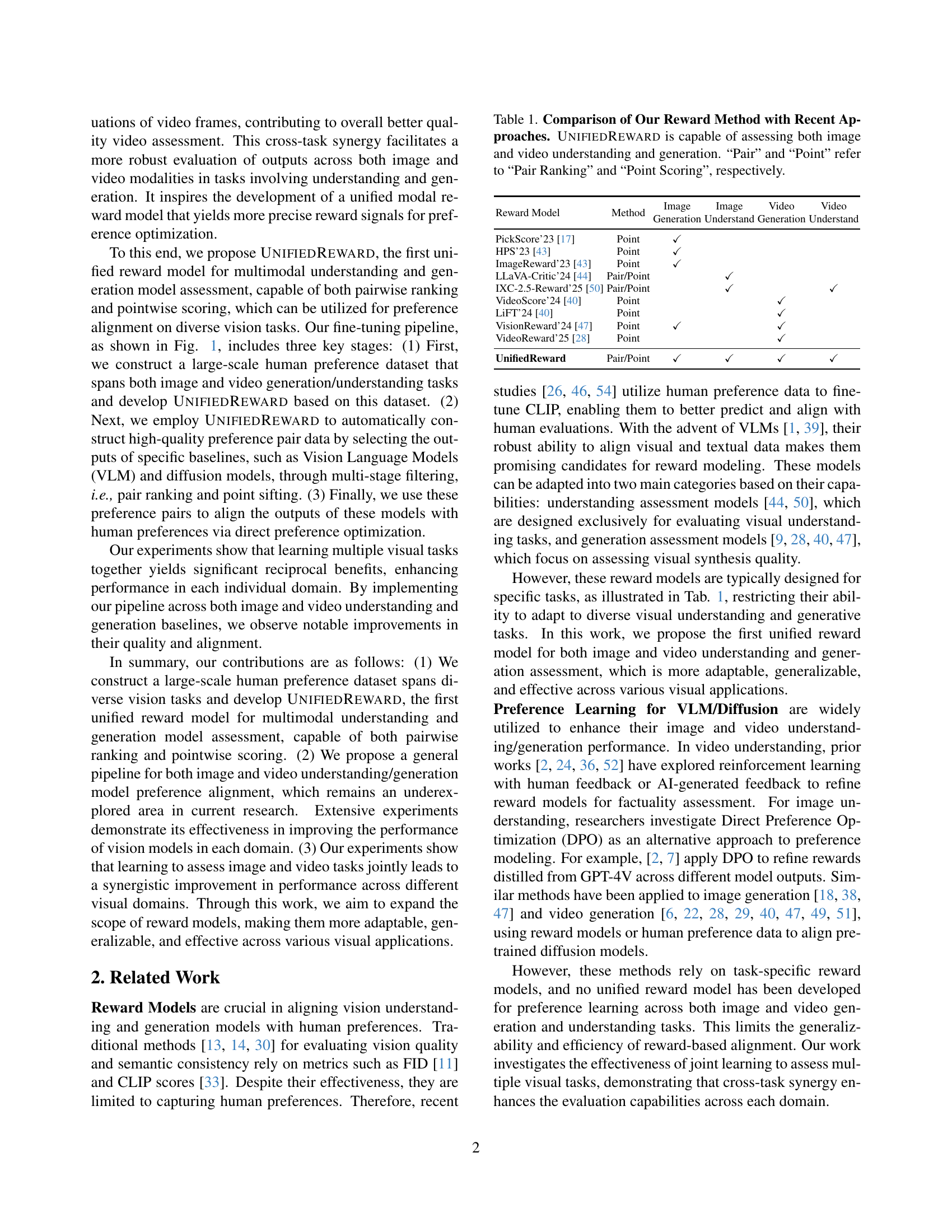

🔼 This table compares the capabilities of UNIFIEDREWARD, a novel unified reward model, with existing reward models in the literature. It shows that UNIFIEDREWARD can assess both image and video understanding and generation tasks, unlike most existing models that specialize in one task or modality. The comparison highlights UNIFIEDREWARD’s ability to perform both pairwise ranking and pointwise scoring, enhancing its versatility in aligning vision models with human preferences.

read the caption

Table \thetable: Comparison of Our Reward Method with Recent Approaches. \ourmethodis capable of assessing both image and video understanding and generation. “Pair” and “Point” refer to “Pair Ranking” and “Point Scoring”, respectively.

Full paper#